Pan Chuang AI sharing

Author | AYUSH3987

Compile | Flin

Source | analyticsvidhya

Introduction

Neural networks have many hyperparameters, and tuning them by hand is difficult. Keras Tuner makes tuning the hyperparameters of a neural network much easier, in the same spirit as the grid search or random search you may have used in classical machine learning.

In this article, you will learn how to use Keras Tuner to tune the hyperparameters of a neural network. We will start with a very simple neural network, then tune its hyperparameters and compare the results.

What are hyperparameters?

Developing a deep learning model is an iterative process: you start with an initial architecture and then reconfigure it until you obtain a model that can be trained effectively within your time and computing budget.

The settings you adjust along the way are called hyperparameters. You have an idea, write the code, check the performance, and repeat the process until the performance is good.

The process of finding a good set of hyperparameters is called hyperparameter tuning.

Hyperparameter tuning is a very important part of building a model. If it is skipped, the model can suffer from major problems, such as long training times or parameters that contribute nothing.

There are usually two types of hyperparameters:

- Model-based hyperparameters: these include the number of hidden layers, the number of neurons per layer, and so on.

- Algorithm-based hyperparameters: these affect the speed and efficiency of training, such as the learning rate in gradient descent.
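As a rough illustration of the two groups, the settings of this article's baseline network could be organized as follows. The dictionary names and the grouping of `epochs` under algorithm-based settings are assumptions made for this sketch; the values (512 units, learning rate 0.001, 10 epochs) are the ones the article uses for its baseline model.

```python
# Hypothetical grouping of the baseline model's settings (names are illustrative).
model_hyperparameters = {
    "hidden_layers": 1,  # one hidden Dense layer
    "units": 512,        # neurons in that layer
}
algorithm_hyperparameters = {
    "learning_rate": 0.001,  # step size used by the Adam optimizer
    "epochs": 10,            # passes over the training data
}

# Merge both groups and report which group each setting belongs to.
all_hyperparameters = {**model_hyperparameters, **algorithm_hyperparameters}
for name, value in all_hyperparameters.items():
    group = "model" if name in model_hyperparameters else "algorithm"
    print(f"{name:>13} = {value}  ({group}-based)")
```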

For more complex models, the number of hyperparameters grows sharply, and tuning them manually becomes very challenging.

The benefit of Keras Tuner is that it handles one of the most challenging tasks for you: hyperparameters can be tuned in just a few lines of code.

Keras Tuner

Keras Tuner is a library for tuning neural network hyperparameters. It helps you pick the best hyperparameters for a neural network implemented in TensorFlow.

To install Keras Tuner, run the following command:

pip install keras-tuner

But wait! Why do we need Keras Tuner?

The answer is that hyperparameters play an important role in developing a good model. A good choice can make a big difference: it helps prevent overfitting, helps strike a good trade-off between bias and variance, and so on.

Using Keras Tuner to tune our hyperparameters

First, we will develop a baseline model, and then we will use Keras Tuner to tune it. I'll implement it using TensorFlow.

Step 1 (download and prepare dataset)

from tensorflow import keras # importing keras

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data() # loading the data using keras datasets api

x_train = x_train.astype('float32') / 255.0 # normalize the training images

x_test = x_test.astype('float32') / 255.0 # normalize the testing images

Step 2 (develop baseline model)

Now we will build our baseline neural network for the MNIST dataset, which contains images of handwritten digits, so let's build a deep neural network.

model1 = keras.Sequential()
model1.add(keras.layers.Flatten(input_shape=(28, 28)))  # flatten each 28 x 28 image
model1.add(keras.layers.Dense(units=512, activation='relu', name='dense_1'))  # 512 neurons with relu activation
model1.add(keras.layers.Dropout(0.2))  # dropout layer with a rate of 0.2
model1.add(keras.layers.Dense(10, activation='softmax'))  # output layer, one unit per class (10 classes)

Step 3 (compiling and training models)

Now that we have built our baseline model, it is time to compile and train it. We will use the Adam optimizer with a learning rate of 0.001. We will train the model for 10 epochs with a validation split of 0.2.

model1.compile(optimizer=keras.optimizers.Adam(learning_rate=0.001),

loss=keras.losses.SparseCategoricalCrossentropy(),

metrics=['accuracy'])

model1.fit(x_train, y_train, epochs=10, validation_split=0.2)
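To make the effect of `validation_split=0.2` concrete, the arithmetic can be sketched in plain Python. This assumes the standard MNIST training set of 60,000 images and Keras' default batch size of 32, neither of which is stated in the article.

```python
import math

train_images = 60_000    # assumed size of the MNIST training set
validation_split = 0.2   # fraction held out by model1.fit(...)
batch_size = 32          # assumed: Keras default when fit() gets no batch_size

# Samples held out for validation and samples actually used for training
val_samples = round(train_images * validation_split)
fit_samples = train_images - val_samples

# Number of gradient updates in one epoch
steps_per_epoch = math.ceil(fit_samples / batch_size)

print(fit_samples, val_samples, steps_per_epoch)  # 48000 12000 1500
```

Under these assumptions, each of the 10 epochs trains on 48,000 images and reports validation metrics on the remaining 12,000.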

Step 4 (evaluate our model)

Now that the model is trained, we will evaluate it on the test set to see how it performs.

model1_eval = model1.evaluate(x_test, y_test, return_dict=True)

Tuning the model using Keras Tuner

Step 1 (import libraries)

import tensorflow as tf
import keras_tuner as kt  # in older releases the module was named kerastuner

Step 2 (build the model using Keras Tuner)

Now you will set up a hypermodel (the model you set up for hypertuning is called a hypermodel). We will define the hypermodel with a model builder function. As you can see in the following function, it returns a compiled model whose hyperparameters are supplied by the tuner.

In the following classification model, we will tune two hyperparameters: the number of neurons in the first Dense layer and the learning rate of the Adam optimizer.

def model_builder(hp):
    '''
    Args:
        hp - Keras tuner object
    '''
    # Initialize the Sequential API and start stacking the layers
    model = keras.Sequential()
    model.add(keras.layers.Flatten(input_shape=(28, 28)))

    # Tune the number of units in the first Dense layer
    # Choose an optimal value between 32-512
    hp_units = hp.Int('units', min_value=32, max_value=512, step=32)
    model.add(keras.layers.Dense(units=hp_units, activation='relu', name='dense_1'))

    # Add next layers
    model.add(keras.layers.Dropout(0.2))
    model.add(keras.layers.Dense(10, activation='softmax'))

    # Tune the learning rate for the optimizer
    # Choose an optimal value from 0.01, 0.001, or 0.0001
    hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3, 1e-4])

    model.compile(optimizer=keras.optimizers.Adam(learning_rate=hp_learning_rate),
                  loss=keras.losses.SparseCategoricalCrossentropy(),
                  metrics=['accuracy'])

    return model

A few things to note in the above code:

- The Int() method defines the search space for the number of units in the Dense layer. It lets you set the minimum and maximum values and the step size between them.

- The Choice() method is used for the learning rate. It lets you define the discrete values to include in the search space during hypertuning.
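To get a feel for how large this search space is, the two definitions can be mirrored in plain Python. This is only a stand-in for illustration; it does not use Keras Tuner and says nothing about how the library stores or samples the space internally.

```python
from itertools import product

# Mirrors hp.Int('units', min_value=32, max_value=512, step=32)
units_values = list(range(32, 512 + 1, 32))

# Mirrors hp.Choice('learning_rate', values=[1e-2, 1e-3, 1e-4])
learning_rates = [1e-2, 1e-3, 1e-4]

# Every (units, learning_rate) pair an exhaustive grid search would have to try
search_space = list(product(units_values, learning_rates))

print(len(units_values), len(learning_rates), len(search_space))  # 16 3 48
```

An exhaustive grid search would have to train all 48 combinations, which is why a tuner that allocates training effort selectively is attractive.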

Step 3 (instantiate the tuner and tune the hyperparameters)

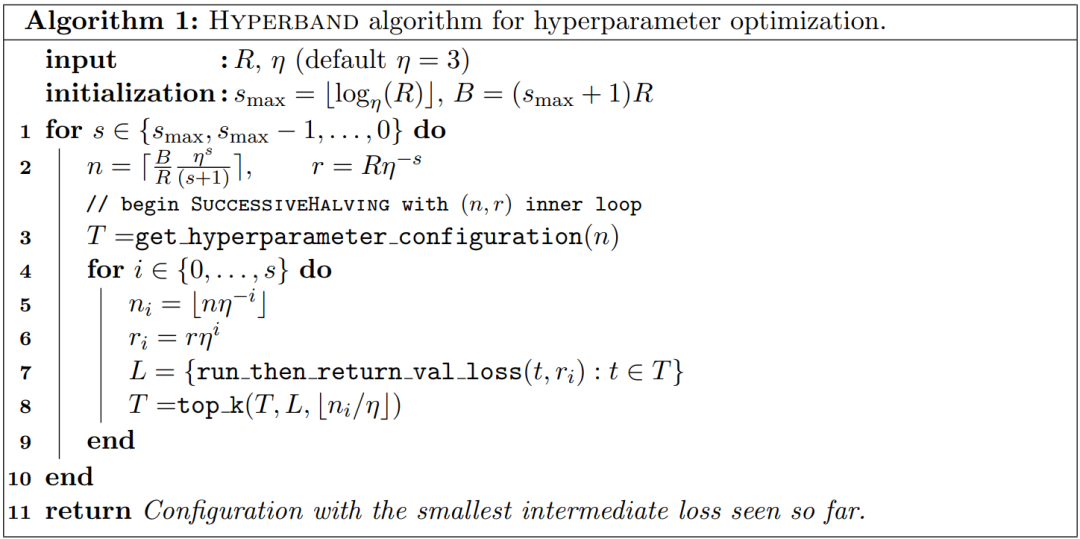

You will use the Hyperband tuner, an algorithm developed for hyperparameter optimization. It uses adaptive resource allocation and early stopping to converge quickly on a high-performing model.

You can read more about the intuition here (https://arxiv.org/pdf/1603.06560.pdf).

The basic algorithm is shown in the figure. If you can't follow it, feel free to skip it and move on; it is a big topic that deserves its own blog post.
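For readers who want a rough feel for the idea, the core of one Hyperband bracket is successive halving: train many configurations briefly, keep the best fraction, and give the survivors more epochs. The sketch below is a simplified pure-Python illustration under stated assumptions, not Keras Tuner's implementation. The `toy_score` function is made up and stands in for validation accuracy; it simply favors configurations near 224 units.

```python
def toy_score(config, epochs):
    """Hypothetical stand-in for validation accuracy after `epochs` of training."""
    closeness = 1.0 - abs(config["units"] - 224) / 512
    return closeness * (1.0 - 0.5 ** epochs)


def successive_halving(configs, score_fn, factor=3, max_epochs=10):
    """Repeatedly keep the best 1/factor of the configs, multiplying epochs by factor."""
    survivors = list(configs)
    epochs = 1
    while len(survivors) > 1 and epochs <= max_epochs:
        # Rank the current survivors by their score at this training budget
        ranked = sorted(survivors, key=lambda c: score_fn(c, epochs), reverse=True)
        survivors = ranked[:max(1, len(ranked) // factor)]
        print(f"{epochs} epoch(s): kept {len(survivors)} of {len(ranked)}")
        epochs *= factor
    return survivors[0]


# Candidate configurations: 32, 64, ..., 512 units
candidates = [{"units": u} for u in range(32, 512 + 1, 32)]
best = successive_halving(candidates, toy_score)
print(best)
```

Most configurations are discarded after a single cheap epoch, so the expensive longer runs are spent only on the promising ones.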

Hyperband determines the number of models to train in a bracket by computing 1 + log_factor(max_epochs) and rounding it up to the nearest integer.
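Plugging in the values used in this article (max_epochs=10, factor=3) gives a quick worked example of that formula. The sketch rounds up, following the wording of the TensorFlow Keras Tuner tutorial; it applies the formula as stated and is not taken from the library's source code.

```python
import math

max_epochs = 10
factor = 3

# 1 + log base `factor` of `max_epochs`
raw = 1 + math.log(max_epochs, factor)

# Round up to get the number of models per bracket
models_per_bracket = math.ceil(raw)

print(round(raw, 3), models_per_bracket)  # 3.096 4
```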

# Instantiate the tuner

tuner = kt.Hyperband(model_builder, # the hypermodel

objective='val_accuracy', # objective to optimize

max_epochs=10,

factor=3, # factor which you have seen above

directory='dir', # directory to save logs

project_name='khyperband')

# hypertuning settings

tuner.search_space_summary()

Output:

# Search space summary

# Default search space size: 2

# units (Int)

# {'default': None, 'conditions': [], 'min_value': 32, 'max_value': 512, 'step': 32, 'sampling': None}

# learning_rate (Choice)

# {'default': 0.01, 'conditions': [], 'values': [0.01, 0.001, 0.0001], 'ordered': True}

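Step 4 (search for the best hyperparameters)

# Stop a trial early if the validation loss has not improved for 5 epochs
stop_early = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=5)

# Perform hypertuning
tuner.search(x_train, y_train, epochs=10, validation_split=0.2, callbacks=[stop_early])

# Retrieve the best set of hyperparameters found by the search
best_hps = tuner.get_best_hyperparameters()[0]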
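The `patience=5` setting of the EarlyStopping callback can be hard to picture. The following is a simplified pure-Python sketch of the patience rule, assuming a monitored quantity that should decrease (such as `val_loss`) and ignoring options like `min_delta`. It is not Keras' implementation, and the function name and the loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return the 0-based epoch at which training would stop, or None if it never stops."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # New best value: reset the counter
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Made-up validation losses for a 10-epoch run
losses = [0.30, 0.22, 0.23, 0.24, 0.25, 0.26, 0.27, 0.20, 0.19, 0.18]
stopped_at = early_stopping_epoch(losses, patience=5)
print(stopped_at)  # 6
```

In this made-up run, training stops at index 6 (the seventh epoch) and never sees the later improvement, which is the trade-off that the patience value controls.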

Step 5 (rebuild and train the model with the best hyperparameters)

# Build the model with the optimal hyperparameters
h_model = tuner.hypermodel.build(best_hps)
h_model.summary()
h_model.fit(x_train, y_train, epochs=10, validation_split=0.2)

Now you can evaluate the model:

h_eval_dict = h_model.evaluate(x_test, y_test, return_dict=True)

Comparing the models with and without hyperparameter tuning

Model performance:

BASELINE MODEL:
number of units in 1st Dense layer: 512
learning rate for the optimizer: 0.0010000000474974513
loss: 0.08013473451137543
accuracy: 0.9794999957084656

HYPERTUNED MODEL:
number of units in 1st Dense layer: 224
learning rate for the optimizer: 0.0010000000474974513
loss: 0.07163219898939133
accuracy: 0.979200005531311

- The baseline model takes longer to train than the hypertuned model. The hypertuned model has fewer neurons (224 instead of 512), so it trains faster.

- The hypertuned model has a lower test loss than the baseline model (about 0.0716 versus 0.0801) at essentially the same accuracy, so we can say that it is the more robust of the two.
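The size of these differences can be worked out directly from the reported numbers. The sketch below only does arithmetic on the figures listed above; the dictionary layout and variable names are chosen for illustration.

```python
# Results as reported in the article
baseline = {"units": 512, "loss": 0.08013473451137543, "accuracy": 0.9794999957084656}
hypertuned = {"units": 224, "loss": 0.07163219898939133, "accuracy": 0.979200005531311}

# Absolute and relative reduction in test loss
loss_drop = baseline["loss"] - hypertuned["loss"]
relative_loss_drop = loss_drop / baseline["loss"]

# Change in test accuracy (negative means the hypertuned model is slightly lower)
accuracy_change = hypertuned["accuracy"] - baseline["accuracy"]

# Size of the hidden layer relative to the baseline
unit_ratio = hypertuned["units"] / baseline["units"]

print(f"loss drop: {loss_drop:.4f} ({relative_loss_drop:.1%})")
print(f"accuracy change: {accuracy_change:+.4f}")
print(f"hidden units: {unit_ratio:.0%} of baseline")
```

The loss falls by roughly a tenth while accuracy changes only in the fourth decimal place, with a hidden layer less than half the size of the baseline's.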

Endnote

Thank you for reading this article. I hope you found it helpful and that you will use Keras Tuner in your own projects to build better neural networks.