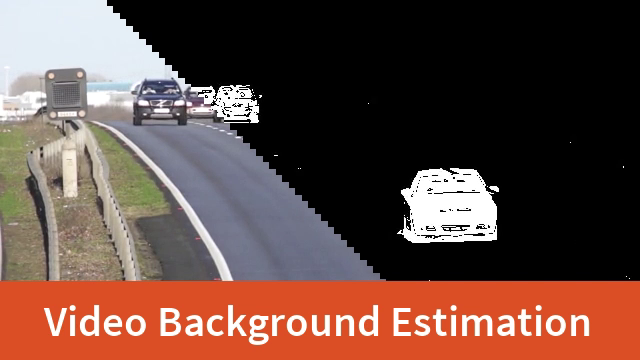

Introduction: This article presents a simple and reliable algorithm for estimating the background of a video. For footage from a fixed camera that contains only a small number of moving objects, the background image can be obtained with a temporal median filter. Frame differencing against that background then yields a mask of the moving objects, which can be used for further object detection, tracking, and recognition.

Keywords: background modeling, frame differencing

Based on the contents of Simple Background Estimation in Videos using OpenCV (C++/Python).

§ 00 Brief Introduction

In many computer vision applications, the computing power at your disposal is limited. In such cases, we need simple and efficient image processing techniques.

This article discusses one such technique: background estimation for scenes captured by a fixed camera with moving objects in view. This scenario is quite common. For example, many traffic and surveillance cameras are rigidly mounted.

Note that the code in this article was tested in OpenCV 4.4.

0.1 Temporal median filtering

To understand the algorithm used later in this article, we first consider a simple 1D problem.

Suppose we need to estimate a physical quantity, such as the temperature in a room, every 10 ms. Say the true room temperature is 70 degrees Fahrenheit.

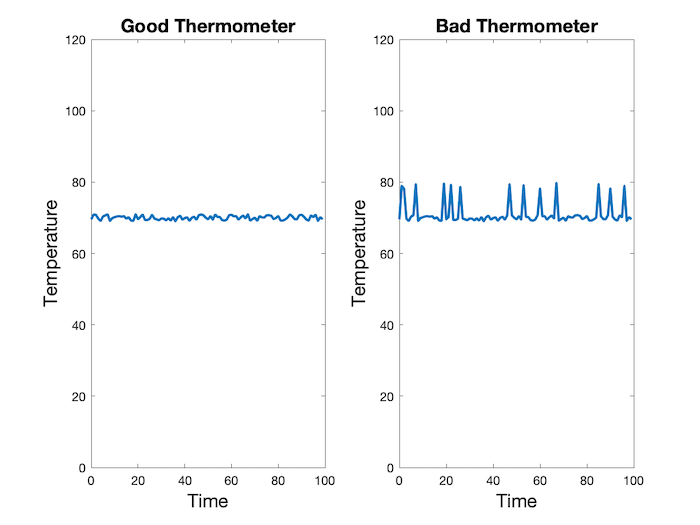

The chart above shows the measurement curves of two thermometers: one good, and one that is slightly faulty.

The curve on the left comes from the good thermometer, whose readings contain some level of Gaussian noise. To get a more accurate temperature value, we can average the readings over a few seconds. Since Gaussian noise takes both positive and negative values, simple averaging cancels out much of the noise. In this particular case, the average is 70.01 degrees Fahrenheit.

The faulty thermometer, on the other hand, behaves just like the good one most of the time, but occasionally it glitches and outputs completely wrong values.

If we still use averaging, the estimate from the faulty thermometer is 71.07 degrees Fahrenheit. Clearly, this overestimates the temperature.

Can an accurate temperature estimate still be obtained in this case?

The answer is yes. When the data contains outliers, that is, completely wrong values, the median gives a more robust and stable estimate of the underlying quantity than the mean.

The median is the middle value of the data when all samples are sorted in ascending or descending order.

For the faulty thermometer data above, the median is 70.05 degrees Fahrenheit, a much better estimate than the mean of 71.07.

The only drawback of the median is that it requires more computation than the mean.
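
The comparison between mean and median can be reproduced with a small simulation. This is only a sketch: the noise level, the glitch rate, and the glitch value of 90 degrees are assumptions chosen for illustration, not the data behind the chart above.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

TRUE_TEMP = 70.0  # degrees Fahrenheit, as in the example above

# Good thermometer: true value plus small Gaussian noise (sigma is an assumption).
good = [random.gauss(TRUE_TEMP, 0.5) for _ in range(1000)]

# Faulty thermometer: same readings, but every 20th sample is replaced by a
# wildly wrong value (glitch rate and glitch value are assumptions).
bad = [90.0 if i % 20 == 0 else t for i, t in enumerate(good)]

print("good mean  :", round(statistics.mean(good), 2))
print("bad mean   :", round(statistics.mean(bad), 2))
print("bad median :", round(statistics.median(bad), 2))
```

In this setup the mean of the faulty readings is pulled toward the outliers, while the median stays close to the true temperature.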

§ 01 Median Background Estimation

1.1 Background estimation using the median

Now let's return to the problem of estimating the background of a video taken by a static camera. Because the camera is fixed, we can assume that most of the time each pixel sees the same piece of the background. Occasionally, a car or other moving object passes in front of it and blocks the background.

For a video sequence, we can randomly sample some frames (for example, 25 frames, about 1 second of footage). In other words, we have 25 estimates of the background at each pixel. As long as a pixel is not covered by a moving object in more than 50% of the sampled frames, the median of that pixel over the 25 frames is a good estimate of the background at that pixel.

Repeating this for every pixel yields a median image, which represents the background of the scene.
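
The per-pixel median can be tried on synthetic data before touching a real video. The sketch below assumes a made-up grayscale background and a bright square that jumps to a random position in every frame; the image size, the object size, and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Static synthetic background: a 40x60 grayscale gradient (values are arbitrary).
height, width, n_frames = 40, 60, 25
background = np.tile(np.linspace(50, 200, width), (height, 1)).astype(np.uint8)

# Each frame is the background plus one bright 10x10 "object" at a random spot,
# so any given pixel is covered in only a small fraction of the frames.
frames = []
for _ in range(n_frames):
    frame = background.copy()
    r = rng.integers(0, height - 10)
    c = rng.integers(0, width - 10)
    frame[r:r + 10, c:c + 10] = 255
    frames.append(frame)

# Stack to shape (n_frames, height, width) and take the median over time.
stack = np.stack(frames, axis=0)
median_frame = np.median(stack, axis=0).astype(np.uint8)

# Fraction of pixels where the median matches the true background exactly.
match = np.mean(median_frame == background)
print("fraction of pixels recovered:", match)
```

Because every pixel is covered in far fewer than half of the frames here, the median should reproduce the background almost everywhere. If the object stayed on the same pixels in most frames, the median at those pixels would return the object instead.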

1.2 Background estimation code

Let's take a look at the actual code.

- Python

import numpy as np
import cv2

# Open the video
cap = cv2.VideoCapture('video.mp4')

# Randomly select 25 frame positions
frameIds = cap.get(cv2.CAP_PROP_FRAME_COUNT) * np.random.uniform(size=25)

# Store the selected frames in a list
frames = []
for fid in frameIds:
    cap.set(cv2.CAP_PROP_POS_FRAMES, fid)
    ret, frame = cap.read()
    if ret:  # skip frames that could not be read
        frames.append(frame)

# Calculate the median along the time axis
medianFrame = np.median(frames, axis=0).astype(dtype=np.uint8)

# Display the median frame
cv2.imshow('frame', medianFrame)
cv2.waitKey(0)

- C++

#include <opencv2/opencv.hpp>
#include <algorithm>
#include <iostream>
#include <random>
#include <vector>

using namespace std;
using namespace cv;

Next, we need a couple of functions to compute the median frame.

int computeMedian(vector<int> elements)
{
    // nth_element places the median at the middle position without a full sort
    nth_element(elements.begin(), elements.begin()+elements.size()/2, elements.end());
    return elements[elements.size()/2];
}

cv::Mat compute_median(const std::vector<cv::Mat>& vec)
{
    // Note: Expects the images to be CV_8UC3
    cv::Mat medianImg(vec[0].rows, vec[0].cols, CV_8UC3, cv::Scalar(0, 0, 0));

    for(int row=0; row<vec[0].rows; row++)
    {
        for(int col=0; col<vec[0].cols; col++)
        {
            // Collect each channel's values at this pixel across all frames
            std::vector<int> elements_B;
            std::vector<int> elements_G;
            std::vector<int> elements_R;

            for(size_t imgNumber=0; imgNumber<vec.size(); imgNumber++)
            {
                int B = vec[imgNumber].at<cv::Vec3b>(row, col)[0];
                int G = vec[imgNumber].at<cv::Vec3b>(row, col)[1];
                int R = vec[imgNumber].at<cv::Vec3b>(row, col)[2];

                elements_B.push_back(B);
                elements_G.push_back(G);
                elements_R.push_back(R);
            }

            medianImg.at<cv::Vec3b>(row, col)[0] = computeMedian(elements_B);
            medianImg.at<cv::Vec3b>(row, col)[1] = computeMedian(elements_G);
            medianImg.at<cv::Vec3b>(row, col)[2] = computeMedian(elements_R);
        }
    }
    return medianImg;
}

int main(int argc, char const *argv[])
{
    // Read the video file name from the command line, if given
    std::string video_file = (argc > 1) ? argv[1] : "video.mp4";

    VideoCapture cap(video_file);
    if(!cap.isOpened())
    {
        cerr << "Error opening video file\n";
        return -1;
    }

    // Randomly select 25 frames; valid indices run from 0 to frame count - 1
    default_random_engine generator;
    uniform_int_distribution<int> distribution(0,
        std::max(0, static_cast<int>(cap.get(CAP_PROP_FRAME_COUNT)) - 1));

    vector<Mat> frames;
    Mat frame;
    for(int i=0; i<25; i++)
    {
        int fid = distribution(generator);
        cap.set(CAP_PROP_POS_FRAMES, fid);
        // Use a fresh Mat per iteration so stored frames cannot alias one buffer
        Mat frame;
        cap >> frame;
        if(frame.empty())
            continue;
        frames.push_back(frame);
    }

    // Calculate the median along the time axis
    Mat medianFrame = compute_median(frames);

    // Display median frame
    imshow("frame", medianFrame);
    waitKey(0);
}

As you can see, we randomly select 25 frames and compute the median of every pixel over those frames. This median frame is a good estimate of the background as long as each pixel shows the background at least 50% of the time.

The resulting background is shown below.

§ 02 Frame Differencing

The obvious next question is whether we can compute a mask of the moving objects for each frame.

This can be done with the following steps:

1. Convert the background frame to grayscale;

2. Convert each video frame to grayscale;

3. Compute the absolute difference between the grayscale current frame and the grayscale background frame;

4. Threshold the difference image to suppress noise and binarize it, which gives the final mask.

The corresponding code is as follows:

- Python

# Reset frame number to 0
cap.set(cv2.CAP_PROP_POS_FRAMES, 0)

# Convert background to grayscale
grayMedianFrame = cv2.cvtColor(medianFrame, cv2.COLOR_BGR2GRAY)

# Loop over all frames
while True:
    # Read frame; stop at the end of the video
    ret, frame = cap.read()
    if not ret:
        break
    # Convert current frame to grayscale
    frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # Calculate absolute difference of current frame and the median frame
    dframe = cv2.absdiff(frame, grayMedianFrame)
    # Threshold to binarize
    th, dframe = cv2.threshold(dframe, 30, 255, cv2.THRESH_BINARY)
    # Display image
    cv2.imshow('frame', dframe)
    cv2.waitKey(20)

# Release video object
cap.release()

# Destroy all windows
cv2.destroyAllWindows()

- C++

    // Reset frame number to 0
    cap.set(CAP_PROP_POS_FRAMES, 0);

    // Convert background to grayscale
    Mat grayMedianFrame;
    cvtColor(medianFrame, grayMedianFrame, COLOR_BGR2GRAY);

    // Loop over all frames
    while(1)
    {
        // Read frame
        cap >> frame;
        if (frame.empty())
            break;

        // Convert current frame to grayscale
        cvtColor(frame, frame, COLOR_BGR2GRAY);

        // Calculate absolute difference of current frame and the median frame
        Mat dframe;
        absdiff(frame, grayMedianFrame, dframe);

        // Threshold to binarize
        threshold(dframe, dframe, 30, 255, THRESH_BINARY);

        // Display image
        imshow("frame", dframe);
        waitKey(20);
    }

    cap.release();
    return 0;
}
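
The difference and threshold steps can also be checked on synthetic data without a video file. The sketch below uses NumPy only and starts from images that are already grayscale, so the conversion steps are skipped. The background, the object, and the noisy pixel are hypothetical, and the threshold of 30 is the same value used in the listings above.

```python
import numpy as np

# Hypothetical grayscale background and one frame containing a bright object.
background = np.full((40, 60), 100, dtype=np.uint8)
frame = background.copy()
frame[10:20, 30:45] = 220   # the "moving object", 10 x 15 pixels
frame[0, 0] = 105           # small noise-like deviation from the background

# Absolute difference; cast to a signed type first so uint8 cannot wrap around.
diff = np.abs(frame.astype(np.int16) - background.astype(np.int16)).astype(np.uint8)

# Binarize: pixels whose difference exceeds the threshold become 255, others 0.
mask = np.where(diff > 30, 255, 0).astype(np.uint8)

print("foreground pixels:", int(np.count_nonzero(mask)))
```

The small deviation at pixel (0, 0) falls below the threshold and is suppressed, while the object region survives in the mask. Choosing the threshold is a trade-off: a lower value keeps more of a faint object but also lets more noise through.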

※ Conclusion ※

This article presented a simple and reliable algorithm for estimating the video background. For footage from a fixed camera that contains only a small number of moving objects, the background image can be obtained with a temporal median filter. Frame differencing against that background then yields a mask of the moving objects for further object detection, tracking, and recognition.

When the scene contains many moving objects that appear frequently, the median filter can no longer recover the background accurately. In that case, the following methods can be considered:

- Kalmann Filter based Video Background Estimation

- A Batch-Incremental Video Background Estimation Model using Weighted Low-Rank Approximation of Matrices

- Background estimation with Gaussian distribution for image segmentation, a fast approach

■ links to relevant literature:

- Simple Background Estimation in Videos using OpenCV (C++/Python)

- Kalmann Filter based Video Background Estimation

- A Batch-Incremental Video Background Estimation Model using Weighted Low-Rank Approximation of Matrices

- Background estimation with Gaussian distribution for image segmentation, a fast approach
