Part I: API implementation of session windows in Flink

A watermark is literally a water-level mark. When is a watermark used?

Think of flood control: when the water rises to a warning mark, an action is triggered, because if the water is not discharged the situation becomes dangerous.

When a Flink program divides a stream into windows, the main question is when each window should fire, and this is where the watermark is needed. The watermark is the mechanism that decides when a window is triggered, and it keeps rising as data flows through the window.

There are two ways to obtain the watermark: extract it from the event time carried in the data, or generate it periodically.

When the timestamps of the incoming data go up and down (that is, the data arrives out of order), the largest EventTime seen so far in the partition is used as the basis for the watermark.

So how is this watermark calculated?

In practice the watermark also delays the firing of a window. For example, in a production environment the data is usually pulled from middleware such as Kafka.

A Kafka topic has multiple partitions, which are written to by producers. There may be two or more producers, and a producer may switch to another partition after finishing a write. Within a single Kafka partition the records are ordered, but ordering across multiple partitions is not guaranteed.
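To make the ordering point concrete, here is a minimal sketch in plain Java (no Kafka client involved). The class name `PartitionOrderSketch`, the sample timestamps, and the round-robin read order are all assumptions for illustration; a real consumer's read order depends on fetch timing. Each partition is sorted on its own, yet the merged sequence is not.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical illustration: two individually ordered partitions read alternately. */
class PartitionOrderSketch {

    /** Takes one record from each partition in turn (assumed read order). */
    static List<Long> interleave(List<Long> p0, List<Long> p1) {
        List<Long> merged = new ArrayList<>();
        int n = Math.max(p0.size(), p1.size());
        for (int i = 0; i < n; i++) {
            if (i < p0.size()) merged.add(p0.get(i));
            if (i < p1.size()) merged.add(p1.get(i));
        }
        return merged;
    }

    /** Returns true if the timestamps never decrease. */
    static boolean isSorted(List<Long> timestamps) {
        for (int i = 1; i < timestamps.size(); i++) {
            if (timestamps.get(i) < timestamps.get(i - 1)) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        List<Long> p0 = Arrays.asList(1000L, 4000L, 5000L); // ordered within partition 0
        List<Long> p1 = Arrays.asList(2000L, 3000L, 6000L); // ordered within partition 1
        List<Long> merged = interleave(p0, p1);
        // prints: [1000, 2000, 4000, 3000, 5000, 6000] sorted=false
        System.out.println(merged + " sorted=" + isSorted(merged));
    }
}
```

The record with timestamp 3000 arrives after the one with 4000, which is exactly the kind of disorder a watermark has to tolerate.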

When a record is written, it may reach the consumer with some delay. For example, the first producer may be delayed by network problems, as shown in the figure:

Next, Flink pulls the data from Kafka. But how does it pull? No matter how high Flink's parallelism is, its slot has only two states. Data pulled over a direct connection may arrive late, and deciding how long a delay to tolerate is how we deal with out-of-order data. Late data will not trigger its window.

With this question in mind, can we design a tolerable delay?

1. Watermarks exist primarily to define when to stop waiting for earlier events

Event-time processing in Flink depends on special timestamped elements called watermarks, which are inserted into the stream by the data source or by a watermark generator. A watermark with timestamp t can be understood as an assertion that all events with timestamp < t have arrived (with reasonable probability).

2. We can imagine different strategies to decide how to generate watermarks

Every event arrives after some delay, and these delays differ, so some events are delayed more than others. A simple approach is to assume that the delays are bounded by some maximum delay. Flink calls this strategy bounded-out-of-orderness watermarking. It is easy to imagine more complex watermarking schemes, but for many applications a fixed delay works well enough.
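The fixed-delay idea can be sketched in a few lines of plain Java. This is not Flink's implementation; the class name `BoundedDelayWatermark` and its method are hypothetical, and the sketch only shows the arithmetic: the watermark is the largest event time seen so far minus the assumed maximum delay.

```java
/** Hypothetical sketch of a fixed-delay (bounded out-of-orderness) watermark. */
class BoundedDelayWatermark {
    private final long maxDelayMs;
    private long maxEventTime = Long.MIN_VALUE;

    BoundedDelayWatermark(long maxDelayMs) {
        this.maxDelayMs = maxDelayMs;
    }

    /** Records one event time and returns the watermark: largest time seen minus the delay bound. */
    long onEvent(long eventTime) {
        maxEventTime = Math.max(maxEventTime, eventTime);
        return maxEventTime - maxDelayMs;
    }

    public static void main(String[] args) {
        BoundedDelayWatermark generator = new BoundedDelayWatermark(2000L);
        for (long ts : new long[] {1000L, 5000L, 3000L, 7000L}) {
            // watermarks printed in order: -1000, 3000, 3000, 5000
            System.out.println("event " + ts + " -> watermark " + generator.onEvent(ts));
        }
    }
}
```

Note that the out-of-order event at 3000 does not move the watermark backwards: the watermark only depends on the maximum seen so far, so it never decreases.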

If you want to build an application such as a stream sorter, Flink's ProcessFunction is the right building block. It provides access to event-time timers (that is, callbacks that fire when watermarks arrive) and has hooks for managing the state needed to buffer events until it is their turn to be sent downstream.
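The buffering logic of such a sorter can be sketched without Flink. The class below is a plain-Java stand-in, not the ProcessFunction API: `StreamSorterSketch`, `onEvent`, and `onWatermark` are hypothetical names, and the in-memory priority queue takes the place of what would be managed state and event-time timers in a real job.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical sketch: buffer events and release them in timestamp order as the watermark advances. */
class StreamSorterSketch {
    // Min-heap on timestamp; stands in for Flink-managed state.
    private final PriorityQueue<Long> buffer = new PriorityQueue<>();

    /** Buffers an event until the watermark says it is safe to emit it. */
    void onEvent(long timestamp) {
        buffer.add(timestamp);
    }

    /** Emits, in ascending order, every buffered event at or below the watermark. */
    List<Long> onWatermark(long watermark) {
        List<Long> released = new ArrayList<>();
        while (!buffer.isEmpty() && buffer.peek() <= watermark) {
            released.add(buffer.poll());
        }
        return released;
    }

    public static void main(String[] args) {
        StreamSorterSketch sorter = new StreamSorterSketch();
        sorter.onEvent(3000L);
        sorter.onEvent(1000L);
        sorter.onEvent(2000L);
        System.out.println(sorter.onWatermark(2000L)); // prints [1000, 2000]
        sorter.onEvent(2500L);
        System.out.println(sorter.onWatermark(3000L)); // prints [2500, 3000]
    }
}
```

The sorter can only emit correct output if no event older than the watermark shows up afterwards, which is why the choice of delay bound matters.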

Code implementation:

```java
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;
import org.apache.flink.streaming.api.windowing.time.Time;

/**
 * Demo for event-time tumbling windows on an unbounded stream
 * (keyBy first, then split by EventTime into tumbling windows).
 * This listing covers timestamp/watermark assignment and parsing.
 * The out-of-order delay is the argument passed to the extractor (0 seconds here).
 */
public class EventTimeTumblingWindowAllDemo4 {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env =
                StreamExecutionEnvironment.createLocalEnvironmentWithWebUI(new Configuration());

        // Sample input lines, in the form timestamp,word,count:
        // 1000,spark,3
        // 1200,spark,5
        // 2000,hadoop,2
        // The stream returned by socketTextStream has parallelism 1.
        DataStreamSource<String> lines = env.socketTextStream("Master", 8888);

        // A window fires once:
        //   (largest EventTime seen in the current partition) - (out-of-order delay) >= (window end time)
        SingleOutputStreamOperator<String> dataWithWaterMark = lines.assignTimestampsAndWatermarks(
                new BoundedOutOfOrdernessTimestampExtractor<String>(Time.seconds(0)) { // 0 = no tolerated delay
                    @Override
                    public long extractTimestamp(String element) {
                        // The event time is the first comma-separated field.
                        return Long.parseLong(element.split(",")[0]);
                    }
                });

        // Parse each line into a (word, count) tuple.
        SingleOutputStreamOperator<Tuple2<String, Integer>> wordAndCount =
                dataWithWaterMark.map(new MapFunction<String, Tuple2<String, Integer>>() {
                    @Override
                    public Tuple2<String, Integer> map(String s) throws Exception {
                        String[] fields = s.split(",");
                        return Tuple2.of(fields[1], Integer.parseInt(fields[2]));
                    }
                });

        env.execute();
    }
}
```

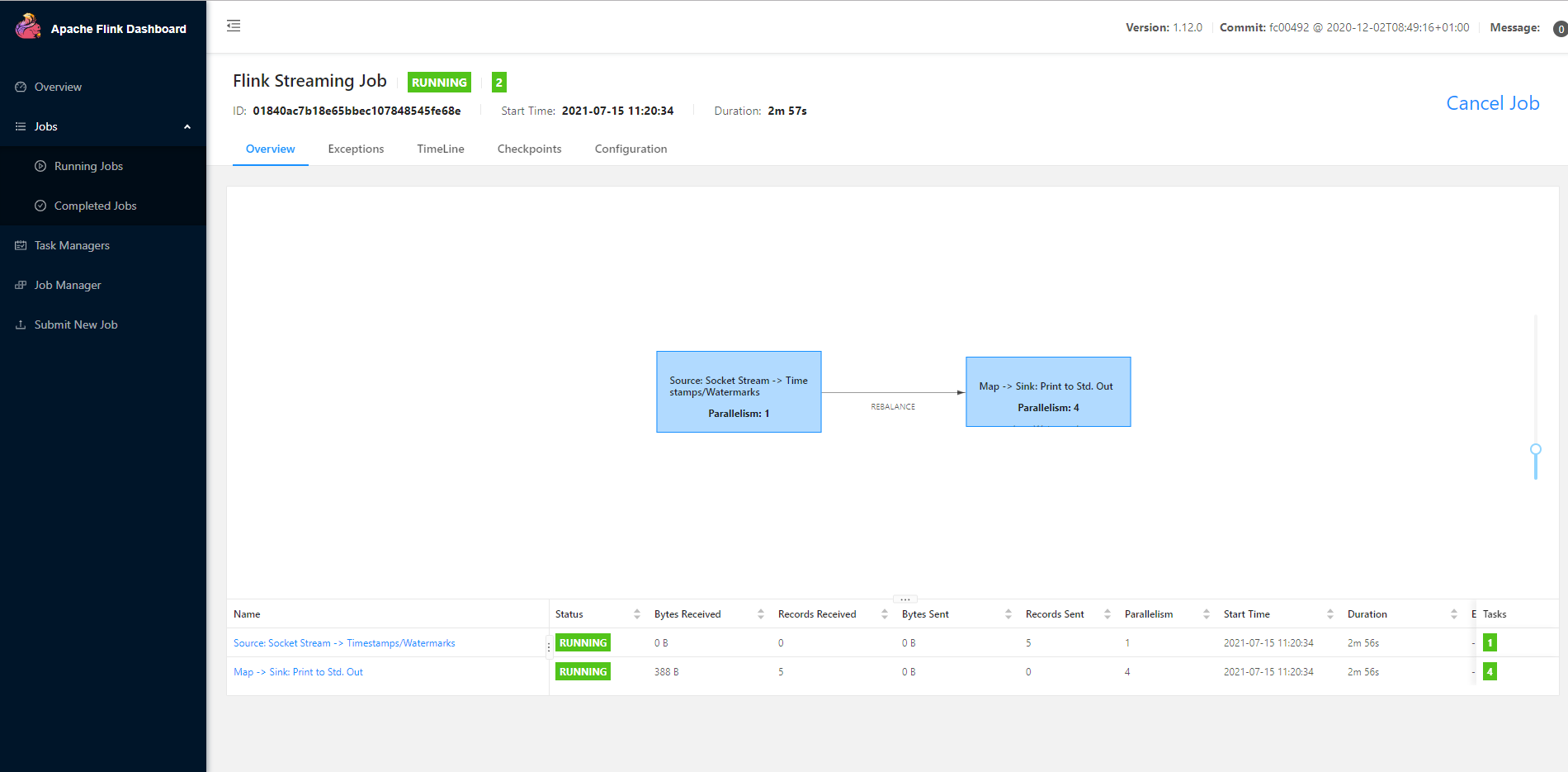

To view a job: http://localhost:8081/#/job/01840ac7b18e65bbec107848545fe68e/overview

Usage scenarios (problem solving)

Processing out-of-order data: Flink processes data in real time, but network transmission can cause data that was generated earlier to arrive later. The data is then processed out of order, and because windows open and close, a window may already be closed when its data arrives late, so that data is lost.
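The effect of the delay on late data can be reproduced with a small plain-Java simulation. Everything here is an assumption for illustration: the class `LateDataSketch`, the 5-second window size, and the sample timestamps. The firing rule is the simplified one from the code comment in this article (largest EventTime minus delay >= window end), not Flink's exact trigger.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Hypothetical simulation of tumbling windows that drop data arriving after the window fired. */
class LateDataSketch {
    private final long windowSizeMs;
    private final long maxDelayMs;
    private long maxEventTime = Long.MIN_VALUE;
    private long watermark = Long.MIN_VALUE;
    private final TreeMap<Long, Integer> openWindows = new TreeMap<>(); // window start -> record count
    final List<String> fired = new ArrayList<>();
    int dropped = 0;

    LateDataSketch(long windowSizeMs, long maxDelayMs) {
        this.windowSizeMs = windowSizeMs;
        this.maxDelayMs = maxDelayMs;
    }

    /** Processes one event time (assumed non-negative). */
    void onEvent(long ts) {
        long start = ts - (ts % windowSizeMs);
        if (start + windowSizeMs <= watermark) {
            dropped++; // the window for this record has already fired
        } else {
            openWindows.merge(start, 1, Integer::sum);
        }
        maxEventTime = Math.max(maxEventTime, ts);
        watermark = maxEventTime - maxDelayMs;
        // Fire every open window whose end time the watermark has reached.
        while (!openWindows.isEmpty() && openWindows.firstKey() + windowSizeMs <= watermark) {
            long s = openWindows.firstKey();
            fired.add("[" + s + "," + (s + windowSizeMs) + ") count=" + openWindows.remove(s));
        }
    }

    public static void main(String[] args) {
        long[] events = {1000L, 4000L, 5000L, 3000L, 9000L, 10000L};
        for (long delay : new long[] {0L, 2000L}) {
            LateDataSketch sim = new LateDataSketch(5000L, delay);
            for (long ts : events) sim.onEvent(ts);
            System.out.println("delay=" + delay + " fired=" + sim.fired + " dropped=" + sim.dropped);
        }
    }
}
```

With a delay of 0 the record at 3000 arrives after window [0,5000) has fired and is dropped. With a delay of 2000 the same record is still counted, at the cost of the window firing later.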

Watermarks with multiple parallel subtasks

A subtask sends its watermark to all subtasks of the next operator, that is, to more than one receiver.

Likewise, a subtask receives the watermarks of all subtasks of the previous operator. In that case the smallest watermark is the one that takes effect.
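The "smallest watermark wins" rule can be sketched as follows. This is a plain-Java illustration rather than Flink code; the class `MinWatermarkSketch` and its method are hypothetical names. It tracks the latest watermark per upstream subtask and reports the minimum across them.

```java
import java.util.Arrays;

/** Hypothetical sketch: a subtask's watermark is the minimum over all of its upstream inputs. */
class MinWatermarkSketch {
    private final long[] inputWatermarks;
    private long current = Long.MIN_VALUE;

    MinWatermarkSketch(int numInputs) {
        inputWatermarks = new long[numInputs];
        Arrays.fill(inputWatermarks, Long.MIN_VALUE); // nothing received yet
    }

    /** Updates one input's watermark and returns the subtask's resulting watermark. */
    long onWatermark(int input, long watermark) {
        inputWatermarks[input] = Math.max(inputWatermarks[input], watermark);
        long min = Long.MAX_VALUE;
        for (long w : inputWatermarks) {
            min = Math.min(min, w);
        }
        current = Math.max(current, min); // never moves backwards
        return current;
    }

    public static void main(String[] args) {
        MinWatermarkSketch subtask = new MinWatermarkSketch(2);
        System.out.println(subtask.onWatermark(0, 5000L)); // input 1 is still silent: Long.MIN_VALUE
        System.out.println(subtask.onWatermark(1, 3000L)); // prints 3000
        System.out.println(subtask.onWatermark(1, 8000L)); // prints 5000
        System.out.println(subtask.onWatermark(0, 6000L)); // prints 6000
    }
}
```

One consequence visible in the first call: a single slow or silent upstream subtask holds back the watermark, and therefore the windows, of everything downstream.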

A watermark can be understood as a special kind of record. It does not take part in the computation; it only serves to trigger the closing of windows.