When using Linux, a problem with the network or disk I/O can leave a process stuck. Even kill -9 cannot kill it, and commonly used debugging tools such as strace and pstack stop working too. What is going on?

If we look at the process list with ps at this point, the stuck process shows a state of D.

man ps describes the D state as uninterruptible sleep.

Linux processes have two sleep states:

- Interruptible sleep, shown as S by ps. A process in this state can be woken up by sending it a signal.

- Uninterruptible sleep, shown as D by ps. A process in this state does not handle signals sent to it right away, which is why it cannot be killed with kill.
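The same state letter that ps prints can also be read straight from procfs, in the State: line of /proc/<pid>/status. The minimal sketch below assumes a Linux /proc; the helper names proc_state and list_d_state are hypothetical, chosen only for illustration.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; they assume a Linux /proc.

# Print the one-letter state of a process from /proc/<pid>/status,
# e.g. R (running), S (interruptible sleep), D (uninterruptible sleep),
# T (stopped) or Z (zombie). Prints nothing if the process is gone.
proc_state() {
    awk '/^State:/ { print $2 }' "/proc/$1/status" 2>/dev/null
}

# List the PID and command name of every process currently in state D.
list_d_state() {
    for dir in /proc/[0-9]*; do
        pid=${dir#/proc/}
        if [ "$(proc_state "$pid")" = D ]; then
            printf '%s\t%s\n' "$pid" "$(cat "$dir/comm" 2>/dev/null)"
        fi
    done
}

list_d_state
```

On a healthy system list_d_state usually prints nothing, since D states are normally brief.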

An answer on Stack Overflow explains it this way:

kill -9 just sends SIGKILL to the process. When the process is in a special state (handling a signal, or inside a system call), it cannot handle any signals, including SIGKILL, so it is not killed right away. This is what we usually call the D state (uninterruptible sleep). Commonly used debugging tools such as strace and pstack are generally implemented with a special signal, so they cannot be used in this state either.

So a process in the D state is usually inside a system call, running in kernel mode. How can we tell which system call it is in and what it is waiting for? Fortunately, Linux provides procfs (the /proc directory), through which you can see the current kernel call stack of any process. Let's simulate this with a process that accesses JuiceFS: the JuiceFS client is based on FUSE, i.e. it is a user-space file system, which makes I/O failures easy to simulate.
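As a generic sketch of what procfs exposes for any PID, the hypothetical helper below prints a process's state, its wchan (the kernel function it is sleeping in, if any), and its kernel stack. Reading /proc/<pid>/stack typically requires root and a kernel built with stack trace support, so the sketch prints a fallback message when the file cannot be read.

```shell
#!/bin/sh
# show_kernel_wait is a hypothetical helper; it assumes a Linux /proc.
show_kernel_wait() {
    pid=$1
    # Full State line value, e.g. "D (disk sleep)"
    printf 'state: %s\n' \
        "$(awk '/^State:/ { sub(/^State:[ \t]+/, ""); print }' "/proc/$pid/status")"
    # Kernel function the task is sleeping in, if any
    printf 'wchan: %s\n' "$(cat "/proc/$pid/wchan" 2>/dev/null)"
    echo 'kernel stack:'
    cat "/proc/$pid/stack" 2>/dev/null || echo '  (unreadable; try running as root)'
}

# Usage: show_kernel_wait PID (defaults to this shell's own PID)
show_kernel_wait "${1:-$$}"
```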

First mount JuiceFS in the foreground (add the -f option to the ./juicefs mount command), then stop the client process with Ctrl+Z. Now run ls /jfs to access the mount point, and you will find that ls hangs.
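Ctrl+Z sends SIGTSTP to the foreground job, which puts it in state T (stopped). From another terminal, kill -STOP has a similar effect, except that SIGSTOP cannot be caught or ignored. The sketch below uses a background sleep as a hypothetical stand-in for the JuiceFS client, stops it, and checks its state before and after resuming it with SIGCONT. It assumes a Linux /proc and a sleep that accepts fractional seconds (GNU coreutils and BusyBox both do).

```shell
#!/bin/sh
# Assumes a Linux /proc; `sleep 30` is a stand-in for the FUSE daemon.
state_of() { awk '/^State:/ { print $2 }' "/proc/$1/status" 2>/dev/null; }

# Poll (up to about 2 s) until PID reaches the wanted state letter,
# then print its current state. Signal delivery is asynchronous.
wait_for_state() {
    i=0
    while [ "$(state_of "$1")" != "$2" ] && [ "$i" -lt 20 ]; do
        sleep 0.1
        i=$((i + 1))
    done
    state_of "$1"
}

sleep 30 &
pid=$!
kill -STOP "$pid"                  # like Ctrl+Z, but cannot be caught
stopped=$(wait_for_state "$pid" T)
kill -CONT "$pid"                  # resume it, as fg or bg would
resumed=$(wait_for_state "$pid" S)
kill "$pid"                        # clean up the stand-in process
wait "$pid" 2>/dev/null || true
echo "after SIGSTOP: $stopped; after SIGCONT: $resumed"
```

While the stand-in is in state T it runs no code at all, which is why a stopped FUSE daemon leaves every request to its mount point unanswered.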

The following command shows that ls is stuck in the vfs_fstatat call, which sends a getattr request to the FUSE device and waits for a response. Because we have stopped the JuiceFS client process, no response arrives, so ls is stuck:

    $ cat /proc/`pgrep ls`/stack
    [<ffffffff813277c7>] request_wait_answer+0x197/0x280
    [<ffffffff81327d07>] __fuse_request_send+0x67/0x90
    [<ffffffff81327d57>] fuse_request_send+0x27/0x30
    [<ffffffff8132b0ac>] fuse_simple_request+0xcc/0x1a0
    [<ffffffff8132c0f0>] fuse_do_getattr+0x120/0x330
    [<ffffffff8132df28>] fuse_update_attributes+0x68/0x70
    [<ffffffff8132e33d>] fuse_getattr+0x3d/0x50
    [<ffffffff81220c6f>] vfs_getattr_nosec+0x2f/0x40
    [<ffffffff81220ee6>] vfs_getattr+0x26/0x30
    [<ffffffff81220fc8>] vfs_fstatat+0x78/0xc0
    [<ffffffff8122150e>] SYSC_newstat+0x2e/0x60
    [<ffffffff8122169e>] SyS_newstat+0xe/0x10
    [<ffffffff8186281b>] entry_SYSCALL_64_fastpath+0x22/0xcb
    [<ffffffffffffffff>] 0xffffffffffffffff

At this point, pressing Ctrl+C does not make ls exit:

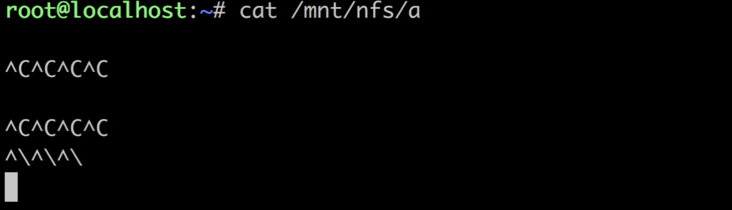

    root@localhost:~# ls /jfs ^C ^C^C^C^C^C

Attaching strace, however, wakes it up: ls starts handling the pending interrupt signals and then exits.

    root@localhost:~# strace -p `pgrep ls`
    strace: Process 26469 attached
    --- SIGINT {si_signo=SIGINT, si_code=SI_KERNEL} ---
    rt_sigreturn({mask=[]}) = -1 EINTR (Interrupted system call)
    --- SIGTERM {si_signo=SIGTERM, si_code=SI_USER, si_pid=13290, si_uid=0} ---
    rt_sigreturn({mask=[]}) = -1 EINTR (Interrupted system call)
    ...
    tgkill(26469, 26469, SIGINT) = 0
    --- SIGINT {si_signo=SIGINT, si_code=SI_TKILL, si_pid=26469, si_uid=0} ---
    +++ killed by SIGINT +++

Alternatively, kill -9 can also kill the stuck ls:

    root@localhost:~# ls /jfs ^C ^C^C^C^C^C ^C^CKilled

This works because a simple system call like vfs_fstatat() does not block signals such as SIGKILL, SIGQUIT, and SIGABRT, so they are still handled as usual.
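When a process might be stuck like this, a script can ask it to exit politely, escalate to SIGKILL, and report it if it survives both. The sketch below is hypothetical: the helper names and the roughly 2-second grace periods are arbitrary choices, and it assumes a Linux /proc. A process that outlives SIGKILL is typically blocked in an uninterruptible wait inside the kernel.

```shell
#!/bin/sh
# Hypothetical helpers; assume a Linux /proc. Grace periods are arbitrary.

# True if PID exists and has not already exited (a zombie, state Z,
# has exited and only waits for its parent to reap it).
still_alive() {
    st=$(awk '/^State:/ { print $2 }' "/proc/$1/status" 2>/dev/null)
    [ -n "$st" ] && [ "$st" != Z ]
}

# Send SIGTERM, then SIGKILL, waiting up to about 2 s after each.
# Returns 0 once the process is gone, 1 if it survived SIGKILL.
kill_escalate() {
    pid=$1
    for sig in TERM KILL; do
        kill "-$sig" "$pid" 2>/dev/null || return 0   # no such process
        i=0
        while still_alive "$pid" && [ "$i" -lt 20 ]; do
            sleep 0.1
            i=$((i + 1))
        done
        still_alive "$pid" || return 0
    done
    st=$(awk '/^State:/ { print $2 }' "/proc/$pid/status" 2>/dev/null)
    echo "pid $pid survived SIGKILL (state: $st)" >&2
    return 1
}

# Usage example: start a disposable process and terminate it.
sleep 60 &
kill_escalate "$!" && echo "terminated"
```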

Now let's simulate a more complex I/O error: configure JuiceFS with a storage backend that cannot be written to, mount it, and try to write data into it with cp. cp also gets stuck:

    root@localhost:~# cat /proc/`pgrep cp`/stack
    [<ffffffff813277c7>] request_wait_answer+0x197/0x280
    [<ffffffff81327d07>] __fuse_request_send+0x67/0x90
    [<ffffffff81327d57>] fuse_request_send+0x27/0x30
    [<ffffffff81331b3f>] fuse_flush+0x17f/0x200
    [<ffffffff81218fd2>] filp_close+0x32/0x80
    [<ffffffff8123ac53>] __close_fd+0xa3/0xd0
    [<ffffffff81219043>] SyS_close+0x23/0x50
    [<ffffffff8186281b>] entry_SYSCALL_64_fastpath+0x22/0xcb
    [<ffffffffffffffff>] 0xffffffffffffffff

Why is it stuck in __close_fd()? Because writes to JuiceFS are asynchronous. When cp calls write(), the data is cached in the JuiceFS client process and written to the back-end storage asynchronously. When cp finishes writing, it calls close() to make sure the data has been written, which corresponds to the FUSE flush operation. When the JuiceFS client receives a flush, it must make sure that all written data has been persisted to the back-end storage. Since writes to the back-end storage keep failing and the client is retrying them, the flush has not completed and no reply has been sent to cp, so cp is stuck too.

At this point, Ctrl+C or kill can still interrupt cp, because JuiceFS implements interrupt handling for its file system operations: on an interrupt it abandons the current operation (such as flush) and returns EINTR, so applications accessing JuiceFS can be interrupted when various network failures occur.

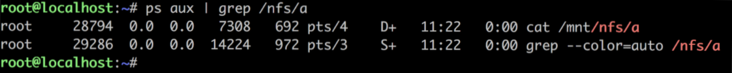

Now suppose I also stop the JuiceFS client process, so that it can no longer handle any FUSE requests (including interrupt requests). At that point cp cannot be killed, not even with kill -9. ps shows that it is already in the D state:

    root 1592 0.1 0.0 20612 1116 pts/3 D+ 12:45 0:00 cp parity /jfs/aaa

Even so, you can still run cat /proc/1592/stack to see its kernel call stack:

    root@localhost:~# cat /proc/1592/stack
    [<ffffffff8132775d>] request_wait_answer+0x12d/0x280
    [<ffffffff81327d07>] __fuse_request_send+0x67/0x90
    [<ffffffff81327d57>] fuse_request_send+0x27/0x30
    [<ffffffff81331b3f>] fuse_flush+0x17f/0x200
    [<ffffffff81218fd2>] filp_close+0x32/0x80
    [<ffffffff8123ac53>] __close_fd+0xa3/0xd0
    [<ffffffff81219043>] SyS_close+0x23/0x50
    [<ffffffff8186281b>] entry_SYSCALL_64_fastpath+0x22/0xcb
    [<ffffffffffffffff>] 0xffffffffffffffff

The kernel call stack shows that cp is stuck in the FUSE flush call. As soon as the JuiceFS client process is resumed, cp can be interrupted and exits immediately.
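To find every process blocked this way, you could scan /proc for kernel stacks that contain request_wait_answer, the FUSE function at the top of the stacks above. This is a hypothetical helper and only a heuristic: it needs root to read /proc/<pid>/stack, and the function names that appear in kernel stacks can differ between kernel versions.

```shell
#!/bin/sh
# Heuristic: list processes whose kernel stack shows them waiting for a
# reply from a FUSE daemon. Needs root; unreadable stacks are skipped.
fuse_waiters() {
    for dir in /proc/[0-9]*; do
        if grep -q 'request_wait_answer' "$dir/stack" 2>/dev/null; then
            # Print PID, state letter and command name, tab-separated
            st=$(awk '/^State:/ { print $2 }' "$dir/status" 2>/dev/null)
            printf '%s\t%s\t%s\n' "${dir#/proc/}" "$st" \
                "$(cat "$dir/comm" 2>/dev/null)"
        fi
    done
}

fuse_waiters
```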

Operations that involve data safety, such as close, are not restartable, so they cannot be interrupted at will, not even by SIGKILL. They can only be interrupted once the FUSE implementation (here, the JuiceFS client) responds to the interrupt request.

Therefore, as long as the JuiceFS client process stays healthy and responds to interrupts, there is no need to worry about applications accessing JuiceFS getting stuck. Alternatively, you can kill the JuiceFS client process, which tears down the current mount point and interrupts all applications accessing it.
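To notice a hung mount point before applications pile up on it, a monitoring script can probe the mount without risking a hang itself: it runs stat in the background and stops waiting after a deadline, rather than waiting on a process that may never return. The sketch below is hypothetical; the helper name, the 5-second default, and the return code 124 are illustrative choices, not JuiceFS features, and it assumes a Linux /proc.

```shell
#!/bin/sh
# Hypothetical health probe; assumes a Linux /proc.
# Returns stat's exit status if it finishes in time, or 124 if it is
# still blocked at the deadline (the stuck stat is left behind).
probe_mount() {
    mnt=$1
    deadline=${2:-5}                      # seconds
    stat "$mnt" >/dev/null 2>&1 &
    pid=$!
    i=0
    while [ "$i" -lt $((deadline * 10)) ]; do
        st=$(awk '/^State:/ { print $2 }' "/proc/$pid/status" 2>/dev/null)
        if [ -z "$st" ] || [ "$st" = Z ]; then
            wait "$pid"                   # finished: collect its status
            return $?
        fi
        sleep 0.1
        i=$((i + 1))
    done
    echo "stat $mnt still blocked after ${deadline}s (pid $pid)" >&2
    return 124
}

# Usage example: probe the root file system with a 2-second deadline.
probe_mount / 2 && echo "/ is responsive"
```

Polling the state instead of calling wait directly is the key design choice: wait would block forever on a stat that is stuck in the D state.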

If you found this helpful, please check out our project, Juicedata/JuiceFS! (0ᴗ0✿)