parallelStream in practice

parallelStream uses multiple threads to speed up operations on collections. Under the hood, it runs the work on a ForkJoinPool thread pool.
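Here is a minimal sketch. The class and method names are illustrative and do not come from the original. It squares and sums ten numbers with parallelStream() and records which threads did the work. On a multi-core machine the recorded names typically include the calling thread plus ForkJoinPool.commonPool-worker threads. The exact names and how many appear vary by JVM and machine.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

public class ParallelStreamBasics {
    // Squares each number in a parallel stream and sums the results,
    // recording (in a thread-safe set) every thread that processed an element.
    static long sumOfSquares(List<Integer> numbers, Set<String> threadNames) {
        return numbers.parallelStream()
                .mapToLong(n -> {
                    threadNames.add(Thread.currentThread().getName());
                    long v = n;
                    return v * v;
                })
                .sum();
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2, 3, 4, 5, 6, 7, 8, 9, 10);
        Set<String> threadNames = ConcurrentHashMap.newKeySet();
        System.out.println(sumOfSquares(numbers, threadNames)); // 385
        // Typically "main" plus names like "ForkJoinPool.commonPool-worker-1"
        System.out.println(threadNames);
    }
}
```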

Is a parallel stream always faster than a sequential stream?

No. When the amount of data is small, code using "parallelStream()" can be slower than the same code using "stream()".

The reason is that parallelStream() always does more work than sequential execution. Dividing the work among multiple threads and then merging or combining the results carries significant overhead. Some per-element operations are very cheap, such as converting short strings to lowercase. For those, the actual work is negligible compared with the cost of splitting the work up.
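The following sketch lowercases a list of short strings both ways. Its class and method names are illustrative. Both versions return the same list, because collect preserves encounter order. The single-shot timings it prints are only a rough illustration and not a reliable benchmark, since there is no JIT warm-up. A harness such as JMH is the usual way to measure this properly.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

public class LowercaseComparison {
    static List<String> lowerSequential(List<String> words) {
        return words.stream().map(String::toLowerCase).collect(Collectors.toList());
    }

    static List<String> lowerParallel(List<String> words) {
        return words.parallelStream().map(String::toLowerCase).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> words = new ArrayList<>();
        for (int i = 0; i < 1_000; i++) {
            words.add("WORD" + i);
        }
        long t0 = System.nanoTime();
        List<String> seq = lowerSequential(words);
        long t1 = System.nanoTime();
        List<String> par = lowerParallel(words);
        long t2 = System.nanoTime();
        // Same result either way; only the cost differs.
        System.out.println(seq.equals(par)); // true
        // Crude single-shot timings, for illustration only.
        System.out.println("sequential: " + (t1 - t0) / 1_000 + " us");
        System.out.println("parallel:   " + (t2 - t1) / 1_000 + " us");
    }
}
```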

Processing data with multiple threads can also carry setup costs, such as initializing the thread pool. These costs can cancel out the benefit of using more threads, especially when little CPU capacity is available at runtime. Parallel performance drops even further if other threads are running background work or if contention is high.
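Given these overheads, one hedged option is to decide per call whether parallelism is worth it. The sketch below switches to parallel only above a size cut-off. PARALLEL_THRESHOLD and its value of 10,000 are assumptions for illustration. Replace them with a value found through measurement on the real workload.

```java
import java.util.Collection;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;
import java.util.stream.Stream;

public class AdaptiveStream {
    // Hypothetical cut-off: below this size, split/merge overhead is assumed
    // to outweigh the gain, so the stream stays sequential.
    static final int PARALLEL_THRESHOLD = 10_000;

    static <T> Stream<T> streamFor(Collection<T> source) {
        Stream<T> s = source.stream();
        return source.size() >= PARALLEL_THRESHOLD ? s.parallel() : s;
    }

    public static void main(String[] args) {
        List<Integer> small = IntStream.range(0, 100).boxed().collect(Collectors.toList());
        List<Integer> large = IntStream.range(0, 100_000).boxed().collect(Collectors.toList());
        System.out.println(streamFor(small).isParallel()); // false
        System.out.println(streamFor(large).isParallel()); // true
        System.out.println(streamFor(large).mapToLong(Integer::longValue).sum()); // 4999950000
    }
}
```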

Thread safety should be carefully considered

Using a non-thread-safe collection leads to high CPU usage

After the following code had been running in the production environment for some time, monitoring showed the service's CPU usage climbing to 100%.

```java
Set<TruckTeamAuth> list = new HashSet<>(); // 1. Variable declaration (a plain HashSet, not thread-safe)
List<STruckDO> sTruckDOList = isTruckService.lambdaQuery()
        .select(STruckDO::getId, STruckDO::getTeamId)
        .isNotNull(STruckDO::getTeamId)
        .in(STruckDO::getTeamId, teamIdList)
        .list();
sTruckDOList.parallelStream().forEach(t -> { // 2. Parallel processing
    if (StrUtil.isNotBlank(t.getId()) && StrUtil.isNotBlank(t.getTeamId())) {
        list.add(TruckTeamAuth.builder().teamId(t.getTeamId()).truckId(t.getId()).build()); // 3. Modify the shared set
    }
});
```

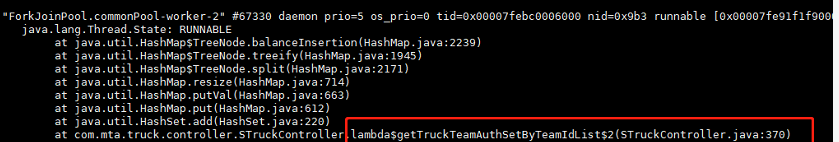

The jstack thread dump showed that threads were contending inside the HashSet while adding to it, and that this contention was consuming the CPU, as shown in the following figure.

Cause: HashSet is not thread-safe. It is backed internally by a HashMap, so adding to it from multiple threads at once leads to contention in the HashMap's red-black tree conversion (treeification) logic.
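Two common fixes can be sketched under a few assumptions. Truck is a hypothetical stand-in for STruckDO. A "teamId:truckId" string stands in for TruckTeamAuth, and a small isNotBlank helper replaces StrUtil.isNotBlank. The first option lets the stream build the set itself with collect(Collectors.toSet()). The second keeps forEach but writes into ConcurrentHashMap.newKeySet(), which is a thread-safe Set.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

public class TruckTeamSetDemo {
    // Hypothetical stand-in for STruckDO: only the two fields the original code reads.
    static final class Truck {
        final String id;
        final String teamId;
        Truck(String id, String teamId) { this.id = id; this.teamId = teamId; }
    }

    // Plain-Java replacement for a StrUtil.isNotBlank-style check.
    static boolean isNotBlank(String s) {
        return s != null && !s.trim().isEmpty();
    }

    // Fix A: the stream builds the set; no shared mutable state.
    // A "teamId:truckId" string stands in for the TruckTeamAuth object.
    static Set<String> collectAuths(List<Truck> trucks) {
        return trucks.parallelStream()
                .filter(t -> isNotBlank(t.id) && isNotBlank(t.teamId))
                .map(t -> t.teamId + ":" + t.id)
                .collect(Collectors.toSet());
    }

    // Fix B: keep forEach, but write into a thread-safe set.
    static Set<String> addAuthsConcurrently(List<Truck> trucks) {
        Set<String> auths = ConcurrentHashMap.newKeySet();
        trucks.parallelStream()
                .filter(t -> isNotBlank(t.id) && isNotBlank(t.teamId))
                .forEach(t -> auths.add(t.teamId + ":" + t.id));
        return auths;
    }

    public static void main(String[] args) {
        List<Truck> trucks = new ArrayList<>();
        for (int i = 0; i < 100_000; i++) {
            trucks.add(new Truck("truck-" + i, "team-" + (i % 50)));
        }
        trucks.add(new Truck(" ", "team-x")); // blank id: filtered out
        System.out.println(collectAuths(trucks).size());         // 100000
        System.out.println(addAuthsConcurrently(trucks).size()); // 100000
    }
}
```

The collect version is usually the better choice, since it needs no shared mutable state at all.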

Null pointer exception

Using a parallel stream to fill a List occasionally throws a NullPointerException, as in the following code:

```java
List<OrderListVO> orderListVOS = new LinkedList<OrderListVO>();
baseOrderBillList.parallelStream().forEach(baseOrderBill -> {
    OrderListVO orderListVO = new OrderListVO();
    // Set properties in order
    orderListVO.setOrderbillgrowthid(baseOrderBill.getOrderbillgrowthid());
    orderListVO.setOrderbillid(baseOrderBill.getOrderbillid());
    // ......
    orderListVOS.add(orderListVO); // called from many threads at once
});
```

The data is split across several tables and assembled in the business layer. Because this assembly is purely CPU-bound, a parallel stream can speed it up. By default, parallelStream runs on the common ForkJoinPool, whose parallelism is the number of CPU cores minus one. The calling thread also takes part in the work.

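Because this is a parallel stream, multiple threads modify the orderListVOS container concurrently, but LinkedList is not thread-safe. Unsynchronized concurrent add calls can leave its internal node links and size inconsistent, which later surfaces as a NullPointerException.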
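A small sketch can check the default pool size on a given machine. The class name is illustrative. It prints the number of available processors next to ForkJoinPool.getCommonPoolParallelism(). The common pool's size can be changed at JVM startup with the java.util.concurrent.ForkJoinPool.common.parallelism system property.

```java
import java.util.concurrent.ForkJoinPool;

public class CommonPoolInfo {
    // Parallelism of the shared common pool that parallel streams use by default.
    static int defaultParallelism() {
        return ForkJoinPool.getCommonPoolParallelism();
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("available processors:    " + cores);
        System.out.println("common pool parallelism: " + defaultParallelism());
        // Non-null only if the JVM was started with, for example,
        // -Djava.util.concurrent.ForkJoinPool.common.parallelism=4
        System.out.println("override property:       "
                + System.getProperty("java.util.concurrent.ForkJoinPool.common.parallelism"));
    }
}
```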

Solutions

1. Use the aggregation operations provided by the Stream API, such as collect, so that the stream builds the result itself. For example:

```java
orderListVOS.parallelStream()
        .sorted(Comparator.comparing(OrderListVO::getCreatetime).reversed())
        .collect(Collectors.toList());
```

2. Use the thread-safe containers provided by java.util.concurrent. Note that these classes achieve thread safety through locks or atomic operations, which adds some overhead under contention.
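The two solutions can be sketched side by side. BaseOrderBill and OrderListVO here are hypothetical, trimmed-down stand-ins that hold only an id and a numeric createtime. The sketch uses comparingLong instead of comparing because the stand-in createtime is a long. The second option uses ConcurrentLinkedQueue as one example of a java.util.concurrent container. It is non-blocking, whereas CopyOnWriteArrayList would copy its whole backing array on every add.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.stream.Collectors;

public class OrderListFixes {
    // Hypothetical, trimmed-down stand-ins for BaseOrderBill and OrderListVO.
    static final class BaseOrderBill {
        final String orderbillid;
        final long createtime;
        BaseOrderBill(String orderbillid, long createtime) {
            this.orderbillid = orderbillid;
            this.createtime = createtime;
        }
    }

    static final class OrderListVO {
        String orderbillid;
        long createtime;
        String getOrderbillid() { return orderbillid; }
        long getCreatetime() { return createtime; }
    }

    static OrderListVO toVO(BaseOrderBill bill) {
        OrderListVO vo = new OrderListVO();
        vo.orderbillid = bill.orderbillid;
        vo.createtime = bill.createtime;
        return vo;
    }

    // Option 1: map + collect. collect() merges per-thread partial results,
    // so no thread ever writes to a shared list.
    static List<OrderListVO> viaCollect(List<BaseOrderBill> bills) {
        return bills.parallelStream()
                .map(OrderListFixes::toVO)
                .sorted(Comparator.comparingLong(OrderListVO::getCreatetime).reversed())
                .collect(Collectors.toList());
    }

    // Option 2: keep forEach, but add into a thread-safe container from
    // java.util.concurrent. The resulting order is arbitrary.
    static Queue<OrderListVO> viaConcurrentQueue(List<BaseOrderBill> bills) {
        Queue<OrderListVO> out = new ConcurrentLinkedQueue<>();
        bills.parallelStream().forEach(bill -> out.add(toVO(bill)));
        return out;
    }

    public static void main(String[] args) {
        List<BaseOrderBill> bills = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            bills.add(new BaseOrderBill("bill-" + i, i));
        }
        List<OrderListVO> sorted = viaCollect(bills);
        System.out.println(sorted.size());                    // 10000
        System.out.println(sorted.get(0).getOrderbillid());   // bill-9999 (newest first)
        System.out.println(viaConcurrentQueue(bills).size()); // 10000
    }
}
```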

Risks of parallelStream

parallelStream's stream-style programming makes multithreaded development much more convenient. However, it also carries an implicit behavior that the interface's Javadoc does not mention:

```java
/**
 * Returns a possibly parallel {@code Stream} with this collection as its
 * source. It is allowable for this method to return a sequential stream.
 *
 * <p>This method should be overridden when the {@link #spliterator()}
 * method cannot return a spliterator that is {@code IMMUTABLE},
 * {@code CONCURRENT}, or <em>late-binding</em>. (See {@link #spliterator()}
 * for details.)
 *
 * @implSpec
 * The default implementation creates a parallel {@code Stream} from the
 * collection's {@code Spliterator}.
 *
 * @return a possibly parallel {@code Stream} over the elements in this
 * collection
 * @since 1.8
 */
```

That is the interface's entire Javadoc. The implicit behavior is that each call to parallelStream does not get its own, independently managed set of threads. Instead, by default the JDK runs every parallel stream on the same ForkJoinPool, the common pool shared by the whole JVM. Calls like list.parallelStream().forEach(...) may appear anywhere in the code, but all of them are executed by that one shared pool. The usual thread-pool problems of contention and queuing therefore apply here too, and they stay hidden behind the code logic.
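The sharing can be observed directly. In this sketch the names are illustrative. Two unrelated calls record their worker threads, and apart from the caller, both report ForkJoinPool.commonPool-worker threads. The second method shows a widely used workaround: start the stream from inside a task submitted to a dedicated ForkJoinPool. In current JDKs, this makes the stream's subtasks run in that pool. It relies on implementation behavior rather than a documented guarantee, so verify it on your own JDK before relying on it.

```java
import java.util.Set;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ForkJoinPool;
import java.util.stream.IntStream;

public class SharedPoolDemo {
    // Runs a parallel stream and records the names of the threads that executed it.
    static Set<String> threadsUsedByParallelStream() {
        Set<String> names = ConcurrentHashMap.newKeySet();
        IntStream.range(0, 10_000).parallel()
                .forEach(i -> names.add(Thread.currentThread().getName()));
        return names;
    }

    // Starts the same parallel stream from inside a task of a dedicated pool.
    // In current JDKs its subtasks then fork into that pool instead of the
    // common pool; this is implementation behavior, not a documented guarantee.
    static Set<String> threadsUsedInDedicatedPool(int parallelism) throws Exception {
        ForkJoinPool pool = new ForkJoinPool(parallelism);
        try {
            Callable<Set<String>> task = SharedPoolDemo::threadsUsedByParallelStream;
            return pool.submit(task).get();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        // Two unrelated call sites: both use ForkJoinPool.commonPool-worker-N threads
        // (plus the calling thread, which also helps with the work).
        System.out.println(threadsUsedByParallelStream());
        System.out.println(threadsUsedByParallelStream());
        // Inside a dedicated pool: names like "ForkJoinPool-1-worker-1" instead.
        System.out.println(threadsUsedInDedicatedPool(2));
    }
}
```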

The most dangerous case is a deadlock in this shared thread pool. Once it happens, every place that calls parallelStream is blocked, whether or not you know that someone else has written such code.

Take this code as an example:

```java
list.parallelStream().forEach(o -> {
    o.doSomething();
    // ...
});
```

If anything inside doSomething() can block the current execution, the whole call can hang. parallelStream performs a join when it finishes, so any iteration that never completes keeps that join waiting, and the calling thread gets stuck too.

Typical culprits are a thread calling wait, locks, spin locks, and external operations (such as network access) that hang.
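For work that can block, such as network calls, one common alternative is to keep it off the shared pool entirely. The sketch below runs each call on a dedicated, bounded executor via CompletableFuture.supplyAsync and waits on each result with a timeout. Several pieces are assumptions for illustration. fetchRemote is a hypothetical placeholder that simulates a slow remote call with Thread.sleep. The pool size of 8 and the 5-second timeout are also illustrative.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

public class BlockingWorkDemo {
    // Hypothetical slow external call; Thread.sleep stands in for network I/O.
    static String fetchRemote(int id) {
        try {
            Thread.sleep(50);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted", e);
        }
        return "result-" + id;
    }

    // Blocking calls run on a dedicated, bounded executor, and each result is
    // awaited with a timeout, so they never occupy the shared common pool.
    static List<String> fetchAll(List<Integer> ids, ExecutorService ioPool) {
        List<CompletableFuture<String>> futures = ids.stream()
                .map(id -> CompletableFuture.supplyAsync(() -> fetchRemote(id), ioPool))
                .collect(Collectors.toList());
        return futures.stream()
                .map(f -> {
                    try {
                        return f.get(5, TimeUnit.SECONDS);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        throw new IllegalStateException("interrupted while waiting", e);
                    } catch (ExecutionException | TimeoutException e) {
                        throw new IllegalStateException("remote call failed or timed out", e);
                    }
                })
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        ExecutorService ioPool = Executors.newFixedThreadPool(8);
        try {
            List<Integer> ids = IntStream.range(0, 20).boxed().collect(Collectors.toList());
            List<String> results = fetchAll(ids, ioPool);
            System.out.println(results.size()); // 20
            System.out.println(results.get(0)); // result-0
        } finally {
            ioPool.shutdown();
        }
    }
}
```

Because the futures are collected into a list first and awaited afterwards, all calls are started before any result is waited on. The results come back in the same order as the input ids.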