Why won't a Kubernetes PV and PVC bind?

A StatefulSet was used to deploy a stateful application, but the pod stayed in the Pending state. Before troubleshooting, here is a short introduction to StatefulSet. In Kubernetes, a Deployment manages stateless applications, while a StatefulSet manages stateful applications such as Redis, MySQL, ZooKeeper and other distributed systems, whose instances usually have to start and stop in a strict order.

I. StatefulSet

A StatefulSet setup consists of three parts:

Headless Service: a Service without a clusterIP that serves as the network identifier of the pods and generates resolvable DNS records

StatefulSet: manages the pod resources

volumeClaimTemplates: provides the storage

II. StatefulSet deployment

NFS is used for network storage. The steps are:

Set up NFS

Configure the shared storage directories

Create the PVs

Write the YAML manifests

Set up NFS

yum install nfs-utils -y

mkdir -p /usr/local/k8s/redis/pv{7..12}  # create the export directories

cat /etc/exports

    /usr/local/k8s/redis/pv7 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv8 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv9 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv10 172.16.0.0/16(rw,sync,no_root_squash)
    /usr/local/k8s/redis/pv11 172.16.0.0/16(rw,sync,no_root_squash)

exportfs -avr
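Typing one export line per directory by hand is error-prone. The sketch below prints the lines instead, so they can be reviewed before being written to /etc/exports. The helper name gen_exports is made up for this illustration; the base path, network and options are the ones used above, and the range 7 to 12 is an assumption based on the directories created with mkdir.

```shell
# Hypothetical helper: print one /etc/exports line per PV directory.
# Arguments: base directory, first index, last index, allowed network (CIDR).
gen_exports() {
  base=$1
  i=$2
  last=$3
  cidr=$4
  while [ "$i" -le "$last" ]; do
    # Same export options as in the article: rw, sync, no_root_squash.
    printf '%s/pv%s %s(rw,sync,no_root_squash)\n' "$base" "$i" "$cidr"
    i=$((i + 1))
  done
}

# Print the lines for pv7 through pv12 (review before writing them anywhere).
gen_exports /usr/local/k8s/redis 7 12 172.16.0.0/16
```

The output can be compared with the existing file, and exportfs -avr still has to be run after any change.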

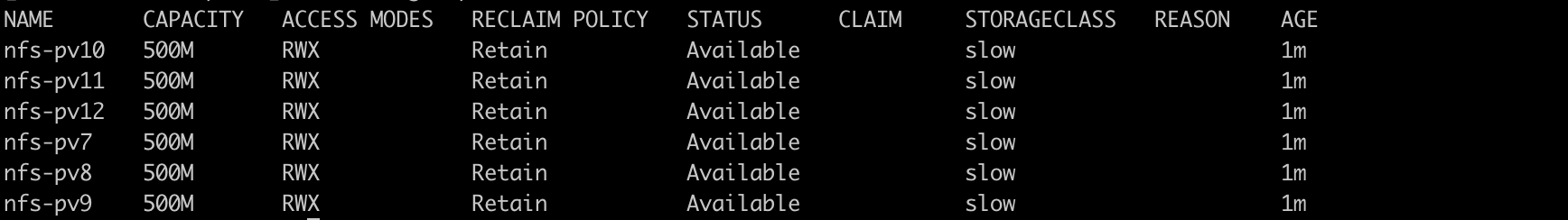

Create pv

cat nfs_pv2.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv7
    spec:
      capacity:
        storage: 500M
      accessModes:
      - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      storageClassName: slow
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv7"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv8
    spec:
      capacity:
        storage: 500M
      accessModes:
      - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv8"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv9
    spec:
      capacity:
        storage: 500M
      accessModes:
      - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv9"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv10
    spec:
      capacity:
        storage: 500M
      accessModes:
      - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv10"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv11
    spec:
      capacity:
        storage: 500M
      accessModes:
      - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv11"
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv12
    spec:
      capacity:
        storage: 500M
      accessModes:
      - ReadWriteMany
      storageClassName: slow
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 172.16.0.59
        path: "/usr/local/k8s/redis/pv12"

kubectl apply -f nfs_pv2.yaml

Verify that the PVs were created successfully

Write the YAML manifest for the application

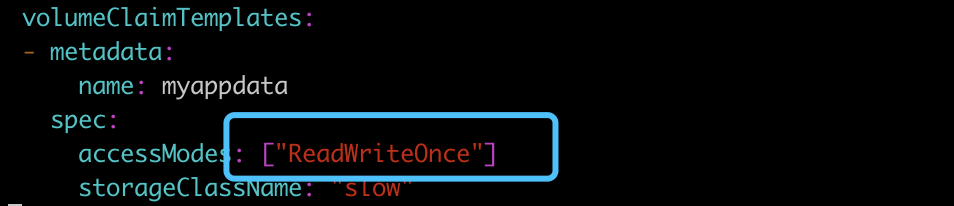

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: myapp-pod
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: myapp
    spec:
      serviceName: myapp
      replicas: 3
      selector:
        matchLabels:
          app: myapp-pod
      template:
        metadata:
          labels:
            app: myapp-pod
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            resources:
              requests:
                cpu: "500m"
                memory: "500Mi"
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: myappdata
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: myappdata
        spec:
          accessModes: ["ReadWriteOnce"]
          storageClassName: "slow"
          resources:
            requests:
              storage: 400Mi

kubectl create -f new-stateful.yaml

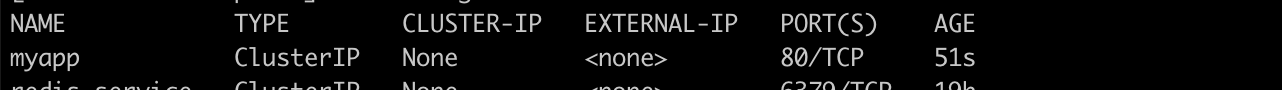

Check that the headless Service was created successfully

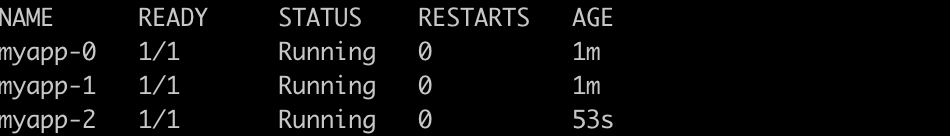

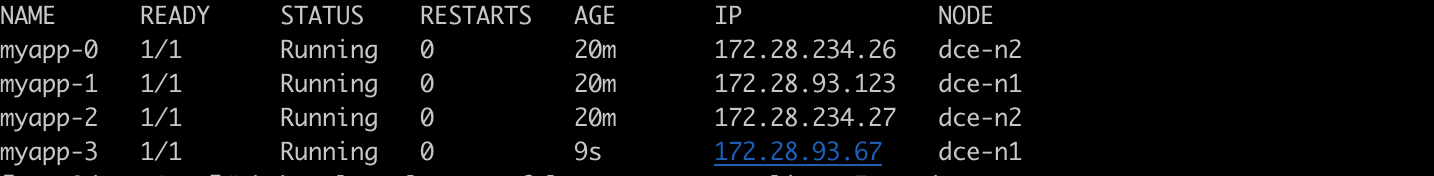

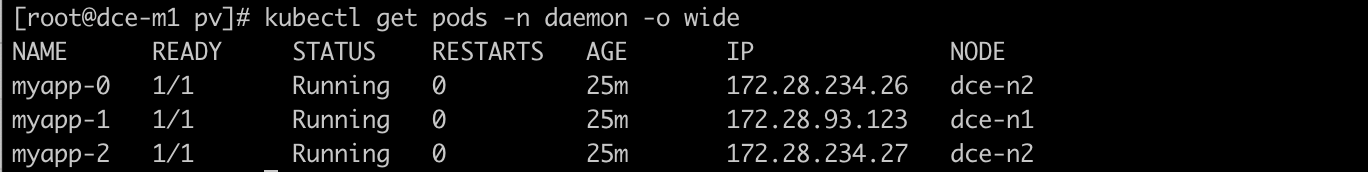

Check whether the pod was created successfully

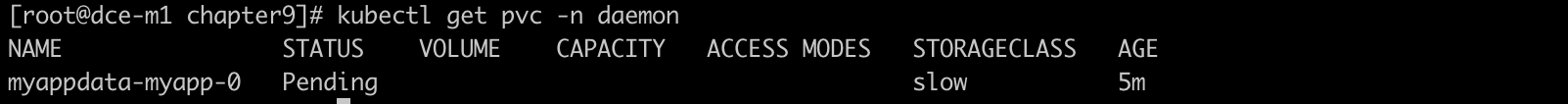

Check whether the pvc was created successfully

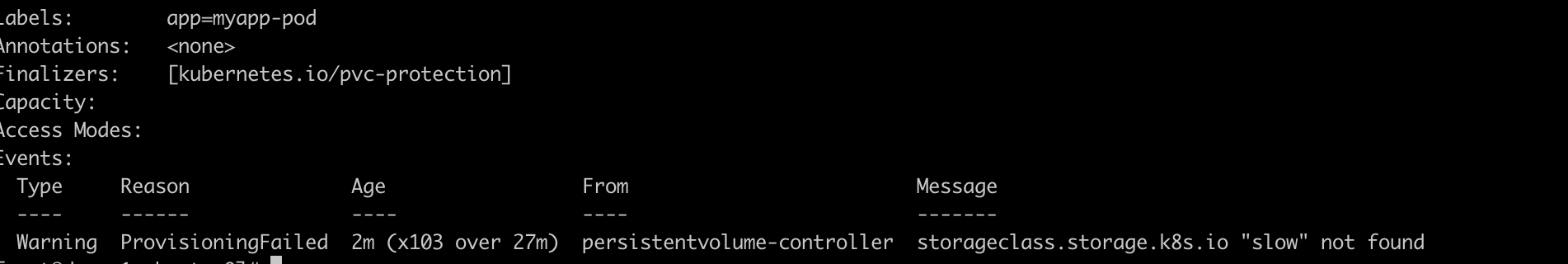

The pod failed to start because it depends on the PVC. The PVC events reported that no matching volume could be found, even though everything had clearly been defined.

Looking at what links the two objects, both sides carry the following attribute:

storageClassName: "slow"

III. StatefulSet troubleshooting

The PVC could not be bound, so the pod could not start properly. Re-checking the YAML file several times turned up nothing.

Line of thinking: a PVC is matched to a PV through storageClassName. The PVs had been created successfully and also carried storageClassName: slow, yet no matching PV was found.


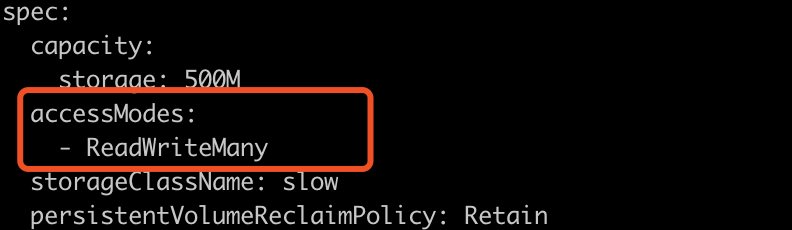

Next, check whether the access modes of the PV and the PVC are consistent.

Access modes set on the PV:

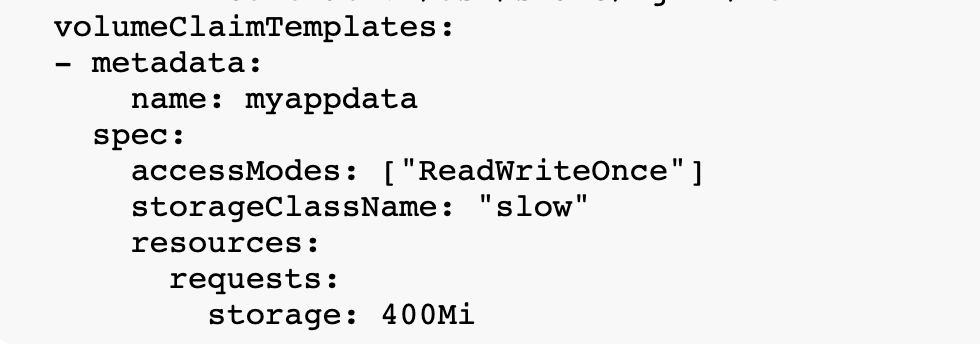

volumeClaimTemplates: access modes declared for the PVC

The access modes on the two sides do not match: the PVs offer ReadWriteMany, while the claim template requests ReadWriteOnce.
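The mismatch can be reproduced without a cluster. The sketch below is a simplified model of the matching rule, under the assumption that only storageClassName and access modes matter; the real controller also compares capacity, selectors and volume mode. can_bind is a hypothetical helper, not a kubectl feature.

```shell
# Hypothetical helper: simplified PV/PVC matching.
# A claim can only bind to a volume when the storage class is identical and
# every access mode requested by the claim is offered by the volume.
# Arguments: PV class, PV modes (space separated), PVC class, PVC modes.
can_bind() {
  pv_class=$1
  pv_modes=$2
  pvc_class=$3
  pvc_modes=$4
  [ "$pv_class" = "$pvc_class" ] || return 1
  for mode in $pvc_modes; do
    case " $pv_modes " in
      *" $mode "*) ;;        # requested mode is offered by the PV
      *) return 1 ;;         # requested mode is missing, no binding
    esac
  done
  return 0
}

# Values from the article: PV offers ReadWriteMany, the template asked for ReadWriteOnce.
if can_bind slow "ReadWriteMany" slow "ReadWriteOnce"; then echo "bind"; else echo "no match"; fi
# prints "no match"

# After changing the template to ReadWriteMany:
if can_bind slow "ReadWriteMany" slow "ReadWriteMany"; then echo "bind"; else echo "no match"; fi
# prints "bind"
```

On a live cluster the inputs can be read from the objects themselves, for example with kubectl get pv nfs-pv7 -o jsonpath='{.spec.accessModes}'.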

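Fix

Delete the PVC: kubectl delete pvc myappdata-myapp-0 -n daemon

Delete the resources created from the YAML file: kubectl delete -f new-stateful.yaml -n daemon

Change the claim template to accessModes: ["ReadWriteMany"]

Re-create the resources and check again

Tip: pay attention to the access modes set on the PV and the PVC. The PV must offer the access modes that the PVC requests.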

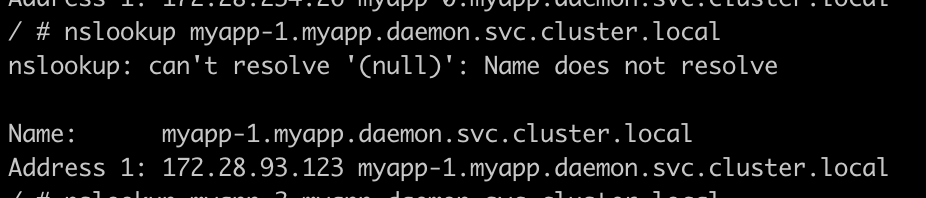

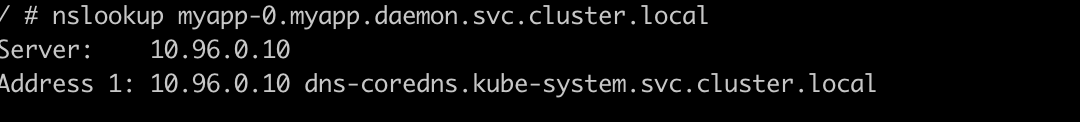

IV. StatefulSet test: domain name resolution

kubectl exec -it myapp-0 -n daemon -- sh

nslookup myapp-0.myapp.daemon.svc.cluster.local

The resolution rule is as follows

Pod name: myapp-0, headless Service name: myapp, namespace: daemon

FQDN: $(pod name).$(headless service name).$(namespace).svc.cluster.local
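The rule can be written as a small function to build the names to test. This is only an illustration: pod_fqdn is a made-up helper, and cluster.local is assumed as the cluster domain unless a fourth argument overrides it.

```shell
# Hypothetical helper: build the DNS name of a StatefulSet pod.
# Arguments: pod name, headless Service name, namespace, cluster domain (optional).
pod_fqdn() {
  printf '%s.%s.%s.svc.%s\n' "$1" "$2" "$3" "${4:-cluster.local}"
}

# Names for the three replicas of the article's StatefulSet.
for ordinal in 0 1 2; do
  pod_fqdn "myapp-$ordinal" myapp daemon
done
```

Each printed name can then be passed to nslookup from inside a pod in the cluster.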

If nslookup is not available in the container, the corresponding package needs to be installed. A busybox pod can provide the same functionality.

A YAML file for such a pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
      namespace: daemon
    spec:
      containers:
      - name: busybox
        image: busybox:1.28.4
        command:
        - sleep
        - "7600"
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
        imagePullPolicy: IfNotPresent
      restartPolicy: Never

V. Scaling a StatefulSet

Scaling up:

Scaling up a StatefulSet is similar to scaling a Deployment: change the number of replicas. The scale-up process mirrors the creation process, and the ordinal index in the pod name increases in turn.

You can use kubectl scale or kubectl patch.

In practice: kubectl scale statefulset myapp --replicas=4 -n daemon
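To see what a scale-up will ask of the storage side, the sketch below lists the pods that would be added and the claim each one needs. It assumes the usual naming pattern seen earlier in this article (claim myappdata-myapp-0 for pod myapp-0); scale_up_plan is a hypothetical helper that only prints names and does not talk to the cluster.

```shell
# Hypothetical helper: list the pods added by a scale-up and the PVC each one needs.
# Arguments: StatefulSet name, claim template name, current replicas, target replicas.
scale_up_plan() {
  sts=$1
  template=$2
  i=$3
  target=$4
  while [ "$i" -lt "$target" ]; do
    # New pods get the next ordinal; the claim is <template>-<statefulset>-<ordinal>.
    printf 'pod %s-%s needs pvc %s-%s-%s\n' "$sts" "$i" "$template" "$sts" "$i"
    i=$((i + 1))
  done
}

scale_up_plan myapp myappdata 3 4
# prints "pod myapp-3 needs pvc myappdata-myapp-3"
```

With statically created NFS volumes, every line printed here needs one free PV that matches the claim.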

Scaling down:

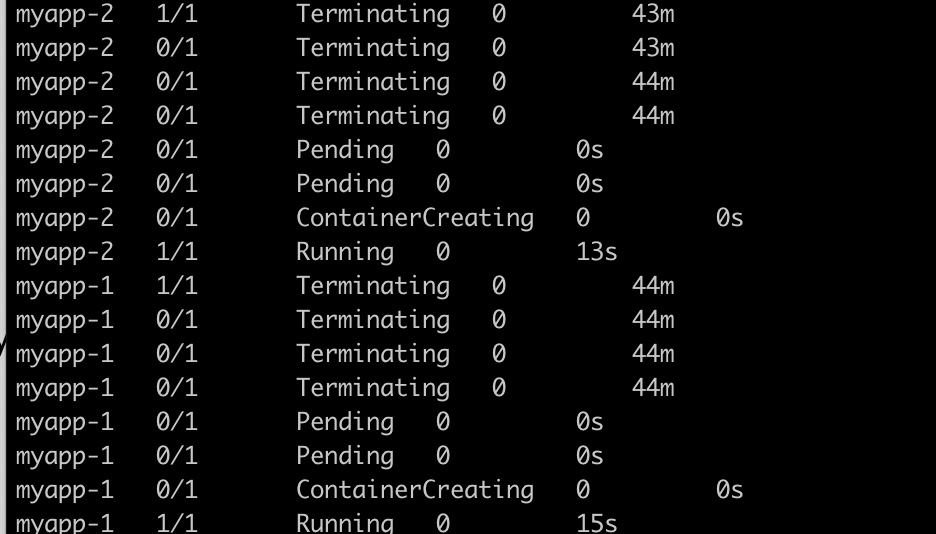

To scale down, simply reduce the number of pod replicas.

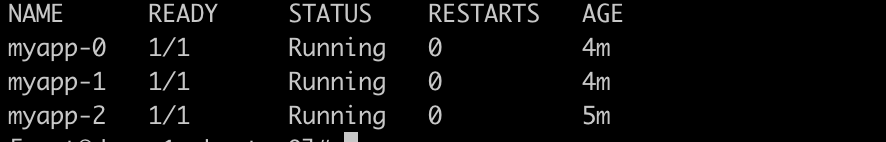

kubectl patch statefulset myapp -p '{"spec":{"replicas":3}}' -n daemon

Tip: scaling up requires a new PV-to-PVC binding for every added pod. Because NFS with pre-created PVs is used for persistent storage here, the number of PVs created in advance limits how far the StatefulSet can scale.

VI. Rolling update of a StatefulSet

Update approaches:

Rolling update

Canary release

Rolling update:

A rolling update starts with the pod that has the highest ordinal index, terminates it, waits for its replacement to start, and then moves on to the next pod. RollingUpdate is the default update strategy of a StatefulSet.
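The update order can be sketched as a countdown over the ordinals. rolling_order is a hypothetical helper written for this illustration. Its optional third argument models the partition setting of the RollingUpdate strategy, which is commonly used for canary releases: only pods whose ordinal is greater than or equal to the partition are updated. Treat that part as an assumption about how the canary release mentioned above would be done.

```shell
# Hypothetical helper: print the order in which a rolling update replaces pods.
# Arguments: StatefulSet name, replica count, partition (optional, default 0).
rolling_order() {
  sts=$1
  replicas=$2
  partition=${3:-0}
  i=$((replicas - 1))
  # Highest ordinal first; stop once the ordinal drops below the partition.
  while [ "$i" -ge "$partition" ]; do
    printf '%s-%s\n' "$sts" "$i"
    i=$((i - 1))
  done
}

rolling_order myapp 3      # prints myapp-2, myapp-1, myapp-0 (one per line)
rolling_order myapp 3 2    # prints only myapp-2 (canary-style update)
```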

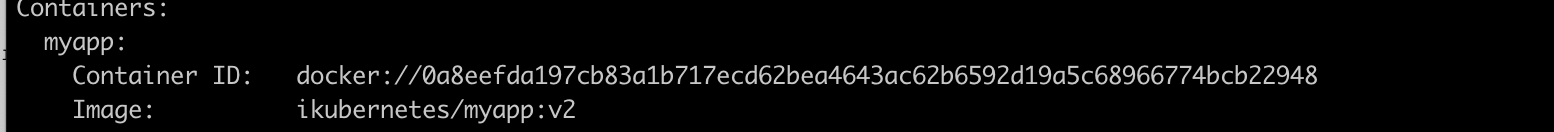

kubectl set image statefulset/myapp myapp=ikubernetes/myapp:v2 -n daemon

Upgrade process

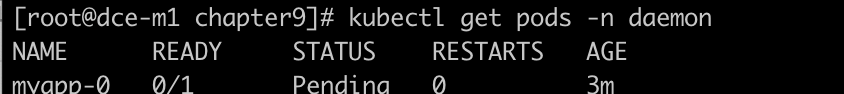

View pod status

kubectl get pods -n daemon

See if the image is updated after the upgrade

kubectl describe pod myapp-0 -n daemon