Chapter 4 RocketMQ Applications

1, Ordinary messages

1 Message sending modes

A Producer can send messages in several ways, and each way has different effects on the system.

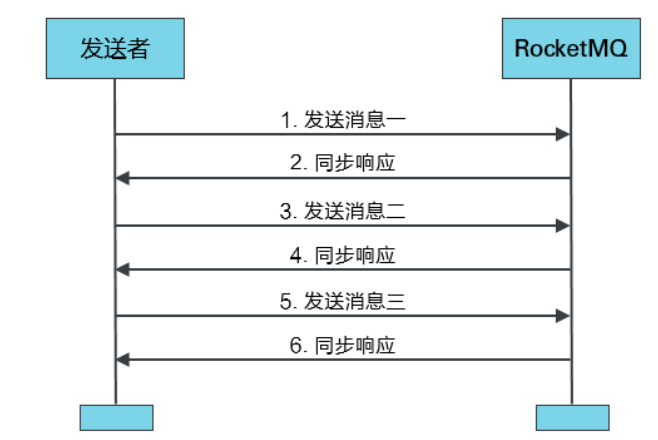

Send messages synchronously

Sending a message synchronously means that after the Producer sends a message, it sends the next message only after receiving the ACK returned by MQ. This method offers the highest message reliability, but the lowest sending efficiency.

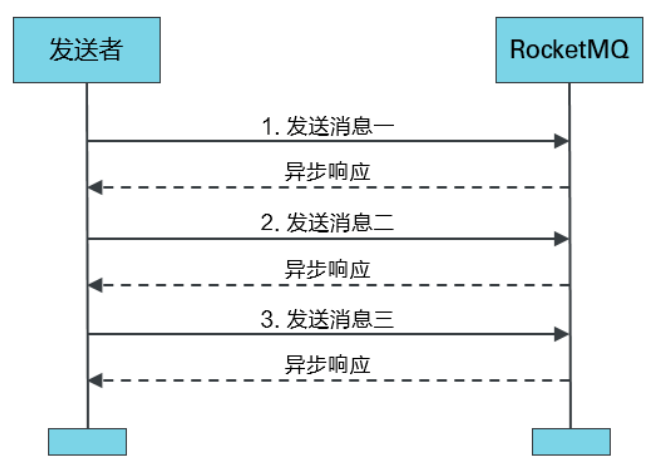

Send messages asynchronously

Sending a message asynchronously means that after sending a message, the Producer sends the next message right away without waiting for MQ's ACK; the ACK is handled later in a callback. Reliability is still guaranteed, because the callback reports whether each send succeeded, and sending efficiency improves.

Send messages one-way

In one-way sending, the Producer only sends messages and neither waits for nor processes an ACK from MQ; MQ does not return an ACK for messages sent this way. This method has the highest sending efficiency, but poor reliability.

2 Code examples

Create project

Create a Maven Java project named rocketmq-test.

Import dependency

Add the RocketMQ client dependency to the pom.xml file.

<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
</properties>
<dependencies>
    <dependency>
        <groupId>org.apache.rocketmq</groupId>
        <artifactId>rocketmq-client</artifactId>
        <version>4.8.0</version>
    </dependency>
</dependencies>

Define a synchronous message producer

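import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;

public class SyncProducer {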

public static void main(String[] args) throws Exception {

// Create a producer. The parameter is the Producer Group name

DefaultMQProducer producer = new DefaultMQProducer("pg");

// Specify the nameServer address

producer.setNamesrvAddr("rocketmqOS:9876");

// Set the number of times to retry sending when sending fails. The default is 2 times

producer.setRetryTimesWhenSendFailed( 3 );

// Set the sending timeout to 5s, and the default is 3s

producer.setSendMsgTimeout( 5000 );

// Turn on producer

producer.start();

// Produce and send 100 messages

for (int i = 0 ; i < 100 ; i++) {

byte[] body = ("Hi," + i).getBytes();

Message msg = new Message("someTopic", "someTag", body);

// Specify a key for the message

msg.setKeys("key-" + i);

// send message

SendResult sendResult = producer.send(msg);

System.out.println(sendResult);

}

// Close producer

producer.shutdown();

}

}

// Status of a send (org.apache.rocketmq.client.producer.SendStatus, shown for reference)
public enum SendStatus {
SEND_OK, // Sent successfully
FLUSH_DISK_TIMEOUT, // Flush to disk timed out. Can occur only when the Broker's flush policy is synchronous flush (SYNC_FLUSH); never with asynchronous flush
FLUSH_SLAVE_TIMEOUT, // Synchronization to the Slave timed out. Can occur only when the master-slave replication mode is synchronous (SYNC_MASTER); never with asynchronous replication
SLAVE_NOT_AVAILABLE, // No Slave is available. Can occur only when the Broker cluster runs master-slave with synchronous replication; never with asynchronous replication
}
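The three non-OK statuses mean the message reached the Broker, but the synchronous flush or synchronous replication could not be confirmed. A minimal sketch of how a caller might react, assuming a hypothetical policy of resending only when the business cannot tolerate message loss. `SendStatusMirror` is a local stand-in for the client's `SendStatus` so the snippet compiles without RocketMQ, and `shouldResend` is an illustrative name, not a RocketMQ API.

```java
import java.util.EnumSet;
import java.util.Set;

// Local stand-in for org.apache.rocketmq.client.producer.SendStatus.
enum SendStatusMirror { SEND_OK, FLUSH_DISK_TIMEOUT, FLUSH_SLAVE_TIMEOUT, SLAVE_NOT_AVAILABLE }

class SendStatusPolicy {

    // Statuses where the message reached the Broker but the synchronous
    // flush or the synchronous replication was not confirmed.
    private static final Set<SendStatusMirror> NOT_CONFIRMED = EnumSet.of(
            SendStatusMirror.FLUSH_DISK_TIMEOUT,
            SendStatusMirror.FLUSH_SLAVE_TIMEOUT,
            SendStatusMirror.SLAVE_NOT_AVAILABLE);

    // Hypothetical policy: resend only if losing the message is unacceptable.
    // A resend may create a duplicate, so consumers must be idempotent.
    static boolean shouldResend(SendStatusMirror status, boolean lossTolerant) {
        return !lossTolerant && NOT_CONFIRMED.contains(status);
    }

    public static void main(String[] args) {
        for (SendStatusMirror status : SendStatusMirror.values()) {
            System.out.println(status + " -> resend: " + shouldResend(status, false));
        }
    }
}
```

Treating resends as possible duplicates is the reason the consumer side has to be idempotent.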

Define an asynchronous message producer

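import java.util.concurrent.TimeUnit;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendCallback;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;

public class AsyncProducer {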

public static void main(String[] args) throws Exception {

DefaultMQProducer producer = new DefaultMQProducer("pg");

producer.setNamesrvAddr("rocketmqOS:9876");

// Specifies that no retry sending will be performed after asynchronous sending fails

producer.setRetryTimesWhenSendAsyncFailed( 0 );

// Specify that the number of queues for newly created topics is 2, and the default is 4

producer.setDefaultTopicQueueNums( 2 );

producer.start();

for (int i = 0 ; i < 100 ; i++) {

byte[] body = ("Hi," + i).getBytes();

try {

Message msg = new Message("myTopicA", "myTag", body);

// Send asynchronously. Specify callback

producer.send(msg, new SendCallback() {

// When the producer receives the ACK sent by MQ, it will trigger the execution of the callback method

@Override

public void onSuccess(SendResult sendResult) {

System.out.println(sendResult);

}

@Override

public void onException(Throwable e) {

e.printStackTrace();

}

});

} catch (Exception e) {

e.printStackTrace();

}

} // end-for

// Sleep for a while: because sending is asynchronous, without this pause the
// producer could be shut down before all messages are sent, which causes errors

TimeUnit.SECONDS.sleep( 3 );

producer.shutdown();

}

}

Define a one-way message producer

import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.common.message.Message;

public class OnewayProducer {
    public static void main(String[] args) throws Exception {
        DefaultMQProducer producer = new DefaultMQProducer("pg");
        producer.setNamesrvAddr("rocketmqOS:9876");
        producer.start();
        for (int i = 0; i < 10; i++) {
            byte[] body = ("Hi," + i).getBytes();
            Message msg = new Message("single", "someTag", body);
            // One-way send: no ACK is returned, so there is no SendResult
            producer.sendOneway(msg);
        }
        producer.shutdown();
        System.out.println("producer shutdown");
    }
}

Define a message consumer

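import java.util.List;
import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
import org.apache.rocketmq.common.message.MessageExt;

public class SomeConsumer {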

public static void main(String[] args) throws MQClientException {

// Define a pull consumer

// DefaultLitePullConsumer consumer = new DefaultLitePullConsumer("cg");

// Define a push consumer

DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("cg");

// Specify nameServer

consumer.setNamesrvAddr("rocketmqOS:9876");

// For a new consumer group, start from the earliest stored message (ignored once the group has a saved offset)

consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);

// Specify consumption topic and tag

consumer.subscribe("someTopic", "*");

// Specify "broadcast mode" for consumption, and the default is "cluster mode"

// consumer.setMessageModel(MessageModel.BROADCASTING);

// Register message listener

consumer.registerMessageListener(new MessageListenerConcurrently() {

// Called whenever messages matching the subscription are pulled from the broker;
// the return value reports the consumption status of this batch

@Override

public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> msgs,

ConsumeConcurrentlyContext context) {

// Consume the messages one by one

for (MessageExt msg : msgs) {

System.out.println(msg);

}

// Return the consumption status: consumed successfully

return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;

}

});

// Start the consumer

consumer.start();

System.out.println("Consumer Started");

}

}

2, Sequential messages

1 What are sequential messages

A sequential message is one that is consumed in strictly the same order in which it was sent (FIFO).

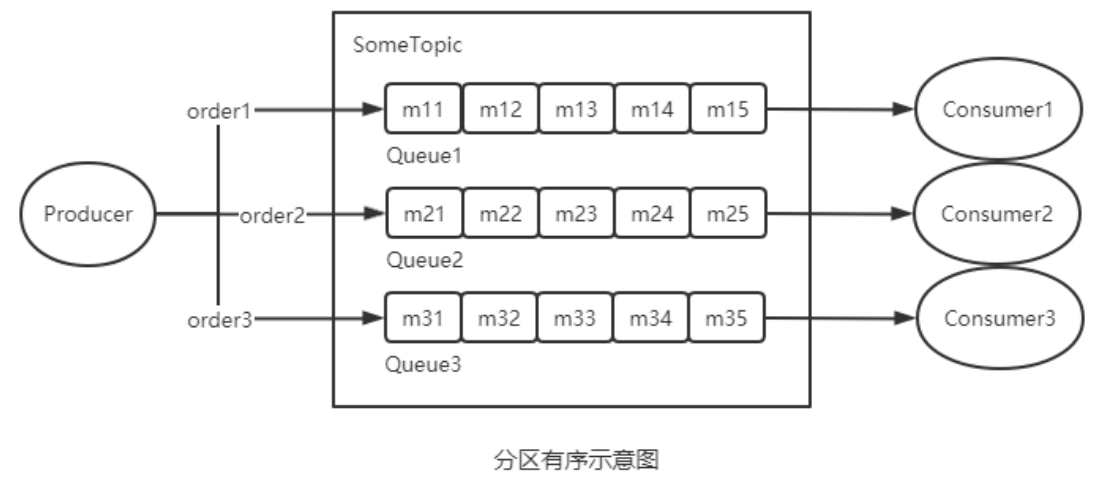

By default, the producer distributes messages across the Topic's partition queues (Queues) in round-robin fashion, and a consumer pulls messages from multiple queues. In this case, the consumption order cannot be guaranteed to match the sending order. If messages are sent only to one Queue and consumed only from that Queue, their order is strictly guaranteed.
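To see why round-robin placement breaks ordering, here is a small simulation with plain Java collections (no RocketMQ involved). It assumes four queues filled starting from queue 0 for simplicity, and a consumer that happens to pull the queues in a different order than they were filled; the class and method names are illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class RoundRobinDemo {

    // Places message i into queue i % queueCount, as round-robin selection does.
    static List<List<String>> roundRobin(List<String> messages, int queueCount) {
        List<List<String>> queues = new ArrayList<>();
        for (int q = 0; q < queueCount; q++) {
            queues.add(new ArrayList<>());
        }
        for (int i = 0; i < messages.size(); i++) {
            queues.get(i % queueCount).add(messages.get(i));
        }
        return queues;
    }

    // The sequence a consumer sees if it drains the queues in pullOrder.
    static List<String> drain(List<List<String>> queues, int[] pullOrder) {
        List<String> seen = new ArrayList<>();
        for (int q : pullOrder) {
            seen.addAll(queues.get(q));
        }
        return seen;
    }

    public static void main(String[] args) {
        List<String> sent = Arrays.asList("unpaid", "paid", "shipping", "shipping failed");
        // Four queues pulled in an arbitrary order: the statuses come out scrambled.
        System.out.println(drain(roundRobin(sent, 4), new int[] {3, 0, 2, 1}));
        // One queue: the consumer sees exactly the sending order.
        System.out.println(drain(roundRobin(sent, 1), new int[] {0}));
    }
}
```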

2 Why sequential messages are needed

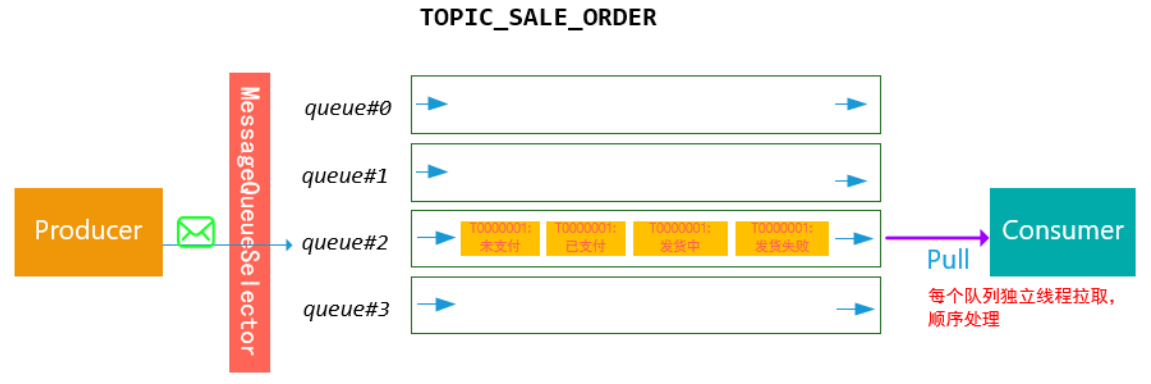

For example, consider a Topic order_status (order status) with four Queues. Different messages in this Topic describe different statuses of an order. Assume an order can be unpaid, paid, shipping, shipped successfully, or shipping failed.

Based on these statuses, the producer may generate the following messages over time:

Order t00000001: unpaid --> Order t00000001: paid --> Order t00000001: shipping --> Order t00000001: shipping failed

After these messages are sent to MQ, if Queues are selected round-robin, the four messages may end up stored in different Queues.

In this case, we want the Consumer to consume the messages in the same order they were sent. However, with the delivery and consumption behavior of MQ described above, that order cannot be guaranteed. If messages arrive out of order, even a Consumer with some state fault tolerance cannot handle all the possible random combinations.

Based on this, the following scheme can be designed: use some strategy to place messages with the same order number in the same queue, and have consumers process each queue in order (for example, one thread handles one queue exclusively, so messages are processed in order). This guarantees the order of consumption.
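The scheme can be sketched with plain `java.util.concurrent`: each order ID is mapped to a fixed queue, and each queue is handled by its own single-threaded executor, so messages of one order are processed in sending order while different queues run in parallel. This is a simulation of the idea, not RocketMQ code (in the real client, `MessageListenerOrderly` provides per-queue ordered consumption); `Msg` and `process` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class PerQueueOrderedConsumer {

    static final class Msg {
        final String orderId;
        final String status;
        Msg(String orderId, String status) { this.orderId = orderId; this.status = status; }
    }

    // Routes each message to queue floorMod(orderId.hashCode(), queueCount) and lets one
    // single-threaded worker per queue process it. Returns the statuses per order,
    // in the order they were processed.
    static Map<String, List<String>> process(List<Msg> messages, int queueCount) {
        List<ExecutorService> workers = new ArrayList<>();
        for (int q = 0; q < queueCount; q++) {
            // A single-thread executor runs its tasks one at a time, in submission order.
            workers.add(Executors.newSingleThreadExecutor());
        }
        Map<String, List<String>> handled = new ConcurrentHashMap<>();
        for (Msg m : messages) {
            int queueId = Math.floorMod(m.orderId.hashCode(), queueCount);
            workers.get(queueId).submit(() -> {
                handled.computeIfAbsent(m.orderId, k -> Collections.synchronizedList(new ArrayList<String>()))
                       .add(m.status);
            });
        }
        for (ExecutorService worker : workers) {
            worker.shutdown();
            try {
                worker.awaitTermination(10, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return handled;
    }

    public static void main(String[] args) {
        List<Msg> sent = Arrays.asList(
                new Msg("T1", "unpaid"), new Msg("T2", "unpaid"), new Msg("T1", "paid"),
                new Msg("T2", "paid"), new Msg("T1", "shipping"));
        System.out.println(process(sent, 4));
    }
}
```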

3 Types of ordering

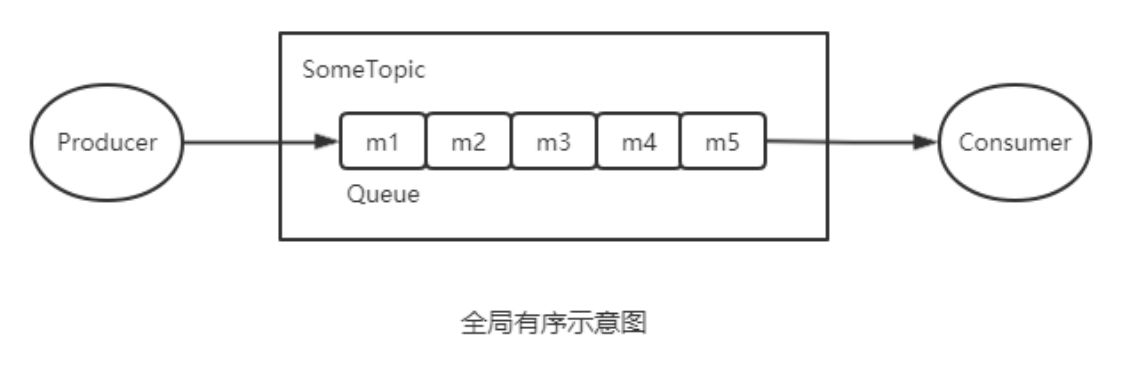

Depending on the scope of ordering, RocketMQ can strictly guarantee two kinds of message order: partition order and global order.

Global order

When a Topic has only one Queue for both sending and consuming, the guaranteed order covers all messages in the Topic; this is called global order.

For global order, the Topic is given a single Queue when it is created. There are three ways to specify the number of queues:

1) When creating a Producer in code, specify the number of queues for the Topics it creates automatically (for example with setDefaultTopicQueueNums)

2) Specify the number of queues when manually creating topics in the RocketMQ visual console

3) Specify the number of queues when manually creating a Topic using the mqadmin command

Partition order

When multiple Queues are involved, order can be guaranteed only within each Queue partition; this is called partition order.

How is the Queue chosen? When sending a message, the Producer can be given a message queue selector, which we define by implementing the MessageQueueSelector interface ourselves.

The selection algorithm usually relies on a selection key, which may be the message key or other data. Whichever is used, the selection key must uniquely identify the unit whose messages have to stay in order (for example, one order), so that all of that unit's messages map to the same Queue.

A common selection algorithm takes the selection key (or its hash value) modulo the number of Queues in the Topic; the result is the QueueId of the selected Queue.
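A minimal sketch of this modulo selection as a plain function, assuming a String selection key such as an order number. In a real producer the same arithmetic would sit inside a `MessageQueueSelector.select(...)` implementation that returns `mqs.get(queueId)`; the OrderedProducer in the code example of this section uses `id % mqs.size()`, which is safe there only because its ids are non-negative. `ModuloQueueSelection` and `selectQueueId` are illustrative names.

```java
class ModuloQueueSelection {

    // Same key -> same queueId. Math.floorMod keeps the result in [0, queueCount)
    // even when hashCode() is negative, which a plain % would not.
    static int selectQueueId(String selectionKey, int queueCount) {
        return Math.floorMod(selectionKey.hashCode(), queueCount);
    }

    public static void main(String[] args) {
        int queueCount = 4;
        for (String key : new String[] {"T00000001", "T00000002", "T00000003"}) {
            System.out.println(key + " -> queueId " + selectQueueId(key, queueCount));
        }
    }
}
```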

The modulo algorithm has a problem: different selection keys can give the same result modulo the number of queues, so messages with different selection keys may land in the same Queue, and the same Consumer may then consume messages with different selection keys. How can this be solved? A common method is to read the selection key from each message and check it: if the current Consumer is responsible for the message, it consumes it; otherwise it does nothing. This requires that the Consumer can obtain the selection key together with the message, so the message key is a good choice for the selection key.
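The consumer-side check can be sketched as follows, assuming the selection key travels as the message key (in the RocketMQ client it would be read with `msg.getKeys()`). Each consumer group owns a set of keys and skips the rest; `KeyFilteringConsumer`, `Msg` and `consume` are hypothetical names, and the Queue is modelled as a plain list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Set;

class KeyFilteringConsumer {

    // A message as seen by the consumer: the selection key is carried as the message key.
    static final class Msg {
        final String key;
        final String body;
        Msg(String key, String body) { this.key = key; this.body = body; }
    }

    private final Set<String> ownedKeys;

    KeyFilteringConsumer(Set<String> ownedKeys) {
        this.ownedKeys = ownedKeys;
    }

    // Consumes one Queue's messages in order and returns the bodies it processed.
    List<String> consume(List<Msg> queue) {
        List<String> processed = new ArrayList<>();
        for (Msg m : queue) {
            if (ownedKeys.contains(m.key)) {
                processed.add(m.body);   // business logic would run here
            }
            // Otherwise skip: another consumer group is responsible for this key.
        }
        return processed;
    }

    public static void main(String[] args) {
        // Keys "A" and "B" happened to map to the same Queue.
        List<Msg> queue = Arrays.asList(new Msg("A", "A-1"), new Msg("B", "B-1"), new Msg("A", "A-2"));
        // Each group receives the whole Queue independently and keeps only its own key.
        System.out.println(new KeyFilteringConsumer(Collections.singleton("A")).consume(queue));
        System.out.println(new KeyFilteringConsumer(Collections.singleton("B")).consume(queue));
    }
}
```

Modelling each group as seeing the whole Queue reflects the point made next: consumption in different Groups is isolated.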

Does this approach create a new problem? If a Consumer pulls a message that does not belong to it, can the Consumer that should consume it still do so? Messages in one Queue cannot be consumed by different consumers of the same Group at the same time, so the consumers that handle different selection keys in one Queue must belong to different Groups. Consumption in different Groups is isolated, so the Groups do not affect each other.

4 Code example

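import java.util.List;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.MessageQueueSelector;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.common.message.MessageQueue;

public class OrderedProducer {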

public static void main(String[] args) throws Exception {

DefaultMQProducer producer = new DefaultMQProducer("pg");

producer.setNamesrvAddr("rocketmqOS:9876");

producer.start();

for(int i=0; i<100; i++){

Integer orderId = i;

byte[] body = ("Hi," + i).getBytes();

Message msg = new Message("TopicA", "TagA", body);

SendResult sendResult = producer.send(msg, new MessageQueueSelector() {

@Override

public MessageQueue select(List<MessageQueue> mqs,Message msg, Object arg) {

// arg is the orderId passed as the last argument of send(msg, selector, arg)
Integer id = (Integer) arg;

int index = id % mqs.size();

return mqs.get(index);

}

}, orderId);

System.out.println(sendResult);

}

producer.shutdown();

}

}

3, Delayed messages

1 What is a delayed message

A message that, after being written to the Broker, can be consumed only after a specified delay is called a delayed message.

RocketMQ's delayed messages can implement scheduled tasks without a separate timer. Typical scenarios are closing unpaid orders after a timeout in e-commerce transactions, and canceling unpaid ticket reservations after a timeout on the 12306 railway ticketing platform.

On an e-commerce platform, a delayed message is sent when an order is created and is delivered to the backend business system (Consumer) 30 minutes later. On receiving it, the backend checks whether the order has been paid. If not, it cancels the order and returns the goods to inventory; if payment is complete, it ignores the message.

On the 12306 platform, a delayed message is sent after a ticket is reserved successfully and is delivered to the backend business system (Consumer) 45 minutes later. On receiving it, the backend checks whether the order has been paid. If not, it cancels the reservation and returns the ticket to the ticket pool; if payment is complete, it ignores the message.

2 Delay levels

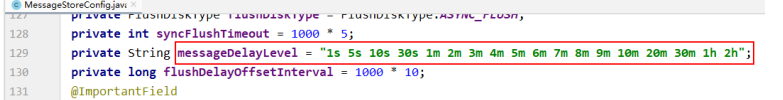

Delayed messages do not support arbitrary delays; the delay is selected through a fixed delay level. The levels are defined by the messageDelayLevel field of the MessageStoreConfig class on the RocketMQ server, whose default value is "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h".

For example, delay level 3 means a delay of 10s, because levels are counted from 1.

To customize the delay levels, add a setting like the following to the configuration file loaded by the Broker (here a 1d level, one day, is appended). The configuration files are in the conf directory of the RocketMQ installation.

messageDelayLevel = 1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h 1d
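The level string is a space-separated list in which each entry is a number followed by a unit, and level n is the nth entry. A minimal parser, assuming only the s, m, h and d units used above; it illustrates the mapping and is not the Broker's own parsing code. `DelayLevels`, `parse` and `delayMillis` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

class DelayLevels {

    static final String DEFAULT_LEVELS = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h";

    // Parses entries such as "10s" or "1d" into milliseconds, in level order.
    static List<Long> parse(String messageDelayLevel) {
        List<Long> delays = new ArrayList<>();
        for (String token : messageDelayLevel.trim().split("\\s+")) {
            long amount = Long.parseLong(token.substring(0, token.length() - 1));
            char unit = token.charAt(token.length() - 1);
            long unitMillis;
            switch (unit) {
                case 's': unitMillis = 1_000L; break;
                case 'm': unitMillis = 60_000L; break;
                case 'h': unitMillis = 3_600_000L; break;
                case 'd': unitMillis = 86_400_000L; break;
                default: throw new IllegalArgumentException("Unknown unit in: " + token);
            }
            delays.add(amount * unitMillis);
        }
        return delays;
    }

    // Levels are counted from 1, so level n is element n - 1.
    static long delayMillis(List<Long> delays, int level) {
        if (level < 1 || level > delays.size()) {
            throw new IllegalArgumentException("No such delay level: " + level);
        }
        return delays.get(level - 1);
    }

    public static void main(String[] args) {
        List<Long> delays = parse(DEFAULT_LEVELS);
        System.out.println("levels: " + delays.size() + ", level 3 = " + delayMillis(delays, 3) + " ms");
    }
}
```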

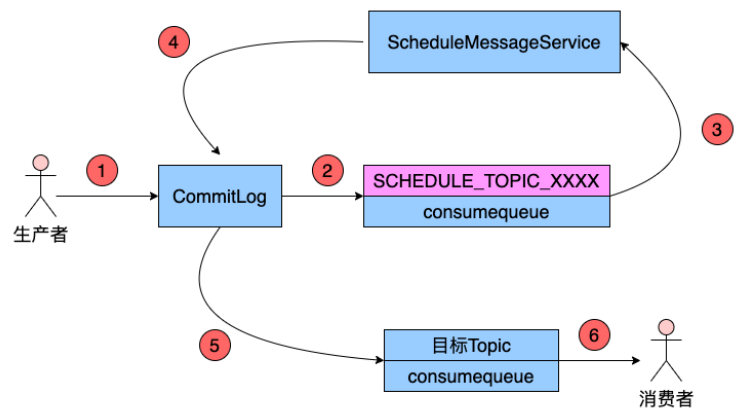

3 How delayed messages are implemented

The specific implementation scheme is:

Modifying the message

After the Producer sends a message to the Broker, the Broker first writes it to the commitlog file and then dispatches it to the corresponding consumequeue. Before dispatching, however, the Broker checks whether the message carries a delay level. If it does not, the message is dispatched normally; if it does, it goes through a more involved process:

-

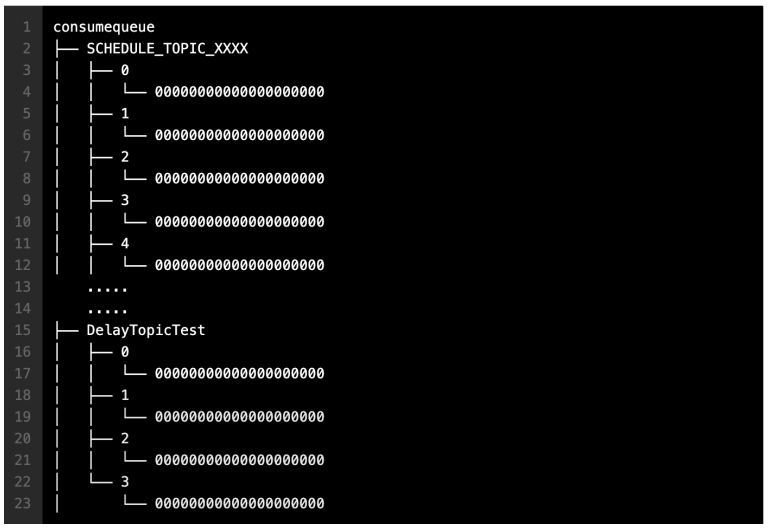

Change the message's Topic to SCHEDULE_TOPIC_XXXX (the original Topic and queueId are kept in the message properties so they can be restored later)

-

According to the delay level, create the corresponding queueId directory and consumequeue file for the SCHEDULE_TOPIC_XXXX Topic in the consumequeue directory (if they do not exist yet).

The mapping between delay level and queueId is queueId = delayLevel - 1

Note that the queueId directories are not created for all delay levels at once; a directory is created only when its delay level is first used

-

Modify the contents of the message index unit. The Message Tag HashCode field of the index unit normally stores the hash of the message Tag; here it is replaced with the message's delivery time. The delivery time is when the message will be restored to its original Topic and written to the commitlog again. Delivery time = message storage time + delay of the level, where the message storage time is the timestamp at which the message was sent to the Broker.

-

Write the message index into the corresponding consumequeue under the SCHEDULE_TOPIC_XXXX Topic

How are the messages in each delay-level Queue under SCHEDULE_TOPIC_XXXX ordered?

By delivery time. All delayed messages of the same level in a Broker are written to the same Queue under SCHEDULE_TOPIC_XXXX in the consumequeue directory, so every message in one Queue has the same delay. Their delivery times therefore depend only on their storage times; in other words, the messages are ordered by the time they were sent to the Broker.
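The bookkeeping above reduces to two formulas: queueId = delayLevel - 1, and delivery time = storage time + level delay. A sketch under those formulas (all names are hypothetical) also shows why a per-level queue stays sorted: every entry adds the same delay, so appending in storage-time order yields non-decreasing delivery times.

```java
import java.util.Arrays;

class ScheduleIndexSketch {

    // Delay level n is stored in queue n - 1 of SCHEDULE_TOPIC_XXXX.
    static int queueIdFor(int delayLevel) {
        return delayLevel - 1;
    }

    // Value written into the index unit's Tag HashCode slot.
    static long deliveryTime(long storeTimestamp, long levelDelayMillis) {
        return storeTimestamp + levelDelayMillis;
    }

    // Delivery times for messages of one level, given storage times in arrival order.
    static long[] deliveryTimes(long[] storeTimestamps, long levelDelayMillis) {
        long[] result = new long[storeTimestamps.length];
        for (int i = 0; i < storeTimestamps.length; i++) {
            result[i] = deliveryTime(storeTimestamps[i], levelDelayMillis);
        }
        return result;
    }

    static boolean isNonDecreasing(long[] values) {
        for (int i = 1; i < values.length; i++) {
            if (values[i] < values[i - 1]) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        long levelDelay = 10_000L;   // level 3 with the default levels
        long[] stored = {1_000L, 1_500L, 4_000L};
        System.out.println("queueId for level 3: " + queueIdFor(3));
        System.out.println(Arrays.toString(deliveryTimes(stored, levelDelay)));
    }
}
```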

Delivering delayed messages

Inside the Broker, the delayed-message service class ScheduleMessageService consumes the messages in SCHEDULE_TOPIC_XXXX and delivers each delayed message to its target Topic according to its delivery time. Before delivery, it reads the originally written message back from the commitlog and resets its delay level to 0, turning it into an ordinary message without delay, and then delivers it to the target Topic.

When the Broker starts, ScheduleMessageService creates and starts a Timer to run the corresponding scheduled tasks. It defines one TimerTask per delay level, and each TimerTask consumes and delivers the messages of one delay level. Each TimerTask checks whether the first message in its Queue is due. If the first message is not due, none of the later messages are either, because the messages are sorted by delivery time; if the first message is due, it is delivered to the target Topic, that is, it is consumed from the delay queue.
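Because each per-level queue is sorted by delivery time, a check only needs to look at the head. A single pass of that check can be sketched with an `ArrayDeque` and a simulated clock; in the Broker the pass is repeated by the per-level TimerTask, and the actual delivery (restoring the Topic, clearing the delay level, rewriting to the commitlog) is reduced here to recording the message ID. `Entry` and `deliverDue` are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

class DelayLevelCheck {

    // One index entry in a delay-level queue of SCHEDULE_TOPIC_XXXX.
    static final class Entry {
        final String msgId;
        final long deliveryTime;
        Entry(String msgId, long deliveryTime) { this.msgId = msgId; this.deliveryTime = deliveryTime; }
    }

    // One pass of the per-level check. The queue is sorted by delivery time,
    // so the pass stops at the first entry that is not yet due.
    static List<String> deliverDue(Deque<Entry> queue, long now) {
        List<String> delivered = new ArrayList<>();
        while (!queue.isEmpty() && queue.peekFirst().deliveryTime <= now) {
            // Stand-in for: restore the original Topic, clear the delay level,
            // and write the message to the commitlog again.
            delivered.add(queue.pollFirst().msgId);
        }
        return delivered;
    }

    public static void main(String[] args) {
        Deque<Entry> level3 = new ArrayDeque<>();
        level3.add(new Entry("m1", 11_000L));
        level3.add(new Entry("m2", 11_500L));
        level3.add(new Entry("m3", 14_000L));
        System.out.println(deliverDue(level3, 12_000L));   // m1 and m2 are due
        System.out.println(deliverDue(level3, 12_000L));   // nothing new is due
        System.out.println(deliverDue(level3, 14_000L));   // m3 is due
    }
}
```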

Rewriting the message to the commitlog

The delayed-message service class ScheduleMessageService writes the delayed message to the commitlog again, which produces a new message index entry that is dispatched to the corresponding Queue.

This is effectively an ordinary message send; the only difference is that this time the message producer is the delayed-message service class ScheduleMessageService.

4 Code example

Define the DelayProducer class

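import java.text.SimpleDateFormat;
import java.util.Date;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;

public class DelayProducer {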

public static void main(String[] args) throws Exception {

DefaultMQProducer producer = new DefaultMQProducer("pg");

producer.setNamesrvAddr("rocketmqOS:9876");

producer.start();

for (int i = 0 ; i < 10 ; i++) {

byte[] body = ("Hi," + i).getBytes();

Message msg = new Message("TopicB", "someTag", body);

// Set the delay level to 3, i.e. a delay of 10s with the default levels
msg.setDelayTimeLevel(3);

SendResult sendResult = producer.send(msg);

// Print the time the message was sent

System.out.print(new SimpleDateFormat("mm:ss").format(new Date()));

System.out.println(" ," + sendResult);

}

producer.shutdown();

}

}

Define OtherConsumer class

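import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.List;
import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
import org.apache.rocketmq.common.message.MessageExt;

public class OtherConsumer {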

public static void main(String[] args) throws MQClientException {

DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("cg");

consumer.setNamesrvAddr("rocketmqOS:9876");

consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);

consumer.subscribe("TopicB", "*");

consumer.registerMessageListener(new MessageListenerConcurrently() {

@Override

public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> msgs, ConsumeConcurrentlyContext context) {

for (MessageExt msg : msgs) {

// Print the time the message was consumed

System.out.print(new SimpleDateFormat("mm:ss").format(new Date()));

System.out.println(" ," + msg);

}

return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;

}

});

consumer.start();

System.out.println("Consumer Started");

}

}

4, Transactional messages

1 Introducing the problem

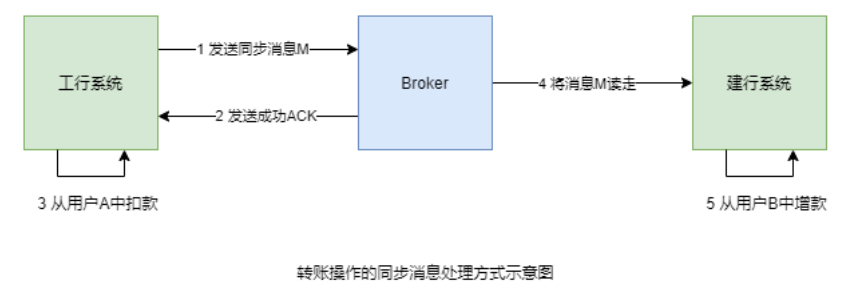

Consider this scenario: ICBC user A transfers 10,000 yuan to CCB user B.

We could handle this scenario with synchronous messages:

-

The ICBC system sends a synchronous message M to the Broker, asking to add 10,000 yuan to user B

-

After the Broker receives the message successfully, it returns a success ACK to the ICBC system

-

After receiving the success ACK, the ICBC system deducts 10,000 yuan from user A

-

The CCB system obtains message M from the Broker

-

The CCB system consumes message M, that is, adds 10,000 yuan to user B

There is a problem: suppose the deduction in step 3 fails although the message has already been sent to the Broker. For MQ, once a message is written successfully it can be consumed, so user B in the CCB system gains 10,000 yuan while user A is never charged. The data become inconsistent.

2 Solution

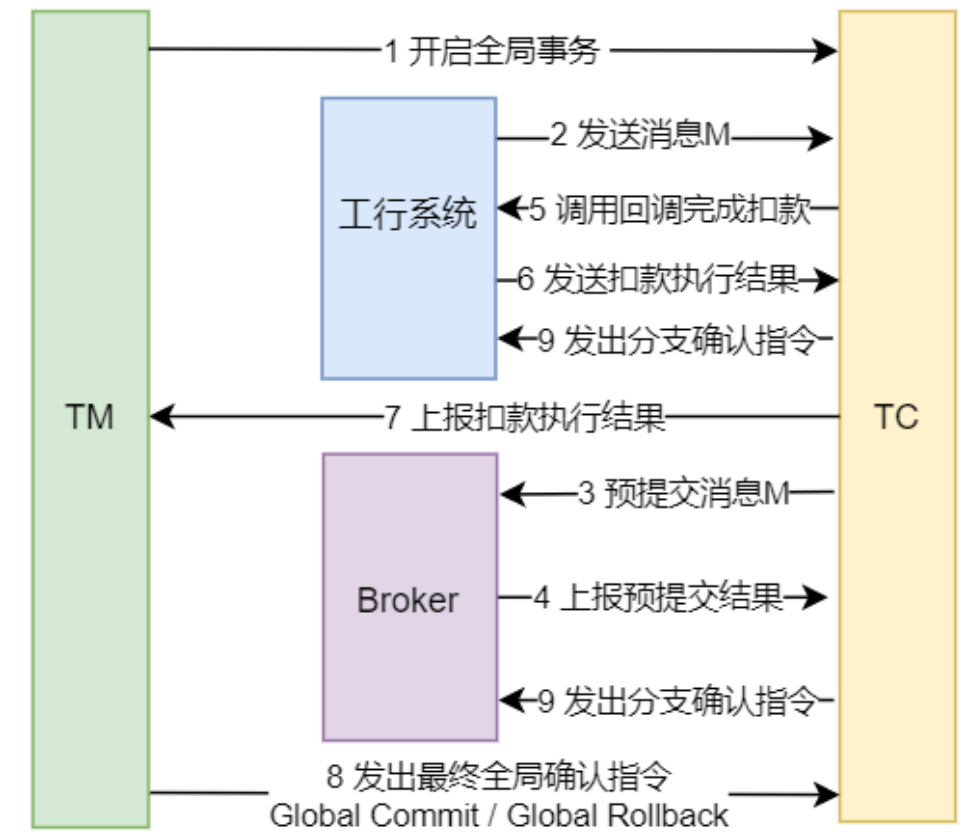

The solution is to make steps 1, 2 and 3 atomic: either all succeed or all fail. That is, once the message has been sent successfully, the deduction must succeed as well; if the deduction fails, the successfully sent message is rolled back. The approach is to use transactional messages, which provide a distributed transaction solution.

Handling this scenario with transactional messages:

-

The transaction manager TM sends the transaction coordinator TC an instruction to start a global transaction

-

The ICBC system sends TC a transactional message M that adds 10,000 yuan to user B

-

TC sends a half (semi-transactional) message, prepareHalf, to the Broker, pre-committing message M. At this point the CCB system cannot see message M in the Broker

-

The Broker reports the result of the pre-commit to TC.

-

If the pre-commit fails, TC reports the failure to TM and the global transaction ends. If the pre-commit succeeds, TC invokes the ICBC system's callback, which deducts 10,000 yuan from ICBC user A

-

The ICBC system sends the result of the deduction, that is, the execution status of its local transaction, to TC

-

After receiving the deduction result, TC reports it to TM.

The deduction result has three possible values:

// Describes the local transaction execution state
public enum LocalTransactionState {
COMMIT_MESSAGE, // The local transaction succeeded
ROLLBACK_MESSAGE, // The local transaction failed
UNKNOW, // Uncertain: a check-back is needed to determine the result of the local transaction
}

-

TM will send different confirmation instructions to TC according to the reported results

- If the deduction succeeded (local transaction status COMMIT_MESSAGE), TM sends a Global Commit instruction to TC

- If the deduction failed (local transaction status ROLLBACK_MESSAGE), TM sends a Global Rollback instruction to TC

- If the result is unknown (local transaction status UNKNOW), a check-back of the ICBC system's local transaction status is triggered. The check-back reports its result, COMMIT_MESSAGE or ROLLBACK_MESSAGE, to TC; TC reports it to TM, and TM sends the final confirmation instruction, Global Commit or Global Rollback, to TC

- After receiving the instruction, TC sends a confirmation instruction to the Broker and the ICBC system

-

If TC receives a Global Commit instruction, it sends a Branch Commit instruction to the Broker and the ICBC system. Message M in the Broker then becomes visible to the CCB system, and the deduction from ICBC user A is finally confirmed

-

If TC receives a Global Rollback instruction, it sends a Branch Rollback instruction to the Broker and the ICBC system. Message M in the Broker is then revoked, and the deduction from ICBC user A is rolled back

This scheme ensures that message delivery and the deduction succeed together as one transaction; if either fails, all operations are rolled back.
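TM's decision from the reported local transaction status can be sketched as a small function. `LocalTxState` mirrors RocketMQ's `LocalTransactionState` so the snippet compiles on its own; `GlobalDecision`, `TransactionDecision` and the check-back `Supplier` are hypothetical stand-ins for the TM/TC messages described above. As a simplifying assumption, a check-back that still answers UNKNOW leaves the transaction pending, to be checked again later.

```java
import java.util.function.Supplier;

// Local stand-in for org.apache.rocketmq.client.producer.LocalTransactionState.
enum LocalTxState { COMMIT_MESSAGE, ROLLBACK_MESSAGE, UNKNOW }

// Hypothetical names for TM's final instructions.
enum GlobalDecision { GLOBAL_COMMIT, GLOBAL_ROLLBACK, STILL_PENDING }

class TransactionDecision {

    // reported: status from the local transaction callback.
    // checkBack: queries the local transaction again; only called for UNKNOW.
    static GlobalDecision decide(LocalTxState reported, Supplier<LocalTxState> checkBack) {
        LocalTxState state = reported == LocalTxState.UNKNOW ? checkBack.get() : reported;
        switch (state) {
            case COMMIT_MESSAGE:
                return GlobalDecision.GLOBAL_COMMIT;    // half message becomes visible
            case ROLLBACK_MESSAGE:
                return GlobalDecision.GLOBAL_ROLLBACK;  // half message is revoked
            default:
                return GlobalDecision.STILL_PENDING;    // check back again later
        }
    }

    public static void main(String[] args) {
        System.out.println(decide(LocalTxState.COMMIT_MESSAGE, () -> LocalTxState.UNKNOW));
        System.out.println(decide(LocalTxState.UNKNOW, () -> LocalTxState.ROLLBACK_MESSAGE));
    }
}
```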

This scheme is not a typical XA pattern: branch transactions in XA mode are asynchronous, whereas the message pre-commit and the deduction in the transactional-message scheme are synchronous.

3 Basic concepts

Distributed transaction

In a distributed transaction, an operation generally consists of several branch operations that belong to different applications and run on different servers. A distributed transaction must ensure that these branch operations either all succeed or all fail. Like an ordinary transaction, its goal is to keep the result of the operation consistent.

Transactional message

RocketMQ provides distributed transaction support similar to X/Open XA; transactional messages allow a distributed transaction to reach eventual consistency. XA is a distributed transaction solution and processing model.

Half (semi-transactional) message

This is a message that temporarily cannot be delivered: the sender has sent it to the Broker successfully, but the Broker has not yet received the final confirmation instruction, so the message is marked "temporarily undeliverable" and consumers cannot see it. A message in this state is a half message.

Local transaction status

The result of the Producer's callback operation is the local transaction status. It is sent to TC, which passes it on to TM; TM decides the global confirmation instruction based on it.

// Describes the local transaction execution status

public enum LocalTransactionState {

COMMIT_MESSAGE, // Local transaction executed successfully

ROLLBACK_MESSAGE, // Local transaction execution failed

UNKNOW, // Uncertain indicates that a backcheck is required to determine the execution result of the local transaction

}

Message check-back

A message check-back queries the execution status of a local transaction again. In this example, it means going back to the database to check whether the deduction succeeded.

Note that a message check-back is not the callback. The callback performs the deduction, whereas the check-back only looks up the result of the deduction.

There are two common reasons for a message check-back:

1) The callback returned UNKNOW

2) TC did not receive the final global confirmation instruction from TM

Message check-back settings in RocketMQ

There are three common check-back settings. All of them are set in the configuration file loaded by the Broker, which reads the two time values in milliseconds. For example:

- transactionTimeOut=20000: if TM has not sent the final confirmation to TC within 20 seconds, a message check-back is triggered. The default is 6000 ms (6 seconds).

- transactionCheckMax=5: a message is checked back at most 5 times; after that it is discarded and an error log is written. The default is 15.

- transactionCheckInterval=10000: successive check-backs are 10 seconds apart. The default is 60000 ms (60 seconds).
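How the answers from repeated check-backs interact with transactionCheckMax can be simulated. The sketch assumes a simplified model in which each check-back asks the producer once and an UNKNOW answer just waits for the next interval (the timing values themselves are left out); it discards the half message once the limit is reached. All names are hypothetical.

```java
import java.util.function.IntFunction;

class CheckBackSimulation {

    enum Answer { COMMIT_MESSAGE, ROLLBACK_MESSAGE, UNKNOW }

    enum Outcome { COMMITTED, ROLLED_BACK, DISCARDED }

    static final class Result {
        final Outcome outcome;
        final int checks;
        Result(Outcome outcome, int checks) { this.outcome = outcome; this.checks = checks; }
    }

    // answerForCheck.apply(n) is what checkLocalTransaction returns on the nth check (1-based).
    static Result run(IntFunction<Answer> answerForCheck, int transactionCheckMax) {
        for (int n = 1; n <= transactionCheckMax; n++) {
            Answer answer = answerForCheck.apply(n);
            if (answer == Answer.COMMIT_MESSAGE) {
                return new Result(Outcome.COMMITTED, n);
            }
            if (answer == Answer.ROLLBACK_MESSAGE) {
                return new Result(Outcome.ROLLED_BACK, n);
            }
            // UNKNOW: wait transactionCheckInterval, then check again
        }
        // Limit reached: the half message is discarded and an error is logged.
        return new Result(Outcome.DISCARDED, transactionCheckMax);
    }

    public static void main(String[] args) {
        Result r = run(n -> n < 3 ? Answer.UNKNOW : Answer.COMMIT_MESSAGE, 5);
        System.out.println(r.outcome + " after " + r.checks + " checks");
    }
}
```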

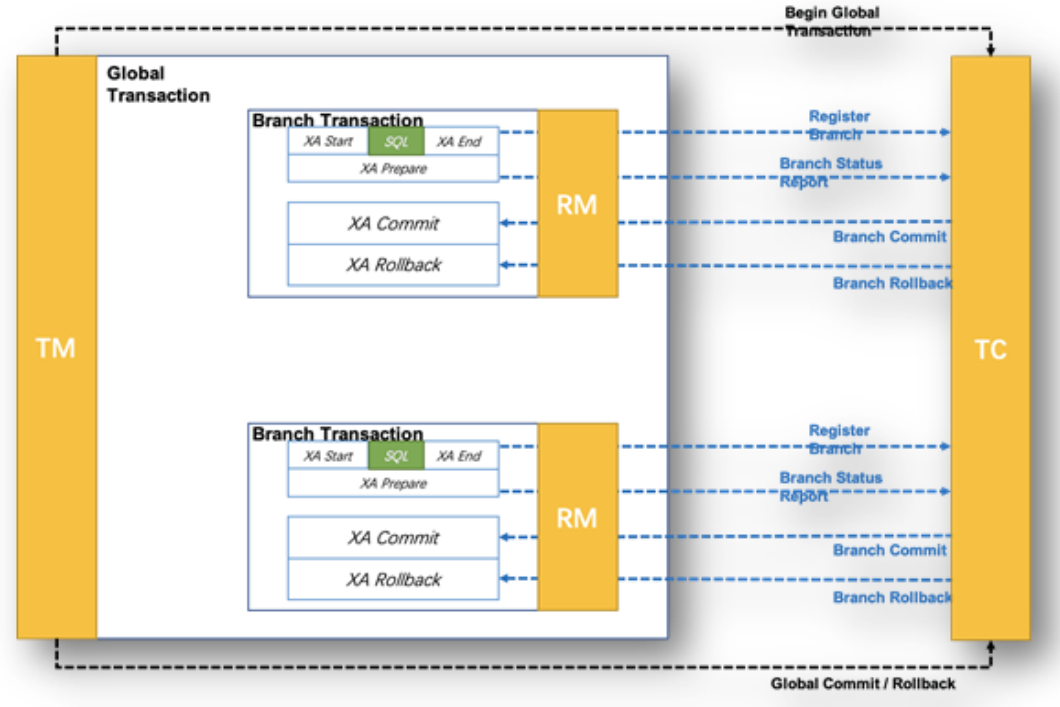

4 The three XA roles

XA protocol

XA (eXtended Architecture) is a distributed transaction solution and processing model based on the XA protocol. The XA protocol originated with Tuxedo (Transactions for Unix, Extended for Distributed Operations) and was handed over to the X/Open organization as the interface standard between resource managers and transaction managers.

There are three important components in XA mode: TC, TM and RM.

TC

Transaction Coordinator. Maintains the status of global and branch transactions and drives the global commit or rollback.

In RocketMQ, the Broker acts as TC.

TM

Transaction Manager. Defines the scope of a global transaction: it starts a global transaction and commits or rolls it back. It is effectively the initiator of the global transaction.

The Producer of transaction messages in RocketMQ acts as TM.

RM

Resource Manager. Manages the resources of branch transactions, talks to TC to register branch transactions and report their status, and drives the branch commit or rollback.

In RocketMQ, both the Producer and the Broker of transactional messages act as RMs.

5 XA mode architecture

XA mode is a typical two-phase commit (2PC). It works as follows:

-

TM sends an instruction to TC to start a global transaction.

-

Based on business needs, each RM registers its branch transaction with TC, and TC then sends a pre-execution instruction to each RM.

-

After receiving the instruction, each RM pre-executes its local transaction.

-

Each RM reports its pre-execution result, success or failure, to TC.

-

After receiving the reports from all RMs, TC reports the combined result to TM, and TM sends a confirmation instruction to TC based on it.

- If all results are successful responses, send a Global Commit instruction to TC.

- As long as there is a failure response, send a Global Rollback command to TC.

-

After receiving the instruction, TC sends a confirmation instruction to RM again.
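The two phases can be sketched with a hypothetical `ResourceManager` interface. For brevity the sketch collapses TC and TM into one `run` function: phase 1 collects every RM's vote, and phase 2 commits all RMs only if every vote succeeded, otherwise rolls all of them back. `RecordingRm` is a test double that records the instruction it received; none of these names come from RocketMQ.

```java
import java.util.Arrays;
import java.util.List;

class TwoPhaseCommitSketch {

    // Hypothetical participant interface for this sketch.
    interface ResourceManager {
        boolean prepare();   // phase 1: pre-execute the local transaction and vote
        void commit();       // phase 2 on Global Commit
        void rollback();     // phase 2 on Global Rollback
    }

    // Test double that records what the coordinator told it to do.
    static final class RecordingRm implements ResourceManager {
        private final boolean vote;
        String state = "registered";
        RecordingRm(boolean vote) { this.vote = vote; }
        public boolean prepare() { state = "prepared"; return vote; }
        public void commit() { state = "committed"; }
        public void rollback() { state = "rolled back"; }
    }

    // Returns true if the global transaction committed.
    static boolean run(List<? extends ResourceManager> rms) {
        boolean allSucceeded = true;
        for (ResourceManager rm : rms) {
            allSucceeded &= rm.prepare();   // non-short-circuit: every RM pre-executes and votes
        }
        for (ResourceManager rm : rms) {
            if (allSucceeded) {
                rm.commit();
            } else {
                rm.rollback();
            }
        }
        return allSucceeded;
    }

    public static void main(String[] args) {
        List<RecordingRm> rms = Arrays.asList(new RecordingRm(true), new RecordingRm(false));
        System.out.println("committed: " + run(rms));
        for (RecordingRm rm : rms) {
            System.out.println(rm.state);
        }
    }
}
```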

The transactional-message scheme is not a typical XA pattern: branch transactions in XA mode are asynchronous, whereas the message pre-commit and the deduction in the transactional-message scheme are synchronous.

6 Notes

-

Transactional messages do not support delayed messages

-

Make the consumption of transactional messages idempotent, because a transactional message may be consumed more than once (for example, when it is committed after a rollback)
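A minimal sketch of idempotent consumption for the transfer example, assuming each transfer message carries a unique business ID (the `transferId` here is hypothetical). A repeated ID is ignored, so a second delivery does not credit user B twice. The in-memory set is a simplification: a real system must store the processed-ID record durably and atomically with the balance update, for example as a unique key written in the same database transaction.

```java
import java.util.HashSet;
import java.util.Set;

class IdempotentTransferHandler {

    // Simplification: in-memory record of applied transfers.
    private final Set<String> processedTransferIds = new HashSet<>();
    private long balance;   // hypothetical balance of CCB user B

    // Returns true if the transfer was applied, false if it was a duplicate delivery.
    synchronized boolean handle(String transferId, long amount) {
        // Set.add returns false when the ID is already present.
        if (!processedTransferIds.add(transferId)) {
            return false;
        }
        balance += amount;
        return true;
    }

    synchronized long balance() {
        return balance;
    }

    public static void main(String[] args) {
        IdempotentTransferHandler handler = new IdempotentTransferHandler();
        handler.handle("transfer-1", 10_000L);
        handler.handle("transfer-1", 10_000L);   // redelivered: ignored
        System.out.println(handler.balance());   // prints 10000
    }
}
```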

7 Code example

Define the ICBC transaction listener

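// StringUtils comes from Apache Commons Lang 3; add commons-lang3 if it is not already on the classpath
import org.apache.commons.lang3.StringUtils;
import org.apache.rocketmq.client.producer.LocalTransactionState;
import org.apache.rocketmq.client.producer.TransactionListener;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.common.message.MessageExt;

public class ICBCTransactionListener implements TransactionListener {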

// Callback method: triggered after the half message is successfully pre-committed;
// it executes the local transaction (here, the deduction from user A)

@Override

public LocalTransactionState executeLocalTransaction(Message msg,

Object arg) {

System.out.println("Pre delivery message succeeded:" + msg);

// Assume a TAGA message means the deduction succeeded, TAGB means it failed,
// and TAGC means the result is unclear, so a message check-back is needed

if (StringUtils.equals("TAGA", msg.getTags())) {

return LocalTransactionState.COMMIT_MESSAGE;

} else if (StringUtils.equals("TAGB", msg.getTags())) {

return LocalTransactionState.ROLLBACK_MESSAGE;

} else if (StringUtils.equals("TAGC", msg.getTags())) {

return LocalTransactionState.UNKNOW;

}

return LocalTransactionState.UNKNOW;

}

// Check-back method
// Two common reasons trigger a message check-back:
// 1) The callback method returned UNKNOW
// 2) TC did not receive the final global confirmation instruction from TM

@Override

public LocalTransactionState checkLocalTransaction(MessageExt msg) {

System.out.println("Execute message check-back: " + msg.getTags());

return LocalTransactionState.COMMIT_MESSAGE;

}

}

Define a message producer

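import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.client.producer.TransactionMQProducer;
import org.apache.rocketmq.common.message.Message;

public class TransactionProducer {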

public static void main(String[] args) throws Exception {

TransactionMQProducer producer = new TransactionMQProducer("tpg");

producer.setNamesrvAddr("rocketmqOS:9876");

/**

* Define a thread pool

* @param corePoolSize Number of core threads in the thread pool

* @param maximumPoolSize Maximum number of threads in the thread pool

* @param keepAliveTime When the pool has more threads than the core size, how long surplus idle threads are kept alive

* @param unit Time unit

* @param workQueue Queue that holds tasks waiting to run; the constructor argument (2000) is its capacity

* @param threadFactory Thread factory

*/

ExecutorService executorService = new ThreadPoolExecutor( 2 , 5 , 100 , TimeUnit.SECONDS, new ArrayBlockingQueue<Runnable>( 2000 ), new ThreadFactory() {

@Override

public Thread newThread(Runnable r) {

Thread thread = new Thread(r);

thread.setName("client-transaction-msg-check-thread");

return thread;

}

});

// Thread pool the producer uses to handle the Broker's check-back requests

producer.setExecutorService(executorService);

// Add a transaction listener for the producer

producer.setTransactionListener(new ICBCTransactionListener());

producer.start();

String[] tags = {"TAGA","TAGB","TAGC"};

for (int i = 0 ; i < 3 ; i++) {

byte[] body = ("Hi," + i).getBytes();

Message msg = new Message("TTopic", tags[i], body);

// Send transaction message

// The second parameter is used to specify the business parameters to be used when executing local transactions

SendResult sendResult = producer.sendMessageInTransaction(msg,null);

System.out.println("The sending result is:" + sendResult.getSendStatus());

}

}

}

Define the consumer

You can reuse the SomeConsumer class from the ordinary message example as the consumer, subscribed to TTopic:

```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
import org.apache.rocketmq.common.message.MessageExt;

public class SomeConsumer {

    public static void main(String[] args) throws MQClientException {
        // Define a pull consumer
        // DefaultLitePullConsumer consumer = new DefaultLitePullConsumer("cg");
        // Define a push consumer
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("cg");
        // Specify the nameServer address
        consumer.setNamesrvAddr("rocketmqOS:9876");
        // Start consuming from the first message
        consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);
        // Subscribe to the topic; "*" means all tags
        consumer.subscribe("TTopic", "*");
        // Switch to "broadcasting mode"; the default is "clustering mode"
        // consumer.setMessageModel(MessageModel.BROADCASTING);

        // Register the message listener
        consumer.registerMessageListener(new MessageListenerConcurrently() {
            // Called whenever the Broker has messages for this subscription;
            // the return value reports the consumption status
            @Override
            public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> msgs,
                                                            ConsumeConcurrentlyContext context) {
                // Consume the messages one by one
                for (MessageExt msg : msgs) {
                    System.out.println(msg);
                }
                // Report successful consumption
                return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
            }
        });

        // Start the consumer
        consumer.start();
        System.out.println("Consumer Started");
    }
}
```
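To make the expected result of this example concrete, the sketch below replays the listener's decisions for the three tags without a Broker. It is a stdlib-only model, not RocketMQ code: `TxState` is a hypothetical local stand-in for `LocalTransactionState`, and the sketch assumes the Broker delivers committed half messages, drops rolled-back ones, and resolves UNKNOW with a check-back (which can happen here because TransactionProducer is never shut down). Under those assumptions SomeConsumer should print only the TAGA and TAGC messages.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical local stand-in for RocketMQ's LocalTransactionState (same constant names)
enum TxState { COMMIT_MESSAGE, ROLLBACK_MESSAGE, UNKNOW }

class TxOutcomeSketch {

    // Mirrors executeLocalTransaction(): the outcome depends on the tag
    static TxState execute(String tag) {
        switch (tag) {
            case "TAGA": return TxState.COMMIT_MESSAGE;
            case "TAGB": return TxState.ROLLBACK_MESSAGE;
            default:     return TxState.UNKNOW; // TAGC and anything else
        }
    }

    // Mirrors checkLocalTransaction(): the example commits on every check-back
    static TxState checkBack(String tag) {
        return TxState.COMMIT_MESSAGE;
    }

    // Tags whose half messages end up committed and visible to consumers,
    // assuming an UNKNOW result is resolved by one check-back
    static List<String> visibleTags(String[] tags) {
        List<String> visible = new ArrayList<>();
        for (String tag : tags) {
            TxState state = execute(tag);
            if (state == TxState.UNKNOW) {
                state = checkBack(tag);
            }
            if (state == TxState.COMMIT_MESSAGE) {
                visible.add(tag);
            }
        }
        return visible;
    }

    public static void main(String[] args) {
        System.out.println(visibleTags(new String[] {"TAGA", "TAGB", "TAGC"})); // [TAGA, TAGC]
    }
}
```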

5, Batch message

1 send messages in batches

Send limit

A producer can send multiple messages in a single call, which can greatly improve its sending efficiency. However, note the following:

- Messages sent in one batch must have the same Topic

- Messages sent in one batch must have the same flush-to-disk strategy

- Messages sent in one batch cannot be delayed messages or transaction messages

Bulk send size

By default, the total size of the messages sent in one batch cannot exceed 4 MB. To send more than that, there are two options:

- Scheme 1: split the batch into several message sets of at most 4 MB each and send them as separate batches

- Scheme 2: modify the relevant property on both the Producer side and the Broker side

  - Producer side: set the Producer's maxMessageSize property before sending

  - Broker side: modify the maxMessageSize property in the configuration file the Broker loads

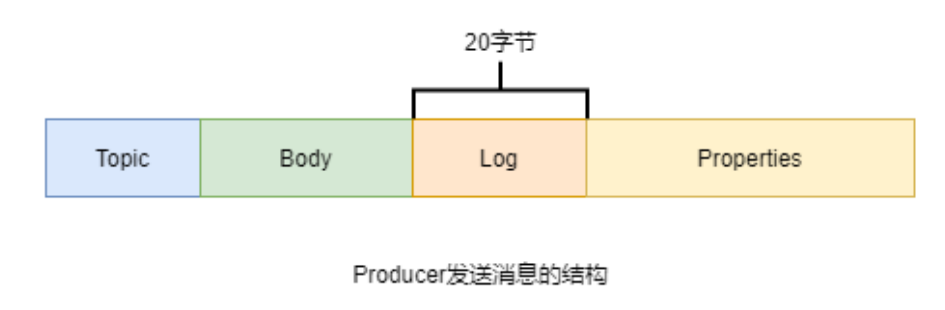

Message size sent by producer

A Message passed to the producer's send() method is not serialized and sent over the network as is; what is actually sent is a string generated from the Message. This string has four parts: the Topic, the message Body, the message log (20 bytes), and a set of key-value properties describing the message, such as the producer address, the production time and the QueueId to send to. The data finally written into the message unit on the Broker comes from these properties.

2 batch message consumption

Modify batch properties

The first parameter of the consumeMessage() method of the Consumer's MessageListenerConcurrently interface is a list of messages, but by default the list holds only one message per call. To consume several messages per call, set the Consumer's consumeMessageBatchMaxSize property. Its effective value should not exceed 32, however, because by default a Consumer pulls at most 32 messages at a time. To change that maximum, set the Consumer's pullBatchSize property.

Existing problems

Should pullBatchSize and consumeMessageBatchMaxSize then be set as large as possible? Of course not.

- The larger pullBatchSize is, the longer each pull takes and the more likely a network transmission problem becomes. If a problem occurs during a pull, the whole batch must be pulled again.

- The larger consumeMessageBatchMaxSize is, the lower the Consumer's concurrency, and all messages in a batch share the same consumption result. A batch of up to consumeMessageBatchMaxSize messages is processed by a single thread, so if any one message in it fails, the whole batch must be consumed again.
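The following sketch models the second point without RocketMQ: a pulled list is cut into consume batches of at most consumeMessageBatchMaxSize, and because each batch reports a single status, one failing message sends its whole batch back. The class and method names are hypothetical, and the `fails` predicate stands in for real business logic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

class BatchConsumeSketch {

    // Cuts one pulled list into consume batches of at most maxBatchSize messages,
    // mimicking how consumeMessageBatchMaxSize bounds each consumeMessage() call
    static <T> List<List<T>> toBatches(List<T> pulled, int maxBatchSize) {
        List<List<T>> batches = new ArrayList<>();
        for (int i = 0; i < pulled.size(); i += maxBatchSize) {
            batches.add(pulled.subList(i, Math.min(i + maxBatchSize, pulled.size())));
        }
        return batches;
    }

    // Messages that would be redelivered: a batch shares one status,
    // so a single failing message sends its whole batch back for retry
    static <T> List<T> redelivered(List<T> pulled, int maxBatchSize, Predicate<T> fails) {
        List<T> retry = new ArrayList<>();
        for (List<T> batch : toBatches(pulled, maxBatchSize)) {
            if (batch.stream().anyMatch(fails)) {
                retry.addAll(batch);
            }
        }
        return retry;
    }

    public static void main(String[] args) {
        List<Integer> pulled = new ArrayList<>();
        for (int i = 0; i < 32; i++) {
            pulled.add(i);
        }
        // Only message 5 fails, yet its whole batch of 10 is redelivered
        System.out.println(redelivered(pulled, 10, m -> m == 5)); // [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
    }
}
```

With a batch size of 1, the same failure would cause only message 5 to be redelivered, which is the trade-off the bullet above describes.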

3 code example

Requirement for this example: keep the default 4 MB maximum unchanged, and split the batch so that no single send exceeds 4 MB.

Define message list splitter

```java
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.NoSuchElementException;

import org.apache.rocketmq.common.message.Message;

// Message list splitter. It only handles the case where no single message exceeds 4 MB:
// a message larger than 4 MB cannot be split further, so it is returned on its own
// as a one-element sub-list, which the producer is then expected to reject as too large
public class MessageListSplitter implements Iterator<List<Message>> {

    // Size limit of one batch: 4 MB
    private static final int SIZE_LIMIT = 4 * 1024 * 1024;
    // All messages to be sent
    private final List<Message> messages;
    // Start index of the next small batch to be sent
    private int currIndex;

    public MessageListSplitter(List<Message> messages) {
        this.messages = messages;
    }

    @Override
    public boolean hasNext() {
        // There is more to send while the start index is inside the list
        return currIndex < messages.size();
    }

    @Override
    public List<Message> next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        int nextIndex = currIndex;
        // Total size of the small batch being assembled
        int totalSize = 0;
        for (; nextIndex < messages.size(); nextIndex++) {
            Message message = messages.get(nextIndex);
            // Estimate this message's size: topic + body + properties + 20-byte log overhead
            int tmpSize = message.getTopic().length() + message.getBody().length;
            Map<String, String> properties = message.getProperties();
            for (Map.Entry<String, String> entry : properties.entrySet()) {
                tmpSize += entry.getKey().length() + entry.getValue().length();
            }
            tmpSize = tmpSize + 20;

            // A single message larger than 4 MB
            if (tmpSize > SIZE_LIMIT) {
                // If it is the first message of this batch, return it alone;
                // otherwise end the batch before it and leave it for the next call
                if (nextIndex - currIndex == 0) {
                    nextIndex++;
                }
                break;
            }
            // Adding this message would exceed the limit: end the batch before it
            if (tmpSize + totalSize > SIZE_LIMIT) {
                break;
            } else {
                totalSize += tmpSize;
            }
        }
        // Sub-list [currIndex, nextIndex) of the messages
        List<Message> subList = messages.subList(currIndex, nextIndex);
        // Start index of the next batch
        currIndex = nextIndex;
        return subList;
    }
}
```

Define the batch message producer

```java
import java.util.ArrayList;
import java.util.List;

import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.common.message.Message;

public class BatchProducer {

    public static void main(String[] args) throws Exception {
        DefaultMQProducer producer = new DefaultMQProducer("pg");
        producer.setNamesrvAddr("rocketmqOS:9876");
        // Maximum size of a message to be sent; the default is 4 MB.
        // Changing only this property is not enough: the maxMessageSize property
        // in the configuration file loaded by the Broker must be changed as well
        // producer.setMaxMessageSize(8 * 1024 * 1024);
        producer.start();

        // The messages to send
        List<Message> messages = new ArrayList<>();
        for (int i = 0; i < 100; i++) {
            byte[] body = ("Hi," + i).getBytes();
            Message msg = new Message("someTopic", "someTag", body);
            messages.add(msg);
        }

        // Split the list into sub-lists of at most 4 MB each and send them one batch at a time
        MessageListSplitter splitter = new MessageListSplitter(messages);
        while (splitter.hasNext()) {
            try {
                List<Message> listItem = splitter.next();
                producer.send(listItem);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
        producer.shutdown();
    }
}
```

Define the batch message consumer

```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
import org.apache.rocketmq.common.message.MessageExt;

public class BatchConsumer {

    public static void main(String[] args) throws MQClientException {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("cg");
        consumer.setNamesrvAddr("rocketmqOS:9876");
        consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);
        // Must be the topic BatchProducer sends to
        consumer.subscribe("someTopic", "*");
        // Consume up to 10 messages per call; the default is 1
        consumer.setConsumeMessageBatchMaxSize(10);
        // Pull up to 40 messages from the Broker at a time; the default is 32
        consumer.setPullBatchSize(40);

        consumer.registerMessageListener(new MessageListenerConcurrently() {
            @Override
            public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> msgs,
                                                            ConsumeConcurrentlyContext context) {
                for (MessageExt msg : msgs) {
                    System.out.println(msg);
                }
                // Result for successful consumption
                return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
                // Result for failed consumption (the whole batch is retried)
                // return ConsumeConcurrentlyStatus.RECONSUME_LATER;
            }
        });

        consumer.start();
        System.out.println("Consumer Started");
    }
}
```

6, Message filtering

When subscribing, a consumer can not only specify the Topic to subscribe to, but also filter the messages in that Topic by specified conditions; that is, it can subscribe at a finer granularity than the Topic.

There are two filtering methods for the specified Topic message: Tag filtering and SQL filtering.

1 Tag filtering

Specify the Tags to subscribe to through the consumer's subscribe() method. To subscribe to messages with several Tags, join the Tags with the OR operator (a double vertical bar, ||).

```java
DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("CID_EXAMPLE");
consumer.subscribe("TOPIC", "TAGA || TAGB || TAGC");
```

2 SQL filtering

SQL filtering selects messages by evaluating an expression against the user properties embedded in them, which makes complex filtering possible. However, only consumers in PUSH mode can use SQL filtering.

Multiple constant types and operators are supported in SQL filter expressions.

Supported constant types:

- Numeric: for example 123, 3.1415

- Character: must be enclosed in single quotes, for example 'abc'

- Boolean: TRUE or FALSE

- NULL: special constant, indicating NULL

Supported operators:

- Numeric comparison: >, >=, <, <=, BETWEEN, =

- Character comparison: =, <>, IN

- Logical operations: AND, OR, NOT

- NULL test: IS NULL or IS NOT NULL

By default, the Broker does not enable SQL filtering. To enable it, add the following property to the configuration file loaded by the Broker:

```properties
enablePropertyFilter = true
```

The modified configuration file must be passed to the Broker at startup. For example, for a single Broker whose modified configuration file is conf/broker.conf, start it with:

```shell
sh bin/mqbroker -n localhost:9876 -c conf/broker.conf &
```

3 code example

Define Tag filter Producer

```java
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;

public class FilterByTagProducer {

    public static void main(String[] args) throws Exception {
        DefaultMQProducer producer = new DefaultMQProducer("pg");
        producer.setNamesrvAddr("rocketmqOS:9876");
        producer.start();

        String[] tags = {"myTagA", "myTagB", "myTagC"};
        for (int i = 0; i < 10; i++) {
            byte[] body = ("Hi," + i).getBytes();
            // Rotate through the three tags
            String tag = tags[i % tags.length];
            Message msg = new Message("myTopic", tag, body);
            SendResult sendResult = producer.send(msg);
            System.out.println(sendResult);
        }
        producer.shutdown();
    }
}
```

Define Tag filter Consumer

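```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
import org.apache.rocketmq.common.message.MessageExt;

public class FilterByTagConsumer {

    public static void main(String[] args) throws Exception {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("pg");
        consumer.setNamesrvAddr("rocketmqOS:9876");
        consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);
        // Receive only messages tagged myTagA or myTagB
        consumer.subscribe("myTopic", "myTagA || myTagB");
        consumer.registerMessageListener(new MessageListenerConcurrently() {
            @Override
            public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> msgs,
                                                            ConsumeConcurrentlyContext context) {
                for (MessageExt me : msgs) {
                    System.out.println(me);
                }
                return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
            }
        });
        consumer.start();
        System.out.println("Consumer Started");
    }
}
```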
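Conceptually, a Tag subscription is a set-membership test. The sketch below parses an expression such as `myTagA || myTagB` and replays FilterByTagProducer's tag rotation to show which of the ten messages FilterByTagConsumer should receive. It is only an illustration with hypothetical helper names; RocketMQ's real implementation differs in detail (it is understood to compare tag hash codes on the Broker and re-check the tag string on the client), whereas this sketch compares strings once.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class TagFilterSketch {

    // Parses "myTagA || myTagB" into {"myTagA", "myTagB"}.
    // "*" or an empty expression yields an empty set, meaning "all tags" here
    static Set<String> parse(String expression) {
        Set<String> tags = new HashSet<>();
        if (expression == null || expression.trim().isEmpty() || expression.trim().equals("*")) {
            return tags;
        }
        for (String part : expression.split("\\|\\|")) {
            String tag = part.trim();
            if (!tag.isEmpty()) {
                tags.add(tag);
            }
        }
        return tags;
    }

    // A message matches if the subscription covers all tags or lists its tag
    static boolean matches(Set<String> subscribed, String tag) {
        return subscribed.isEmpty() || subscribed.contains(tag);
    }

    // Indexes i in [0, count) whose tag tags[i % tags.length] matches the expression,
    // i.e. the same tag rotation as FilterByTagProducer
    static List<Integer> received(String expression, String[] tags, int count) {
        Set<String> subscribed = parse(expression);
        List<Integer> result = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            if (matches(subscribed, tags[i % tags.length])) {
                result.add(i);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        String[] tags = {"myTagA", "myTagB", "myTagC"};
        System.out.println(received("myTagA || myTagB", tags, 10)); // [0, 1, 3, 4, 6, 7, 9]
    }
}
```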

Define SQL filter Producer

```java
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;

public class FilterBySQLProducer {

    public static void main(String[] args) throws Exception {
        DefaultMQProducer producer = new DefaultMQProducer("pg");
        producer.setNamesrvAddr("rocketmqOS:9876");
        producer.start();

        for (int i = 0; i < 10; i++) {
            try {
                byte[] body = ("Hi," + i).getBytes();
                Message msg = new Message("myTopic", "myTag", body);
                // User property that the consumer's SQL expression filters on
                msg.putUserProperty("age", i + "");
                SendResult sendResult = producer.send(msg);
                System.out.println(sendResult);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
        producer.shutdown();
    }
}
```

Define SQL filter Consumer

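```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.MessageSelector;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
import org.apache.rocketmq.common.message.MessageExt;

public class FilterBySQLConsumer {

    public static void main(String[] args) throws Exception {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("pg");
        consumer.setNamesrvAddr("rocketmqOS:9876");
        consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);
        // Receive only messages whose "age" property is between 0 and 6.
        // Requires enablePropertyFilter = true in the Broker configuration
        consumer.subscribe("myTopic", MessageSelector.bySql("age between 0 and 6"));
        consumer.registerMessageListener(new MessageListenerConcurrently() {
            @Override
            public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> msgs,
                                                            ConsumeConcurrentlyContext context) {
                for (MessageExt me : msgs) {
                    System.out.println(me);
                }
                return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
            }
        });
        consumer.start();
        System.out.println("Consumer Started");
    }
}
```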
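As a sanity check on which messages the SQL consumer should receive, the sketch below evaluates `age between 0 and 6` by hand against the ten messages FilterBySQLProducer sends. It is not RocketMQ's SQL92 engine: it handles only this one BETWEEN form, the names are hypothetical, and it assumes (as the example relies on) that the numeric-looking string property is compared as a number. A message without the property, for instance one sent to the same topic by FilterByTagProducer, is treated as NULL and does not match.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class SqlBetweenSketch {

    // Evaluates "prop BETWEEN low AND high" (inclusive at both ends) against user properties.
    // A missing or non-numeric property is treated like NULL and does not match
    static boolean between(Map<String, String> props, String prop, long low, long high) {
        String raw = props.get(prop);
        if (raw == null) {
            return false;
        }
        try {
            long value = Long.parseLong(raw.trim());
            return value >= low && value <= high;
        } catch (NumberFormatException e) {
            return false;
        }
    }

    // Replays FilterBySQLProducer: message i carries the user property age = i
    static List<Integer> received(int count, long low, long high) {
        List<Integer> result = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            Map<String, String> props = new HashMap<>();
            props.put("age", i + "");
            if (between(props, "age", low, high)) {
                result.add(i);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(received(10, 0, 6)); // [0, 1, 2, 3, 4, 5, 6]
    }
}
```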

7, Message sending retry mechanism

1 Description

The Producer's mechanism for resending messages that failed to send is called the message send retry mechanism, also known as the message redelivery mechanism.

Note the following points about message redelivery:

- Synchronous and asynchronous sends are retried when they fail; a failed oneway send is not retried

- Only ordinary messages have a send retry mechanism; sequential messages do not

- Redelivery makes sure, as far as possible, that messages are sent successfully and not lost, but it may produce duplicate messages. Message duplication cannot be completely avoided in RocketMQ

- Duplicates do not occur under normal conditions, but with a large message volume and network jitter they become likely

- Active retransmission by the Producer and changes in Consumer load also lead to duplicates (a rebalance does not create duplicate messages, but it can cause a message to be consumed more than once)

- Duplicate messages cannot be avoided, but duplicate consumption should be

- To avoid duplicate consumption, give each message a unique identifier (such as a message key) so that the consumer can check whether it has already consumed the message

- There are three send retry strategies: the synchronous send failure strategy, the asynchronous send failure strategy and the message flush failure strategy
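The unique-identifier approach from the list above can be sketched as an idempotent wrapper around the business logic. This is a minimal in-memory illustration under stated assumptions: the key would come from something like the message key set with `Message#setKeys`, `IdempotentHandler` is a hypothetical name, and a production system would keep processed keys in durable storage (for example a database unique index) rather than in a `Set` that is lost on restart.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

// Hypothetical wrapper that makes business logic idempotent per message key
class IdempotentHandler {

    // Keys already consumed; in memory only, so lost on restart
    private final Set<String> processedKeys = ConcurrentHashMap.newKeySet();
    private final Consumer<String> business;

    IdempotentHandler(Consumer<String> business) {
        this.business = business;
    }

    // Runs the business logic only the first time a key is seen.
    // Returns true if the message was processed, false if it was a duplicate
    // (a duplicate should still be acknowledged as successfully consumed)
    boolean handle(String key, String body) {
        if (!processedKeys.add(key)) {
            return false;
        }
        try {
            business.accept(body);
            return true;
        } catch (RuntimeException e) {
            // Forget the key so that a later retry can process the message again
            processedKeys.remove(key);
            throw e;
        }
    }

    public static void main(String[] args) {
        IdempotentHandler handler =
                new IdempotentHandler(body -> System.out.println("processing " + body));
        handler.handle("order-1001", "deduct 10");
        handler.handle("order-1001", "deduct 10"); // redelivered duplicate: skipped
    }
}
```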

2 synchronous sending failure policy

For ordinary messages, the Queue to send to is selected round-robin by default. If a send fails, it is retried twice by default. When retrying, the Broker that failed last time is avoided and another Broker is selected. If there is only one Broker, the message can only go to that Broker, but another queue on it will be tried.

```java
// Create a producer. The parameter is the Producer Group name
DefaultMQProducer producer = new DefaultMQProducer("pg");
// Specify the nameServer address
producer.setNamesrvAddr("rocketmqOS:9876");
// Number of retries when a synchronous send fails; the default is 2
producer.setRetryTimesWhenSendFailed(3);
// Send timeout of 5 s; the default is 3 s
producer.setSendMsgTimeout(5000);
```
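The selection rule described above (round-robin, avoiding the Broker that failed on the previous attempt, and falling back to another queue on the same Broker when it is the only one) can be sketched as follows. `QueueSelectorSketch` and its `Queue` type are hypothetical stand-ins rather than RocketMQ classes, and the cursor is not thread-safe.

```java
import java.util.Arrays;
import java.util.List;

class QueueSelectorSketch {

    // Hypothetical stand-in for a message queue: the Broker it lives on and its queue id
    static final class Queue {
        final String broker;
        final int queueId;

        Queue(String broker, int queueId) {
            this.broker = broker;
            this.queueId = queueId;
        }

        @Override
        public String toString() {
            return broker + "#" + queueId;
        }
    }

    // Round-robin cursor shared by all sends
    private int index;

    // Picks the next queue in round-robin order, skipping queues on lastFailedBroker.
    // If every queue is on that Broker (single-Broker cluster), it falls back to the
    // next queue in rotation on that same Broker
    Queue select(List<Queue> queues, String lastFailedBroker) {
        for (int i = 0; i < queues.size(); i++) {
            Queue q = queues.get(Math.floorMod(index++, queues.size()));
            if (lastFailedBroker == null || !q.broker.equals(lastFailedBroker)) {
                return q;
            }
        }
        return queues.get(Math.floorMod(index++, queues.size()));
    }

    public static void main(String[] args) {
        List<Queue> queues = Arrays.asList(new Queue("broker-a", 0), new Queue("broker-a", 1),
                new Queue("broker-b", 0), new Queue("broker-b", 1));
        QueueSelectorSketch selector = new QueueSelectorSketch();
        Queue first = selector.select(queues, null);         // broker-a#0
        Queue retry = selector.select(queues, first.broker); // skips broker-a#1, picks broker-b#0
        System.out.println(first + " -> " + retry);
    }
}
```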

RocketMQ also provides failure isolation, which lets the Producer choose, as far as possible, a Broker that has not had send failures as the target. This keeps further messages away from a problematic Broker, which improves sending efficiency and reduces sending latency.

Thinking: how could we implement failure isolation ourselves?

1) Scheme 1: the Producer maintains a JUC Map (such as a ConcurrentHashMap) whose key is the failure timestamp and whose value is the Broker instance, plus a Set holding all Broker instances that have had no send exceptions. The target Broker is always chosen from the Set. A scheduled task periodically moves Brokers that have had no send exceptions for a long time out of the Map and back into the Set.

2) Scheme 2: give each Broker instance in the Producer a flag, such as an AtomicBoolean, and set it to true whenever a send to that Broker fails. Only Brokers whose flag is false are chosen as targets. A scheduled task periodically resets the flags to false.

3) Scheme 3: give each Broker instance in the Producer a counter, such as an AtomicLong, and increment it whenever a send to that Broker fails. The Broker with the smallest counter is chosen as the target; if several share the smallest value, one of them is chosen by polling.
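As one possible answer to the exercise, here is a sketch of Scheme 3: a per-Broker `AtomicLong` failure counter, choosing among the Brokers with the fewest failures by polling. It is a hypothetical illustration, not RocketMQ's code; the real client uses a different, latency-based fault strategy (enabled with `setSendLatencyFaultEnable(true)`), and a practical version of this scheme would also need some way to let counters decay, much like the scheduled tasks in Schemes 1 and 2.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch of Scheme 3: per-Broker failure counters, fewest failures wins
class FailureCountSelector {

    private final Map<String, AtomicLong> failures = new ConcurrentHashMap<>();
    private final AtomicInteger cursor = new AtomicInteger();

    // Call whenever a send to this Broker throws
    void recordFailure(String broker) {
        failures.computeIfAbsent(broker, b -> new AtomicLong()).incrementAndGet();
    }

    long failureCount(String broker) {
        AtomicLong count = failures.get(broker);
        return count == null ? 0 : count.get();
    }

    // Returns a Broker with the minimum failure count, polling among ties
    String select(List<String> brokers) {
        // Snapshot the counts so concurrent failures cannot empty the tie list
        long[] counts = new long[brokers.size()];
        long min = Long.MAX_VALUE;
        for (int i = 0; i < brokers.size(); i++) {
            counts[i] = failureCount(brokers.get(i));
            min = Math.min(min, counts[i]);
        }
        List<String> tied = new ArrayList<>();
        for (int i = 0; i < brokers.size(); i++) {
            if (counts[i] == min) {
                tied.add(brokers.get(i));
            }
        }
        return tied.get(Math.floorMod(cursor.getAndIncrement(), tied.size()));
    }

    public static void main(String[] args) {
        FailureCountSelector selector = new FailureCountSelector();
        List<String> brokers = Arrays.asList("broker-a", "broker-b", "broker-c");
        selector.recordFailure("broker-a");
        for (int i = 0; i < 4; i++) {
            System.out.println(selector.select(brokers)); // broker-b, broker-c, broker-b, broker-c
        }
    }
}
```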

If the message still fails after all retries, an exception is thrown, and it is then up to the Producer to ensure that the message is not lost. Note that the Producer automatically resends the message when it encounters a RemotingException, MQClientException or MQBrokerException.

3 asynchronous sending failure policy

When an asynchronous send fails, the retry does not choose another Broker; it retries only on the same Broker, so this strategy cannot guarantee that the message is not lost.

```java
DefaultMQProducer producer = new DefaultMQProducer("pg");
producer.setNamesrvAddr("rocketmqOS:9876");
// Do not retry after an asynchronous send fails
producer.setRetryTimesWhenSendAsyncFailed(0);
```

4 message flush failure strategy

When flushing a message to disk times out (on the Master or the Slave), or the Slave is unavailable (the Slave returns a status other than SEND_OK to the Master during data synchronization), by default the message is not retried on another Broker. For important messages, this retry can be enabled by setting the Producer's retryAnotherBrokerWhenNotStoreOK property to true.

8, Message consumption retry mechanism

1 consumption retry of sequential messages

For sequential messages, when the Consumer fails to consume a message, it automatically retries that message continuously until consumption succeeds, so that message order is preserved. The default interval between retries is 1000 milliseconds. While the retries go on, consumption in the application is blocked.

```java
DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("cg");
// Retry interval after a sequential message fails to be consumed, in ms.
// Default 1000; valid range [10, 30000]
consumer.setSuspendCurrentQueueTimeMillis(100);
```

Because sequential messages are retried endlessly until consumption succeeds, it is important that the application detects and handles consumption failures promptly, so that consumption is not blocked permanently.

Note that sequential messages have no send failure retry mechanism, but they do have a consumption failure retry mechanism.
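The blocking behavior described above can be modeled as follows: the handler is called on the same message until it succeeds, with a suspend interval between attempts, and nothing behind it in the queue is consumed in the meantime. This is a hypothetical sketch; in RocketMQ the interval is set with `setSuspendCurrentQueueTimeMillis`, while `maxAttempts` exists here only so the demo cannot block forever, whereas real sequential retries never stop.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

class OrderedRetrySketch {

    // Consumes messages strictly in order. A failed message is retried after
    // suspendMillis, and nothing behind it is consumed until it succeeds.
    // maxAttempts (total handler calls per message) only keeps the demo from blocking forever
    static List<String> consumeInOrder(List<String> queue, Predicate<String> handler,
                                       long suspendMillis, int maxAttempts) {
        List<String> consumed = new ArrayList<>();
        for (String msg : queue) {
            int attempts = 0;
            while (!handler.test(msg)) {
                attempts++;
                if (attempts >= maxAttempts) {
                    throw new IllegalStateException("queue blocked at " + msg);
                }
                try {
                    Thread.sleep(suspendMillis); // the whole queue waits here
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("interrupted while blocked at " + msg, e);
                }
            }
            consumed.add(msg);
        }
        return consumed;
    }

    public static void main(String[] args) {
        int[] failuresLeft = {2}; // "m2" fails twice before succeeding
        List<String> consumed = consumeInOrder(Arrays.asList("m1", "m2", "m3"),
                m -> !(m.equals("m2") && failuresLeft[0]-- > 0), 100, 10);
        System.out.println(consumed); // [m1, m2, m3]
    }
}
```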

2 consumption retry of unordered messages

For unordered messages (normal, delayed and transaction messages), when the Consumer fails to consume a message, a retry can be requested through the returned status. Note, however, that retries of unordered messages only work in clustering mode; broadcasting mode has no failure retry. That is, in broadcasting mode a message that fails is not retried, and consumption simply continues with the following messages.

3 Number and interval of consumption retries

For retries of unordered messages in clustering mode, each message can be retried up to 16 times by default, with intervals that become progressively longer, as shown in the following table.

| Retry count | Interval since previous attempt | Retry count | Interval since previous attempt |
|---|---|---|---|
| 1 | 10 seconds | 9 | 7 minutes |
| 2 | 30 seconds | 10 | 8 minutes |
| 3 | 1 minute | 11 | 9 minutes |
| 4 | 2 minutes | 12 | 10 minutes |
| 5 | 3 minutes | 13 | 20 minutes |
| 6 | 4 minutes | 14 | 30 minutes |
| 7 | 5 minutes | 15 | 1 hour |
| 8 | 6 minutes | 16 | 2 hours |

If a message keeps failing, its 16th retry takes place about 4 hours and 46 minutes after the first (normal) consumption attempt. If that retry also fails, the message is delivered to the dead-letter queue.

Modify consumption retry times

```java
DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("cg");
// Change the maximum number of consumption retries
consumer.setMaxReconsumeTimes(10);
```

With a modified maximum, retries are scheduled as follows:

- If the new value is 16 or less, the retries follow the intervals in the table above

- If the new value is greater than 16, every retry beyond the 16th takes place 2 hours after the previous one
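The retry table and the rule for a modified maximum can be written as a small function. Summing it also checks the figure quoted above: 16 retries add up to 4 h 45 min 40 s, which rounds to about 4 hours 46 minutes. The intervals are copied from the table; the class name is hypothetical, and the code models the documented policy rather than reading it from a Broker.

```java
class RetrySchedule {

    // Intervals in seconds for retries 1..16, copied from the table above
    private static final long[] INTERVALS = {
        10, 30, 60, 120, 180, 240, 300, 360,
        420, 480, 540, 600, 1200, 1800, 3600, 7200
    };

    // Interval before the n-th retry (1-based); every retry beyond the 16th waits 2 hours
    static long intervalSeconds(int n) {
        if (n < 1) {
            throw new IllegalArgumentException("retry count starts at 1");
        }
        return n <= INTERVALS.length ? INTERVALS[n - 1] : 7200;
    }

    // Total waiting time up to the last retry for a given maxReconsumeTimes
    static long totalDelaySeconds(int maxReconsumeTimes) {
        long total = 0;
        for (int n = 1; n <= maxReconsumeTimes; n++) {
            total += intervalSeconds(n);
        }
        return total;
    }

    public static void main(String[] args) {
        long t = totalDelaySeconds(16);
        System.out.printf("%dh %dm %ds%n", t / 3600, (t % 3600) / 60, t % 60); // 4h 45m 40s
    }
}
```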

Within a Consumer Group, if the retry count is modified on only one Consumer instance, the setting applies to all other Consumer instances in the Group as well. If several instances set it, the value set last overwrites the earlier ones.

4 retry queue

For a message that must be consumed again, the Consumer does not simply wait for the specified time and then pull the original message again. Instead, the message is put into a queue of a special Topic and consumed again from there. This special queue is the retry queue.

When a message needs to be retried, the Broker sets up a retry queue for each consumer group, whose Topic is named %RETRY%consumerGroup (the prefix %RETRY% followed by the consumer group name).

1) The retry queue is set up per consumer group, not per Topic (the messages of one Topic can be consumed by several consumer groups, so each of those groups gets its own retry queue)

2) A consumer group's retry queue is created only when a message that needs to be retried first appears

Note that the retry intervals closely match the delay levels used for delayed messages: apart from the first two delay levels, which retries do not use, they are the same.

The Broker handles a retry message as a delayed message. The message is first saved to the SCHEDULE_TOPIC_XXXX delay queue, and when its delay expires it is delivered to the %RETRY%consumerGroup retry queue.
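The correspondence between retries and delay levels can be sketched as follows. It assumes the Broker's default `messageDelayLevel` setting (1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h) and that the n-th retry uses level n + 2, which reproduces the retry table; retries past the last level are clamped to it, matching the 2-hour rule for maxima above 16. `RetryDelayLevels` is a hypothetical helper, and its topic name simply applies the `%RETRY%` prefix mentioned above.

```java
class RetryDelayLevels {

    // Assumed default value of the Broker's messageDelayLevel setting (18 levels)
    static final String DEFAULT_DELAY_LEVELS =
            "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h";

    // Parses tokens such as "10s", "2m", "1h" or "1d" into seconds
    static long toSeconds(String token) {
        long value = Long.parseLong(token.substring(0, token.length() - 1));
        switch (token.charAt(token.length() - 1)) {
            case 's': return value;
            case 'm': return value * 60;
            case 'h': return value * 3600;
            case 'd': return value * 86400;
            default: throw new IllegalArgumentException("bad delay token: " + token);
        }
    }

    // The n-th retry (1-based) uses delay level n + 2, skipping the 1s and 5s levels;
    // retries beyond the last level reuse the highest level
    static long retryDelaySeconds(String levels, int n) {
        if (n < 1) {
            throw new IllegalArgumentException("retry count starts at 1");
        }
        String[] tokens = levels.trim().split("\\s+");
        int level = Math.min(n + 2, tokens.length);
        return toSeconds(tokens[level - 1]);
    }

    // Retry topic of a consumer group
    static String retryTopic(String consumerGroup) {
        return "%RETRY%" + consumerGroup;
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 16; n++) {
            System.out.println("retry " + n + ": " + retryDelaySeconds(DEFAULT_DELAY_LEVELS, n) + "s");
        }
        System.out.println(retryTopic("cg")); // %RETRY%cg
    }
}
```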

5 Configuring consumption retry

In clustering mode, if a message should be retried after its consumption fails, the message listener implementation must explicitly do one of the following three things:

- Method 1: return ConsumeConcurrentlyStatus.RECONSUME_LATER (recommended)

- Method 2: return null

- Method 3: throw an exception

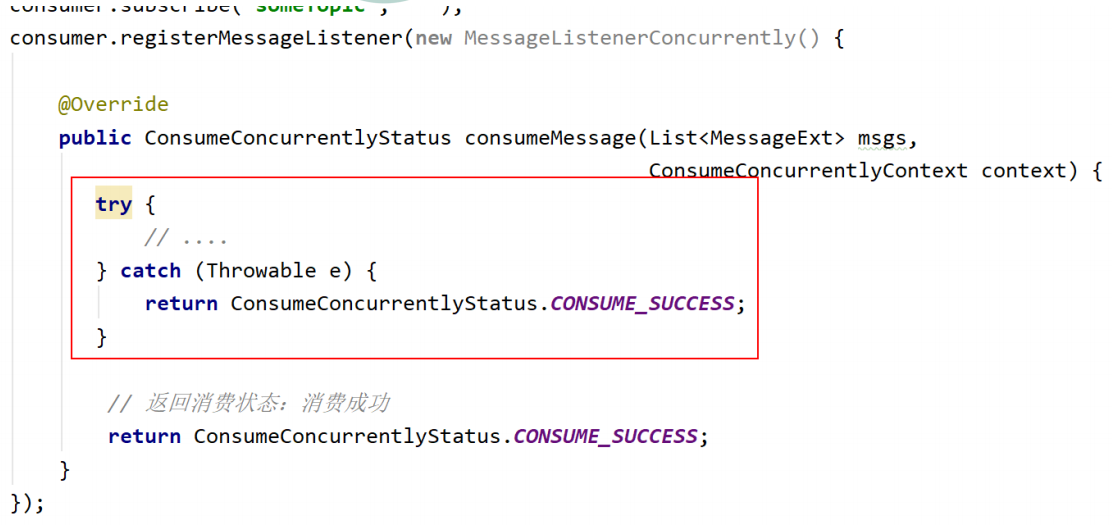

6 Configuring no retry

In clustering mode, if a message should not be retried after its consumption fails, catch the exception and return the same result as for success, ConsumeConcurrentlyStatus.CONSUME_SUCCESS. No consumption retry is then performed.

9, Dead letter queue

1 what is a dead letter queue

When a message fails to be consumed the first time, the message queue automatically retries it. If consumption still fails after the maximum number of retries, the consumer evidently cannot consume the message correctly under normal circumstances. The message queue then does not discard the message immediately, but sends it to a special queue belonging to the consumer group. This queue is the dead-letter queue (DLQ), and the messages in it are called dead-letter messages (DLM).

Dead letter queue is used to process messages that cannot be consumed normally.

2 characteristics of dead letter queue

The dead letter queue has the following characteristics:

- Messages in the dead letter queue will not be consumed by consumers normally, that is, DLQ is invisible to consumers

- Dead-letter messages are kept as long as normal messages: 3 days (the expiration time of commitlog files), after which they are deleted automatically

- The dead-letter queue is a special Topic named %DLQ%consumerGroup (the prefix %DLQ% followed by the consumer group name), so each consumer group has its own dead-letter queue

- If a consumer group never produces a dead-letter message, no dead-letter queue is created for it
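The route a repeatedly failing message takes can be summarized in a few lines. This is a hypothetical model of the rule described in this chapter, not Broker source code: while the number of retries already performed is below the maximum (16 by default), a failed message goes back through the group's retry topic; once the maximum is reached, it goes to the group's dead-letter topic instead.

```java
class SendBackRouter {

    // Where a failed message goes next, given how many retries have already happened
    static String destination(String consumerGroup, int reconsumeTimes, int maxReconsumeTimes) {
        if (reconsumeTimes >= maxReconsumeTimes) {
            return "%DLQ%" + consumerGroup;   // retries exhausted: dead-letter queue
        }
        return "%RETRY%" + consumerGroup;     // still retryable: retry queue
    }

    public static void main(String[] args) {
        // A message in group "cg" that keeps failing, with the default maximum of 16
        for (int times : new int[] {0, 15, 16}) {
            System.out.println(times + " -> " + destination("cg", times, 16));
        }
    }
}
```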

3 processing of dead letter message

When a message enters the dead-letter queue, some part of the system has a problem that prevents consumers from consuming it normally, for example a bug in the code. Dead-letter messages therefore usually need special handling by developers. The key step is to investigate the suspicious factors, fix any bugs in the code, and then redeliver the original dead-letter messages so that they are consumed again.