1, Rate limiting algorithms

In high-concurrency systems, there are three main tools for protecting the system: caching, degradation, and rate limiting.

This article focuses on rate limiting. There are three common rate limiting algorithms:

- 1. Counter algorithm

  - Fixed window

  - Sliding window

- 2. Token bucket algorithm

- 3. Leaky bucket algorithm

1. Counter algorithm

1.1 Fixed window algorithm

The counter algorithm is the simplest rate limiting algorithm and the easiest to implement. Suppose we stipulate that interface A may not be called more than 100 times in one minute. We keep a counter together with the start time of the current window. Whenever a request arrives, the counter is increased by 1. If the counter exceeds 100 while the request is still within 1 minute of the first request in the window, there are too many requests and the request is rejected. If more than 1 minute has passed since the first request in the window, the counter is reset and a new window starts.

The specific implementation in Java is as follows:

public class CounterTest {

    private long timeStamp = getNowTime(); // Start of the current time window
    private int reqCount = 0;              // Requests counted in the current window
    private final int limit = 100;         // Maximum requests per time window
    private final long interval = 60_000;  // Time window in ms (1 minute, matching the rule above)

    // synchronized so that concurrent requests do not corrupt the counter
    public synchronized boolean grant() {
        long now = getNowTime();
        if (now < timeStamp + interval) {
            // Within the time window
            reqCount++;
            // Check whether the limit is exceeded in the current time window
            return reqCount <= limit;
        } else {
            // The window has expired: start a new one and count this request
            timeStamp = now;
            reqCount = 1;
            return true;
        }
    }

    public long getNowTime() {
        return System.currentTimeMillis();
    }
}

The specific implementation in .NET Core is as follows:

AspNetCoreRateLimit is currently one of the most commonly used rate limiting solutions for ASP.NET Core. Its source code implements a fixed window. The core of the algorithm is as follows:

var entry = await _counterStore.GetAsync(counterId, cancellationToken);

if (entry.HasValue)
{
    // entry has not expired
    if (entry.Value.Timestamp + rule.PeriodTimespan.Value >= DateTime.UtcNow)
    {
        // increment request count
        var totalCount = entry.Value.Count + _config.RateIncrementer?.Invoke() ?? 1;

        // deep copy
        counter = new RateLimitCounter
        {
            Timestamp = entry.Value.Timestamp,
            Count = totalCount
        };
    }
}

Disadvantages of the fixed window algorithm

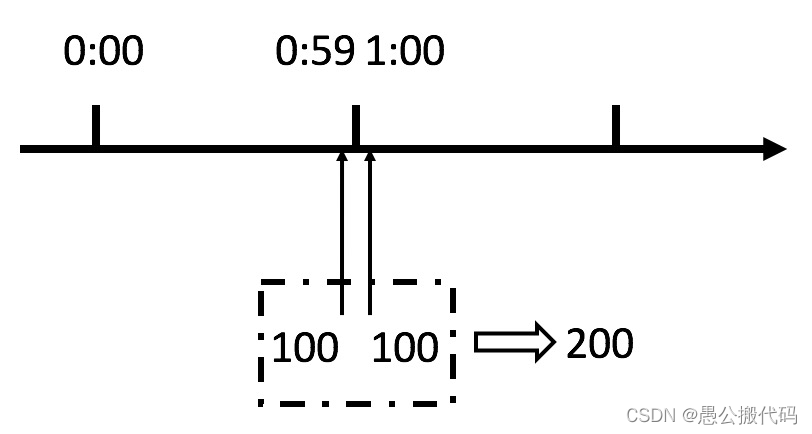

As the figure above shows, if a malicious user sends 100 requests at 0:59 and another 100 requests at 1:00, the user has actually sent 200 requests within about one second. The rule we stipulated is at most 100 requests per minute, which averages to roughly 1.7 requests per second. By bursting requests around the reset boundary of the time window, a user can momentarily far exceed the rate limit, and may overwhelm the application in an instant through this loophole in the algorithm.
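The boundary problem can be reproduced with a small sketch. The class below is a hypothetical variant of the counter shown earlier: it takes the current time as a parameter, an assumption made only so that the example is deterministic. The names `FixedWindowCounter`, `grant(long nowMs)` and `burst` are illustrative and do not come from any library.

```java
// Hypothetical fixed window counter with an explicit clock, for illustration only.
class FixedWindowCounter {
    private final int limit;        // maximum requests per window
    private final long intervalMs;  // window length in milliseconds
    private long windowStart;       // start time of the current window
    private int reqCount = 0;       // requests seen in the current window

    FixedWindowCounter(int limit, long intervalMs, long startMs) {
        this.limit = limit;
        this.intervalMs = intervalMs;
        this.windowStart = startMs;
    }

    // Returns true if a request arriving at nowMs is allowed.
    synchronized boolean grant(long nowMs) {
        if (nowMs >= windowStart + intervalMs) {
            // The window has expired: start a new one at this request.
            windowStart = nowMs;
            reqCount = 0;
        }
        reqCount++;
        return reqCount <= limit;
    }

    // Sends `count` requests at time nowMs and returns how many were allowed.
    static int burst(FixedWindowCounter counter, long nowMs, int count) {
        int allowed = 0;
        for (int i = 0; i < count; i++) {
            if (counter.grant(nowMs)) {
                allowed++;
            }
        }
        return allowed;
    }

    public static void main(String[] args) {
        // Limit: 100 requests per 60-second window, starting at time 0.
        FixedWindowCounter counter = new FixedWindowCounter(100, 60_000, 0);
        int beforeReset = burst(counter, 59_000, 100); // 100 requests at 0:59
        int afterReset = burst(counter, 60_000, 100);  // 100 requests at 1:00
        System.out.println(beforeReset + afterReset);  // prints 200
    }
}
```

All 200 requests are accepted even though they arrive within one second of each other, because the counter is reset exactly between the two bursts.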

1.2 Sliding window algorithm

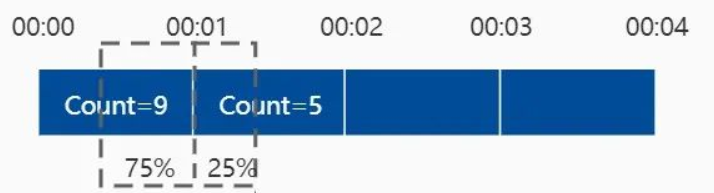

The sliding window algorithm is similar to the fixed window algorithm, but it estimates the request count by adding a weighted share of the previous window's count to the current window's count. If the estimate exceeds the limit, the request is rejected.

The specific formula is as follows:

Estimate = Previous window count * (1 - Elapsed time of current window / Window length) + Current window count

For example, suppose the limit is 10 requests per minute, with 9 requests in window [00:00, 01:00) and 5 requests in window [01:00, 02:00). For a request arriving at 01:15, that is, 25% of the way through window [01:00, 02:00), the estimate is 9 x (1 - 25%) + 5 = 11.75 > 10. Therefore, we reject this request.

The request is rejected even though neither window exceeds the limit on its own, because the weighted sum of the previous and current windows does.

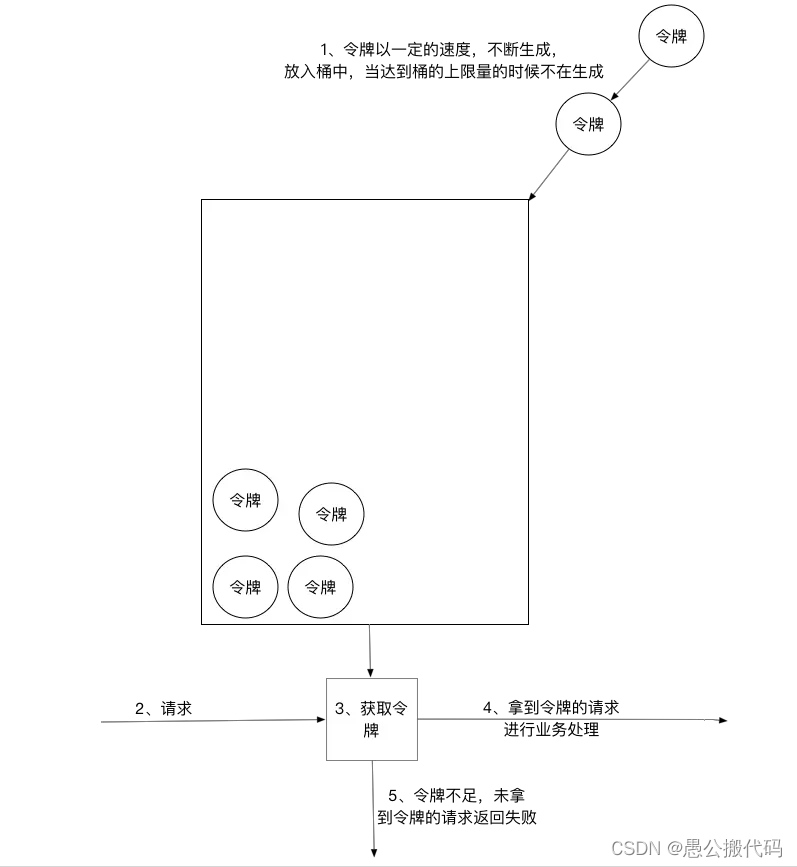
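The formula can be checked with a few lines of Java. This is only a sketch: `SlidingWindowEstimate`, `estimate` and `allow` are hypothetical names, and the elapsed time and window length are passed in seconds. Whether the incoming request itself is added to the current count before comparing is a design choice; the sketch follows the worked example and uses the current count of 5 directly.

```java
// Illustrative helper; the class and method names are assumptions, not a library API.
class SlidingWindowEstimate {

    // Weighted estimate: the previous count is scaled by the share of the previous
    // window that still overlaps the sliding window, then the current count is added.
    static double estimate(int prevCount, int currCount,
                           double elapsedInCurrent, double windowLength) {
        double prevWeight = 1.0 - elapsedInCurrent / windowLength;
        return prevCount * prevWeight + currCount;
    }

    // A request is allowed only while the estimate stays within the limit.
    static boolean allow(int prevCount, int currCount,
                         double elapsedInCurrent, double windowLength, int limit) {
        return estimate(prevCount, currCount, elapsedInCurrent, windowLength) <= limit;
    }

    public static void main(String[] args) {
        // 9 requests in the previous window, 5 in the current one,
        // 15 seconds into a 60-second window, limit of 10.
        System.out.println(estimate(9, 5, 15, 60));  // prints 11.75
        System.out.println(allow(9, 5, 15, 60, 10)); // prints false
    }
}
```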

2. Token bucket algorithm

The token bucket algorithm is one of the more common rate limiting algorithms. It can be roughly described as follows:

1) Every request must obtain an available token before it is processed;

2) Tokens are added to the bucket at a fixed rate derived from the rate limit;

3) The bucket has a maximum capacity. When the bucket is full, newly added tokens are discarded;

4) When a request arrives, it first takes a token from the bucket and then carries out its business logic with the token. After the business logic completes, the token is deleted;

5) The bucket also has a minimum limit. When the number of tokens in the bucket falls to this minimum, tokens are no longer deleted after requests are processed, so that enough tokens remain for rate limiting.

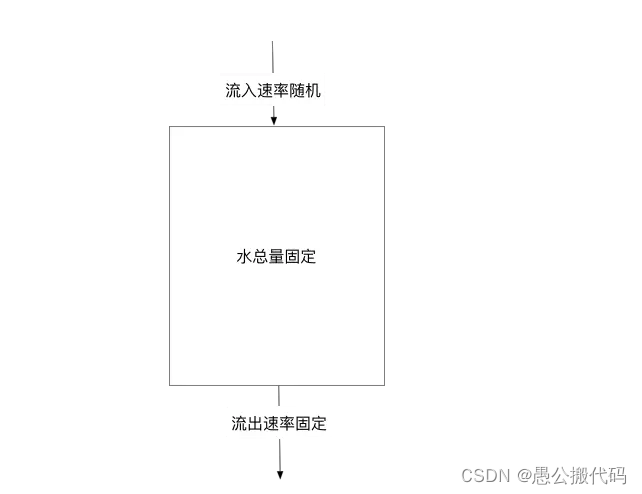
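A minimal sketch of steps 1) to 4) might look like the following. It makes several assumptions that are not in the description above: tokens are refilled lazily when a request arrives rather than by a background task, the current time is passed in explicitly to keep the example deterministic, and the minimum limit from step 5) is left out. `TokenBucket` and `tryAcquire` are hypothetical names.

```java
// Minimal token bucket sketch; names and the explicit-clock design are assumptions.
class TokenBucket {
    private final long capacity;       // maximum number of tokens the bucket can hold
    private final double refillPerMs;  // tokens added per millisecond
    private double tokens;             // tokens currently available
    private long lastRefillMs;         // time of the last refill

    TokenBucket(long capacity, double tokensPerSecond, long startMs) {
        this.capacity = capacity;
        this.refillPerMs = tokensPerSecond / 1000.0;
        this.tokens = capacity;        // start with a full bucket
        this.lastRefillMs = startMs;
    }

    // Tries to take one token for a request arriving at nowMs.
    synchronized boolean tryAcquire(long nowMs) {
        refill(nowMs);
        if (tokens >= 1.0) {
            tokens -= 1.0;             // the request consumes one token
            return true;
        }
        return false;                  // no token available: reject the request
    }

    // Adds the tokens accrued since the last refill; tokens beyond capacity are discarded.
    private void refill(long nowMs) {
        if (nowMs > lastRefillMs) {
            tokens = Math.min(capacity, tokens + (nowMs - lastRefillMs) * refillPerMs);
            lastRefillMs = nowMs;
        }
    }
}
```

With `new TokenBucket(5, 1.0, 0)`, a burst of six requests at time 0 lets five through and rejects the sixth. After 2.5 seconds the bucket has accrued 2.5 tokens, so two more requests pass. Unlike the fixed window counter, the bucket tolerates short bursts up to its capacity while keeping the long-term rate at the refill rate.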

3. Leaky bucket algorithm

The leaky bucket algorithm is actually very simple. It can be roughly regarded as a process of pouring water into a bucket that leaks. Water (requests) flows into the bucket at an arbitrary rate and leaks out of it at a fixed rate. When the incoming water exceeds the capacity of the bucket, the overflow is discarded. Because the bucket capacity and the leak rate do not change, the overall processing rate is guaranteed.
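The water analogy can be sketched as a simple meter, shown below. It only tracks the water level and decides whether a request fits; it does not queue requests for later processing, and the current time is passed in explicitly so the behaviour is deterministic. Both are assumptions for illustration, and `LeakyBucket` and `tryAdd` are hypothetical names.

```java
// Minimal leaky bucket sketch (meter variant); names and the explicit clock are assumptions.
class LeakyBucket {
    private final double capacity;   // how much water (pending requests) the bucket holds
    private final double leakPerMs;  // outflow rate in requests per millisecond
    private double water = 0.0;      // current water level
    private long lastLeakMs;         // time the level was last updated

    LeakyBucket(double capacity, double leakPerSecond, long startMs) {
        this.capacity = capacity;
        this.leakPerMs = leakPerSecond / 1000.0;
        this.lastLeakMs = startMs;
    }

    // Tries to add one request arriving at nowMs; returns false if the bucket would overflow.
    synchronized boolean tryAdd(long nowMs) {
        // First drain the water that has leaked out since the last update.
        if (nowMs > lastLeakMs) {
            water = Math.max(0.0, water - (nowMs - lastLeakMs) * leakPerMs);
            lastLeakMs = nowMs;
        }
        if (water + 1.0 <= capacity) {
            water += 1.0;            // the request fits in the bucket
            return true;
        }
        return false;                // overflow: the request is discarded
    }
}
```

With `new LeakyBucket(3, 1.0, 0)`, four requests at time 0 fill the bucket with three and discard the fourth. After 1.5 seconds, 1.5 units have leaked out, so exactly one more request fits.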

2, Implementing rate limiting with ASP.NET Core middleware

1. Middleware code

public class SlidingWindow
{
    private readonly object _syncObject = new object();
    private readonly int _requestIntervalSeconds; // window length in seconds
    private readonly int _requestLimit;           // maximum requests per window
    private DateTime _windowStartTime;            // start of the current window
    private int _prevRequestCount;                // requests counted in the previous window
    private int _requestCount;                    // requests counted in the current window

    public SlidingWindow(int requestLimit, int requestIntervalSeconds)
    {
        // UtcNow avoids jumps caused by daylight saving time changes
        _windowStartTime = DateTime.UtcNow;
        _requestLimit = requestLimit;
        _requestIntervalSeconds = requestIntervalSeconds;
    }

    public bool PassRequest()
    {
        lock (_syncObject)
        {
            var currentTime = DateTime.UtcNow;
            var elapsedSeconds = (currentTime - _windowStartTime).TotalSeconds;

            if (elapsedSeconds >= _requestIntervalSeconds * 2)
            {
                // Two or more windows have passed: start counting from scratch
                _windowStartTime = currentTime;
                _prevRequestCount = 0;
                _requestCount = 0;
                elapsedSeconds = 0;
            }
            else if (elapsedSeconds >= _requestIntervalSeconds)
            {
                // One window has passed: the current window becomes the previous one
                _windowStartTime = _windowStartTime.AddSeconds(_requestIntervalSeconds);
                _prevRequestCount = _requestCount;
                _requestCount = 0;
                elapsedSeconds = (currentTime - _windowStartTime).TotalSeconds;
            }

            // Weighted previous count + current count + the incoming request
            var requestCount = _prevRequestCount * (1 - elapsedSeconds / _requestIntervalSeconds) + _requestCount + 1;

            if (requestCount <= _requestLimit)
            {
                _requestCount++;
                return true;
            }
        }

        return false;
    }
}

If two or more window lengths have passed since the current window started, the previous window count can be treated as 0 and counting restarts. This is the first branch in PassRequest.

public class RateLimitMiddleware : IMiddleware
{
    private readonly SlidingWindow _window;

    public RateLimitMiddleware()
    {
        // Allow at most 10 requests per 60-second window
        _window = new SlidingWindow(10, 60);
    }

    public async Task InvokeAsync(HttpContext context, RequestDelegate next)
    {
        if (!_window.PassRequest())
        {
            // Over the limit: replace the matched endpoint with one that only
            // returns 429 Too Many Requests
            context.SetEndpoint(new Endpoint((httpContext) =>
            {
                httpContext.Response.StatusCode = StatusCodes.Status429TooManyRequests;
                return Task.CompletedTask;
            },
            EndpointMetadataCollection.Empty,
            "Rate limiting"));
        }

        await next(context);
    }
}

2. Use in pipeline

Note that the middleware must be registered as a singleton so that all requests are counted by the same SlidingWindow instance:

services.AddSingleton<RateLimitMiddleware>();

Then add it to the request pipeline:

app.UseMiddleware<RateLimitMiddleware>();