(A note first: when I created this project, Baidu's robots.txt disallowed only Taobao, so my crawler was permitted. Baidu has since changed its robots.txt, so this article does not include the complete code.)

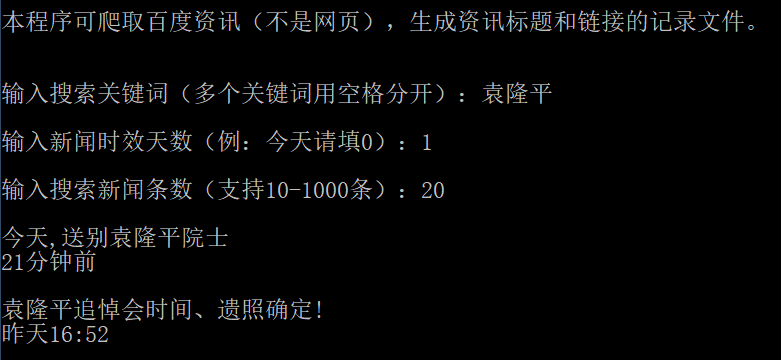

[project preview]

[background]

After learning about web crawlers, my first program crawled Toutiao ("Today's Headlines"). Then my husband said Toutiao was a low-brow upstart, while Baidu is an old aristocrat of China's Internet industry, and told me to go write a program that crawls Baidu News.

[process analysis]

1. Which page to crawl? If you search a keyword on Baidu directly, you land on the "Web" tab. Its results are too mixed: encyclopedia entries, news, ads, forum posts, music... That is no good for a news crawler, so I chose to crawl the "Information" tab directly.

2. Timeliness: when searching news you usually want recent items. For example, I may only want news from the past day. Baidu shows each item's publication time, so its age can be computed with datetime.

3. News quality: in the Toutiao project I also added a "comment count" filter. It screened out items with few comments (in my view, those are just filler) so that only high-quality picks remained. However, Baidu Information does not display comment counts well, so I had to drop this feature for now.

4. Removing duplicate news: only after the first crawl did I realize how many duplicates Baidu returns. News sites copy one another's stories, and some don't even bother to change the headline. So I set up a "name pool". Each item's title is first checked against the pool, and only titles not already in it are displayed and saved.
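The filtering in items 2 and 4 can be sketched as a standalone function. This is a minimal sketch, not the author's code. The English labels ("5 minutes ago", "yesterday", "3 days ago", ...) are assumed renderings of the Chinese time labels Baidu displays, and `label_to_days` and `filter_news` are hypothetical names. The full program falls back to the snapshot page for absolute dates, but this sketch simply skips any item whose age it cannot determine.

```python
import re
from typing import Iterable, List, Optional, Tuple


def label_to_days(label: str) -> Optional[int]:
    """Convert a relative publication-time label into an age in days.

    Returns None for anything else (e.g. an absolute date), which the
    original program resolves separately via the cached snapshot page.
    """
    text = label.lower()
    if "minute" in text or "hour" in text:
        return 0
    # Test the longer phrase first: "day before yesterday" contains "yesterday".
    if "day before yesterday" in text:
        return 2
    if "yesterday" in text:
        return 1
    match = re.search(r"(\d+)\s*days? ago", text)
    if match:
        return int(match.group(1))
    return None


def filter_news(items: Iterable[Tuple[str, str]], max_days: int) -> List[str]:
    """Keep titles that are recent enough and not already in the name pool."""
    name_pool = set()  # titles already kept
    kept = []
    for title, label in items:
        age = label_to_days(label)
        if age is None or age > max_days:
            continue  # unknown or too old
        if title in name_pool:
            continue  # duplicate headline copied by another site
        name_pool.add(title)
        kept.append(title)
    return kept


items = [("A", "5 minutes ago"), ("B", "yesterday"), ("A", "3 hours ago"),
         ("C", "3 days ago"), ("D", "2020-11-05")]
print(filter_news(items, 1))  # ['A', 'B']
```

A set makes each name-pool lookup constant-time on average. The original list also works, but every lookup scans all stored titles.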

[lessons learned]

My strongest impression is that Toutiao isn't so low-brow after all. Compared with Baidu, Toutiao returns fewer but more precise results, and it lists comment counts, which makes it easy for me to judge which stories are trending. Baidu search is large and comprehensive, but the information is so mixed that people can't find what they actually want to see.

[incomplete code] (crawling and parsing statements are omitted)

```python
from openpyxl import Workbook
from bs4 import BeautifulSoup
import requests, datetime, time


def daydiff(day1, day2):
    """Return the absolute number of days between two 'YYYY-MM-DD' dates."""
    time_array1 = time.strptime(day1, "%Y-%m-%d")
    timestamp_day1 = int(time.mktime(time_array1))
    time_array2 = time.strptime(day2, "%Y-%m-%d")
    timestamp_day2 = int(time.mktime(time_array2))
    result = (timestamp_day2 - timestamp_day1) // 60 // 60 // 24
    if result < 0:
        result = -result
    return result


headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36'}
url = 'https://www.baidu.com/s'

print('\n This program crawls Baidu Information (not Web) results and saves the titles and links to a record file.\n')

while True:
    key = input('\n Enter search keywords (separate multiple keywords with spaces): ')
    days = int(input('\n Enter the maximum news age in days (enter 0 for today only): '))
    item = int(input('\n Enter the number of news items to search (10-1000): '))
    filename = key + '-Baidu information.xlsx'

    wb = Workbook()
    ws = wb.active
    ws.append(['Title', 'Source', 'Published', 'Link', 'Abstract'])

    titlepoor = []  # "name pool": titles already kept, used to drop duplicates
    count = 0       # items that passed the filters
    count0 = 0      # items examined

    # Baidu pages through results by offset: pn = 0, 10, 20, ...
    for x in range(0, item, 10):
        page = x // 10 + 1
        params = {
            'rtt': 1,
            'bsst': 1,
            'cl': 2,
            'tn': 'news',  # the "Information" (news) tab
            'ie': 'utf-8',
            'wd': key,
            'pn': x,
        }
        try:
            # Crawling and parsing statements (omitted)
            res = xxxxxx
            soup = xxxxxx
            for i in range(1, 11):
                count0 += 1
                contan = soup.find(id='content_left')
                # Result ids run on across pages: 1-10 on page 1, 11-20 on page 2, ...
                idd = contan.find(id=str(i + x))
                titletag = idd.find('h3')
                href = titletag.find('a')['href']
                title = titletag.find('a').text
                source = idd.find(class_="news-source").find(class_="c-color-gray").text.strip()
                time0 = idd.find(class_="news-source").find(class_="c-color-gray2").text.strip()
                zhaiyao = idd.find(class_="c-color-text").text.strip()  # abstract

                # Relative-time labels (translated here; the page shows them in Chinese).
                # "day before yesterday" must be tested before "yesterday", which it contains.
                label = time0.lower()
                if 'minute' in label or 'hour' in label:
                    day = 0
                elif 'day before yesterday' in label:
                    day = 2
                elif 'yesterday' in label:
                    day = 1
                elif 'days ago' in label:
                    day = int(label.split()[0])  # e.g. "3 days ago" -> 3
                else:
                    # Absolute date: read the publication time from the cached snapshot
                    try:
                        today = datetime.date.today().isoformat()
                        kuaizhao = idd.find('div', class_="c-span-last")
                        kuaizhaol = kuaizhao.find('a')['href']
                        # Crawl the snapshot and get the release time (omitted)
                        res1 = xxxxxxxx
                        res1.encoding = xxxxxx
                        html1 = xxxxxx
                        soup1 = xxxxxxx
                        span = soup1.find(id="bd_snap_txt").find_all('span')
                        timee = (span[1].text)[12:24]
                        timeee = timee[:4] + '-' + timee[5:7] + '-' + timee[8:10]
                        day = daydiff(today, timeee)
                    except Exception:
                        day = 101  # date unknown: excluded unless the age limit is 101+ days
                if days >= day and title not in titlepoor:
                    titlepoor.append(title)
                    count += 1
                    ws.append([title, source, time0, href, zhaiyao])
                    print('\n' + title)
                    print(time0)
        except Exception:
            # A parse failure (typically a missing result id) marks the end of the results
            print('\n Reached page {}; there is no more content.'.format(page))
            break

    if count > 0:
        save = input('\n Searched {} news items; {} matched the criteria. Save locally? Press 1 to save, any other key to discard: '.format(count0, count))
        if save == '1':
            wb.save(filename)
            print('\n Save completed.')
    else:
        print("\n Oh, I didn't find any qualifying news. Please adjust the search criteria and search again.")

    print('\n' + '-' * 70)
    again = input('\n To continue searching, press Enter. To exit the program, press 0: ')
    if again == '0':
        input('\n Thanks for using. Bye.')
        break
```
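The `daydiff` helper converts both dates to Unix timestamps with `time.mktime`, which interprets them as local time. In time zones with daylight saving, an interval that crosses a DST switch is an hour off from a whole number of days. The floor division can then come out one day off. China does not observe DST, so the original is fine there. A possible alternative, sketched under the hypothetical name `daydiff_dates`, subtracts `datetime.date` objects directly. `date.fromisoformat` requires Python 3.7 or later.

```python
from datetime import date


def daydiff_dates(day1: str, day2: str) -> int:
    """Absolute number of days between two 'YYYY-MM-DD' strings.

    Date arithmetic counts calendar days, so no time zone or DST is involved.
    """
    d1 = date.fromisoformat(day1)
    d2 = date.fromisoformat(day2)
    return abs((d2 - d1).days)


print(daydiff_dates("2020-11-05", "2020-11-01"))  # 4
print(daydiff_dates("2020-02-28", "2020-03-01"))  # 2 (2020 is a leap year)
```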