YUV learning notes

- YUV

- Basic concepts

- Converting between YUV and RGB

- Storage layouts of YUV data

- Viewing YUV images with yuvplay

- Converting and viewing YUV images with ffmpeg

- YUV Parser

- 1. Separate Y, U and V components in YUV420P pixel data

- 2. Separate Y, U and V components in YUV444P pixel data

- 3. Remove color from YUV420P pixel data (grayscale)

- 4. Halve the brightness of YUV420P pixel data

- 5. Add a border around the YUV420P pixel data

- 6. Generate a grayscale test chart in YUV420P format

- 7. Calculate the PSNR of two YUV420P images

- To be continued

YUV

These notes are compiled from other people's blogs, Wikipedia, and Mr. Lei Xiaohua's blog.

Basic concepts

YUV was originally proposed to solve the compatibility problem between color TV and black-and-white TV. YUV consists of brightness information (Y) and color information (UV). Its advantage over RGB is that it does not require three independent video signals to be transmitted at the same time, so it can occupy less bandwidth (the chroma components can be sent at lower resolution than Y). For historical reasons, the terms YUV and Y'UV are usually used for the analog encoding of TV signals, while YCbCr describes digital image signals and is suited to video and image compression and transmission. U and V are sometimes written as Cb and Cr and play the same role, but strictly speaking YUV and YCbCr are distinct terms and are not exactly the same. In everyday usage today, "YUV" usually means YCbCr.

There are many kinds of YUV formats. They can be classified along two dimensions, "between spaces" and "within a space", by analogy with inter-frame and intra-frame coding in H.264:

- Between spaces: different spaces, i.e. a different number of bits describing each pixel, such as YUV444, YUV422, YUV411 and YUV420

- Within a space: the same space, i.e. the same number of bits per pixel, but a different storage layout. For example, YUV420 can be subdivided into YUV420P, YUV420SP, NV21, NV12, YV12, YU12 and I420

When studying a YUV format, always look at it from two aspects: the number of bits per pixel and the storage layout.

YUV formats fall into two storage types:

- planar formats: the Y values of all pixels are stored contiguously, followed by the U values of all pixels, then the V values of all pixels

- packed formats: the Y, U and V values of each pixel are stored together, interleaved pixel by pixel
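To illustrate the difference, here is a minimal sketch of where the three samples of one pixel sit in a 4:4:4 frame under each layout. It assumes the packed layout stores each pixel as Y, U, V in that order (real packed formats may order the bytes differently), and the names `SampleIndex`, `planar444_index` and `packed444_index` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Byte offsets of the Y, U and V samples of one pixel inside a frame.
struct SampleIndex { std::size_t y, u, v; };

// Planar 4:4:4: three full planes one after another (YYYY... UUUU... VVVV...).
SampleIndex planar444_index(std::size_t x, std::size_t row, std::size_t width, std::size_t height) {
    assert(x < width && row < height);
    const std::size_t p = row * width + x;      // pixel number in raster order
    const std::size_t plane = width * height;   // bytes in one plane
    return {p, plane + p, 2 * plane + p};
}

// Packed 4:4:4 (assumed Y, U, V order): each pixel's samples stored together (YUVYUV...).
SampleIndex packed444_index(std::size_t x, std::size_t row, std::size_t width, std::size_t height) {
    assert(x < width && row < height);
    const std::size_t p = row * width + x;
    return {3 * p, 3 * p + 1, 3 * p + 2};
}
```

In the planar layout the three samples of a pixel are a whole plane apart; in the packed layout they are adjacent bytes.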

YUV is divided into three components. "Y" is luminance (luma), i.e. the gray level; "U" and "V" are chrominance (chroma), which describe the color and saturation of the image and specify the color of each pixel.

In fact, the storage format of a YUV bitstream is closely tied to its sampling mode. There are four main sampling modes:

- YUV444: 4:4:4 means full sampling; every pixel has its own Y, U and V.

- YUV422: 4:2:2 means 2:1 horizontal chroma subsampling and full vertical sampling.

- YUV420: 4:2:0 means 2:1 horizontal and 2:1 vertical chroma subsampling.

- YUV411: 4:1:1 means 4:1 horizontal chroma subsampling and full vertical sampling.
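To make the sampling ratios concrete, here is a minimal sketch that computes the size of one 8-bit frame for each mode. The function name `frame_size` and the string mode labels are hypothetical, and it assumes the width and height are multiples of the subsampling factors.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Bytes in one 8-bit frame for a given chroma sampling mode.
// Y always has one sample per pixel; only U and V are subsampled.
std::size_t frame_size(const std::string& mode, std::size_t width, std::size_t height) {
    const std::size_t luma = width * height;   // Y samples
    std::size_t chroma = 0;                     // samples in ONE chroma plane (U or V)
    if (mode == "444") {
        chroma = luma;                          // full resolution
    } else if (mode == "422") {
        assert(width % 2 == 0);
        chroma = (width / 2) * height;          // half width, full height
    } else if (mode == "420") {
        assert(width % 2 == 0 && height % 2 == 0);
        chroma = (width / 2) * (height / 2);    // half width, half height
    } else if (mode == "411") {
        assert(width % 4 == 0);
        chroma = (width / 4) * height;          // quarter width, full height
    } else {
        return 0;                               // unknown mode
    }
    return luma + 2 * chroma;                   // Y + U + V
}
```

For a 512×512 image this gives 786432 bytes (4:4:4), 524288 (4:2:2) and 393216 (both 4:2:0 and 4:1:1), i.e. 24, 16, 12 and 12 bits per pixel.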

Converting between YUV and RGB

Y, U and V can be computed from the original R, G and B values (R, G and B are gamma-corrected beforehand). The formulas below are the full-range BT.601 form (as used in JPEG), with U and V offset by 128 so they fit in 8 bits.

- RGB to YUV

$$Y = 0.299 R + 0.587 G + 0.114 B$$

$$U = -0.169 R - 0.331 G + 0.5 B + 128$$

$$V = 0.5 R - 0.419 G - 0.081 B + 128$$

- YUV to RGB

$$R = Y + 1.402 (V - 128)$$

$$G = Y - 0.344 (U - 128) - 0.714 (V - 128)$$

$$B = Y + 1.772 (U - 128)$$
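The two sets of formulas can be checked against each other in code. This is a minimal sketch that assumes 8-bit full-range values and the coefficients of the full-range BT.601 formulas; the `Rgb`/`Yuv` structs and the function names are hypothetical. Results are rounded and clamped to [0, 255], so a round trip can be off by one in some channels.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };
struct Yuv { std::uint8_t y, u, v; };

// Round to the nearest integer and clamp into the 8-bit range [0, 255].
static std::uint8_t clamp_u8(double x) {
    long n = std::lround(x);
    if (n < 0) n = 0;
    if (n > 255) n = 255;
    return static_cast<std::uint8_t>(n);
}

// RGB -> YUV, with U and V offset by 128.
Yuv rgb_to_yuv(Rgb p) {
    const double r = p.r, g = p.g, b = p.b;
    return {clamp_u8(0.299 * r + 0.587 * g + 0.114 * b),
            clamp_u8(-0.169 * r - 0.331 * g + 0.5 * b + 128),
            clamp_u8(0.5 * r - 0.419 * g - 0.081 * b + 128)};
}

// YUV -> RGB: the inverse of the matrix above.
Rgb yuv_to_rgb(Yuv p) {
    const double y = p.y, u = p.u - 128.0, v = p.v - 128.0;
    return {clamp_u8(y + 1.402 * v),
            clamp_u8(y - 0.344 * u - 0.714 * v),
            clamp_u8(y + 1.772 * u)};
}
```

Because the U and V coefficients each sum to zero, any gray input (R = G = B) produces U = V = 128, and Y with U = V = 128 converts back to a gray pixel.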

Storage layouts of YUV data

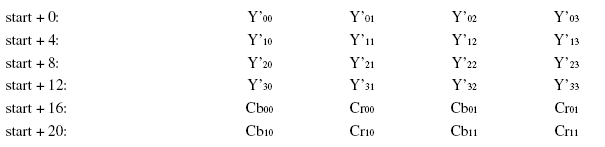


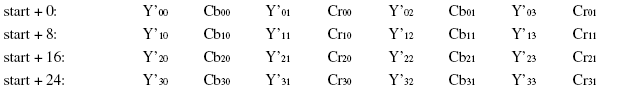

YUYV (YUV422)

Two horizontally adjacent Y samples share one Cb and one Cr sample. For example, Y'00 and Y'01 both use Cb00 and Cr00, and so on. The byte order is Y0 U0 Y1 V0.


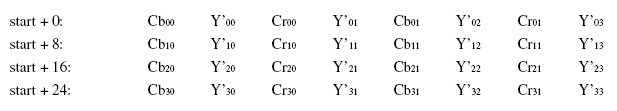

UYVY (YUV422)

As in YUYV, two adjacent Y samples share one Cb and one Cr sample, but the byte order differs: UYVY stores U0 Y0 V0 Y1, with each chroma byte placed before a Y byte.
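As a sketch of what packed storage means in practice, the function below unpacks one packed 4:2:2 frame (YUYV or UYVY byte order) into the planar YUV422P layout. It assumes an even width and a buffer of exactly width × height × 2 bytes; the name `packed422_to_planar` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Unpack one packed 4:2:2 frame into YUV422P (Y plane, then U plane, then V plane).
// uyvy == false: YUYV order (Y0 U0 Y1 V0); uyvy == true: UYVY order (U0 Y0 V0 Y1).
std::vector<std::uint8_t> packed422_to_planar(const std::vector<std::uint8_t>& packed,
                                              int width, int height, bool uyvy) {
    assert(width % 2 == 0);
    const std::size_t luma = static_cast<std::size_t>(width) * height;
    assert(packed.size() == luma * 2);
    std::vector<std::uint8_t> planar(luma * 2);   // Y (luma) + U (luma/2) + V (luma/2)
    std::uint8_t* y = planar.data();
    std::uint8_t* u = y + luma;                     // U plane: (width / 2) x height
    std::uint8_t* v = u + luma / 2;                 // V plane: (width / 2) x height
    // Each 4-byte group covers two horizontally adjacent pixels sharing one U and one V.
    for (std::size_t g = 0; g < luma / 2; ++g) {
        const std::uint8_t* m = &packed[g * 4];
        if (uyvy) { u[g] = m[0]; y[2 * g] = m[1]; v[g] = m[2]; y[2 * g + 1] = m[3]; }
        else      { y[2 * g] = m[0]; u[g] = m[1]; y[2 * g + 1] = m[2]; v[g] = m[3]; }
    }
    return planar;
}
```

Since the width is even, a 4-byte group never crosses a row boundary, so group `g` maps directly to Y samples `2g` and `2g + 1` and to chroma sample `g`.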


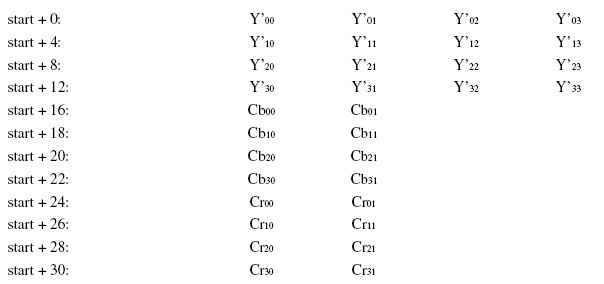

YUV422P

The P in YUV422P stands for planar: all Y samples are stored first, then all U samples, then all V samples, instead of interleaving them. Y'00 and Y'01 share Cb00 and Cr00.


YV12 (YUV420)

YV12 belongs to YUV420 and is also a planar format. It stores Y first, then V, then U. Each 2×2 block of four Y samples shares one U and one V sample, so Y'00, Y'01, Y'10 and Y'11 share Cr00 and Cb00.

Many important codecs store video in YV12: MPEG-4 (x264, XviD, DivX), MPEG-2 (the DVD-Video storage format), MPEG-1 and MJPEG.

YU12 (also known as I420) differs from YV12 only in plane order: it stores Y first, then U, then V.
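Because YV12 and YU12 (I420) differ only in the order of the two chroma planes, converting between them is just a plane swap. A minimal sketch, assuming even width and height; `PlaneOffsets`, `yuv420_offsets` and `swap_chroma_planes` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct PlaneOffsets { std::size_t y, u, v; };

// Byte offsets of the three planes inside one 4:2:0 planar frame.
// I420/YU12 order: Y, U, V.  YV12 order: Y, V, U.
PlaneOffsets yuv420_offsets(std::size_t width, std::size_t height, bool yv12) {
    assert(width % 2 == 0 && height % 2 == 0);
    const std::size_t luma = width * height;
    const std::size_t quarter = luma / 4;          // size of each chroma plane
    if (yv12) return {0, luma + quarter, luma};    // V plane comes before U
    return {0, luma, luma + quarter};              // U plane comes before V
}

// Convert YV12 <-> I420 in place by swapping the two chroma planes
// (the operation is its own inverse).
void swap_chroma_planes(std::vector<std::uint8_t>& frame, std::size_t width, std::size_t height) {
    const PlaneOffsets o = yuv420_offsets(width, height, false);
    assert(frame.size() == width * height * 3 / 2);
    std::swap_ranges(frame.begin() + o.u, frame.begin() + o.v, frame.begin() + o.v);
}
```

The split code in the YUV Parser section reads U at `width * height` and V at `width * height * 5 / 4`, i.e. it assumes I420 (YUV420P) order; fed a YV12 file, its U and V outputs would come out swapped.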


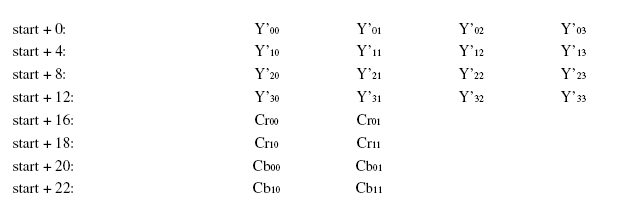

NV12 (YUV420)

NV12 also belongs to YUV420, but it is semi-planar (YUV420SP): the Y plane is stored first, followed by a single plane in which U and V are interleaved (UVUV...). Sample sharing is the same as in YV12: Y'00, Y'01, Y'10 and Y'11 share Cb00 and Cr00.

NV21 differs from NV12 only in chroma order: after the Y plane, V and U are interleaved (VUVU...).
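The NV12/NV21 layout can be illustrated by converting an I420 frame: the Y plane is copied unchanged, and the separate U and V planes are interleaved into one chroma plane. A minimal sketch, assuming even width and height; `i420_to_nv12` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert one I420 (YU12) frame to NV12, or to NV21 when nv21 is true.
// Y is copied as-is; U and V are interleaved as UVUV... (NV12) or VUVU... (NV21).
std::vector<std::uint8_t> i420_to_nv12(const std::vector<std::uint8_t>& i420,
                                       std::size_t width, std::size_t height, bool nv21 = false) {
    assert(width % 2 == 0 && height % 2 == 0);
    const std::size_t luma = width * height;
    const std::size_t quarter = luma / 4;                                 // samples per chroma plane
    assert(i420.size() == luma + 2 * quarter);
    std::vector<std::uint8_t> out(i420.begin(), i420.begin() + luma);   // Y plane
    out.reserve(luma + 2 * quarter);
    const std::uint8_t* u = i420.data() + luma;                           // U plane follows Y
    const std::uint8_t* v = u + quarter;                                  // V plane follows U
    for (std::size_t k = 0; k < quarter; ++k) {
        out.push_back(nv21 ? v[k] : u[k]);
        out.push_back(nv21 ? u[k] : v[k]);
    }
    return out;
}
```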

Viewing YUV images with yuvplay

At first, my YUV images all displayed as garbled colors, and I thought the files were broken, until I read that ffplay needs to be told the size of a YUV image. A raw YUV file contains no width or height information, so you must specify them with -video_size. Likewise, a YUV player needs the width and height set before it can display the image correctly. In yuvplay:

- Size -> Custom -> set the width and height

- Color -> select the matching YUV format
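A raw .yuv file is just frames stored back to back with no header. The only way to know how many frames it holds is therefore to divide the file size by the frame size computed from the width and height you supply. A minimal sketch for YUV420P; `yuv420p_frame_count` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Number of YUV420P frames in a raw file of file_size bytes, or -1 if the
// size is not a whole number of frames (wrong width/height or wrong format).
long long yuv420p_frame_count(std::uintmax_t file_size, int width, int height) {
    assert(width > 0 && height > 0);
    // No header: the frame size can only come from the caller-supplied dimensions.
    const std::uintmax_t frame = static_cast<std::uintmax_t>(width) * height * 3 / 2;
    if (file_size % frame != 0) return -1;
    return static_cast<long long>(file_size / frame);
}
```

A wrong size can still divide evenly. For example, a single 512×512 frame (393216 bytes) read as 256×256 looks like 4 frames, which is one way a garbled display can arise without any error.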

Converting and viewing YUV images with ffmpeg

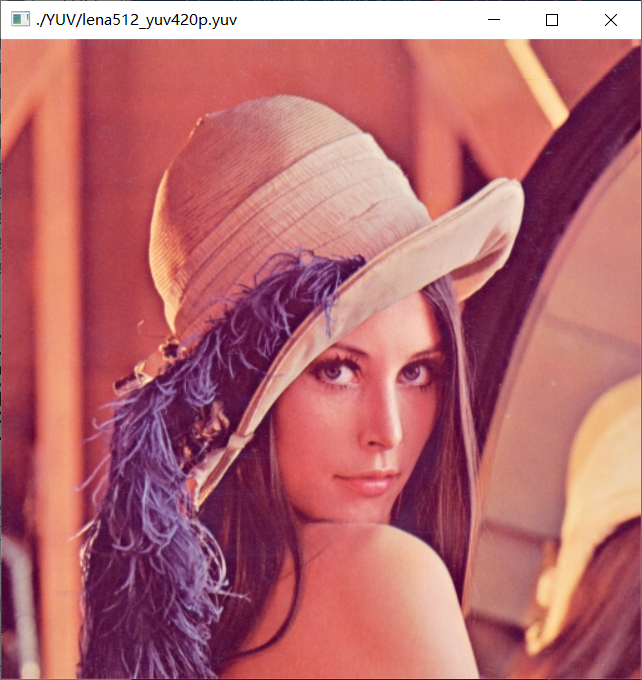

Convert the original test image to YUV420P with ffmpeg:

```shell
./ffmpeg -i ./originnal_pic/lena512color.tiff -pix_fmt yuv420p ./YUV/lena512_yuv420p.yuv
```

Display the YUV image with ffplay (the size is given as WIDTHxHEIGHT):

```shell
./ffplay.exe -video_size 512x512 ./YUV/lena512_yuv420p.yuv
```

YUV Parser

Simple code for analyzing YUV images, taken from Mr. Lei Xiaohua's blog.

Environment: Windows 10, VS2017

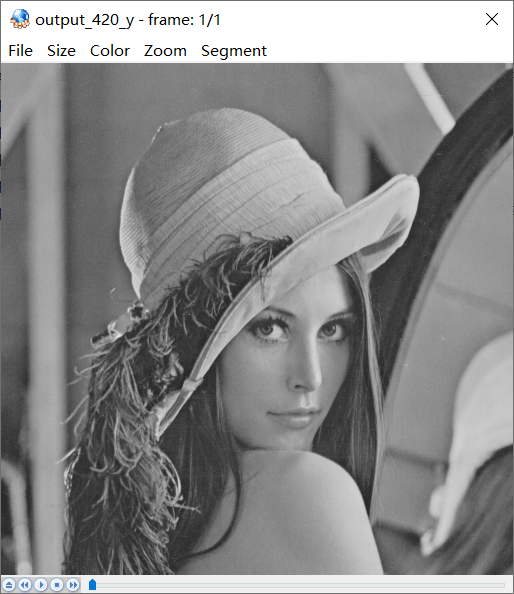

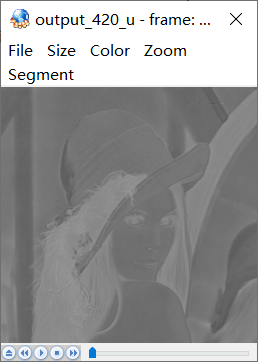

1. Separate Y, U and V components in YUV420P pixel data

Separate the Y, U and V planes of YUV420P data and save them as three files.

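```cpp
#include <cstdio>
#include <string>
#include <vector>

// Split every YUV420P frame into three raw files: one each for Y, U and V.
bool YuvParser::yuv420_split(const std::string input_url, int width, int height, int frame_num) {
    FILE *input_file = fopen(input_url.c_str(), "rb");
    FILE *output_y = fopen("output_420_y.y", "wb");
    FILE *output_u = fopen("output_420_u.y", "wb");
    FILE *output_v = fopen("output_420_v.y", "wb");
    bool ok = input_file && output_y && output_u && output_v;
    const size_t y_size = (size_t)width * height;        // Y plane: width x height bytes
    std::vector<unsigned char> picture(y_size * 3 / 2);   // one frame: Y + U + V
    for (int i = 0; ok && i < frame_num; i++) {
        // Stop on a short read (end of file or wrong width/height).
        if (fread(picture.data(), 1, picture.size(), input_file) != picture.size()) break;
        fwrite(picture.data(), 1, y_size, output_y);                      // Y plane
        fwrite(picture.data() + y_size, 1, y_size / 4, output_u);         // U: (w/2) x (h/2)
        fwrite(picture.data() + y_size * 5 / 4, 1, y_size / 4, output_v); // V follows U
    }
    for (FILE *f : {input_file, output_y, output_u, output_v})
        if (f) fclose(f);
    return ok;
}
```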

The split images can be viewed with yuvplay. The original image is 512×512, as shown in the following figure:

After splitting, the picture is divided into three components: Y, U and V. In yuvplay, select Y in the Color tab to view the Y component first; its size is 512×512.

The U and V components are shown in the figures below; each is 256×256.

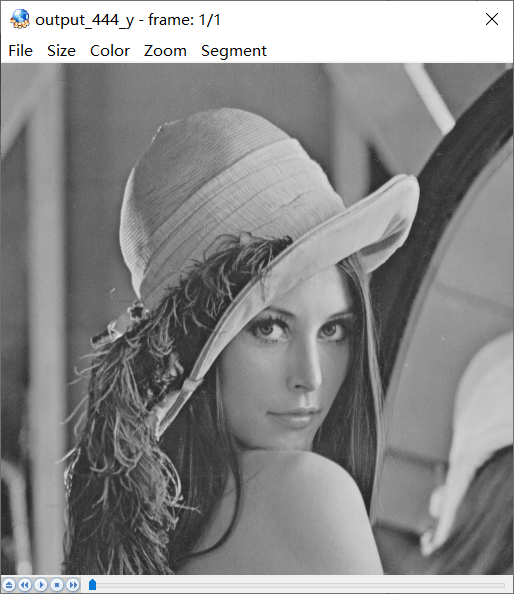

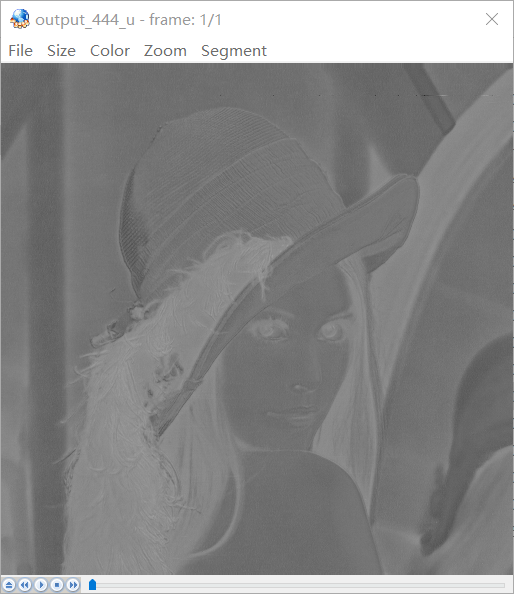

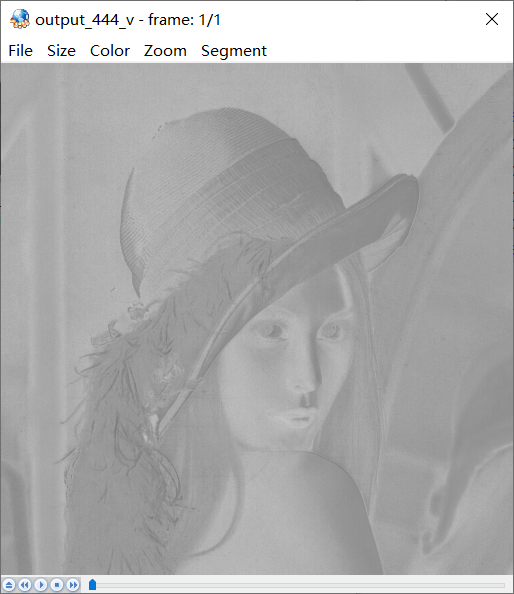

2. Separate Y, U and V components in YUV444P pixel data

Separate the Y, U and V planes of YUV444P data and save them as three files.

```cpp
#include <cstdio>
#include <string>
#include <vector>

// Split every YUV444P frame into three raw files; each plane is width x height bytes.
bool YuvParser::yuv444_split(const std::string input_url, int width, int height, int frame_num) {
    FILE *input_file = fopen(input_url.c_str(), "rb");
    FILE *output_y = fopen("output_444_y.y", "wb");
    FILE *output_u = fopen("output_444_u.y", "wb");
    FILE *output_v = fopen("output_444_v.y", "wb");
    bool ok = input_file && output_y && output_u && output_v;
    const size_t y_size = (size_t)width * height;     // bytes per plane
    std::vector<unsigned char> picture(y_size * 3);    // one frame: Y + U + V
    for (int i = 0; ok && i < frame_num; i++) {
        if (fread(picture.data(), 1, picture.size(), input_file) != picture.size()) break;
        fwrite(picture.data(), 1, y_size, output_y);                // Y plane
        fwrite(picture.data() + y_size, 1, y_size, output_u);       // U plane
        fwrite(picture.data() + y_size * 2, 1, y_size, output_v);   // V plane
    }
    for (FILE *f : {input_file, output_y, output_u, output_v})
        if (f) fclose(f);
    return ok;
}
```

The input is again the standard Lena image, converted to YUV444P with ffmpeg. The separated components are shown below:

output_444_y.y

output_444_u.y

output_444_v.y

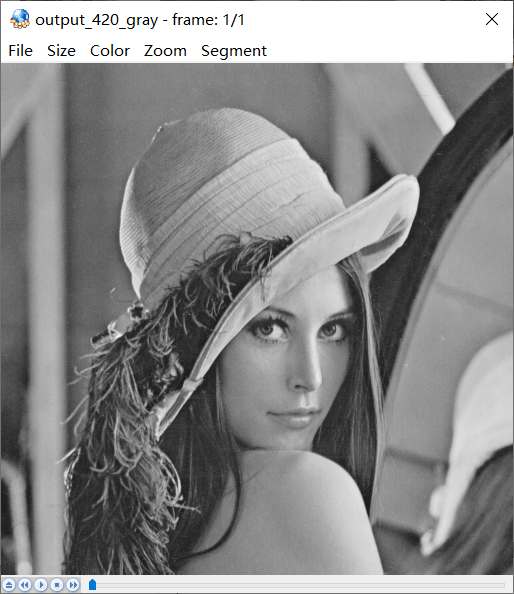

3. Remove color from YUV420P pixel data (grayscale)

To remove the color from YUV420P pixel data and turn it into a pure grayscale image, keep Y and set every U and V sample to 128.

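```cpp
#include <cstdio>
#include <cstring>
#include <string>
#include <vector>

// Grayscale: keep Y, set every U and V sample to 128 (no color).
bool YuvParser::yuv420_gray(const std::string input_url, int width, int height, int frame_num) {
    FILE *input_file = fopen(input_url.c_str(), "rb");
    FILE *output_gray = fopen("output_420_gray.yuv", "wb");
    bool ok = input_file && output_gray;
    const size_t y_size = (size_t)width * height;
    std::vector<unsigned char> picture(y_size * 3 / 2);
    for (int i = 0; ok && i < frame_num; i++) {
        if (fread(picture.data(), 1, picture.size(), input_file) != picture.size()) break;
        memset(picture.data() + y_size, 128, y_size / 2);  // U + V: y_size / 2 bytes
        fwrite(picture.data(), 1, picture.size(), output_gray);
    }
    if (input_file) fclose(input_file);
    if (output_gray) fclose(output_gray);
    return ok;
}
```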

The results are as follows:

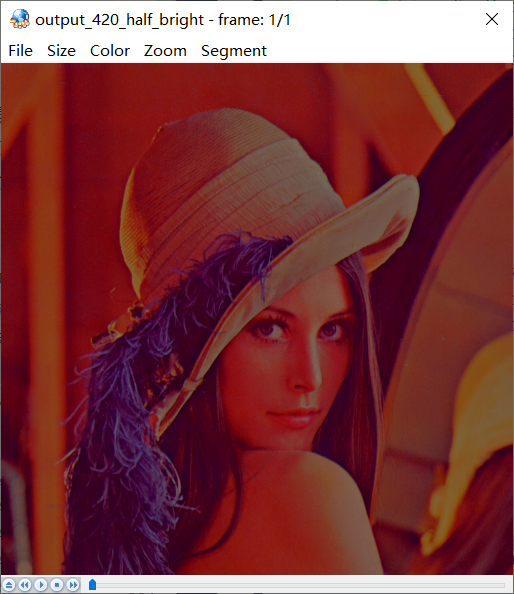

4. Halve the brightness of YUV420P pixel data

In YUV, Y represents brightness, so halving every Y value (and leaving U and V unchanged) halves the brightness of the image.

```cpp
#include <cstdio>
#include <string>
#include <vector>

// Halve the brightness by halving every Y sample; U and V are untouched.
bool YuvParser::yuv420_half_bright(const std::string input_url, int width, int height, int frame_num) {
    FILE *input_file = fopen(input_url.c_str(), "rb");
    FILE *output_half_bright = fopen("output_420_half_bright.yuv", "wb");
    bool ok = input_file && output_half_bright;
    const size_t y_size = (size_t)width * height;
    std::vector<unsigned char> picture(y_size * 3 / 2);
    for (int i = 0; ok && i < frame_num; i++) {
        if (fread(picture.data(), 1, picture.size(), input_file) != picture.size()) break;
        for (size_t cur_pixel = 0; cur_pixel < y_size; cur_pixel++) {
            picture[cur_pixel] /= 2;  // half Y
        }
        fwrite(picture.data(), 1, picture.size(), output_half_bright);
    }
    if (input_file) fclose(input_file);
    if (output_half_bright) fclose(output_half_bright);
    return ok;
}
```

The result with halved brightness:

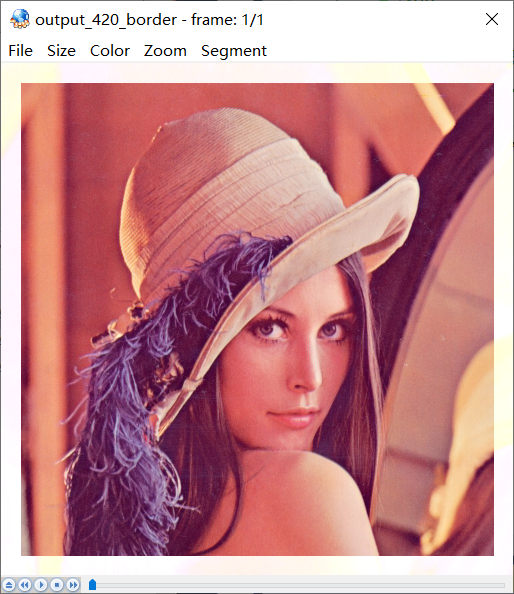

5. Add a border around YUV420P pixel data

Setting the luma value Y of the pixels near the image edges to the maximum (255) adds a white "border" to the image.

```cpp
#include <cstdio>
#include <string>
#include <vector>

// Paint a white border of border_length pixels by setting Y to 255 near the edges.
bool YuvParser::yuv420_border(const std::string input_url, int width, int height, int border_length, int frame_num) {
    FILE *input_file = fopen(input_url.c_str(), "rb");
    FILE *output_border = fopen("output_420_border.yuv", "wb");
    bool ok = input_file && output_border;
    std::vector<unsigned char> picture((size_t)width * height * 3 / 2);
    for (int i = 0; ok && i < frame_num; i++) {
        if (fread(picture.data(), 1, picture.size(), input_file) != picture.size()) break;
        for (int cur_height = 0; cur_height < height; cur_height++) {
            for (int cur_width = 0; cur_width < width; cur_width++) {
                // ">=" makes the right/bottom bands as wide as the left/top ones.
                if (cur_width < border_length || cur_width >= width - border_length ||
                    cur_height < border_length || cur_height >= height - border_length) {
                    picture[(size_t)cur_height * width + cur_width] = 255;  // brightest Y
                }
            }
        }
        fwrite(picture.data(), 1, picture.size(), output_border);
    }
    if (input_file) fclose(input_file);
    if (output_border) fclose(output_border);
    return ok;
}
```

The result with a 20-pixel border:

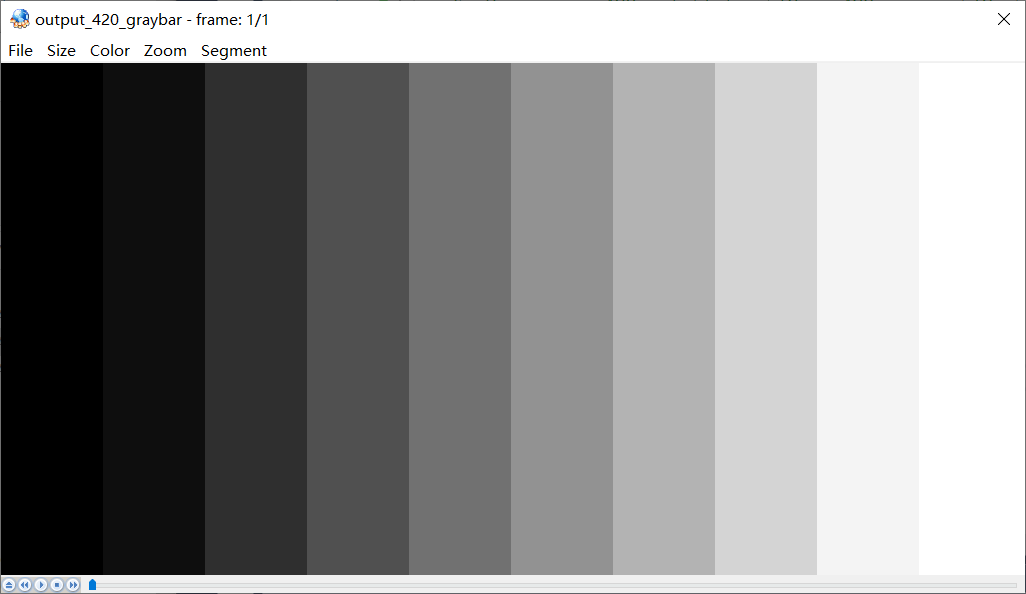

6. Generate a grayscale test chart in YUV420P format

The following function generates a grayscale test chart.

```cpp
#include <algorithm>
#include <cstdio>
#include <vector>

// Write one YUV420P frame of bar_num vertical gray bars whose luma goes from
// y_min to y_max in equal steps; U and V are 128, so the bars have no color.
bool YuvParser::yuv420_graybar(int width, int height, int y_min, int y_max, int bar_num) {
    // Validate before opening or allocating anything, so an early return leaks nothing.
    if (bar_num < 1 || width < bar_num || (bar_num == 1 && y_max != y_min)) {
        return false;
    }
    FILE *output_graybar = fopen("output_420_graybar.yuv", "wb");
    if (!output_graybar) {
        return false;
    }
    std::vector<unsigned char> picture((size_t)width * height * 3 / 2);
    float luma_range = (float)(y_max - y_min) / (float)(bar_num > 1 ? bar_num - 1 : bar_num);
    int bar_width = width / bar_num;
    // write Y
    for (int cur_height = 0; cur_height < height; cur_height++) {
        for (int cur_width = 0; cur_width < width; cur_width++) {
            // Leftover columns (width not divisible by bar_num) join the last bar.
            int cur_block = std::min(cur_width / bar_width, bar_num - 1);
            unsigned char cur_luma = y_min + (unsigned char)(cur_block * luma_range);
            picture[(size_t)cur_height * width + cur_width] = cur_luma;
        }
    }
    // NOTE: U and V could be set with memset; they are written
    // separately here to make the plane layout easier to understand.
    // write U: a (width/2) x (height/2) plane right after Y
    for (int cur_height = 0; cur_height < height / 2; cur_height++) {
        for (int cur_width = 0; cur_width < width / 2; cur_width++) {
            picture[(size_t)height * width + cur_height * width / 2 + cur_width] = 128;
        }
    }
    // write V: same size, right after U
    for (int cur_height = 0; cur_height < height / 2; cur_height++) {
        for (int cur_width = 0; cur_width < width / 2; cur_width++) {
            picture[(size_t)height * width * 5 / 4 + cur_height * width / 2 + cur_width] = 128;
        }
    }
    fwrite(picture.data(), 1, picture.size(), output_graybar);
    fclose(output_graybar);
    return true;
}
```

Following Lei Xiaohua's test, I generated a 10-level grayscale test chart, 1024 pixels wide and 512 pixels high. The result is shown below.

The Y, U and V values of each gray bar are:

| Y | U | V |
|---|---|---|
| 0 | 128 | 128 |
| 28 | 128 | 128 |
| 56 | 128 | 128 |
| 85 | 128 | 128 |
| 113 | 128 | 128 |
| 141 | 128 | 128 |
| 170 | 128 | 128 |
| 198 | 128 | 128 |
| 226 | 128 | 128 |
| 255 | 128 | 128 |

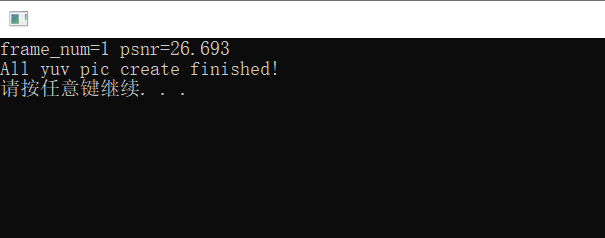

7. Calculate the PSNR of two YUV420P images

PSNR (peak signal-to-noise ratio) is the most basic objective video quality metric. For 8-bit quantized pixel data it is computed as:

$$PSNR = 10 \lg\left(\frac{255^2}{MSE}\right)$$

where MSE (mean squared error) is:

$$MSE = \frac{1}{M N}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x_{ij} - y_{ij}\right)^2$$

M and N are the width and height of the image, and $x_{ij}$ and $y_{ij}$ are the pixel values of the two images (the original and the distorted one). The formula measures how far the distorted image is from the original and so evaluates the distorted image's quality. PSNR values usually fall between 20 and 50 dB; the higher the value, the closer the two images are, i.e. the better the quality of the distorted image.
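The two formulas translate directly into code. Below is a minimal sketch that computes PSNR for two equally sized 8-bit planes held in memory (for example the Y planes of two frames). The function name `plane_psnr` is hypothetical, and identical inputs return infinity because MSE is 0.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// PSNR = 10 * lg(255^2 / MSE) for two 8-bit planes of equal size.
double plane_psnr(const std::vector<std::uint8_t>& a, const std::vector<std::uint8_t>& b) {
    assert(a.size() == b.size() && !a.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = static_cast<double>(a[i]) - static_cast<double>(b[i]);
        sum += d * d;                                   // squared error per pixel
    }
    const double mse = sum / static_cast<double>(a.size());
    if (mse == 0.0) return std::numeric_limits<double>::infinity();
    return 10.0 * std::log10(255.0 * 255.0 / mse);
}
```

With every pixel off by 1 (MSE = 1) the result is about 48.13 dB; with every pixel off by 5 (MSE = 25) it is about 34.15 dB.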

```cpp
#include <cmath>
#include <cstdio>
#include <string>
#include <vector>

// Print the luma (Y) PSNR of each frame of two YUV420P files; U and V are ignored.
bool YuvParser::yuv420_psnr(const std::string input_url1, const std::string input_url2, int width, int height, int frame_num) {
    FILE *input_file1 = fopen(input_url1.c_str(), "rb");
    FILE *input_file2 = fopen(input_url2.c_str(), "rb");
    bool ok = input_file1 && input_file2;
    const size_t y_size = (size_t)width * height;
    std::vector<unsigned char> picture1(y_size), picture2(y_size);
    for (int i = 0; ok && i < frame_num; i++) {
        // Read only the Y plane of each frame...
        if (fread(picture1.data(), 1, y_size, input_file1) != y_size ||
            fread(picture2.data(), 1, y_size, input_file2) != y_size) break;
        double mse_total = 0;
        for (size_t cur_pixel = 0; cur_pixel < y_size; cur_pixel++) {
            double diff = (double)picture1[cur_pixel] - (double)picture2[cur_pixel];
            mse_total += diff * diff;
        }
        double mse = mse_total / y_size;
        double psnr = 10 * log10(255.0 * 255.0 / mse);  // inf if the frames are identical
        printf("frame=%d psnr=%5.3f\n", i, psnr);        // print the frame index
        // ...then skip its U and V planes (y_size / 2 bytes) to reach the next frame.
        fseek(input_file1, (long)(y_size / 2), SEEK_CUR);
        fseek(input_file2, (long)(y_size / 2), SEEK_CUR);
    }
    if (input_file1) fclose(input_file1);
    if (input_file2) fclose(input_file2);
    return ok;
}
```

Since I didn't know how to produce a distorted image myself, I used the 256×256 Lena material from Lei Xiaohua's blog.

The result is PSNR = 26.693 dB.

To be continued

I spent two days organizing these YUV notes and retyped all the code. I feel I understand the formats now, but some questions remain:

- Why are U and V equal to 128 when there is no color?

- If the width or height is not a multiple of 4, how is YUV stored?

- What exactly do the numbers in YUV 4:2:0 mean? I summarized them above, but I only half understand them.

What to do next:

- Use ffmpeg to develop a YUV video separation tool. Someone has already built one; I'll write about it later, so here is the link for now:

https://blog.csdn.net/longjiang321/article/details/103229035