Concept:

ZooKeeper is an open-source coordination service for distributed applications. It is an open-source implementation of Google's Chubby and an important component of Hadoop and HBase. It provides consistency services for distributed applications; its functions include configuration maintenance, naming service, distributed synchronization, group services, etc.

The goal of ZooKeeper is to encapsulate complex, error-prone key services and give users a simple, easy-to-use interface backed by a system with efficient performance and stable functionality.

ZooKeeper provides a simple set of primitives, with Java and C interfaces.

The ZooKeeper source distribution includes recipes for distributed exclusive locks, leader election and queues, located in $zookeeper_home\src\recipes. The lock and queue recipes come in both Java and C versions; the election recipe is available only in Java.

Principle

ZooKeeper is commonly described as being based on Paxos. Basic Paxos has a livelock problem: when multiple proposers submit proposals alternately, they can keep preempting one another, so that no proposer ever succeeds. The remedy is to elect a single leader and let only the leader submit proposals. ZooKeeper takes this leader-based approach, although strictly speaking it does not run Fast Paxos. It uses its own protocol, ZAB (ZooKeeper Atomic Broadcast), together with a leader election algorithm called FastLeaderElection. Familiarity with leader-based Paxos therefore makes ZooKeeper much easier to understand.

Basic operation process of ZooKeeper:

1. Elect a leader.

2. Synchronize data.

Many algorithms can be used to elect the leader, but they must all meet the same criteria:

(1) The Leader must have the highest transaction ID (zxid), that is, the most up-to-date data.

(2) A majority of the machines in the cluster must respond and accept the elected Leader.
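The "highest ID wins" criterion can be made concrete with a minimal Java sketch that compares two election votes. It assumes each vote carries three values: the proposed leader's epoch, its last zxid, and its server id (myid). Votes are ordered the way ZooKeeper 3.4's default FastLeaderElection orders them: epoch first, then zxid, then server id as a tie-breaker. The classes ElectionVote and VoteOrder are hypothetical illustrations, not ZooKeeper classes. Weighted or hierarchical quorums are ignored.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical simplified election vote: the proposed leader plus how up to date it is. */
final class ElectionVote {
    final long epoch;    // epoch of the proposed leader
    final long zxid;     // last transaction id seen by the proposed leader
    final long serverId; // myid of the proposed leader

    ElectionVote(long epoch, long zxid, long serverId) {
        this.epoch = epoch;
        this.zxid = zxid;
        this.serverId = serverId;
    }
}

class VoteOrder {
    // True if vote a should replace vote b: a higher epoch wins, then a higher zxid
    // (more recent data), and only when both tie does the higher server id win.
    static boolean beats(ElectionVote a, ElectionVote b) {
        if (a.epoch != b.epoch) return a.epoch > b.epoch;
        if (a.zxid != b.zxid) return a.zxid > b.zxid;
        return a.serverId > b.serverId;
    }

    // Returns the vote that no other vote in the list beats.
    static ElectionVote best(List<ElectionVote> votes) {
        ElectionVote winner = votes.get(0);
        for (ElectionVote v : votes) {
            if (beats(v, winner)) winner = v;
        }
        return winner;
    }

    public static void main(String[] args) {
        List<ElectionVote> votes = Arrays.asList(
                new ElectionVote(1, 0x100000005L, 1),
                new ElectionVote(1, 0x100000007L, 2),  // newer data than server 1
                new ElectionVote(1, 0x100000007L, 3)); // same data as server 2, higher id
        System.out.println(best(votes).serverId); // prints 3
    }
}
```

Data freshness is always checked before the server id. As a result, a server with a lower id but newer data beats a server with a higher id.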

Summary

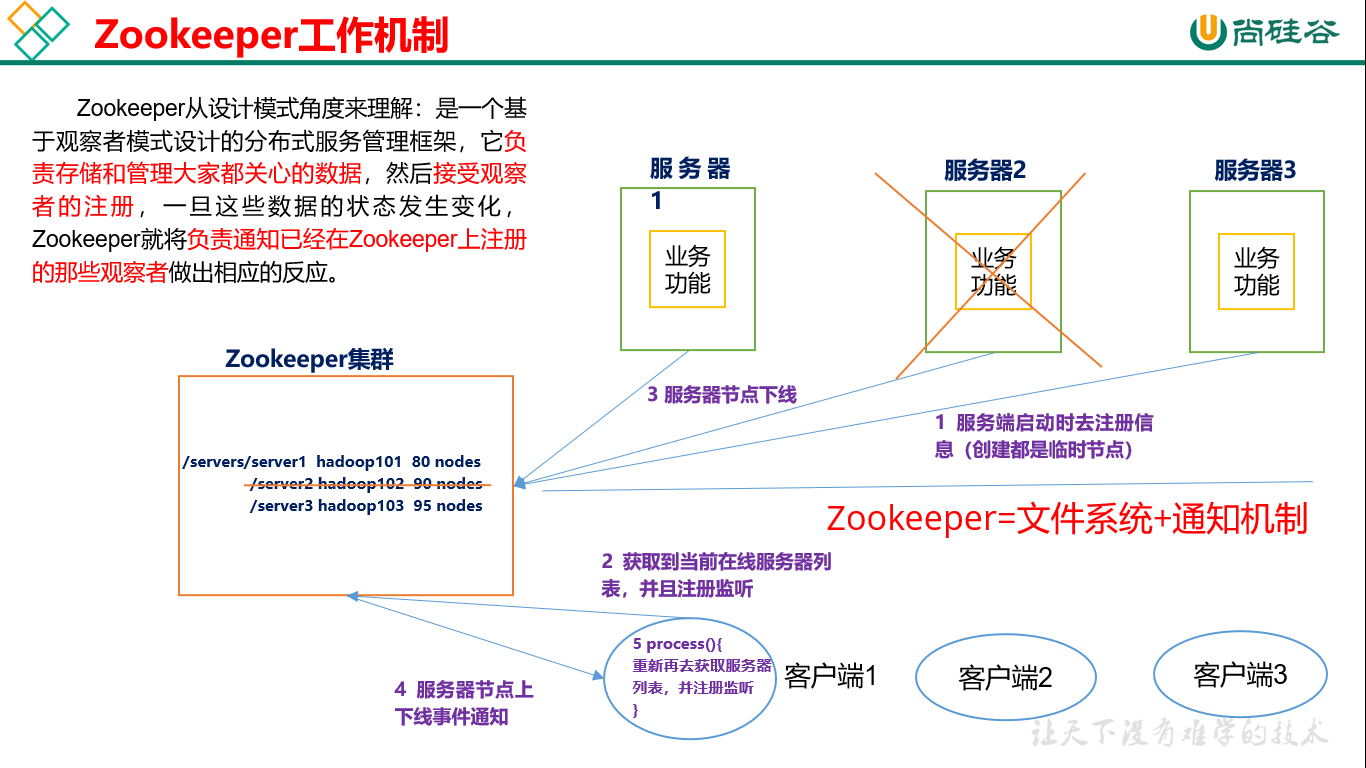

ZooKeeper is an open-source distributed Apache project that provides coordination services for distributed applications.

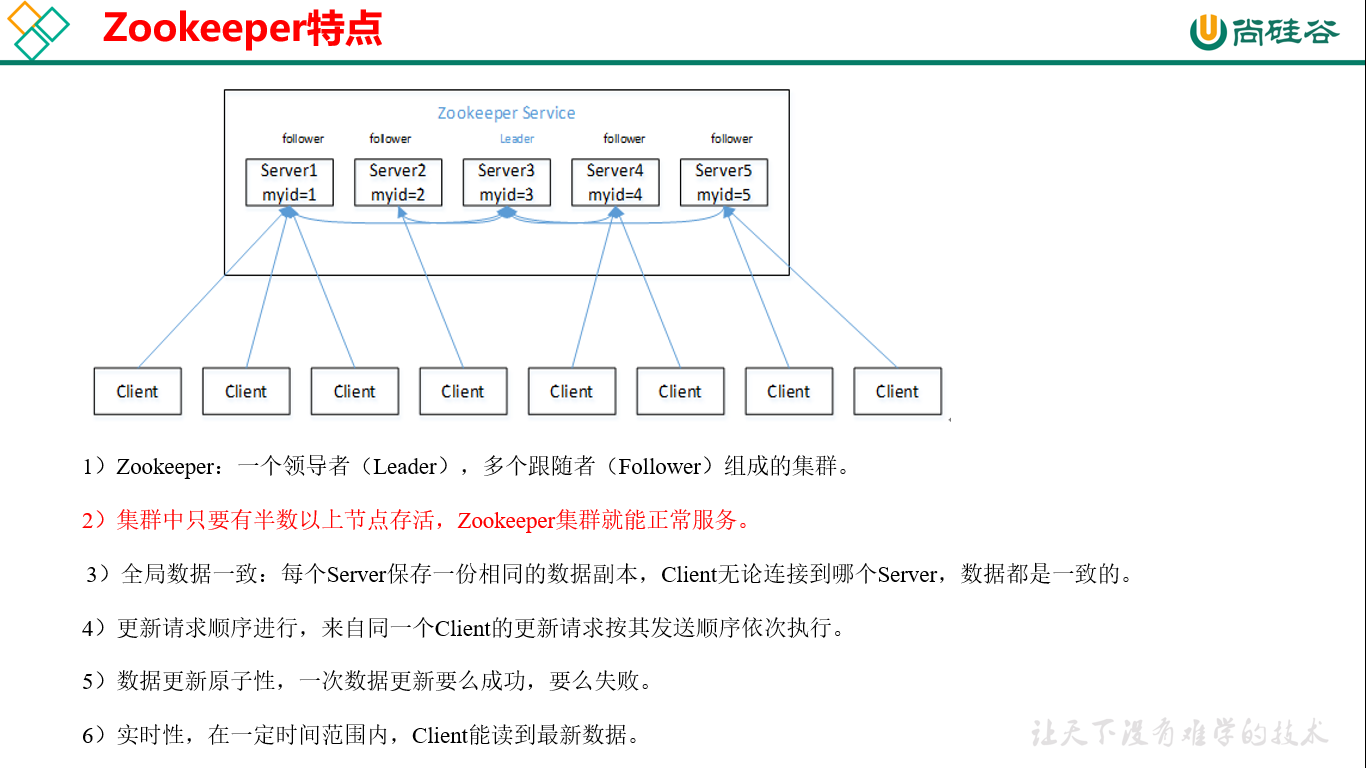

Characteristics

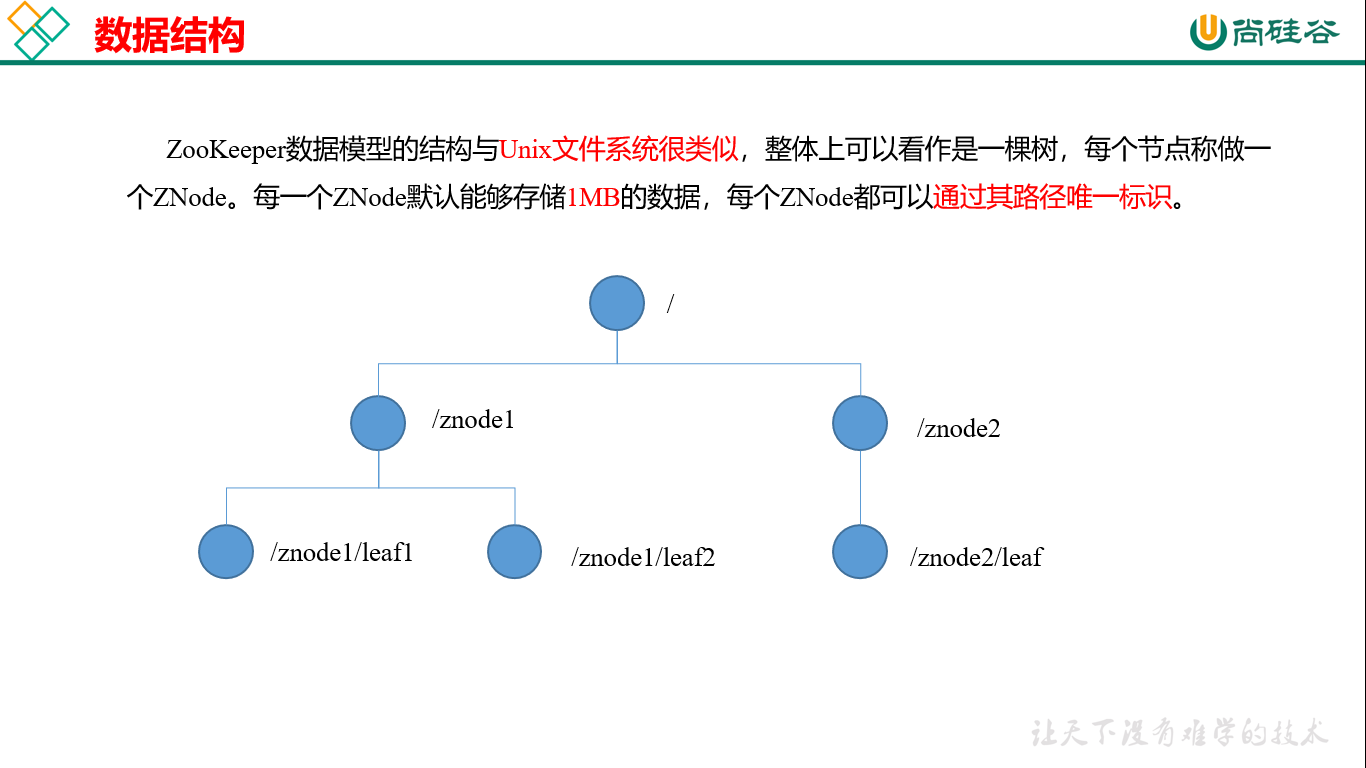

Data structure

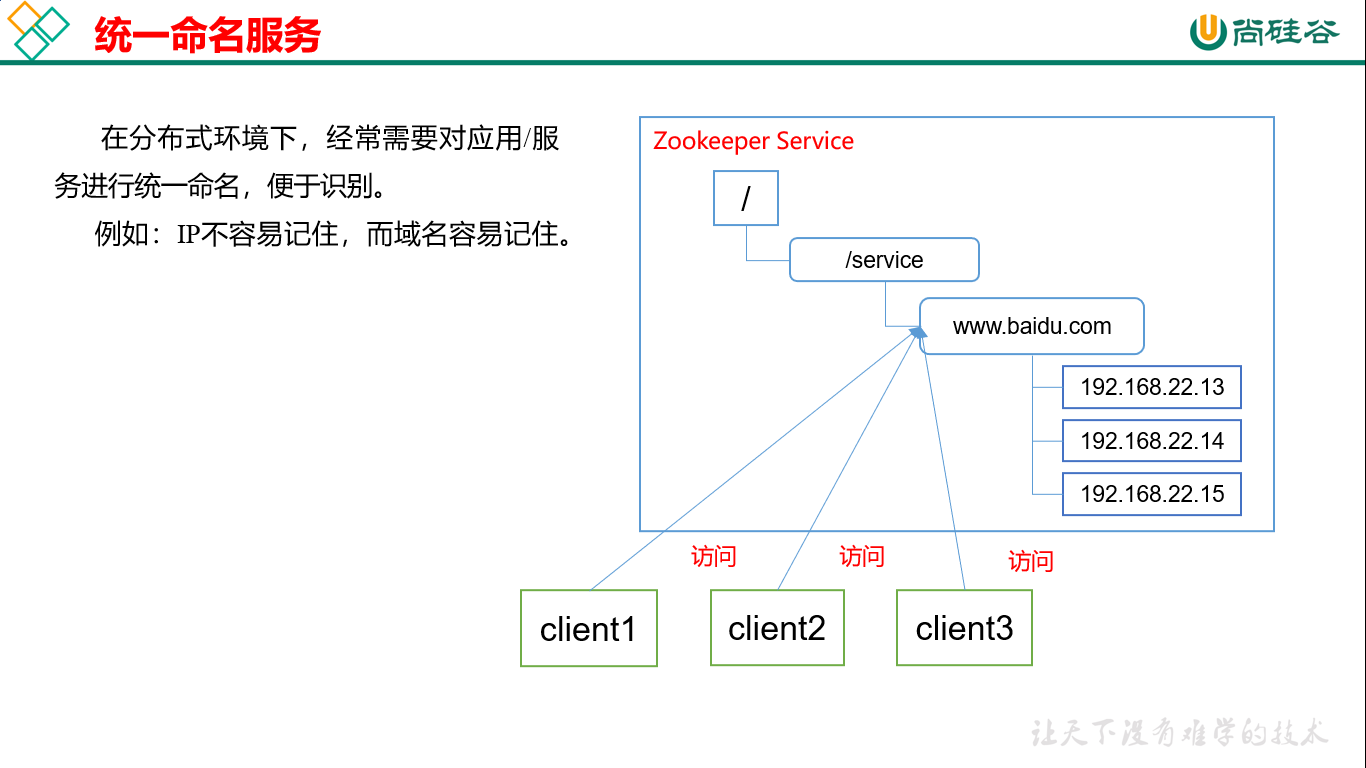

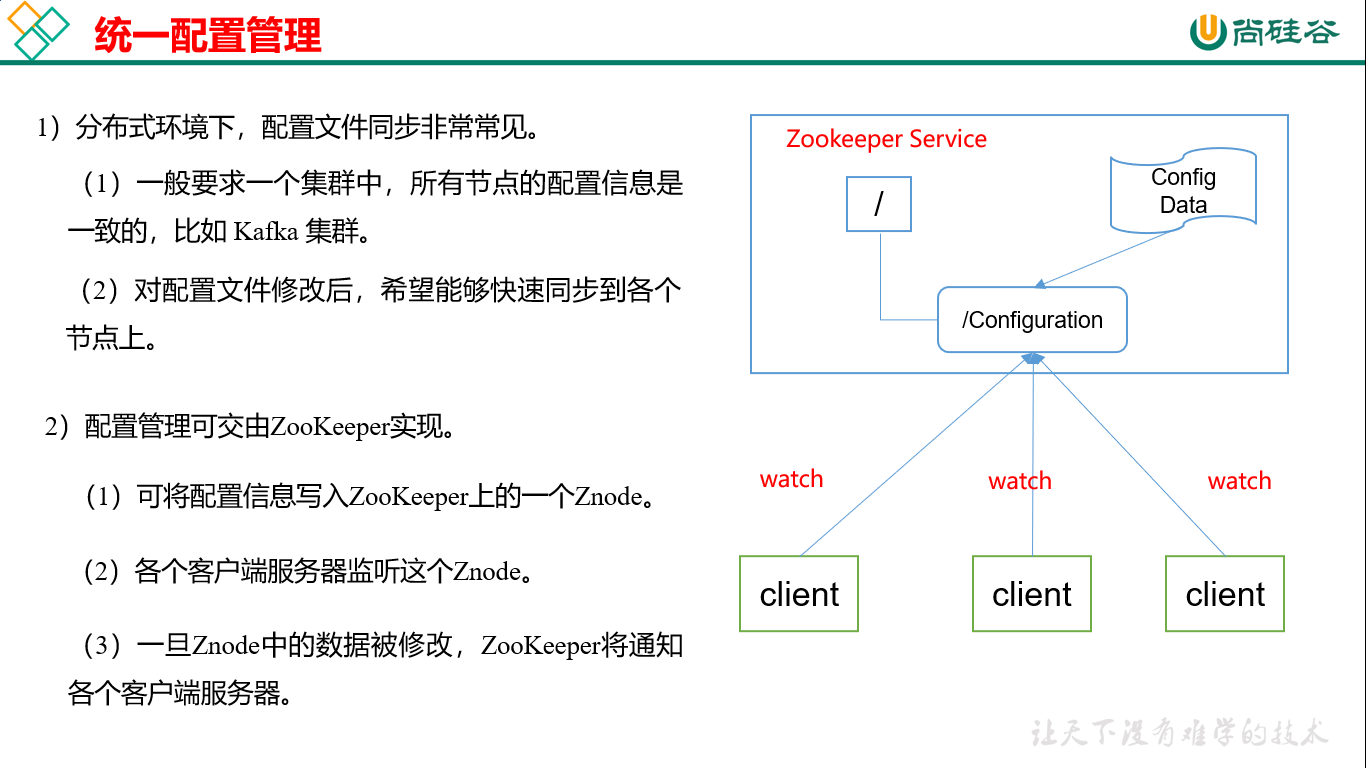

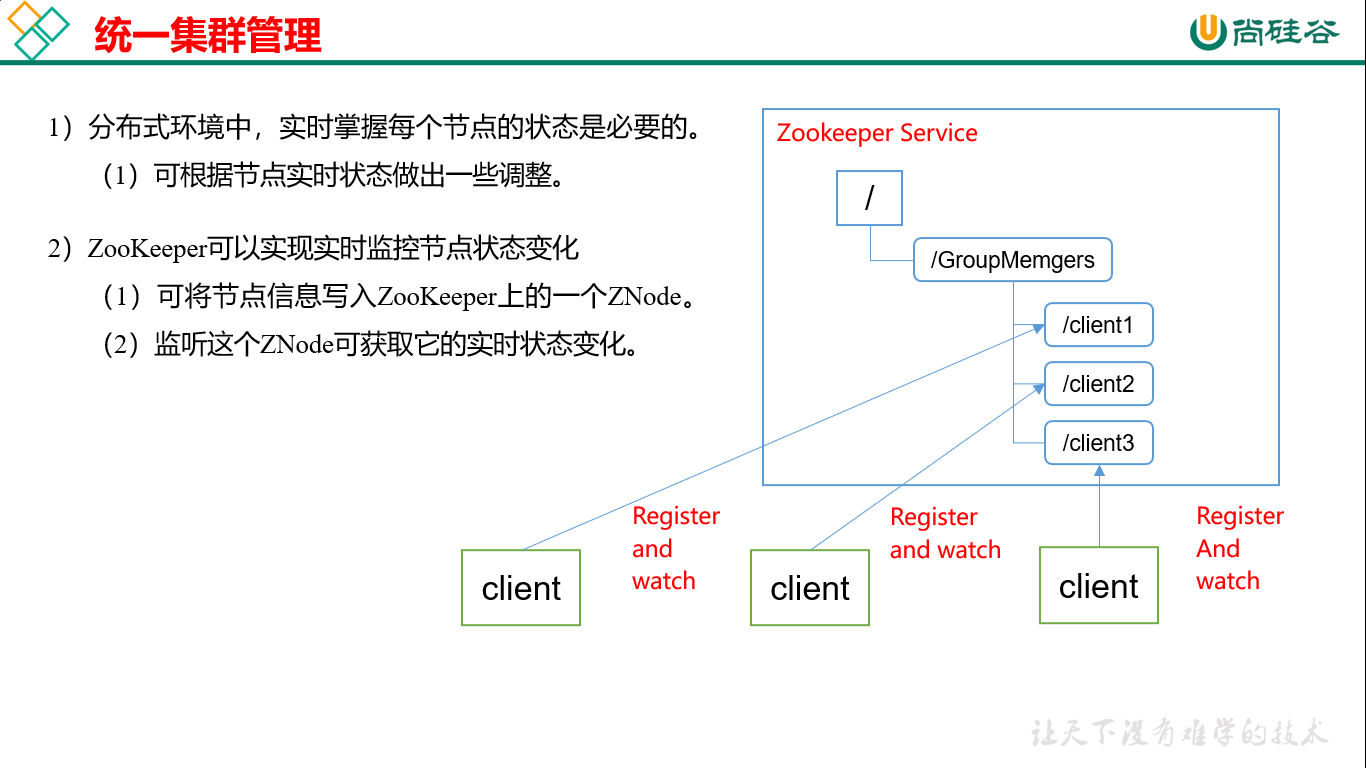

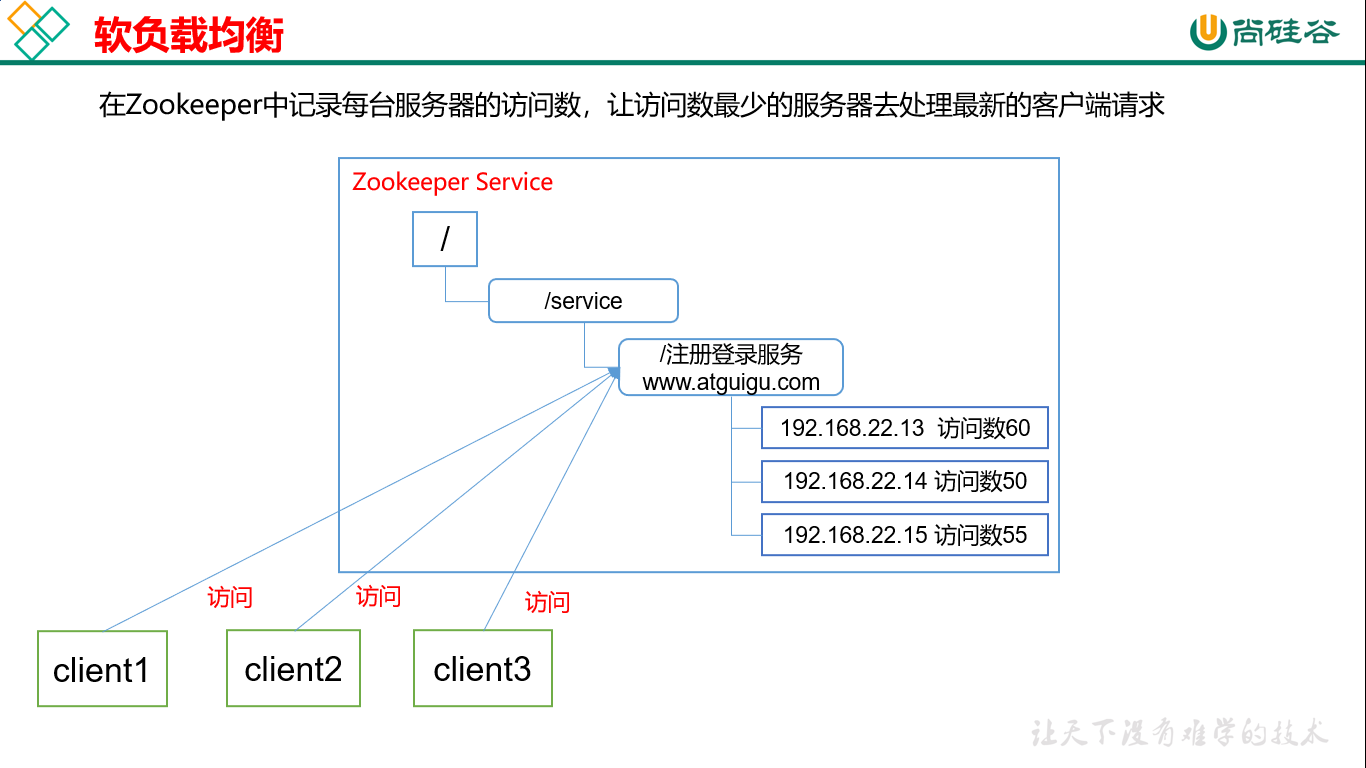

Application scenarios

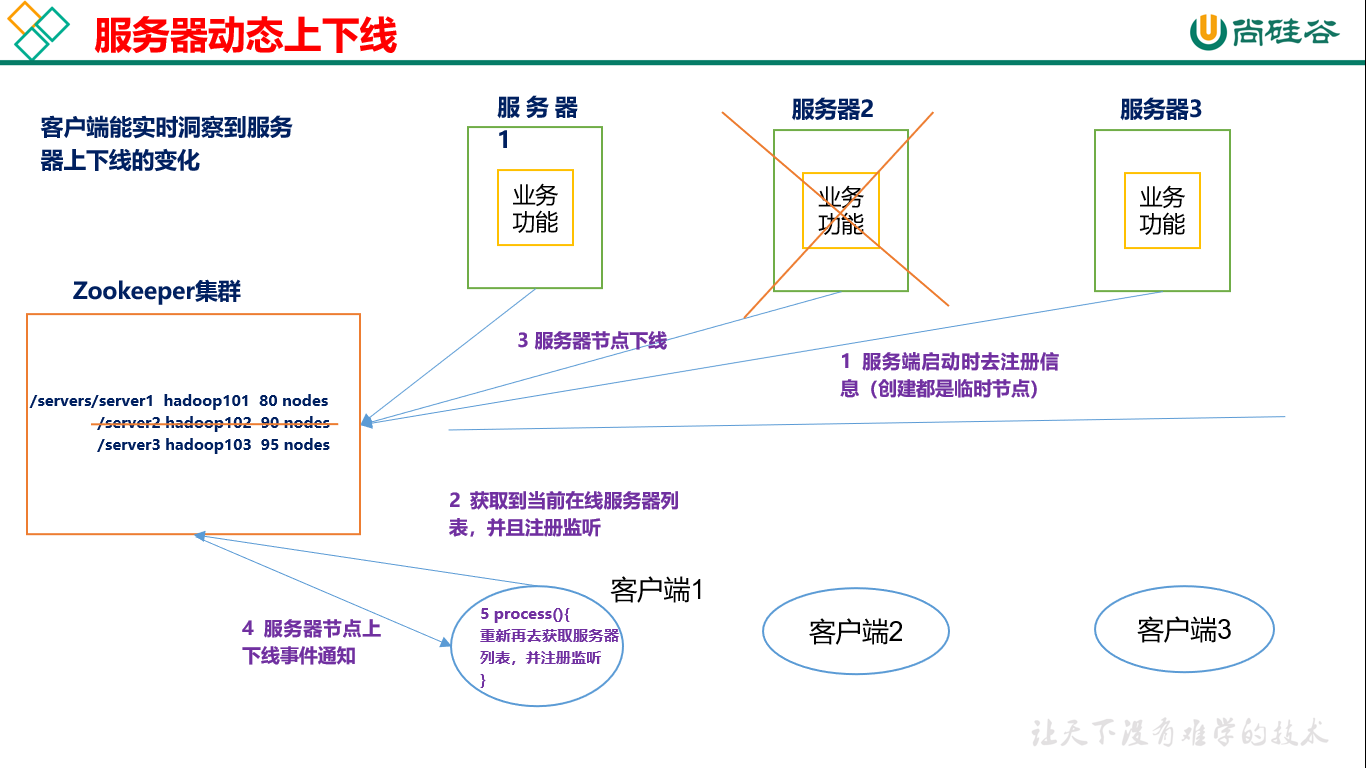

The services provided include: unified naming service, unified configuration management, unified cluster management, dynamic online and offline of server nodes, soft load balancing, etc.

Interpretation of configuration parameters

The parameters in the ZooKeeper configuration file zoo.cfg mean the following:

1. tickTime=2000: the heartbeat interval, in milliseconds. This is the basic time unit ZooKeeper uses: servers exchange a heartbeat with each other, and clients with servers, once every tickTime. It also drives session timeouts: the minimum session timeout is twice the heartbeat time (2*tickTime).

2. initLimit=10: the Leader-Follower (LF) initial communication limit. This is the maximum number of heartbeats (in units of tickTime) that may elapse while a Follower makes its initial connection to the Leader. It bounds how long a ZooKeeper server in the cluster may take to connect to the Leader.

3. syncLimit=5: the Leader-Follower synchronization limit. This is the maximum response time between the Leader and a Follower, in units of tickTime. If a response takes longer than syncLimit * tickTime, the Leader considers the Follower dead and removes it from the server list.

4. dataDir: the data directory, the path where ZooKeeper persists its data.

5. clientPort=2181: the port on which the server listens for client connections.
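The following sketch converts the parameters above into milliseconds. It assumes the defaults that apply when zoo.cfg sets no minSessionTimeout or maxSessionTimeout, namely 2*tickTime and 20*tickTime. It also assumes the server clamps a client's requested session timeout into that range. The class ZooCfgTimes is a hypothetical helper, not part of ZooKeeper.

```java
/** Hypothetical helper: effective time limits implied by the zoo.cfg values above. */
class ZooCfgTimes {
    // Without explicit minSessionTimeout/maxSessionTimeout in zoo.cfg, the bounds
    // default to 2 and 20 ticks, and the requested timeout is clamped into them.
    static int negotiatedSessionTimeout(int tickTime, int requestedMs) {
        int min = 2 * tickTime;
        int max = 20 * tickTime;
        return Math.max(min, Math.min(max, requestedMs));
    }

    // initLimit and syncLimit are counted in ticks; multiply by tickTime to get milliseconds.
    static int initLimitMs(int tickTime, int initLimit) {
        return initLimit * tickTime;
    }

    static int syncLimitMs(int tickTime, int syncLimit) {
        return syncLimit * tickTime;
    }

    public static void main(String[] args) {
        int tickTime = 2000;
        System.out.println(initLimitMs(tickTime, 10));                // 20000
        System.out.println(syncLimitMs(tickTime, 5));                 // 10000
        System.out.println(negotiatedSessionTimeout(tickTime, 2000)); // 4000
    }
}
```

For example, with tickTime=2000, the server would raise the 2000 ms sessionTimeout requested by the Java clients in section 4.3 to 4000 ms.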

ZooKeeper internal principles

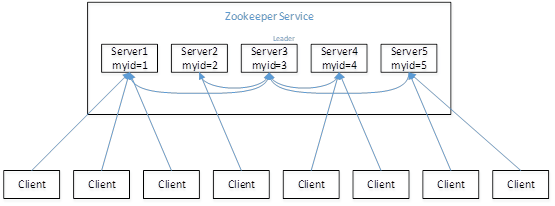

Election mechanism (interview focus)

1)Majority (quorum) mechanism: the cluster is available as long as more than half of its machines are alive. ZooKeeper is therefore best deployed on an odd number of servers. For example, a 5-server cluster tolerates 2 failures, and adding a sixth server would still tolerate only 2.

2)Although the ZooKeeper configuration file does not designate a Master or Slaves, at runtime one node acts as the Leader and the others act as Followers. The Leader is elected dynamically through an internal election mechanism.

3)A simple example illustrates the whole election process. Suppose a ZooKeeper cluster has five servers with ids 1 to 5. All are newly started with no historical data, so they all store the same amount of data. Assume the servers start one after another, as shown in Figure 5-8.

Figure 5-8 ZooKeeper election mechanism (new cluster)

(1)Server 1 starts. It is the only server running, so the messages it sends get no response, and its election state remains LOOKING.

(2)Server 2 starts, communicates with server 1, and the two exchange their votes. Since neither has historical data, server 2 wins the vote because of its higher id. However, fewer than half of the servers have voted for it (more than half in this example means 3), so servers 1 and 2 both remain in the LOOKING state.

(3)Server 3 starts. By the same reasoning, server 3 wins among servers 1, 2 and 3. Unlike the previous step, three servers now vote for it, which is a majority, so it becomes the Leader of this election.

(4)Server 4 starts. In theory server 4 has the highest id among servers 1 to 4. However, a majority of the servers has already elected server 3, so server 4 can only become a Follower.

(5)Server 5 starts and, like server 4, becomes a Follower.
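The five-server walk-through can be replayed with a small Java sketch. It assumes the simplified conditions of the example. All servers start empty, one at a time, in id order. Every running server therefore ends up voting for the highest id it can see. A Leader is fixed once a candidate has votes from a strict majority of the whole ensemble. The class StartupElection is a hypothetical toy, not ZooKeeper's election code. It also computes how many failures an ensemble can tolerate, which shows why odd sizes are preferred.

```java
/**
 * Hypothetical toy replay of the five-server startup example: servers start in id order
 * with no data, all running servers vote for the highest id among them, and the first
 * candidate backed by a strict majority of the ensemble becomes the Leader.
 */
class StartupElection {
    // Strict majority of the configured ensemble size (not of the servers currently up).
    static int quorum(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    // How many servers may fail while a quorum can still be formed.
    static int tolerableFailures(int ensembleSize) {
        return ensembleSize - quorum(ensembleSize);
    }

    // Leader id after servers 1..started have come up, or -1 while everyone is still LOOKING.
    static int replay(int ensembleSize, int started) {
        for (int id = 1; id <= started; id++) {
            // Servers 1..id are up with no history, so all of them vote for server id.
            int votes = id;
            if (votes >= quorum(ensembleSize)) {
                return id; // the first majority wins; servers started later become Followers
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        for (int started = 1; started <= 5; started++) {
            System.out.println(started + " started -> leader " + replay(5, started));
        }
        System.out.println(tolerableFailures(5) + " " + tolerableFailures(6)); // prints "2 2"
    }
}
```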

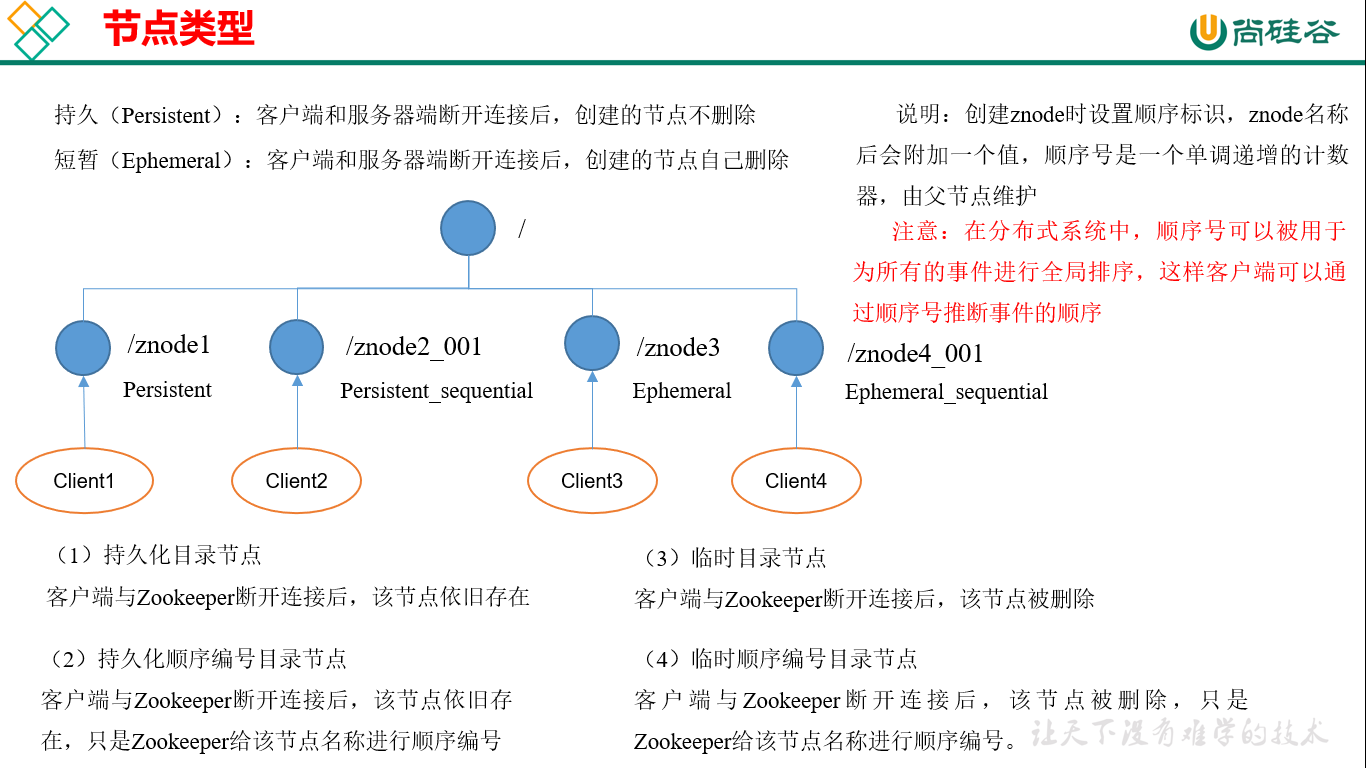

Node type

Stat structure

1)czxid: the zxid of the transaction that created the znode. Every change to ZooKeeper state receives a stamp in the form of a zxid, the ZooKeeper transaction ID. Transaction IDs define a total order over all changes in ZooKeeper. Each change has a unique zxid, and if zxid1 is less than zxid2, then zxid1 happened before zxid2.

2)ctime: the time the znode was created, in milliseconds since January 1, 1970.

3)mzxid: the zxid of the transaction that last modified the znode.

4)mtime: the time the znode was last modified, in milliseconds since January 1, 1970.

5)pZxid: the zxid of the last change to the znode's children.

6)cversion: the number of changes to the znode's children.

7)dataVersion: the number of changes to the znode's data.

8)aclVersion: the number of changes to the znode's access control list (ACL).

9)ephemeralOwner: the session id of the znode's owner if the znode is ephemeral; 0 otherwise.

10)dataLength: the length of the znode's data.

11)numChildren: the number of children of the znode.
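The total order of zxids is easier to see once you know how a zxid is laid out. It is a 64-bit number: the high 32 bits hold the epoch of the Leader that issued it, and the low 32 bits hold a counter that increases with each transaction in that epoch. The sketch below splits the cZxid value 0x100000003 that appears for /sanguo in section 4.2. The class ZxidParts is a hypothetical helper.

```java
/** Hypothetical helper that splits a zxid into its two 32-bit halves. */
class ZxidParts {
    // High 32 bits: the epoch of the Leader that issued the transaction.
    static long epoch(long zxid) {
        return zxid >>> 32;
    }

    // Low 32 bits: a per-epoch counter incremented for each transaction.
    static long counter(long zxid) {
        return zxid & 0xFFFFFFFFL;
    }

    public static void main(String[] args) {
        long czxid = 0x100000003L; // cZxid of /sanguo in section 4.2
        System.out.println(epoch(czxid) + " " + counter(czxid)); // prints "1 3"
        // Because the epoch sits in the high bits, comparing zxids as longs orders
        // every transaction of an older epoch before any transaction of a newer one.
        System.out.println(Long.compare(0x100000003L, 0x200000001L) < 0); // prints "true"
    }
}
```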

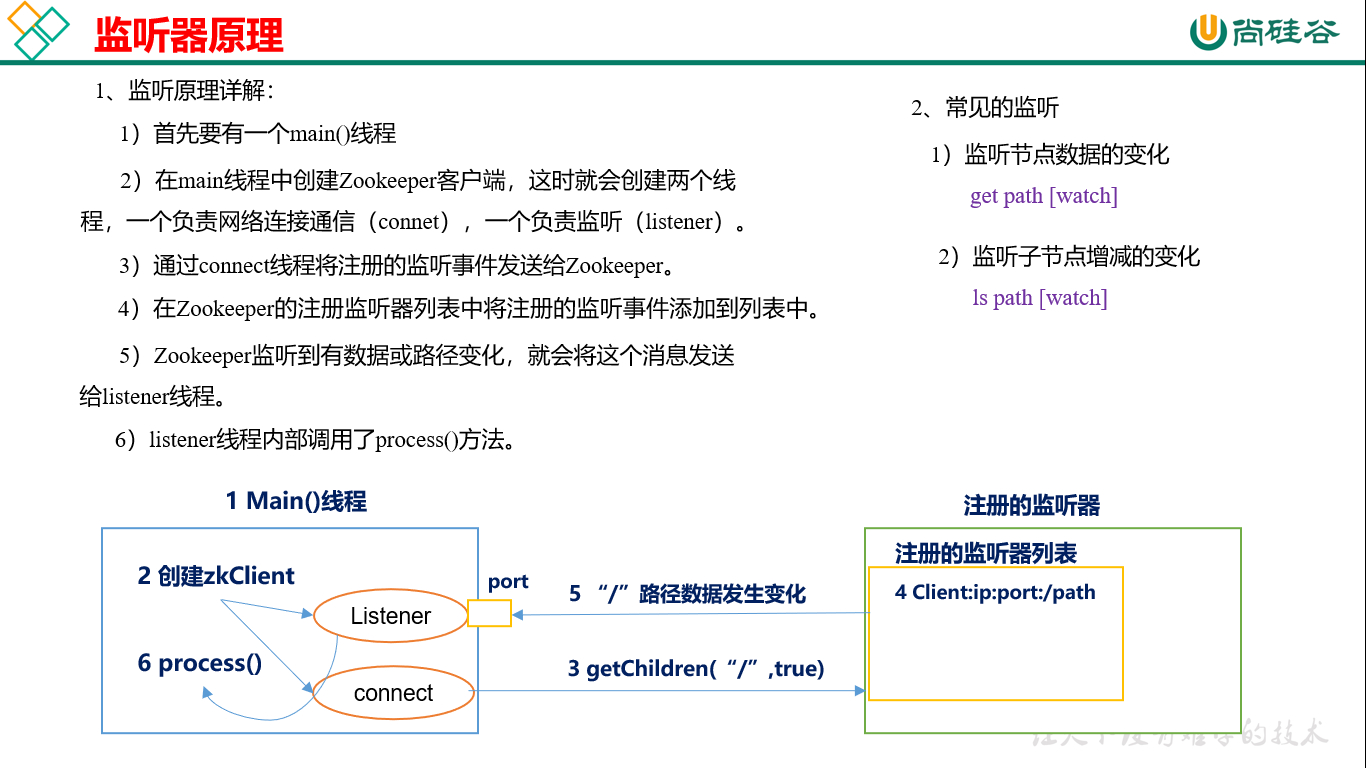

Principle of listener (key points of interview)

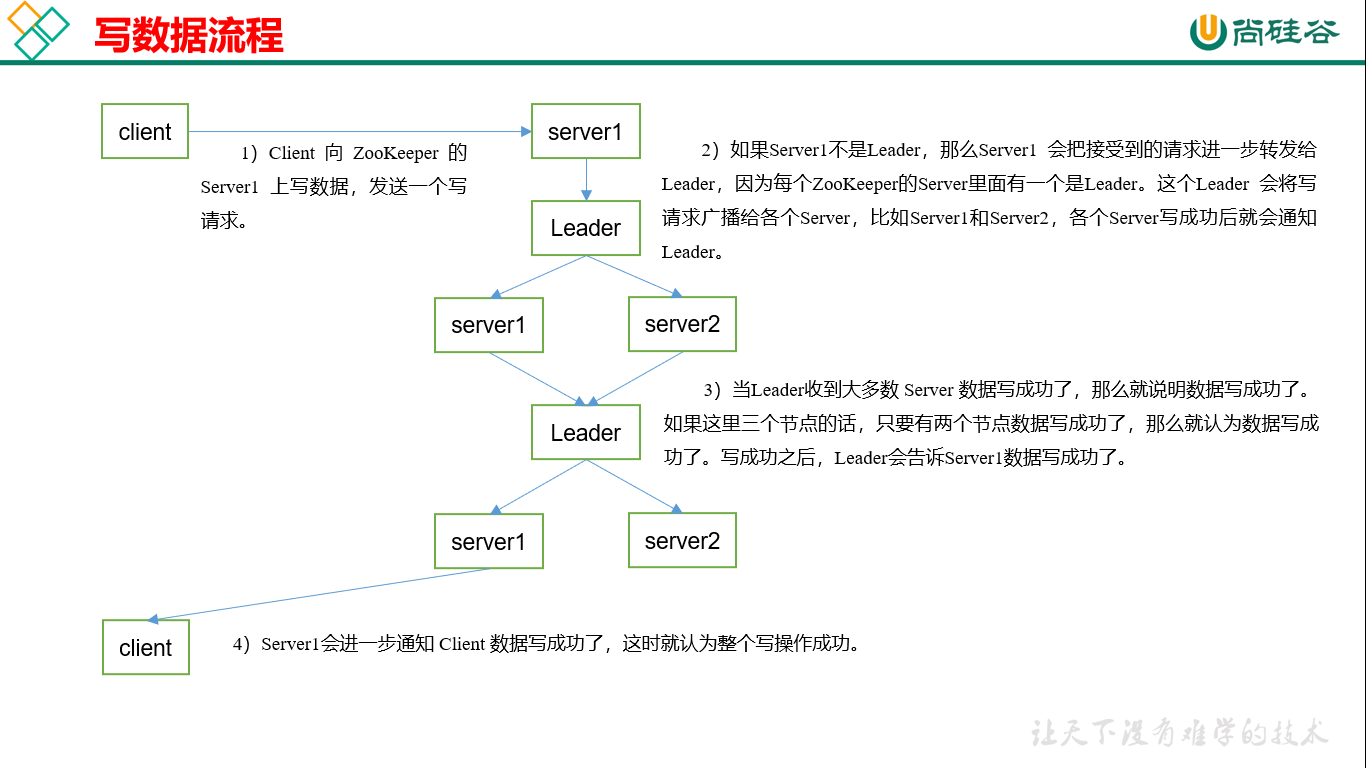

Write data flow

Chapter 4 ZooKeeper in practice (development focus)

4.1 Distributed installation and deployment

1.Cluster planning

Deploy ZooKeeper on three nodes: hadoop102, hadoop103 and hadoop104.

2.Decompression installation

(1)Extract the ZooKeeper installation package to the /opt/module/ directory

[atguigu@hadoop102 software]$ tar -zxvf zookeeper-3.4.10.tar.gz -C /opt/module/

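(2)Sync the contents of the /opt/module/zookeeper-3.4.10 directory to hadoop103 and hadoop104 (xsync is a file-distribution script set up on these hosts beforehand; it is not part of ZooKeeper)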

[atguigu@hadoop102 module]$ xsync zookeeper-3.4.10/

3.Configure server number

(1)Create a zkData directory under /opt/module/zookeeper-3.4.10/

[atguigu@hadoop102 zookeeper-3.4.10]$ mkdir -p zkData

(2)Create a myid file in the /opt/module/zookeeper-3.4.10/zkData directory

[atguigu@hadoop102 zkData]$ touch myid

Be sure to create the myid file on Linux. If it is created in Notepad++, its contents may end up garbled.

(3)Edit the myid file

[atguigu@hadoop102 zkData]$ vi myid

Add the number corresponding to this server to the file:

2

(4)Copy the configured myid file to the other machines

[atguigu@hadoop102 zkData]$ xsync myid

Then change the contents of myid on hadoop103 and hadoop104 to 3 and 4 respectively.

4.Configure the zoo.cfg file

(1)Rename zoo_sample.cfg in the /opt/module/zookeeper-3.4.10/conf directory to zoo.cfg

[atguigu@hadoop102 conf]$ mv zoo_sample.cfg zoo.cfg

(2)Open the zoo.cfg file

[atguigu@hadoop102 conf]$ vim zoo.cfg

Modify the data storage path:

dataDir=/opt/module/zookeeper-3.4.10/zkData

Add the following configuration:

#######################cluster##########################

server.2=hadoop102:2888:3888

server.3=hadoop103:2888:3888

server.4=hadoop104:2888:3888

(3)Sync the zoo.cfg configuration file to the other nodes

[atguigu@hadoop102 conf]$ xsync zoo.cfg

(4)Explanation of the configuration parameters

server.A=B:C:D

A is a number indicating the server number.

In cluster mode, a file named myid is placed in the dataDir directory, and it contains the value of A. ZooKeeper reads this file at startup and compares its value with the configuration in zoo.cfg to determine which server it is.

B is the IP address (or hostname) of the server.

C is the port this server uses to exchange information with the Leader of the cluster.

D is the election port. If the cluster's Leader goes down, a new Leader has to be elected, and the servers use this port to communicate with one another during the election.
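The following minimal Java sketch shows how myid and the server.A lines fit together. It parses the server.A=B:C:D entries from zoo.cfg text and picks the entry whose A matches the number in myid. It handles only the exact form used above; ZooKeeper's own parser (QuorumPeerConfig) accepts more syntax. The class ServerLines is a hypothetical illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical parser for the "server.A=B:C:D" lines used in this zoo.cfg. */
class ServerLines {
    /** One parsed entry: host B, Leader-communication port C, election port D. */
    static final class Entry {
        final String host;      // B: hostname or IP address
        final int quorumPort;   // C: port for exchanging information with the Leader
        final int electionPort; // D: port used during leader election

        Entry(String host, int quorumPort, int electionPort) {
            this.host = host;
            this.quorumPort = quorumPort;
            this.electionPort = electionPort;
        }
    }

    // Maps each server number A to its entry; lines that are not "server.*" are ignored.
    static Map<Long, Entry> parse(String cfg) {
        Map<Long, Entry> servers = new LinkedHashMap<>();
        for (String raw : cfg.split("\n")) {
            String line = raw.trim();
            int eq = line.indexOf('=');
            if (!line.startsWith("server.") || eq < 0) {
                continue; // comments, dataDir, clientPort and other settings
            }
            long id = Long.parseLong(line.substring("server.".length(), eq).trim());
            String[] parts = line.substring(eq + 1).trim().split(":");
            servers.put(id, new Entry(parts[0],
                    Integer.parseInt(parts[1]), Integer.parseInt(parts[2])));
        }
        return servers;
    }

    public static void main(String[] args) {
        String cfg = String.join("\n",
                "dataDir=/opt/module/zookeeper-3.4.10/zkData",
                "#######################cluster##########################",
                "server.2=hadoop102:2888:3888",
                "server.3=hadoop103:2888:3888",
                "server.4=hadoop104:2888:3888");
        long myid = 3; // what zkData/myid contains on hadoop103
        Entry self = parse(cfg).get(myid);
        System.out.println(self.host + " " + self.quorumPort + " " + self.electionPort);
        // prints "hadoop103 2888 3888"
    }
}
```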

5.Cluster operation

(1)Start ZooKeeper on each node

[atguigu@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh start

[atguigu@hadoop103 zookeeper-3.4.10]$ bin/zkServer.sh start

[atguigu@hadoop104 zookeeper-3.4.10]$ bin/zkServer.sh start

(2)View status

[atguigu@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh status

JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

[atguigu@hadoop103 zookeeper-3.4.10]$ bin/zkServer.sh status

JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: leader

[atguigu@hadoop104 zookeeper-3.4.10]$ bin/zkServer.sh status

JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

4.2 Client command-line operations

Table 5-1

| Basic command syntax | Function description |
| --- | --- |
| help | Display all operation commands |
| ls path [watch] | List the children of the specified znode |
| ls2 path [watch] | List the children of the node together with its status data (update counts, etc.) |
| create | Create a normal (persistent) node |
| create -s | Create a sequential node |
| create -e | Create an ephemeral node (disappears on client restart or session timeout) |
| get path [watch] | Get the value of the node |
| set | Set the value of the node |
| stat | View the status of the node |
| delete | Delete a node |
| rmr | Delete a node recursively |

1.Start client

[atguigu@hadoop103 zookeeper-3.4.10]$ bin/zkCli.sh

2.Display all operation commands

[zk: localhost:2181(CONNECTED) 1] help

3.View the children of the current znode

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

4.View detailed data of current node

[zk: localhost:2181(CONNECTED) 1] ls2 /

[zookeeper]

cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

5.Create two ordinary (persistent) nodes

[zk: localhost:2181(CONNECTED) 3] create /sanguo "jinlian"

Created /sanguo

[zk: localhost:2181(CONNECTED) 4] create /sanguo/shuguo "liubei"

Created /sanguo/shuguo

6.Get the value of a node

[zk: localhost:2181(CONNECTED) 5] get /sanguo

jinlian

cZxid = 0x100000003

ctime = Wed Aug 29 00:03:23 CST 2018

mZxid = 0x100000003

mtime = Wed Aug 29 00:03:23 CST 2018

pZxid = 0x100000004

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 1

[zk: localhost:2181(CONNECTED) 6]

[zk: localhost:2181(CONNECTED) 6] get /sanguo/shuguo

liubei

cZxid = 0x100000004

ctime = Wed Aug 29 00:04:35 CST 2018

mZxid = 0x100000004

mtime = Wed Aug 29 00:04:35 CST 2018

pZxid = 0x100000004

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 6

numChildren = 0

7.Create an ephemeral node

[zk: localhost:2181(CONNECTED) 7] create -e /sanguo/wuguo "zhouyu"

Created /sanguo/wuguo

(1)The node is visible from the current client

[zk: localhost:2181(CONNECTED) 3] ls /sanguo

[wuguo, shuguo]

(2)Exit the current client, then start it again

[zk: localhost:2181(CONNECTED) 12] quit

[atguigu@hadoop104 zookeeper-3.4.10]$ bin/zkCli.sh

(3)Check again: the ephemeral node under /sanguo has been deleted

[zk: localhost:2181(CONNECTED) 0] ls /sanguo

[shuguo]

8.Create sequential nodes

(1)First create an ordinary node /sanguo/weiguo

[zk: localhost:2181(CONNECTED) 1] create /sanguo/weiguo "caocao"

Created /sanguo/weiguo

(2)Create sequential nodes under it

[zk: localhost:2181(CONNECTED) 2] create -s /sanguo/weiguo/xiaoqiao "jinlian"

Created /sanguo/weiguo/xiaoqiao0000000000

[zk: localhost:2181(CONNECTED) 3] create -s /sanguo/weiguo/daqiao "jinlian"

Created /sanguo/weiguo/daqiao0000000001

[zk: localhost:2181(CONNECTED) 4] create -s /sanguo/weiguo/diaocan "jinlian"

Created /sanguo/weiguo/diaocan0000000002

If the parent node has no children yet, the sequence number starts at 0 and increments from there. If the parent already has 2 child nodes, numbering starts from 2, and so on.
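The numbering can be sketched in Java as follows. This assumes, as in the ZooKeeper 3.4 server, that the sequence suffix is the parent's cversion (its count of child changes so far), zero-padded to 10 digits. The class SequentialName is a hypothetical helper. Because deletions also increase cversion, numbers are never reused and can skip after a child is deleted.

```java
import java.util.Locale;

/** Hypothetical helper mimicking how a sequential znode's name is formed. */
class SequentialName {
    // Appends the parent's child-change counter, zero-padded to 10 digits.
    static String name(String requestedPath, int parentCversion) {
        return requestedPath + String.format(Locale.ENGLISH, "%010d", parentCversion);
    }

    public static void main(String[] args) {
        // /sanguo/weiguo was just created, so its cversion starts at 0; each create bumps it.
        int cversion = 0;
        for (String child : new String[] {"xiaoqiao", "daqiao", "diaocan"}) {
            System.out.println(name("/sanguo/weiguo/" + child, cversion));
            cversion++;
        }
        // prints /sanguo/weiguo/xiaoqiao0000000000, .../daqiao0000000001, .../diaocan0000000002
    }
}
```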

9.Modify node data value

[zk: localhost:2181(CONNECTED) 6] set /sanguo/weiguo "simayi"

10.Watch for changes to a node's value

(1)On hadoop104, register a watch for data changes on the /sanguo node

[zk: localhost:2181(CONNECTED) 8] get /sanguo watch

(2)On hadoop103, modify the data of the /sanguo node

[zk: localhost:2181(CONNECTED) 1] set /sanguo "xisi"

(3)Observe the data-change notification received on hadoop104

WATCHER::

WatchedEvent state:SyncConnected type:NodeDataChanged path:/sanguo

11.Watch for changes to a node's children (path changes)

(1)On hadoop104, register a watch for child changes of the /sanguo node

[zk: localhost:2181(CONNECTED) 1] ls /sanguo watch

[aa0000000001, server101]

(2)On hadoop103, create a child node under /sanguo

[zk: localhost:2181(CONNECTED) 2] create /sanguo/jin "simayi"

Created /sanguo/jin

(3)Observe the child-change notification received on hadoop104

WATCHER::

WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/sanguo
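Both notifications above fire only once. A watch set by get path watch or ls path watch is a one-time trigger. A second set /sanguo on hadoop103 would therefore produce no further message on hadoop104 unless the watch is registered again. The toy in-memory model below shows this behavior. The class OneShotWatches is hypothetical and does not use the ZooKeeper client. The same behavior is why the Java watchers in section 4.3 call getChildren again inside process().

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical toy model of one-shot data watches (not the ZooKeeper client). */
class OneShotWatches {
    private final Map<String, String> data = new HashMap<>();
    private final Map<String, List<Consumer<String>>> watches = new HashMap<>();

    // Like "get path watch": returns the value and arms a single notification.
    String get(String path, Consumer<String> watcher) {
        watches.computeIfAbsent(path, p -> new ArrayList<>()).add(watcher);
        return data.get(path);
    }

    // Like "set path value": updates the value, then fires and clears the pending watches.
    void set(String path, String value) {
        data.put(path, value);
        List<Consumer<String>> fired = watches.remove(path);
        if (fired != null) {
            for (Consumer<String> w : fired) {
                w.accept("NodeDataChanged " + path);
            }
        }
    }

    public static void main(String[] args) {
        OneShotWatches zk = new OneShotWatches();
        zk.set("/sanguo", "jinlian");
        zk.get("/sanguo", System.out::println);
        zk.set("/sanguo", "xisi");     // prints "NodeDataChanged /sanguo"
        zk.set("/sanguo", "diaochan"); // prints nothing: the watch was already consumed
    }
}
```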

12.Delete node

[zk: localhost:2181(CONNECTED) 4] delete /sanguo/jin

13.Delete a node recursively

[zk: localhost:2181(CONNECTED) 15] rmr /sanguo/shuguo

14.View node status

[zk: localhost:2181(CONNECTED) 17] stat /sanguo

cZxid = 0x100000003

ctime = Wed Aug 29 00:03:23 CST 2018

mZxid = 0x100000011

mtime = Wed Aug 29 00:21:23 CST 2018

pZxid = 0x100000014

cversion = 9

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 4

numChildren = 1

4.3 API usage

4.3.1 Setting up the Eclipse environment

1.Create a Maven project

2.Add the following dependencies to the pom file

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.8.2</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.zookeeper/zookeeper -->

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.4.10</version>

</dependency>

</dependencies>

3.Add a log4j.properties file to the project

In the project's src/main/resources directory, create a new file named "log4j.properties" and fill in the following:

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

4.3.2 Create a ZooKeeper client

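// The snippets in 4.3.2 to 4.3.5 are members of one JUnit 4 test class, which needs these imports:

import java.util.List;

import org.apache.zookeeper.CreateMode;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooDefs.Ids;

import org.apache.zookeeper.ZooKeeper;

import org.apache.zookeeper.data.Stat;

import org.junit.Before;

import org.junit.Test;

private static String connectString =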

"hadoop102:2181,hadoop103:2181,hadoop104:2181";

private static int sessionTimeout = 2000;

private ZooKeeper zkClient = null;

@Before

public void init() throws Exception {

zkClient = new ZooKeeper(connectString, sessionTimeout, new Watcher() {

@Override

public void process(WatchedEvent event) {

// Callback function after receiving event notification (user's business logic)

System.out.println(event.getType() + "--" + event.getPath());

// Watches fire only once, so register the watch again

try {

zkClient.getChildren("/", true);

} catch (Exception e) {

e.printStackTrace();

}

}

});

}

4.3.3 Create child node

// Create child node

@Test

public void create() throws Exception {

// Parameter 1: path of node to be created; parameter 2: node data; parameter 3: node permission; parameter 4: node type

String nodeCreated = zkClient.create("/atguigu", "jinlian".getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

}

4.3.4 Get child nodes and listen for node changes

// Get child nodes

@Test

public void getChildren() throws Exception {

List<String> children = zkClient.getChildren("/", true);

for (String child : children) {

System.out.println(child);

}

// Block so the process stays alive to receive watch events

Thread.sleep(Long.MAX_VALUE);

}

4.3.5 Check whether a znode exists

// Determine whether znode exists

@Test

public void exist() throws Exception {

Stat stat = zkClient.exists("/eclipse", false);

System.out.println(stat == null ? "not exist" : "exist");

}

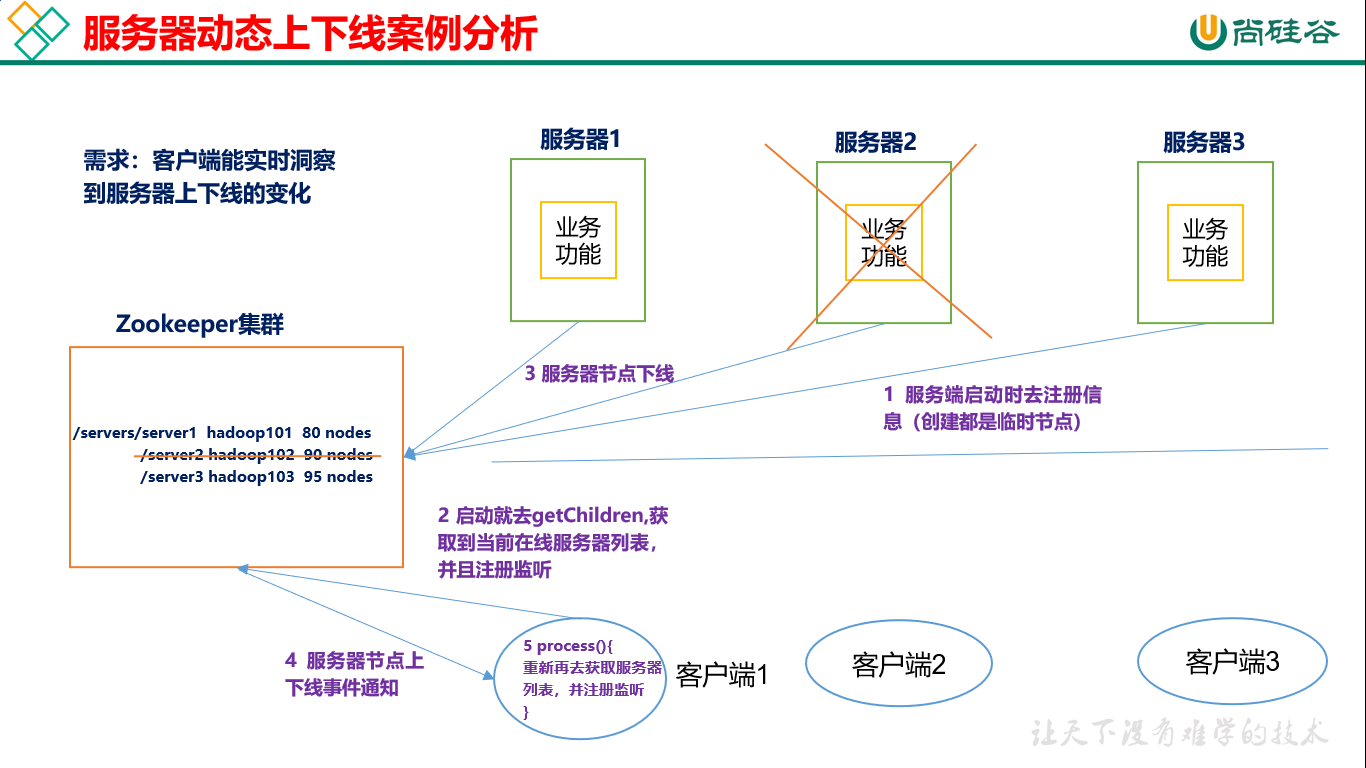

Case study: listening for servers going online and offline dynamically

1.Requirements

In a distributed system there can be multiple master nodes, and they can go online and offline dynamically. Any client must be able to detect in real time when a master server goes online or offline.

2.Requirements analysis, as shown in Figure 5-12

Figure 5-12 Servers going online and offline dynamically

3.Implementation

(0)First create the /servers node in the cluster

[zk: localhost:2181(CONNECTED) 10] create /servers "servers"

Created /servers

(1)Server-side code that registers with ZooKeeper

package com.atguigu.zkcase;

import java.io.IOException;

import org.apache.zookeeper.CreateMode;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooKeeper;

import org.apache.zookeeper.ZooDefs.Ids;

public class DistributeServer {

private static String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";

private static int sessionTimeout = 2000;

private ZooKeeper zk = null;

private String parentNode = "/servers";

// Create a client connection to zk

public void getConnect() throws IOException{

zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {

@Override

public void process(WatchedEvent event) {

}

});

}

// Register this server as an ephemeral sequential node under /servers

public void registServer(String hostname) throws Exception{

String create = zk.create(parentNode + "/server", hostname.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

System.out.println(hostname +" is online "+ create);

}

// Business function

public void business(String hostname) throws Exception{

System.out.println(hostname+" is working ...");

Thread.sleep(Long.MAX_VALUE);

}

public static void main(String[] args) throws Exception {

// 1 get zk connection

DistributeServer server = new DistributeServer();

server.getConnect();

// 2 use zk connection to register server information

server.registServer(args[0]);

// 3 start business function

server.business(args[0]);

}

}

(2)Client-side code

package com.atguigu.zkcase;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooKeeper;

public class DistributeClient {

private static String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";

private static int sessionTimeout = 2000;

private ZooKeeper zk = null;

private String parentNode = "/servers";

// Create a client connection to zk

public void getConnect() throws IOException {

zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {

@Override

public void process(WatchedEvent event) {

// Watches fire only once, so re-read the list and register the watch again

try {

getServerList();

} catch (Exception e) {

e.printStackTrace();

}

}

});

}

// Get server list information

public void getServerList() throws Exception {

// 1 get the child nodes of /servers and watch the parent node for changes

List<String> children = zk.getChildren(parentNode, true);

// 2 storage server information list

ArrayList<String> servers = new ArrayList<>();

// 3 traverse all nodes to obtain the host name information in the node

for (String child : children) {

byte[] data = zk.getData(parentNode + "/" + child, false, null);

servers.add(new String(data));

}

// 4 print server list information

System.out.println(servers);

}

// Business function

public void business() throws Exception{

System.out.println("client is working ...");

Thread.sleep(Long.MAX_VALUE);

}

public static void main(String[] args) throws Exception {

// 1 get zk connection

DistributeClient client = new DistributeClient();

client.getConnect();

// 2 get the child node information of servers and get the server information list from it

client.getServerList();

// 3 business process startup

client.business();

}

}
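The register/expire/re-read flow can also be exercised without a running cluster. The toy model below is a hypothetical in-memory stand-in, not the ZooKeeper API. register mimics creating an EPHEMERAL_SEQUENTIAL child under /servers. closeSession mimics a server's session ending, which removes its ephemeral node. serverList mirrors what getServerList prints after the watch fires.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.TreeMap;

/** Hypothetical in-memory stand-in for the /servers case (not the ZooKeeper API). */
class ServerRegistryModel {
    private final TreeMap<String, String> children = new TreeMap<>(); // child name -> hostname
    private final TreeMap<String, Long> owners = new TreeMap<>();     // child name -> owning session
    private int cversion = 0; // child-change counter of /servers, used as the sequence number

    // Mimics zk.create("/servers/server", hostname, ..., CreateMode.EPHEMERAL_SEQUENTIAL).
    String register(long sessionId, String hostname) {
        String name = "server" + String.format(Locale.ENGLISH, "%010d", cversion);
        cversion++;
        children.put(name, hostname);
        owners.put(name, sessionId);
        return "/servers/" + name;
    }

    // Mimics a session ending: every ephemeral child it owns is deleted.
    void closeSession(long sessionId) {
        for (String name : new ArrayList<>(owners.keySet())) {
            if (owners.get(name) == sessionId) {
                owners.remove(name);
                children.remove(name);
                cversion++; // deletions also count as child changes
            }
        }
    }

    // Mirrors DistributeClient.getServerList(): the hostnames stored in the children.
    List<String> serverList() {
        return new ArrayList<>(children.values());
    }

    public static void main(String[] args) {
        ServerRegistryModel zk = new ServerRegistryModel();
        System.out.println(zk.register(1L, "hadoop102")); // /servers/server0000000000
        zk.register(2L, "hadoop103");
        System.out.println(zk.serverList());              // [hadoop102, hadoop103]
        zk.closeSession(1L);                              // hadoop102 goes offline
        System.out.println(zk.serverList());              // [hadoop103]
    }
}
```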