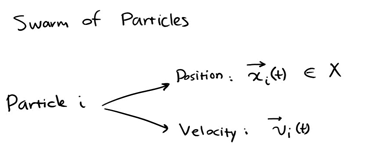

1. Particle swarm optimization

The particle swarm optimization (PSO) algorithm was proposed by Dr. Eberhart and Dr. Kennedy in 1995. It was inspired by studies of the foraging behavior of bird flocks. Its core idea is to use information sharing among the individuals of a group. The movement of the whole group then evolves from disorder to order in the problem's search space, and the optimal solution of the problem is eventually found. Imagine this scenario: a flock of birds is foraging, and there is a corn field somewhere in the distance. None of the birds knows where the corn field is, but each knows how far its current position is from it. The simplest and most effective strategy for finding the corn field is then to search the area around the bird that is currently closest to it.

In PSO, each candidate solution of the optimization problem is a bird in the search space, called a "particle". The optimal solution of the problem corresponds to the "corn field" that the flock is looking for. Every particle has two vectors:

- a position vector, which is its location in the solution space;
- a velocity vector, which determines the direction and speed of its next move.

The fitness value of a particle's current position is computed from the objective function and can be understood as its distance to the "corn field". In each iteration, a particle learns from two sources: its own experience (its best historical position) and the "experience" of the best particle in the population. It uses both to decide how to adjust its direction and speed in the next iteration. In this way, the whole population gradually moves toward the optimal solution.

The explanation above is rather abstract, so let's illustrate it with a simple example.

Two people are in boats on a lake. They can communicate with each other, and each can measure the depth of the water at their own position. Their initial positions are shown in the figure above. Because the water on the right is deeper, the person on the left moves their boat to the right.

Now the left side is deeper, so the person on the right moves their boat to the left.

This process repeats until the two boats finally meet.

At that point, a locally optimal solution has been obtained.

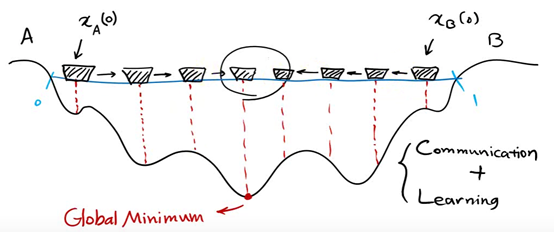

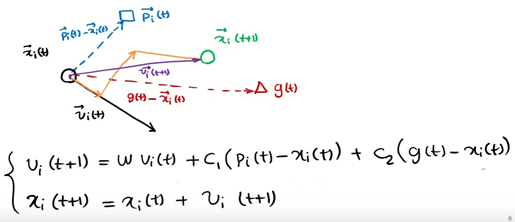

Each individual is represented as a particle. The position of an individual at time t is denoted x(t), and its velocity (the direction of its movement) is denoted v(t).

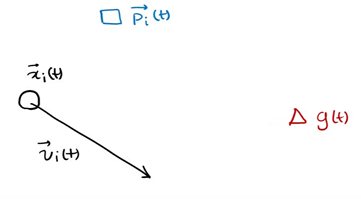

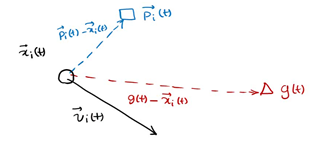

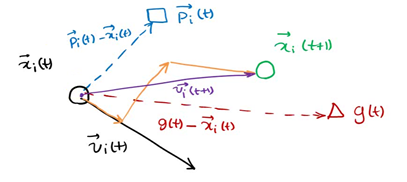

Here p(t) is the best position that individual x has found up to time t. g(t) is the best position found by the whole population up to time t. v(t) is the velocity of the individual at time t, and x(t) is its position at time t.

As the figure above shows, the next position of a particle is determined jointly by x, p and g.
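For reference, the velocity and position update of the original PSO is commonly written per dimension d of particle i as shown below. The coefficients c_1, c_2 (learning factors) and the uniform random numbers r_1, r_2 in [0, 1] are the same symbols used later for the inertia weight model.

$$
\begin{aligned}
v_{id}(t+1) &= v_{id}(t) + c_1 r_1 \bigl(p_{id}(t) - x_{id}(t)\bigr) + c_2 r_2 \bigl(g_d(t) - x_{id}(t)\bigr) \\
x_{id}(t+1) &= x_{id}(t) + v_{id}(t+1)
\end{aligned}
$$

The first bracket pulls the particle toward its own best position p and the second toward the population's best position g. This is why the next position depends on x, p and g together.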

By continually learning from their own historical information and from that of the population, the particles can find the optimal solution of the problem.

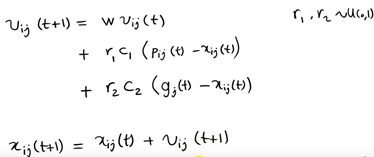

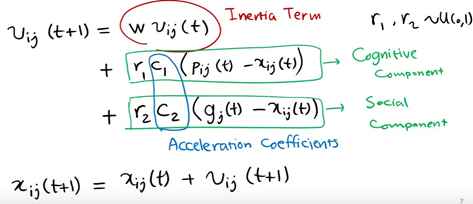

However, later research showed a problem with the original formula above. The update of v is too random, which gives PSO strong global search ability but poor local search ability. What is actually needed is strong global search ability in the early iterations and stronger local search ability for the whole population in the later iterations. To address this shortcoming, Shi and Eberhart modified the formula by introducing an inertia weight, which gives the inertia weight model of PSO:

Written component by component, the velocity and position of each particle are updated as follows:
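For reference, the inertia weight model is commonly written as follows for dimension d of particle i. Here p_{id} is the particle's best position, g_d is the global best position, and w scales the previous velocity.

$$
\begin{aligned}
v_{id}(t+1) &= w\,v_{id}(t) + c_1 r_1 \bigl(p_{id}(t) - x_{id}(t)\bigr) + c_2 r_2 \bigl(g_d(t) - x_{id}(t)\bigr) \\
x_{id}(t+1) &= x_{id}(t) + v_{id}(t+1)
\end{aligned}
$$

With w = 1 this reduces to the original update above. A smaller w damps the previous velocity, so the particle relies more on p and g.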

Here w is the inertia weight of PSO, with a value in [0, 1]. A time-varying schedule is generally used:

- At the beginning, w = 0.9, which gives PSO strong global search ability.
- As the iterations proceed, w decreases, which strengthens the local search ability.
- When the iterations end, w = 0.1.

The parameters c1 and c2 are called learning factors and are generally set to 1.4961. r1 and r2 are random numbers uniformly distributed in [0, 1].
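A minimal Python/NumPy sketch of the linearly decreasing inertia weight and one inertia-weight update step is shown below. It assumes the values from the paragraph above (w from 0.9 to 0.1, c1 = c2 = 1.4961). The function names `linear_inertia_weight` and `inertia_update` are hypothetical helpers, not part of any library.

```python
import numpy as np


def linear_inertia_weight(it, max_it, w_start=0.9, w_end=0.1):
    """Linearly decrease w from w_start (iteration 0) to w_end (iteration max_it)."""
    return w_start - (w_start - w_end) * it / max_it


def inertia_update(x, v, p, g, w, c1=1.4961, c2=1.4961, rng=None):
    """One inertia-weight PSO step for the whole swarm (hypothetical helper).

    x, v, p: arrays of shape (n_particles, dim) holding positions, velocities
             and personal best positions.
    g:       array of shape (dim,) holding the global best position.
    Returns the new positions and velocities.
    """
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(x.shape)  # fresh uniform [0, 1) numbers per particle and dimension
    r2 = rng.random(x.shape)
    v_new = w * v + c1 * r1 * (p - x) + c2 * r2 * (g - x)
    return x + v_new, v_new


# Usage: the weight shrinks as the iteration counter grows.
for it in (0, 50, 100):
    print(it, round(linear_inertia_weight(it, 100), 3))
```

Passing `rng` explicitly makes a run reproducible. Leaving it as `None` draws fresh random numbers on every call.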

The framework of the complete particle swarm optimization algorithm is as follows:

Step 1 Population initialization: initialize the population randomly, or with an initialization method designed for the specific problem. Then compute the fitness value of each individual and determine each individual's best (local optimal) position vector and the global best position vector of the population.

Step 2 Iteration setting: set the maximum number of iterations and set the current iteration count to 1.

Step 3 Velocity update: update the velocity vector of each individual.

Step 4 Position update: update the position vector of each individual.

Step 5 Local and global best update: update the best position of each individual and the global best position of the population.

Step 6 Termination check: check whether the current iteration count has reached the maximum number of iterations. If it has, output the global optimal solution. Otherwise, increase the iteration count by one and return to Step 3.
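The six steps above can be sketched in Python with NumPy as a minimal inertia-weight PSO for minimization. The following choices are assumptions for illustration only:

- the `sphere` test function;
- the velocity limit of 0.2 × (upper − lower), borrowed from the `mv` variable in the MATLAB code;
- clipping positions back into the search range;
- the linear w schedule from 0.9 to 0.1 with c1 = c2 = 1.4961.

The function names are hypothetical.

```python
import numpy as np


def sphere(x):
    """Test objective (assumption): sum of squares, minimum 0 at the origin."""
    return float(np.sum(x ** 2))


def pso(f, dim, lower, upper, n_particles=30, max_iter=200,
        w_start=0.9, w_end=0.1, c1=1.4961, c2=1.4961, seed=0):
    """Minimal inertia-weight PSO for minimization (sketch, not the author's code)."""
    rng = np.random.default_rng(seed)
    vmax = 0.2 * (upper - lower)  # velocity limit, as mv in the MATLAB listing

    # Step 1: random initialization, fitness, personal bests and global best
    x = rng.uniform(lower, upper, size=(n_particles, dim))
    v = rng.uniform(-vmax, vmax, size=(n_particles, dim))
    fx = np.array([f(xi) for xi in x])
    p, fp = x.copy(), fx.copy()
    best = int(np.argmin(fp))
    g, fg = p[best].copy(), float(fp[best])

    # Step 2 / Step 6: count iterations from 1 and stop at max_iter
    for it in range(1, max_iter + 1):
        w = w_start - (w_start - w_end) * it / max_iter  # decreasing inertia weight

        # Step 3: velocity update, clamped to [-vmax, vmax]
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (p - x) + c2 * r2 * (g - x)
        v = np.clip(v, -vmax, vmax)

        # Step 4: position update, kept inside the search range (assumption)
        x = np.clip(x + v, lower, upper)

        # Step 5: update personal bests, then the global best
        fx = np.array([f(xi) for xi in x])
        improved = fx < fp
        p[improved] = x[improved]
        fp[improved] = fx[improved]
        best = int(np.argmin(fp))
        if fp[best] < fg:
            g, fg = p[best].copy(), float(fp[best])

    # Step 6: output the global optimal solution
    return g, fg


g, fg = pso(sphere, dim=5, lower=-5.12, upper=5.12)
print(fg)
```

Clipping positions is only one way to handle the search boundary. The CLPSO listing below instead leaves an out-of-range particle unevaluated until it flies back inside.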

When PSO is applied to a problem, the main design task is the iterative operators that update the velocity and position vectors. How effective these operators are determines the performance of the whole PSO algorithm. Designing them is therefore both the main research focus and the main difficulty in applying PSO.

2. Comprehensive learning particle swarm optimization (CLPSO)

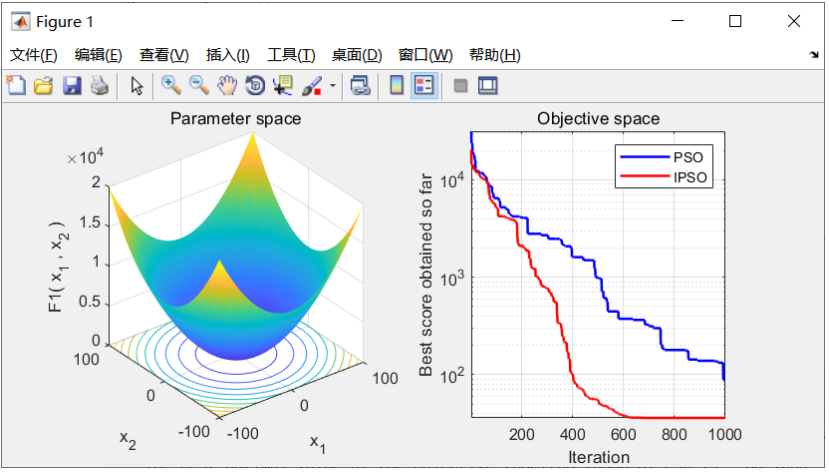

The traditional time-varying inertia weight PSO algorithm works well on general global optimization problems. On complex high-dimensional problems, however, it easily gets trapped in local optima and converges prematurely. To address these shortcomings, [1] introduces the concept of population evolutionary dispersion. It exploits the Sigmoid function's good balance between linear and nonlinear behavior to build a PSO algorithm with a nonlinear dynamic adaptive inertia weight.

The algorithm takes into account the evolutionary differences between the particles during the search and adaptively assigns them different inertia weights. This meets PSO's need for global exploration in some evolutionary stages and local exploitation in others. According to [1], simulation tests verify the effectiveness of the algorithm.
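The paragraph above does not give the exact formula of [1], so the sketch below is a hypothetical illustration of the idea only. It is not the method of [1]. It assumes minimization and does the following:

- measures each particle's deviation from the swarm's mean fitness in units of the standard deviation, as a stand-in for "evolutionary dispersion";
- maps that deviation through a Sigmoid function into a weight between `w_min` and `w_max`.

Worse particles get a larger weight (explore), and better particles get a smaller weight (exploit). The function name and the `steepness` parameter are hypothetical.

```python
import numpy as np


def sigmoid_adaptive_weights(fitness, w_min=0.4, w_max=0.9, steepness=5.0):
    """HYPOTHETICAL per-particle inertia weights (illustration, not the formula of [1]).

    Minimization is assumed: a particle whose fitness is worse (larger) than the
    swarm average gets a weight near w_max, a better particle gets a weight near
    w_min. The Sigmoid maps the normalized fitness difference smoothly into
    (w_min, w_max).
    """
    fitness = np.asarray(fitness, dtype=float)
    spread = fitness.std()
    if spread == 0.0:  # swarm has collapsed: every particle gets the midpoint weight
        return np.full_like(fitness, 0.5 * (w_min + w_max))
    z = (fitness - fitness.mean()) / spread  # normalized deviation of each particle
    s = 1.0 / (1.0 + np.exp(-steepness * z))  # Sigmoid in (0, 1)
    return w_min + (w_max - w_min) * s


print(sigmoid_adaptive_weights([0.0, 1.0, 2.0]))
```

Because the weights depend on the current fitness distribution rather than on the iteration count, they change as the swarm evolves. A time-varying schedule gives every particle the same w at a given iteration.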

3. Partial code

```matlab
function [gbest,gbestval,fitcount]= CLPSO_new_func(fhd,Max_Gen,Max_FES,Particle_Number,Dimension,VRmin,VRmax,varargin)
% Example: [gbest,gbestval,fitcount]= CLPSO_new_func('f8',3500,200000,30,30,-5.12,5.12)
%   fhd     - objective function (name or handle), called as feval(fhd,x,varargin{:})
%   Max_Gen - maximum number of generations
%   Max_FES - maximum number of fitness evaluations

rng('shuffle');                      % seed from the clock (replaces legacy rand('state',...))

me=Max_Gen;
ps=Particle_Number;
D=Dimension;

% Learning probability Pc: grows exponentially from 0 to 0.5 across the particles
t=0:1/(ps-1):1; t=5.*t;
Pc=0.0+(0.5-0.0).*(exp(t)-exp(t(1)))./(exp(t(ps))-exp(t(1)));
% Pc=0.5.*ones(1,ps);

m=0.*ones(ps,1);                     % dimensions per particle that learn from gbest (0 = pure CLPSO)
iwt=0.9-(1:me)*(0.7/me);             % inertia weight: linear decrease from 0.9 towards 0.2
% iwt=0.729-(1:me)*(0.0/me);
cc=[1.49445 1.49445];                % acceleration constants c1, c2

% Expand scalar bounds to one value per dimension
if length(VRmin)==1
    VRmin=repmat(VRmin,1,D);
    VRmax=repmat(VRmax,1,D);
end
mv=0.2*(VRmax-VRmin);                % maximum velocity per dimension
VRmin=repmat(VRmin,ps,1);
VRmax=repmat(VRmax,ps,1);
Vmin=repmat(-mv,ps,1);
Vmax=-Vmin;

% Random initial positions and their fitness
pos=VRmin+(VRmax-VRmin).*rand(ps,D);
e=zeros(ps,1);
for k=1:ps
    e(k,1)=feval(fhd,pos(k,:),varargin{:});
end
fitcount=ps;

vel=Vmin+2.*Vmax.*rand(ps,D);        % initial velocities in [-mv, mv]
pbest=pos;
pbestval=e;                          % personal bests and their fitness values
[gbestval,gbestid]=min(pbestval);
gbest=pbest(gbestid,:);              % global best and its fitness value
gbestrep=repmat(gbest,ps,1);

stay_num=zeros(ps,1);                % generations without pbest improvement
ai=zeros(ps,D);                      % 1 where a dimension learns from gbest
f_pbest=repmat((1:ps)',1,D);         % exemplar particle index per particle and dimension
pbest_f=zeros(ps,D);                 % exemplar position per particle
aa=zeros(ps,D);

% Build the initial exemplars
for k=1:ps
    ar=randperm(D);
    ai(k,ar(1:m(k)))=1;
    % Binary tournament: for each dimension keep the better of two random pbests
    fi1=ceil(ps*rand(1,D));
    fi2=ceil(ps*rand(1,D));
    fi=(pbestval(fi1)<pbestval(fi2))'.*fi1+(pbestval(fi1)>=pbestval(fi2))'.*fi2;
    % With probability Pc(k) a dimension learns from the tournament winner
    bi=ceil(rand(1,D)-1+Pc(k));
    if ~any(bi), rc=randperm(D); bi(rc(1))=1; end   % at least one dimension learns from others
    f_pbest(k,:)=bi.*fi+(1-bi).*f_pbest(k,:);
end

i=1;
while i<=me && fitcount<=Max_FES
    i=i+1;
    for k=1:ps
        % Refresh the exemplars of a particle whose pbest stalled for 5 generations
        if stay_num(k)>=5
            stay_num(k)=0;
            ai(k,:)=zeros(1,D);
            f_pbest(k,:)=k.*ones(1,D);
            ar=randperm(D);
            ai(k,ar(1:m(k)))=1;
            fi1=ceil(ps*rand(1,D));
            fi2=ceil(ps*rand(1,D));
            fi=(pbestval(fi1)<pbestval(fi2))'.*fi1+(pbestval(fi1)>=pbestval(fi2))'.*fi2;
            bi=ceil(rand(1,D)-1+Pc(k));
            if ~any(bi), rc=randperm(D); bi(rc(1))=1; end
            f_pbest(k,:)=bi.*fi+(1-bi).*f_pbest(k,:);
        end
        % Assemble the exemplar vector dimension by dimension
        for dimcnt=1:D
            pbest_f(k,dimcnt)=pbest(f_pbest(k,dimcnt),dimcnt);
        end
        % Velocity update: inertia + exemplar term (+ gbest term where ai==1)
        aa(k,:)=cc(1).*(1-ai(k,:)).*rand(1,D).*(pbest_f(k,:)-pos(k,:))+cc(2).*ai(k,:).*rand(1,D).*(gbestrep(k,:)-pos(k,:));
        vel(k,:)=iwt(i).*vel(k,:)+aa(k,:);
        vel(k,:)=min(max(vel(k,:),-mv),mv);   % clamp to [-mv, mv]
        pos(k,:)=pos(k,:)+vel(k,:);
        % Evaluate (and possibly update pbest/gbest) only inside the search range
        if all(pos(k,:)<=VRmax(k,:)) && all(pos(k,:)>=VRmin(k,:))
            e(k,1)=feval(fhd,pos(k,:),varargin{:});
            fitcount=fitcount+1;
            if pbestval(k)<=e(k)
                stay_num(k)=stay_num(k)+1;     % no improvement
            else
                pbest(k,:)=pos(k,:);           % update the pbest
                pbestval(k)=e(k);
            end
            if pbestval(k)<gbestval
                gbest=pbest(k,:);
                gbestval=pbestval(k);
                gbestrep=repmat(gbest,ps,1);  % update the gbest
            end
        end
    end
    % Optional: plot the swarm every 100 generations
    % if round(i/100)==i/100
    %     plot(pos(:,D-1),pos(:,D),'b*');hold on;
    %     for k=1:floor(D/2)
    %         plot(gbest(:,2*k-1),gbest(:,2*k),'r*');
    %     end
    %     hold off
    %     title(['PSO: ',num2str(i),' generations, Gbestval=',num2str(gbestval)]);
    %     axis([VRmin(1,D-1),VRmax(1,D-1),VRmin(1,D),VRmax(1,D)])
    %     drawnow
    % end
    if fitcount>=Max_FES
        break;
    end
    % After Max_Gen generations keep iterating at the final inertia weight
    % until the evaluation budget Max_FES is used up
    if (i==me) && (fitcount<Max_FES)
        i=i-1;
    end
end
gbestval
```
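The distinctive part of the MATLAB listing above is how each particle chooses, dimension by dimension, whose personal best it learns from. This uses the learning probability `Pc`, a binary tournament on `pbestval`, and at least one forced dimension. Below is a Python/NumPy re-expression of just that logic, sketched under these assumptions:

- indices are 0-based instead of MATLAB's 1-based;
- `pbestval` is a NumPy array;
- there are at least two particles.

The function names are hypothetical.

```python
import numpy as np


def learning_probabilities(ps):
    """Pc per particle, mirroring Pc=0.5*(exp(t)-exp(t(1)))./(exp(t(ps))-exp(t(1)))."""
    t = 5.0 * np.linspace(0.0, 1.0, ps)  # assumes ps >= 2, as the MATLAB code does
    return 0.5 * (np.exp(t) - np.exp(t[0])) / (np.exp(t[-1]) - np.exp(t[0]))


def choose_exemplars(k, pbestval, pc_k, dim, rng):
    """For particle k, return the index of the pbest it follows in each dimension."""
    ps = len(pbestval)
    f = np.full(dim, k)                           # default: learn from its own pbest
    fi1 = rng.integers(0, ps, size=dim)           # two random tournament candidates
    fi2 = rng.integers(0, ps, size=dim)
    winner = np.where(pbestval[fi1] < pbestval[fi2], fi1, fi2)  # smaller fitness wins
    learn = rng.random(dim) < pc_k                # learn from others with probability Pc(k)
    if not learn.any():                           # force at least one dimension to learn
        learn[rng.integers(0, dim)] = True
    f[learn] = winner[learn]
    return f


def exemplar_position(pbest, f):
    """Exemplar vector: dimension d is taken from pbest[f[d], d]."""
    return pbest[f, np.arange(len(f))]


rng = np.random.default_rng(1)
pbestval = np.arange(10, dtype=float)
print(learning_probabilities(5))
print(choose_exemplars(3, pbestval, 0.3, 8, rng))
```

`rng.random(dim) < pc_k` is True with probability `pc_k`. It is equivalent in distribution to the MATLAB trick `ceil(rand(1,D)-1+Pc(k))`.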

4. Simulation results

5. References

[1] Wang Shengliang, Liu Genyou. A nonlinear dynamic adaptive inertia weight PSO algorithm [J]. Computer Simulation, 2021, 38(04): 249-253+451.