1, Introduction

1 overview of genetic algorithm

Genetic Algorithm (GA) is a branch of evolutionary computation. It is a computational model that simulates the natural selection and genetic mechanisms of Darwinian biological evolution, and it searches for the optimal solution by mimicking the natural evolution process. The algorithm is simple, general, robust, and well suited to parallel processing.

2 characteristics and application of genetic algorithm

The genetic algorithm is a robust search algorithm that can be used to optimize complex systems. Compared with traditional optimization algorithms, it has the following characteristics:

(1) It operates on an encoding of the decision variables. Traditional optimization algorithms usually compute directly with the actual values of the decision variables, whereas a genetic algorithm operates on some encoded form of them. Encoding the decision variables lets the optimization borrow the biological concepts of chromosomes and genes, imitate the mechanisms of heredity and evolution in nature, and apply genetic operators easily.

(2) It uses fitness directly as search information. Traditional optimization algorithms need more than the value of the objective function: their search is often constrained by the continuity of the objective function, and they may also require the derivative of the objective function to exist in order to determine the search direction. A genetic algorithm uses only the fitness values derived from the objective function to decide where to search next, without auxiliary information such as derivatives. Using objective or fitness values directly also concentrates the search on regions of higher fitness, which improves search efficiency.

(3) It uses search information from multiple points and has implicit parallelism. Traditional optimization algorithms usually start from a single initial point in the solution space. A single point provides little search information, so the search is inefficient and may get stuck in a local optimum. A genetic algorithm starts its search from an initial population of many individuals rather than from a single individual. Selection, crossover, mutation and other operations on the population produce a new generation that carries a great deal of population information. This information helps the algorithm avoid searching unnecessary points, reduces the risk of being trapped in a local optimum, and lets it gradually approach the global optimum.

(4) It uses probabilistic search instead of deterministic rules. Traditional optimization algorithms usually search deterministically: the move from one search point to the next follows a fixed direction and transition rule. This determinism may prevent the search from reaching the optimum, which limits the algorithm's range of application. The genetic algorithm is an adaptive search technique whose selection, crossover, mutation and other operations are performed probabilistically. This makes the search more flexible and lets it converge to the optimal solution with high probability, so it has good global optimization ability. However, parameters such as the crossover probability and the mutation probability also affect the results and efficiency of the search, so choosing the parameters of a genetic algorithm is an important practical problem.

In conclusion, because the overall search strategy of the genetic algorithm does not depend on gradient information or other auxiliary knowledge, and only requires the objective function and the corresponding fitness function that guide the search direction, the genetic algorithm provides a general framework for solving complex system problems. It does not depend on the specific problem domain and is robust to the type of problem, so it is widely used in many fields, including function optimization, combinatorial optimization, production scheduling, automatic control, robotics, image processing (image restoration, image edge feature extraction, ...), artificial life, genetic programming and machine learning.

3 basic flow and implementation technology of genetic algorithm

The simple genetic algorithm (SGA) uses only three genetic operators: selection, crossover and mutation. Its evolution process is simple, and it is the basis of other genetic algorithms.

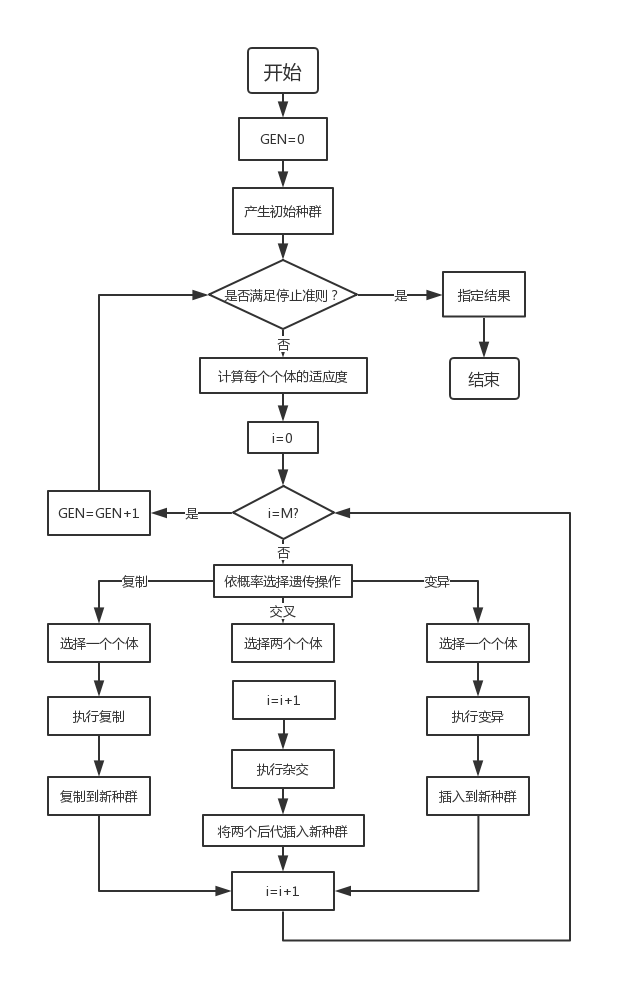

3.1 basic flow of genetic algorithm

(1) Randomly generate an initial population of individuals, each encoded as a string of a certain length (the length depends on the required precision of the solution);

(2) Evaluate each individual with the fitness function. Individuals with high fitness are selected to take part in genetic operations, and individuals with low fitness are eliminated;

(3) Apply genetic operations (replication, crossover and mutation) to the selected individuals to form a new generation, and repeat until the stopping criterion is met (for example, the generation counter gen reaches a preset maximum);

(4) Return the best individual of the final generation as the result of the genetic algorithm.

In the flowchart, GEN is the current generation number, M is the population size, and i is the index of an individual in the population.
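The four steps can be sketched in Python as follows. This is a minimal illustration, not the author's implementation: it assumes a toy "OneMax" objective (maximize the number of 1 bits), roulette-wheel selection, one-point crossover and bit-flip mutation, and the names `onemax`, `run_sga` and all parameter values are hypothetical.

```python
import random


def onemax(ind):
    """Toy objective (assumed for illustration): number of 1 bits."""
    return sum(ind)


def run_sga(n_bits=20, pop_size=30, pc=0.8, pm=0.01, max_gen=100, seed=0):
    """Simple GA; returns (best individual found, its fitness). pop_size must be even."""
    rng = random.Random(seed)
    # (1) random initial population of fixed-length bit strings
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=onemax)
    for _ in range(max_gen):  # (3) stop when the generation count reaches max_gen
        # (2) evaluate fitness; fitter individuals are more likely to be selected
        fits = [onemax(ind) for ind in pop]
        if sum(fits) > 0:
            parents = rng.choices(pop, weights=fits, k=pop_size)
        else:
            parents = [rng.choice(pop) for _ in range(pop_size)]
        # (3) one-point crossover and bit-flip mutation form the next generation
        nxt = []
        for a, b in zip(parents[0::2], parents[1::2]):
            a, b = a[:], b[:]  # copy so parents are not modified
            if rng.random() < pc:
                cut = rng.randint(1, n_bits - 1)
                a[cut:], b[cut:] = b[cut:], a[cut:]
            nxt += [a, b]
        for ind in nxt:
            for k in range(n_bits):
                if rng.random() < pm:
                    ind[k] = 1 - ind[k]
        pop = nxt
        best = max(pop + [best], key=onemax)  # remember the best individual seen so far
    # (4) report the best individual
    return best, onemax(best)
```

One design choice differs slightly from step (4): the sketch returns the best individual seen in any generation rather than only in the last one, which is a common safeguard when selection is stochastic.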

3.2 implementation technology of genetic algorithm

The simple genetic algorithm (SGA) consists of four parts: the encoding, the fitness function, the genetic operators (selection, crossover and mutation) and the operating parameters.

3.2.1 coding

(1) Binary coding

The length of a binary code string depends on the precision required by the problem, and it must be long enough that every individual in the solution space can be encoded.

Advantages: encoding and decoding are simple, and genetic operations such as crossover and mutation are easy to implement

Disadvantages: the strings can be very long, especially when high precision or many variables are needed
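To make the link between string length and precision concrete: for a variable x in [a, b] encoded with L bits, the 2^L - 1 steps must be no larger than the required precision, so L is the smallest integer with 2^L - 1 >= (b - a) / precision. The Python sketch below assumes this standard linear mapping; the function names are illustrative.

```python
import math


def bits_needed(a, b, precision):
    """Smallest L such that 2**L - 1 >= (b - a) / precision."""
    return max(1, math.ceil(math.log2((b - a) / precision + 1)))


def encode(x, a, b, n_bits):
    """Map x in [a, b] to the nearest n_bits-bit binary string."""
    k = round((x - a) / (b - a) * (2 ** n_bits - 1))
    return format(k, f"0{n_bits}b")


def decode(bits, a, b):
    """Map a binary string back to a real value in [a, b]."""
    n_bits = len(bits)
    return a + int(bits, 2) * (b - a) / (2 ** n_bits - 1)
```

For example, x in [-1, 2] with precision 1e-6 needs 22 bits, which illustrates the "long string" disadvantage listed above.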

(2) Other coding methods

Gray coding, floating-point (real-valued) coding, symbolic coding, multi-parameter coding, etc.

3.2.2 fitness function

The fitness function should effectively reflect how far each chromosome is from the chromosome of the problem's optimal solution.

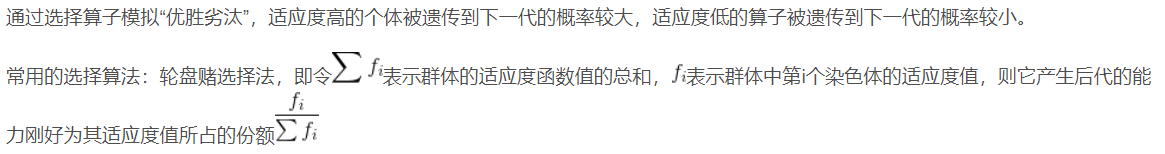
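The text does not fix a particular transform. For a minimization problem, such as the route length minimized in the VRP code of section 2, two commonly used ways to turn a cost into a non-negative fitness are sketched below in Python; which one to use is an assumption of this example, not something the original specifies.

```python
def fitness_from_cost(costs):
    """F = c_max - cost, where c_max is the largest cost in the current
    population; the worst individual gets fitness 0."""
    c_max = max(costs)
    return [c_max - c for c in costs]


def inverse_fitness(cost):
    """Alternative transform F = 1 / (1 + cost), assuming cost >= 0."""
    return 1.0 / (1.0 + cost)
```

Both transforms preserve the ranking (shorter route, higher fitness); the first one depends on the current population, the second does not.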

3.2.3 selection operator
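The selection scheme is not specified here. A common choice for the SGA is roulette-wheel (fitness-proportionate) selection, in which each individual is chosen with probability proportional to its fitness. The Python sketch below assumes non-negative fitness values with a positive sum; `roulette_select` is a hypothetical helper name.

```python
import bisect
import itertools
import random


def roulette_select(population, fitness, k, rng=random):
    """Pick k individuals with probability proportional to fitness.
    Assumes all fitness values are >= 0 and at least one is > 0."""
    cumulative = list(itertools.accumulate(fitness))
    total = cumulative[-1]
    chosen = []
    for _ in range(k):
        r = rng.random() * total  # spin the wheel: r in [0, total)
        # first slot whose cumulative fitness exceeds r
        idx = bisect.bisect_right(cumulative, r)
        chosen.append(population[min(idx, len(population) - 1)])
    return chosen
```

Individuals with zero fitness occupy an empty slice of the wheel and are never picked, which is the "elimination" of low-fitness individuals described in step (2) of the basic flow.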

3.2.4 crossover operator

Crossover exchanges some genes between two paired chromosomes in some way to form two new individuals. Crossover is an important feature that distinguishes genetic algorithms from other evolutionary algorithms, and it is the main way new individuals are generated. Before crossover, the individuals in the population must be paired, usually at random.

Common crossover methods:

Single-point crossover

Two-point crossover (multi-point crossover is also possible, but the more crossover points there are, the more likely the individual's structure is disrupted, so multi-point crossover is generally not used)

Uniform crossover

Arithmetic crossover
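The four methods can be sketched in Python as below. The first three apply to binary or other discrete strings; arithmetic crossover applies to real-valued (floating-point) coding. The fixed weight `alpha` is an assumption of this sketch (it is often drawn at random), and the function names are illustrative.

```python
import random


def single_point(p1, p2, rng=random):
    """Swap the tails after one random cut point."""
    c = rng.randint(1, len(p1) - 1)
    return p1[:c] + p2[c:], p2[:c] + p1[c:]


def two_point(p1, p2, rng=random):
    """Swap the segment between two random cut points (needs len >= 3)."""
    a, b = sorted(rng.sample(range(1, len(p1)), 2))
    return p1[:a] + p2[a:b] + p1[b:], p2[:a] + p1[a:b] + p2[b:]


def uniform(p1, p2, rng=random):
    """Swap each gene independently with probability 0.5."""
    c1, c2 = list(p1), list(p2)
    for k in range(len(p1)):
        if rng.random() < 0.5:
            c1[k], c2[k] = c2[k], c1[k]
    return c1, c2


def arithmetic(x1, x2, alpha=0.5):
    """Real-valued coding: children are convex combinations of the parents."""
    c1 = [alpha * a + (1 - alpha) * b for a, b in zip(x1, x2)]
    c2 = [(1 - alpha) * a + alpha * b for a, b in zip(x1, x2)]
    return c1, c2
```

Note that applying these operators to a permutation (such as the customer order in the VRP code) would create duplicate and missing customers; that is why permutation problems use order-preserving operators such as the OX crossover in section 2.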

3.2.5 mutation operator

Mutation in a genetic algorithm replaces the gene values at some loci of an individual's chromosome string with other alleles at those loci, forming a new individual.

In terms of generating new individuals during a genetic algorithm run, crossover is the main mechanism and determines the algorithm's global search ability. Mutation is only an auxiliary mechanism, but it is still an essential step and determines the algorithm's local search ability. Together, crossover and mutation carry out both the global and the local search of the search space, giving the genetic algorithm good search performance on optimization problems.

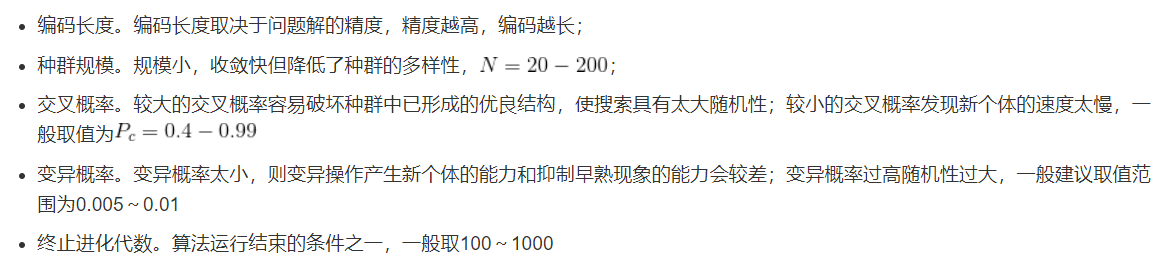
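Two common mutation operators are sketched below in Python: bit-flip mutation for binary coding (the other allele of a binary locus is simply 1 - g) and swap mutation for permutation coding, which is the same idea as the gene-exchange mutation in the MATLAB code of section 2. The function names are illustrative.

```python
import random


def bit_flip(bits, pm, rng=random):
    """Binary coding: flip each gene to its other allele with probability pm."""
    return [1 - g if rng.random() < pm else g for g in bits]


def swap_mutation(perm, rng=random):
    """Permutation coding: exchange the genes at two distinct random positions."""
    child = list(perm)
    i, j = rng.sample(range(len(child)), 2)
    child[i], child[j] = child[j], child[i]
    return child
```

Unlike the MATLAB version, which draws the two positions independently and can therefore occasionally leave the individual unchanged, this sketch always picks two different positions.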

3.2.6 operating parameters

4 basic principle of genetic algorithm

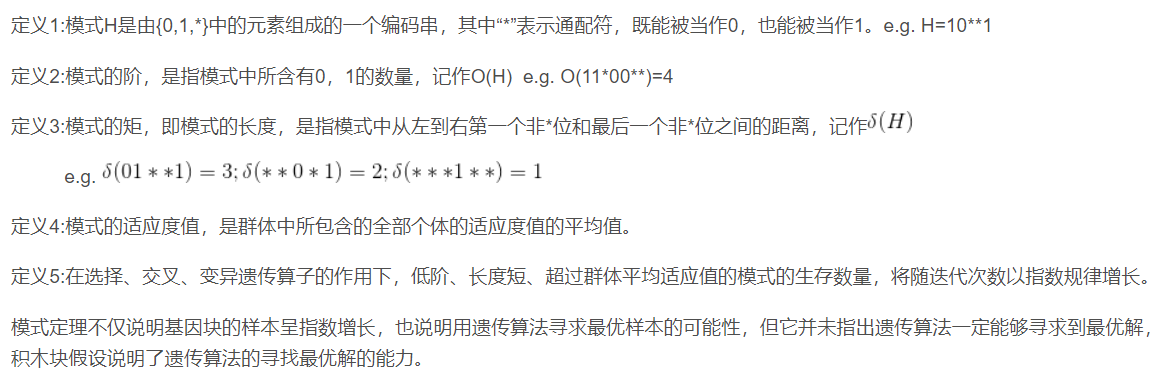

4.1 schema theorem
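For reference, Holland's schema theorem is usually stated as follows for the SGA with fitness-proportionate selection, single-point crossover and bit-flip mutation. A schema H is a template over {0, 1, *}, where * matches either bit:

```latex
m(H,\,t+1) \;\ge\; m(H,\,t)\,\frac{f(H)}{\bar{f}}
\left[\,1 - p_c\,\frac{\delta(H)}{l-1} - o(H)\,p_m \right]
```

Here m(H, t) is the number of individuals matching H in generation t, f(H) is their average fitness, \bar{f} is the average fitness of the population, l is the string length, δ(H) is the defining length (distance between the first and last fixed positions), o(H) is the order (number of fixed positions), and p_c and p_m are the crossover and mutation probabilities. Schemata with above-average fitness, low order and short defining length therefore receive increasing numbers of samples in successive generations.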

4.2 building block hypothesis

Schemata with low order, short defining length and fitness above the average fitness of the population are called gene blocks or building blocks.
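To make "order" and "defining length" concrete, here is a small Python sketch using the usual notation in which a schema is a string over 0, 1 and the wildcard `*`; the helper names are illustrative.

```python
def order(schema):
    """o(H): number of fixed (non-'*') positions."""
    return sum(ch != "*" for ch in schema)


def defining_length(schema):
    """delta(H): distance between the first and last fixed positions (0 if fewer than two)."""
    fixed = [k for k, ch in enumerate(schema) if ch != "*"]
    return fixed[-1] - fixed[0] if fixed else 0


def matches(schema, bits):
    """True if the bit string is an instance of the schema."""
    return len(schema) == len(bits) and all(
        s == "*" or s == b for s, b in zip(schema, bits)
    )
```

For example, "1**0*" has order 2 and defining length 3, and "11100" is one of its instances.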

Building block hypothesis: building blocks can be spliced together by genetic operators such as selection, crossover and mutation to form individual coding strings with higher fitness.

The building block hypothesis explains the basic idea behind solving problems with genetic algorithms: better solutions can be produced by directly splicing building blocks together.

2, Source code

%Genetic algorithm for the vehicle routing problem (VRP): MATLAB implementation

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%tic%timer

clear;

clc

W=80; %Load capacity of each vehicle

Citynum=50; %Number of customers

Stornum=4;%Number of warehouses

%C: the second and third columns are the point coordinates and the fourth column is the customer demand; points 51, 52, 53 and 54 are the four warehouses (depots)

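load('p01-n50-S4-w80.mat'); %Load test data: n = number of customers, S = number of warehouses, w = vehicle load capacity

%Load only one instance at a time (each load replaces the previously loaded data), and set W, Citynum and Stornum above to match it:

%load('p02-n50-S4-w160.mat');

%load('p04-n100-S2-w100.mat');

%load('p05-n100-S2-w200.mat');

%load('p06-n100-S3-w100.mat');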

G=100;%Population size

[dislist,Clist]=vrp(C);%dislist is the distance matrix; Clist holds the point coordinates and customer demands

L=[];%Route length (fitness) of each individual

for i=1:G

Parent(i,:)=randperm(Citynum);%Random customer visiting order

L(i,1)=curlist(Citynum,Clist(:,4),W,Parent(i,:),Stornum,dislist);

end

Pc=0.8;%Crossover probability

Pm=0.3;%Mutation probability

species=Parent;%population

children=[];%Offspring

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%

disp('Running for a long time, please wait.........')

g=1;%Number of generations (with g=1 only a single iteration is run)

for generation=1:g

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

tic

fprintf('\n Iteration %d of %d in progress..........',generation,g);

Parent=species;%Children become parents

children=[];%Offspring

Lp=L;

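%Select crossover parents

[n m]=size(Parent);%n = population size, m = chromosome length (used by crossover.m)

%Crossover: try every pair of distinct individuals

for i=1:n

for j=i+1:n %j=i would cross an individual with itself and only produce copies

if rand<Pc

crossover %Runs the crossover.m script (OX crossover) on Parent(i,:) and Parent(j,:)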

end

end

end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[n m]=size(Parent);

for i=1:n

if rand<Pm

parent=Parent(i,:);%Individual to mutate

X=floor(rand*Citynum)+1;

Y=floor(rand*Citynum)+1;

Z=parent(X);

parent(X)=parent(Y);

parent(Y)=Z; %Swap mutation: exchange the genes at positions X and Y

children=[children;parent];

end

end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%Calculate offspring fitness (path length); this step takes a long time

[m n]=size(children);

Lc=zeros(m,1);%Offspring fitness

for i=1:m

Lc(i,1)=curlist(Citynum,Clist(:,4),W,children(i,:),Stornum,dislist);

end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%If there are more than G offspring, keep only the G best (shortest) ones

[m n]=size(children);

if(m>G)

[m n]=sort(Lc);

children=children(n(1:G),:);

Lc=Lc(n(1:G));

end

%Elitist replacement: merge offspring and parents, then keep the G best

species=[children;Parent];

L=[Lc;Lp];

[m n]=sort(L);

species=species(n(1:G),:); %Next generation

L=L(n(1:G));

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%Apply Opt local optimization to the best individual

%First assign warehouses to the best individual's customer sequence

temp=initialStor(Citynum,Clist(:,4),W,species(1,:),Stornum,dislist);%Route with warehouses inserted, e.g. [52 14 5 52 53 6 9 8 53......]

Rbest=temp;

L_best=L(1);

[m n]=size(temp);

start=1;

car=[];%Stores the Opt-optimized routes

i=2;

while (i<n+1)

if (temp(i)>Citynum)

cur=[];

cur=Opt(i-start,[1:i-start,1:i-start],dislist,temp(start:i-1),Citynum);

car=[car,[cur,cur(1)]];

start=i+1;

i=i+2;

else

i=i+1;

end

end

end %End of the generation loop

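%CalDist.m (separate function file; MATLAB R2014a does not allow functions inside scripts)

function F=CalDist(dislist,s,Citynum)%Total length of path s, skipping warehouse-to-warehouse steps

DistanV=0;%The initial distance is 0

n=size(s,2);%n is the number of columns of s; s is a row vector of point indices

for i=1:(n-1)

if ~(s(i)>Citynum && s(i+1)>Citynum)%Points above Citynum are warehouses; a warehouse-to-warehouse step separates two vehicle routes and adds no distance

DistanV=DistanV+dislist(s(i),s(i+1));

end

end

F=DistanV;

end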

%crossover.m (script called from the main loop): OX order crossover of Parent(i,:) and Parent(j,:)

P1=Parent(i,:);

P2=Parent(j,:);

%Select two cut points X<=Y, exchange the middle segments and repair duplicate genes

X=floor(rand*(m-2))+2;

Y=floor(rand*(m-2))+2;

if X>Y

Z=X;

X=Y;

Y=Z;

end

change1=P1(X:Y);

change2=P2(X:Y);

%Start the order crossover repair

%1. List each parent's genes starting after cut point Y (wrapping around)

p1=[P1(Y+1:end),P1(1:X-1),change1];

p2=[P2(Y+1:end),P2(1:X-1),change2];

%2.1 Delete from p1 the genes in P2's segment (k is used so the caller's loop index i is not overwritten)

for k=1:length(change2)

p1(p1==change2(k))=[];

end

%2.2 Delete from p2 the genes in P1's segment

for k=1:length(change1)

p2(p2==change1(k))=[];

end

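%3.1 Repair P1: P2's segment stays at positions X..Y, the other positions are filled in P1's order

P1=[p1(m-Y+1:end),change2,p1(1:m-Y)];

%3.2 Repair P2: P1's segment stays at positions X..Y, the other positions are filled in P2's order

P2=[p2(m-Y+1:end),change1,p2(1:m-Y)];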

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%Join offspring

children=[children;P1;P2];
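The same order crossover (OX) can be written compactly in Python. This sketch follows the MATLAB script: child 1 keeps P2's segment at positions x..y and fills the remaining positions, starting after y and wrapping around, with P1's genes in the order they appear in P1 starting after y (child 2 is symmetric). The optional `cuts` argument (0-based, inclusive) is an addition for testing, and cut points are drawn from the whole range rather than the MATLAB range 2..m-1.

```python
import random


def order_crossover(p1, p2, rng=random, cuts=None):
    """OX crossover for permutations; returns two child permutations."""
    m = len(p1)
    x, y = sorted(cuts) if cuts is not None else sorted(rng.sample(range(m), 2))

    def make_child(segment_parent, order_parent):
        segment = segment_parent[x:y + 1]
        in_segment = set(segment)
        # order_parent read from position y+1, wrapping around, minus the segment genes
        rest = [g for g in order_parent[y + 1:] + order_parent[:y + 1]
                if g not in in_segment]
        child = [None] * m
        child[x:y + 1] = segment
        # fill positions y+1..m-1 first, then 0..x-1
        for pos, g in zip(list(range(y + 1, m)) + list(range(x)), rest):
            child[pos] = g
        return child

    return make_child(p2, p1), make_child(p1, p2)
```

Usage: `order_crossover([1, 2, 3, 4, 5, 6, 7, 8], [3, 7, 5, 1, 6, 8, 2, 4], cuts=(2, 4))` keeps the segments [5, 1, 6] and [3, 4, 5] in place, and both children remain valid customer permutations.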

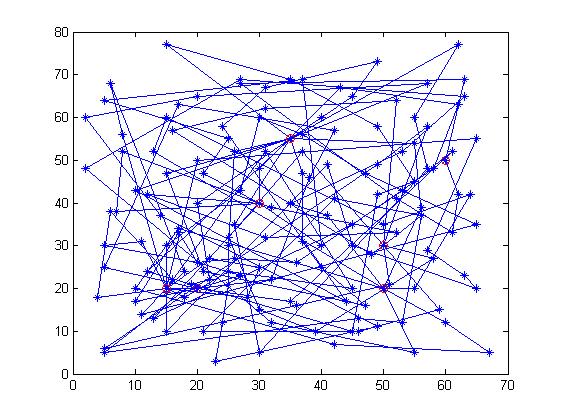

3, Operation results

4, Remarks

Version: MATLAB R2014a