Preface

2022 is the auspicious Year of the Tiger. Happy New Year! To mark it, I hope to bring you good luck with "ten thousand tigers" and "ten thousand blessings". May you be as vigorous as a tiger, may everything go smoothly, and may good fortune and wealth keep rolling in! With the greetings out of the way, let's turn to the technology~

1, Crawling tiger pictures

With a Python crawler, we can quickly download a large number of tiger and Fu pictures from Baidu Images. Here's how:

1. Website analysis

First, open Baidu Images, press F12 to open the developer console, search for "year of the tiger" (虎年), and click the picture:

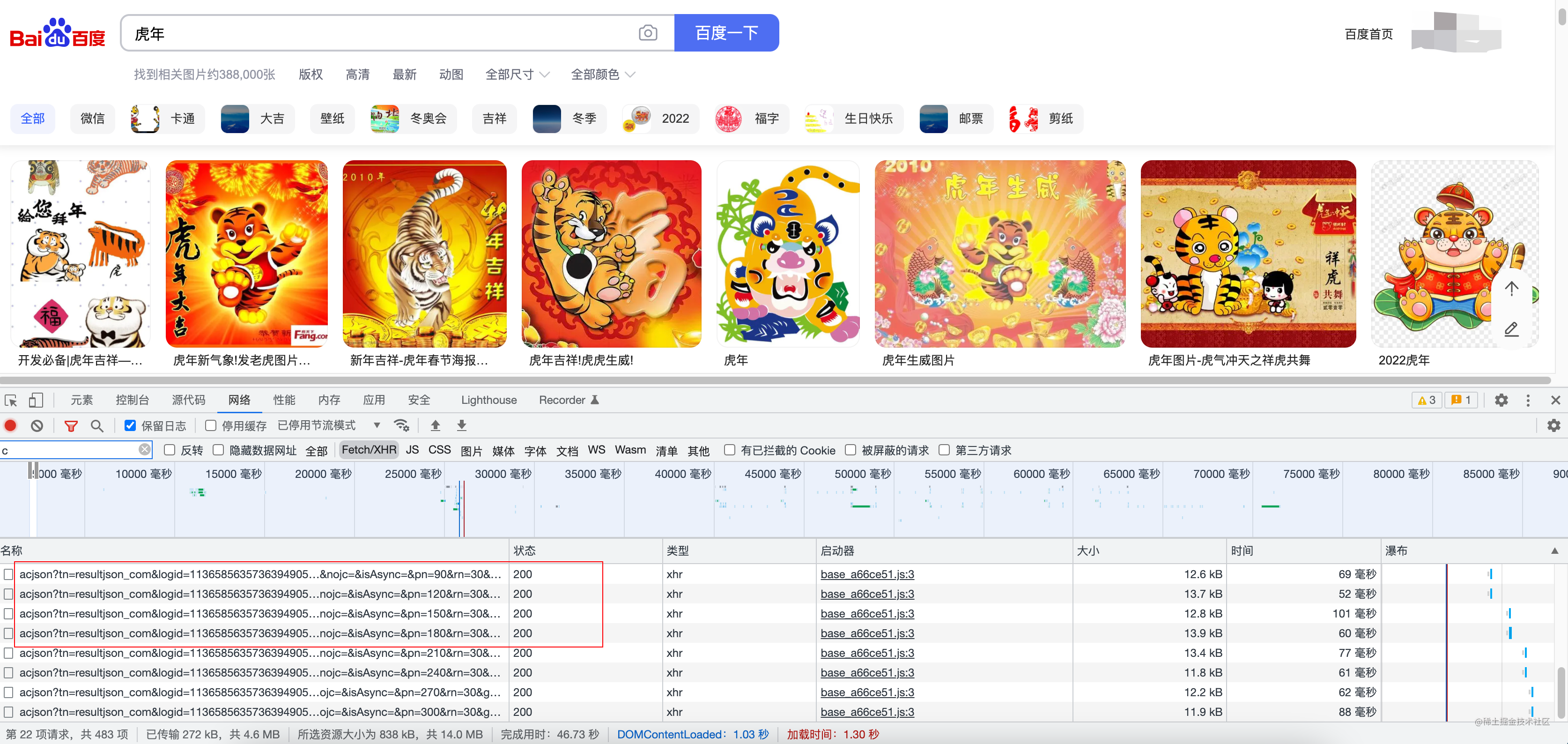

As we scroll down to load more pictures, the console shows that many request packets are being sent:

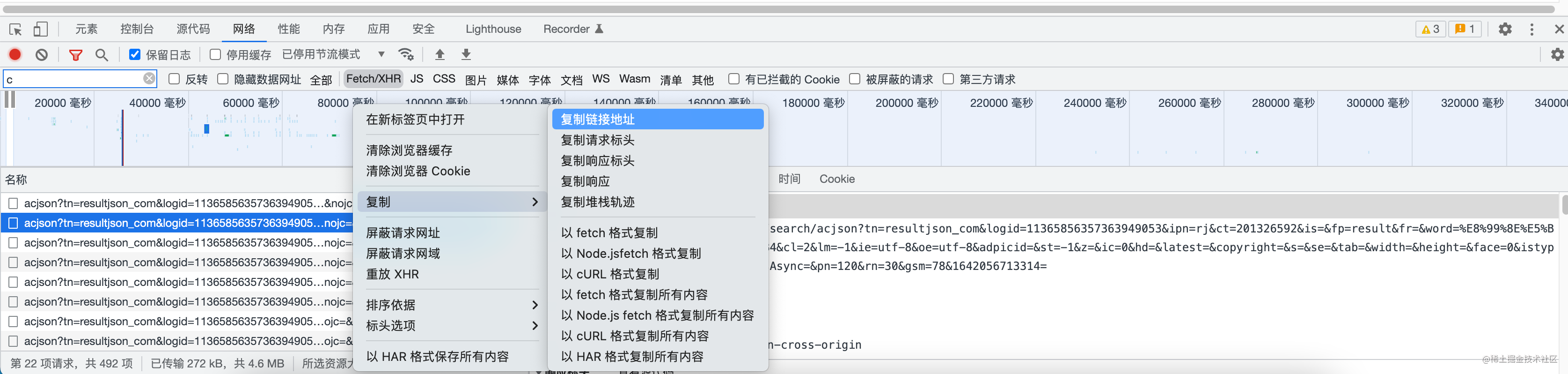

Select any one of them and copy its request URL:

https://image.baidu.com/search/acjson?tn=resultjson_com&logid=11365856357363949053&ipn=rj&ct=201326592&is=&fp=result&fr=&word=%E8%99%8E%E5%B9%B4&queryWord=%E8%99%8E%E5%B9%B4&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=-1&z=&ic=0&hd=&latest=&copyright=&s=&se=&tab=&width=&height=&face=0&istype=2&qc=&nc=1&expermode=&nojc=&isAsync=&pn=120&rn=30&gsm=78&1642056713314=

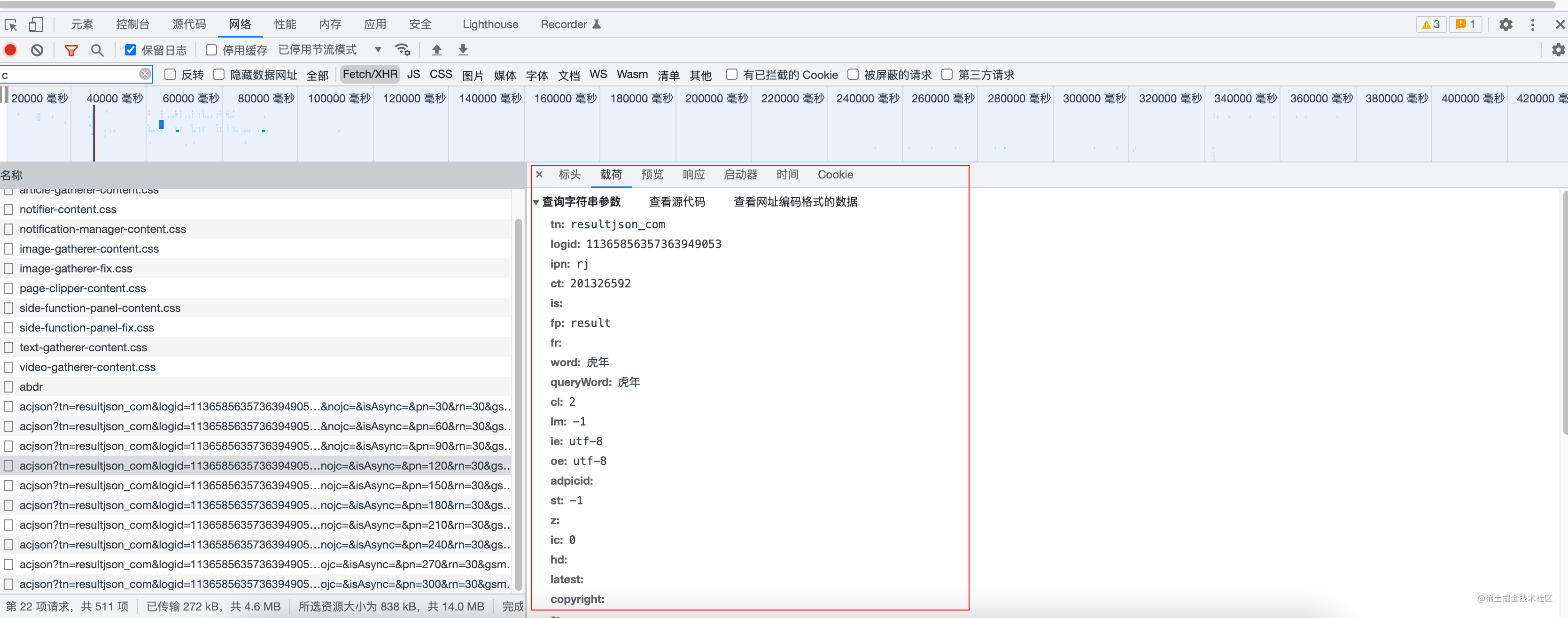

Click the request to see the parameters it carries, and copy them:

    tn: resultjson_com
    logid: 11365856357363949053
    ipn: rj
    ct: 201326592
    is:
    fp: result
    fr:
    word: 虎年
    queryWord: 虎年
    cl: 2
    lm: -1
    ie: utf-8
    oe: utf-8
    adpicid:
    st: -1
    z:
    ic: 0
    hd:
    latest:
    copyright:
    s:
    se:
    tab:
    width:
    height:
    face: 0
    istype: 2
    qc:
    nc: 1
    expermode:
    nojc:
    isAsync:
    pn: 120
    rn: 30
    gsm: 78
    1642056713314:

At this point we have the request URL and the parameters it carries, which is everything the crawler needs. The analysis is done!
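As a rough sketch of how the copied URL maps onto the parameter list, the standard library can split it into a base address and a dictionary. The URL below is a shortened copy of the captured one (only a few parameters are kept), and `url_to_params` is a hypothetical helper name used for illustration only.

```python
from urllib.parse import urlsplit, parse_qsl

# Shortened copy of the captured request URL (only a few parameters kept)
captured_url = (
    "https://image.baidu.com/search/acjson?tn=resultjson_com&ipn=rj"
    "&word=%E8%99%8E%E5%B9%B4&queryWord=%E8%99%8E%E5%B9%B4"
    "&ie=utf-8&oe=utf-8&adpicid=&pn=120&rn=30"
)


def url_to_params(url):
    """Split a URL into its base address and a dict of decoded query parameters."""
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}{parts.path}"
    # keep_blank_values=True keeps empty parameters such as "adpicid="
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    return base, params


base, params = url_to_params(captured_url)
print(base)
print(params["word"], params["pn"], params["rn"])
```

The percent-encoded `word` value decodes to the search keyword, and `pn` and `rn` are the two values that matter for paging: the offset of the first picture and the number of pictures per request.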

2. Crawler code

I won't walk through the coding step by step. Here is the main source code:

    import os

    import requests

    path = r"/Users/lpc/Downloads/baidu1/"

    # Create the download directory if it does not exist yet
    os.makedirs(path, exist_ok=True)

    page = int(input('Please enter how many pages to crawl:'))

    header = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 11_1_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36'
    }

    n = 0   # running counter, used as the file name of each picture
    pn = 0  # offset of the first picture to request; Baidu Images loads 30 pictures per scroll by default

    url = 'https://image.baidu.com/search/acjson'

    for m in range(page):
        param = {
            'tn': 'resultjson_com',
            'logid': '7680290037940858296',
            'ipn': 'rj',
            'ct': '201326592',
            'is': '',
            'fp': 'result',
            'queryWord': '虎年',  # "year of the tiger", as in the captured URL
            'cl': '2',
            'lm': '-1',
            'ie': 'utf-8',
            'oe': 'utf-8',
            'adpicid': '',
            'st': '-1',
            'z': '',
            'ic': '0',
            'hd': '1',
            'latest': '',
            'copyright': '',
            'word': '虎年',
            's': '',
            'se': '',
            'tab': '',
            'width': '',
            'height': '',
            'face': '0',
            'istype': '2',
            'qc': '',
            'nc': '1',
            'fr': '',
            'expermode': '',
            'nojc': '',
            'acjsonfr': 'click',
            'pn': pn,  # which picture to start with
            'rn': '30',
            'gsm': '3c',
            '1635752428843': '',  # timestamp key copied from the captured request
        }

        response = requests.get(url=url, headers=header, params=param)
        response.encoding = 'utf-8'
        page_json = response.json()
        print(page_json)

        # 'data' is a list of dictionaries, one per picture
        info_list = page_json['data']

        # The last dictionary in the list is empty, so skip entries without a thumbnail address
        img_path_list = [i['thumbURL'] for i in info_list if i.get('thumbURL')]

        # Download every picture; n becomes the file name
        for img_url in img_path_list:
            img_data = requests.get(url=img_url, headers=header).content
            img_path = os.path.join(path, str(n) + '.jpg')
            with open(img_path, 'wb') as fp:
                fp.write(img_data)
            n = n + 1

        pn += 30  # move on to the next batch of 30 pictures
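The script above reads the `data` list of the response and takes the `thumbURL` of each entry. As a minimal sketch of that step in isolation, the helper below collects the addresses while tolerating the empty trailing entry. `extract_thumb_urls` is a hypothetical name, and the sample payload is made up: it only mirrors the two keys the script relies on.

```python
def extract_thumb_urls(payload):
    """Return the thumbnail addresses found in a decoded acjson response."""
    urls = []
    for entry in payload.get("data", []):
        # The trailing entry is an empty dict, so .get() avoids a KeyError
        thumb = entry.get("thumbURL")
        if thumb:
            urls.append(thumb)
    return urls


# Made-up payload for illustration; real responses carry many more fields
sample_payload = {
    "data": [
        {"thumbURL": "https://example.com/a.jpg"},
        {"thumbURL": "https://example.com/b.jpg"},
        {},
    ]
}

print(extract_thumb_urls(sample_payload))
```

Filtering on the key rather than deleting the last element keeps working even if a response happens to contain no empty entry, or more than one.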

The method above does crawl Baidu Images, but repeating the analysis for every new search is tedious. So here is another script that only needs a keyword such as "year of the tiger" after it starts:

    # -*- coding:utf-8 -*-
    import os
    import random
    import re
    import time
    import urllib.parse

    import requests
    from PIL import Image  # Pillow, used to resize and crop the pictures

    imgDir = r"/Volumes/DBA/python/img/"
    os.makedirs(imgDir, exist_ok=True)  # make sure the output directory exists

    # Rotate between several request headers (Chrome, Firefox, Edge)
    # to reduce the chance of being blocked by anti-crawling checks
    headers = [
        {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36',
            'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2',
            'Connection': 'keep-alive'
        },
        {
            "User-Agent": 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0',
            'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2',
            'Connection': 'keep-alive'
        },
        {
            "User-Agent": 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.19041',
            'Accept-Language': 'zh-CN',
            'Connection': 'keep-alive'
        }
    ]

    picList = []  # thumbnail URLs collected so far

    keyword = input("Please enter a keyword to search for:")
    kw = urllib.parse.quote(keyword)  # percent-encode the keyword for use in the URL


    # Fetch one batch (30 results) of thumbnail URLs from Baidu Images and append them to picList
    def getPicList(kw, n):
        global picList
        weburl = r"https://image.baidu.com/search/acjson?tn=resultjson_com&logid=11601692320226504094&ipn=rj&ct=201326592&is=&fp=result&queryWord={kw}&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=&z=&ic=&hd=&latest=&copyright=&word={kw}&s=&se=&tab=&width=&height=&face=&istype=&qc=&nc=1&fr=&expermode=&force=&cg=girl&pn={n}&rn=30&gsm=1e&1611751343367=".format(
            kw=kw, n=n * 30)
        req = requests.get(url=weburl, headers=random.choice(headers))
        req.encoding = req.apparent_encoding  # avoid garbled Chinese text
        webJSON = req.text
        imgurlReg = '"thumbURL":"(.*?)"'  # regular expression that captures each thumbnail URL
        picList = picList + re.findall(imgurlReg, webJSON, re.DOTALL | re.I)


    # Request up to 150 batches; if the search has fewer results, picList simply stops growing
    for i in range(150):
        getPicList(kw, i)

    for item in picList:
        hz = ".jpg"  # file suffix
        picName = str(int(time.time() * 1000))  # millisecond timestamp used as the file name

        # Request the picture
        imgReq = requests.get(url=item, headers=random.choice(headers))

        # Save the picture
        with open(imgDir + picName + hz, "wb") as f:
            f.write(imgReq.content)

        # Open the picture with the Image module
        im = Image.open(imgDir + picName + hz)
        bili = im.width / im.height  # width-to-height ratio, used to scale the picture

        # Resize the picture so that its shorter side is 50 pixels
        if bili >= 1:
            newIm = im.resize((round(bili * 50), 50))
        else:
            newIm = im.resize((50, round(50 * im.height / im.width)))

        # Crop the top-left 50 x 50 region
        clip = newIm.crop((0, 0, 50, 50))
        clip.convert("RGB").save(imgDir + picName + hz)  # overwrite the file with the cropped picture
        print(picName + hz + " Processing completed")
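The resize step in the script scales each picture so that its shorter side becomes 50 pixels before the 50 x 50 crop. The arithmetic can be checked on its own without downloading anything. The sketch below is only an illustration: `thumb_size` is a hypothetical helper name, and it reproduces the size calculation, not the Pillow calls.

```python
def thumb_size(width, height, side=50):
    """Size to resize to so that the shorter edge equals `side`."""
    ratio = width / height
    if ratio >= 1:
        # Landscape or square: height becomes `side`, width scales with the ratio
        return (round(ratio * side), side)
    # Portrait: width becomes `side`, height scales up accordingly
    return (side, round(side * height / width))


print(thumb_size(200, 100))  # landscape picture
print(thumb_size(100, 200))  # portrait picture
print(thumb_size(80, 80))    # square picture
```

Because the shorter side is always exactly 50 after resizing, the crop box `(0, 0, 50, 50)` never reaches outside the picture.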

To demonstrate: run the script, enter "year of the tiger", press Enter, and wait for the download to finish.

That is all the code for crawling Baidu Images. Once the "Fu" and "tiger" pictures have both been crawled, we can start building the photo mosaic!

2, Photo mosaic from ten thousand pictures

Building the mosaic is simple. I covered it in an earlier article, "Python crawls cat pictures in batches to realize thousand image imaging", which you can refer to!
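The earlier article is not reproduced here. As a rough sketch of the general idea behind a photo mosaic (not necessarily the method used in that article), each cell of the target picture is replaced by the crawled thumbnail whose average colour is closest to it. All function names and pixel data below are made up for illustration, and pictures are represented as plain lists of RGB tuples to keep the example dependency-free.

```python
def average_color(pixels):
    """Mean (R, G, B) of a list of RGB tuples."""
    count = len(pixels)
    return tuple(sum(p[c] for p in pixels) / count for c in range(3))


def closest_tile(target, tile_colors):
    """Index of the tile whose average colour is nearest to `target`."""
    def distance(color):
        # Squared Euclidean distance in RGB space
        return sum((a - b) ** 2 for a, b in zip(color, target))
    return min(range(len(tile_colors)), key=lambda i: distance(tile_colors[i]))


# Made-up data: three tiny "tiles" and two cells of a target picture
tiles = [
    [(250, 10, 10), (240, 20, 20)],      # mostly red
    [(10, 10, 10), (30, 30, 30)],        # dark
    [(250, 250, 250), (230, 230, 230)],  # light
]
tile_colors = [average_color(t) for t in tiles]
target_cells = [(255, 0, 0), (0, 0, 0)]

# For each target cell, pick the index of the best-matching tile
layout = [closest_tile(cell, tile_colors) for cell in target_cells]
print(layout)
```

With real pictures, the 50 x 50 thumbnails produced by the crawler would play the role of the tiles, and the target would be the tiger or Fu picture divided into a grid.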

Result

Here are the mosaics we assembled:

3, Writing the Fu character with SQL

The SQL is as follows:

create table LuciferFu(fu_line varchar2(128));

insert into LuciferFu values('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('2222222222222222222222222222222222222222222222222222222222222222222222222/[[ .\2222222222222222222222222222222222'),

('2222222222222222222222222222222222222222222222222222222222222222222/[. ,2222222222222222222222222222222'),

('222222222222222222222222/2[222222222222222222222222222222222222` \22222222222222222222222222222'),

('222222222222222222222222^ ,\2222222222222222[` =2222222222222222222222222222'),

('2222222222222222222222222 \22222222/2[ \2222222222222222222222222222'),

('2222222222222222222222222 22222\ ]22222222222222222222222222222'),

('2222222222222222222222222^ \2222 .]/22222222222222222222222222222222'),

('22222222222222222222222222 2222` .,,]]/2222222222222222222222222222222222222222'),

('222222222222222222222222222 \2222] ,/2/,/222222222222222222222222222222222222222222222222222222'),

('2222222222222222222222222222 .22222222`/222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222. ,\2222\`/222222222222222222[. ..[22222222222222222222222222222'),

('2222222222222222222222222222` =222^ 2222222222222[` ,2222222222222222222222222222'),

('22222222222222222222222222^ ]2\]` =2` 2222222/` 2222222222222222222222222222'),

('222222222222222222222222/ /22222222\` ,]222` ,2[` =2222222222222222222222222222'),

('2222222222222222222222/ /2222222222222222222222^ ,22222222222222222222222222222222'),

('222222222222222222222^ ,/2222222[` ,[[[\2222222. .]/22^ /222222222222222222222222222222222'),

('2222222222222222222/ ,/222222/` ,22222 ,]]]222222` =2222222222222222222222222222222222'),

('222222222222222222`,2222222/[ /222^ ,22222222/` [2222222222222222222222222222222222'),

('2222222222222222^ /22222222^ /222/ 222222[ ,2222222222222222222222222222222'),

('22222222222222/ ,222222222` 22^ ,22/ /2222222222222222222222222222222'),

('2222222222222/./2222222` ,22^ ]/22222222222222222222222222222222'),

('222222222222./222222[ /22` =^ ,222222222222222222222222222222222222'),

('2222222222/,22222[ ,,/ .2^ ,]/22222222222222222222222222222222222222222'),

('22222222/./22[. ` 22^ .]22222222222222222222/[[[[[\2222222222222222222222'),

('22222/` 222^ 2222222222222222[[. [\22222222222222222'),

('22222 /2 22222. =22222222[` ,222222222222222'),

('2222^ /22/ =22222\ =2[` ,2222222222222'),

('2222\ ,]22222 2222222` .222222222222'),

('222222\]]]]`]]\/222222222` ,222222222 ]/222\/2222\2/]` ,22222222222'),

('222222222222222222222222` /[22222222/ 2222222222222222\ /22222222222'),

('22222222222222222222222^ ,` ,22222^ . =2/`,`[,[[\222222\ \22222222222'),

('2222222222222222222222^ ,/2^ ,222]. .]]222^ . ,222 222222222222'),

('222222222222222222222/. /222^ ,2222222222222222 =2^ .222222222222'),

('222222222222222222222. 22222. =222222222222/` =22. ,222222222222'),

('22222222222222222222^ .222222. .22222222/[ ,`=22 =222222222222'),

('2222222222222222222^.. .. . .\22222^ ,2222[. ]2222^. .2222222222222'),

('22222222222222222/..... ..2222222`. . . ,^ . . ]\/222222. =2222222222222'),

('22222222222222222........... . ....22222222... . .. ,` .. . ... .. . .. .222222222^ ... .22222222222222'),

('2222222222222222`................ .\2222222^ ... ............,/22^...... ../222222222222.............=22222222222222'),

('22222222222222022`..................,\2222222`.........,\]]22222`........../222222222222^.............222222222222222'),

('2222222222222222^...................222222222..........=2222/.,`.....................\2.............=222222222222222'),

('222222222222222222.]22222..........,2222222222..........2/]222......................................2222222222222222'),

('2222222222222222222222222..........,2222222222^..........`.........................................,2222222222222222'),

('2222222222222222222222222^.........=22222222222^...................................................22222222222222222'),

('2222222222222222222222222^.........=222222222222\.................................................222222222222222222'),

('2222222222222222222222222^.*........=222222222222\...................,]]]/22222\...............,22222222222222222222'),

('2222222222222222222222222^...*....../2222222222222\.......]/22222222222222222222\...*..*.....,2222222222222222222222'),

('22222222222222222222222222......**]/2222222222222222\**,]2222222222222222222222222`.......,2222222222222222222222222'),

('22222222222222222222222222\/2\22222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222'),

('22222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222222');

Query the table to see the result:

select * from LuciferFu;
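To try the idea without a database server, one option is Python's built-in `sqlite3` module. This is an assumption made for illustration, not the database the SQL above was written for; SQLite accepts the `varchar2(128)` declaration because it does not enforce column type names. The three rows below are a made-up placeholder standing in for the full picture.

```python
import sqlite3

# Placeholder rows standing in for the full picture (made up for illustration)
art_lines = [
    "22222222",
    "222/  \\22",
    "22222222",
]

conn = sqlite3.connect(":memory:")
conn.execute("create table LuciferFu(fu_line varchar2(128))")
conn.executemany("insert into LuciferFu values (?)", [(line,) for line in art_lines])

# Order by rowid so the lines come back in insertion order
rows = [row[0] for row in conn.execute("select fu_line from LuciferFu order by rowid")]
print("\n".join(rows))
conn.close()
```

The explicit `order by rowid` matters for this kind of picture: a plain `select *` carries no ordering guarantee, and the character only appears if the lines are printed in the order they were inserted.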

If you are interested, try it yourself and have fun in the Year of the Tiger!