- preface

The main functions of our mobile camera SDK are now essentially complete, and the product has been online for a long time. Looking back on the development cycle, a beauty camera involves many knowledge points. The basic functions are relatively simple to implement, and there are many ready-made open-source projects online. However, building a beauty camera whose performance is up to standard, whose business features meet requirements, and whose code is easy to maintain is not easy. The purpose of this series of blog posts is to summarize the technical points and difficulties I encountered while developing a beauty camera. Most of the solutions can be found online, but I also add my own practical results.

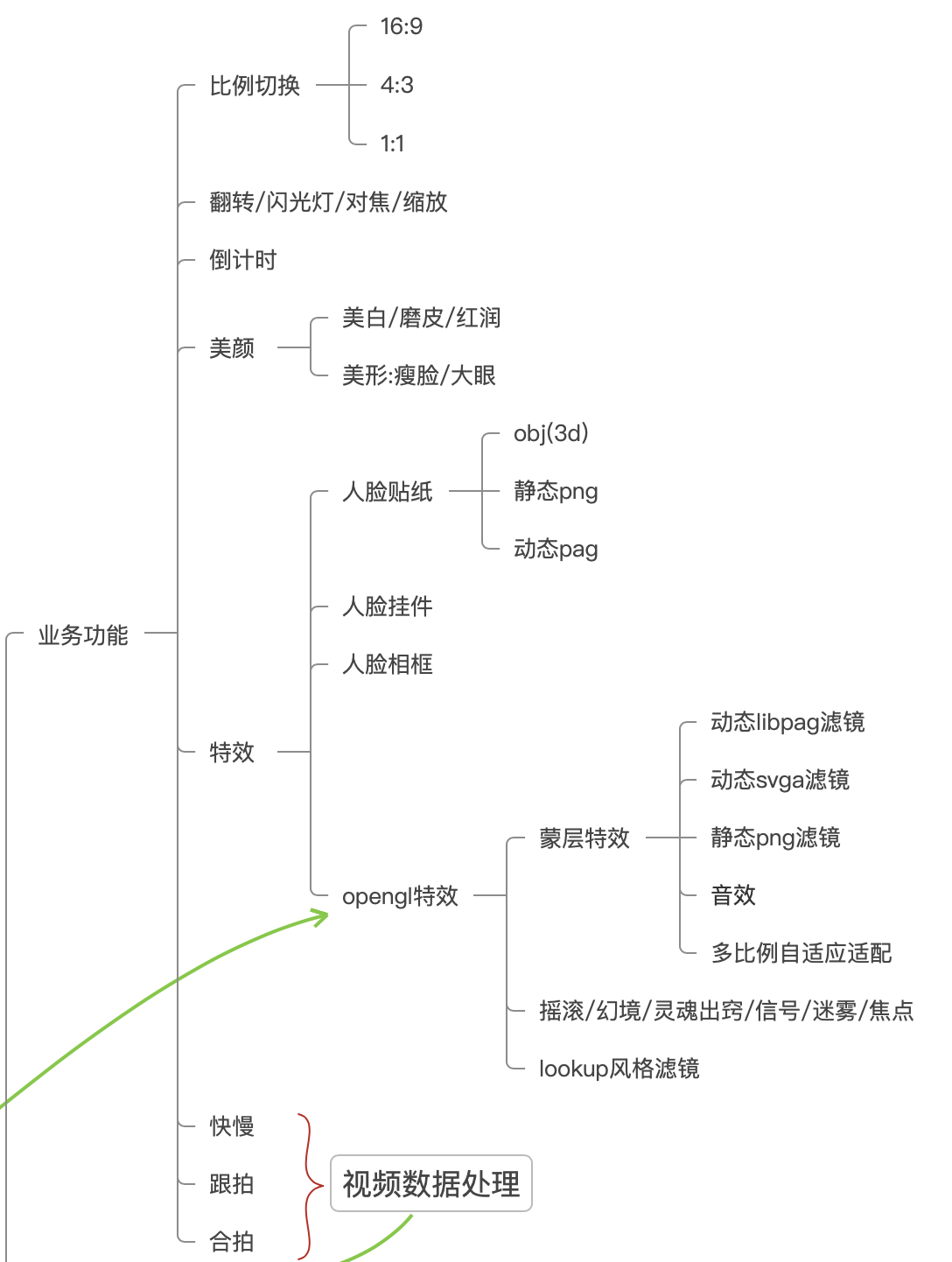

- Beauty camera business functions

The implemented functions are shown in the figure above. The main technical framework is based on GPUImage.

From time to time I will write posts analyzing the more valuable technical points among these functions. Readers are welcome to leave comments about the technical points they would like to see analyzed.

- The problem

GPUImage was originally an iOS framework; the Android version was implemented by another team with reference to the iOS one, and it is no longer maintained. The first problem we hit while integrating the camera was that GPUImage uploads camera textures relatively slowly on mid- and low-end devices, far from the 720p at 30 fps we require, which causes severe frame drops.

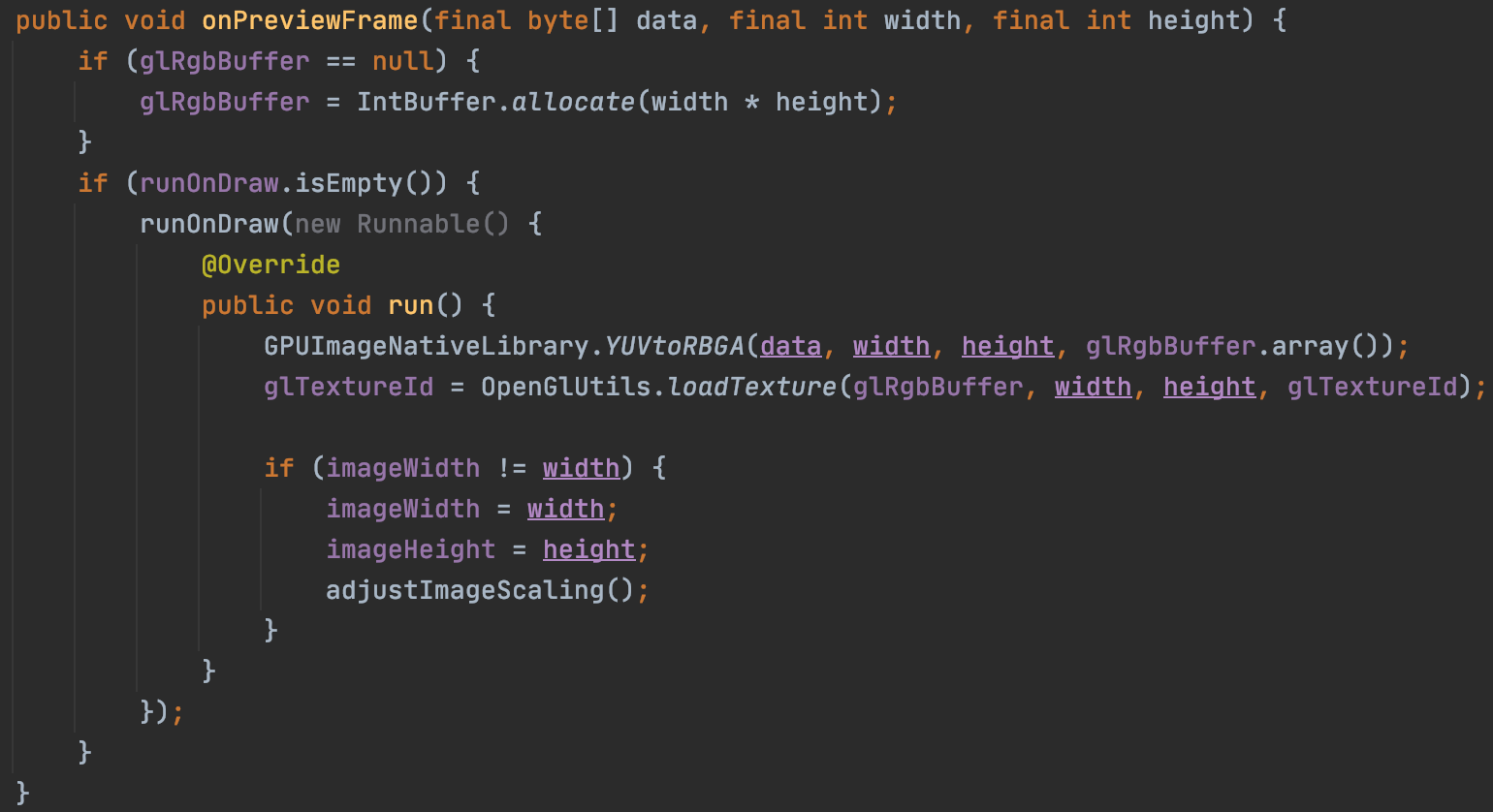

In the GPUImageRenderer file, we found the onPreviewFrame function

This function converts the camera data into texture data and stores the resulting texture in glTextureId for the GPU to use. It does two things:

- Convert camera data to rgba data

- Upload the RGBA data to the texture glTextureId

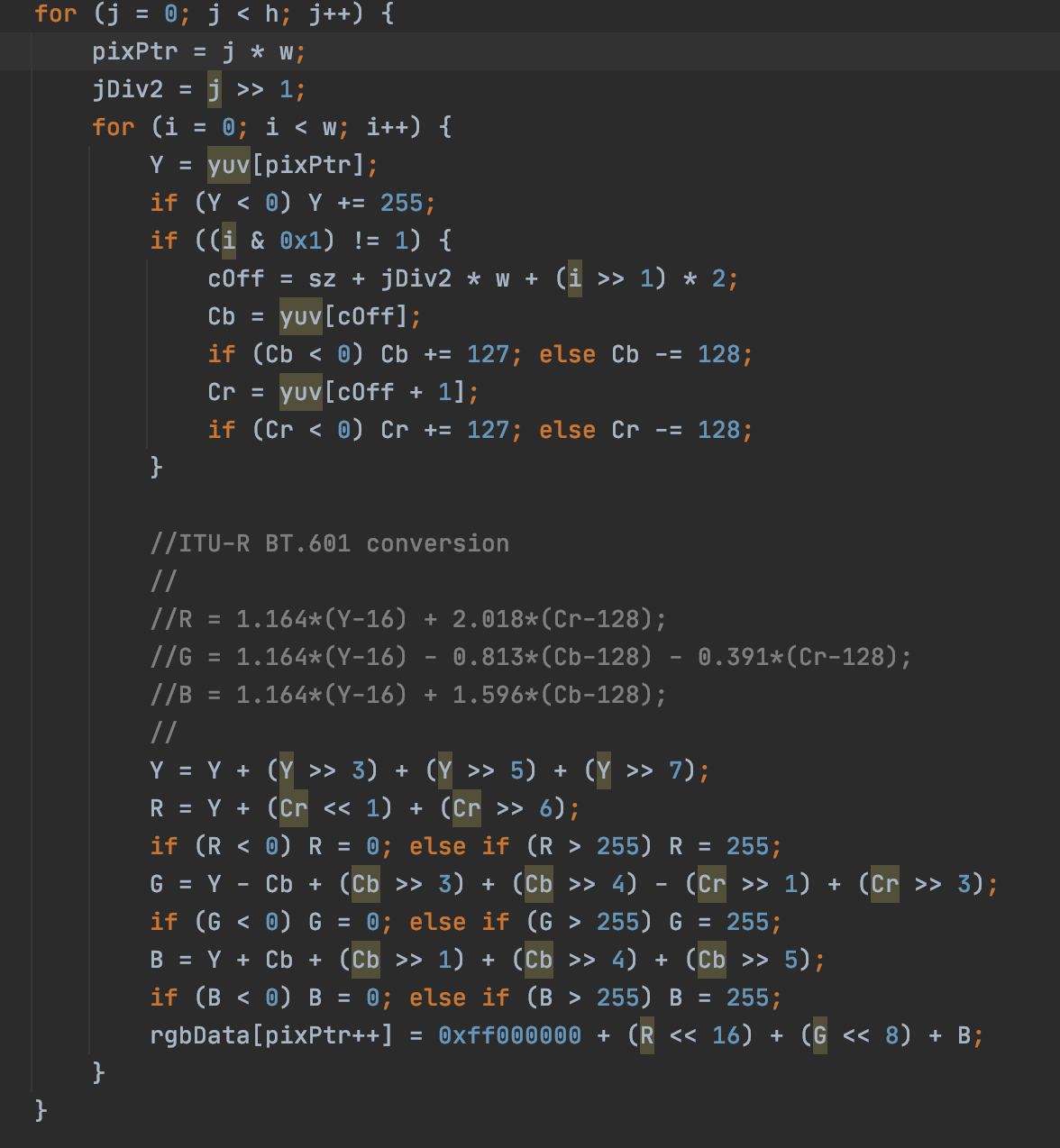

Following the GPUImageNativeLibrary_YUVtoRBGA function further, we find that GPUImage converts the NV21 data into RGBA data on the CPU, in native code called through JNI.

This function traverses the NV21 data and converts it pixel by pixel using the YUV-to-RGB conversion formula.
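For reference, the kind of per-pixel loop described above can be sketched in Java. This is not GPUImage's native code: it assumes BT.601 limited-range coefficients in 10-bit fixed point (scaled by 1024), a common hand-optimization that replaces floating-point math with integer multiplies and shifts. The class name `Nv21ToArgb` is hypothetical.

```java
final class Nv21ToArgb {
    private Nv21ToArgb() {}

    /** Converts an NV21 frame (Y plane followed by interleaved V/U bytes) to packed ARGB pixels. */
    static int[] convert(byte[] nv21, int width, int height) {
        int frameSize = width * height;
        int[] argb = new int[frameSize];
        for (int row = 0; row < height; row++) {
            // Each VU row serves two luma rows.
            int uvRowStart = frameSize + (row >> 1) * width;
            for (int col = 0; col < width; col++) {
                int y = Math.max(0, (nv21[row * width + col] & 0xFF) - 16);
                // Each V/U pair serves two adjacent pixels; NV21 stores V before U.
                int uvIndex = uvRowStart + (col & ~1);
                int v = (nv21[uvIndex] & 0xFF) - 128;
                int u = (nv21[uvIndex + 1] & 0xFF) - 128;
                // BT.601 limited range, coefficients scaled by 1024:
                // 1.164 -> 1192, 1.596 -> 1634, 0.813 -> 833, 0.391 -> 400, 2.018 -> 2066
                int yScaled = 1192 * y;
                int r = clamp((yScaled + 1634 * v) >> 10);
                int g = clamp((yScaled - 833 * v - 400 * u) >> 10);
                int b = clamp((yScaled + 2066 * u) >> 10);
                argb[row * width + col] = 0xFF000000 | (r << 16) | (g << 8) | b;
            }
        }
        return argb;
    }

    private static int clamp(int c) {
        return c < 0 ? 0 : Math.min(c, 255);
    }
}
```

Even in fixed point, every output pixel costs several multiplies, three clamps and a pack, repeated 921,600 times for a single 1280x720 frame, which is why this step is so expensive on the CPU.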

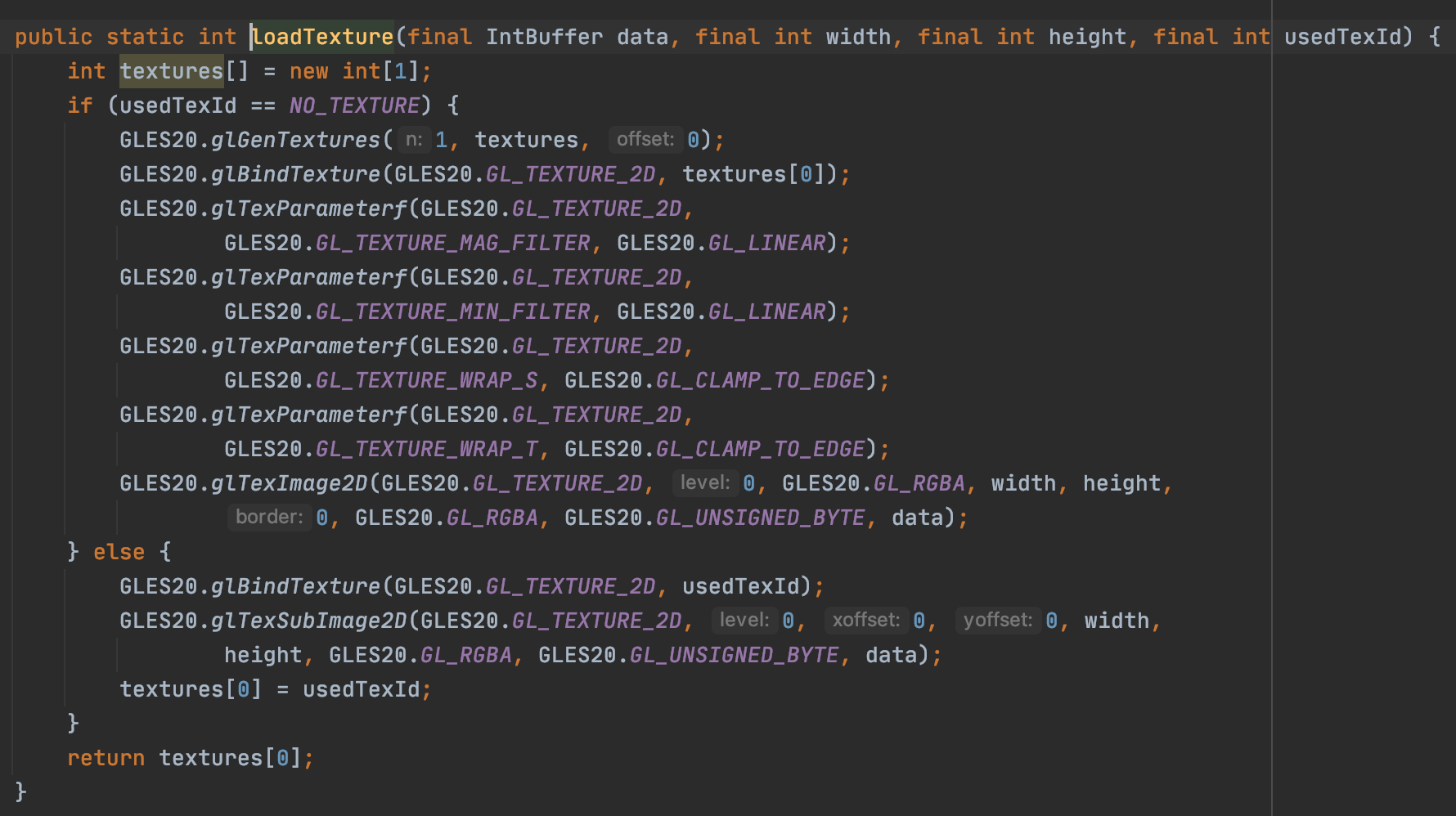

Returning to the calling function, we find that OpenGlUtils.loadTexture actually loads the RGBA data into the texture.

GPUImage creates the texture and fills it with glTexImage2D when no texture exists yet; if the texture already exists, it updates the data with glTexSubImage2D, which avoids reallocating texture storage on every frame.

There is a lot of room for optimization here, and I tried several optimizations during development. The first was to use libyuv for format conversion: libyuv is accelerated with SIMD instructions (the NEON instruction set on ARM), and the performance improvement is very noticeable.

- analysis

We now know the overall flow of the GPU texture upload and its two performance bottlenecks: converting the NV21 data to RGBA, and uploading the RGBA data to the texture.
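To put the two bottlenecks in numbers, the sketch below computes per-frame and per-second data volumes, assuming 720p means a 1280x720 preview (the class name `UploadBudget` is hypothetical). At 30 fps, each frame must be converted, uploaded and rendered within roughly 33 ms.

```java
final class UploadBudget {
    private UploadBudget() {}

    /** RGBA: 4 bytes per pixel. */
    static long rgbaBytesPerFrame(int width, int height) {
        return (long) width * height * 4;
    }

    /** NV21: full-resolution Y plane plus a half-size interleaved VU plane, i.e. 1.5 bytes per pixel. */
    static long nv21BytesPerFrame(int width, int height) {
        return (long) width * height * 3 / 2;
    }

    static long bytesPerSecond(long bytesPerFrame, int fps) {
        return bytesPerFrame * fps;
    }

    /** Time available per frame, in milliseconds, to sustain the target frame rate. */
    static double frameBudgetMillis(int fps) {
        return 1000.0 / fps;
    }
}
```

For 1280x720, an RGBA frame is 3,686,400 bytes (about 110.6 MB/s at 30 fps), while the same frame in NV21 is 1,382,400 bytes (about 41.5 MB/s).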

- nv21 to rgba

Converting the color space pixel by pixel with a formula is inevitably the least efficient approach. It consumes a lot of CPU, and the cost grows with the image size, so the first reaction is to optimize the algorithm. The conventional approach is SIMD acceleration, which on Android means ARM NEON. We can hand-optimize the loop above to reduce its cost, but a better solution is to integrate libyuv directly for the conversion, which greatly improves efficiency, especially at high resolutions.

- rgba texture upload

Texture upload is a classic OpenGL performance problem. Uploading RGBA data directly makes glTexSubImage2D block the CPU for a long time. The OpenGL ES 3.0 Programming Guide recommends pixel buffer objects (PBOs) for refreshing large amounts of dynamic texture data. A PBO is a buffer mapped between system memory and GPU memory; the copy into the texture is performed by DMA rather than by the CPU, which saves a lot of CPU time. To get the best performance, PBOs should be combined with proper alignment and double buffering. PBO texture uploading will be analyzed in a later post.
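The double-buffering scheme mentioned above alternates PBOs so that the CPU fills one while the GPU copies from another. The sketch below models only the index bookkeeping; the actual GLES30 calls (glBindBuffer, glMapBufferRange, glTexSubImage2D) are omitted, and the class name `PboPingPong` is hypothetical.

```java
final class PboPingPong {
    private final int bufferCount;
    private long frame = 0;

    PboPingPong(int bufferCount) {
        if (bufferCount < 2) {
            throw new IllegalArgumentException("double buffering needs at least two PBOs");
        }
        this.bufferCount = bufferCount;
    }

    /** PBO the GPU copies into the texture this frame (it was filled during the previous frame). */
    int uploadIndex() {
        return (int) (frame % bufferCount);
    }

    /** PBO the CPU maps and fills with the new camera frame this frame. */
    int writeIndex() {
        return (int) ((frame + 1) % bufferCount);
    }

    void nextFrame() {
        frame++;
    }
}
```

The trade-off is one frame of latency: the texture updated in frame n shows the data written in frame n-1, and the very first upload has no data yet.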

After addressing these two problems, performance did improve a lot in practice: most mid-range devices could reach 720p at 30 fps, but low-end devices still could not. Further research and optimization revealed several other performance bottlenecks:

- Upload nv21 data to textures directly

This has several advantages. First, it avoids the CPU color conversion and moves that work to the GPU, which is naturally suited to per-pixel data conversion. Second, it greatly reduces the amount of data transferred between system memory and GPU memory: RGBA uses 32 bits per pixel while NV21 uses 12 bits per pixel, so the transfer volume drops by a factor of more than 2.5.

- Overdraw problem

Measurements showed that the layout around the GPUImage view also has a large impact on performance. With overdraw debugging enabled, we found that setting a background color on the layout or stacking views in layers significantly hurts performance on low-end devices. Each project should therefore check its own layouts for overdraw; for details, see the overdraw checking method.

- GPU filter count bottleneck

As mentioned earlier, the data conversion is moved to the GPU. Real projects may apply many filters, so the GPU itself can become a bottleneck. For this we designed off-screen rendering with FBOs and multithreading with shared textures (to be analyzed further in a later post).

- Solution

Back to our topic: the best way to upload camera preview data is to upload the NV21 data directly. How? We can load the data into the GPU as luminance-channel textures (GL_LUMINANCE for the Y plane and GL_LUMINANCE_ALPHA for the interleaved VU plane), then write GLSL code to convert the NV21 data into RGBA.
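To see why the shader later reads chroma from the .r and .a channels, the sketch below models on the CPU what each texture holds when an NV21 frame is uploaded as a GL_LUMINANCE Y texture (width x height) and a GL_LUMINANCE_ALPHA VU texture (width/2 x height/2). When a GL_LUMINANCE_ALPHA texel is sampled, its first byte appears in r, g and b and its second byte in a, so for NV21 (V stored before U) .r is V and .a is U; NV12 is the reverse. It assumes even dimensions and tightly packed rows; the class and method names are hypothetical.

```java
final class Nv21TextureModel {
    private Nv21TextureModel() {}

    /** Value sampled from the Y texture at pixel (px, py): one byte per texel. */
    static int luma(byte[] nv21, int width, int px, int py) {
        return nv21[py * width + px] & 0xFF;
    }

    /**
     * {luminance, alpha} bytes of the VU texel covering pixel (px, py).
     * Each texel spans a 2x2 block of pixels and occupies two bytes.
     */
    static int[] chromaTexel(byte[] nv21, int width, int height, int px, int py) {
        int texX = px / 2;
        int texY = py / 2;
        // The VU plane starts after width*height luma bytes; each of its rows
        // holds width/2 texels of 2 bytes, i.e. width bytes.
        int offset = width * height + texY * width + texX * 2;
        int luminance = nv21[offset] & 0xFF;  // sampled as .r/.g/.b: V for NV21
        int alpha = nv21[offset + 1] & 0xFF;  // sampled as .a: U for NV21
        return new int[] {luminance, alpha};
    }
}
```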

```java
public static int loadLuminanceTexture(final ByteBuffer byteBuffer, final int width, final int height,
                                       final int usedTexId, final int glFormat) {
    int[] textures = new int[1];
    // Camera planes are tightly packed; the default GL_UNPACK_ALIGNMENT of 4 would
    // misread rows whose byte width is not a multiple of 4.
    GLES20.glPixelStorei(GLES20.GL_UNPACK_ALIGNMENT, 1);
    if (usedTexId == NO_TEXTURE) {
        // First frame: create the texture and allocate its storage.
        GLES20.glGenTextures(1, textures, 0);
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textures[0]);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_LINEAR);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_CLAMP_TO_EDGE);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_CLAMP_TO_EDGE);
        // In OpenGL ES 2.0, internalformat must equal format (GL_LUMINANCE or GL_LUMINANCE_ALPHA).
        GLES20.glTexImage2D(GLES20.GL_TEXTURE_2D, 0, glFormat, width, height, 0,
                glFormat, GLES20.GL_UNSIGNED_BYTE, byteBuffer);
    } else {
        // Later frames: reuse the existing storage and only replace its contents.
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, usedTexId);
        GLES20.glTexSubImage2D(GLES20.GL_TEXTURE_2D, 0, 0, 0, width, height,
                glFormat, GLES20.GL_UNSIGNED_BYTE, byteBuffer);
        textures[0] = usedTexId;
    }
    return textures[0];
}
```

This helper wraps a simple upload: pass in one NV21 plane and specify its texture format.
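One detail worth checking for single-channel uploads: OpenGL ES assumes each row of client pixel data starts on a GL_UNPACK_ALIGNMENT boundary, which defaults to 4 bytes. Camera planes are tightly packed, so if a plane's row is not a multiple of 4 bytes the texture comes out skewed unless glPixelStorei(GL_UNPACK_ALIGNMENT, 1) is set before the upload. The hypothetical helper below computes the row stride GL assumes.

```java
final class UnpackAlignment {
    private UnpackAlignment() {}

    /** Row stride in bytes that GL assumes for a given unpack alignment (1, 2, 4 or 8). */
    static int assumedRowStride(int widthTexels, int bytesPerTexel, int alignment) {
        int rowBytes = widthTexels * bytesPerTexel;
        // Round the row size up to the next multiple of the alignment.
        return (rowBytes + alignment - 1) / alignment * alignment;
    }

    /** True if tightly packed rows would be misread with the default alignment of 4. */
    static boolean needsAlignmentOne(int widthTexels, int bytesPerTexel) {
        return assumedRowStride(widthTexels, bytesPerTexel, 4) != widthTexels * bytesPerTexel;
    }
}
```

For a 1280-pixel-wide preview neither plane is affected (1280 bytes per Y row, 640 x 2 = 1280 bytes per VU row), but a width of 638 gives 638-byte rows that GL would read as 640.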

```java
public void loadNV21ByteBuffer(ByteBuffer yByteBuffer, ByteBuffer uvByteBuffer, int width, int height) {
    if (isPush) {
        // The previous frame has not been uploaded yet: drop this one instead of queueing it.
        Log.w(TAG, "loadNV21ByteBuffer: previous frame still pending, dropping frame");
        return;
    }
    isPush = true;
    runOnDraw(new Runnable() {
        @Override
        public void run() {
            // Y plane: full resolution, one byte per texel.
            samplerYTexture = OpenGlUtils.loadLuminanceTexture(yByteBuffer, width, height,
                    samplerYTexture, GLES20.GL_LUMINANCE);
            // Interleaved VU plane: half resolution, two bytes (luminance + alpha) per texel.
            samplerUVTexture = OpenGlUtils.loadLuminanceTexture(uvByteBuffer, width / 2, height / 2,
                    samplerUVTexture, GLES20.GL_LUMINANCE_ALPHA);
            isPush = false;
        }
    });
}
```

Call it as above and the NV21 data is uploaded to the GPU. The next step is the conversion on the GPU.

```glsl
// Excerpt: the uniforms, yuv2rgb, I420ToRGB() and nv21ToRGB() are defined in the complete shader below.
void nv12ToRGB() {
    // NV12 stores U before V, so luminance (.r) holds U and alpha (.a) holds V.
    vec3 yuv = vec3(
        1.1643 * (texture2D(yTexture, textureCoordinate).r - 0.0625),
        texture2D(uvTexture, textureCoordinate).r - 0.5,
        texture2D(uvTexture, textureCoordinate).a - 0.5
    );
    vec3 rgb = yuv * yuv2rgb;
    gl_FragColor = vec4(rgb, 1.0);
}

void main() {
    if (blankMode == 0) {
        // No frame uploaded yet: output black.
        gl_FragColor = vec4(0.0, 0.0, 0.0, 1.0);
    } else {
        if (yuvType == 0) {
            I420ToRGB();
        } else if (yuvType == 1) {
            nv12ToRGB();
        } else {
            nv21ToRGB();
        }
    }
}
```

At this point the NV21 data has been converted into RGBA on the GPU. The resulting texture ID must be bound to the GPUImageFilterGroup as the input for the next filter.
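Because GLSL matrices are column-major, yuv * yuv2rgb (a row vector times a matrix) takes the dot product of yuv with each column of yuv2rgb. A CPU reference of that math, like the hypothetical sketch below, is handy for unit-testing expected colors. It copies the constants from the complete shader listed below and does not model the clamping to [0, 1] that happens when gl_FragColor is written to a fixed-point framebuffer.

```java
final class ShaderColorReference {
    private ShaderColorReference() {}

    // Columns of yuv2rgb exactly as written in the mat3 constructor (column-major order).
    private static final float[][] COLUMNS = {
        {1f, 0f, 1.2802f},
        {1f, -0.214821f, -0.380589f},
        {1f, 2.127982f, 0f},
    };

    /** Mirrors the shader's yuv * yuv2rgb for sampled texture values in [0, 1]; returns unclamped {r, g, b}. */
    static float[] toRgb(float yTex, float uTex, float vTex) {
        float[] yuv = {1.1643f * (yTex - 0.0625f), uTex - 0.5f, vTex - 0.5f};
        float[] rgb = new float[3];
        for (int j = 0; j < 3; j++) {
            // Row vector times matrix: component j is dot(yuv, column j).
            rgb[j] = yuv[0] * COLUMNS[j][0] + yuv[1] * COLUMNS[j][1] + yuv[2] * COLUMNS[j][2];
        }
        return rgb;
    }
}
```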

- Complete code

```java
package com.litalk.media.core.filter;

import android.content.Context;
import android.opengl.GLES20;
import android.util.Log;

import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.FloatBuffer;

import jp.co.cyberagent.android.gpuimage.filter.GPUImageFilter;
import jp.co.cyberagent.android.gpuimage.util.OpenGlUtils;

public class GPUImageYUVFilter extends GPUImageFilter {

    private static final String TAG = GPUImageYUVFilter.class.getSimpleName();

    // Built-in BT.601 limited-range shader, kept as a fallback if the asset shader cannot be read.
    // yuvType: 0 = I420, 1 = NV12, 2 = NV21.
    public static final String fragmentShaderCode = "precision mediump float;" +
            "uniform sampler2D yTexture;" +
            "uniform sampler2D uTexture;" +
            "uniform sampler2D vTexture;" +
            "uniform sampler2D uvTexture;" +
            "uniform int yuvType;" +
            "varying vec2 textureCoordinate;" +
            "uniform sampler2D inputImageTexture;" +
            "void main() {" +
            " vec4 c = vec4((texture2D(yTexture, textureCoordinate).r - 0.0627) * 1.1643);" +
            " vec4 U; vec4 V;" +
            " if (yuvType == 0){" +
            " U = vec4(texture2D(uTexture, textureCoordinate).r - 0.5);" +
            " V = vec4(texture2D(vTexture, textureCoordinate).r - 0.5);" +
            " } else if (yuvType == 1){" +
            " U = vec4(texture2D(uvTexture, textureCoordinate).r - 0.5);" +
            " V = vec4(texture2D(uvTexture, textureCoordinate).a - 0.5);" +
            " } else {" +
            " U = vec4(texture2D(uvTexture, textureCoordinate).a - 0.5);" +
            " V = vec4(texture2D(uvTexture, textureCoordinate).r - 0.5);" +
            " } " +
            " c += V * vec4(1.596, -0.813, 0, 0);" +
            " c += U * vec4(0, -0.392, 2.017, 0);" +
            " c.a = 1.0;" +
            " gl_FragColor = c;" +
            "}";

    private static final String FRAGMENT_SHADER_NAME = "shaders/fragment_yuv2rgb.glsl";

    // Order matches the yuvType uniform: 0 = I420, 1 = NV12, 2 = NV21.
    public enum YUVType {
        I420,
        NV12,
        NV21
    }

    private final YUVType yuvType;

    private int yuvTypeHandle;
    private int blankModeHandle;
    private int yTextureHandle;
    private int uTextureHandle;
    private int vTextureHandle;
    private int uvTextureHandle;

    private int samplerYTexture = OpenGlUtils.NO_TEXTURE;
    private int samplerUTexture = OpenGlUtils.NO_TEXTURE;
    private int samplerVTexture = OpenGlUtils.NO_TEXTURE;
    private int samplerUVTexture = OpenGlUtils.NO_TEXTURE;

    // True while an asynchronous upload is queued on the GL thread; new frames are dropped meanwhile.
    volatile boolean isPush = false;

    final Object nv21BufferLock = new Object();
    // Guarded by nv21BufferLock: set once the upload queued by syncLoadNV21ByteBuffer has run.
    private boolean nv21BufferLoaded = false;

    public GPUImageYUVFilter(Context appContext, YUVType yuvType) {
        super(NO_FILTER_VERTEX_SHADER, fragmentShaderCode);
        this.yuvType = yuvType;
        // Replace the built-in shader with the asset version; keep the built-in one if reading fails.
        try {
            setFragmentShader(GPUImageFilter.readShaderFileFromAssets(appContext, FRAGMENT_SHADER_NAME));
        } catch (IOException e) {
            Log.e(TAG, "Cannot read " + FRAGMENT_SHADER_NAME + ", using built-in shader", e);
        }
    }

    @Override
    public void onInit() {
        super.onInit();
        blankModeHandle = GLES20.glGetUniformLocation(getProgram(), "blankMode");
        yuvTypeHandle = GLES20.glGetUniformLocation(getProgram(), "yuvType");
        yTextureHandle = GLES20.glGetUniformLocation(getProgram(), "yTexture");
        uTextureHandle = GLES20.glGetUniformLocation(getProgram(), "uTexture");
        vTextureHandle = GLES20.glGetUniformLocation(getProgram(), "vTexture");
        uvTextureHandle = GLES20.glGetUniformLocation(getProgram(), "uvTexture");
        // Must match the yuvType branches in the fragment shader.
        int type;
        switch (yuvType) {
            case NV12:
                type = 1;
                break;
            case NV21:
                type = 2;
                break;
            case I420:
            default:
                type = 0;
                break;
        }
        setInteger(yuvTypeHandle, type);
    }

    public void loadNV21Bytes(byte[] nv21Bytes, int width, int height) {
        int frameSize = width * height;
        // The Y plane wraps the caller's array without copying (saving one copy), so the caller
        // must not overwrite nv21Bytes until the upload has run on the GL thread.
        ByteBuffer yByteBuffer = ByteBuffer.wrap(nv21Bytes, 0, frameSize);
        // The interleaved VU plane (frameSize / 2 bytes) is copied into its own buffer.
        ByteBuffer uvByteBuffer = ByteBuffer.allocate(frameSize >> 1);
        System.arraycopy(nv21Bytes, frameSize, uvByteBuffer.array(), 0, uvByteBuffer.capacity());
        loadNV21ByteBuffer(yByteBuffer, uvByteBuffer, width, height);
    }

    public void loadNV21ByteBuffer(ByteBuffer yByteBuffer, ByteBuffer uvByteBuffer, int width, int height) {
        if (isPush) {
            Log.w(TAG, "loadNV21ByteBuffer: previous frame still pending, dropping frame");
            return;
        }
        isPush = true;
        runOnDraw(new Runnable() {
            @Override
            public void run() {
                samplerYTexture = OpenGlUtils.loadLuminanceTexture(yByteBuffer, width, height,
                        samplerYTexture, GLES20.GL_LUMINANCE);
                samplerUVTexture = OpenGlUtils.loadLuminanceTexture(uvByteBuffer, width / 2, height / 2,
                        samplerUVTexture, GLES20.GL_LUMINANCE_ALPHA);
                isPush = false;
            }
        });
    }

    /** Queues an NV21 upload; pair with syncWaitNV21BufferLoad() to block until it has run. */
    public void syncLoadNV21ByteBuffer(ByteBuffer yByteBuffer, ByteBuffer uvByteBuffer, int width, int height) {
        synchronized (nv21BufferLock) {
            nv21BufferLoaded = false;
        }
        runOnDraw(new Runnable() {
            @Override
            public void run() {
                samplerYTexture = OpenGlUtils.loadLuminanceTexture(yByteBuffer, width, height,
                        samplerYTexture, GLES20.GL_LUMINANCE);
                samplerUVTexture = OpenGlUtils.loadLuminanceTexture(uvByteBuffer, width / 2, height / 2,
                        samplerUVTexture, GLES20.GL_LUMINANCE_ALPHA);
                synchronized (nv21BufferLock) {
                    nv21BufferLoaded = true;
                    nv21BufferLock.notifyAll();
                }
            }
        });
    }

    public void syncWaitNV21BufferLoad() {
        synchronized (nv21BufferLock) {
            // The flag prevents a lost wakeup when the upload finishes before we start waiting;
            // the loop guards against spurious wakeups.
            while (!nv21BufferLoaded) {
                try {
                    nv21BufferLock.wait();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
            }
        }
    }

    public void loadI420Buffer(ByteBuffer yByteBuffer, ByteBuffer uByteBuffer, ByteBuffer vByteBuffer, int width, int height) {
        if (isPush) {
            Log.w(TAG, "loadI420Buffer: previous frame still pending, dropping frame");
            return;
        }
        isPush = true;
        runOnDraw(new Runnable() {
            @Override
            public void run() {
                // I420 has three planar textures: full-size Y, quarter-size U and V.
                samplerYTexture = OpenGlUtils.loadLuminanceTexture(yByteBuffer, width, height,
                        samplerYTexture, GLES20.GL_LUMINANCE);
                samplerUTexture = OpenGlUtils.loadLuminanceTexture(uByteBuffer, width / 2, height / 2,
                        samplerUTexture, GLES20.GL_LUMINANCE);
                samplerVTexture = OpenGlUtils.loadLuminanceTexture(vByteBuffer, width / 2, height / 2,
                        samplerVTexture, GLES20.GL_LUMINANCE);
                isPush = false;
            }
        });
    }

    @Override
    protected void onDrawArraysPre() {
        super.onDrawArraysPre();
        if (samplerYTexture == OpenGlUtils.NO_TEXTURE) {
            // Nothing uploaded yet: tell the shader to output black.
            GLES20.glUniform1i(blankModeHandle, 0);
            return;
        }
        GLES20.glUniform1i(blankModeHandle, 1);
        // Texture unit 0 holds GPUImageFilter's inputImageTexture, so the YUV planes use units 1-3.
        GLES20.glActiveTexture(GLES20.GL_TEXTURE1);
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, samplerYTexture);
        GLES20.glUniform1i(yTextureHandle, 1);
        if (yuvType == YUVType.I420) {
            GLES20.glActiveTexture(GLES20.GL_TEXTURE2);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, samplerUTexture);
            GLES20.glUniform1i(uTextureHandle, 2);
            GLES20.glActiveTexture(GLES20.GL_TEXTURE3);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, samplerVTexture);
            GLES20.glUniform1i(vTextureHandle, 3);
        } else {
            GLES20.glActiveTexture(GLES20.GL_TEXTURE2);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, samplerUVTexture);
            GLES20.glUniform1i(uvTextureHandle, 2);
        }
    }

    @Override
    protected void onDrawArraysAfter(int textureId, FloatBuffer textureBuffer) {
        super.onDrawArraysAfter(textureId, textureBuffer);
    }
}
```

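```glsl
// assets/shaders/fragment_yuv2rgb.glsl: GLSL ES 1.00 for OpenGL ES 2.0 (not desktop "#version 120")
precision mediump float;

varying vec2 textureCoordinate;

uniform int blankMode;        // 0: no frame uploaded yet, output black
uniform int yuvType;          // 0: I420, 1: NV12, 2: NV21

uniform sampler2D yTexture;   // GL_LUMINANCE, full resolution
uniform sampler2D uTexture;   // I420 only: GL_LUMINANCE, half resolution
uniform sampler2D vTexture;   // I420 only: GL_LUMINANCE, half resolution
uniform sampler2D uvTexture;  // NV12/NV21: GL_LUMINANCE_ALPHA, half resolution

// GLSL matrices are column-major, so yuv * yuv2rgb takes the dot product of yuv with each column:
//   R = Y + 1.2802 V,  G = Y - 0.214821 U - 0.380589 V,  B = Y + 2.127982 U
// These are BT.709 coefficients; the built-in Java fallback shader uses BT.601 values instead.
const mat3 yuv2rgb = mat3(
    1, 0, 1.2802,
    1, -0.214821, -0.380589,
    1, 2.127982, 0
);

void I420ToRGB() {
    vec3 yuv = vec3(
        1.1643 * (texture2D(yTexture, textureCoordinate).r - 0.0625),
        texture2D(uTexture, textureCoordinate).r - 0.5,
        texture2D(vTexture, textureCoordinate).r - 0.5
    );
    vec3 rgb = yuv * yuv2rgb;
    gl_FragColor = vec4(rgb, 1.0);
}

void nv12ToRGB() {
    // NV12 stores U before V: luminance (.r) = U, alpha (.a) = V.
    vec3 yuv = vec3(
        1.1643 * (texture2D(yTexture, textureCoordinate).r - 0.0625),
        texture2D(uvTexture, textureCoordinate).r - 0.5,
        texture2D(uvTexture, textureCoordinate).a - 0.5
    );
    vec3 rgb = yuv * yuv2rgb;
    gl_FragColor = vec4(rgb, 1.0);
}

void nv21ToRGB() {
    // NV21 stores V before U: luminance (.r) = V, alpha (.a) = U.
    vec3 yuv = vec3(
        1.1643 * (texture2D(yTexture, textureCoordinate).r - 0.0625),
        texture2D(uvTexture, textureCoordinate).a - 0.5,
        texture2D(uvTexture, textureCoordinate).r - 0.5
    );
    vec3 rgb = yuv * yuv2rgb;
    gl_FragColor = vec4(rgb, 1.0);
}

void main() {
    if (blankMode == 0) {
        gl_FragColor = vec4(0.0, 0.0, 0.0, 1.0);
    } else {
        if (yuvType == 0) {
            I420ToRGB();
        } else if (yuvType == 1) {
            nv12ToRGB();
        } else {
            nv21ToRGB();
        }
    }
}
```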

```java
// Vertex shader (GPUImageFilter.NO_FILTER_VERTEX_SHADER): passes position and texture coordinate through.
public static final String NO_FILTER_VERTEX_SHADER = "" +
        "attribute vec4 position;\n" +
        "attribute vec4 inputTextureCoordinate;\n" +
        " \n" +
        "varying vec2 textureCoordinate;\n" +
        " \n" +
        "void main()\n" +
        "{\n" +
        " gl_Position = position;\n" +
        " textureCoordinate = inputTextureCoordinate.xy;\n" +
        "}";
```