1, Introduction

The BP (back propagation) network was proposed in 1986 by a team of scientists led by Rumelhart and McClelland. It is a multilayer feedforward network trained with the error back propagation algorithm and is one of the most widely used neural network models. A BP network can learn and store a large number of input-output pattern mappings without the mathematical equations that describe those mappings having to be known in advance.

For a long time in the history of artificial neural networks, there was no effective algorithm for adjusting the connection weights of the hidden layers. The error back propagation (BP) algorithm solved this problem, making it possible to adjust the weights of multilayer feedforward networks used to approximate nonlinear continuous functions.

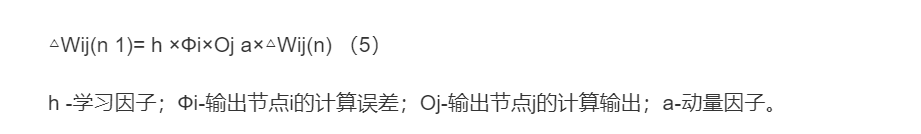

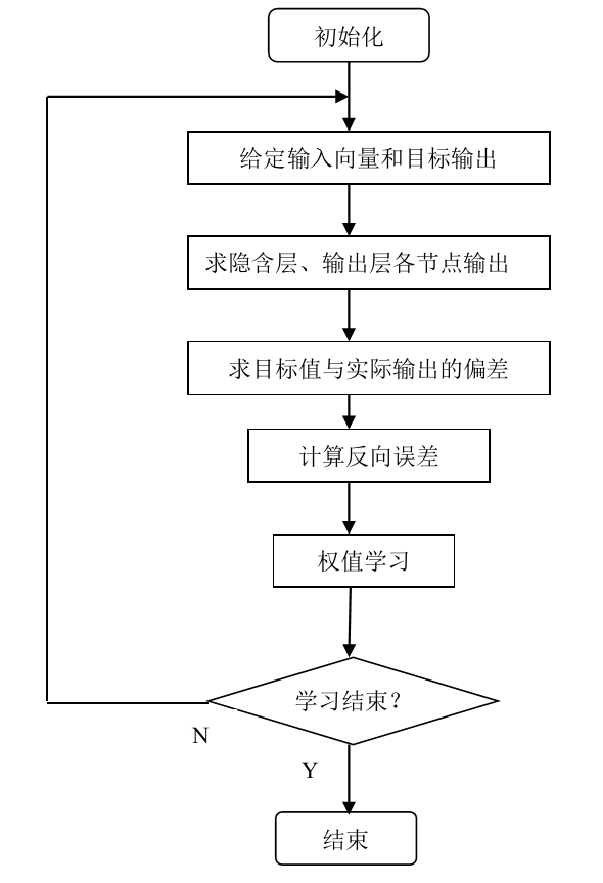

The learning process of a BP (back propagation) neural network, i.e. of the error back propagation algorithm, consists of two phases: forward propagation of information and backward propagation of error. Each neuron in the input layer receives input information from the outside and passes it to every neuron in the middle layer. The middle (hidden) layer is the internal information-processing layer and is responsible for transforming the information; depending on how much transformation capability is required, it can be designed as a single hidden layer or as several hidden layers. The last hidden layer passes its information to the neurons of the output layer, which process it further and output the result to the outside world. This completes the forward propagation of one learning step.

When the actual output differs from the expected output, the error back propagation phase begins. The error is propagated backward from the output layer through the hidden layers toward the input layer, layer by layer, and the weights of each layer are corrected by gradient descent on the error. The repeated cycle of forward information propagation and backward error propagation continuously adjusts the weights of every layer; this is the learning (training) process of the network. It continues until the network output error falls to an acceptable level or a preset number of learning iterations is reached.
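The two phases can be illustrated with a minimal MATLAB sketch. The toy problem (learning the logical AND of two inputs), the hand-picked initial weights, the learning rate and the tolerance are all assumptions made for illustration, not values from the original article. The sketch uses the same layer types as the source code below (sigmoid hidden neurons, a linear output neuron, per-sample weight updates) and stops when the error reaches an acceptable level or the preset number of epochs is used up.

```matlab
% Toy problem (assumption): learn the logical AND of two inputs
X = [0 0 1 1; 0 1 0 1];               % 2 x 4 inputs, one sample per column
D = [0 0 0 1];                        % 1 x 4 expected outputs
W1 = [0.1 -0.2; 0.3 0.1; -0.1 0.2];   % input -> hidden weights (3 hidden neurons)
b1 = [0; 0.1; -0.1];                  % hidden thresholds
W2 = [0.2 -0.1 0.1];                  % hidden -> output weights (1 output neuron)
b2 = 0;                               % output threshold
eta = 0.2;                            % learning rate
tol = 0.01;                           % acceptable error level
maxEpochs = 5000;                     % preset number of learning iterations
sigmoid = @(z) 1 ./ (1 + exp(-z));
E = zeros(1, maxEpochs);
for epoch = 1:maxEpochs
  for i = 1:size(X, 2)
    % Forward propagation of information
    x = X(:, i);
    h = sigmoid(W1 * x + b1);         % hidden-layer output
    y = W2 * h + b2;                  % linear output layer
    % Backward propagation of error (deltaH uses W2 before it is updated)
    e = D(i) - y;
    deltaH = (W2' * e) .* h .* (1 - h);   % error signal at the hidden layer
    W2 = W2 + eta * e * h';
    b2 = b2 + eta * e;
    W1 = W1 + eta * deltaH * x';
    b1 = b1 + eta * deltaH;
    E(epoch) = E(epoch) + 0.5 * e^2;  % squared error summed over the epoch
  end
  if E(epoch) < tol
    break                             % error reduced to an acceptable level
  end
end
E = E(1:epoch);
fprintf('stopped after %d epochs, error %.4f\n', epoch, E(end));
```

Whichever stopping condition fires first ends training, which mirrors the two termination criteria described above.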

The BP neural network model consists of an input-output model, an action (activation) function model, an error calculation model, and a self-learning model.
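Written out for the network used in the source code (one sigmoid hidden layer, a linear output layer, online gradient descent with a momentum term), the four models can be summarized as follows. The notation is ours, not the original author's: $x_k$ are the $n$ inputs, $O_j$ the outputs of the $p$ hidden neurons, $y_m$ and $d_m$ the actual and expected values of the $q$ outputs, $\eta$ the learning rate (`xite`) and $\alpha$ the momentum coefficient (`alfa`).

$$
\begin{aligned}
\text{Input-output model:}\quad & I_j = \sum_{k=1}^{n} w^{(1)}_{jk} x_k + b^{(1)}_j, \qquad O_j = f(I_j), \qquad y_m = \sum_{j=1}^{p} w^{(2)}_{jm} O_j + b^{(2)}_m \\
\text{Action function model:}\quad & f(z) = \frac{1}{1 + e^{-z}}, \qquad f'(z) = f(z)\bigl(1 - f(z)\bigr) \\
\text{Error calculation model:}\quad & e_m = d_m - y_m, \qquad E = \frac{1}{2} \sum_{m=1}^{q} e_m^2 \\
\text{Self-learning model:}\quad & -\frac{\partial E}{\partial w^{(2)}_{jm}} = e_m O_j, \qquad -\frac{\partial E}{\partial w^{(1)}_{jk}} = f'(I_j)\, x_k \sum_{m=1}^{q} e_m w^{(2)}_{jm}, \\
& w(t+1) = w(t) - \eta \frac{\partial E}{\partial w} + \alpha \bigl(w(t) - w(t-1)\bigr)
\end{aligned}
$$

The thresholds are updated the same way, with $x_k$ (or $O_j$) replaced by 1. In the source code $n = 24$, $p = 25$ and $q = 4$; `dw2` and `dw1` hold the two negative gradients above (up to transposition), and the monitored quantity `E(ii)` is the sum of $|e_m|$ rather than $E$.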

2 BP neural network model and its basic principle

3 BP_PID algorithm flow

2, Source code

```matlab
%% Clear environment variables
clc
clear

%% Extract training/prediction data and normalize
% Load the four classes of speech feature signals
% (variable c1 from data1.mat, c2 from data2.mat, and so on)
load data1 c1
load data2 c2
load data3 c3
load data4 c4

% Combine the four feature-signal matrices into one 2000-row matrix
data=zeros(2000,size(c1,2));
data(1:500,:)=c1(1:500,:);
data(501:1000,:)=c2(1:500,:);
data(1001:1500,:)=c3(1:500,:);
data(1501:2000,:)=c4(1:500,:);

% Random ordering of 1..2000: the sort indices n form a random permutation
k=rand(1,2000);
[m,n]=sort(k);
```
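Sorting 2000 uniform random keys and keeping the sort indices is one way to shuffle the row indices; MATLAB's built-in `randperm` produces the same kind of random permutation directly. A small sketch comparing the two (`n1`, `n2`, `isPerm1`, `isPerm2` are illustrative names):

```matlab
% Two ways to obtain a random permutation of 1..N (N = 2000 as in the script)
N = 2000;
[~, n1] = sort(rand(1, N));   % the script's approach: sort indices of random keys
n2 = randperm(N);             % built-in alternative
% Both are permutations of 1..N: every index appears exactly once
isPerm1 = isequal(sort(n1), 1:N);
isPerm2 = isequal(sort(n2), 1:N);
```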

```matlab
% Inputs: columns 2-25 (24 features); output: column 1 (class label 1-4)
input=data(:,2:25);
output1=data(:,1);

% Convert each class label (1-4) into a 4-element one-hot target vector
output=zeros(2000,4);
for i=1:2000
    switch output1(i)
        case 1
            output(i,:)=[1 0 0 0];
        case 2
            output(i,:)=[0 1 0 0];
        case 3
            output(i,:)=[0 0 1 0];
        case 4
            output(i,:)=[0 0 0 1];
    end
end
```
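The `switch` statement maps each label to a row of the 4x4 identity matrix, so the same one-hot encoding can be written by indexing `eye`. A minimal sketch with hypothetical labels (`labels`, `numClasses` and `I4` are illustrative names, not from the original):

```matlab
% Hypothetical example labels (the script uses output1, a 2000x1 vector of 1-4)
labels = [3; 1; 4; 2; 1];
numClasses = 4;
I4 = eye(numClasses);
onehot = I4(labels, :);   % row i is the one-hot encoding of labels(i)
disp(onehot)
```

This relies on the labels being the integers 1 to 4, which is what the `switch` cases assume as well.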

```matlab
% Randomly select 1500 samples for training and the remaining 500 for prediction
% (samples are stored one per column, hence the transposes)
input_train=input(n(1:1500),:)';
output_train=output(n(1:1500),:)';
input_test=input(n(1501:2000),:)';
output_test=output(n(1501:2000),:)';

% Normalize the training inputs (mapminmax maps each row to [-1, 1] by default)
[inputn,inputps]=mapminmax(input_train);
```
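`mapminmax` applies the min-max formula y = (ymax - ymin)(x - xmin)/(xmax - xmin) + ymin to each row (feature) separately, and `mapminmax('apply', ...)` reuses the training-set xmin and xmax on new data. The sketch below reproduces that by hand on hypothetical toy data, assuming no constant rows (xmax > xmin); it is an illustration of the formula, not a replacement for the toolbox function.

```matlab
% Toy data (hypothetical): 2 features x 4 training samples, 2 test samples
Xtrain = [1 2 3 5; 10 20 30 40];
Xtest  = [4 6; 25 0];
ymin = -1; ymax = 1;                 % mapminmax's default output range
xmin = min(Xtrain, [], 2);           % per-row (per-feature) minimum
xmax = max(Xtrain, [], 2);           % per-row maximum
scale = (ymax - ymin) ./ (xmax - xmin);
% Row-wise min-max mapping; bsxfun avoids relying on implicit expansion
normalize = @(X) bsxfun(@plus, bsxfun(@times, bsxfun(@minus, X, xmin), scale), ymin);
Xtrain_n = normalize(Xtrain);        % analogue of mapminmax(input_train)
Xtest_n  = normalize(Xtest);         % analogue of mapminmax('apply', input_test, inputps)
disp(Xtest_n)
```

Test values can fall outside [-1, 1] (here 1.5 and -5/3) because they are mapped with the training-set range; this is expected and is why the test set must not be normalized with its own statistics.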

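```matlab
%% Network structure initialization
innum=24;    % input neurons (one per feature)
midnum=25;   % hidden neurons
outnum=4;    % output neurons (one per class)

% Weight initialization: rands draws uniform random values in [-1, 1]
w1=rands(midnum,innum);    % input -> hidden weights
b1=rands(midnum,1);        % hidden thresholds
w2=rands(midnum,outnum);   % hidden -> output weights
b2=rands(outnum,1);        % output thresholds

% Previous two values of every parameter, used by the momentum term
w2_1=w2;w2_2=w2_1;
w1_1=w1;w1_2=w1_1;
b1_1=b1;b1_2=b1_1;
b2_1=b2;b2_2=b2_1;

% Learning rate, momentum coefficient and number of training epochs
xite=0.1;
alfa=0.01;
loopNumber=10;

% Work arrays
I=zeros(1,midnum);      % hidden-layer net input
Iout=zeros(1,midnum);   % hidden-layer output
FI=zeros(1,midnum);     % sigmoid derivative at I
dw1=zeros(innum,midnum);
db1=zeros(1,midnum);
```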
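`rands` comes from MATLAB's Deep Learning Toolbox (formerly Neural Network Toolbox) and returns symmetric uniform random values in [-1, 1]. If the toolbox is unavailable, a stand-in can be built from base `rand`; the sketch below is an assumption-level substitute (`symrand` is a hypothetical helper name), not the toolbox's own implementation.

```matlab
% Toolbox-free stand-in for rands(rows, cols): uniform values in (-1, 1)
symrand = @(rows, cols) 2 * rand(rows, cols) - 1;
midnum = 25; innum = 24; outnum = 4;   % sizes used in the script
w1 = symrand(midnum, innum);
b1 = symrand(midnum, 1);
w2 = symrand(midnum, outnum);
b2 = symrand(outnum, 1);
```

Small symmetric initial weights keep the sigmoid inputs near zero, where its derivative is largest, so early gradient steps are not vanishingly small.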

```matlab
%% Network training
E=zeros(1,loopNumber);
for ii=1:loopNumber
    E(ii)=0;
    for i=1:1500
        %% Forward pass for training sample i
        x=inputn(:,i);
        % Hidden layer output (sigmoid activation)
        for j=1:midnum
            I(j)=x'*w1(j,:)'+b1(j);
            Iout(j)=1/(1+exp(-I(j)));
        end
        % Output layer (linear)
        yn=w2'*Iout'+b2;

        %% Weight and threshold correction
        % Output error; E(ii) accumulates the absolute error over the epoch
        e=output_train(:,i)-yn;
        E(ii)=E(ii)+sum(abs(e));

        % Change rates of the output-layer weights and thresholds
        dw2=e*Iout;
        db2=e';

        % Change rates of the hidden-layer weights and thresholds
        for j=1:midnum
            FI(j)=Iout(j)*(1-Iout(j));   % sigmoid derivative f'(I(j))
            back=w2(j,:)*e;              % error back-propagated to hidden neuron j
            db1(j)=FI(j)*back;
            for k=1:innum
                dw1(k,j)=FI(j)*x(k)*back;
            end
        end

        % Gradient step plus momentum term alfa*(previous change)
        w1=w1_1+xite*dw1'+alfa*(w1_1-w1_2);
        b1=b1_1+xite*db1'+alfa*(b1_1-b1_2);
        w2=w2_1+xite*dw2'+alfa*(w2_1-w2_2);
        b2=b2_1+xite*db2'+alfa*(b2_1-b2_2);

        % Keep the last two parameter values for the next momentum term
        w1_2=w1_1;w1_1=w1;
        w2_2=w2_1;w2_1=w2;
        b1_2=b1_1;b1_1=b1;
        b2_2=b2_1;b2_1=b2;
    end
end
```
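The nested `j`/`k` loops compute the hidden-layer change rates one element at a time. The same quantities follow from two matrix products, which is shorter and much faster in MATLAB. The sketch below checks this on random toy values with hypothetical small sizes; `delta`, `dW1`, `dW2` and `dB1` are illustrative names, stored in the orientation of the parameters so no transposes are needed when updating.

```matlab
% Random toy sizes and values (hypothetical) matching the script's shapes
innum = 5; midnum = 3; outnum = 4;
x  = rand(innum, 1);
w1 = 2*rand(midnum, innum) - 1;  b1 = 2*rand(midnum, 1) - 1;
w2 = 2*rand(midnum, outnum) - 1; b2 = 2*rand(outnum, 1) - 1;
d  = [0; 1; 0; 0];                       % one-hot target

% Vectorized forward pass
Iout = 1 ./ (1 + exp(-(w1*x + b1)));     % midnum x 1 hidden output
yn = w2' * Iout + b2;                    % outnum x 1 linear output
e = d - yn;

% Vectorized change rates
FI = Iout .* (1 - Iout);                 % sigmoid derivative
delta = FI .* (w2 * e);                  % midnum x 1 back-propagated error
dW2 = Iout * e';                         % midnum x outnum, equals the script's dw2'
dW1 = delta * x';                        % midnum x innum, equals the script's dw1'
dB1 = delta;                             % equals the script's db1'

% Reference: element-wise loop in the style of the script
dw1_loop = zeros(innum, midnum);
for j = 1:midnum
  for k = 1:innum
    dw1_loop(k, j) = FI(j) * x(k) * (w2(j, :) * e);
  end
end
maxDiff = max(max(abs(dW1 - dw1_loop')));
```

`maxDiff` should be at rounding-error level, confirming that the matrix form and the loops compute the same update.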

```matlab
%% Speech feature signal classification
% Normalize the test inputs with the mapping computed on the training set
inputn_test=mapminmax('apply',input_test,inputps);
fore=zeros(4,500);
for i=1:500
    % Hidden layer output
    for j=1:midnum
        I(j)=inputn_test(:,i)'*w1(j,:)'+b1(j);
        Iout(j)=1/(1+exp(-I(j)));
    end
    % Network output for test sample i
    fore(:,i)=w2'*Iout'+b2;
end
```
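Because each column of the input matrix is an independent sample, the whole test set can also be pushed through the trained network at once. A sketch on hypothetical small random values, comparing the batched form with a per-sample loop like the one in the script (`H`, `fore_loop`, `maxDiff` are illustrative names):

```matlab
% Hypothetical small sizes; X holds one (normalized) sample per column
innum = 6; midnum = 5; outnum = 4; N = 8;
X  = 2*rand(innum, N) - 1;
w1 = 2*rand(midnum, innum) - 1;  b1 = 2*rand(midnum, 1) - 1;
w2 = 2*rand(midnum, outnum) - 1; b2 = 2*rand(outnum, 1) - 1;

% All samples at once: bsxfun adds the threshold column to every column
H    = 1 ./ (1 + exp(-bsxfun(@plus, w1*X, b1)));   % midnum x N hidden outputs
fore = bsxfun(@plus, w2' * H, b2);                  % outnum x N network outputs

% Per-sample loop, for comparison
fore_loop = zeros(outnum, N);
for i = 1:N
  Iout = 1 ./ (1 + exp(-(w1*X(:, i) + b1)));
  fore_loop(:, i) = w2' * Iout + b2;
end
maxDiff = max(abs(fore(:) - fore_loop(:)));
```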

```matlab
%% Result analysis
% The predicted category is the index of the largest network output
% (max returns a single index even when two outputs tie)
output_fore=zeros(1,500);
for i=1:500
    [~,output_fore(i)]=max(fore(:,i));
end
```
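With `find(fore(:,i)==max(fore(:,i)))`, a tie between two outputs returns more than one index, which cannot be stored in the single element `output_fore(i)`. Calling `max` with two outputs always returns the first maximal index, and with a dimension argument it handles every column in one call. A sketch on hypothetical outputs where the third sample has a tie:

```matlab
% Hypothetical network outputs for 3 samples (one column per sample)
fore = [0.9 0.1 0.2;
        0.1 0.2 0.7;
        0.3 0.8 0.7;
        0.0 0.1 0.1];
[~, output_fore] = max(fore, [], 1);   % row index of the largest entry in each column
% find() returns every index attaining the maximum: two of them for column 3
tieCount = numel(find(fore(:,3) == max(fore(:,3))));
disp(output_fore)                      % column 3 resolves to the first maximum, row 2
```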

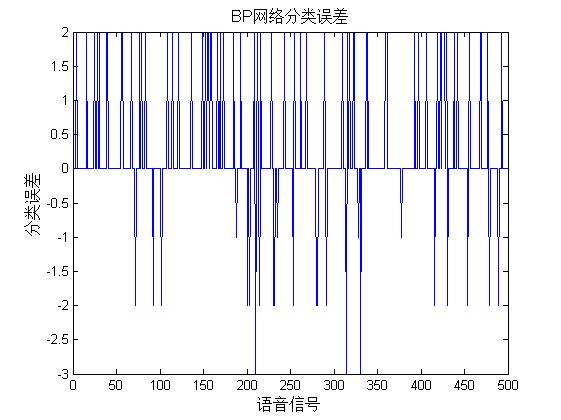

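```matlab
% BP network prediction error: nonzero entries mark misclassified samples
% (note: this variable shadows MATLAB's built-in error function)
error=output_fore-output1(n(1501:2000))';
```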
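From this difference vector, the overall accuracy and the number of errors per actual category follow directly; `accumarray` produces the same per-category counts as the `switch`-based loop in the script. A sketch with hypothetical predictions for 8 samples; it names the difference `err` to avoid shadowing the built-in `error`:

```matlab
% Hypothetical predicted and actual categories for 8 test samples
output_fore = [1 2 2 3 4 4 1 3];
actual      = [1 2 3 3 4 1 1 2];
err = output_fore - actual;                 % same construction as the script's error vector
accuracy = mean(err == 0);                  % fraction classified correctly
% Misclassified samples per actual category (category index = accumarray subscript)
wrong = accumarray(actual(err ~= 0)', 1, [4 1])';
fprintf('accuracy %.3f\n', accuracy);
```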

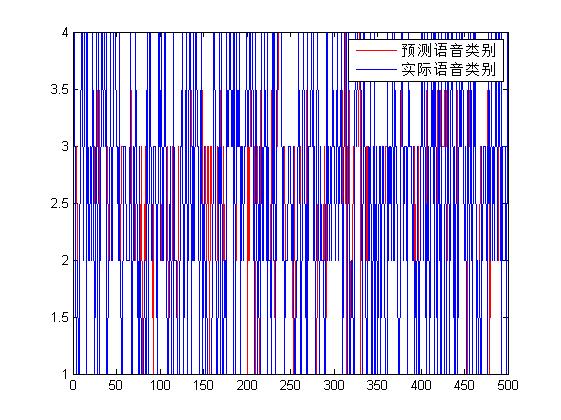

```matlab
% Plot predicted vs. actual speech categories
figure(1)
plot(output_fore,'r')
hold on
plot(output1(n(1501:2000))','b')
legend('Predicted speech category','Actual speech category')

% Plot the classification error
figure(2)
plot(error)
title('BP network classification error','fontsize',12)
xlabel('Speech signal','fontsize',12)
ylabel('Classification error','fontsize',12)
%print -dtiff -r600 1-4
```

```matlab
% Count the misclassified samples in each actual category
k=zeros(1,4);
for i=1:500
    if error(i)~=0
        [~,c]=max(output_test(:,i));   % actual category of test sample i
        k(c)=k(c)+1;
    end
end
```

3, Operation results
