I. Introduction

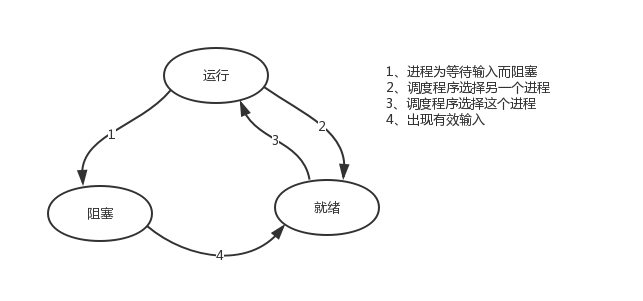

The topic of this section is achieving concurrency within a single thread, that is, with only one main thread (so only one CPU is in use). We first need to review the essence of concurrency: switching + saving state. While the CPU is running a task, it switches to another task in two cases (the switch is forced by the operating system): either the task blocks, or the task has been computing for too long or a higher-priority program takes over.

ps: when we introduced process theory, we covered the three execution states of a process. Since the thread is the unit of execution, the figure above can also be read as the three states of a thread.

First, switching in the second case does not improve efficiency. It only shares the CPU evenly among the tasks so that all of them appear to run "at the same time". If the tasks are purely computational, this switching actually lowers efficiency. We can verify this with yield, which is a way to save the running state of a task within a single thread. Let's briefly review it:

#1. yield can save state. Saving state with yield is very similar to how the operating system saves a thread's state, but yield is controlled at the code level and is more lightweight.

#2. send can pass the result of one function into another function, which makes switching between routines within a single thread possible.
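As a minimal sketch of points #1 and #2 (the names `consumer` and `producer` are illustrative, not from the original), the consumer generator keeps its running total between switches, and `send` hands each value from the producer into it:

```python
def consumer():
    # Local state (total) survives every pause at `yield`.
    total = 0
    while True:
        item = yield total  # hand the running total back, then wait for the next item
        total += item

def producer(items):
    c = consumer()
    next(c)  # prime the generator: run it up to its first yield
    totals = []
    for item in items:
        # send() switches into the consumer, which resumes exactly where it paused
        totals.append(c.send(item))
    return totals

print(producer([1, 2, 3, 4]))  # [1, 3, 6, 10]
```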

Pure switching lowers execution efficiency:

```python
'''
1. Coroutine:
    Concurrency within a single thread.
    The application itself controls switching between multiple tasks + saves their state.
    Advantage:
        Switching at the application level is much faster than switching by the operating system.
    Disadvantages:
        If one of the tasks blocks and we do not switch away from it, the whole thread
        blocks in place, and the other tasks in this thread cannot run.
        So once coroutines are introduced, all IO behavior in the single thread must be
        detected, and we switch whenever IO is encountered. Otherwise, once one task
        blocks, the whole thread blocks, and other tasks cannot run even if they are
        ready to compute.

2. Purpose of coroutines:
    To achieve concurrency within a single thread.
    Concurrency means that multiple tasks appear to run at the same time.
    Concurrency = switching + saving state
'''
```

```python
# Serial execution
import time

def func1():
    for i in range(10000000):
        i + 1

def func2():
    for i in range(10000000):
        i + 1

start = time.time()
func1()
func2()
stop = time.time()
print(stop - start)
```

```python
# Concurrent execution based on yield
import time

def func1():
    while True:
        yield

def func2():
    g = func1()
    for i in range(10000000):
        i + 1
        next(g)  # switch to func1 on every iteration

start = time.time()
func2()
stop = time.time()
print(stop - start)
```

2: Switching in the first case. When task 1 hits IO, switch to task 2, so that task 1's blocking time is used to do task 2's computation; this is where the efficiency gain comes from. yield, however, cannot detect IO, so it cannot switch automatically when IO occurs:

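```python
import time

def func1():
    while True:
        print('func1')
        yield

def func2():
    g = func1()
    # A few iterations are enough to see the effect: each one blocks for 3 seconds
    for i in range(3):
        i + 1
        next(g)
        time.sleep(3)  # blocking IO: yield cannot detect it, so nothing else runs meanwhile
        print('func2')

start = time.time()
func2()
stop = time.time()
print(stop - start)
```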

In a single thread, IO operations cannot be avoided. But if our own program (at the user-program level rather than the operating-system level) controls multiple tasks within the single thread, it can switch to another task for computation whenever one task hits IO blocking. This keeps the thread in the ready state as much as possible, that is, in a state the CPU can execute at any moment. In effect, we hide our IO operations at the user-program level as much as possible, so the operating system sees a thread that seems to be computing all the time with little IO, and therefore gives more CPU time to our thread.

The essence of a coroutine is this: within a single thread, the user program controls the tasks and switches to another task when one of them hits IO blocking, in order to improve efficiency. To implement it, we need a solution that satisfies both of the following conditions at once:

#1. Switching between multiple tasks can be controlled, and a task's state is saved before switching, so that when it runs again it resumes from where it was suspended.

#2. As a supplement to #1: IO operations can be detected, and switching happens only when an IO operation is encountered.
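Both conditions can be sketched with plain generators and a tiny scheduler. This is an illustrative toy, not how gevent is built: the names `task` and `run` are hypothetical, and IO is only simulated by having a task yield the number of seconds it would block. The scheduler saves each task's state at `yield` (condition #1) and switches only at those IO points (condition #2), running other tasks while one is waiting:

```python
import heapq
import time
from collections import deque

def task(name, delays, log):
    # Yielding a number means "I would now block on IO for this many seconds" (simulated).
    for d in delays:
        log.append(f'{name} start io {d}')
        yield d                      # state is saved here; control goes back to run()
        log.append(f'{name} io done')

def run(tasks):
    ready = deque(tasks)             # tasks that can compute right now
    sleeping = []                    # heap of (wake_time, seq, task) waiting on "IO"
    seq = 0                          # tie-breaker so the heap never compares generators
    while ready or sleeping:
        if not ready:
            # Nothing can compute: wait for the earliest "IO" to complete.
            wake, _, t = heapq.heappop(sleeping)
            time.sleep(max(0.0, wake - time.monotonic()))
            ready.append(t)
            continue
        t = ready.popleft()
        try:
            delay = next(t)          # resume from the saved position until the next IO point
        except StopIteration:
            continue                 # task finished
        heapq.heappush(sleeping, (time.monotonic() + delay, seq, t))
        seq += 1

log = []
start = time.monotonic()
run([task('A', [0.2, 0.2], log), task('B', [0.3], log)])
print(log)
print('%.2fs' % (time.monotonic() - start))  # roughly 0.4s, versus 0.7s if the waits ran back to back
```

The waits of A and B overlap because the scheduler only sleeps when no task can compute.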

II. Introduction to coroutines

Coroutine: concurrency within a single thread, also known as a micro-thread or fiber (English name: coroutine). In one sentence: a coroutine is a lightweight user-mode thread, that is, coroutines are controlled and scheduled by the user program itself. It should be emphasized that:

#1. Python threads are kernel-level, that is, their scheduling is controlled by the operating system (if a thread hits IO or runs for too long, it is forced to give up the CPU and another thread is switched in).

#2. When coroutines are started within a single thread, switching on IO is controlled at the application level (rather than by the operating system) to improve efficiency (!!! switching on non-IO operations has nothing to do with efficiency).

Compared with thread switching controlled by the operating system, having the user program control coroutine switching within a single thread has the following advantages:

#1. Coroutine switching has lower overhead: it happens at the program level and is completely invisible to the operating system, so it is more lightweight.

#2. Concurrency can be achieved within a single thread, making the most of the CPU.

The disadvantages are as follows:

#1. Coroutines run within a single thread, so they cannot use multiple cores. (A program can, however, start multiple processes, multiple threads within each process, and coroutines within each thread.)

#2. Coroutines live in a single thread, so once one coroutine blocks, the whole thread is blocked.
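Disadvantage #2 is easy to observe with `asyncio`, the standard library's coroutine framework (swapped in here only because it needs no install; this section itself builds on gevent). The function names are hypothetical. Both runs pair one 0.3 s wait with three 0.1 s waits in `other_work`; when the 0.3 s wait is a blocking `time.sleep`, the event loop's single thread is stuck and nothing else can run, so the waits add up instead of overlapping:

```python
import asyncio
import time

async def other_work():
    # Three short "IO" waits that can overlap with anything that yields to the loop.
    for _ in range(3):
        await asyncio.sleep(0.1)

async def blocking_io():
    time.sleep(0.3)           # blocks the whole thread: no other coroutine can run meanwhile

async def cooperative_io():
    await asyncio.sleep(0.3)  # hands control back to the event loop while waiting

async def timed(io_job):
    start = time.monotonic()
    await asyncio.gather(io_job(), other_work())
    return time.monotonic() - start

blocked = asyncio.run(timed(blocking_io))
overlapped = asyncio.run(timed(cooperative_io))
print('blocking: %.2fs, cooperative: %.2fs' % (blocked, overlapped))
```

Expect the blocking run to take noticeably longer than the cooperative one, which should finish in roughly 0.3 s.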

Summary of coroutine characteristics:

- Concurrency is implemented within a single thread

- Shared data can be modified without locks

- The user program itself keeps the context stacks of the multiple control flows

- Additionally: when a coroutine hits an IO operation, it switches to another coroutine automatically (yield and greenlet cannot detect IO, which is why the gevent module (select mechanism) is used)
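The "no lock" point follows from cooperative switching: a coroutine is suspended only where it explicitly yields, so a read-modify-write with no `yield` inside it cannot be interleaved with another coroutine. A minimal sketch with generators standing in for coroutines (`worker` and `round_robin` are hypothetical names):

```python
from collections import deque

counter = 0  # shared data, updated without any lock

def worker(n):
    global counter
    for _ in range(n):
        value = counter   # read
        value += 1        # modify
        counter = value   # write; no other generator can run between the read and this write
        yield             # the only place this task gives up control

def round_robin(tasks):
    queue = deque(tasks)
    while queue:
        t = queue.popleft()
        try:
            next(t)        # run the task until its next yield
            queue.append(t)
        except StopIteration:
            pass           # task finished

round_robin([worker(1000) for _ in range(10)])
print(counter)  # 10000
```

With preemptive threads, the operating system could switch between the read and the write, which is why threads need a lock for the same update.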

III. Greenlet

Suppose we have 20 tasks in a single thread and want to switch among them. Doing it with yield generators is too cumbersome (each generator has to be initialized once first, and then we call send... very cumbersome), whereas the greenlet module makes it easy to switch directly between these 20 tasks.

```python
# Install with: pip3 install greenlet
from greenlet import greenlet

def eat(name):
    print('%s eat 1' % name)
    g2.switch('egon')
    print('%s eat 2' % name)
    g2.switch()

def play(name):
    print('%s play 1' % name)
    g1.switch()
    print('%s play 2' % name)

g1 = greenlet(eat)
g2 = greenlet(play)

g1.switch('egon')  # Arguments can be passed on the first switch; later switches don't need them
```

Pure switching (when there is no IO and no operation that repeatedly allocates memory) actually slows the program down:

```python
# Sequential execution
import time

def f1():
    res = 1
    for i in range(100000000):
        res += i

def f2():
    res = 1
    for i in range(100000000):
        res *= i

start = time.time()
f1()
f2()
stop = time.time()
print('run time is %s' % (stop - start))  # 10.985628366470337
```

```python
# Switching
from greenlet import greenlet
import time

def f1():
    res = 1
    for i in range(100000000):
        res += i
        g2.switch()  # switch to f2 on every iteration

def f2():
    res = 1
    for i in range(100000000):
        res *= i
        g1.switch()  # switch back to f1 on every iteration

start = time.time()
g1 = greenlet(f1)
g2 = greenlet(f2)
g1.switch()
stop = time.time()
print('run time is %s' % (stop - start))  # 52.763017892837524
```

greenlet only provides a more convenient way of switching than generators. When it switches to a task and that task hits IO, the task still blocks in place, so greenlet does not solve the problem of switching automatically on IO to improve efficiency. The code of these 20 tasks in a single thread usually contains both computation and blocking operations. If the blocking time of task 1 can be used to run task 2, and so on, efficiency improves; that is what the gevent module is for.

IV. Introduction to gevent

Install with: pip3 install gevent

gevent is a third-party library that makes it easy to write concurrent synchronous or asynchronous programs. The main pattern used in gevent is the greenlet, a lightweight coroutine hooked into Python as a C extension module. Greenlets all run inside the main program's operating-system process, but they are scheduled cooperatively.

```python
# Usage
import gevent

def func(*args, **kwargs):  # placeholder task for illustration
    return args, kwargs

def func2():  # placeholder task for illustration
    pass

# Create a coroutine object g1. The first argument to spawn is the function to run (e.g. eat);
# any positional or keyword arguments after it are passed on to that function.
g1 = gevent.spawn(func, 1, 2, 3, x=4, y=5)
g2 = gevent.spawn(func2)

g1.join()  # wait for g1 to finish
g2.join()  # wait for g2 to finish
# Or combine the two steps above into one:
# gevent.joinall([g1, g2])

print(g1.value)  # the return value of func
```

Switching tasks automatically on IO blocking:

```python
import gevent

def eat(name):
    print('%s eat 1' % name)
    gevent.sleep(2)  # IO blocking that gevent recognizes: it switches to another greenlet
    print('%s eat 2' % name)

def play(name):
    print('%s play 1' % name)
    gevent.sleep(1)
    print('%s play 2' % name)

g1 = gevent.spawn(eat, 'egon')
g2 = gevent.spawn(play, name='egon')
g1.join()
g2.join()
# Or: gevent.joinall([g1, g2])
print('main')
```

gevent.sleep(2) simulates IO blocking that gevent can recognize. time.sleep(2) and other blocking calls, however, are not recognized by gevent directly; to make them recognizable, patch them with the line from gevent import monkey; monkey.patch_all(). That line must come before the modules it patches, such as time and socket, are imported. Or, to keep it simple, just remember: whenever you use gevent, put from gevent import monkey; monkey.patch_all() at the very top of the file.

```python
from gevent import monkey; monkey.patch_all()  # must run before time/socket are used

import gevent
import time

def eat():
    print('eat food 1')
    time.sleep(2)  # patched: gevent now treats this as switchable IO
    print('eat food 2')

def play():
    print('play 1')
    time.sleep(1)
    print('play 2')

g1 = gevent.spawn(eat)
g2 = gevent.spawn(play)
gevent.joinall([g1, g2])
print('main')
```

We can use threading.current_thread().getName() (or .name in newer Python versions) to inspect g1 and g2. The result is a dummy thread (its name looks like Dummy-n), that is, not a real thread.

V. Synchronous and asynchronous execution with gevent

```python
from gevent import spawn, joinall, monkey; monkey.patch_all()

import time

def task(pid):
    """
    Some non-deterministic task
    """
    time.sleep(0.5)
    print('Task %s done' % pid)

def synchronous():
    for i in range(10):
        task(i)

def asynchronous():
    g_l = [spawn(task, i) for i in range(10)]
    joinall(g_l)

if __name__ == '__main__':
    print('Synchronous:')
    synchronous()
    print('Asynchronous:')
    asynchronous()
```

The important part of the program above is gevent.spawn, which wraps the task function in a Greenlet (a lightweight internal thread). The list of initialized greenlets is stored in g_l and passed to the gevent.joinall function, which blocks the current flow of execution and runs all the given greenlets. Execution continues past joinall only after all the greenlets have finished.
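For comparison, the same synchronous-versus-asynchronous experiment can be sketched with the standard library's `asyncio` instead of gevent (a different framework, swapped in here; the 0.05 s delay is an assumption chosen to keep the demo fast). `asyncio.gather` plays the role of `joinall` and also collects each task's return value, much like `g1.value`:

```python
import asyncio
import time

async def task(pid):
    await asyncio.sleep(0.05)  # simulated IO; yields to the event loop while waiting
    return 'Task %s done' % pid

async def synchronous():
    # Each task is awaited before the next one starts, so the waits add up (~10 * 0.05 s).
    return [await task(i) for i in range(10)]

async def asynchronous():
    # All ten tasks are started together and awaited as a group, like joinall.
    return await asyncio.gather(*(task(i) for i in range(10)))

for coro_fn in (synchronous, asynchronous):
    start = time.monotonic()
    results = asyncio.run(coro_fn())
    print('%s: %.2fs, last result: %s' % (coro_fn.__name__, time.monotonic() - start, results[-1]))
```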

VI. Gevent application example 1

Coroutine application: a crawler

```python
from gevent import monkey; monkey.patch_all()

import gevent
import requests
import time

def get_page(url):
    print('GET: %s' % url)
    response = requests.get(url)  # network IO: gevent switches to other greenlets meanwhile
    if response.status_code == 200:
        print('%d bytes received from %s' % (len(response.text), url))

start_time = time.time()
gevent.joinall([
    gevent.spawn(get_page, 'https://www.python.org/'),
    gevent.spawn(get_page, 'https://www.yahoo.com/'),
    gevent.spawn(get_page, 'https://github.com/'),
])
stop_time = time.time()
print('run time is %s' % (stop_time - start_time))
```

VII. Gevent application example 2

Socket concurrency within a single thread using gevent (from gevent import monkey; monkey.patch_all() must be placed before the socket module is imported, otherwise gevent cannot recognize socket blocking).

Server:

```python
from gevent import monkey; monkey.patch_all()

from socket import *
import gevent

# If you don't want to patch with monkey.patch_all(), you can use the socket provided by gevent:
# from gevent import socket
# s = socket.socket()

def server(server_ip, port):
    s = socket(AF_INET, SOCK_STREAM)
    s.setsockopt(SOL_SOCKET, SO_REUSEADDR, 1)
    s.bind((server_ip, port))
    s.listen(5)
    while True:
        conn, addr = s.accept()
        gevent.spawn(talk, conn, addr)  # one greenlet per client connection

def talk(conn, addr):
    try:
        while True:
            res = conn.recv(1024)
            if not res:  # empty bytes: the client closed the connection
                break
            print('client %s:%s msg: %s' % (addr[0], addr[1], res))
            conn.send(res.upper())
    except Exception as e:
        print(e)
    finally:
        conn.close()

if __name__ == '__main__':
    server('127.0.0.1', 8080)
```


Client:

```python
#_*_coding:utf-8_*_
__author__ = 'Linhaifeng'

from socket import *

client = socket(AF_INET, SOCK_STREAM)
client.connect(('127.0.0.1', 8080))

while True:
    msg = input('>>: ').strip()
    if not msg:
        continue
    client.send(msg.encode('utf-8'))
    msg = client.recv(1024)
    print(msg.decode('utf-8'))
```

Many concurrent clients using multithreading:

```python
from threading import Thread
from socket import *
import threading

def client(server_ip, port):
    # The socket object must be created inside the function, i.e. in the local namespace.
    # If it were created outside the function, all threads would share one socket object,
    # and the client port would always be the same.
    c = socket(AF_INET, SOCK_STREAM)
    c.connect((server_ip, port))

    count = 0
    while True:
        c.send(('%s say hello %s' % (threading.current_thread().name, count)).encode('utf-8'))
        msg = c.recv(1024)
        print(msg.decode('utf-8'))
        count += 1

if __name__ == '__main__':
    for i in range(500):
        t = Thread(target=client, args=('127.0.0.1', 8080))
        t.start()
```
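The same idea, one thread serving many clients by switching whenever a connection would block, can be sketched with `asyncio` streams from the standard library instead of gevent (a swapped-in framework, not the approach used above). The handler name `talk` mirrors the gevent server; the five demo clients, their messages, and binding to port 0 so the OS picks a free port are illustrative assumptions:

```python
import asyncio

async def talk(reader, writer):
    # One coroutine per connection; all of them share the event loop's single thread.
    try:
        while True:
            data = await reader.read(1024)  # switches to other connections while waiting
            if not data:                    # empty read: the client closed the connection
                break
            writer.write(data.upper())
            await writer.drain()
    finally:
        writer.close()
        await writer.wait_closed()

async def client(port, msg):
    reader, writer = await asyncio.open_connection('127.0.0.1', port)
    writer.write(msg.encode('utf-8'))
    await writer.drain()
    reply = await reader.read(1024)
    writer.close()
    await writer.wait_closed()
    return reply.decode('utf-8')

async def main():
    server = await asyncio.start_server(talk, '127.0.0.1', 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        # Five clients talk to the server concurrently.
        return await asyncio.gather(*(client(port, 'hello %s' % i) for i in range(5)))

print(asyncio.run(main()))  # ['HELLO 0', 'HELLO 1', 'HELLO 2', 'HELLO 3', 'HELLO 4']
```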
