1, Introduction

Geometric moment invariants were proposed by M. K. Hu in 1962 in "Visual pattern recognition by moment invariants". Hu derived seven invariant moments and proved that they are invariant to translation, rotation, and scaling; together they form a feature vector that describes a shape.

In practice, when recognizing objects in images, only the first two invariants keep their invariance well; the remaining invariants introduce relatively large errors. Some researchers argue that only invariants built from second-order moments can describe a two-dimensional object in a rotation-, scale-, and translation-invariant way (the first two invariants happen to consist of second-order moments only). I have not verified this claim myself.

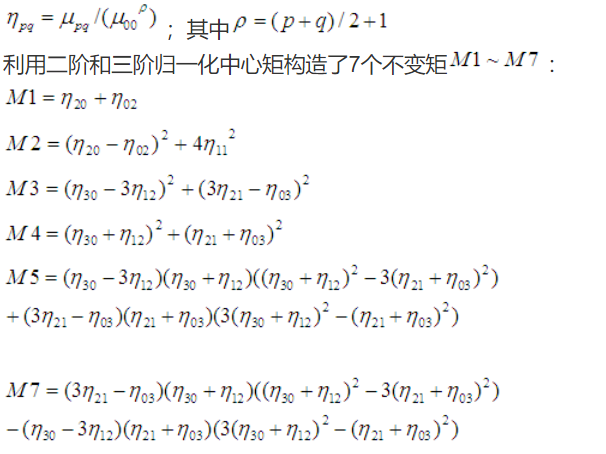

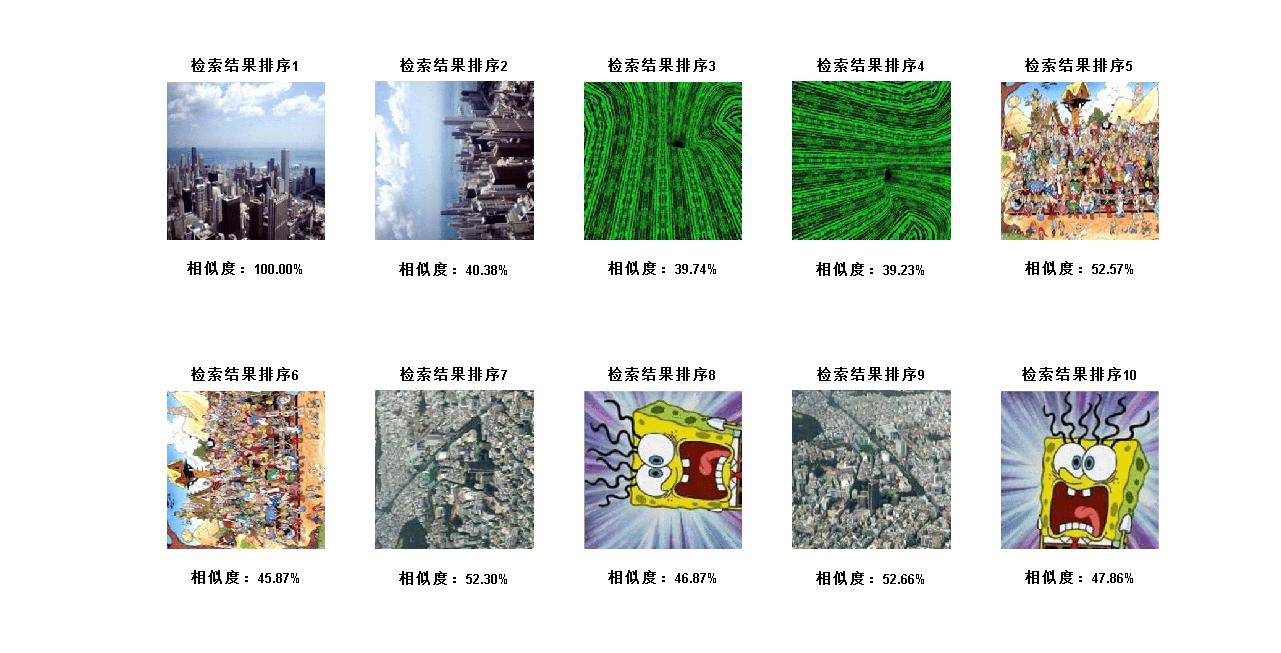

A feature vector built from Hu moments is fast to compute, but its recognition rate is relatively low. In my own gesture-recognition experiments, the recognition rate was about 30% on segmented gesture contour images and only about 10% on images with rich texture. One reason is that Hu moment invariants use only low-order moments (third order at most), so they capture little image detail and describe the image incompletely.

Hu moment invariants are generally used to recognize large objects in an image. They describe the overall shape of an object well, provided that the texture of the image is not too complex. For example, they work comparatively well for fruit shapes or for the simple characters on a license plate.

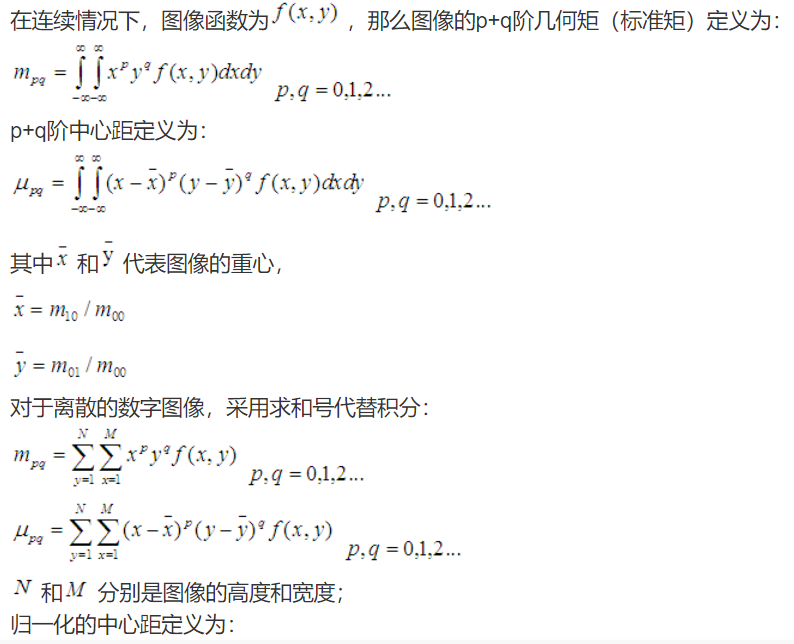

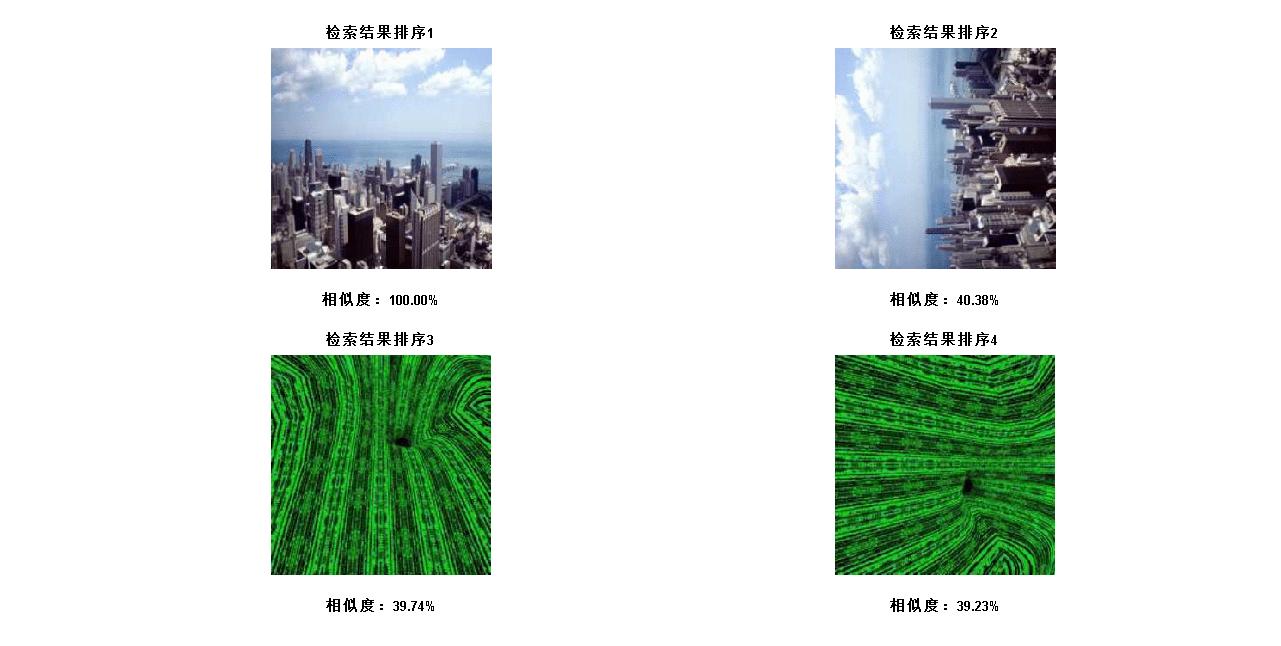

The moments are defined as follows:

① Definition of the moment of order (p+q):
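A sketch of the standard form, assuming the usual notation in which $f(x, y)$ is the image intensity (these symbols follow the common convention and are not taken from this post). The raw moment of order $(p+q)$ and the central moment about the centroid $(\bar{x}, \bar{y})$ are:

$$
m_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x^{p} y^{q} f(x, y)\,dx\,dy,
\qquad
\mu_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} (x - \bar{x})^{p} (y - \bar{y})^{q} f(x, y)\,dx\,dy,
$$

with $p, q = 0, 1, 2, \dots$ and $\bar{x} = m_{10}/m_{00}$, $\bar{y} = m_{01}/m_{00}$.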

② For digital images, the discrete form is:
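In the usual convention (assumed here), the integrals become sums over the pixels of an $M \times N$ image $f(x, y)$:

$$
m_{pq} = \sum_{x=1}^{M}\sum_{y=1}^{N} x^{p} y^{q} f(x, y),
\qquad
\mu_{pq} = \sum_{x=1}^{M}\sum_{y=1}^{N} (x - \bar{x})^{p} (y - \bar{y})^{q} f(x, y),
$$

where $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$ are the centroid coordinates. In `Compute_HuNicolas` in section 2, $x$ corresponds to the row index `i`, $y$ to the column index `j`, and the centroid to `u10` and `u01`.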

③ Definition of normalized central moment:
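The commonly used normalization (stated here as the standard form, with $\mu_{pq}$ denoting the central moment of order $p+q$) divides each central moment by a power of the zeroth-order moment:

$$
\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\gamma}},
\qquad
\gamma = \frac{p + q}{2} + 1,
\qquad
p + q = 2, 3, \dots
$$

Since $\mu_{00} = m_{00}$, second-order moments are divided by $m_{00}^{2}$ and third-order moments by $m_{00}^{2.5}$, which matches the divisions by `m00^2` and `m00^2.5` in the source code of section 2.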

④ Hu moment definition
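For reference, the seven invariants can be written in terms of the normalized central moments $\eta_{pq}$. The names $h_1, \dots, h_7$ are chosen to match the variables in `Compute_HuNicolas` in section 2; other texts use different symbols for the same quantities:

$$
\begin{aligned}
h_1 &= \eta_{20} + \eta_{02} \\
h_2 &= (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2 \\
h_3 &= (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \\
h_4 &= (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \\
h_5 &= (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] \\
    &\quad + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \\
h_6 &= (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \\
h_7 &= (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] \\
    &\quad + (3\eta_{12} - \eta_{30})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right]
\end{aligned}
$$

Only $h_1$ and $h_2$ are built from second-order moments alone; $h_3$ to $h_7$ involve third-order moments.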

2, Source code

clc; clear all; close all;

warning off all;

filename = 'Image to be retrieved\\im1.bmp';

[I, map] = imread(filename);

I = ind2rgb(I, map);

I1 = Gray_Convert(I, 0);

bw1 = Image_Binary(I1, 0);

Hu = Compute_HuNicolas(bw1);

[resultNames, index] = CoMatrix_Process(filename, 1);

[fresultNames, index] = Hu_Process(filename, Hu, resultNames, index);

D = Analysis(filename, fresultNames, index, 1);

function [resultNames, index] = CoMatrix_Process(filename, flag)

if nargin < 2

flag = 1;

end

if nargin < 1

filename = 'Image to be retrieved\\im1.bmp';

end

resultValues = [];

resultNames = {};

files = ls('Picture library\\*.*');

[queryx, querymap] = imread(filename);

if isempty(querymap)

[queryx, querymap] = rgb2ind(queryx, 256);

end

for i = 1 : size(files, 1)

file = fullfile('Picture library\\', files(i, :));

[pathstr, name, ext] = fileparts(file);

if length(strtrim(ext)) > 3

[X, RGBmap] = imread(file);

HSVmap = rgb2hsv(RGBmap);

D = Compute_QuadDistance(queryx, querymap, X, HSVmap);

resultValues(end+1) = D;

resultNames{end+1} = {file};

end

end

[sortedValues, index] = sort(resultValues);

query = ind2rgb(queryx, querymap);

query = rgb2gray(query);

if flag

figure;

imshow(queryx, querymap);

title('Image to be retrieved', 'FontWeight', 'Bold');

figure;

set(gcf, 'units', 'normalized', 'position',[0 0 1 1]);

for i = 1 : min(10, numel(index))

tempstr = cell2mat(resultNames{index(i)});

[X, RGBmap] = imread(tempstr);

img = ind2rgb(X, RGBmap);

subplot(2, 5, i); imshow(img, []);

img = rgb2gray(img);

dm = imabsdiff(query, img);

df = (1-sum(dm(:))/sum(query(:)))*100;

xlabel(sprintf('Similarity:%.2f%%', abs(df)), 'FontWeight', 'Bold');

str = sprintf('Search result rank %d', i);

title(str, 'FontWeight', 'Bold');

end

end
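The similarity label computed in the loop above reduces to a few lines of arithmetic. A minimal sketch, assuming two same-sized grayscale images with values in [0, 1]; the 2x2 matrices are made-up data, and `abs(query - img)` stands in for `imabsdiff`, which gives the same result for double inputs:

```matlab
% Hypothetical 2x2 grayscale images (illustrative values only).
query = [0.2 0.4; 0.6 0.8];
img   = [0.2 0.5; 0.6 0.6];

dm = abs(query - img);                        % per-pixel absolute difference
df = (1 - sum(dm(:)) / sum(query(:))) * 100;  % difference relative to the query's total intensity
fprintf('Similarity: %.2f%%\n', abs(df));     % prints Similarity: 85.00%
```

Because the difference sum is divided by the query's own intensity sum, `df` is not bounded below by zero. For strongly different images it can go negative, and the `abs` call then reports it as a positive percentage.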

function Hu = Compute_HuNicolas(in_image)

format long

if ndims(in_image) == 3

image = rgb2gray(in_image);

else

image = in_image;

end

image = double(image);

m00=sum(sum(image));

m10=0;

m01=0;

[row,col]=size(image);

for i=1:row

for j=1:col

m10=m10+i*image(i,j);

m01=m01+j*image(i,j);

end

end

u10=m10/m00;

u01=m01/m00;

n20 = 0;

n02 = 0;

n11 = 0;

n30 = 0;

n12 = 0;

n21 = 0;

n03 = 0;

for i=1:row

for j=1:col

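% Measure offsets from the centroid (u10, u01) so that these are central moments.

di = i - u10;

dj = j - u01;

n20=n20+di^2*image(i,j);

n02=n02+dj^2*image(i,j);

n11=n11+di*dj*image(i,j);

n30=n30+di^3*image(i,j);

n03=n03+dj^3*image(i,j);

n12=n12+di*dj^2*image(i,j);

n21=n21+di^2*dj*image(i,j);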

end

end

n20=n20/m00^2;

n02=n02/m00^2;

n11=n11/m00^2;

n30=n30/m00^2.5;

n03=n03/m00^2.5;

n12=n12/m00^2.5;

n21=n21/m00^2.5;

h1 = n20 + n02;

h2 = (n20-n02)^2 + 4*(n11)^2;

h3 = (n30-3*n12)^2 + (3*n21-n03)^2;

h4 = (n30+n12)^2 + (n21+n03)^2;

h5 = (n30-3*n12)*(n30+n12)*((n30+n12)^2-3*(n21+n03)^2)+(3*n21-n03)*(n21+n03)*(3*(n30+n12)^2-(n21+n03)^2);

h6 = (n20-n02)*((n30+n12)^2-(n21+n03)^2)+4*n11*(n30+n12)*(n21+n03);

h7 = (3*n21-n03)*(n30+n12)*((n30+n12)^2-3*(n21+n03)^2)+(3*n12-n30)*(n21+n03)*(3*(n30+n12)^2-(n21+n03)^2);

Hu = [h1 h2 h3 h4 h5 h6 h7];
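As a quick check of the translation and rotation invariance claimed in the introduction, the sketch below computes the seven values from normalized central moments (offsets measured from the centroid) for a synthetic shape, a shifted copy, and a copy rotated by 90 degrees. It is a vectorized illustration under stated assumptions, not the author's implementation: the L-shaped test image and the names `imgs`, `eta`, `a`, and `b` are invented for this example.

```matlab
% Hypothetical test data: an L-shaped binary blob, a shifted copy, a 90-degree rotation.
A = zeros(64, 64);
A(10:30, 10:15) = 1;
A(25:30, 10:28) = 1;
imgs = {A, circshift(A, [20 25]), rot90(A)};   % the shift keeps the blob inside the frame

H = zeros(numel(imgs), 7);
for k = 1:numel(imgs)
    f = imgs{k};
    [rows, cols] = size(f);
    [J, I] = meshgrid(1:cols, 1:rows);          % I: row index, J: column index
    m00 = sum(f(:));
    di = I(:) - sum(I(:) .* f(:)) / m00;        % row offset from the centroid
    dj = J(:) - sum(J(:) .* f(:)) / m00;        % column offset from the centroid
    % Normalized central moment: eta_pq = mu_pq / m00^((p+q)/2 + 1)
    eta = @(p, q) sum(di.^p .* dj.^q .* f(:)) / m00^((p + q)/2 + 1);
    n20 = eta(2,0); n02 = eta(0,2); n11 = eta(1,1);
    n30 = eta(3,0); n03 = eta(0,3); n12 = eta(1,2); n21 = eta(2,1);
    a = n30 + n12;                              % recurring sums in h4..h7
    b = n21 + n03;
    h1 = n20 + n02;
    h2 = (n20 - n02)^2 + 4*n11^2;
    h3 = (n30 - 3*n12)^2 + (3*n21 - n03)^2;
    h4 = a^2 + b^2;
    h5 = (n30 - 3*n12)*a*(a^2 - 3*b^2) + (3*n21 - n03)*b*(3*a^2 - b^2);
    h6 = (n20 - n02)*(a^2 - b^2) + 4*n11*a*b;
    h7 = (3*n21 - n03)*a*(a^2 - 3*b^2) + (3*n12 - n30)*b*(3*a^2 - b^2);
    H(k, :) = [h1 h2 h3 h4 h5 h6 h7];
end

disp(H);                                         % one row of seven values per image
disp(max(abs(H(1,:) - H(2,:))));                 % shifted copy vs. original
disp(max(abs(H(1,:) - H(3,:))));                 % rotated copy vs. original
```

The two differences should come out at the level of floating-point round-off. The check works only because the offsets `di` and `dj` are taken from the centroid; moments accumulated from the raw indices `i` and `j` change when the shape moves.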

3, Operation results
