1, Netty's bottom layer is implemented on NIO selectors and epoll. select, poll and epoll are all I/O multiplexing mechanisms: a single process can monitor multiple descriptors, and once a descriptor becomes ready (generally read-ready or write-ready) the program is notified so that it can perform the corresponding read or write. However, select, poll and epoll are all synchronous I/O in essence, because the program itself must do the reading and writing after the readiness event arrives; that is, the copy of data from kernel space to user space blocks the caller. With asynchronous I/O the program does not do the read or write itself: the asynchronous I/O implementation copies the data from the kernel to user space and notifies the program when the copy is complete.
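The split between readiness notification and the actual read can be seen with plain Java NIO. The following is a minimal sketch, not taken from Netty: it uses a java.nio.channels.Pipe instead of a socket so that it runs without a network, and the names ReadinessDemo and roundTrip are made up for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

class ReadinessDemo {
    // Writes a short message into a pipe, waits for read readiness, then reads it back.
    // Assumes the message fits into the 256-byte buffer used below.
    static String roundTrip(String message) {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink();
                 Pipe.SourceChannel source = pipe.source()) {
                source.configureBlocking(false);
                source.register(selector, SelectionKey.OP_READ);

                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));

                // Step 1: the multiplexer only reports that the descriptor is ready.
                selector.select();

                // Step 2: the application itself copies the data out of the kernel.
                StringBuilder received = new StringBuilder();
                for (SelectionKey key : selector.selectedKeys()) {
                    if (key.isReadable()) {
                        ByteBuffer buffer = ByteBuffer.allocate(256);
                        source.read(buffer);
                        buffer.flip();
                        received.append(StandardCharsets.UTF_8.decode(buffer).toString());
                    }
                }
                return received.toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("ping"));
    }
}
```

The call to source.read in step 2 is what makes this synchronous I/O: select only says that data is available, and the calling thread still performs the copy.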

2, Test example

1.C++

/*

 * Server side source code

*/

#include <netinet/in.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <fcntl.h>

#include <iostream>

#include <signal.h>

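#include <sys/epoll.h>

#include <unistd.h>    // close

#include <cstring>     // memset, strlen

#include <strings.h>   // bzero, bcopy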

#define MAXFDS 256

#define EVENTS 100

#define PORT 8888

int epfd;

bool setNonBlock(int fd)

{

int flags = fcntl(fd, F_GETFL, 0);

flags |= O_NONBLOCK;

if(-1 == fcntl(fd, F_SETFL, flags))

return false;

return true;

}

int main(int argc, char *argv[], char *evp[])

{

int fd, nfds, cfd;

ssize_t ret;

int on = 1;

char buffer[512];

struct sockaddr_in saddr, caddr;

struct epoll_event ev, events[EVENTS];

if(-1 == (fd = socket(AF_INET, SOCK_STREAM, 0)))

{

std::cout << "Error creating socket" << std::endl;

return -1;

}

struct sigaction sig;

sigemptyset(&sig.sa_mask);

sig.sa_handler = SIG_IGN;

sig.sa_flags = 0;

sigaction(SIGPIPE, &sig, NULL);

epfd = epoll_create(MAXFDS);

setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &on, sizeof(on));

memset(&saddr, 0, sizeof(saddr));

saddr.sin_family = AF_INET;

saddr.sin_port = htons((short)(PORT));

saddr.sin_addr.s_addr = INADDR_ANY;

if(-1 == bind(fd, (struct sockaddr *)&saddr, sizeof(saddr)))

{

std::cout << "The socket cannot be bound to the server" << std::endl;

return -1;

}

if(-1 == listen(fd, 32))

{

std::cout << "An error occurred while listening on the socket" << std::endl;

return -1;

}

ev.data.fd = fd;

ev.events = EPOLLIN;

epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);

while(true)

{

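// wait for at most EVENTS ready descriptors (the size of the events array); -1 blocks until something is ready

nfds = epoll_wait(epfd, events, EVENTS, -1);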

for(int i = 0; i < nfds; ++ i)

{

if(fd == events[i].data.fd)

{

memset(&caddr, 0, sizeof(caddr));

socklen_t clen = sizeof(caddr);

cfd = accept(fd, (struct sockaddr *)&caddr, &clen);

if(-1 == cfd)

{

std::cout << "There was a problem with the server receiving the socket" << std::endl;

break;

}

setNonBlock(cfd);

ev.data.fd = cfd;

ev.events = EPOLLIN;

epoll_ctl(epfd, EPOLL_CTL_ADD, cfd, &ev);

}

else if(events[i].events & EPOLLIN)

{

bzero(&buffer, sizeof(buffer));

std::cout << "The server side should read the messages sent by the client" << std::endl;

// leave room for the terminating '\0' so the buffer can be printed as a string

ret = recv(events[i].data.fd, buffer, sizeof(buffer) - 1, 0);

if(ret < 0)

{

std::cout << "The server received an error message" << std::endl;

return -1;

}

std::cout << "The received message is:" << (char *) buffer << std::endl;

ev.data.fd = events[i].data.fd;

ev.events = EPOLLOUT;

epoll_ctl(epfd, EPOLL_CTL_MOD, events[i].data.fd, &ev);

}

else if(events[i].events & EPOLLOUT)

{

bzero(&buffer, sizeof(buffer));

bcopy("The Author@: magicminglee@Hotmail.com", buffer, sizeof("The Author@: magicminglee@Hotmail.com"));

ret = send(events[i].data.fd, buffer, strlen(buffer), 0);

if(ret < 0)

{

std::cout << "There was an error when the server sent a message to the client" << std::endl;

return -1;

}

ev.data.fd = events[i].data.fd;

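epoll_ctl(epfd, EPOLL_CTL_DEL, ev.data.fd, &ev);

// the reply has been sent; close the connection so the descriptor is not leaked

close(ev.data.fd);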

}

}

}

if(fd > 0)

{

shutdown(fd, SHUT_RDWR);

close(fd);

}

}

2.Java

package com.tpw.summaryday.nio;

import lombok.Getter;

import lombok.extern.slf4j.Slf4j;

import java.io.IOException;

import java.net.InetSocketAddress;

import java.nio.ByteBuffer;

import java.nio.channels.*;

import java.nio.charset.Charset;

import java.nio.charset.StandardCharsets;

import java.util.*;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

/**

* <h3>summaryday</h3>

* <p></p>

*

* @author : lipengyao

* @date : 2021-09-16 16:50:54

**/

@Slf4j

public class ServerReactor3 {

public static final int workReactorNums = Runtime.getRuntime().availableProcessors() * 2;

public static class WorkReactor {

public static ExecutorService executorService = Executors.newFixedThreadPool(ServerReactor3.workReactorNums * 2);

private Selector selector;

private int reactorIndex;

private int channelCnt;

private Map<SocketChannel, ArrayDeque<String>> unWriteDataMap = new ConcurrentHashMap<>();

private List<SocketChannel> waitRegisterChannels = new ArrayList<>();

private Lock lock = new ReentrantLock();

private int maxItemKeyCnt = 0;

public WorkReactor(int reactorIndex) throws IOException {

this.selector = Selector.open();

this.reactorIndex = reactorIndex;

this.channelCnt = 0;

select();

log.debug("register init channelCnt:{},reactorIndex:{},selectionKey:{}"

, this.channelCnt, this.reactorIndex,this.selector);

}

public void register(SocketChannel socketChannel) {

lock.lock();

try{

waitRegisterChannels.add(socketChannel);

log.debug("register add socket channel waitRegisterChannels:{}",waitRegisterChannels.size());

}finally {

lock.unlock();

}

}

public void registerAllWaitChannels(){

lock.lock();

try{

if (!this.waitRegisterChannels.isEmpty()){

log.debug("registerAllWaitChannels will register begin waitRegisterChannels:{}",waitRegisterChannels.size());

for (int i = 0; i < this.waitRegisterChannels.size(); i++) {

this.interRegister(this.waitRegisterChannels.get(i));

}

this.waitRegisterChannels.clear();

log.debug("registerAllWaitChannels register end waitRegisterChannels:{}",waitRegisterChannels.size());

}

}finally {

lock.unlock();

}

}

public void interRegister(SocketChannel socketChannel){

try {

selector.wakeup();

SelectionKey selectionKey = socketChannel.register(selector, SelectionKey.OP_READ /*| SelectionKey.OP_WRITE*/);

int read = SelectionKey.OP_READ;

this.channelCnt++;

unWriteDataMap.put(socketChannel, new ArrayDeque<String>());

log.debug("register channelCnt:{},reactorIndex:{},selectionKey:{}"

, this.channelCnt, this.reactorIndex, selectionKey);

}catch ( Exception e){

e.printStackTrace();

}

}

public void select() throws ClosedChannelException {

executorService.submit(() -> {

log.debug("begin channelCnt:{},reactorIndex:{}", this.channelCnt, this.reactorIndex);

while (true) {

try {

if (selector.select(10) <= 0) {

// TimeUnit.MILLISECONDS.sleep(10);

// log.debug(" has no event,continue,reactorIndex:{}", this.reactorIndex);

this.registerAllWaitChannels();

continue;

}

log.debug(" get some io event,will handle,reactorIndex:{}", this.reactorIndex);

Set<SelectionKey> selectionKeys = selector.selectedKeys();

Iterator<SelectionKey> selectionKeyIterator = selectionKeys.iterator();

Set<SelectionKey> allKeys = selector.keys();

if (allKeys.size() > maxItemKeyCnt){

maxItemKeyCnt = allKeys.size();

}

log.debug(" get select key--> selectionKeys:{},channelCnt:{},reactorIndex:{},allKeys:{},maxItemKeyCnt:{}",

selectionKeys.size(), this.channelCnt, this.reactorIndex,allKeys.size(),maxItemKeyCnt);

while (selectionKeyIterator.hasNext()) {

SelectionKey t = selectionKeyIterator.next();

selectionKeyIterator.remove();

if (t.isValid() && t.isReadable()) {

SocketChannel socketChannel = (SocketChannel) t.channel();

ByteBuffer byteBuffer = ByteBuffer.allocate(1024);

int count = socketChannel.read(byteBuffer);

log.debug(" read socketHandler:{},count:{}", socketChannel.getRemoteAddress(),count);

if (count < 0) {

log.debug(" read error ,will close channel remote Address:{}"

, socketChannel.getRemoteAddress());

t.cancel();

socketChannel.close();

this.channelCnt--;

continue;

}

byteBuffer.flip();

byte[] inputData = new byte[byteBuffer.limit()];

byteBuffer.get(inputData);

String body = new String(inputData, StandardCharsets.UTF_8);

log.debug(" read ok , ,reactorIndex:{},data:{}", reactorIndex, body);

if (socketChannel.isConnected() && socketChannel.isOpen()) {

byteBuffer.clear();

String response = "server rsp:" + body + "\r\n";

log.debug(" read ok , ,response:{}", response);

// unWriteDataMap.get(socketChannel).addLast(response);

byte[] writeByets = response.getBytes("utf-8");

// wrap the response directly: it can be longer than the 1024-byte read buffer

ByteBuffer responseBuffer = ByteBuffer.wrap(writeByets);

socketChannel.write(responseBuffer);

}

} else if (t.isWritable()) {

SocketChannel socketChannel = (SocketChannel) t.channel();

while (!unWriteDataMap.get(socketChannel).isEmpty()) {

String response = unWriteDataMap.get(socketChannel).pollFirst();

log.debug(" write ok , ,response:{}", response);

byte[] writeByets = response.getBytes("utf-8");

ByteBuffer byteBuffer = ByteBuffer.allocate(1024);

byteBuffer.put(writeByets);

byteBuffer.flip();

socketChannel.write(byteBuffer);

}

}

selectionKeys.remove(t);

}

} catch (IOException e) {

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

});

}

}

@Getter

public static class SocketHandler {

private String name;

private Object channel;

public SocketHandler(String name, Object channel) {

this.name = name;

this.channel = channel;

}

}

public void start() throws IOException {

ExecutorService executorService = Executors.newFixedThreadPool(100);

Selector selector = Selector.open();

ServerSocketChannel serverSocketChannel = ServerSocketChannel.open();

serverSocketChannel.configureBlocking(false);

int port = 7020;

serverSocketChannel.bind(new InetSocketAddress(port));

serverSocketChannel.register(selector, SelectionKey.OP_ACCEPT, new SocketHandler("acceptHandler", serverSocketChannel));

log.debug(" start bind success port:{}", port);

int clientIndex = 0;

WorkReactor[] workReactors = new WorkReactor[workReactorNums];

for (int i = 0; i < workReactorNums; i++) {

workReactors[i] = new WorkReactor(i);

}

while (selector.select() > 0) {

Set<SelectionKey> selectionKeys = selector.selectedKeys();

Iterator<SelectionKey> selectionKeyIterator = selectionKeys.iterator();

while (selectionKeyIterator.hasNext()) {

SelectionKey t = selectionKeyIterator.next();

selectionKeyIterator.remove();

if (t.isAcceptable()) {

ServerSocketChannel serverSocketChannel1 = (ServerSocketChannel) t.channel();

SocketHandler socketHandler = (SocketHandler) t.attachment();

// log.debug(" accept socketHandler:{}", socketHandler.getName());

SocketChannel socketChannel = serverSocketChannel1.accept();

socketChannel.configureBlocking(false);

int reactorNumIndex = clientIndex++ % workReactorNums;

log.debug(" accept new channel remote Address:{},reactorNumIndex:{}"

, socketChannel.getRemoteAddress(), reactorNumIndex);

workReactors[reactorNumIndex].register(socketChannel);

}

}

}

}

public static void main(String[] args) {

ServerReactor3 serverReactor = new ServerReactor3();

try {

serverReactor.start();

} catch (IOException e) {

e.printStackTrace();

}

}

}

1. The accept reactor is a selector that handles all incoming connections. It lives in the start method of the main class, which continuously accepts connections and hands each newly obtained SocketChannel to a work reactor.

2. The work reactors are instances of the WorkReactor class. There are CPU cores * 2 of them (workReactorNums), each with its own selector, and their select loops run on a shared pool of workReactorNums * 2 threads. Client connections are distributed evenly across the work reactors by round robin, so each reactor handles a share of the socket channels.

3. Note: register and select on the same selector should be executed in the same thread. In the JDK implementation analysed below, register synchronizes on publicKeys, which the selecting thread holds while it is inside select, so a register call from another thread can block for as long as select blocks. This is why the accept reactor does not register a newly connected SocketChannel with the work reactor's selector directly: it only adds the channel to a waiting list, and the work reactor's own select thread performs the registration.
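The hand-off described in note 3 can also be written with a concurrent queue plus selector.wakeup(), so that the selecting thread does not have to poll with a timeout. This is a simplified sketch of that idea, not the code of ServerReactor3: the class HandOffReactor and its methods are hypothetical, and a Pipe stands in for a client socket.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.ReadableByteChannel;
import java.nio.channels.SelectableChannel;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class HandOffReactor implements Runnable {
    private final Selector selector;
    private final Queue<SelectableChannel> pending = new ConcurrentLinkedQueue<>();
    private final AtomicInteger bytesRead = new AtomicInteger();
    private final CountDownLatch firstRead = new CountDownLatch(1);
    private volatile boolean running = true;

    HandOffReactor() throws IOException {
        this.selector = Selector.open();
    }

    // Called from any thread: queue the channel and interrupt the blocked select().
    void register(SelectableChannel channel) {
        pending.add(channel);
        selector.wakeup();
    }

    void stop() {
        running = false;
        selector.wakeup();
    }

    @Override
    public void run() {
        try {
            while (running) {
                selector.select();
                // Registration happens here, on the selecting thread only.
                SelectableChannel channel;
                while ((channel = pending.poll()) != null) {
                    channel.register(selector, SelectionKey.OP_READ);
                }
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (key.isValid() && key.isReadable()) {
                        ByteBuffer buffer = ByteBuffer.allocate(64);
                        int n = ((ReadableByteChannel) key.channel()).read(buffer);
                        if (n > 0) {
                            bytesRead.addAndGet(n);
                            firstRead.countDown();
                        }
                    }
                }
            }
            selector.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Demo: hand a pipe's read end to the reactor thread and count the bytes it reads.
    static int demo() {
        try {
            HandOffReactor reactor = new HandOffReactor();
            Thread thread = new Thread(reactor, "work-reactor");
            thread.start();

            Pipe pipe = Pipe.open();
            pipe.source().configureBlocking(false);
            pipe.sink().write(ByteBuffer.wrap(new byte[] {1, 2, 3, 4, 5}));
            reactor.register(pipe.source());

            reactor.firstRead.await(5, TimeUnit.SECONDS);
            reactor.stop();
            thread.join(5000);
            pipe.sink().close();
            pipe.source().close();
            return reactor.bytesRead.get();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

Because wakeup() is remembered if no select is in progress, a channel queued just before the reactor enters select is still picked up on the next loop iteration.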

3, Selector.open initialization process analysis

1. First, Selector.open() is called to create a selector object. NIO programs are generally deployed on Linux, so we look at the Linux implementation in the JDK source (the OpenJDK source for Linux can be downloaded to follow the analysis).

public static Selector open() throws IOException {

return SelectorProvider.provider().openSelector();

}

2. The provider is created by sun.nio.ch.DefaultSelectorProvider.create(). The create method chooses a SelectorProvider according to the operating system name; on Linux it creates sun.nio.ch.EPollSelectorProvider.

public static SelectorProvider create() {

String osName = (String)AccessController.doPrivileged(new GetPropertyAction("os.name"));

if (osName .equals("SunOS")) {

return createProvider("sun.nio.ch.DevPollSelectorProvider");

} else {

return (SelectorProvider)(osName .equals("Linux") ? createProvider("sun.nio.ch.EPollSelectorProvider") : new PollSelectorProvider());

}

}

3. Back in Selector.open(): once the SelectorProvider instance has been obtained, openSelector() is called on it, which on Linux is the openSelector() method of EPollSelectorProvider.

public AbstractSelector openSelector() throws IOException {

return new EPollSelectorImpl(this);

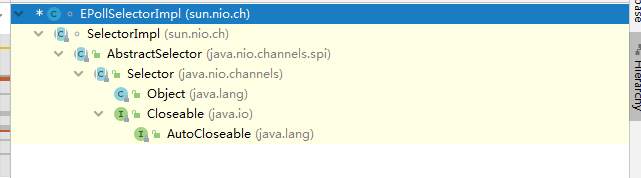

}4.EPollSelectorImpl is the implementation under linux, and the inheritance diagram is as follows
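To check which provider and selector implementation a particular JVM actually picks, the public API is enough. A small sketch, assuming nothing beyond java.nio; the class name ProviderLookup is made up, and the printed class names depend on the operating system and JDK version, so no output is shown.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Selector;
import java.nio.channels.spi.SelectorProvider;

class ProviderLookup {
    // Returns {provider class name, selector class name} for the running JVM.
    static String[] describe() {
        SelectorProvider provider = SelectorProvider.provider();
        try (Selector selector = Selector.open()) {
            return new String[] {
                provider.getClass().getName(),
                selector.getClass().getName()
            };
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Selector.open() delegates to the system-wide default provider.
    static boolean openUsesDefaultProvider() {
        try (Selector selector = Selector.open()) {
            return selector.provider() == SelectorProvider.provider();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String[] names = describe();
        System.out.println("provider: " + names[0]);
        System.out.println("selector: " + names[1]);
    }
}
```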

5. Let's look at what the EPollSelectorImpl constructor does. EPollArrayWrapper encapsulates the three Linux epoll calls used for epoll operations: epoll_create, epoll_ctl and epoll_wait.

EPollSelectorImpl(SelectorProvider sp) throws IOException {

super(sp);

long pipeFds = IOUtil.makePipe(false);

fd0 = (int) (pipeFds >>> 32);

fd1 = (int) pipeFds;

pollWrapper = new EPollArrayWrapper();

pollWrapper.initInterrupt(fd0, fd1);

fdToKey = new HashMap<>();

}

The constructor creates a pipe with two descriptors, fd0 (the read end) and fd1 (the write end), and calls pollWrapper.initInterrupt, which adds fd0 to the set of descriptors monitored by epoll. When wakeup is called, a byte is written to fd1, fd0 becomes readable, and a thread blocked in select returns immediately. It can then leave its loop or register a new event.

void initInterrupt(int fd0, int fd1) {

outgoingInterruptFD = fd1;

incomingInterruptFD = fd0;

epollCtl(epfd, EPOLL_CTL_ADD, fd0, EPOLLIN);

}

6. Constructor of the parent class SelectorImpl. In the JDK, an I/O event registration on a Selector is represented by a SelectionKey, which records the events a Channel is interested in, such as read, write, connect and accept. The constructor initializes keys and selectedKeys: the former stores every registered key, the latter the keys whose events are ready. publicKeys and publicSelectedKeys are access-controlled views of these two sets (publicKeys is unmodifiable, publicSelectedKeys allows removal but not insertion), so there are still only two underlying set objects on the heap. In JDK 8 both are HashSet instances; the listing below, which appears to come from a newer JDK, uses ConcurrentHashMap.newKeySet() for keys. The following is the constructor of the parent class SelectorImpl:

protected SelectorImpl(SelectorProvider sp) {

super(sp);

keys = ConcurrentHashMap.newKeySet();

selectedKeys = new HashSet<>();

publicKeys = Collections.unmodifiableSet(keys);

publicSelectedKeys = Util.ungrowableSet(selectedKeys);

}

7. EPollArrayWrapper creates the epoll file descriptor and encapsulates the epoll system calls on Linux. It also holds the result of each selection operation, that is, the epoll_event array filled in by epoll_wait. epfd is the handle returned by epoll_create. pollArray is the epoll_event array passed as the events argument of int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout); it receives the I/O events, and the kernel writes into this native object.

EPollArrayWrapper() throws IOException {

// creates the epoll file descriptor

epfd = epollCreate();

// the epoll_event array passed to epoll_wait

int allocationSize = NUM_EPOLLEVENTS * SIZE_EPOLLEVENT;

pollArray = new AllocatedNativeObject(allocationSize, true);

pollArrayAddress = pollArray.address();

// eventHigh needed when using file descriptors > 64k

if (OPEN_MAX > MAX_UPDATE_ARRAY_SIZE)

eventsHigh = new HashMap<>();

}

8. eventsLow and eventsHigh store the events that each registered socket FD is interested in. eventsLow uses the FD as the array index and the events of interest as the value. eventsHigh uses the FD as the key and the events of interest as the value. An FD smaller than 64K (MAX_UPDATE_ARRAY_SIZE) is stored in the low array, otherwise in the high map.

private final byte[] eventsLow = new byte[MAX_UPDATE_ARRAY_SIZE];

private Map<Integer,Byte> eventsHigh;
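The array-plus-map layout from step 8 can be modelled in a few lines. This is an illustrative sketch only, not the JDK code: the class EventTable and its methods are hypothetical, and the array size is a constructor parameter so the boundary can be tried with small numbers.

```java
import java.util.HashMap;
import java.util.Map;

class EventTable {
    private final byte[] low;                                // indexed directly by fd
    private final Map<Integer, Byte> high = new HashMap<>(); // fd -> events, for large fds

    EventTable(int lowSize) {
        this.low = new byte[lowSize];
    }

    // Small fds use the array slot, larger fds fall back to the map.
    void set(int fd, byte events) {
        if (fd < low.length) {
            low[fd] = events;
        } else {
            high.put(fd, events);
        }
    }

    // Returns the stored events, or 0 when nothing was stored for this fd.
    byte get(int fd) {
        if (fd < low.length) {
            return low[fd];
        }
        Byte events = high.get(fd);
        if (events == null) {
            return 0;
        }
        return events;
    }

    boolean storedInArray(int fd) {
        return fd < low.length;
    }
}
```

The array gives constant-time lookups without boxing for the common case of small descriptors, while the map avoids allocating a huge array for the rare large ones.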

9. There are several more fields. updateCount is the number of FDs with pending registration changes, and updateDescriptors is the list of those FDs. registered records whether an FD has already been registered with the epoll handle. It is consulted when pending changes are applied before epoll_wait: the first registration of an FD calls epoll_ctl with EPOLL_CTL_ADD, later changes call epoll_ctl with EPOLL_CTL_MOD, and deregistration calls epoll_ctl with EPOLL_CTL_DEL.

// number of file descriptors with registration changes pending

private int updateCount;

// file descriptors with registration changes pending

private int[] updateDescriptors = new int[INITIAL_PENDING_UPDATE_SIZE];

// Used by release and updateRegistrations to track whether a file

// descriptor is registered with epoll.

private final BitSet registered = new BitSet();
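The role of the registered bit set in choosing between ADD, MOD and DEL can be sketched as follows. This is a simplified model of the rule described in step 9, not the JDK's updateRegistrations method; RegistrationTracker, Op, onInterestChange and onRelease are hypothetical names.

```java
import java.util.BitSet;

class RegistrationTracker {
    // The epoll_ctl operation that would be issued for a descriptor.
    enum Op { ADD, MOD, DEL, NONE }

    private final BitSet registered = new BitSet();

    // First change for an fd is an ADD; any later change is a MOD.
    Op onInterestChange(int fd) {
        if (registered.get(fd)) {
            return Op.MOD;
        }
        registered.set(fd);
        return Op.ADD;
    }

    // Releasing an fd issues a DEL only if it was registered before.
    Op onRelease(int fd) {
        if (!registered.get(fd)) {
            return Op.NONE;
        }
        registered.clear(fd);
        return Op.DEL;
    }
}
```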

4, channel.register registration process analysis

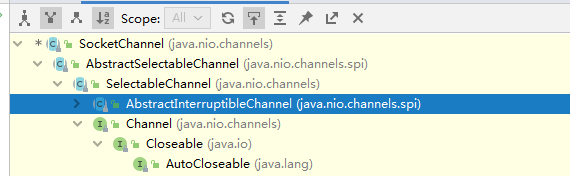

1. channel.register first calls the register method of the base class java.nio.channels.spi.AbstractSelectableChannel:

- If the channel is already registered with the selector, the events and attachment are simply updated on the existing key

- Otherwise, the registration is carried out by the selector, through the register method of SelectorImpl

- addKey(k) adds the newly registered key to the keys[] member variable of the SocketChannel

public abstract class AbstractSelectableChannel extends SelectableChannel {

private final SelectorProvider provider;

private SelectionKey[] keys = null;

public final SelectionKey register(Selector var1, int var2, Object var3) throws ClosedChannelException {

synchronized(this.regLock) {

if (!this.isOpen()) {

throw new ClosedChannelException();

} else {

SelectionKey var5 = this.findKey(var1);

if (var5 != null) {

var5.interestOps(var2);

var5.attach(var3);

}

if (var5 == null) {

synchronized(this.keyLock) {

var5 = ((AbstractSelector)var1).register(this, var2, var3);

this.addKey(var5);

}

}

return var5;

}

}

}

2. Next, the selector's register method is called, which is implemented in the base class SelectorImpl. It creates a SelectionKeyImpl that holds the SocketChannel and the current selector as member variables; any object can be attached as additional data.

protected final SelectionKey register(AbstractSelectableChannel var1, int var2, Object var3) {

if (!(var1 instanceof SelChImpl)) {

throw new IllegalSelectorException();

} else {

SelectionKeyImpl var4 = new SelectionKeyImpl((SelChImpl)var1, this);

var4.attach(var3);

synchronized(this.publicKeys) {

this.implRegister(var4);

}

var4.interestOps(var2);

return var4;

}

}

3. EPollSelectorImpl.implRegister puts the fd (file descriptor) of the channel and the corresponding SelectionKey into fdToKey, a map that stores the mapping between fd and key.

It adds the fd of the channel to the EPollArrayWrapper and forces the initial events of the fd to 0, because a previously cancelled registration of the same fd may have left stale events behind.

It puts the SelectionKey into the keys set.

protected void implRegister(SelectionKeyImpl ski) {

if (closed)

throw new ClosedSelectorException();

SelChImpl ch = ski.channel;

int fd = Integer.valueOf(ch.getFDVal());

fdToKey.put(fd, ski);

pollWrapper.add(fd);

keys.add(ski);

}

ch.getFDVal() returns the FD of the SocketChannel, and keys is the set of all SelectionKey objects registered with the selector.
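The bookkeeping done during registration is visible through the public API. The sketch below is an illustration with made-up names (KeyBookkeeping, check); it registers an unconnected SocketChannel, which is enough to create a key, and then inspects the selector's key set.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.SocketChannel;

class KeyBookkeeping {
    // Returns {inKeySet, sameKeyFromChannel, pointsBack, reRegisterSameKey, keySetReadOnly}.
    static boolean[] check() {
        try (Selector selector = Selector.open();
             SocketChannel channel = SocketChannel.open()) {
            channel.configureBlocking(false);
            SelectionKey key = channel.register(selector, SelectionKey.OP_READ, "attachment");

            // The key is now part of the selector's key set and known to the channel.
            boolean inKeySet = selector.keys().contains(key);
            boolean sameKeyFromChannel = channel.keyFor(selector) == key;
            boolean pointsBack = key.channel() == channel && key.selector() == selector;

            // Registering again with the same selector updates and returns the existing key.
            boolean reRegisterSameKey =
                channel.register(selector, SelectionKey.OP_READ | SelectionKey.OP_WRITE) == key;

            // keys() is the read-only public view of the internal key set.
            boolean keySetReadOnly = false;
            try {
                selector.keys().remove(key);
            } catch (UnsupportedOperationException expected) {
                keySetReadOnly = true;
            }
            return new boolean[] {
                inKeySet, sameKeyFromChannel, pointsBack, reRegisterSameKey, keySetReadOnly
            };
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```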

4. The add method of EPollArrayWrapper calls setUpdateEvents internally with the events argument set to 0, the initial value. setUpdateEvents uses fd as the array index and the event type as the value. If fd is 64 * 1024 or greater, fd and the event type are stored in eventsHigh instead. These are the eventsLow array and the eventsHigh HashMap, the member variables of EPollArrayWrapper described above.

void add(int fd) {

// force the initial update events to 0 as it may be KILLED by a

// previous registration.

synchronized (updateLock) {

assert !registered.get(fd);

setUpdateEvents(fd, (byte)0, true);

}

}

private void setUpdateEvents(int fd, byte events, boolean force) {

if (fd < MAX_UPDATE_ARRAY_SIZE) {

if ((eventsLow[fd] != KILLED) || force) {

eventsLow[fd] = events;

}

} else {

Integer key = Integer.valueOf(fd);

if (!isEventsHighKilled(key) || force) {

eventsHigh.put(key, Byte.valueOf(events));

}

}

}

Note that add first asserts that this FD is not already present in registered. If it is, the assertion fails (when assertions are enabled) and the FD cannot be added again.

5. Back in the SelectorImpl.register method from step 2: it calls SelectionKeyImpl.interestOps(var2) to set the events, which in turn calls SelectionKeyImpl.nioInterestOps.

public SelectionKey nioInterestOps(int var1) {

if ((var1 & ~this.channel().validOps()) != 0) {

throw new IllegalArgumentException();

} else {

this.channel.translateAndSetInterestOps(var1, this);

this.interestOps = var1;

return this;

}

}

6. The channel above is the SocketChannel, and var1 is the set of events of interest. sun.nio.ch.SocketChannelImpl.translateAndSetInterestOps converts them and sets the events of interest.

public void translateAndSetInterestOps(int var1, SelectionKeyImpl var2) {

int var3 = 0;

if ((var1 & 1) != 0) {

var3 |= Net.POLLIN;

}

if ((var1 & 4) != 0) {

var3 |= Net.POLLOUT;

}

if ((var1 & 8) != 0) {

var3 |= Net.POLLCONN;

}

var2.selector.putEventOps(var2, var3);

}

The constants 1, 4 and 8 are SelectionKey.OP_READ, OP_WRITE and OP_CONNECT respectively; the method converts these three into the corresponding Net poll event definitions.
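The numeric values and the validOps check from steps 5 and 6 can be confirmed from application code. A short sketch with an illustrative class name (InterestOpsDemo); it relies only on the documented behaviour that registering with an operation outside validOps() throws IllegalArgumentException.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.SocketChannel;

class InterestOpsDemo {
    // The three operations that translateAndSetInterestOps maps for a SocketChannel.
    static int[] opValues() {
        return new int[] {
            SelectionKey.OP_READ, SelectionKey.OP_WRITE, SelectionKey.OP_CONNECT
        };
    }

    // A SocketChannel supports read, write and connect, but not accept.
    static int socketValidOps() {
        try (SocketChannel channel = SocketChannel.open()) {
            return channel.validOps();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Registering a SocketChannel for OP_ACCEPT fails the (ops & ~validOps()) check.
    static boolean rejectsAccept() {
        try (Selector selector = Selector.open();
             SocketChannel channel = SocketChannel.open()) {
            channel.configureBlocking(false);
            try {
                channel.register(selector, SelectionKey.OP_ACCEPT);
                return false;
            } catch (IllegalArgumentException expected) {
                return true;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```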

7. Next, EPollSelectorImpl.putEventOps is called to configure the events.

public void putEventOps(SelectionKeyImpl ski, int ops) {

if (closed)

throw new ClosedSelectorException();

SelChImpl ch = ski.channel;

pollWrapper.setInterest(ch.getFDVal(), ops);

}

8. Then setInterest of EPollArrayWrapper is called to set the events of interest for the FD. It first checks whether the updateDescriptors array that stores FDs is full; if so, it grows the array by 64 entries (INITIAL_PENDING_UPDATE_SIZE). It then saves the FD in the array and calls setUpdateEvents, analysed above, to record the events for the FD.

void setInterest(int fd, int mask) {

synchronized (updateLock) {

// record the file descriptor and events

int oldCapacity = updateDescriptors.length;

if (updateCount == oldCapacity) {

int newCapacity = oldCapacity + INITIAL_PENDING_UPDATE_SIZE;

int[] newDescriptors = new int[newCapacity];

System.arraycopy(updateDescriptors, 0, newDescriptors, 0, oldCapacity);

updateDescriptors = newDescriptors;

}

updateDescriptors[updateCount++] = fd;

// events are stored as bytes for efficiency reasons

byte b = (byte)mask;

assert (b == mask) && (b != KILLED);

setUpdateEvents(fd, b, false);

}

}

setUpdateEvents stores the events of interest for the file descriptor in eventsLow or eventsHigh of the EPollArrayWrapper object, where they are used later when the underlying epoll_wait is performed. In addition, the registration path sets the interestOps field of the SelectionKey, which is the value application programmers see.

The FD whose interest has changed is saved in updateDescriptors.
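The fixed-increment growth of the pending-update array in setInterest can be reproduced in isolation. The class below is a hypothetical model (PendingUpdates is not a JDK class), with the increment passed in as a parameter; the text above gives 64 as the value used by EPollArrayWrapper.

```java
import java.util.Arrays;

class PendingUpdates {
    private final int increment;
    private int[] descriptors;
    private int count;

    PendingUpdates(int increment) {
        this.increment = increment;
        this.descriptors = new int[increment];
    }

    // Appends fd, growing the array by a fixed increment when it is full.
    void add(int fd) {
        if (count == descriptors.length) {
            descriptors = Arrays.copyOf(descriptors, descriptors.length + increment);
        }
        descriptors[count++] = fd;
    }

    int count() {
        return count;
    }

    int capacity() {
        return descriptors.length;
    }

    int get(int index) {
        return descriptors[index];
    }
}
```

Growing by a constant rather than doubling keeps the array small when few registrations change between two select calls, which is the expected case here.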

5, Analysis of selector.select registration process

1.