Note: this article comes from "Write-Ahead Log" on Martin Fowler's site; the author works at Thoughtworks. It describes the write-ahead log, a technique commonly used in modern distributed databases.

Write-ahead log

Provide a durability guarantee, without having to flush the storage data structures to disk, by persisting every state change as a command to an append-only log.

Also known as: operation log.

Problem

Strong durability is required even when the servers storing the data fail. Once a server agrees to perform an operation, it should do so even if it fails and restarts, losing all of its in-memory state.

Solution

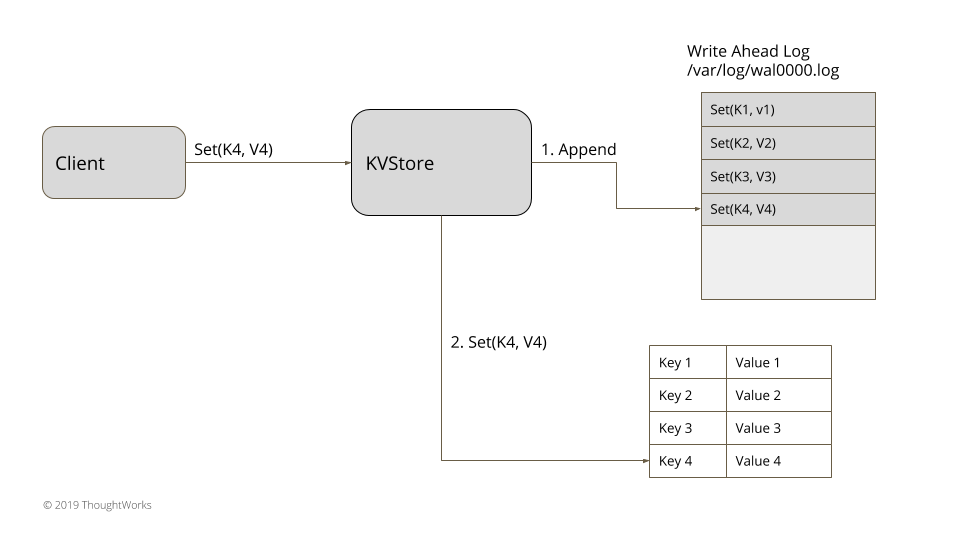

Figure 1: Write Ahead Log

Store each state change as a command in a file on disk. A single log is maintained for each server process and is appended to sequentially. A single, sequentially appended log simplifies handling the log on restart and during subsequent online operation, when new commands are appended. Each log entry is given a unique identifier. The unique identifier makes it possible to implement other operations on the log, such as splitting it into a Segmented Log or cleaning it up with a Low-Water Mark. Log updates can be implemented with a Singular Update Queue.
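To make the ordering guarantee concrete, here is a minimal sketch in Java. It assumes a simplified take on Singular Update Queue built on `Executors.newSingleThreadExecutor()`. The class `SequentialLog` and its methods are hypothetical, and entries are kept in memory rather than in a file.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch (not the article's code): a single writer thread owns the log,
// so entry ids are handed out in exactly the order entries are appended.
class SequentialLog {
    private final ExecutorService writer = Executors.newSingleThreadExecutor();
    // Touched only from the writer thread, so no locks are needed.
    private final List<byte[]> entries = new ArrayList<>();
    private long lastEntryId = 0;

    // May be called from any thread; blocks until the writer has appended the entry.
    long append(byte[] data) {
        return await(writer.submit(() -> {
            entries.add(data);    // a real WAL would append to a file here
            return ++lastEntryId; // unique, monotonically increasing id
        }));
    }

    // Reads also go through the writer thread so they see every earlier append.
    byte[] read(long entryId) {
        return await(writer.submit(() -> entries.get((int) (entryId - 1))));
    }

    void close() {
        writer.shutdown(); // lets queued tasks finish, then stops the thread
    }

    private static <T> T await(Future<T> future) {
        try {
            return future.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        } catch (ExecutionException e) {
            throw new RuntimeException(e.getCause());
        }
    }
}
```

Because every append runs on the same thread, concurrent callers never receive the same id, and id order always matches append order.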

The typical log entry structure is as follows:

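class WALEntry...

```java
private final Long entryId;        // unique identifier of the entry
private final byte[] data;         // the serialized command
private final EntryType entryType; // kind of entry
private long timeStamp;            // when the entry was written
```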

The file is read on every restart, and the state is recovered by replaying all the log entries.
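A minimal sketch of the file side, assuming a hypothetical record layout of `[entryId][length][data]` and a hypothetical `FileLog` class (not the `WriteAheadLog` used in the article). It appends records in order and, when reopened, reads them all back so that numbering continues after a restart. It deliberately does not force data to disk; that concern is covered under implementation considerations.

```java
import java.io.*;
import java.nio.file.*;
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal file-backed log; each record is [entryId:long][length:int][data].
class FileLog {
    static final class Entry {
        final long id;
        final byte[] data;
        Entry(long id, byte[] data) { this.id = id; this.data = data; }
    }

    private final Path path;
    private long lastEntryId = 0;

    FileLog(Path path) {
        this.path = path;
        // On (re)start, replay the file so new ids continue the existing sequence.
        for (Entry e : readAll()) lastEntryId = e.id;
    }

    long writeEntry(byte[] data) {
        long id = lastEntryId + 1;
        try (DataOutputStream out = new DataOutputStream(new BufferedOutputStream(
                new FileOutputStream(path.toFile(), true)))) { // true = append mode
            out.writeLong(id);
            out.writeInt(data.length);
            out.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        lastEntryId = id;
        return id;
    }

    List<Entry> readAll() {
        List<Entry> entries = new ArrayList<>();
        if (!Files.exists(path)) return entries;
        try (DataInputStream in = new DataInputStream(new BufferedInputStream(
                new FileInputStream(path.toFile())))) {
            while (true) {
                long id;
                try {
                    id = in.readLong();
                } catch (EOFException eof) {
                    break; // clean end of the log
                }
                // A torn final record would surface here as an exception.
                byte[] data = new byte[in.readInt()];
                in.readFully(data);
                entries.add(new Entry(id, data));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return entries;
    }
}
```

Opening the file for every write keeps the sketch short; a real log would keep the stream open.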

Consider a simple in-memory key-value store:

class KVStore...

```java
private Map<String, String> kv = new HashMap<>();

public String get(String key) {
    return kv.get(key);
}

public void put(String key, String value) {
    appendLog(key, value); // write the command to the log first...
    kv.put(key, value);    // ...and only then update the in-memory map
}

private Long appendLog(String key, String value) {
    return wal.writeEntry(new SetValueCommand(key, value).serialize());
}
```

The put operation is represented as a command, which is serialized and stored in the log before the in-memory hash map is updated.

class SetValueCommand...

```java
final String key;
final String value;
final String attachLease;

public SetValueCommand(String key, String value) {
    this(key, value, "");
}

public SetValueCommand(String key, String value, String attachLease) {
    this.key = key;
    this.value = value;
    this.attachLease = attachLease;
}

@Override
public void serialize(DataOutputStream os) throws IOException {
    os.writeInt(Command.SetValueType); // type tag identifying the command
    os.writeUTF(key);
    os.writeUTF(value);
    os.writeUTF(attachLease);
}

public static SetValueCommand deserialize(InputStream is) {
    try {
        DataInputStream dataInputStream = new DataInputStream(is);
        // Java evaluates arguments left to right, so the fields are read in the
        // order they were written: key, value, attachLease.
        return new SetValueCommand(dataInputStream.readUTF(), dataInputStream.readUTF(), dataInputStream.readUTF());
    } catch (IOException e) {
        throw new RuntimeException(e);
    }
}
```

This ensures that once the put method returns successfully, even if the process holding the KVStore crashes, its state can be restored by reading the log file at startup.

class KVStore...

```java
public KVStore(Config config) {
    this.config = config;
    this.wal = WriteAheadLog.openWAL(config);
    this.applyLog(); // rebuild the in-memory map by replaying the log
}

public void applyLog() {
    List<WALEntry> walEntries = wal.readAll();
    applyEntries(walEntries);
}

private void applyEntries(List<WALEntry> walEntries) {
    for (WALEntry walEntry : walEntries) {
        Command command = deserialize(walEntry);
        if (command instanceof SetValueCommand) {
            SetValueCommand setValueCommand = (SetValueCommand) command;
            kv.put(setValueCommand.key, setValueCommand.value);
        }
    }
}

public void initialiseFromSnapshot(SnapShot snapShot) {
    kv.putAll(snapShot.deserializeState());
}
```

Implementation considerations

There are some important considerations when implementing a write-ahead log. The most important is to make sure that entries written to the log file are actually persisted on the physical media. The file-handling libraries of all programming languages provide a mechanism to force the operating system to "flush" file changes to physical media. Using this flushing mechanism involves trade-offs.
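In Java, one such mechanism is `FileChannel.force`. The sketch below, built around a hypothetical `DurableAppender` class, writes a record and forces it to the storage device before returning. This is the "flush on every write" end of the trade-off.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.*;

// Hypothetical sketch: every append is forced to the storage device before returning.
class DurableAppender implements AutoCloseable {
    private final FileChannel channel;

    DurableAppender(Path path) {
        try {
            channel = FileChannel.open(path, StandardOpenOption.CREATE,
                    StandardOpenOption.WRITE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    void append(byte[] record) {
        try {
            ByteBuffer buf = ByteBuffer.wrap(record);
            while (buf.hasRemaining()) {
                channel.write(buf); // a single write() may write only part of the buffer
            }
            // Ask the OS to write the data through to the storage device;
            // true also forces file metadata, erring on the side of safety.
            channel.force(true);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    @Override
    public void close() {
        try {
            channel.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```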

Flushing every log write to disk gives a strong durability guarantee (which is the main reason to have a log in the first place), but it severely limits performance and can quickly become a bottleneck. Delaying the flush, or doing it asynchronously, improves performance. However, if the server crashes before the entries are flushed, those entries can be lost from the log. Most implementations use techniques such as batching to limit the impact of the flush operation.
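Batching, often called group commit, can be sketched as follows. The hypothetical `BatchingLog` buffers records, and a single `flushBatch()` call writes them all and issues one `force`, so the cost of the sync is shared by the whole batch. Each caller receives a `CompletableFuture` that completes only after its batch is durable. In a real system a background thread would call `flushBatch()` on a size or time threshold; that part is left out here, and the failure policy shown is an assumption.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.*;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Hypothetical group-commit sketch: many records, one force() per batch.
class BatchingLog implements AutoCloseable {
    private final FileChannel channel;
    private final List<byte[]> pending = new ArrayList<>();
    private final List<CompletableFuture<Void>> waiters = new ArrayList<>();
    private int forceCount = 0; // number of syncs issued, kept for illustration

    BatchingLog(Path path) {
        try {
            channel = FileChannel.open(path, StandardOpenOption.CREATE,
                    StandardOpenOption.WRITE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // The future completes only once the record is on disk;
    // a server should not acknowledge the client before that.
    synchronized CompletableFuture<Void> enqueue(byte[] record) {
        CompletableFuture<Void> durable = new CompletableFuture<>();
        pending.add(record);
        waiters.add(durable);
        return durable;
    }

    // Writes every pending record, then syncs once for the whole batch.
    synchronized int flushBatch() {
        if (pending.isEmpty()) return 0;
        int batchSize = pending.size();
        try {
            for (byte[] record : pending) {
                ByteBuffer buf = ByteBuffer.wrap(record);
                while (buf.hasRemaining()) channel.write(buf);
            }
            channel.force(true);
            forceCount++;
            waiters.forEach(w -> w.complete(null));
        } catch (IOException e) {
            // Assumed policy: the whole batch fails if the write or sync fails.
            waiters.forEach(w -> w.completeExceptionally(e));
            throw new UncheckedIOException(e);
        } finally {
            pending.clear();
            waiters.clear();
        }
        return batchSize;
    }

    synchronized int forceCount() { return forceCount; }

    @Override
    public synchronized void close() {
        try {
            channel.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Larger batches mean fewer syncs but a longer wait before each caller is acknowledged, which is the same durability-versus-latency trade-off described above.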

Another consideration is making sure that corrupted log files are detected while reading the log. To handle this, log entries are generally written with a CRC, which is then validated when the file is read.
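A sketch of CRC-protected entries using `java.util.zip.CRC32`. The record layout `[crc][length][data]` and the `CrcRecord` helper are assumptions for illustration. The point is that the checksum is computed on write and recomputed on read, and any mismatch or truncation marks the entry as corrupted.

```java
import java.io.*;
import java.util.zip.CRC32;

// Hypothetical record format: [crc:long][length:int][data]; the CRC covers the data bytes.
class CrcRecord {
    static long checksum(byte[] data) {
        CRC32 crc = new CRC32();
        crc.update(data, 0, data.length);
        return crc.getValue();
    }

    static byte[] encode(byte[] data) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeLong(checksum(data));
            out.writeInt(data.length);
            out.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory stream
        }
        return bytes.toByteArray();
    }

    // Returns the payload, or throws if the record is damaged.
    static byte[] decode(byte[] record) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(record))) {
            long expected = in.readLong();
            int length = in.readInt();
            if (length < 0 || length > record.length) {
                throw new IllegalStateException("corrupted log entry: bad length");
            }
            byte[] data = new byte[length];
            in.readFully(data);
            if (checksum(data) != expected) {
                throw new IllegalStateException("corrupted log entry: CRC mismatch");
            }
            return data;
        } catch (EOFException e) {
            throw new IllegalStateException("corrupted log entry: truncated", e);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```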

A single log file can become difficult to manage and can quickly consume all the storage. To handle this, techniques such as Segmented Log and Low-Water Mark are used.
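The two techniques combine roughly as follows. The log is split into segments, each covering a contiguous range of entry ids. Once a low-water mark is known (for example, because everything below it is captured in a snapshot), whole segments below it can be deleted. `SegmentedLogModel` is hypothetical and tracks id ranges in memory instead of actual segment files.

```java
import java.util.Iterator;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of a segmented log: each segment is identified by its first
// (base) entry id and covers a contiguous range of ids up to lastId.
class SegmentedLogModel {
    private final TreeMap<Long, Long> segments = new TreeMap<>(); // baseId -> lastId

    void addSegment(long baseId, long lastId) {
        segments.put(baseId, lastId);
    }

    // Drops every segment whose entries all lie below the low-water mark.
    // In a real log this would delete the corresponding segment files.
    int cleanUpTo(long lowWaterMark) {
        int removed = 0;
        Iterator<Map.Entry<Long, Long>> it = segments.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<Long, Long> segment = it.next();
            if (segment.getValue() < lowWaterMark) {
                it.remove();
                removed++;
            } else {
                break; // segments are ordered by id, so the rest are newer
            }
        }
        return removed;
    }

    Long firstRetainedId() {
        return segments.isEmpty() ? null : segments.firstKey();
    }
}
```

Only whole segments are removed; a segment that straddles the low-water mark is kept until a later clean-up.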

The write-ahead log is append-only. Because of this, when client communication fails and the client retries, the log can contain duplicate entries. When log entries are applied, duplicates must be ignored. If the final state is something like a HashMap, where updates to the same key are idempotent, no special mechanism is needed. Otherwise, each request must be marked with a unique identifier so that duplicates can be detected.
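For the non-idempotent case, one common approach is to tag each request with a client id and a per-client request number, and to remember the last number applied for each client. The sketch below assumes each client has at most one outstanding request, so its request numbers reach the log in increasing order. `DedupingApplier` and the counter it updates are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: duplicates are detected by (clientId, requestId).
// Assumes one outstanding request per client, so request ids arrive in increasing order.
class DedupingApplier {
    private final Map<String, Long> lastAppliedRequest = new HashMap<>();
    private long counter = 0; // example of non-idempotent state

    // Returns false (and changes nothing) if this request was already applied.
    boolean apply(String clientId, long requestId, long delta) {
        Long last = lastAppliedRequest.get(clientId);
        if (last != null && requestId <= last) {
            return false; // duplicate entry left in the log by a retry
        }
        counter += delta;
        lastAppliedRequest.put(clientId, requestId);
        return true;
    }

    long counter() {
        return counter;
    }
}
```

A counter is used because adding the same delta twice is visibly wrong, unlike a repeated `put` of the same key and value into a `HashMap`.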

Examples

- The log implementations in consensus-based systems such as ZooKeeper and Raft are similar to a write-ahead log

- The storage implementation in Kafka follows a structure similar to a database commit log

- All databases, including NoSQL databases like Cassandra, use the write-ahead log technique to guarantee durability