5. Optimize program performance-|

Capabilities and limitations of optimizing compilers

void twiddle1(long *xp,long *yp){

*xp += *yp;

*xp += *yp;

}

void twiddle2(long *xp,long *yp){

*xp += 2* *yp;

}

In the two sections of code on the last two pages, the function twiddle2 is more efficient because it only requires three memory references (read * xp, read * yp, write * xp)

twiddle1 takes six times

(2 reads * xp, 2 reads * yp, 2 writes * xp)

If xp=yp, the function twidle1 actually increases the value of xp by four times, while the function twidle2 increases the value of xp by three times

Since the compiler does not know whether xp and yp may be equal, twiddle2 cannot be used as an optimized version of twiddle1

Effect of function call on Optimization

Consider the following code

long f();

long func1(){

return f()+f()+f()+f();

}

long func2(){

return 4*f();

}

Static RAM(SRAM)

Regardless of the specific content of function f, func2 calls f only once, while func1 calls f four times. But if you consider from function f as follows

long counter=0;

long f(){

return counter++;

}

For such an f, func1 returns 6 (0 + 1 + 2 + 3) and func2 returns 0

In this case, the compiler cannot judge

Representation of program performance

For a program, if we record the data scale of the program and the corresponding clock cycle required for operation, and fit these points by the least square method, we will get an expression like y=a+bx, where y is the clock cycle and x is the data scale. When the data scale is large, the running time is mainly determined by the linear factor b. at this time, We use b as a measure of program performance, which is called the number of cycles per element

For ease of illustration, the following structure is stated first

typedef struct{

long len;

data_t *data;

}vec_rec,*vec_ptr

This statement uses data_t to represent the data type of the base element

Consider the following code first

void combine1(vec_ptr v,data_t *dest){

long i;

*dest =IDENT;

for(i=0;i<vec_length(v);i++){

data_t val;

get_vec_element(v,i,&val);

*dest = *dest OP val;

}

}

Traditional DRAM

The VEC function is called every time the loop body executes_ Length, but the length of the array is constant, so VEC can be considered_ Length moves out of the loop to improve efficiency

void combine2(vec_ptr v,data_t *dest){

long i;

long length=vec_length(v);//vec length repeat call

*dest = IDENT;

for(i=0;i<length;i++){

data_t val;

get_vec_element(v,i,&val);

*dest = *dest OP val;

}

}

Reduce procedure calls

data_t *get_vec_start(vec_ptr v){

return v->data;

}

void combine3(vec_ptr v,data_t *dest){

long i;

long length = vec_length(v);

data_t *data = get_vec_start(v);

*dest =IDENT;

for(i=0;i<length;i++){

*dest = *dest OP data[i];

}

}

In the code on the previous page, we eliminated all calls in the loop body, but in fact, such a change will not improve the performance. In the case of integer summation, it will also reduce the performance, because other operations in the inner loop form a bottleneck

Eliminate unnecessary memory references

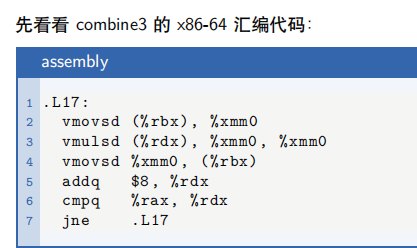

Assembly code of combine3

As can be seen from the assembly code above, during each iteration, the value of the cumulative variable must be read out from memory and then written to memory. Such reading and writing is very wasteful and can be eliminated

void combine4(vec_ptr v, data_t *dest){

long i;

long length = vec_length(v);

data_t *data = get_vec_start(v);

data_t acc = IDENT;

for(i=0;i<length;i++){

acc = acc OP data[i];

}

*dest = acc;

}

The recent Intel processor is superscalar, which means that it can perform multiple operations in each clock cycle. In addition, it is out of order, which means that the order of instruction execution is not necessarily the same as that in the machine level

Such a design will enable the processor to achieve a higher degree of parallelism. For example, when executing programs with branch structure, the processor will use branch prediction technology to predict whether to select a branch and predict the target address of the branch

In addition, there is a speculative execution technology, which means that the processor will perform operations after the branch before the branch. If the prediction is wrong, the processor will recharge the state to the state of the branch point

Loop expansion

The so-called loop expansion refers to reducing the number of iterations of the loop by increasing the number of elements calculated in each iteration. Consider the following procedure

void psum1(float a[],float p[],long n){

long i;

p[0] = a[0];

for (i=1;i<n;i++){

p[i] = p[i-1]+a[i];

}

}

By loop unrolling psum1, the number of iterations can be halved

void psum2(float a[],float p[],long n){

long i;

p[0]=a[0];

for(i=0;i<n;i=n-1;i+=2){

float mid_val = p[i-1]+a[i];

p[i] = mid_val;

p[i+1] = mid_val+a[i+1];

}

if(i<n){

p[i] = p[i-1]+a[i];

}

}

register spilling

For loop unrolling, it is natural to consider the following questions: whether the more times of unrolling, the greater the performance improvement. In fact, the loop expansion needs to maintain multiple variables. Once the expansion times are too many and there are not enough registers to save the variables, the variables need to be saved to memory, which increases the memory access time consumption. Even in an x86-64 architecture with enough registers, the loop is likely to reach the throughput limit before register overflow, This prevents continuous performance improvement