Deep learning - PaddleOCR environment installation

PaddleOCR environment installation

Official documentation: https://gitee.com/paddlepaddle/PaddleOCR/blob/develop/doc/doc_ch/installation.md

Note: the installation below works, but it installs an old version (PaddlePaddle 2.0.0b0 and the PaddleOCR v1.1 models).

Quick installation

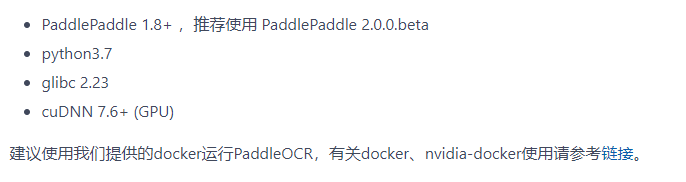

- According to the documentation, we need the following environment:

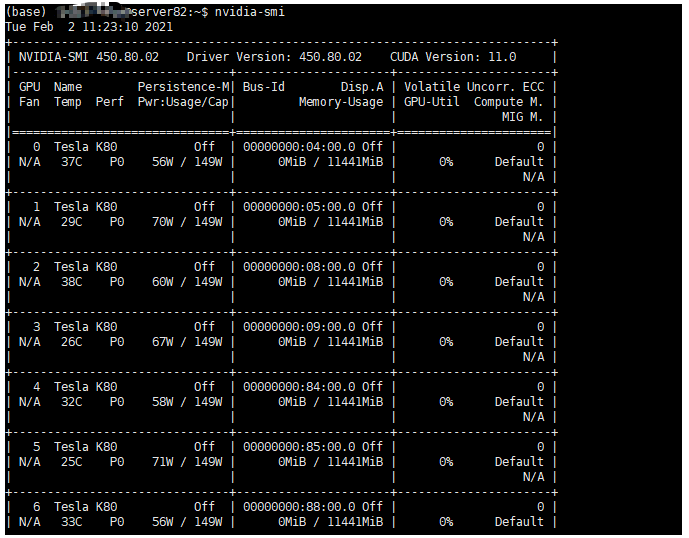

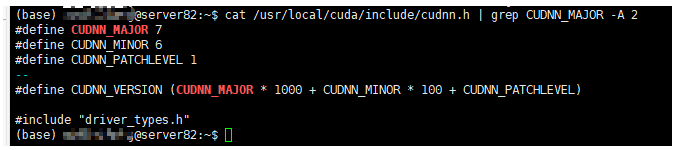

- Docker (installation: https://lexiaoyuan.blog.csdn.net/article/details/118053600) and NVIDIA Docker (installation: https://lexiaoyuan.blog.csdn.net/article/details/118054085) are already installed, so let's check our CUDA and cuDNN versions:

```shell
nvidia-smi
cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2
```

The screenshots below show CUDA 11.0 and cuDNN 7.6.1, so there is no problem. Let's start the installation.

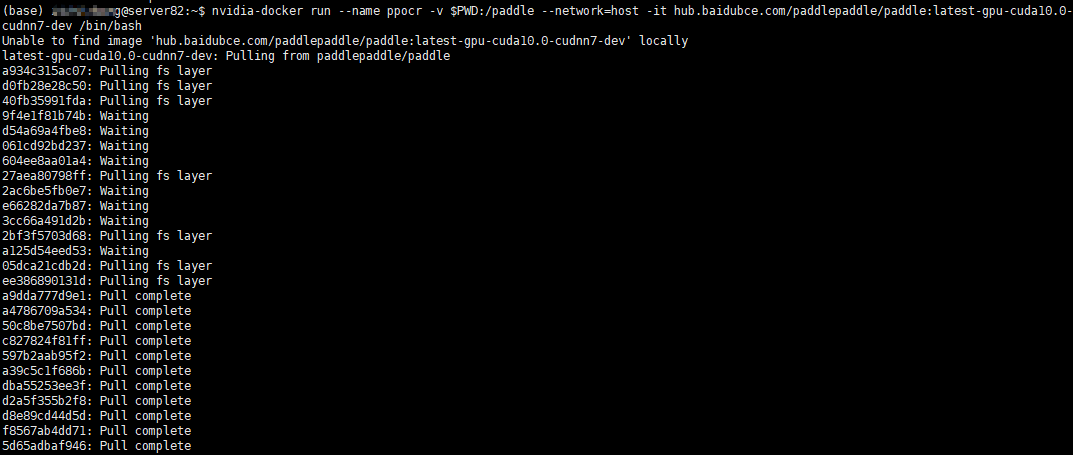

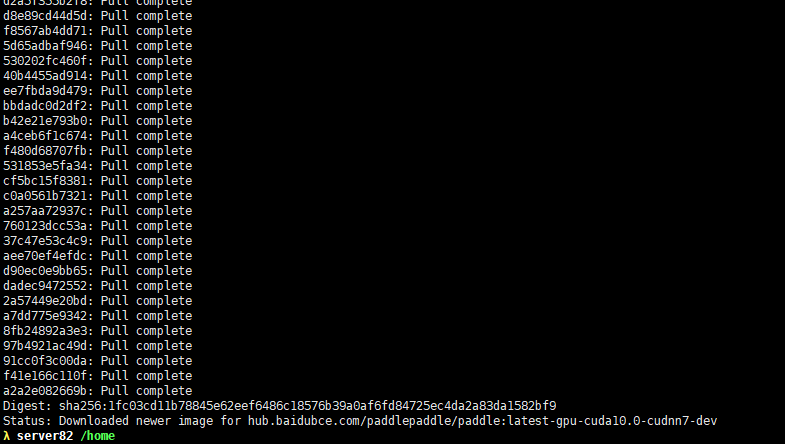
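The two checks above can also be scripted. The sketch below uses hypothetical helper names (`cudnn_version`, `driver_cuda_version`). It reads the `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` macros from a cuDNN header and pulls the "CUDA Version" field out of `nvidia-smi` output. There are two caveats. First, the CUDA version in the `nvidia-smi` banner is the newest CUDA release the installed driver supports, not necessarily the installed toolkit (`nvcc --version` reports that). This is also why a CUDA 10.0 container can run on a driver that reports 11.0. Second, from cuDNN 8 onward the version macros live in `cudnn_version.h` rather than `cudnn.h`, so the grep above finds nothing there.

```shell
# Hypothetical helpers (not part of the article or of any CUDA tool).

# Print "MAJOR.MINOR.PATCH" from a cuDNN header; exit non-zero if the macros are absent.
cudnn_version() {
  awk '
    $1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
    $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
    $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
    END {
      if (major == "") exit 1
      print major "." minor "." patch
    }
  ' "$1"
}

# Read nvidia-smi output on stdin and print its "CUDA Version" value,
# i.e. the newest CUDA release this driver supports (not the installed toolkit).
driver_cuda_version() {
  sed -n 's/.*CUDA Version: *\([0-9][0-9.]*\).*/\1/p' | head -n 1
}
```

Usage on the host: `cudnn_version /usr/local/cuda/include/cudnn.h` and `nvidia-smi | driver_cuda_version`. For cuDNN 8 or later, point `cudnn_version` at `cudnn_version.h` instead.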

- Create the container

Note: the screenshot below is from my first installation, when I did not switch to the project directory first. I reinstalled later using the commands below but did not take a new screenshot.

```shell
# Switch to the project directory
cd /home/lexiaoyuan/projects
# Create a Docker container named ppocr and map the current directory (our project directory, projects) to /paddle in the container.
# This way, we only need to upload the PaddleOCR project to the projects directory, and it is automatically mounted at /paddle in the container.
nvidia-docker run --name ppocr -v "$PWD":/paddle --network=host -it hub.baidubce.com/paddlepaddle/paddle:latest-gpu-cuda10.0-cudnn7-dev /bin/bash
```

- As shown in the screenshot, once the container is created you are automatically inside it. Press Ctrl+P followed by Ctrl+Q to detach from the container without stopping it. To re-enter the container, use the following command:

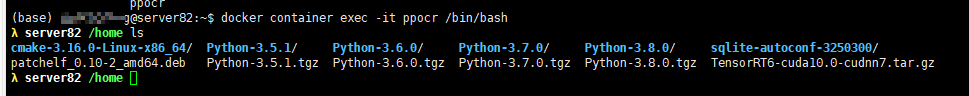

```shell
docker container exec -it ppocr /bin/bash
```

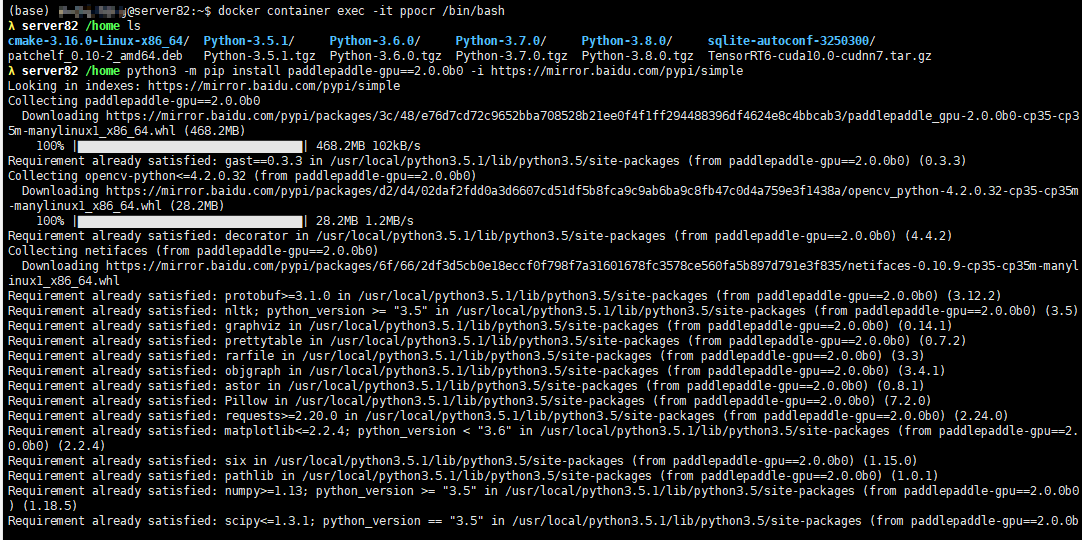

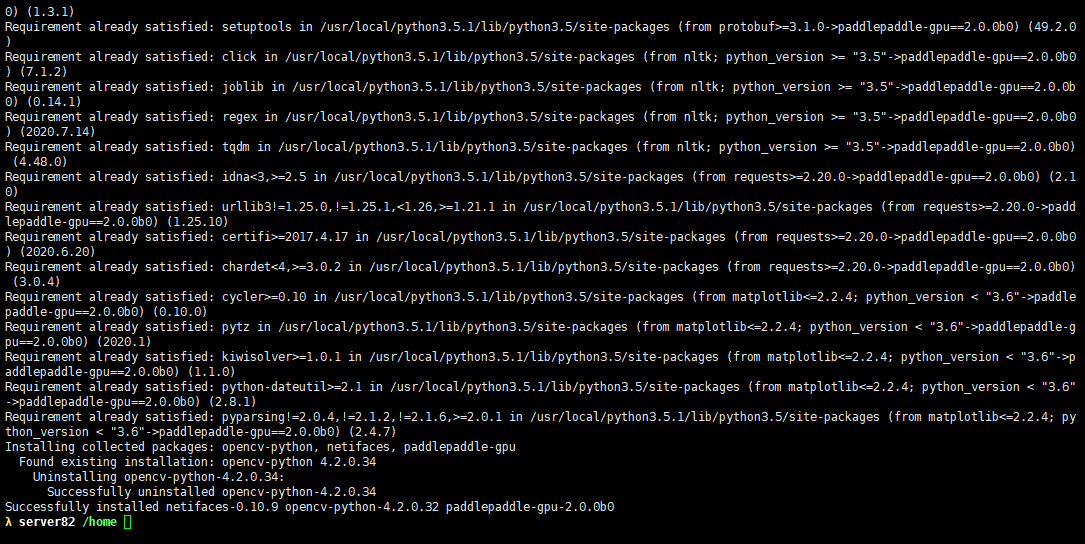
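Running `nvidia-docker run --name ppocr` a second time fails because the container name is already taken, so after the first run you only re-enter the container. The following is a minimal sketch with made-up helper names (`run`, `container_exists`, `enter_container`) and a `DRY_RUN` switch, all of which are assumptions rather than part of the article. If `ppocr` already exists, it starts the container and enters it; this also covers the case where a reboot has stopped it. Otherwise it creates the container with the article's command. It relies only on standard Docker CLI subcommands: `docker ps -a --format`, `docker start` and `docker container exec`.

```shell
# Hypothetical helper (not from the article): reuse the ppocr container if it
# exists, otherwise create it. Set DRY_RUN=1 to print commands instead of running them.
CONTAINER_IMAGE="${CONTAINER_IMAGE:-hub.baidubce.com/paddlepaddle/paddle:latest-gpu-cuda10.0-cudnn7-dev}"

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# True if a container with exactly this name exists (running or stopped).
container_exists() {
  docker ps -a --format '{{.Names}}' | grep -qxF "$1"
}

enter_container() {
  local name="${1:-ppocr}"
  if container_exists "$name"; then
    # docker start is harmless if the container is already running
    run docker start "$name" && run docker container exec -it "$name" /bin/bash
  else
    # Same command as in the article; mounts the current directory at /paddle
    run nvidia-docker run --name "$name" -v "$PWD:/paddle" --network=host -it "$CONTAINER_IMAGE" /bin/bash
  fi
}
```

Run it from /home/lexiaoyuan/projects so that `$PWD` is the directory that gets mounted. `DRY_RUN=1 enter_container ppocr` only prints what it would do.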

- After entering the container, install PaddlePaddle 2.0 (version 2.0.0b0):

```shell
# The GPU version is installed here; for other versions, refer to the official website
python3 -m pip install paddlepaddle-gpu==2.0.0b0 -i https://mirror.baidu.com/pypi/simple
```

The official documentation gives these commands (run only the install line that matches your machine):

```shell
python3 -m pip install --upgrade pip
# If your machine has CUDA 9 or CUDA 10 installed, install with:
python3 -m pip install paddlepaddle-gpu==2.0.0b0 -i https://mirror.baidu.com/pypi/simple
# If your machine is CPU-only, install with:
python3 -m pip install paddlepaddle==2.0.0b0 -i https://mirror.baidu.com/pypi/simple
```

For more version requirements, follow the instructions in the [installation document](https://www.paddlepaddle.org.cn/install/quick).
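You only need one of the GPU and CPU install commands. The sketch below shows one hypothetical way to choose between them; `detect_device` and `paddle_package` are invented names. It picks the wheel based on whether `nvidia-smi` runs inside the container. The version `2.0.0b0` and the Baidu mirror come from the article, but the detection rule is an assumption. A working `nvidia-smi` does not by itself prove that the CUDA version matches the wheel.

```shell
# Hypothetical helpers: choose the PaddlePaddle wheel for this machine.
PADDLE_VERSION="${PADDLE_VERSION:-2.0.0b0}"

# Print "gpu" if nvidia-smi exists and runs successfully, else "cpu".
detect_device() {
  if command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi >/dev/null 2>&1; then
    echo gpu
  else
    echo cpu
  fi
}

# Map "gpu" or "cpu" to a pip requirement string.
paddle_package() {
  case "$1" in
    gpu) echo "paddlepaddle-gpu==$PADDLE_VERSION" ;;
    cpu) echo "paddlepaddle==$PADDLE_VERSION" ;;
    *) echo "expected gpu or cpu, got: $1" >&2; return 1 ;;
  esac
}
```

For example: `python3 -m pip install "$(paddle_package "$(detect_device)")" -i https://mirror.baidu.com/pypi/simple`. Follow it with `python3 -c 'import paddle; print(paddle.__version__)'` to confirm that the module imports.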

- Clone the PaddleOCR code locally with git:

```shell
# [Recommended]
git clone https://github.com/PaddlePaddle/PaddleOCR

# If the pull fails because of network problems, you can use the copy hosted on Gitee instead.
# Note: the Gitee copy may not sync GitHub updates in real time (there is a 3~5 day delay), so prefer the recommended method.
git clone https://gitee.com/paddlepaddle/PaddleOCR
```

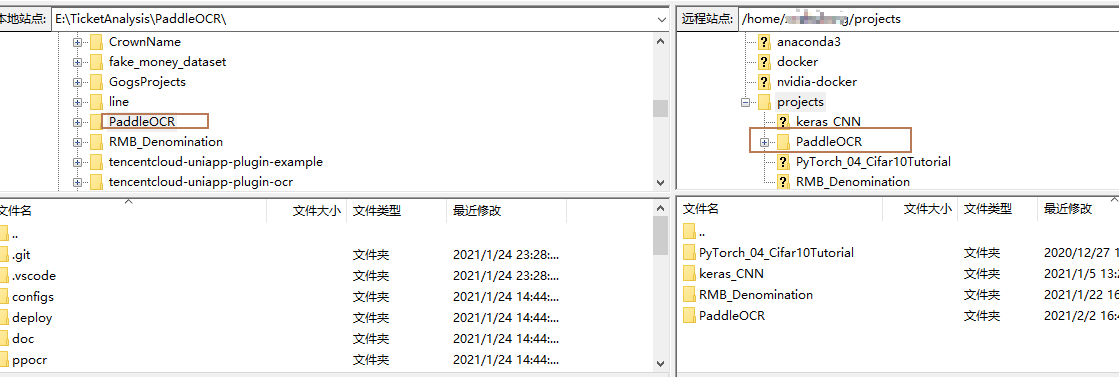
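The "GitHub first, Gitee if the network fails" advice can be written as an automatic fallback. Below is a minimal sketch in which `clone_paddleocr` is a hypothetical name. When `git clone` fails, it normally removes the directory it created, so the second attempt can reuse the same destination.

```shell
# Hypothetical helper: clone from GitHub, falling back to the Gitee copy.
clone_paddleocr() {
  local dest="${1:-PaddleOCR}"
  if git clone https://github.com/PaddlePaddle/PaddleOCR "$dest"; then
    return 0
  fi
  # The Gitee copy may lag GitHub by 3~5 days, so it is only the fallback.
  echo "GitHub clone failed; trying the Gitee copy" >&2
  git clone https://gitee.com/paddlepaddle/PaddleOCR "$dest"
}
```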

- Next, use FileZilla Client, Xftp, or similar software to upload the downloaded PaddleOCR project to the /home/lexiaoyuan/projects directory on the server. (Earlier, we mounted this directory to /paddle in the Docker container.)

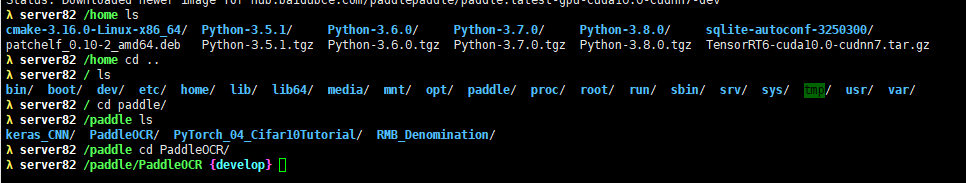

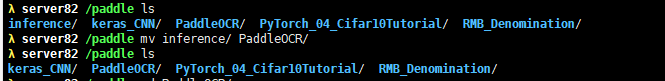

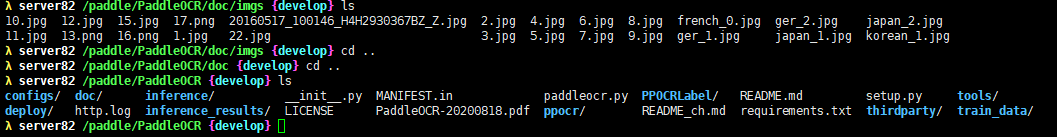

- Now look at the /paddle directory in the container:

```shell
cd /paddle
ls
```

Sure enough, the PaddleOCR project has been mounted.

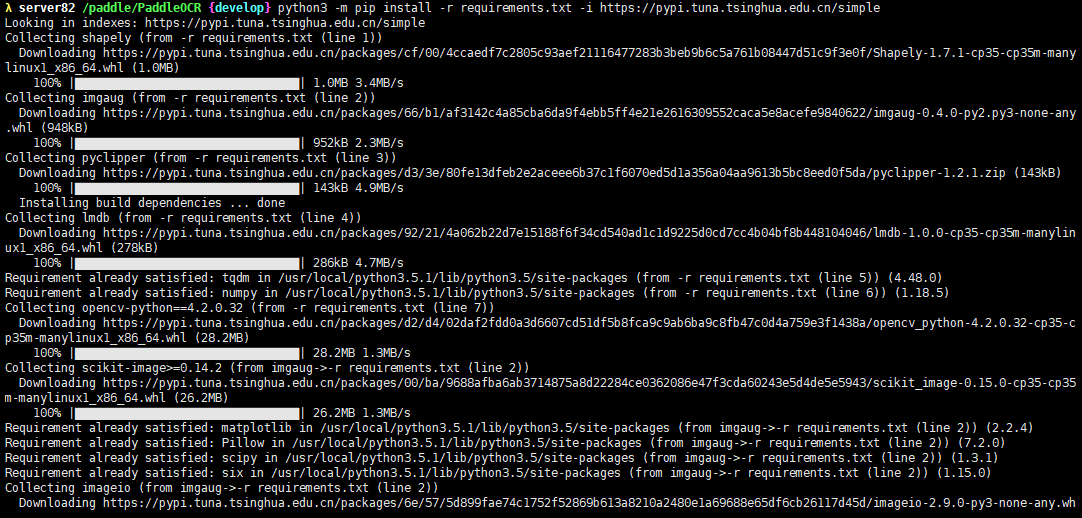

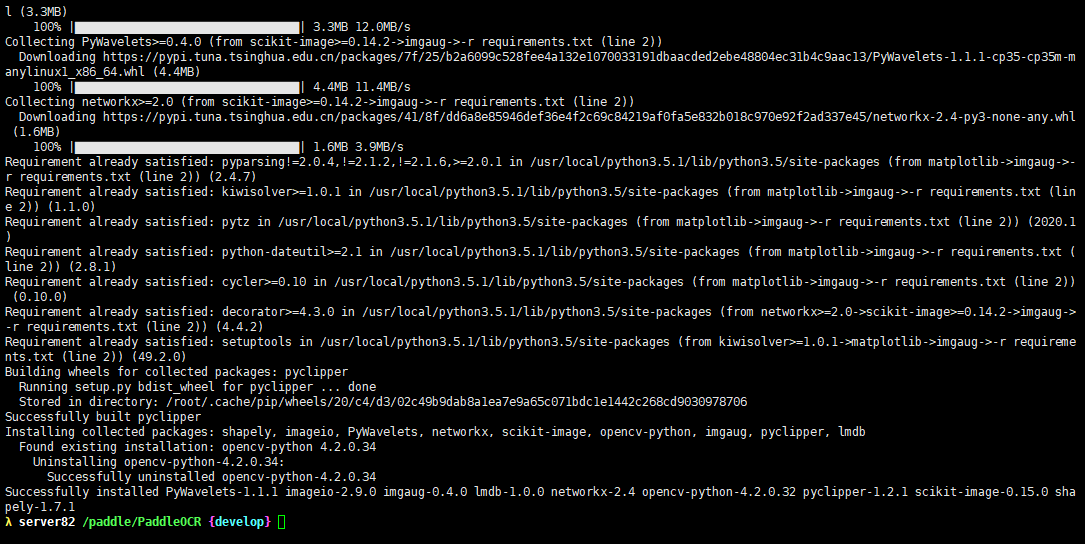
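The upload happens on the host, but the code runs in the container. A quick check from inside the container therefore catches a missing or wrong `-v` mount before anything else fails. Below is a hypothetical sketch (`check_mount` is an invented name). It looks under `/paddle/PaddleOCR` for two files that the later steps rely on: `requirements.txt` and `tools/infer/predict_system.py`.

```shell
# Hypothetical check, run inside the container: is the uploaded project
# visible under the mount point? The mount point defaults to the article's /paddle.
check_mount() {
  local root="${1:-/paddle}/PaddleOCR"
  local f
  for f in requirements.txt tools/infer/predict_system.py; do
    if [ ! -f "$root/$f" ]; then
      echo "missing $root/$f (was the container started with -v \$PWD:/paddle?)" >&2
      return 1
    fi
  done
  echo "ok: $root"
}
```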

- Enter the PaddleOCR project and install the third-party libraries:

```shell
cd PaddleOCR
python3 -m pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
```
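If the Tsinghua mirror is unreachable, the same install can be retried against the default PyPI index. Below is a hypothetical sketch that does this; `install_requirements` and the `PIP_MIRROR` variable are my own names. Afterwards it runs `pip check`, which reports installed packages with missing or incompatible dependencies.

```shell
# Hypothetical helper: install requirements via a mirror, fall back to the
# default PyPI index, then let pip report dependency conflicts.
install_requirements() {
  local req="${1:-requirements.txt}"
  local mirror="${PIP_MIRROR:-https://pypi.tuna.tsinghua.edu.cn/simple}"
  if [ ! -f "$req" ]; then
    echo "not found: $req (run this from the PaddleOCR root)" >&2
    return 1
  fi
  if ! python3 -m pip install -r "$req" -i "$mirror"; then
    echo "mirror install failed; retrying with the default index" >&2
    python3 -m pip install -r "$req" || return 1
  fi
  # Lists installed packages whose dependencies are missing or incompatible
  python3 -m pip check
}
```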

- OK, at this point the PaddleOCR environment is installed. Let's start running the project.

Quick start

Official documentation: https://gitee.com/paddlepaddle/PaddleOCR/blob/develop/doc/doc_ch/quickstart.md

- The first step, configuring the environment, is already done, so proceed to the second step.

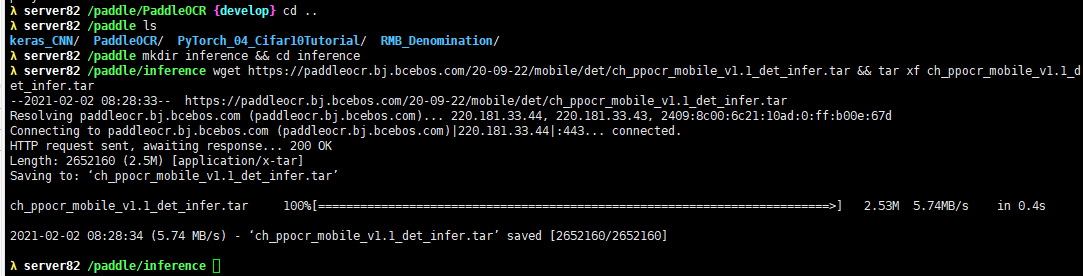

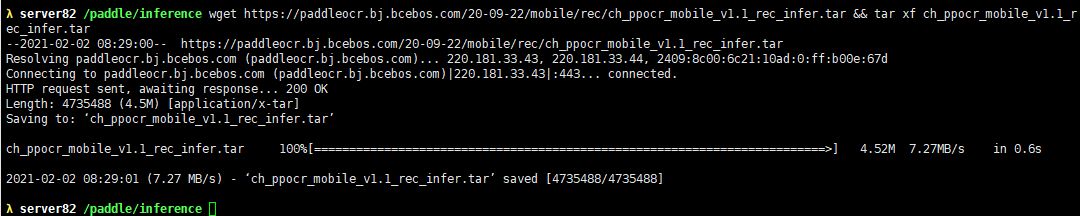

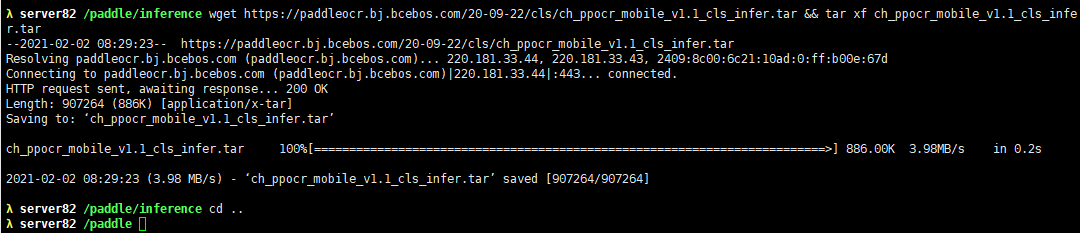

- Download the inference models, taking the ultra-lightweight model as an example:

```shell
mkdir inference && cd inference
# Download and extract the detection model of the ultra-lightweight Chinese OCR model
wget https://paddleocr.bj.bcebos.com/20-09-22/mobile/det/ch_ppocr_mobile_v1.1_det_infer.tar && tar xf ch_ppocr_mobile_v1.1_det_infer.tar
# Download and extract the recognition model of the ultra-lightweight Chinese OCR model
wget https://paddleocr.bj.bcebos.com/20-09-22/mobile/rec/ch_ppocr_mobile_v1.1_rec_infer.tar && tar xf ch_ppocr_mobile_v1.1_rec_infer.tar
# Download and extract the text direction classifier model of the ultra-lightweight Chinese OCR model
wget https://paddleocr.bj.bcebos.com/20-09-22/cls/ch_ppocr_mobile_v1.1_cls_infer.tar && tar xf ch_ppocr_mobile_v1.1_cls_infer.tar
cd ..
```

- The inference directory above should have been created inside the PaddleOCR directory. That's fine; just move it there:

```shell
mv inference/ PaddleOCR/
```

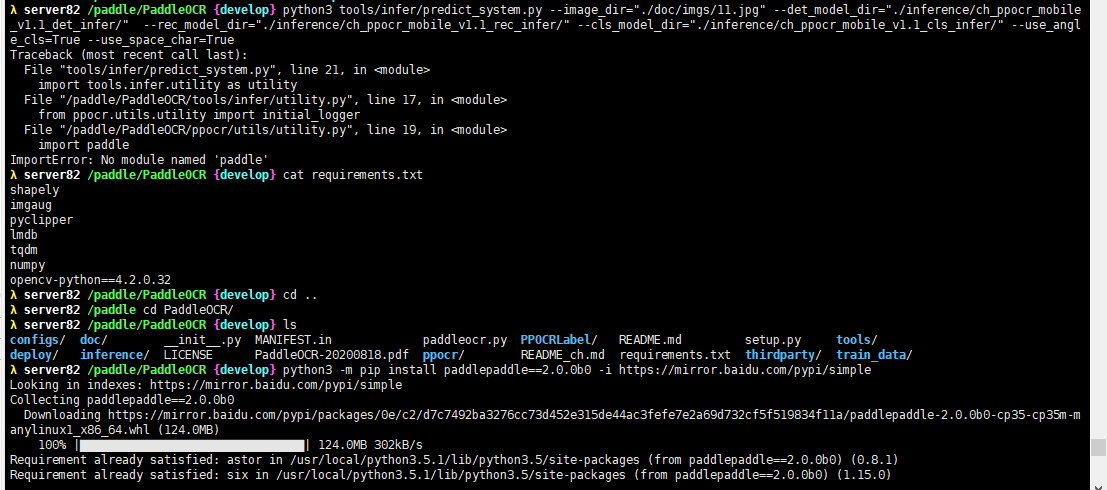
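The download and the `mv` can be folded into one step that always writes to `<PaddleOCR root>/inference`. That is where the `./inference/...` paths in the test commands resolve when they are run from the project root. Below is a hypothetical sketch; `model_url` and `download_models` are invented names. The URLs are copied from the article and point at the 2020 v1.1 models, so they may no longer be served. Unlike the other two, the classifier URL has no `mobile/` path segment. Models that are already extracted are skipped.

```shell
# Hypothetical helpers: download the v1.1 mobile inference models into <root>/inference.
PPOCR_BASE_URL="${PPOCR_BASE_URL:-https://paddleocr.bj.bcebos.com/20-09-22}"

# Print the download URL for "det", "rec" or "cls" (URLs as given in the article).
model_url() {
  case "$1" in
    det) echo "$PPOCR_BASE_URL/mobile/det/ch_ppocr_mobile_v1.1_det_infer.tar" ;;
    rec) echo "$PPOCR_BASE_URL/mobile/rec/ch_ppocr_mobile_v1.1_rec_infer.tar" ;;
    cls) echo "$PPOCR_BASE_URL/cls/ch_ppocr_mobile_v1.1_cls_infer.tar" ;;  # no "mobile/" segment
    *) echo "unknown model: $1" >&2; return 1 ;;
  esac
}

download_models() {
  local root="${1:-.}"            # PaddleOCR project root
  local dest="$root/inference"
  local kind url tarball
  mkdir -p "$dest" || return 1
  for kind in det rec cls; do
    url="$(model_url "$kind")" || return 1
    tarball="$(basename "$url")"
    # Each tarball extracts to a directory with the same name minus ".tar"
    if [ -d "$dest/${tarball%.tar}" ]; then
      echo "skip $kind: $dest/${tarball%.tar} already exists"
      continue
    fi
    (cd "$dest" && wget "$url" && tar xf "$tarball") || return 1
  done
  return 0
}
```

Inside the container, this would be run as `download_models /paddle/PaddleOCR`.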

- Enter the PaddleOCR directory and test the code

```shell
cd /paddle/PaddleOCR
python3 tools/infer/predict_system.py \
  --image_dir="./doc/imgs/11.jpg" \
  --det_model_dir="./inference/ch_ppocr_mobile_v1.1_det_infer/" \
  --rec_model_dir="./inference/ch_ppocr_mobile_v1.1_rec_infer/" \
  --cls_model_dir="./inference/ch_ppocr_mobile_v1.1_cls_infer/" \
  --use_angle_cls=True \
  --use_space_char=True
```

An error is reported: the paddle module cannot be found.

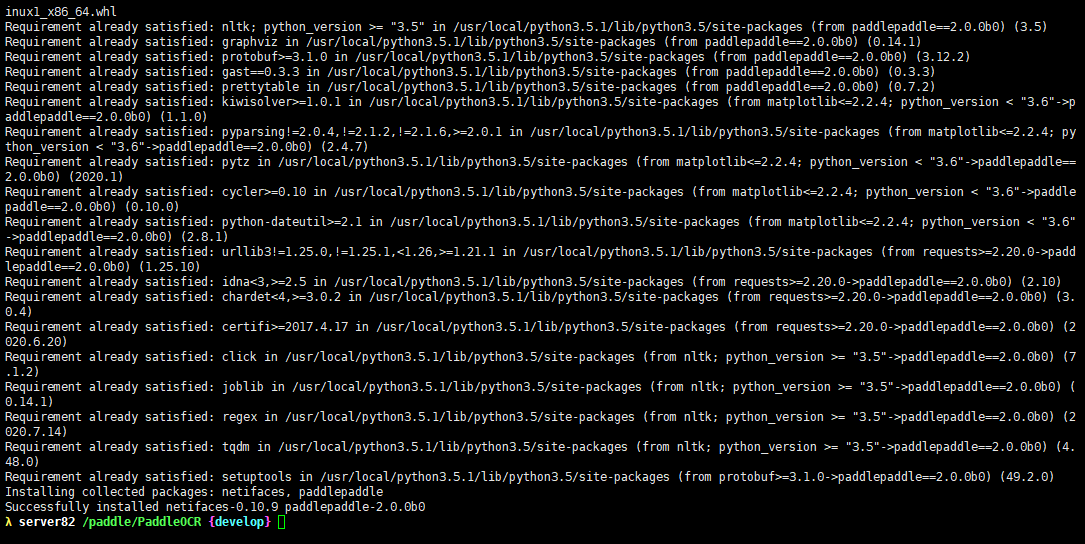
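The article does not identify why the GPU build could not be imported. Two common causes are worth ruling out, though these are assumptions, not the author's diagnosis. One is pip installing into a different interpreter from the `python3` that runs the script. The other is a failed or partial install. Below is a hypothetical diagnostic sketch; `paddle_dists` and `diagnose_paddle` are invented names. It prints the interpreter, the pip that belongs to it, and the PaddlePaddle distributions that are installed. `paddlepaddle` and `paddlepaddle-gpu` both provide the same `paddle` module, so the script also flags the case where both are installed.

```shell
# Hypothetical diagnostics for a missing "paddle" module.

# Read `python3 -m pip list` output on stdin; print the PaddlePaddle distributions found.
paddle_dists() {
  awk 'tolower($1) ~ /^paddlepaddle(-gpu)?$/ { print tolower($1) }'
}

diagnose_paddle() {
  local dists count
  # Which interpreter runs the scripts, and which interpreter this pip belongs to
  echo "python3: $(python3 -c 'import sys; print(sys.executable)')"
  echo "pip: $(python3 -m pip --version)"
  dists="$(python3 -m pip list 2>/dev/null | paddle_dists)"
  if [ -z "$dists" ]; then
    echo "no PaddlePaddle distribution is installed for this interpreter"
    return 1
  fi
  printf '%s\n' "$dists" | sed 's/^/installed: /'
  count="$(printf '%s\n' "$dists" | awk 'END { print NR }')"
  if [ "$count" -gt 1 ]; then
    # Both wheels install the same top-level "paddle" package, so they conflict
    echo "warning: CPU and GPU wheels are both installed; uninstall one of them"
  fi
  python3 -c 'import paddle; print("import ok:", paddle.__version__)'
}
```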

- Try installing the CPU version instead:

```shell
python3 -m pip install paddlepaddle==2.0.0b0 -i https://mirror.baidu.com/pypi/simple
```

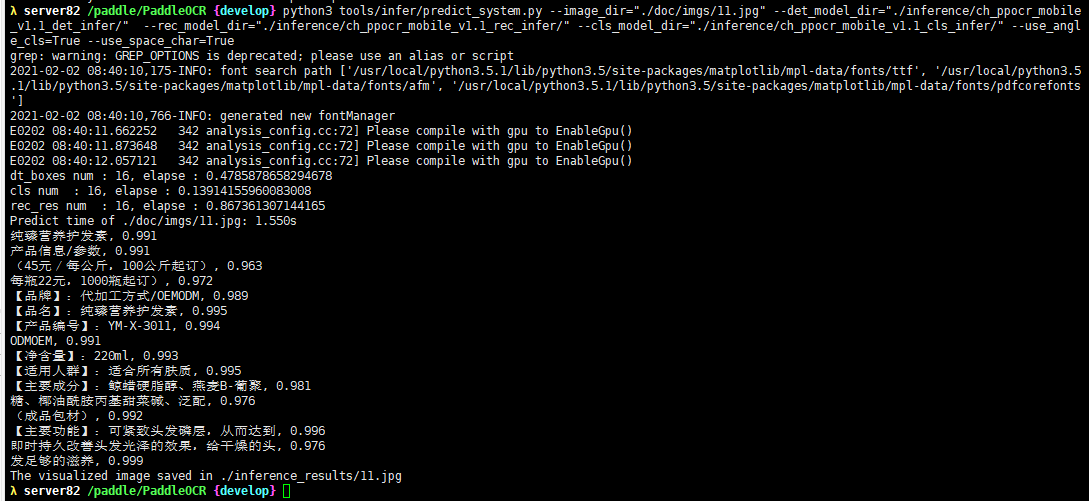

- Test it again

```shell
python3 tools/infer/predict_system.py \
  --image_dir="./doc/imgs/11.jpg" \
  --det_model_dir="./inference/ch_ppocr_mobile_v1.1_det_infer/" \
  --rec_model_dir="./inference/ch_ppocr_mobile_v1.1_rec_infer/" \
  --cls_model_dir="./inference/ch_ppocr_mobile_v1.1_cls_infer/" \
  --use_angle_cls=True \
  --use_space_char=True
```

- OK, it worked

- Let's try it with our own pictures. Use FileZilla Client, Xftp, or similar software to upload a local picture to the /home/lexiaoyuan/projects/PaddleOCR/doc/imgs directory. Anything uploaded there is automatically visible in the container through the mount.

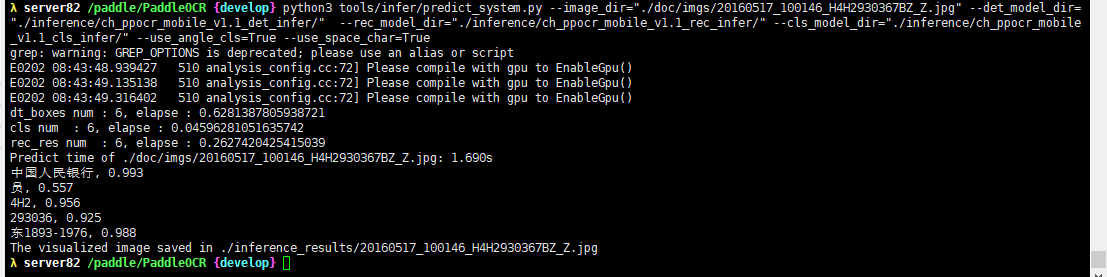

- Run the same command again, replacing the image name with your own:

```shell
python3 tools/infer/predict_system.py \
  --image_dir="./doc/imgs/20160517_100146_H4H2930367BZ_Z.jpg" \
  --det_model_dir="./inference/ch_ppocr_mobile_v1.1_det_infer/" \
  --rec_model_dir="./inference/ch_ppocr_mobile_v1.1_rec_infer/" \
  --cls_model_dir="./inference/ch_ppocr_mobile_v1.1_cls_infer/" \
  --use_angle_cls=True \
  --use_space_char=True
```

- OK, it worked, too.
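The same long command is reused with only `--image_dir` changing, so a small wrapper saves retyping. Below is a hypothetical sketch; `ocr_image` and `MODEL_ROOT` are invented names. It must be called from the PaddleOCR root, and it passes exactly the flags used in the commands above.

```shell
# Hypothetical wrapper: run the PaddleOCR v1.1 mobile pipeline on one image.
# Call it from the PaddleOCR root; MODEL_ROOT defaults to ./inference.
ocr_image() {
  local image="$1"
  local models="${MODEL_ROOT:-./inference}"
  if [ ! -f "$image" ]; then
    echo "image not found: $image" >&2
    return 1
  fi
  python3 tools/infer/predict_system.py \
    --image_dir="$image" \
    --det_model_dir="$models/ch_ppocr_mobile_v1.1_det_infer/" \
    --rec_model_dir="$models/ch_ppocr_mobile_v1.1_rec_infer/" \
    --cls_model_dir="$models/ch_ppocr_mobile_v1.1_cls_infer/" \
    --use_angle_cls=True \
    --use_space_char=True
}
```

For example, `ocr_image ./doc/imgs/11.jpg` processes one image, and `for f in ./doc/imgs/*.jpg; do ocr_image "$f"; done` processes every JPEG in the folder.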
