Preface

In this section, we explore the core source code of Netty.

This Netty series is divided into seven parts:

- 01 Java IO evolution path

- 02 The past and present of Netty and NIO

- 03 A first Netty experience: rebuilding an RPC framework

- 04 The core of Netty: high performance

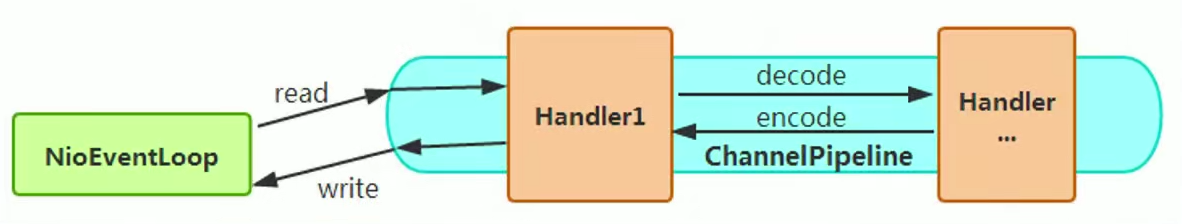

- 05 The core of Netty: its main arteries, Pipeline and EventLoop

- 06 Netty in practice: hand-writing a message push system

- 07 Netty in practice: performance tuning and design patterns

This section focuses on:

- Gaining a deep understanding of Netty's operating mechanism

- Mastering the core principles of NioEventLoop, Pipeline and ByteBuf

- Mastering the common tuning schemes for Netty

Operation mechanism of Netty

The three culprits behind the poor performance of traditional RPC calls

- Blocking IO does not scale elastically, and high concurrency can bring the service down.

- Java serialization has encoding performance problems.

- The traditional IO thread model consumes too many CPU resources.

Accordingly, the three themes that determine high performance are:

- IO model

- Data protocol

- Thread model

So where does Netty's high performance show? Eight advantages are summarized below.

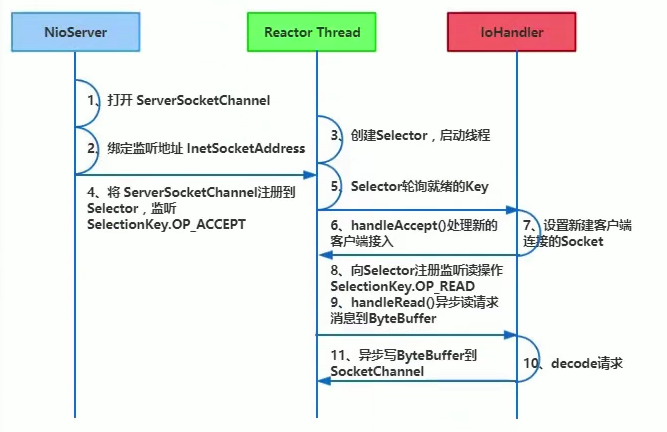

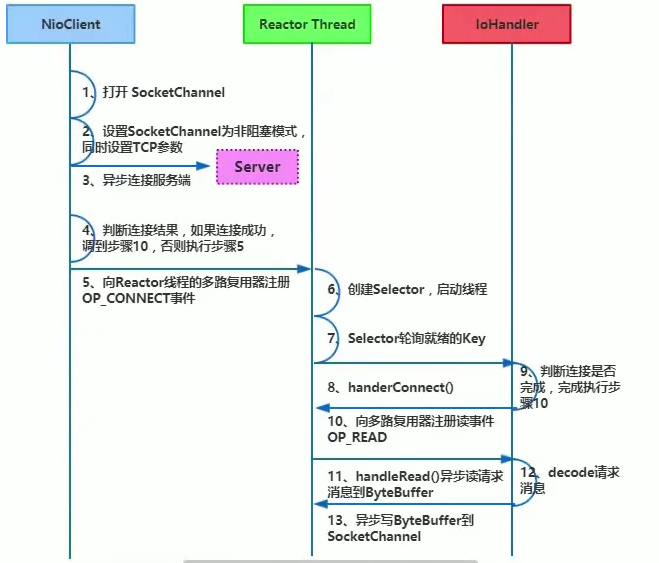

Asynchronous non-blocking communication

NioEventLoop (io.netty.channel.nio.NioEventLoop) aggregates the multiplexer Selector and can handle hundreds of client channels concurrently. Because read and write operations are non-blocking, IO threads run far more efficiently and are not suspended by frequent IO blocking.

- Server level

- Client level

Zero-copy

- ByteBuffers used for receiving and sending are allocated in off-heap (direct) memory, so sockets are read and written directly.

- A composite Buffer object is provided, which can aggregate multiple ByteBuffer objects.

- transferTo() sends the data in the file buffer directly to the target Channel.
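The transferTo() point can be tried with the plain JDK. The following is a minimal sketch, not Netty's own code: the class name `TransferToSketch` and the helper `roundTrip` are made up for illustration, and the target here is a second file channel rather than a socket.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class TransferToSketch {
    /** Copies src to dst with FileChannel.transferTo and returns the number of bytes moved. */
    static long copy(Path src, Path dst) {
        try (FileChannel in = FileChannel.open(src, StandardOpenOption.READ);
             FileChannel out = FileChannel.open(dst, StandardOpenOption.CREATE,
                     StandardOpenOption.WRITE, StandardOpenOption.TRUNCATE_EXISTING)) {
            long position = 0;
            long size = in.size();
            // transferTo may move fewer bytes than requested, so loop until done.
            while (position < size) {
                position += in.transferTo(position, size - position, out);
            }
            return position;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Hypothetical demo helper: writes text to a temp file, copies it, reads the copy back. */
    static String roundTrip(String text) {
        try {
            Path src = Files.createTempFile("transfer-src", ".txt");
            Path dst = Files.createTempFile("transfer-dst", ".txt");
            try {
                Files.write(src, text.getBytes(StandardCharsets.UTF_8));
                copy(src, dst);
                return new String(Files.readAllBytes(dst), StandardCharsets.UTF_8);
            } finally {
                Files.deleteIfExists(src);
                Files.deleteIfExists(dst);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The application never pulls the file content into its own byte array; the channel is asked to move the bytes itself, which is what lets the operating system avoid extra copies where it can.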

Memory pool

- Pooled and Unpooled.

- Unsafe and non-Unsafe (low-level reads/writes versus application-level reads/writes).

- Heap and Direct (heap memory and off heap memory).
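The Heap versus Direct distinction already exists in the JDK's own ByteBuffer, which makes it easy to observe. This is only a sketch with JDK classes (the class name `HeapVsDirectSketch` is invented here); Netty's ByteBuf adds pooling and Unsafe access on top of the same idea.

```java
import java.nio.ByteBuffer;

class HeapVsDirectSketch {
    /** Describes where a buffer lives and whether it exposes a backing byte[] on the heap. */
    static String describe(ByteBuffer buf) {
        return (buf.isDirect() ? "direct" : "heap") + ", backing array: " + buf.hasArray();
    }

    public static void main(String[] args) {
        // Heap buffer: backed by a byte[] managed by the garbage collector.
        System.out.println(describe(ByteBuffer.allocate(16)));
        // Direct buffer: memory outside the Java heap, suited to socket reads and writes.
        System.out.println(describe(ByteBuffer.allocateDirect(16)));
    }
}
```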

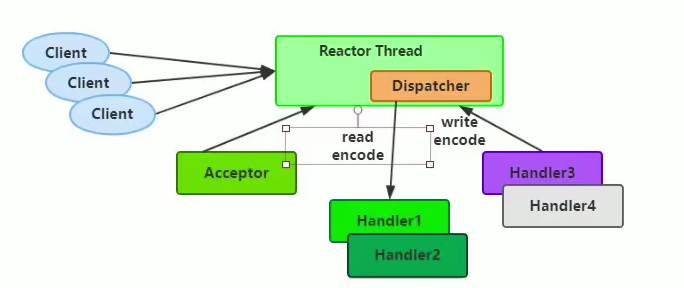

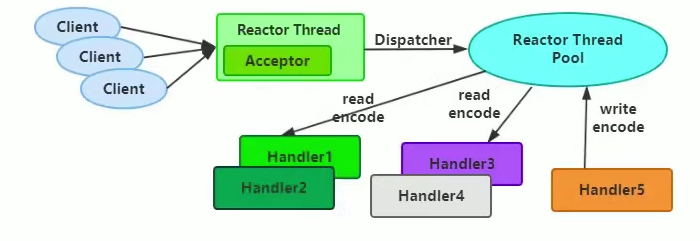

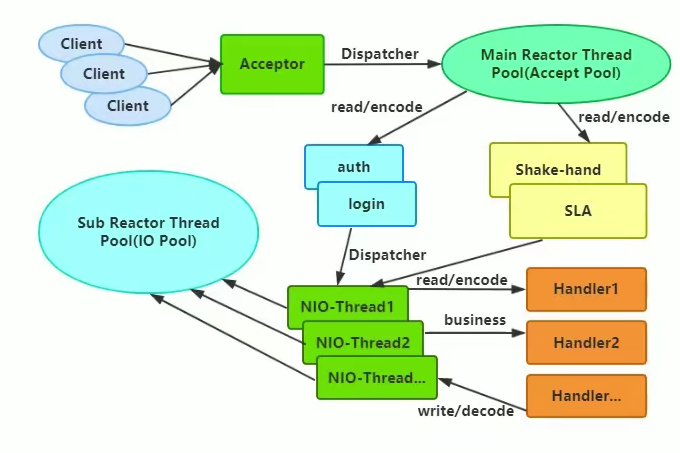

Efficient Reactor thread model

- Reactor single-threaded model

- Reactor multithreaded model

- Master-slave Reactor multithreaded model

Lock free serial design concept

Efficient concurrent programming

Netty's efficient concurrent programming is mainly reflected in the following points:

- Extensive and correct use of volatile.

- CAS and atomic classes are widely used.

- Use of thread safe containers.

- Improve concurrency performance through read-write locks.
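To make the volatile and CAS points concrete, here is a small sketch of a lock-free reference counter built from a volatile field and an AtomicIntegerFieldUpdater. The class `RefCountedSketch` and its methods are illustrative assumptions, loosely modeled on the reference-counting idea and not copied from Netty.

```java
import java.util.concurrent.atomic.AtomicIntegerFieldUpdater;

class RefCountedSketch {
    // The updater performs CAS on the volatile field without allocating an AtomicInteger per object.
    private static final AtomicIntegerFieldUpdater<RefCountedSketch> REF_CNT =
            AtomicIntegerFieldUpdater.newUpdater(RefCountedSketch.class, "refCnt");

    // volatile makes the latest value visible to every thread.
    private volatile int refCnt = 1;

    int refCnt() {
        return refCnt;
    }

    /** Increments the count with a CAS retry loop instead of a lock. */
    RefCountedSketch retain() {
        for (;;) {
            int current = refCnt;
            if (current == 0) throw new IllegalStateException("already released");
            if (REF_CNT.compareAndSet(this, current, current + 1)) return this;
        }
    }

    /** Decrements the count; returns true when this call dropped it to zero. */
    boolean release() {
        for (;;) {
            int current = refCnt;
            if (current == 0) throw new IllegalStateException("already released");
            if (REF_CNT.compareAndSet(this, current, current - 1)) return current == 1;
        }
    }

    /** Hypothetical demo helper: retains from several threads and returns the final count. */
    static int retainConcurrently(int threads, int perThread) {
        RefCountedSketch obj = new RefCountedSketch();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) obj.retain();
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return obj.refCnt();
    }
}
```

No increment is lost even though no lock is taken: a thread whose CAS fails simply re-reads the field and tries again.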

High performance serialization framework

The key factors affecting serialization performance are summarized as follows:

- The size of the serialized byte stream (network bandwidth consumption).

- Serialization and deserialization performance (CPU resource consumption).

- Whether cross-language use is supported (integration with heterogeneous systems and switching of development languages).
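The first factor, stream size, is easy to measure. The sketch below compares JDK serialization with a hand-rolled binary layout for the same object. The `UserInfo` type and the layout (4-byte id, 4-byte length, UTF-8 name) are assumptions made for this comparison and do not come from any particular framework.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class SerializationSizeSketch {
    /** Hypothetical message type used only for this comparison. */
    static class UserInfo implements Serializable {
        private static final long serialVersionUID = 1L;
        final int id;
        final String name;

        UserInfo(int id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    /** Size in bytes when written with JDK serialization (includes class metadata). */
    static int javaSerializedSize(UserInfo user) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(user);
            }
            return bytes.size();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Size in bytes with a fixed layout: int id, int name length, UTF-8 name bytes. */
    static int handEncodedSize(UserInfo user) {
        byte[] name = user.name.getBytes(StandardCharsets.UTF_8);
        ByteBuffer buf = ByteBuffer.allocate(4 + 4 + name.length);
        buf.putInt(user.id).putInt(name.length).put(name);
        return buf.position();
    }

    public static void main(String[] args) {
        UserInfo user = new UserInfo(100, "netty");
        System.out.println("JDK serialization: " + javaSerializedSize(user) + " bytes");
        System.out.println("Hand-encoded:      " + handEncodedSize(user) + " bytes");
    }
}
```

The gap comes from the class descriptor that JDK serialization writes alongside the field values, which is one reason compact binary protocols are preferred for RPC.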

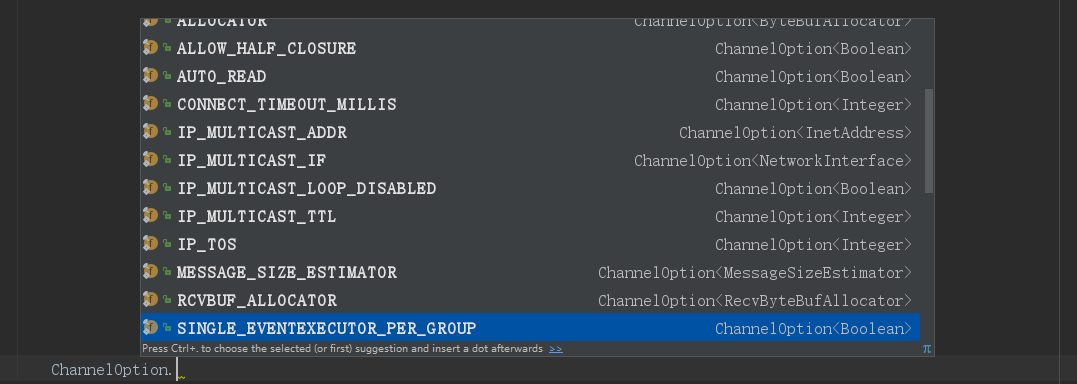

Flexible TCP parameter configuration capability

A first look at the NIO source code

When it comes to the source code, we must start with the open method of the selector, java.nio.channels.Selector:

public static Selector open() throws IOException {

return SelectorProvider.provider().openSelector();

}

Let's look at what SelectorProvider.provider() does:

public static SelectorProvider provider() {

synchronized (lock) {

if (provider != null)

return provider;

return AccessController.doPrivileged(

new PrivilegedAction<SelectorProvider>() {

public SelectorProvider run() {

if (loadProviderFromProperty())

return provider;

if (loadProviderAsService())

return provider;

provider = sun.nio.ch.DefaultSelectorProvider.create();

return provider;

}

});

}

}

Here, provider = sun.nio.ch.DefaultSelectorProvider.create(); returns a different implementation class depending on the operating system; on Windows it returns WindowsSelectorProvider. The check if (provider != null) return provider; ensures that there is only one WindowsSelectorProvider object in the whole server program.
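The singleton behavior described above can be checked from application code. This is a small sketch using only public JDK API; the class name `ProviderSketch` is invented for the example, and the implementation class it prints depends on the platform and JDK version.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Selector;
import java.nio.channels.spi.SelectorProvider;

class ProviderSketch {
    /** True if repeated lookups and a freshly opened selector all share one provider instance. */
    static boolean sameProviderEverywhere() {
        SelectorProvider first = SelectorProvider.provider();
        SelectorProvider second = SelectorProvider.provider();
        try (Selector selector = Selector.open()) {
            return first == second && selector.provider() == first;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // The concrete class varies by operating system and JDK version.
        System.out.println(SelectorProvider.provider().getClass().getName());
        System.out.println(sameProviderEverywhere());
    }
}
```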

Now take a look at WindowsSelectorProvider.openSelector():

public AbstractSelector openSelector() throws IOException {

return new WindowsSelectorImpl(this);

}

new WindowsSelectorImpl(SelectorProvider) code:

WindowsSelectorImpl(SelectorProvider sp) throws IOException {

super(sp);

pollWrapper = new PollArrayWrapper(INIT_CAP);

wakeupPipe = Pipe.open();

wakeupSourceFd = ((SelChImpl)wakeupPipe.source()).getFDVal();

SinkChannelImpl sink = (SinkChannelImpl)wakeupPipe.sink();

(sink.sc).socket().setTcpNoDelay(true);

wakeupSinkFd = ((SelChImpl)sink).getFDVal();

pollWrapper.addWakeupSocket(wakeupSourceFd, 0);

}

Here Pipe.open() is the key. The call chain of this method is:

public static Pipe open() throws IOException {

return SelectorProvider.provider().openPipe();

}

In SelectorProvider:

public Pipe openPipe() throws IOException {

return new PipeImpl(this);

}

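Let's look at the PipeImpl constructor. Note that the version shown below, together with the native makePipe code, is the Unix implementation; the Windows PipeImpl instead builds the pipe from a pair of connected loopback sockets, which is why the WindowsSelectorImpl constructor above can call setTcpNoDelay(true) on the sink: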

PipeImpl(SelectorProvider sp) {

long pipeFds = IOUtil.makePipe(true);

int readFd = (int) (pipeFds >>> 32);

int writeFd = (int) pipeFds;

FileDescriptor sourcefd = new FileDescriptor();

IOUtil.setfdVal(sourcefd, readFd);

source = new SourceChannelImpl(sp, sourcefd);

FileDescriptor sinkfd = new FileDescriptor();

IOUtil.setfdVal(sinkfd, writeFd);

sink = new SinkChannelImpl(sp, sinkfd);

}

Here IOUtil.makePipe(true) is a native method:

static native long makePipe(boolean blocking);

Specific implementation:

JNIEXPORT jlong JNICALL

Java_sun_nio_ch_IOUtil_makePipe(JNIEnv *env, jobject this, jboolean blocking)

{

int fd[2];

if (pipe(fd) < 0) {

JNU_ThrowIOExceptionWithLastError(env, "Pipe failed");

return 0;

}

if (blocking == JNI_FALSE) {

if ((configureBlocking(fd[0], JNI_FALSE) < 0)

|| (configureBlocking(fd[1], JNI_FALSE) < 0)) {

JNU_ThrowIOExceptionWithLastError(env, "Configure blocking failed");

close(fd[0]);

close(fd[1]);

return 0;

}

}

return ((jlong) fd[0] << 32) | (jlong) fd[1];

}

static int

configureBlocking(int fd, jboolean blocking)

{

int flags = fcntl(fd, F_GETFL);

int newflags = blocking ? (flags & ~O_NONBLOCK) : (flags | O_NONBLOCK);

return (flags == newflags) ? 0 : fcntl(fd, F_SETFL, newflags);

}

As described in this comment:

/**

 * Returns two file descriptors for a pipe encoded in a long.

 * The read end of the pipe is returned in the high 32 bits,

 * while the write end is returned in the low 32 bits.

 */

The high 32 bits hold the file descriptor (FD) of the read end of the pipe, and the low 32 bits hold the file descriptor of the write end. That is why the return value of makePipe() must be shifted to extract the two descriptors.
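The packing and unpacking arithmetic can be reproduced in a few lines of Java. This is an illustrative sketch (the class and method names are made up); the unpacking expressions mirror the ones in the PipeImpl constructor shown earlier.

```java
class FdPackingSketch {
    /** Packs the read end into the high 32 bits and the write end into the low 32 bits. */
    static long pack(int readFd, int writeFd) {
        // Mask the low half so a negative int cannot sign-extend into the high 32 bits.
        return ((long) readFd << 32) | (writeFd & 0xFFFFFFFFL);
    }

    /** Same expression as in PipeImpl: unsigned shift down, then narrow to int. */
    static int readEnd(long packed) {
        return (int) (packed >>> 32);
    }

    /** Narrowing to int keeps only the low 32 bits. */
    static int writeEnd(long packed) {
        return (int) packed;
    }

    public static void main(String[] args) {
        long packed = pack(5, 7);
        System.out.println(Long.toHexString(packed)); // 500000007
        System.out.println(readEnd(packed) + " " + writeEnd(packed)); // 5 7
    }
}
```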

pollWrapper.addWakeupSocket(wakeupSourceFd, 0);

This line of code puts the FD of the read end (the source) of the returned pipe into pollWrapper. As we will see later, this is how the selector's wakeup() is implemented.

Implementation of ServerSocketChannel.open():

public static ServerSocketChannel open() throws IOException {

return SelectorProvider.provider().openServerSocketChannel();

}

SelectorProvider:

public ServerSocketChannel openServerSocketChannel() throws IOException {

return new ServerSocketChannelImpl(this);

}

It can be seen that the created ServerSocketChannelImpl also holds a reference to the WindowsSelectorProvider, which is passed in as sp.

public ServerSocketChannelImpl(SelectorProvider sp) throws IOException {

super(sp);

this.fd = Net.serverSocket(true);

this.fdVal = IOUtil.fdVal(fd);

this.state = ST_INUSE;

}

Then serverChannel1.register(selector, SelectionKey.OP_ACCEPT); binds the selector and the channel together, that is, it registers the FD created by new ServerSocketChannelImpl with the selector.
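From the application side, the open and register steps look roughly like the sketch below. The class name `RegisterSketch` is invented, and binding to a free loopback port is an assumption made so the example can run anywhere.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;

class RegisterSketch {
    /** Opens a selector and a server channel, registers for OP_ACCEPT, and checks the binding. */
    static boolean registerForAccept() {
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            // Port 0 asks the operating system for any free port.
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            // register() throws IllegalBlockingModeException for a blocking channel.
            server.configureBlocking(false);
            SelectionKey key = server.register(selector, SelectionKey.OP_ACCEPT);
            // The key is the object that ties this channel to this selector.
            return key.channel() == server
                    && key.selector() == selector
                    && key.interestOps() == SelectionKey.OP_ACCEPT
                    && selector.keys().contains(key);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```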

At this point, the server has been started and the following objects have been created:

- WindowsSelectorProvider: a singleton

- WindowsSelectorImpl, which contains:

  - pollWrapper: saves the FDs registered on the selector, including the FD of the read end of the pipe and the FD used by the ServerSocketChannel

  - wakeupPipe: the pipe (actually two FDs, one for reading and one for writing)

Next, run() in the server calls selector.select(), which mainly ends up in this method of WindowsSelectorImpl:

protected int doSelect(long timeout) throws IOException {

if (channelArray == null)

throw new ClosedSelectorException();

this.timeout = timeout; // set selector timeout

processDeregisterQueue();

if (interruptTriggered) {

resetWakeupSocket();

return 0;

}

//Calculate number of helper threads needed for poll. If necessary

//threads are created here and start waiting on startLock

adjustThreadsCount();

finishLock.reset(); // reset finishLock

//Wakeup helper threads, waiting on startLock, so they start polling.

//Redundant threads will exit here after wakeup.

startLock.startThreads();

//do polling in the main thread. Main thread is responsible for

//first MAX_SELECTABLE_FDS entries in pollArray.

try {

begin();

try {

subSelector.poll();

} catch (IOException e) {

finishLock.setException(e); // Save this exception

}

//Main thread is out of poll(). Wakeup others and wait for them

if (threads.size() > 0)

finishLock.waitForHelperThreads();

}

finally {

end();

}

//Done with poll(). Set wakeupSocket to nonsignaled for the next run.

finishLock.checkForException();

processDeregisterQueue();

int updated = updateSelectedKeys();

//Done with poll(). Set wakeupSocket to nonsignaled for the next run.

resetWakeupSocket();

return updated;

}

Here subSelector.poll() is the core: it polls the FDs saved in pollWrapper. It is implemented by calling the native method poll0:

private int poll() throws IOException{ // poll for the main thread

return poll0(pollWrapper.pollArrayAddress,

Math.min(totalChannels, MAX_SELECTABLE_FDS),

readFds, writeFds, exceptFds, timeout);

}

private native int poll0(long pollAddress, int numfds,

int[] readFds, int[] writeFds, int[] exceptFds, long timeout);

//These arrays will hold result of native select().

//The first element of each array is the number of selected sockets.

//Other elements are file descriptors of selected sockets.

private final int[] readFds = new int [MAX_SELECTABLE_FDS + 1]; // FDs ready for reading

private final int[] writeFds = new int [MAX_SELECTABLE_FDS + 1]; // FDs ready for writing

private final int[] exceptFds = new int [MAX_SELECTABLE_FDS + 1]; // FDs with an exceptional condition

poll0() monitors the FDs in pollWrapper for incoming and outgoing data and blocks until a read or write event occurs. For example, since the FD of the ServerSocketChannel is saved in pollWrapper, poll0() returns as soon as a client socket sends data to the server socket. Likewise, since the FD of the read end of the pipe is also saved in pollWrapper, poll0() returns as soon as data is written to the write end of the pipe.

If neither of these happens, poll0() stays blocked, which means selector.select() stays blocked. If either of them happens, selector.select() returns. The while (true) { loop in run() of OperationServer ensures that the selector goes back to polling after the received data has been processed.

Now let's take a look at WindowsSelectorImpl.wakeup():

public Selector wakeup() {

synchronized (interruptLock) {

if (!interruptTriggered) {

setWakeupSocket();

interruptTriggered = true;

}

}

return this;

}

//Sets Windows wakeup socket to a signaled state.

private void setWakeupSocket() {

setWakeupSocket0(wakeupSinkFd);

}

private native void setWakeupSocket0(int wakeupSinkFd);

JNIEXPORT void JNICALL

Java_sun_nio_ch_WindowsSelectorImpl_setWakeupSocket0(JNIEnv *env, jclass this, jint scoutFd)

{

/* Write one byte into the pipe */

const char byte = 1;

send(scoutFd, &byte, 1, 0);

}

It can be seen that wakeup() wakes up poll() by calling send(scoutFd, &byte, 1, 0), which writes a single byte to the write end of the pipe. So whenever you need to wake up the selector, you can call selector.wakeup().
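The effect of wakeup() is easy to observe from Java. In the sketch below (class name and the 100 ms delay are arbitrary choices for the demo), select() has no registered channels and no timeout, so the only thing that can make it return is the wakeup() call from the second thread.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Selector;

class WakeupSketch {
    /** Blocks in select() until another thread calls wakeup(); returns the ready-key count. */
    static int selectUntilWoken() {
        try (Selector selector = Selector.open()) {
            Thread waker = new Thread(() -> {
                try {
                    Thread.sleep(100); // give the main thread time to enter select()
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                selector.wakeup(); // makes the blocked (or next) select() return
            });
            waker.start();
            int ready = selector.select(); // no channels, no timeout: only wakeup() ends this
            waker.join();
            return ready;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

select() returns 0 here because no channel became ready; the return was caused purely by the wakeup. If wakeup() happens to run before select() is entered, the next select() returns immediately, so the example cannot hang on that race.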

Reactor

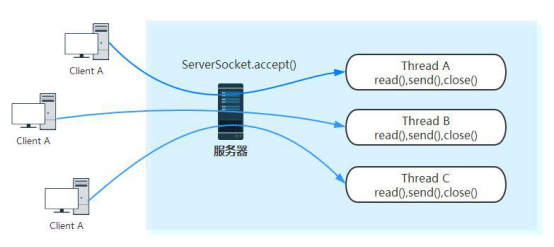

Now we have a certain understanding of blocking I/O. We know that with blocking I/O, a call to InputStream.read() blocks and does not return until data arrives (or the call times out). Similarly, a call to ServerSocket.accept() blocks until a client connects. After each client connects, the server starts a thread to handle that client's requests. The communication model of blocking I/O is as follows:

A careful analysis shows that blocking I/O has some disadvantages. Based on the blocking I/O communication model, I summarize two of them:

- When there are many clients, a large number of processing threads are created, and each thread takes up stack space and some CPU time.

- Blocking may lead to frequent context switching, and most of those context switches may be pointless.

This is where non-blocking I/O becomes useful.
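For comparison, the blocking thread-per-connection model described above can be sketched as a tiny echo server. Everything here (class name, echo protocol, loopback port chosen by the OS) is an assumption for illustration only.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

class BlockingEchoSketch {
    /** Starts an acceptor thread that spawns one handler thread per client. */
    static ServerSocket start() {
        try {
            ServerSocket server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
            Thread acceptor = new Thread(() -> {
                while (!server.isClosed()) {
                    try {
                        Socket client = server.accept(); // blocks until a client connects
                        Thread worker = new Thread(() -> handle(client)); // one thread per connection
                        worker.setDaemon(true);
                        worker.start();
                    } catch (IOException e) {
                        return; // server socket was closed
                    }
                }
            });
            acceptor.setDaemon(true);
            acceptor.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Echoes each line back; the thread sits blocked in readLine() between messages. */
    private static void handle(Socket client) {
        try (Socket c = client;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(c.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(c.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String line;
            while ((line = in.readLine()) != null) {
                out.println(line);
            }
        } catch (IOException ignored) {
            // connection dropped: let the handler thread end
        }
    }

    /** Hypothetical demo helper: starts a server, sends one line, returns the echoed line. */
    static String echoOnce(String message) {
        try (ServerSocket server = start();
             Socket socket = new Socket(server.getInetAddress(), server.getLocalPort());
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8), true)) {
            out.println(message);
            return in.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Each connected client pins one thread that spends most of its life blocked in readLine(), which is exactly the cost the two bullet points describe.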

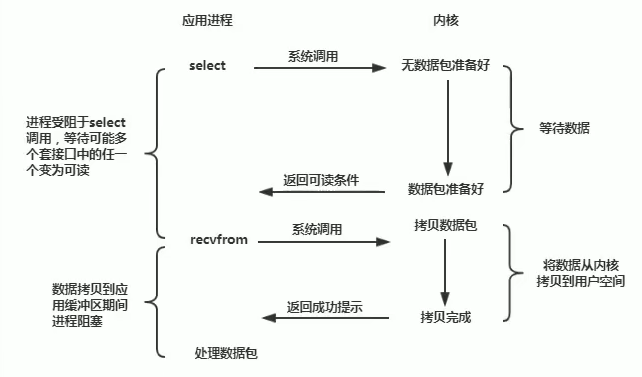

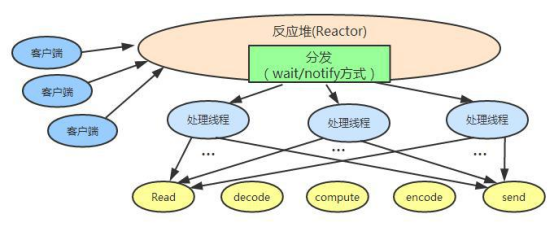

Java NIO was introduced in JDK 1.4. The name can be read as either "new I/O" or non-blocking I/O. Here is how Java NIO works:

- A dedicated thread handles all IO events and is responsible for dispatching them.

- Event-driven mechanism: events are triggered when they arrive, rather than being monitored synchronously.

- Thread communication: threads communicate through wait, notify and similar mechanisms. This ensures that each context switch is meaningful and reduces unnecessary thread switching.

The working principle diagram of the Java NIO reactor, as I understand it, is posted below:

(Note: the processing flow of each thread is roughly: read the data, decode, compute and process, encode, and send the response.)
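The "one dedicated thread dispatches all IO events" idea can be sketched with the JDK alone. The following single-threaded reactor accepts connections and echoes bytes back. It is a simplified illustration under several assumptions (invented class name, echo as the only "processing", a busy write loop, no per-connection error handling), not how Netty's NioEventLoop is actually written.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ClosedSelectorException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

class MiniReactorSketch {
    /** One thread waits for events on the selector and dispatches them by type. */
    static void eventLoop(Selector selector) {
        ByteBuffer buf = ByteBuffer.allocate(1024);
        try {
            while (selector.isOpen()) {
                selector.select(); // blocks until an event arrives or wakeup()/close() is called
                if (!selector.isOpen()) break;
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (!key.isValid()) continue;
                    if (key.isAcceptable()) {
                        // Accept event: register the new connection for read events.
                        SocketChannel client = ((ServerSocketChannel) key.channel()).accept();
                        if (client != null) {
                            client.configureBlocking(false);
                            client.register(selector, SelectionKey.OP_READ);
                        }
                    } else if (key.isReadable()) {
                        // Read event: read what is available and echo it back.
                        SocketChannel client = (SocketChannel) key.channel();
                        buf.clear();
                        if (client.read(buf) < 0) {
                            client.close(); // peer closed the connection
                            continue;
                        }
                        buf.flip();
                        while (buf.hasRemaining()) client.write(buf); // fine for tiny demo payloads
                    }
                }
            }
        } catch (IOException | ClosedSelectorException e) {
            // selector closed or I/O failure: end the loop
        }
    }

    /** Hypothetical demo helper: runs the reactor, sends one message, returns the echo. */
    static String echoOnce(String message) {
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            server.register(selector, SelectionKey.OP_ACCEPT);
            Thread reactor = new Thread(() -> eventLoop(selector), "reactor");
            reactor.setDaemon(true);
            reactor.start();

            try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                byte[] payload = message.getBytes(StandardCharsets.UTF_8);
                ByteBuffer out = ByteBuffer.wrap(payload);
                while (out.hasRemaining()) client.write(out);
                ByteBuffer reply = ByteBuffer.allocate(payload.length);
                while (reply.hasRemaining() && client.read(reply) >= 0) {
                    // keep reading until the whole echo has arrived
                }
                return new String(reply.array(), 0, reply.position(), StandardCharsets.UTF_8);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

However many clients connect, the server side still uses a single thread; the decode, compute and encode steps from the note would slot in where the echo happens, or be handed off to worker threads in the multithreaded Reactor variants.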

Postscript

Download address for all Netty-related demo code:

https://github.com/harrypottry/nettydemo
