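ixgbe_dev_rx_queue_start() fills the descriptors with the mbuf DMA addresses (through ixgbe_alloc_rx_queue_mbufs()) and then initializes the RDH and RDT registers: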

IXGBE_WRITE_REG(hw, IXGBE_RDH(rxq->reg_idx), 0);

IXGBE_WRITE_REG(hw, IXGBE_RDT(rxq->reg_idx), rxq->nb_rx_desc - 1);

Receive path (excerpt from ixgbe_recv_pkts()):

{

rxdp = &rx_ring[rx_id];

/* If the DD bit written back by the NIC is 0, no packet is ready: break out of the loop */

staterr = rxdp->wb.upper.status_error;

if (!(staterr & rte_cpu_to_le_32(IXGBE_RXDADV_STAT_DD)))

break;

rxd = *rxdp;

/* Allocate a new mbuf */

nmb = rte_mbuf_raw_alloc(rxq->mb_pool);

rxe = &sw_ring[rx_id]; /* Get the sw_ring entry that holds the old mbuf */

rxm = rxe->mbuf; /* rxm points to the old mbuf */

rxe->mbuf = nmb; /* rxe->mbuf now points to the new mbuf */

dma_addr =

rte_cpu_to_le_64(rte_mbuf_data_dma_addr_default(nmb)); /* Get the bus address of the new mbuf */

rxdp->read.hdr_addr = 0; /* Clear the DD bit of the descriptor that now owns the new mbuf; the NIC will read this descriptor later */

rxdp->read.pkt_addr = dma_addr; /* Store the bus address of the new mbuf in the descriptor; the NIC will read this descriptor later */

}

Each RX queue must be set up individually:

/* Allocate and set up 1 RX queue per Ethernet port. */

for (q = 0; q < rx_rings; q++) {

retval = rte_eth_rx_queue_setup(port, q, nb_rxd,

rte_eth_dev_socket_id(port), NULL, mbuf_pool);

if (retval < 0)

return retval;

}

ret = (*dev->dev_ops->rx_queue_setup)(dev, rx_queue_id, nb_rx_desc,

socket_id, &local_conf, mp);

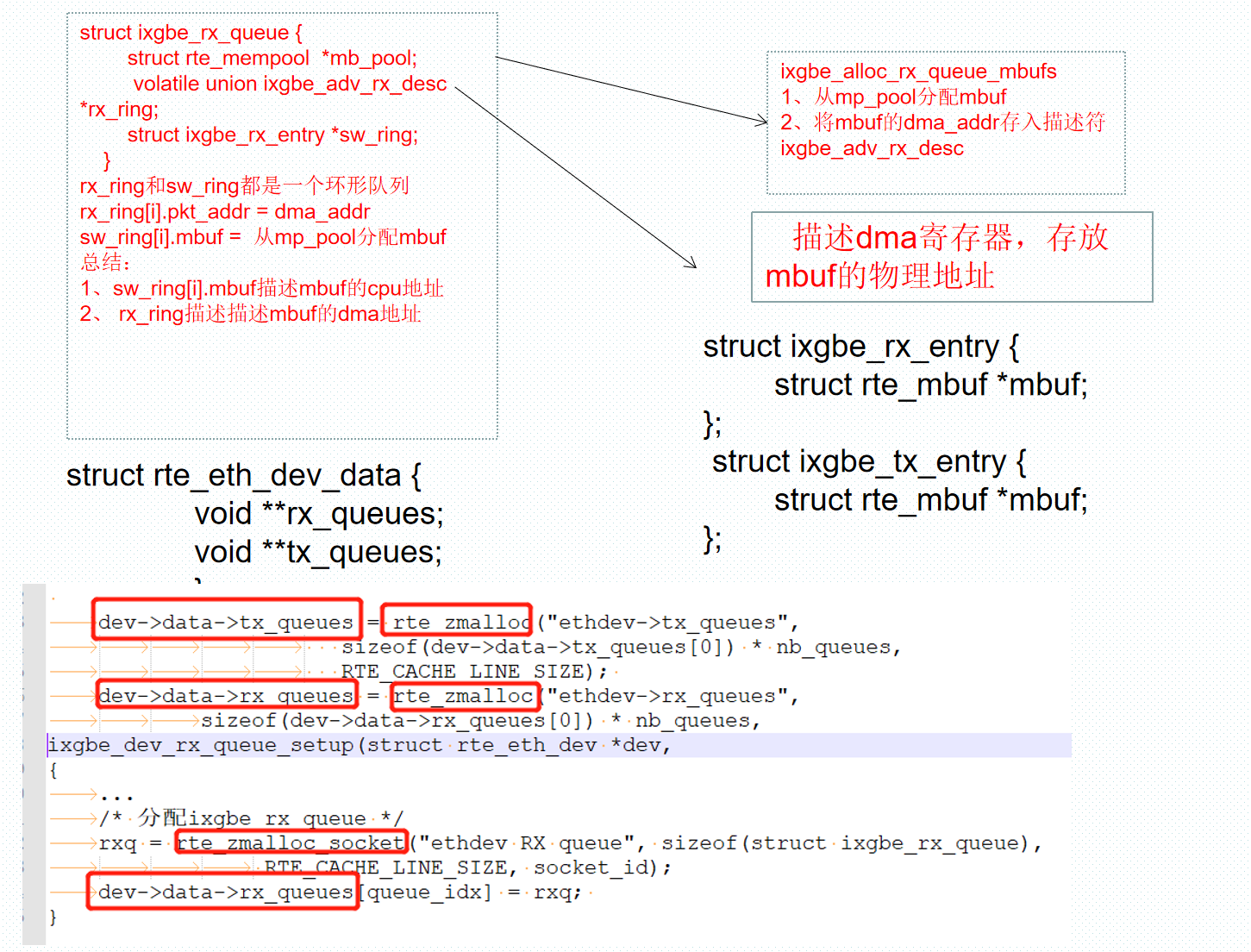

ixgbe_dev_rx_queue_setup()

/**

* Structure associated with each descriptor of the RX ring of a RX queue.

*/

struct ixgbe_rx_entry {

struct rte_mbuf *mbuf; /**< mbuf associated with RX descriptor. */

};

struct ixgbe_tx_entry {

struct rte_mbuf *mbuf; /**< mbuf associated with TX desc, if any. */

uint16_t next_id; /**< Index of next descriptor in ring. */

uint16_t last_id; /**< Index of last scattered descriptor. */

};

struct ixgbe_rx_queue {

struct rte_mempool *mb_pool; /**< mbuf pool to populate RX ring. */

volatile union ixgbe_adv_rx_desc *rx_ring; /**< RX ring virtual address. */ /* each queue has its own descriptor ring */

uint64_t rx_ring_phys_addr; /**< RX ring DMA address. */

volatile uint32_t *rdt_reg_addr; /**< RDT register address. */

volatile uint32_t *rdh_reg_addr; /**< RDH register address. */

struct ixgbe_rx_entry *sw_ring; /**< address of RX software ring. */

};

-

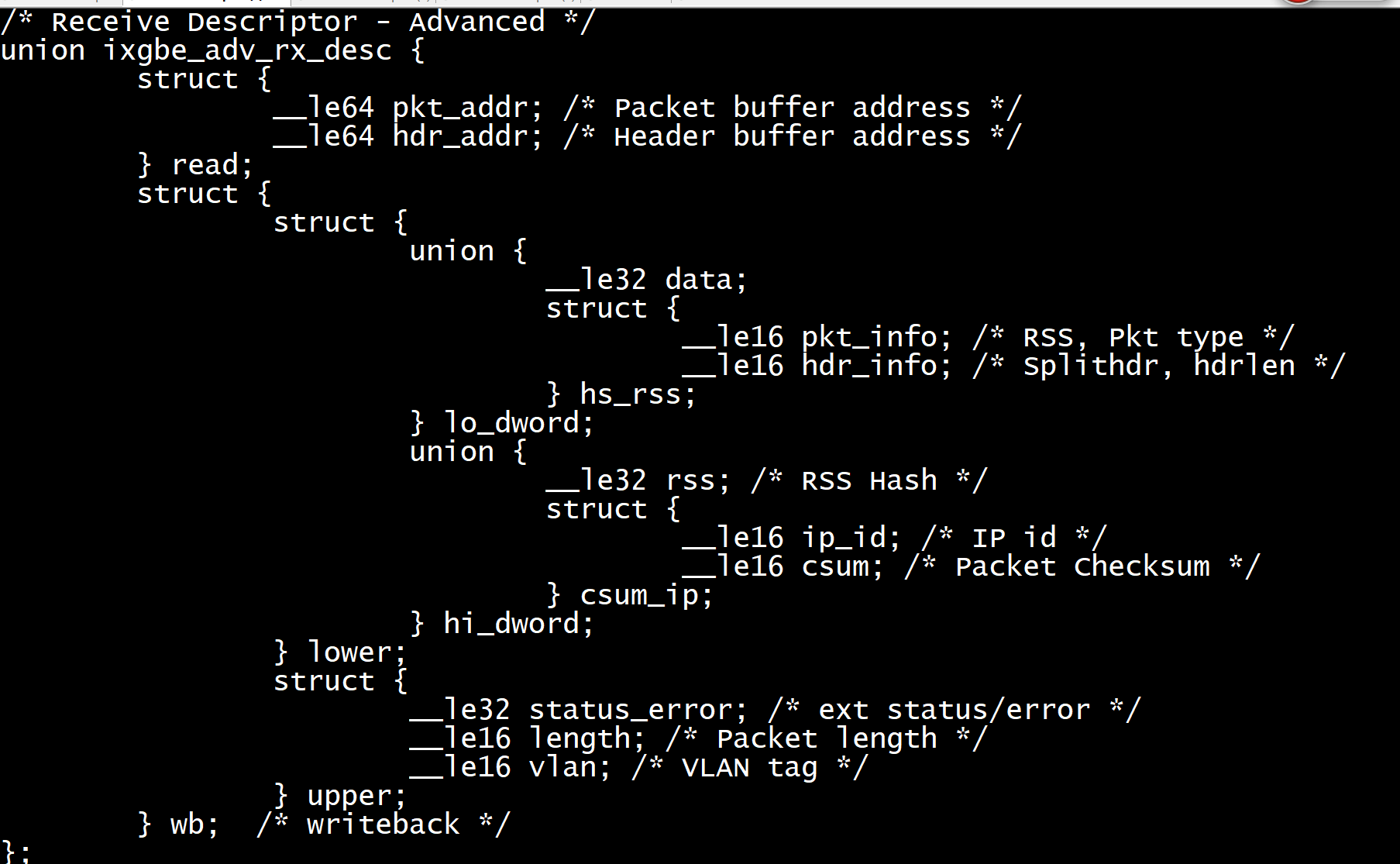

pkt_addr: the physical address of the message data. The network card DMA writes the message data into the corresponding memory space through the physical address.

-

hdr_addr: header information of message, HDR_ The last bit of addr is DD bit, because it is a union structure, that is, status_ The last bit of error also corresponds to DD bit.

The DD bit (Descriptor Done status) marks whether the buffer behind a descriptor is available.

- Conceptually, every time a new packet arrives, the NIC checks whether the DD bit of the current buffer in rx_ring is 0. If it is 0, the buffer is free: the NIC DMA-copies the packet into the buffer and then sets DD to 1. If it is 1, the NIC considers rx_ring full, drops the packet, and records an imiss. (0->1)

- For the application, the DD bit is used the other way round. When reading packets, it first checks whether the DD bit is 1. If it is 1, the NIC has already placed a packet in memory and the packet can be read. After reading, the application installs a new buffer and sets the corresponding DD bit back to 0. If the DD bit is 0, there is no packet to read. (1->0)
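The two directions of the DD handshake described above can be sketched in plain C. This is only an illustration under simplifying assumptions, not driver code: `union rx_desc`, `struct sim_ring`, `nic_receive()`, `drv_poll()` and `DD_BIT` are hypothetical names, the "NIC" is an ordinary function, and no real DMA or byte-order conversion takes place.

```c
#include <stddef.h>
#include <stdint.h>

#define DD_BIT    0x01u /* hypothetical stand-in for IXGBE_RXDADV_STAT_DD */
#define RING_SIZE 4u    /* tiny ring, for illustration only */

/* Simplified descriptor: two views of the same 16 bytes. */
union rx_desc {
    struct {
        uint64_t pkt_addr; /* buffer address, written by the driver */
        uint64_t hdr_addr; /* overlays status_error/length/vlan below */
    } read;
    struct {
        uint64_t lower;
        uint32_t status_error; /* DD lives in this field */
        uint16_t length;
        uint16_t vlan;
    } wb;
};

struct sim_ring {
    union rx_desc desc[RING_SIZE];
    unsigned nic_idx; /* next descriptor the simulated NIC fills */
    unsigned drv_idx; /* next descriptor the driver polls */
    unsigned imiss;   /* packets dropped because the ring was full */
};

/* NIC side (DD 0 -> 1). Returns 1 if the packet was stored, 0 if dropped. */
static int nic_receive(struct sim_ring *r, uint16_t len)
{
    union rx_desc *d = &r->desc[r->nic_idx];

    if (d->wb.status_error & DD_BIT) { /* buffer not yet recycled */
        r->imiss++;
        return 0;
    }
    d->wb.length = len;
    d->wb.status_error = DD_BIT; /* mark the descriptor as done */
    r->nic_idx = (r->nic_idx + 1) % RING_SIZE;
    return 1;
}

/* Driver side (DD 1 -> 0). Returns the packet length, or -1 if none is ready. */
static int drv_poll(struct sim_ring *r, uint64_t new_buf_addr)
{
    union rx_desc *d = &r->desc[r->drv_idx];
    int len;

    if (!(d->wb.status_error & DD_BIT))
        return -1;
    len = d->wb.length;
    d->read.hdr_addr = 0;            /* zeroes status_error, hence DD */
    d->read.pkt_addr = new_buf_addr; /* hand a fresh buffer to the NIC */
    r->drv_idx = (r->drv_idx + 1) % RING_SIZE;
    return len;
}
```

Writing `read.hdr_addr = 0` is enough to clear DD because both views share the same storage, which is the union overlap the text refers to.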

int __attribute__((cold))

ixgbe_dev_rx_queue_setup(struct rte_eth_dev *dev,

uint16_t queue_idx,

uint16_t nb_desc,

unsigned int socket_id,

const struct rte_eth_rxconf *rx_conf,

struct rte_mempool *mp)

{

...

/* Allocate the ixgbe_rx_queue structure */

rxq = rte_zmalloc_socket("ethdev RX queue", sizeof(struct ixgbe_rx_queue),

RTE_CACHE_LINE_SIZE, socket_id);

...

/* Initialize rxq */

rxq->mb_pool = mp;

rxq->nb_rx_desc = nb_desc;

rxq->rx_free_thresh = rx_conf->rx_free_thresh;

rxq->queue_id = queue_idx;

rxq->reg_idx = (uint16_t)((RTE_ETH_DEV_SRIOV(dev).active == 0) ?

queue_idx : RTE_ETH_DEV_SRIOV(dev).def_pool_q_idx + queue_idx);

rxq->port_id = dev->data->port_id;

rxq->crc_len = (uint8_t) ((dev->data->dev_conf.rxmode.hw_strip_crc) ?

0 : ETHER_CRC_LEN);

rxq->drop_en = rx_conf->rx_drop_en;

rxq->rx_deferred_start = rx_conf->rx_deferred_start;

...

/* Allocate the descriptor array. Its element type is union ixgbe_adv_rx_desc and its size is

* RX_RING_SZ = (IXGBE_MAX_RING_DESC + RTE_PMD_IXGBE_RX_MAX_BURST) * sizeof(union ixgbe_adv_rx_desc)

* = (4096 + 32) * sizeof(union ixgbe_adv_rx_desc) */

rz = rte_eth_dma_zone_reserve(dev, "rx_ring", queue_idx,

RX_RING_SZ, IXGBE_ALIGN, socket_id);

...

memset(rz->addr, 0, RX_RING_SZ); /* Clear desc array */

...

/* Set rdt_reg_addr to the address of the RDT register */

rxq->rdt_reg_addr =

IXGBE_PCI_REG_ADDR(hw, IXGBE_RDT(rxq->reg_idx));

/* Set rdh_reg_addr to the address of the RDH register */

rxq->rdh_reg_addr =

IXGBE_PCI_REG_ADDR(hw, IXGBE_RDH(rxq->reg_idx));

...

/* rx_ring_phys_addr holds the bus address of the descriptor array */

rxq->rx_ring_phys_addr = rte_mem_phy2mch(rz->memseg_id, rz->phys_addr);

/* rx_ring holds the virtual address of the descriptor array */

rxq->rx_ring = (union ixgbe_adv_rx_desc *) rz->addr;

...

/* Allocate the entry array and store its address in sw_ring */

rxq->sw_ring = rte_zmalloc_socket("rxq->sw_ring",

sizeof(struct ixgbe_rx_entry) * len,

RTE_CACHE_LINE_SIZE, socket_id);

...

/* rx_queues[queue_idx] points to the new ixgbe_rx_queue */

dev->data->rx_queues[queue_idx] = rxq; /* the dev->data->rx_queues array itself was allocated with rte_zmalloc() */

...

/* Set receive queue parameters */

ixgbe_reset_rx_queue(adapter, rxq);

...

}

ixgbe_recv_pkts()

Write-back during reception:

- The NIC uses DMA to write the frame from the Rx FIFO into the mbuf attached to the Rx ring, and sets the DD bit of the descriptor to 1

- After the driver has taken the mbuf, it sets the DD bit of the descriptor back to 0 and updates RDT

uint16_t

ixgbe_recv_pkts(void *rx_queue, struct rte_mbuf **rx_pkts,

uint16_t nb_pkts)

{

...

nb_rx = 0;

nb_hold = 0;

rxq = rx_queue;

rx_id = rxq->rx_tail; /* Equivalent to next_to_clean in the kernel ixgbe driver */

rx_ring = rxq->rx_ring;

sw_ring = rxq->sw_ring;

...

while (nb_rx < nb_pkts) {

...

/* Get a pointer to the descriptor that rx_tail points to */

rxdp = &rx_ring[rx_id];

/* If the DD bit written back by the NIC is 0, no packet is ready: break out of the loop */

staterr = rxdp->wb.upper.status_error;

if (!(staterr & rte_cpu_to_le_32(IXGBE_RXDADV_STAT_DD)))

break;

/* Copy the descriptor that rx_tail points to */

rxd = *rxdp;

...

/* Allocate a new mbuf */

nmb = rte_mbuf_raw_alloc(rxq->mb_pool);

...

nb_hold++; /* Count the number of mbufs received */

rxe = &sw_ring[rx_id]; /* Get the sw_ring entry that holds the old mbuf */

rx_id++; /* Advance to the next descriptor index; the ring wraps around */

if (rx_id == rxq->nb_rx_desc)

rx_id = 0;

...

rte_ixgbe_prefetch(sw_ring[rx_id].mbuf); /* Prefetch next mbuf */

...

if ((rx_id & 0x3) == 0) {

rte_ixgbe_prefetch(&rx_ring[rx_id]);

rte_ixgbe_prefetch(&sw_ring[rx_id]);

}

...

rxm = rxe->mbuf; /* rxm points to the old mbuf */

rxe->mbuf = nmb; /* rxe->mbuf now points to the new mbuf */

dma_addr =

rte_cpu_to_le_64(rte_mbuf_data_dma_addr_default(nmb)); /* Get the bus address of the new mbuf */

rxdp->read.hdr_addr = 0; /* Clear the DD bit of the descriptor that now owns the new mbuf; the NIC will read this descriptor later */

rxdp->read.pkt_addr = dma_addr; /* Store the bus address of the new mbuf in the descriptor; the NIC will read this descriptor later */

...

pkt_len = (uint16_t) (rte_le_to_cpu_16(rxd.wb.upper.length) -

rxq->crc_len); /* Packet length */

rxm->data_off = RTE_PKTMBUF_HEADROOM;

rte_packet_prefetch((char *)rxm->buf_addr + rxm->data_off);

rxm->nb_segs = 1;

rxm->next = NULL;

rxm->pkt_len = pkt_len;

rxm->data_len = pkt_len;

rxm->port = rxq->port_id;

...

if (likely(pkt_flags & PKT_RX_RSS_HASH)) /* RSS */

rxm->hash.rss = rte_le_to_cpu_32(

rxd.wb.lower.hi_dword.rss);

else if (pkt_flags & PKT_RX_FDIR) { /* FDIR */

rxm->hash.fdir.hash = rte_le_to_cpu_16(

rxd.wb.lower.hi_dword.csum_ip.csum) &

IXGBE_ATR_HASH_MASK;

rxm->hash.fdir.id = rte_le_to_cpu_16(

rxd.wb.lower.hi_dword.csum_ip.ip_id);

}

...

rx_pkts[nb_rx++] = rxm; /* Put the old mbuf into the rx_pkts array */

}

rxq->rx_tail = rx_id; /* rx_tail now points to the next descriptor */

...

nb_hold = (uint16_t) (nb_hold + rxq->nb_rx_hold);

/* If the number of mbufs processed exceeds the threshold (32 by default), update RDT */

if (nb_hold > rxq->rx_free_thresh) {

...

rx_id = (uint16_t) ((rx_id == 0) ?

(rxq->nb_rx_desc - 1) : (rx_id - 1));

IXGBE_PCI_REG_WRITE(rxq->rdt_reg_addr, rx_id); /* Write rx_id to RDT */

nb_hold = 0; /* Reset nb_hold */

}

rxq->nb_rx_hold = nb_hold; /* Save nb_hold for the next call */

return nb_rx;

}
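The deferred RDT update at the end of ixgbe_recv_pkts() can be isolated into a small model. The sketch below is an assumption-laden illustration: `struct sim_rxq` and `sim_finish_burst()` are hypothetical names, and the `rdt` field merely stands in for the hardware register written through IXGBE_PCI_REG_WRITE().

```c
#include <stdint.h>

/* Hypothetical, reduced model of the queue fields used by the RDT update. */
struct sim_rxq {
    uint16_t nb_rx_desc;     /* ring size */
    uint16_t rx_free_thresh; /* write RDT only after this many descriptors */
    uint16_t nb_rx_hold;     /* descriptors recycled but not yet reported */
    uint16_t rx_tail;        /* next descriptor the driver will poll */
    uint32_t rdt;            /* stand-in for the RDT register */
    unsigned rdt_writes;     /* counts simulated register writes */
};

/*
 * Called at the end of a receive burst: rx_id is the index after the last
 * descriptor processed, nb_hold the number of descriptors recycled in
 * this burst.
 */
static void sim_finish_burst(struct sim_rxq *q, uint16_t rx_id, uint16_t nb_hold)
{
    q->rx_tail = rx_id;
    nb_hold = (uint16_t)(nb_hold + q->nb_rx_hold);

    if (nb_hold > q->rx_free_thresh) {
        /* RDT names the last descriptor handed back, i.e. rx_id - 1. */
        rx_id = (uint16_t)((rx_id == 0) ? (q->nb_rx_desc - 1) : (rx_id - 1));
        q->rdt = rx_id;
        q->rdt_writes++;
        nb_hold = 0;
    }
    q->nb_rx_hold = nb_hold;
}
```

Batching the register write this way trades a slightly delayed return of descriptors to the NIC for fewer MMIO writes, since small bursts only accumulate in `nb_rx_hold`.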

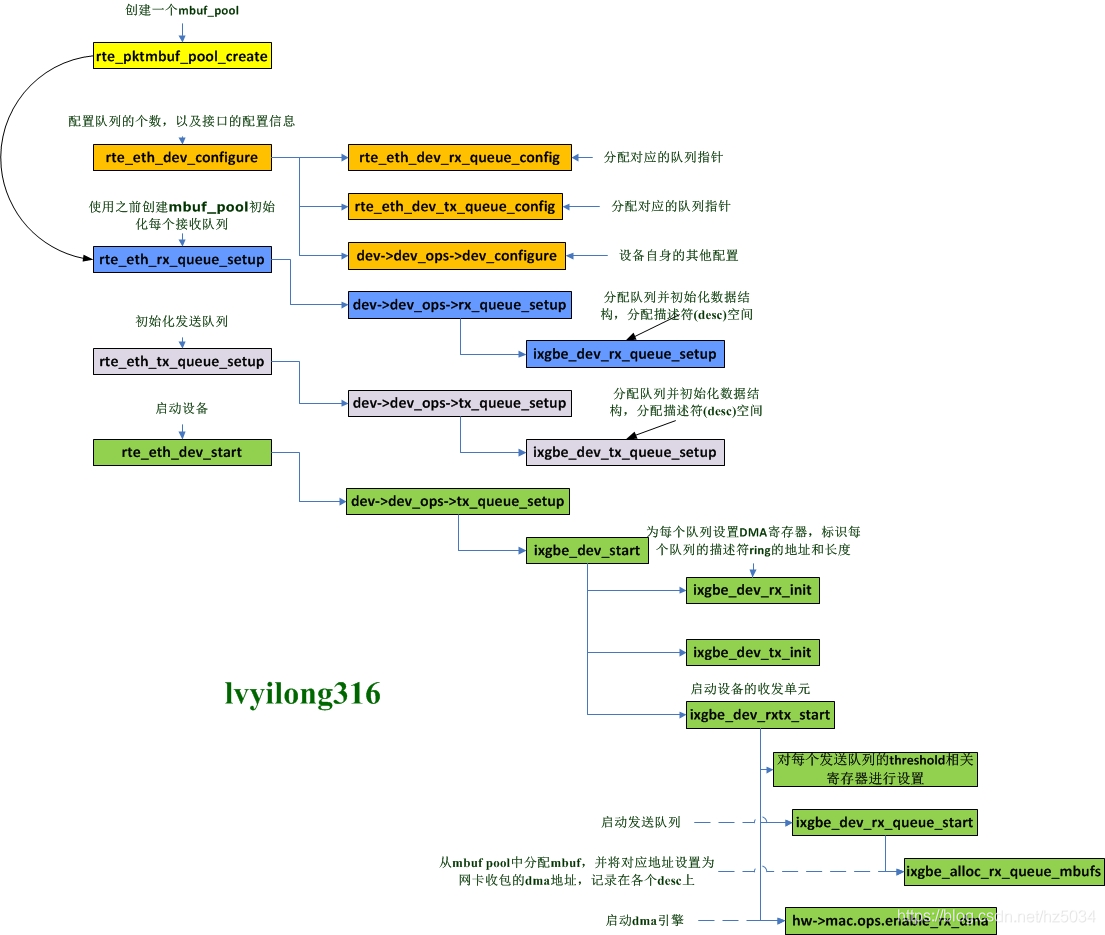

| Function | Purpose |
| --- | --- |
| rte_eth_dev_count() | Returns the number of NICs |
| rte_eth_dev_configure() | Configures the NIC |
| rte_eth_rx_queue_setup(), rte_eth_tx_queue_setup() | Set up a receive / transmit queue for the NIC |
| rte_eth_dev_start() | Starts the NIC |
| rte_eth_rx_burst(), rte_eth_tx_burst() | Receive / transmit packets on the specified queue of the specified NIC |

rte_eth_dev / rte_eth_dev_data

DPDK defines an rte_eth_devices array whose element type is struct rte_eth_dev; each array element represents one NIC. struct rte_eth_dev has four important members: rx_pkt_burst, tx_pkt_burst, dev_ops and data. The first two are the NIC's burst receive and transmit functions. dev_ops is the function table registered by the NIC driver, of type struct eth_dev_ops. data holds the main information about the NIC, of type struct rte_eth_dev_data.
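To make the role of these members concrete, here is a minimal sketch of dispatching a burst receive through a per-port function pointer, roughly the way rte_eth_rx_burst() reaches the driver's receive function in the DPDK version quoted here. All `sim_*` names and `fake_recv_pkts()` are hypothetical, and the structures are reduced to the fields needed for the illustration.

```c
#include <stddef.h>
#include <stdint.h>

#define SIM_MAX_PORTS 4

struct sim_pkt; /* opaque stand-in for struct rte_mbuf */

typedef uint16_t (*sim_rx_burst_t)(void *rxq, struct sim_pkt **pkts,
                                   uint16_t nb_pkts);

struct sim_eth_dev_data {
    void **rx_queues;      /* one opaque pointer per RX queue */
    uint16_t nb_rx_queues;
};

struct sim_eth_dev {
    sim_rx_burst_t rx_pkt_burst;   /* installed by the driver */
    struct sim_eth_dev_data *data;
};

/* One element per port, mirroring the idea of rte_eth_devices[]. */
static struct sim_eth_dev sim_devices[SIM_MAX_PORTS];

/* Generic entry point: look up the port, then call into the driver. */
static uint16_t sim_rx_burst(uint16_t port_id, uint16_t queue_id,
                             struct sim_pkt **pkts, uint16_t nb_pkts)
{
    struct sim_eth_dev *dev;

    if (port_id >= SIM_MAX_PORTS)
        return 0;
    dev = &sim_devices[port_id];
    if (dev->rx_pkt_burst == NULL || dev->data == NULL ||
        queue_id >= dev->data->nb_rx_queues)
        return 0;
    return dev->rx_pkt_burst(dev->data->rx_queues[queue_id], pkts, nb_pkts);
}

/* Toy driver function: the queue is just a count of available packets. */
static uint16_t fake_recv_pkts(void *rxq, struct sim_pkt **pkts,
                               uint16_t nb_pkts)
{
    uint16_t avail = *(uint16_t *)rxq;

    (void)pkts; /* a real driver would fill this array */
    return nb_pkts < avail ? nb_pkts : avail;
}
```

Because the queue is passed as `void *`, the generic layer never needs to know the driver's queue type; only the driver function casts it back.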

struct rte_eth_dev {

/* rx/tx_pkt_burst are registered in rte_bus_probe() */

eth_rx_burst_t rx_pkt_burst; /**< Pointer to PMD receive function. */

eth_tx_burst_t tx_pkt_burst; /**< Pointer to PMD transmit function. */

eth_tx_prep_t tx_pkt_prepare; /**< Pointer to PMD transmit prepare function. */

struct rte_eth_dev_data *data; /**< Pointer to device data */

/* dev_ops is registered in rte_bus_probe() */

const struct eth_dev_ops *dev_ops; /**< Functions exported by PMD */

struct rte_device *device; /**< Backing device */

struct rte_intr_handle *intr_handle; /**< Device interrupt handle */

/** User application callbacks for NIC interrupts */

struct rte_eth_dev_cb_list link_intr_cbs;

/**

* User-supplied functions called from rx_burst to post-process

* received packets before passing them to the user

*/

struct rte_eth_rxtx_callback *post_rx_burst_cbs[RTE_MAX_QUEUES_PER_PORT];

/**

* User-supplied functions called from tx_burst to pre-process

* received packets before passing them to the driver for transmission.

*/

struct rte_eth_rxtx_callback *pre_tx_burst_cbs[RTE_MAX_QUEUES_PER_PORT];

enum rte_eth_dev_state state; /**< Flag indicating the port state */

} __rte_cache_aligned;

struct rte_eth_dev_data {

char name[RTE_ETH_NAME_MAX_LEN]; /**< Unique identifier name */

/* Receive queue array */

void **rx_queues; /**< Array of pointers to RX queues. */

/* Send queue array */

void **tx_queues; /**< Array of pointers to TX queues. */

/* Receive queue array length */

uint16_t nb_rx_queues; /**< Number of RX queues. */

/* Send queue array length */

uint16_t nb_tx_queues; /**< Number of TX queues. */

struct rte_eth_dev_sriov sriov; /**< SRIOV data */

void *dev_private; /**< PMD-specific private data */

struct rte_eth_link dev_link;

/**< Link-level information & status */

struct rte_eth_conf dev_conf; /**< Configuration applied to device. */

uint16_t mtu; /**< Maximum Transmission Unit. */

uint32_t min_rx_buf_size;

/**< Common rx buffer size handled by all queues */

uint64_t rx_mbuf_alloc_failed; /**< RX ring mbuf allocation failures. */

struct ether_addr* mac_addrs;/**< Device Ethernet Link address. */

uint64_t mac_pool_sel[ETH_NUM_RECEIVE_MAC_ADDR];

/** bitmap array of associating Ethernet MAC addresses to pools */

struct ether_addr* hash_mac_addrs;

/** Device Ethernet MAC addresses of hash filtering. */

uint8_t port_id; /**< Device [external] port identifier. */

__extension__

uint8_t promiscuous : 1, /**< RX promiscuous mode ON(1) / OFF(0). */

scattered_rx : 1, /**< RX of scattered packets is ON(1) / OFF(0) */

all_multicast : 1, /**< RX all multicast mode ON(1) / OFF(0). */

dev_started : 1, /**< Device state: STARTED(1) / STOPPED(0). */

lro : 1; /**< RX LRO is ON(1) / OFF(0) */

uint8_t rx_queue_state[RTE_MAX_QUEUES_PER_PORT];

/** Queues state: STARTED(1) / STOPPED(0) */

uint8_t tx_queue_state[RTE_MAX_QUEUES_PER_PORT];

/** Queues state: STARTED(1) / STOPPED(0) */

uint32_t dev_flags; /**< Capabilities */

enum rte_kernel_driver kdrv; /**< Kernel driver passthrough */

int numa_node; /**< NUMA node connection */

struct rte_vlan_filter_conf vlan_filter_conf;

/**< VLAN filter configuration. */

};

struct rte_eth_dev rte_eth_devices[RTE_MAX_ETHPORTS];

static struct rte_eth_dev_data *rte_eth_dev_data;

rte_eth_dev_configure()

The main job of rte_eth_dev_configure() is to allocate the receive / transmit queue arrays. The array element type is void *, and each array element represents one receive / transmit queue.
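As a rough illustration of what "allocating the queue array" means, the sketch below allocates a zeroed array of `void *` slots with the standard `calloc()` instead of rte_zmalloc(). `struct sim_dev_data` and `sim_rx_queue_config()` are hypothetical names, and the sketch deliberately ignores resizing and releasing existing queues, which the real function has to handle.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical reduction of rte_eth_dev_data to the two relevant fields. */
struct sim_dev_data {
    void **rx_queues;      /* array of opaque per-queue pointers */
    uint16_t nb_rx_queues; /* number of slots in rx_queues */
};

/*
 * (Re)allocate the RX queue pointer array. Passing 0 frees the array.
 * Returns 0 on success and -1 if the allocation fails.
 * Simplification: queues referenced by an old array are not released here.
 */
static int sim_rx_queue_config(struct sim_dev_data *d, uint16_t nb_queues)
{
    free(d->rx_queues);
    d->rx_queues = NULL;
    d->nb_rx_queues = 0;

    if (nb_queues == 0)
        return 0;

    /* Every slot starts as NULL until a queue setup call fills it. */
    d->rx_queues = calloc(nb_queues, sizeof(d->rx_queues[0]));
    if (d->rx_queues == NULL)
        return -1;

    d->nb_rx_queues = nb_queues;
    return 0;
}
```

The slots stay NULL until a per-queue setup call (rte_eth_rx_queue_setup() in DPDK) stores a driver-specific queue structure in them.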

int

rte_eth_dev_configure(uint8_t port_id, uint16_t nb_rx_q, uint16_t nb_tx_q,

const struct rte_eth_conf *dev_conf)

{

struct rte_eth_dev *dev;

struct rte_eth_dev_info dev_info;

int diag;

/* Check whether port_id is valid */

RTE_ETH_VALID_PORTID_OR_ERR_RET(port_id, -EINVAL);

/* Check whether the number of receive queues is greater than the upper limit of DPDK */

if (nb_rx_q > RTE_MAX_QUEUES_PER_PORT) {

RTE_PMD_DEBUG_TRACE(

"Number of RX queues requested (%u) is greater than max supported(%d)\n",

nb_rx_q, RTE_MAX_QUEUES_PER_PORT);

return -EINVAL;

}

/* Check whether the number of send queues is greater than the upper limit of DPDK */

if (nb_tx_q > RTE_MAX_QUEUES_PER_PORT) {

RTE_PMD_DEBUG_TRACE(

"Number of TX queues requested (%u) is greater than max supported(%d)\n",

nb_tx_q, RTE_MAX_QUEUES_PER_PORT);

return -EINVAL;

}

/* Get the device corresponding to port_id */

dev = &rte_eth_devices[port_id];

/* Check that dev_infos_get and dev_configure are defined */

RTE_FUNC_PTR_OR_ERR_RET(*dev->dev_ops->dev_infos_get, -ENOTSUP);

RTE_FUNC_PTR_OR_ERR_RET(*dev->dev_ops->dev_configure, -ENOTSUP);

/* Check whether the device is started */

if (dev->data->dev_started) {

RTE_PMD_DEBUG_TRACE(

"port %d must be stopped to allow configuration\n", port_id);

return -EBUSY;

}

/* Copy the dev_conf parameter into the dev structure */

/* Copy dev_conf to dev->data->dev_conf */

memcpy(&dev->data->dev_conf, dev_conf, sizeof(dev->data->dev_conf));

/*

* Check that the numbers of RX and TX queues are not greater

* than the maximum number of RX and TX queues supported by the

* configured device.

*/

/* For ixgbe this is ixgbe_dev_info_get() */

(*dev->dev_ops->dev_infos_get)(dev, &dev_info);

/* Check whether the number of receive / send queues is 0 at the same time */

if (nb_rx_q == 0 && nb_tx_q == 0) {

RTE_PMD_DEBUG_TRACE("ethdev port_id=%d both rx and tx queue cannot be 0\n", port_id);

return -EINVAL;

}

/* Check whether the number of receive queues is greater than the upper limit of the network card */

if (nb_rx_q > dev_info.max_rx_queues) {

RTE_PMD_DEBUG_TRACE("ethdev port_id=%d nb_rx_queues=%d > %d\n",

port_id, nb_rx_q, dev_info.max_rx_queues);

return -EINVAL;

}

/* Check whether the number of sending queues is greater than the upper limit of the network card */

if (nb_tx_q > dev_info.max_tx_queues) {

RTE_PMD_DEBUG_TRACE("ethdev port_id=%d nb_tx_queues=%d > %d\n",

port_id, nb_tx_q, dev_info.max_tx_queues);

return -EINVAL;

}

/* Check that the device supports requested interrupts */

if ((dev_conf->intr_conf.lsc == 1) &&

(!(dev->data->dev_flags & RTE_ETH_DEV_INTR_LSC))) {

RTE_PMD_DEBUG_TRACE("driver %s does not support lsc\n",

dev->device->driver->name);

return -EINVAL;

}

if ((dev_conf->intr_conf.rmv == 1) &&

(!(dev->data->dev_flags & RTE_ETH_DEV_INTR_RMV))) {

RTE_PMD_DEBUG_TRACE("driver %s does not support rmv\n",

dev->device->driver->name);

return -EINVAL;

}

/*

* If jumbo frames are enabled, check that the maximum RX packet

* length is supported by the configured device.

*/

if (dev_conf->rxmode.jumbo_frame == 1) {

if (dev_conf->rxmode.max_rx_pkt_len >

dev_info.max_rx_pktlen) {

RTE_PMD_DEBUG_TRACE("ethdev port_id=%d max_rx_pkt_len %u"

" > max valid value %u\n",

port_id,

(unsigned)dev_conf->rxmode.max_rx_pkt_len,

(unsigned)dev_info.max_rx_pktlen);

return -EINVAL;

} else if (dev_conf->rxmode.max_rx_pkt_len < ETHER_MIN_LEN) {

RTE_PMD_DEBUG_TRACE("ethdev port_id=%d max_rx_pkt_len %u"

" < min valid value %u\n",

port_id,

(unsigned)dev_conf->rxmode.max_rx_pkt_len,

(unsigned)ETHER_MIN_LEN);

return -EINVAL;

}

} else {

if (dev_conf->rxmode.max_rx_pkt_len < ETHER_MIN_LEN ||

dev_conf->rxmode.max_rx_pkt_len > ETHER_MAX_LEN) /* Less than 64 or greater than 1518 */

/* Use default value */

dev->data->dev_conf.rxmode.max_rx_pkt_len =

ETHER_MAX_LEN; /* The default value is 1518 */

}

/*

* Setup new number of RX/TX queues and reconfigure device.

*/

/* Allocate the receive queue array, store its address in dev->data->rx_queues and its length in dev->data->nb_rx_queues */

diag = rte_eth_dev_rx_queue_config(dev, nb_rx_q);

if (diag != 0) {

RTE_PMD_DEBUG_TRACE("port%d rte_eth_dev_rx_queue_config = %d\n",

port_id, diag);

return diag;

}

/* Allocate the transmit queue array, store its address in dev->data->tx_queues and its length in dev->data->nb_tx_queues */

diag = rte_eth_dev_tx_queue_config(dev, nb_tx_q);

if (diag != 0) {

RTE_PMD_DEBUG_TRACE("port%d rte_eth_dev_tx_queue_config = %d\n",

port_id, diag);

rte_eth_dev_rx_queue_config(dev, 0);

return diag;

}

/* For ixgbe this is ixgbe_dev_configure() */

diag = (*dev->dev_ops->dev_configure)(dev);

if (diag != 0) {

RTE_PMD_DEBUG_TRACE("port%d dev_configure = %d\n",

port_id, diag);

rte_eth_dev_rx_queue_config(dev, 0);

rte_eth_dev_tx_queue_config(dev, 0);

return diag;

}

return 0;

}

rte_eth_dev_rx_queue_config(): creates the array of RX queues

static int

rte_eth_dev_rx_queue_config(struct rte_eth_dev *dev, uint16_t nb_queues)

{

...

dev->data->rx_queues = rte_zmalloc("ethdev->rx_queues",

sizeof(dev->data->rx_queues[0]) * nb_queues,

RTE_CACHE_LINE_SIZE);

...

dev->data->nb_rx_queues = nb_queues; /* Update nb_rx_queues */

...

}

rte_eth_dev_tx_queue_config()

static int

rte_eth_dev_tx_queue_config(struct rte_eth_dev *dev, uint16_t nb_queues)

{

...

dev->data->tx_queues = rte_zmalloc("ethdev->tx_queues",

sizeof(dev->data->tx_queues[0]) * nb_queues,

RTE_CACHE_LINE_SIZE);

...

dev->data->nb_tx_queues = nb_queues; /* Update nb_tx_queues */

...

}

ixgbe_dev_configure()

static int

ixgbe_dev_configure(struct rte_eth_dev *dev)

{

...

/* multiple queue mode checking */

ret = ixgbe_check_mq_mode(dev);

...

/*

* Initialize to TRUE. If any of Rx queues doesn't meet the bulk

* allocation or vector Rx preconditions we will reset it.

*/

adapter->rx_bulk_alloc_allowed = true;

adapter->rx_vec_allowed = true;

...

}

int __attribute__((cold))

ixgbe_dev_rx_queue_start(struct rte_eth_dev *dev, uint16_t rx_queue_id)

{

...

/* Allocate mbufs for the receive queue */

if (ixgbe_alloc_rx_queue_mbufs(rxq) != 0) {

...

/* Enable reception */

rxdctl = IXGBE_READ_REG(hw, IXGBE_RXDCTL(rxq->reg_idx));

rxdctl |= IXGBE_RXDCTL_ENABLE;

IXGBE_WRITE_REG(hw, IXGBE_RXDCTL(rxq->reg_idx), rxdctl);

...

/* Write RDH to 0 */

IXGBE_WRITE_REG(hw, IXGBE_RDH(rxq->reg_idx), 0);

/* Write rxq->nb_rx_desc - 1 to RDT */

IXGBE_WRITE_REG(hw, IXGBE_RDT(rxq->reg_idx), rxq->nb_rx_desc - 1);

/* Set the receive queue status to RTE_ETH_QUEUE_STATE_STARTED */

dev->data->rx_queue_state[rx_queue_id] = RTE_ETH_QUEUE_STATE_STARTED;

...

}

static int __attribute__((cold))

ixgbe_alloc_rx_queue_mbufs(struct ixgbe_rx_queue *rxq)

{

struct ixgbe_rx_entry *rxe = rxq->sw_ring;

uint64_t dma_addr;

unsigned int i;

/* Initialize software ring entries */

for (i = 0; i < rxq->nb_rx_desc; i++) {

volatile union ixgbe_adv_rx_desc *rxd;

struct rte_mbuf *mbuf = rte_mbuf_raw_alloc(rxq->mb_pool); /* Allocate an mbuf */

if (mbuf == NULL) {

PMD_INIT_LOG(ERR, "RX mbuf alloc failed queue_id=%u",

(unsigned) rxq->queue_id);

return -ENOMEM;

}

mbuf->data_off = RTE_PKTMBUF_HEADROOM;

mbuf->port = rxq->port_id;

dma_addr =

rte_cpu_to_le_64(rte_mbuf_data_dma_addr_default(mbuf)); /* Bus address of the mbuf data buffer */

rxd = &rxq->rx_ring[i];

rxd->read.hdr_addr = 0;

rxd->read.pkt_addr = dma_addr; /* Store the bus address in rxd->read.pkt_addr */

rxe[i].mbuf = mbuf; /* Attach the mbuf to the sw_ring entry */

}

return 0;

}
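The pairing that ixgbe_alloc_rx_queue_mbufs() establishes, where descriptor i carries the buffer address and software ring entry i remembers the owning mbuf, can be modelled without DPDK. The following is a sketch under stated assumptions: all `sim_*` names and `SIM_*` constants are hypothetical, `malloc()` replaces the mempool, and a plain virtual address is stored where the driver would store a bus address.

```c
#include <stdint.h>
#include <stdlib.h>

#define SIM_HEADROOM 128u  /* stand-in for RTE_PKTMBUF_HEADROOM */
#define SIM_BUF_SIZE 2048u /* arbitrary buffer size for the sketch */

struct sim_mbuf {
    uint16_t data_off;               /* offset of packet data in buf */
    unsigned char buf[SIM_BUF_SIZE];
};

struct sim_desc {  /* "read" format of a descriptor */
    uint64_t pkt_addr;
    uint64_t hdr_addr;
};

struct sim_entry { /* software ring entry */
    struct sim_mbuf *mbuf;
};

/* Fill n descriptors and their matching software ring entries. */
static int sim_fill_ring(struct sim_desc *ring, struct sim_entry *sw, unsigned n)
{
    unsigned i;

    for (i = 0; i < n; i++) {
        struct sim_mbuf *m = malloc(sizeof(*m));

        if (m == NULL) {
            /* Undo the entries already filled, then report failure. */
            while (i-- > 0) {
                free(sw[i].mbuf);
                sw[i].mbuf = NULL;
            }
            return -1;
        }
        m->data_off = SIM_HEADROOM;
        ring[i].hdr_addr = 0; /* DD starts cleared */
        /* Assumption: virtual address used in place of a bus address. */
        ring[i].pkt_addr = (uint64_t)(uintptr_t)(m->buf + m->data_off);
        sw[i].mbuf = m;       /* remember which mbuf backs slot i */
    }
    return 0;
}
```

Keeping the two arrays index-aligned is what lets the receive path find the mbuf for a completed descriptor with a single `sw_ring[rx_id]` lookup.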