I. Basic Introduction

1.1 Netty knowledge

1.1.1 general

Official website: https://netty.io/

- Netty is an NIO client-server framework that enables quick and easy development of network applications such as protocol servers and clients. It greatly simplifies and streamlines network programming such as TCP and UDP socket servers.

- Netty is an asynchronous, event-driven network application framework built on Java NIO, and it lets you develop network applications quickly. It provides high-level abstractions that simplify programming TCP and UDP servers, while still letting you use the lower-level APIs.

- Netty's internal implementation is complex, but it exposes an easy-to-use API that decouples business logic from network-handling code. Because Netty is built entirely on NIO, it is asynchronous throughout.

- Netty is the most popular NIO framework and has been proven in a large number of commercial projects. Many frameworks and open-source components use Netty for their underlying RPC, such as Dubbo and Elasticsearch. Here are some of the Netty features listed on the official website:

1.1.2 design

- A unified API for various transport types (blocking and non-blocking sockets use the same API; only the configuration differs).

- Based on a flexible and extensible event model that allows a clear separation of concerns.

- Highly customizable threading model: a single thread, or one or more thread pools.

- True connectionless datagram socket (UDP) support (since 3.1).

1.1.3 ease of use

- Complete Javadoc documentation and sample code.

- No additional dependencies are required. JDK 5 (Netty 3.x) or JDK 6 (Netty 4.x) is sufficient.

1.1.4 performance

- Better throughput and lower latency.

- Less resource consumption.

- Minimize unnecessary memory copies.

1.1.5 safety

- Full SSL/TLS and StartTLS support

1.2 why study Netty

1.2.1 traditional HTTP server

- Create a ServerSocket and bind it to a port to listen on

- Clients send requests to this port

- The server calls accept() to obtain a Socket connection object for each client

- Start a new thread to handle the connection

- Read from the Socket to get a byte stream

- Decode the byte stream according to the protocol to get an HTTP request object

- Handle the HTTP request and wrap the result in an HttpResponse object

- Encode the response according to the protocol, serializing it into a byte stream

- Write the byte stream to the Socket to send it to the client

- Loop to handle the next HTTP request

- It is called an HTTP server only because the protocol it encodes and decodes is HTTP. If the protocol is the Redis protocol, it becomes a Redis server; if it is WebSocket, it becomes a WebSocket server; and so on.

- With Netty, you can define your own codec and implement a server for your own specific protocol.

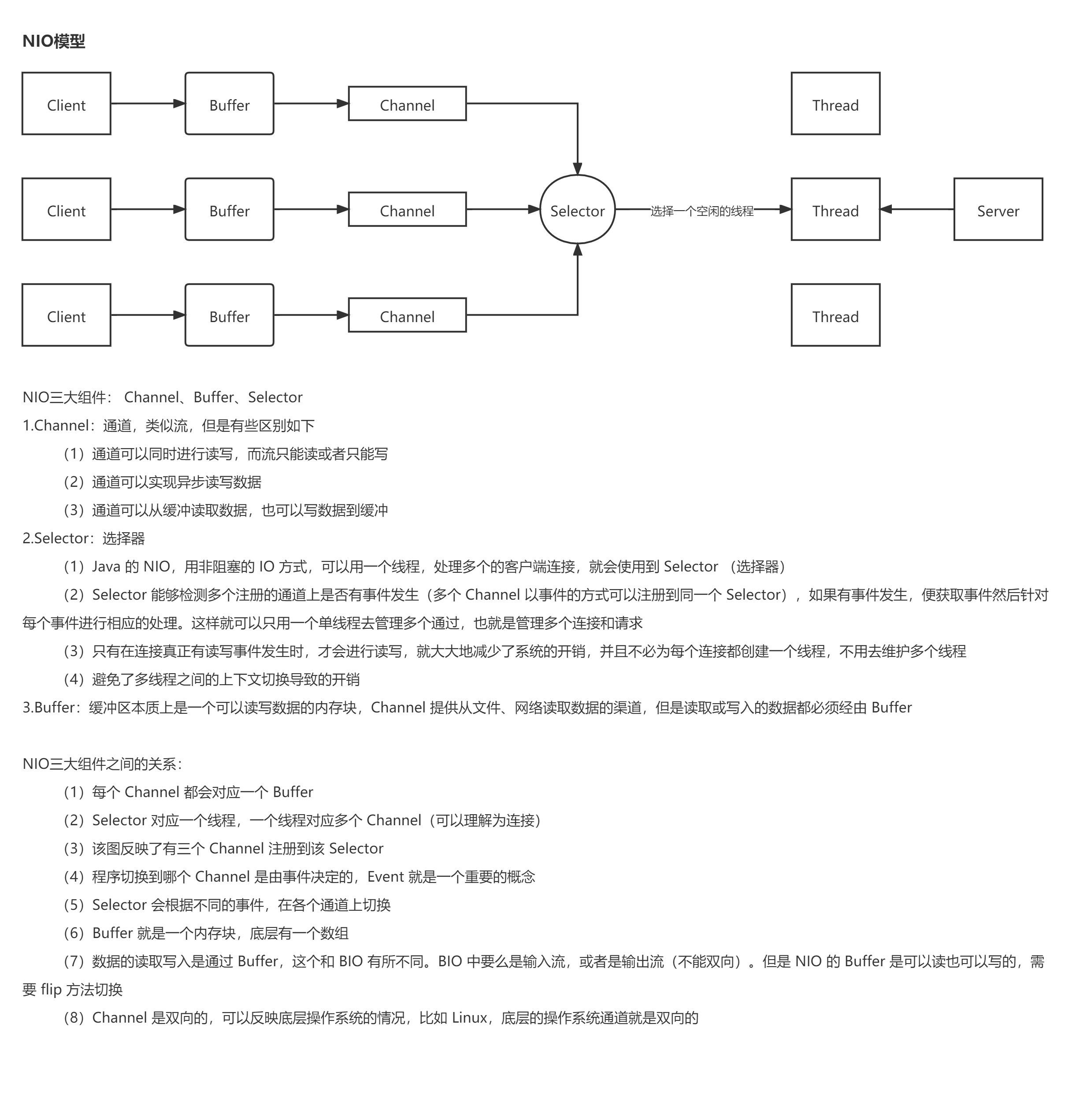
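The blocking, thread-per-connection flow from the list above can be sketched with plain JDK sockets. This is a minimal sketch under stated assumptions. It uses a hypothetical one-line-per-request text protocol instead of HTTP. The names `BlockingLineServer`, `start` and `handle` are illustrative and do not come from Netty or the original article.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical line protocol: each request and each response is one text line.
class BlockingLineServer {

    // Create the ServerSocket, then run the accept loop on a background thread.
    static ServerSocket start() throws IOException {
        ServerSocket server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress()); // any free port
        Thread acceptor = new Thread(() -> {
            while (!server.isClosed()) {
                try {
                    Socket socket = server.accept();                 // blocks until a client connects
                    Thread worker = new Thread(() -> serve(socket)); // one thread per connection
                    worker.setDaemon(true);
                    worker.start();
                } catch (IOException e) {
                    return;                                          // server socket was closed
                }
            }
        }, "acceptor");
        acceptor.setDaemon(true);
        acceptor.start();
        return server;
    }

    private static void serve(Socket socket) {
        try (Socket s = socket;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String request;
            while ((request = in.readLine()) != null) { // blocking read + "decode"
                out.println(handle(request));           // "encode" + blocking write
            }
        } catch (IOException ignored) {
            // client went away: this thread simply ends
        }
    }

    // Business logic (stands in for handling an HTTP request).
    static String handle(String request) {
        return "echo: " + request;
    }
}
```

Every connection ties up one thread for its whole lifetime, even while that thread is only waiting in `accept()` or `readLine()`. NIO and Netty address exactly this cost.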

1.2.2 NIO under concurrent threads

- NIO is commonly read as Non-blocking IO (in the JDK the name officially stands for New I/O). It differs from BIO, which stands for Blocking IO. What does blocking mean here?

- accept() blocks: it returns only when a new connection arrives, and only then can the main thread continue

- read() blocks: it returns only when the request data arrives, and only then can the worker thread continue processing

- write() blocks: it returns only after the data has been copied into the socket's send buffer (which can stall when the client reads slowly), and only then can the worker thread go on to read the next request

- Therefore, in the traditional multi-threaded server using blocking IO, threads spend most of their time blocked, and each blocked thread still costs memory and scheduling overhead.

- So how does NIO avoid blocking? It uses an event mechanism: a single thread can do all the work of accepting connections, reading, writing and processing requests. When there is nothing to do, it does not busy-loop; it sleeps until the next event arrives. Such a thread is called an NIO thread.

- For more on NIO, see the previous blog post.
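As a rough illustration of a single NIO thread, the JDK-only sketch below runs one `Selector` loop. That loop accepts connections and does the reading and writing for every client. It assumes a trivial echo protocol. `SingleThreadNioServer` and `roundTrip` are hypothetical names, and the write path is deliberately simplified.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

// Hypothetical echo server: ONE thread does accept, read and write for every client.
class SingleThreadNioServer {

    static ServerSocketChannel start() throws IOException {
        Selector selector = Selector.open();
        ServerSocketChannel server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0)); // any free port
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);

        Thread nioThread = new Thread(() -> {
            try {
                while (true) {
                    selector.select(); // sleeps until at least one registered channel is ready
                    Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                    while (it.hasNext()) {
                        SelectionKey key = it.next();
                        it.remove();
                        if (!key.isValid()) continue;
                        if (key.isAcceptable()) {
                            SocketChannel client = server.accept(); // does not block
                            if (client == null) continue;
                            client.configureBlocking(false);
                            client.register(selector, SelectionKey.OP_READ);
                        } else if (key.isReadable()) {
                            SocketChannel client = (SocketChannel) key.channel();
                            ByteBuffer buf = ByteBuffer.allocate(256);
                            if (client.read(buf) < 0) { key.cancel(); client.close(); continue; }
                            buf.flip();
                            // Echo back. Simplified: a real server would register OP_WRITE
                            // instead of spinning when the send buffer is full.
                            while (buf.hasRemaining()) client.write(buf);
                        }
                    }
                }
            } catch (IOException e) {
                // selector or server closed: leave the loop
            }
        }, "nio-thread");
        nioThread.setDaemon(true);
        nioThread.start();
        return server;
    }

    // Client-side helper for the demo: send msg (blocking mode) and read the same number of bytes back.
    static String roundTrip(SocketChannel ch, String msg) throws IOException {
        byte[] bytes = msg.getBytes(StandardCharsets.UTF_8);
        ch.write(ByteBuffer.wrap(bytes));
        ByteBuffer reply = ByteBuffer.allocate(bytes.length);
        while (reply.hasRemaining() && ch.read(reply) >= 0) { }
        return new String(reply.array(), StandardCharsets.UTF_8);
    }
}
```

Two clients connected at the same time are both served by the same `nio-thread`, which is the point of the NIO model.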

1.2.3 Netty

- Netty is based on NIO, which provides a higher level of abstraction.

- In Netty, the Accept connection can be handled by a separate thread pool, and the read-write operation is handled by another thread pool.

- Accept connections and read / write operations can also be processed using the same thread pool. The request processing logic can be processed using a separate thread pool or together with the read-write thread. Each thread in the thread pool is a NIO thread. Users can assemble according to the actual situation and construct a concurrency model to meet the system requirements.

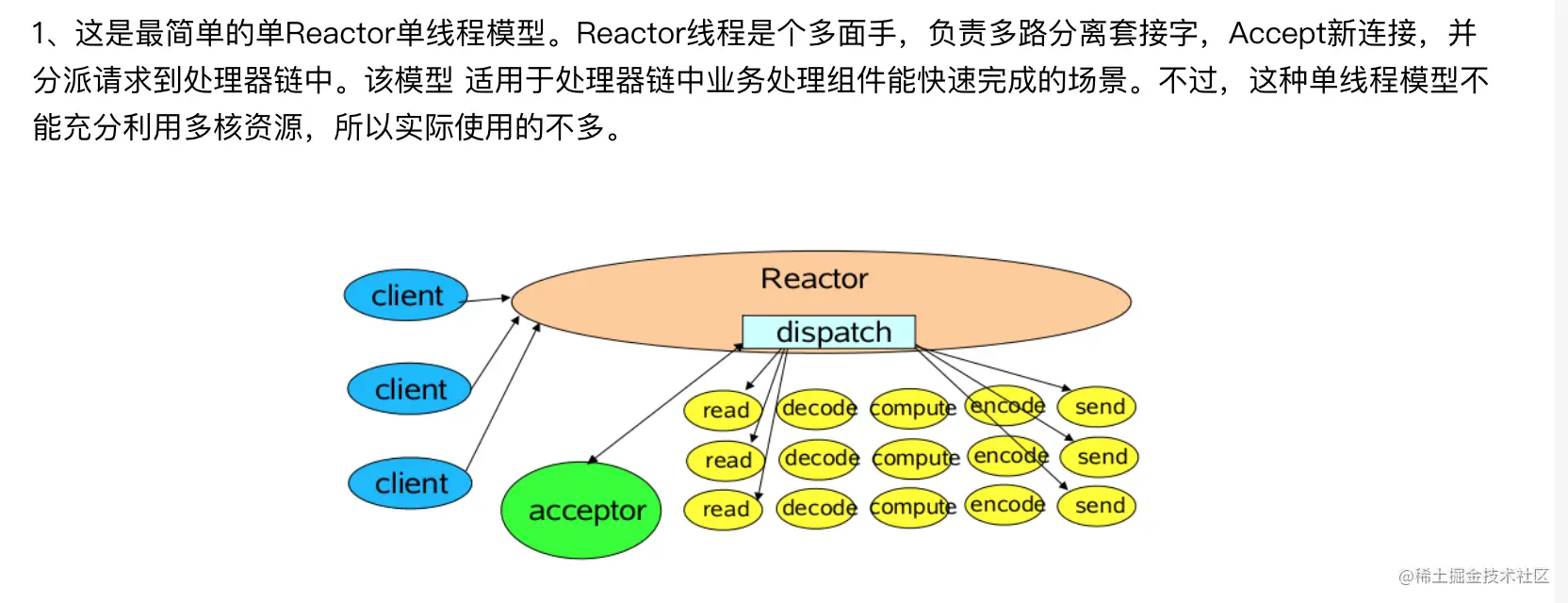

1.3 Reactor thread model

Reactor single thread model

One NIO thread + one accept thread:

Reactor multithreading model

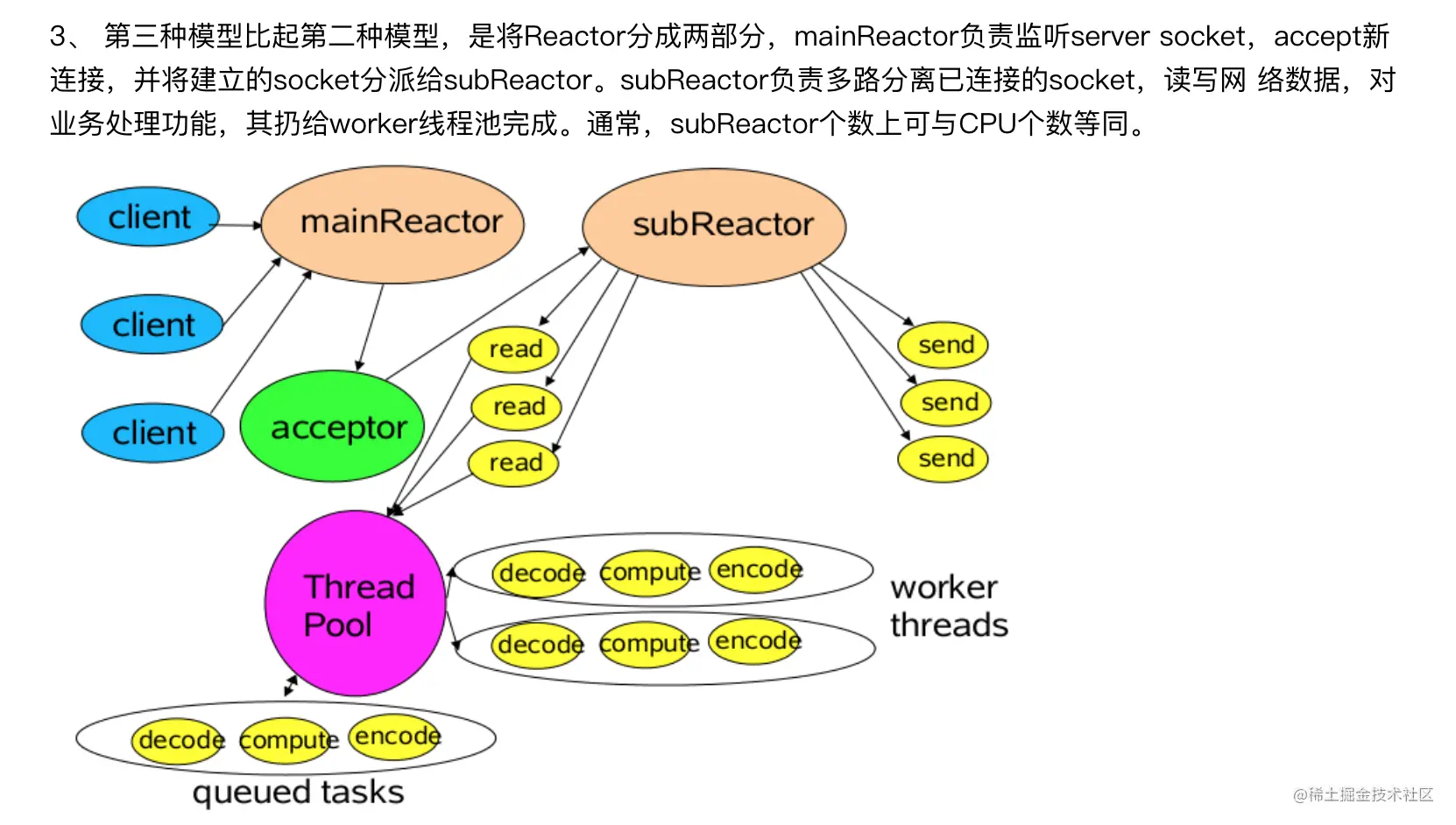

Reactor master-slave model

Master slave Reactor multithreading: NIO thread pools of multiple acceptor s are used to accept connections from clients
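The master-slave model can be approximated in plain JDK NIO:

- A boss thread with its own `Selector` only accepts connections.
- It hands each accepted channel, round-robin, to one of several worker threads.
- Each worker runs its own `Selector`.

This is a simplified sketch, not Netty's implementation. All class and method names are hypothetical. Each worker replies with its own name so the hand-off is visible.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical names throughout; plain JDK NIO, not Netty.
class MasterSlaveReactor {

    // Slave reactor: one thread + one Selector serving many client channels.
    static final class Worker implements Runnable {
        final String name;
        final Selector selector;
        final Queue<SocketChannel> pending = new ConcurrentLinkedQueue<>();

        Worker(String name) throws IOException {
            this.name = name;
            this.selector = Selector.open();
        }

        // Called on the boss thread: queue the channel, then wake up this worker's select().
        void hand(SocketChannel ch) {
            pending.add(ch);
            selector.wakeup();
        }

        @Override
        public void run() {
            try {
                while (true) {
                    selector.select();
                    SocketChannel ch;
                    while ((ch = pending.poll()) != null) { // register on the worker's own thread
                        ch.configureBlocking(false);
                        ch.register(selector, SelectionKey.OP_READ);
                    }
                    Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                    while (it.hasNext()) {
                        SelectionKey key = it.next();
                        it.remove();
                        if (!key.isValid() || !key.isReadable()) continue;
                        SocketChannel client = (SocketChannel) key.channel();
                        if (client.read(ByteBuffer.allocate(256)) < 0) { key.cancel(); client.close(); continue; }
                        client.write(ByteBuffer.wrap((name + "\n").getBytes(StandardCharsets.UTF_8)));
                    }
                }
            } catch (IOException e) {
                // selector closed: stop this reactor
            }
        }
    }

    // Master reactor: its own thread + Selector, only accepts connections.
    static ServerSocketChannel start(int workerCount) throws IOException {
        Worker[] workers = new Worker[workerCount];
        for (int i = 0; i < workerCount; i++) {
            workers[i] = new Worker("worker-" + i);
            Thread t = new Thread(workers[i], workers[i].name);
            t.setDaemon(true);
            t.start();
        }
        Selector bossSelector = Selector.open();
        ServerSocketChannel server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
        server.configureBlocking(false);
        server.register(bossSelector, SelectionKey.OP_ACCEPT);
        int[] next = {0}; // only touched by the boss thread
        Thread boss = new Thread(() -> {
            try {
                while (true) {
                    bossSelector.select();
                    bossSelector.selectedKeys().clear();
                    SocketChannel ch;
                    while ((ch = server.accept()) != null) { // round-robin hand-off to workers
                        workers[Math.floorMod(next[0]++, workers.length)].hand(ch);
                    }
                }
            } catch (IOException e) {
                // server closed: stop accepting
            }
        }, "boss");
        boss.setDaemon(true);
        boss.start();
        return server;
    }

    // Client-side helper for the demo: send a message, read the reply line.
    static String ask(SocketChannel ch) throws IOException {
        ch.write(ByteBuffer.wrap("hi".getBytes(StandardCharsets.UTF_8)));
        ByteBuffer buf = ByteBuffer.allocate(64);
        while (buf.position() == 0 || buf.get(buf.position() - 1) != '\n') {
            if (ch.read(buf) < 0) break;
        }
        return new String(buf.array(), 0, buf.position(), StandardCharsets.UTF_8).trim();
    }
}
```

The boss passes channels across threads through the queue plus `selector.wakeup()`. Registration still happens on the worker's own thread. This is roughly the role Netty's boss and worker `EventLoopGroup`s play.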

1.4 basic execution process

- Server

package com.shu.NettyHello;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringDecoder;

/**

* @Author shu

* @Date: 2022/02/26/ 13:36

* @Description

**/

public class HelloServer {

public static void main(String[] args) {

new ServerBootstrap()

.group(new NioEventLoopGroup())

.channel(NioServerSocketChannel.class)

.childHandler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

ch.pipeline().addLast(new StringDecoder());

ch.pipeline().addLast(new ChannelInboundHandlerAdapter(){

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

super.channelRead(ctx, msg);

System.out.println(msg);

}

});

}

})

.bind(8082);

}

}

- Client

package com.shu.NettyHello;

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringDecoder;

import io.netty.handler.codec.string.StringEncoder;

import java.net.InetSocketAddress;

/**

* @Author shu

* @Date: 2022/02/26/ 13:36

* @Description

**/

public class HelloClient {

public static void main(String[] args) throws InterruptedException {

new Bootstrap()

.group(new NioEventLoopGroup())

.channel(NioSocketChannel.class)

.handler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

ch.pipeline().addLast(new StringEncoder());

}

// A client connects to the server; bind() would only bind a local address

}).connect(new InetSocketAddress("localhost",8082))

.sync()

.channel()

.writeAndFlush("hello world");

}

}

II. Basic use of components

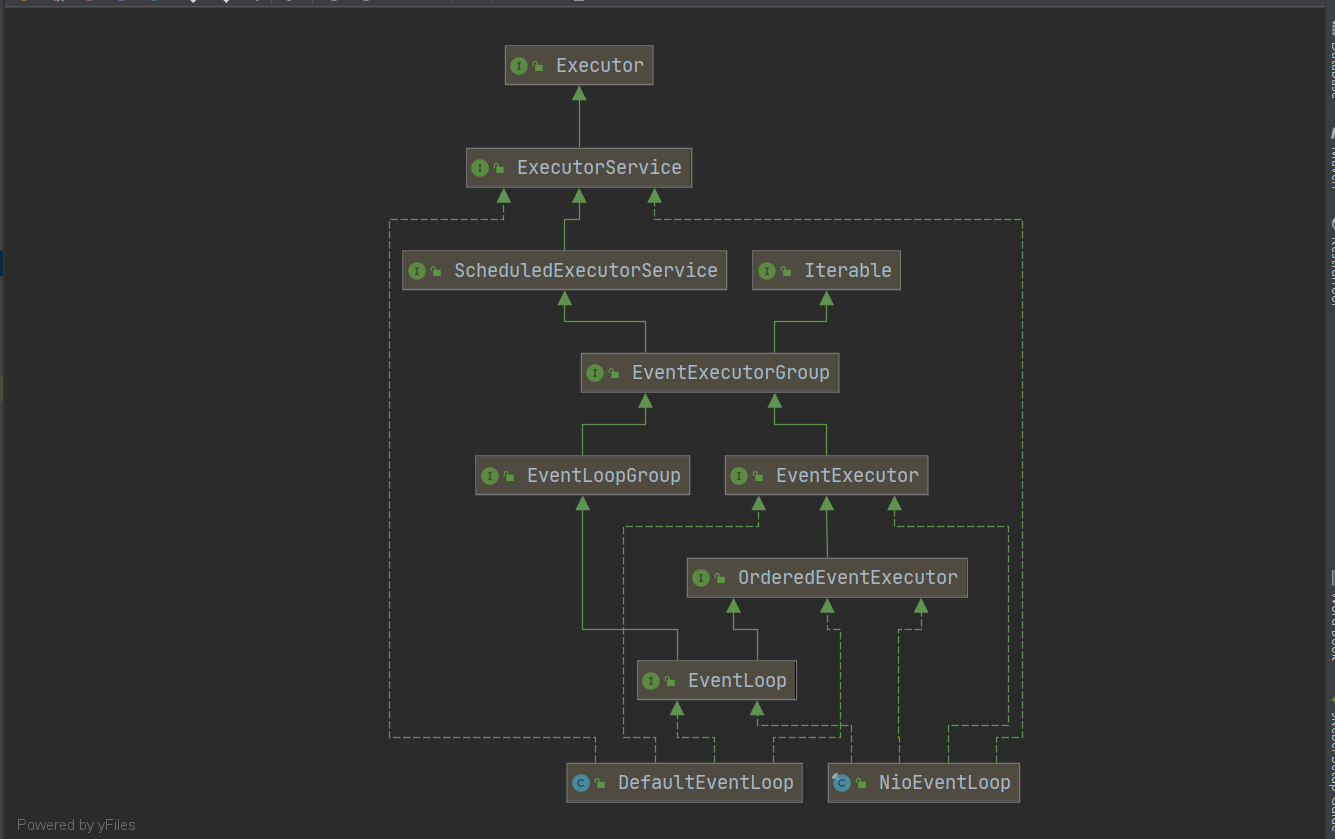

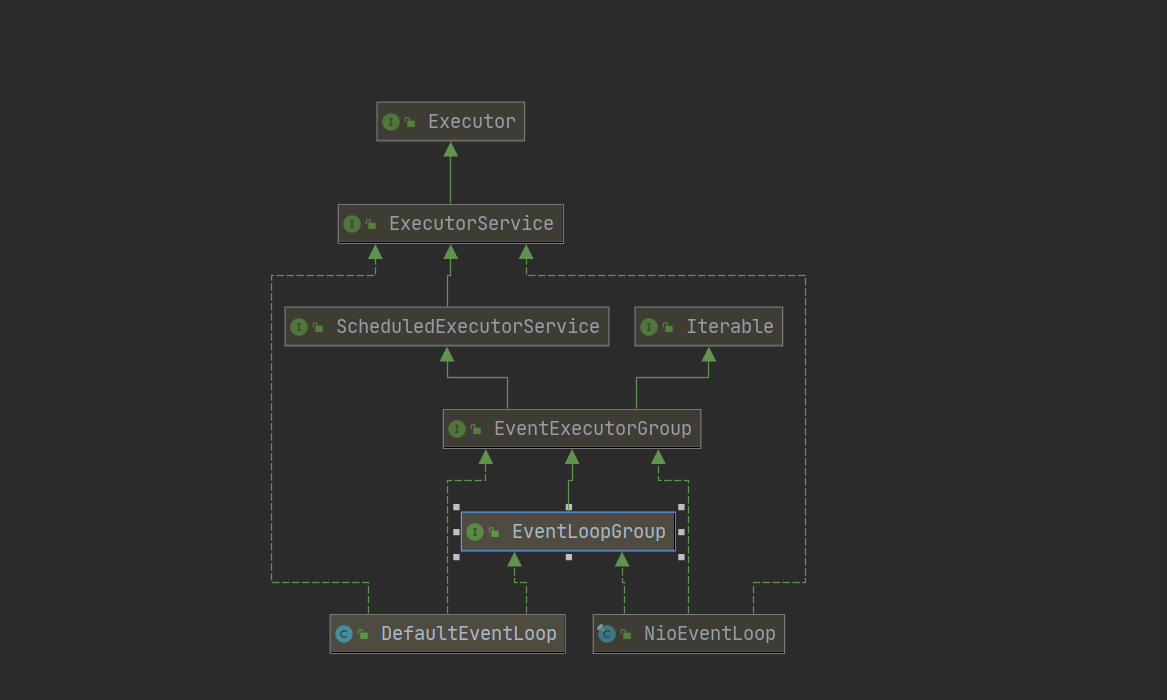

2.1 EventLoop

- The event loop object EventLoop is essentially a single-threaded executor (that also maintains a Selector). Its run method continuously processes the I/O events arriving on one or more channels

- Once a Channel is registered with it, the EventLoop handles all of that Channel's I/O operations. One EventLoop instance usually handles multiple channels, though this may depend on implementation details and internal structure.

- It inherits from j.u.c.ScheduledExecutorService, so it has all the methods of a thread pool

- It also inherits from Netty's own OrderedEventExecutor

- It provides a boolean inEventLoop(Thread thread) method to check whether a thread belongs to this EventLoop

- It provides an EventLoopGroup parent() method to see which EventLoopGroup it belongs to

- The event loop group EventLoopGroup is a group of EventLoops. A Channel generally calls the EventLoopGroup's register method to bind to one of its EventLoops; all subsequent I/O events on that Channel are then handled by this EventLoop (which guarantees thread safety during I/O event processing)

- It inherits from Netty's own EventExecutorGroup

- It implements the Iterable interface, so its EventLoops can be traversed

- In addition, its next method returns the next EventLoop in the group
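Here is a JDK-only analogue that makes the single-thread executor and `next()` ideas concrete. `MiniEventLoop` and `MiniEventLoopGroup` are hypothetical classes, not Netty API:

- Each loop wraps a single-threaded `ScheduledExecutorService`.
- `inEventLoop()` compares the current thread with the loop's thread.
- `next()` picks loops round-robin; Netty's real chooser may differ in detail.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical analogue of an EventLoop: one dedicated thread that runs submitted and scheduled tasks.
class MiniEventLoop {
    private final ScheduledExecutorService executor;
    private volatile Thread thread;

    MiniEventLoop(String name) {
        executor = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, name);
            t.setDaemon(true);
            thread = t; // remember the loop's thread for inEventLoop()
            return t;
        });
    }

    // Like EventLoop.inEventLoop(): is the caller running on this loop's thread?
    boolean inEventLoop() {
        return Thread.currentThread() == thread;
    }

    <T> Future<T> submit(Callable<T> task) {
        return executor.submit(task);
    }

    ScheduledFuture<?> scheduleAtFixedRate(Runnable task, long delay, long period, TimeUnit unit) {
        return executor.scheduleAtFixedRate(task, delay, period, unit);
    }

    void shutdown() {
        executor.shutdown();
    }
}

// Hypothetical analogue of an EventLoopGroup: a fixed set of loops handed out round-robin.
class MiniEventLoopGroup {
    private final List<MiniEventLoop> loops = new ArrayList<>();
    private final AtomicInteger index = new AtomicInteger();

    MiniEventLoopGroup(int size) {
        for (int i = 0; i < size; i++) {
            loops.add(new MiniEventLoop("loop-" + i));
        }
    }

    // Like EventLoopGroup.next(): pick the next loop in turn.
    MiniEventLoop next() {
        return loops.get(Math.floorMod(index.getAndIncrement(), loops.size()));
    }

    void shutdown() {
        loops.forEach(MiniEventLoop::shutdown);
    }
}
```

Tasks submitted to the same loop always run on the same thread. This is why binding a Channel to one EventLoop makes its event handling thread-safe without extra locking.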

2.1.1 handling common tasks and scheduled tasks

package com.shu.EventLoop;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import lombok.extern.slf4j.Slf4j;

import java.util.concurrent.TimeUnit;

/**

* @Author shu

* @Date: 2022/03/01/ 16:20

* @Description

**/

@Slf4j

public class NioEventLoopTest {

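public static void main(String[] args) throws InterruptedException {

EventLoopGroup eventLoopGroup=new NioEventLoopGroup(2);

// Perform a common task

eventLoopGroup.next().submit(()->{

System.out.println("common task runs in " + Thread.currentThread().getName());

});

// Perform a scheduled task: first run immediately, then once per second

eventLoopGroup.next().scheduleAtFixedRate(()->{

System.out.println("ok");

},0,1, TimeUnit.SECONDS);

// Give the scheduled task time to run a few times; shutting down right away would cancel it before it repeats

Thread.sleep(3000);

// Close gracefully

eventLoopGroup.shutdownGracefully();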

}

}

2.1.2 processing IO tasks

- Server

package com.shu.EventLoop;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.buffer.ByteBuf;

import io.netty.channel.*;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import java.nio.charset.StandardCharsets;

/**

* @Author shu

* @Date: 2022/03/01/ 16:37

* @Description Custom EventLoopGroup

**/

public class NioEventLoopServer {

public static void main(String[] args) {

// A separate EventLoop (DefaultEventLoop does no network I/O) for time-consuming handlers

EventLoopGroup defaultEventLoop=new DefaultEventLoop();

new ServerBootstrap()

// Two groups: the Boss is responsible for the Accept event and the Worker is responsible for the read-write event

.group(new NioEventLoopGroup(1),new NioEventLoopGroup(2))

.channel(NioServerSocketChannel.class)

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

// Add two handlers. The first runs on the NioEventLoopGroup worker thread; the second runs on the separate DefaultEventLoop

socketChannel.pipeline().addLast("nioHandler",new ChannelInboundHandlerAdapter() {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

ByteBuf buf = (ByteBuf) msg;

System.out.println(Thread.currentThread().getName() + " " + buf.toString(StandardCharsets.UTF_8));

// Call the next handler

ctx.fireChannelRead(msg);

}

})

// This handler is bound to a custom Group

.addLast(defaultEventLoop, "myHandler", new ChannelInboundHandlerAdapter() {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

ByteBuf buf = (ByteBuf) msg;

System.out.println(Thread.currentThread().getName() + " " + buf.toString(StandardCharsets.UTF_8));

// Last handler in the chain: release the ByteBuf so its memory is returned

buf.release();

}

});

}

})

.bind(8080);

}

}

- Client

package com.shu.EventLoop;

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.Channel;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringEncoder;

import java.io.IOException;

import java.net.InetSocketAddress;

/**

* @Author shu

* @Date: 2022/03/01/ 16:37

* @Description Client

**/

public class NioEventLoopClient {

public static void main(String[] args) throws IOException, InterruptedException {

Channel channel = new Bootstrap()

.group(new NioEventLoopGroup())

.channel(NioSocketChannel.class)

.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

socketChannel.pipeline().addLast(new StringEncoder());

}

})

.connect(new InetSocketAddress("localhost", 8080))

.sync()

.channel();

System.out.println(channel);

// Set a breakpoint here (suspending only this thread) and evaluate channel.writeAndFlush(...) in the debugger to send data

System.in.read();

}

}

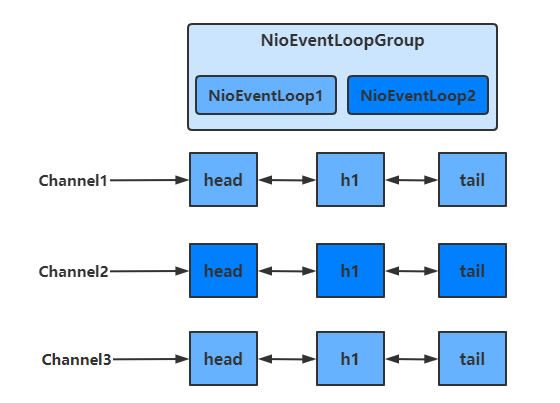

- One EventLoop can be responsible for multiple channels, and once bound to a Channel it keeps handling all of that Channel's events

- When some tasks take a long time to process, hand them to a non-NIO EventLoopGroup (such as the DefaultEventLoop above) so that the other channels served by the same NioEventLoop are not left waiting
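The effect described in the second bullet can be reproduced without Netty. In this hypothetical `OffloadDemo`:

- One single-thread executor stands in for a `NioEventLoop` shared by two channels.
- A second executor stands in for a separate group such as `DefaultEventLoop`.
- The slow task is simulated with a timed wait that ends early once channel B has been served. This keeps the demo fast and deterministic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.*;

// Hypothetical demo in plain JDK: "io" is one thread shared by two channels (like a NioEventLoop);
// "business" is a separate executor (like a DefaultEventLoop).
class OffloadDemo {

    static List<String> run(boolean offload) throws InterruptedException {
        ExecutorService io = Executors.newSingleThreadExecutor();
        ExecutorService business = Executors.newSingleThreadExecutor();
        List<String> finished = new CopyOnWriteArrayList<>();
        CountDownLatch quickDone = new CountDownLatch(1);
        CountDownLatch allDone = new CountDownLatch(2);

        // Slow handler for channel A: simulated work lasting up to 500 ms
        // (it ends early once channel B has been served).
        Runnable slowForA = () -> {
            try {
                quickDone.await(500, TimeUnit.MILLISECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            finished.add("slow A");
            allDone.countDown();
        };
        // Cheap handler for channel B.
        Runnable quickForB = () -> {
            finished.add("quick B");
            quickDone.countDown();
            allDone.countDown();
        };

        // An event for A arrives first, then one for B, both on the same io thread.
        io.execute(() -> {
            if (offload) business.execute(slowForA); // hand the slow work to another executor
            else slowForA.run();                     // or block the shared io thread with it
        });
        io.execute(quickForB);

        allDone.await(5, TimeUnit.SECONDS);
        io.shutdown();
        business.shutdown();
        return new ArrayList<>(finished);
    }
}
```

With offloading, channel B is served while A's work is still running. Without it, B has to wait until A's handler returns.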

2.2 Channel

Common methods

- close() closes the Channel

- closeFuture() returns a ChannelFuture used to handle the closing of the Channel

- Its sync method waits synchronously for the Channel to close

- Its addListener method waits asynchronously for the Channel to close

- pipeline() returns the ChannelPipeline, to which handlers are added

- write() writes data to the Channel

- Because of the buffering mechanism, data is not sent immediately after being written to the Channel

- The data is only sent out through the Channel when flush() is called; write() alone just queues it in the outbound buffer

- writeAndFlush() writes the data and flushes it immediately

- id(): returns the globally unique identifier of this channel.

- isActive(): returns true if the channel is active and connected.

- isOpen(): returns true if the channel is open and may become active later.

- isRegistered(): returns true if the channel is registered with an EventLoop.

- config(): returns the configuration of this channel.

- localAddress(): returns the local address this channel is bound to.

- pipeline(): returns the assigned ChannelPipeline.

- remoteAddress(): returns the remote address this channel is connected to.
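The write/flush distinction can be modelled with a toy class. `ToyChannel` is hypothetical and only mimics the behaviour described above:

- `write()` queues bytes.
- `flush()` moves everything queued to a `ByteArrayOutputStream` that stands in for the socket.

It is not how Netty's `ChannelOutboundBuffer` is implemented.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.Queue;

// Hypothetical model of a channel's outbound buffer (not Netty code).
class ToyChannel {
    private final Queue<byte[]> pending = new ArrayDeque<>();
    final ByteArrayOutputStream wire = new ByteArrayOutputStream(); // stands in for the socket

    // Like write(): only queues the message, nothing reaches the wire yet.
    void write(String msg) {
        pending.add(msg.getBytes(StandardCharsets.UTF_8));
    }

    // Like flush(): drains everything queued so far to the "socket".
    void flush() {
        byte[] b;
        while ((b = pending.poll()) != null) {
            wire.write(b, 0, b.length);
        }
    }

    // Like writeAndFlush(): write followed by flush.
    void writeAndFlush(String msg) {
        write(msg);
        flush();
    }
}
```

Batching several `write()` calls behind one `flush()` reduces the number of system calls, which is the usual reason to keep the two separate.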

2.2.1 ChannelFuture

Synchronous sending: sync()

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.Channel;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringEncoder;

import java.net.InetSocketAddress;

public class ChannelClient {

public static void main(String[] args) throws InterruptedException {

ChannelFuture channelFuture = new Bootstrap()

.group(new NioEventLoopGroup())

// Select the client Socket implementation class; NioSocketChannel is the NIO-based client implementation

.channel(NioSocketChannel.class)

// ChannelInitializer handler (executed only once)

// After the client SocketChannel is registered, initChannel runs to add more handlers

.handler(new ChannelInitializer<Channel>() {

@Override

protected void initChannel(Channel channel) throws Exception {

// Outgoing messages pass through this handler: it encodes String => ByteBuf before sending

channel.pipeline().addLast(new StringEncoder());

}

})

// Specify the server and port to connect to

.connect(new InetSocketAddress("localhost", 8080));

// Synchronous processing: block until the connection is established

ChannelFuture future = channelFuture.sync();

future.channel().writeAndFlush("hello world");

}

}

- If we remove the channelFuture.sync() call, the server will not receive "hello world".

- This is because establishing a connection is asynchronous and non-blocking. connect() returns immediately. If the main thread does not block on sync() until the connection is established, writeAndFlush may be called on a Channel that is not yet connected to the server, so the message cannot be delivered.

- So channelFuture.sync() is used to block the main thread until the connection has been established, and only then is the Channel used to send data.

- With this approach, the thread that obtains the Channel and sends the data is the main thread.

Asynchronous sending: addListener method

package com.shu.Channel;

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.Channel;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelFutureListener;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringEncoder;

import java.net.InetSocketAddress;

/**

* @Author shu

* @Date: 2022/03/02/ 11:29

* @Description

**/

public class ChannelClient {

public static void main(String[] args) throws InterruptedException {

ChannelFuture channelFuture = new Bootstrap()

.group(new NioEventLoopGroup())

// Select the client Socket implementation class; NioSocketChannel is the NIO-based client implementation

.channel(NioSocketChannel.class)

// ChannelInitializer handler (executed only once)

// After the client SocketChannel is registered, initChannel runs to add more handlers

.handler(new ChannelInitializer<Channel>() {

@Override

protected void initChannel(Channel channel) throws Exception {

// Outgoing messages pass through this handler: it encodes String => ByteBuf before sending

channel.pipeline().addLast(new StringEncoder());

}

})

// Specify the server and port to connect to

.connect(new InetSocketAddress("localhost", 8080));

// Asynchronous processing

channelFuture.addListener(new ChannelFutureListener() {

@Override

public void operationComplete(ChannelFuture future) throws Exception {

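// Runs in the NIO thread once the connect attempt finishes; check that it succeeded first

if (future.isSuccess()) {

future.channel().writeAndFlush("hello world");

}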

}

});

}

}

- This way, obtaining the Channel and sending data happen in the NIO thread (once the connection completes) instead of in the main thread
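The difference between `sync()` and `addListener` can be mimicked with the JDK's `CompletableFuture`, used here only as an analogy for `ChannelFuture`. The hypothetical `connectAsync` completes the future from a thread named `nio-thread`. `join()` plays the role of `sync()`, and `thenAccept` plays the role of a listener.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicReference;

// JDK analogy (not Netty): a "connect" that another thread ("nio-thread") completes later.
class ConnectFutureDemo {

    static CompletableFuture<String> connectAsync(CountDownLatch connected) {
        CompletableFuture<String> future = new CompletableFuture<>();
        new Thread(() -> {
            try {
                connected.await(); // pretend the TCP handshake takes a while
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            future.complete("connected channel");
        }, "nio-thread").start();
        return future;
    }

    // sync()-style: the calling thread blocks until the result is ready, then uses it itself.
    static String syncStyle() {
        CountDownLatch connected = new CountDownLatch(1);
        CompletableFuture<String> future = connectAsync(connected);
        connected.countDown();
        return future.join() + " used by " + Thread.currentThread().getName();
    }

    // addListener-style: the callback is registered before completion, so it runs on
    // the thread that completes the future ("nio-thread"), not on the caller.
    static String listenerStyle() {
        CountDownLatch connected = new CountDownLatch(1);
        AtomicReference<String> usedBy = new AtomicReference<>();
        CompletableFuture<Void> done = connectAsync(connected)
                .thenAccept(ch -> usedBy.set(ch + " used by " + Thread.currentThread().getName()));
        connected.countDown();
        done.join(); // only so the demo can return the value
        return usedBy.get();
    }
}
```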

2.2.2 CloseFuture

When we want to close a Channel, we can call channel.close(). This method is asynchronous too: the actual close is performed not in the calling thread but in the NIO thread

Synchronous closing: closeFuture.sync()

package com.shu.Channel;

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.Channel;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelFutureListener;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringEncoder;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import java.net.InetSocketAddress;

import java.util.Scanner;

/**

* @Author shu

* @Date: 2022/03/02/ 11:29

* @Description

**/

public class ChannelClient {

public static void main(String[] args) throws InterruptedException {

NioEventLoopGroup group = new NioEventLoopGroup();

ChannelFuture channelFuture = new Bootstrap()

.group(group)

// Select the client Socket implementation class; NioSocketChannel is the NIO-based client implementation

.channel(NioSocketChannel.class)

// ChannelInitializer handler (executed only once)

// After the client SocketChannel is registered, initChannel runs to add more handlers

.handler(new ChannelInitializer<Channel>() {

@Override

protected void initChannel(Channel channel) throws Exception {

// Outgoing messages pass through these handlers: LoggingHandler logs events, StringEncoder encodes String => ByteBuf

channel.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

channel.pipeline().addLast(new StringEncoder());

}

})

// Specify the server and port to connect to

.connect(new InetSocketAddress("localhost", 8080));

// Wait until the connection is established before using the Channel

Channel channel = channelFuture.sync().channel();

Scanner scanner = new Scanner(System.in);

// Create a thread to input and send to the server

new Thread(()->{

while (true) {

String msg = scanner.next();

if ("q".equals(msg)) {

// The close operation is asynchronous and executed in the NIO thread

channel.close();

break;

}

channel.writeAndFlush(msg);

}

}, "inputThread").start();

// Get closeFuture object

ChannelFuture closeFuture = channel.closeFuture();

System.out.println("waiting close...");

// Synchronously wait for the NIO thread to complete the close operation

closeFuture.sync();

// Perform some operations after closing to ensure that the operations must be performed after the channel is closed

System.out.println("Perform some additional actions after closing...");

// Close EventLoopGroup

group.shutdownGracefully();

}

}

Asynchronous shutdown: addListener method

package com.shu.Channel;

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.Channel;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelFutureListener;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.string.StringEncoder;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import java.net.InetSocketAddress;

import java.util.Scanner;

/**

* @Author shu

* @Date: 2022/03/02/ 11:29

* @Description

**/

public class ChannelClient {

public static void main(String[] args) throws InterruptedException {

NioEventLoopGroup group = new NioEventLoopGroup();

ChannelFuture channelFuture = new Bootstrap()

.group(group)

// Select the client Socket implementation class; NioSocketChannel is the NIO-based client implementation

.channel(NioSocketChannel.class)

// ChannelInitializer handler (executed only once)

// After the client SocketChannel is registered, initChannel runs to add more handlers

.handler(new ChannelInitializer<Channel>() {

@Override

protected void initChannel(Channel channel) throws Exception {

// Outgoing messages pass through these handlers: LoggingHandler logs events, StringEncoder encodes String => ByteBuf

channel.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

channel.pipeline().addLast(new StringEncoder());

}

})

// Specify the server and port to connect to

.connect(new InetSocketAddress("localhost", 8080));

// Wait until the connection is established before using the Channel

Channel channel = channelFuture.sync().channel();

Scanner scanner = new Scanner(System.in);

// Create a thread to input and send to the server

new Thread(()->{

while (true) {

String msg = scanner.next();

if ("q".equals(msg)) {

// The close operation is asynchronous and executed in the NIO thread

channel.close();

break;

}

channel.writeAndFlush(msg);

}

}, "inputThread").start();

// Get closeFuture object

ChannelFuture closeFuture = channel.closeFuture();

System.out.println("waiting close...");

// Asynchronous shutdown

closeFuture.addListener(new ChannelFutureListener() {

@Override

public void operationComplete(ChannelFuture channelFuture) throws Exception {

// An operation that waits until the channel is closed

System.out.println("Perform some additional actions after closing...");

// Close EventLoopGroup

group.shutdownGracefully();

}

});

}

}

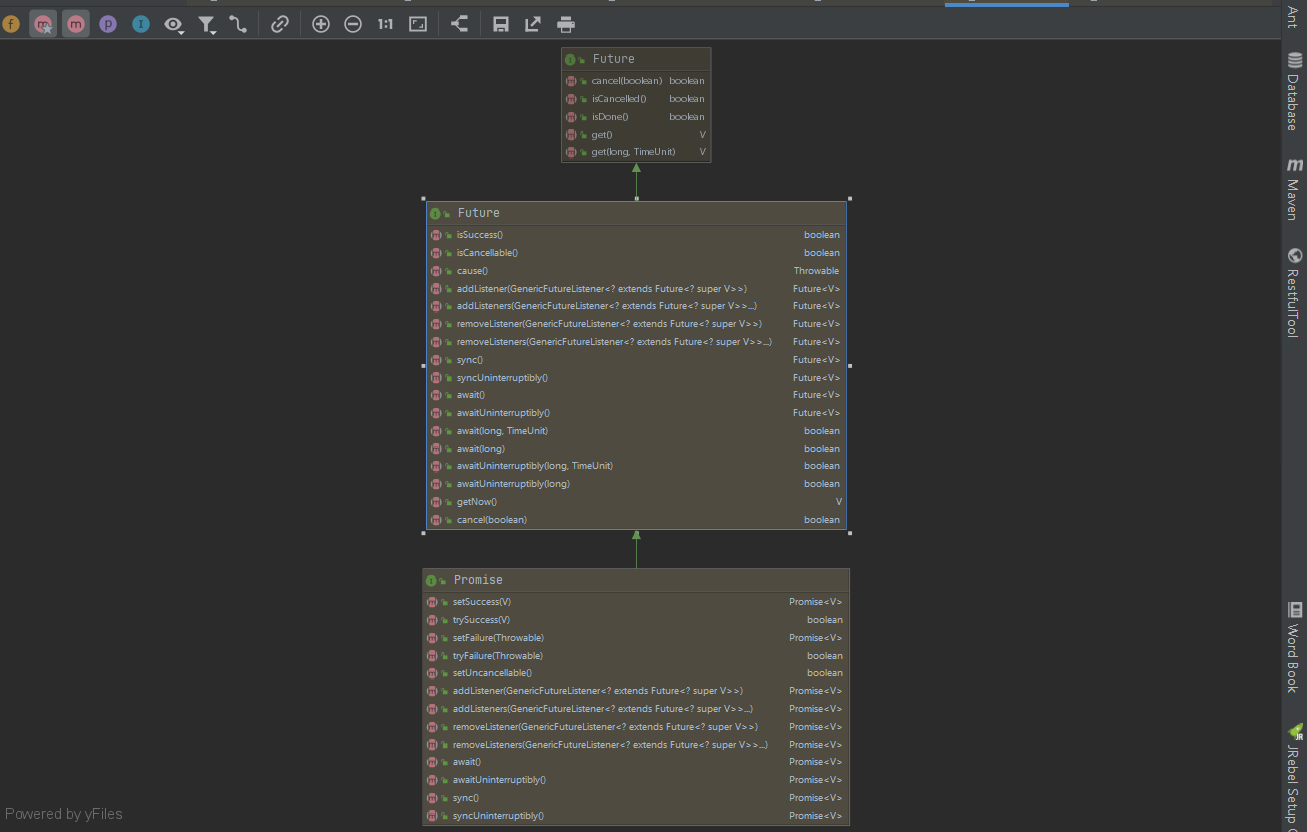

2.3 Future&Promise

2.3.1 Jdk Future

- Basic methods

public interface Future<V> {

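// Cancel the task

boolean cancel(boolean mayInterruptIfRunning);

// Whether the task was cancelled

boolean isCancelled();

// Whether the task has completed (normally, with an exception, or by cancellation)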

boolean isDone();

// Get results

V get() throws InterruptedException, ExecutionException;

// Timeout get results

V get(long timeout, TimeUnit unit)

throws InterruptedException, ExecutionException, TimeoutException;

}

- Using Future return value in multithreading

package com.shu.Channel;

import java.util.concurrent.*;

/**

* @Author shu

* @Date: 2022/03/02/ 14:01

* @Description Getting a task's return value in multithreading

**/

public class JdkFutureTask {

public static void main(String[] args) throws ExecutionException, InterruptedException {

ExecutorService executorService = Executors.newFixedThreadPool(2);

Future<Integer> future = executorService.submit(new Callable<Integer>() {

@Override

public Integer call() throws Exception {

return 2;

}

});

Integer integer = future.get();

System.out.println(integer);

}

}

2.3.2 Netty Future

- Basic methods

public interface Future<V> extends java.util.concurrent.Future<V> {

// Returns true if and only if the I/O operation completes successfully.

boolean isSuccess();

// Returns true if and only if the operation can be cancelled via cancel(boolean).

boolean isCancellable();

// If the I/O operation fails, the reason for the failure of the I/O operation is returned.

Throwable cause();

// Adds the specified listener to this future. When this future is completed, the specified listener is notified. If this future has been completed, the specified listener will be notified immediately.

Future<V> addListener(GenericFutureListener<? extends Future<? super V>> listener);

Future<V> addListeners(GenericFutureListener<? extends Future<? super V>>... listeners);

// Removes the first occurrence of the specified listener from this future. When this future is completed, the specified listener is no longer notified. If the specified listener is not related to this future, this method does nothing and returns silently.

Future<V> removeListener(GenericFutureListener<? extends Future<? super V>> listener);

Future<V> removeListeners(GenericFutureListener<? extends Future<? super V>>... listeners);

// Waits for this future until it is done, and rethrows the cause of the failure if it failed.

Future<V> sync() throws InterruptedException;

// Same as sync(), but not interruptible.

Future<V> syncUninterruptibly();

// Wait for this future to be completed.

Future<V> await() throws InterruptedException;

Future<V> awaitUninterruptibly();

boolean await(long timeout, TimeUnit unit) throws InterruptedException;

boolean await(long timeoutMillis) throws InterruptedException;

boolean awaitUninterruptibly(long timeout, TimeUnit unit);

boolean awaitUninterruptibly(long timeoutMillis);

// Returns results without blocking. If the future has not been completed, this will return null. Since it is possible to mark the future as successful with a null value, you also need to check whether the future is really done with isDone(), rather than relying on the returned null value.

V getNow();

@Override

boolean cancel(boolean mayInterruptIfRunning);

}

package com.shu.Channel;

import io.netty.channel.EventLoop;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.util.concurrent.Future;

import io.netty.util.concurrent.GenericFutureListener;

import java.util.concurrent.Callable;

import java.util.concurrent.ExecutionException;

/**

* @Author shu

* @Date: 2022/03/02/ 14:22

* @Description Future in Netty

**/

public class NettyFutureTask {

public static void main(String[] args) throws ExecutionException, InterruptedException {

NioEventLoopGroup group = new NioEventLoopGroup();

// Get EventLoop object

EventLoop eventLoop = group.next();

Future<Integer> future = eventLoop.submit(new Callable<Integer>() {

@Override

public Integer call() throws Exception {

return 50;

}

});

// Get results from main thread

System.out.println(Thread.currentThread().getName() + " Get results");

System.out.println("getNow " + future.getNow());

System.out.println("get " + future.get());

// Get results asynchronously in NIO thread

future.addListener(new GenericFutureListener<Future<? super Integer>>() {

@Override

public void operationComplete(Future<? super Integer> future) throws Exception {

System.out.println(Thread.currentThread().getName() + " Get results");

System.out.println("getNow " + future.getNow());

}

});

}

}

The Future object in Netty can be obtained through the submit() method of EventLoop

- You can get the result in a blocking way through the Future object's get method

- You can also get the result through the getNow method, which is non-blocking: if there is no result yet, it returns null

- You can also use the future.addListener method, which receives the result asynchronously in the thread that executed the Callable (the EventLoop thread)
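For comparison, the JDK's `CompletableFuture` offers the same blocking and non-blocking reads. This is an analogy, not Netty code. The JDK `getNow` takes a default value, passed as `null` here to match Netty's behaviour. A latch keeps the task unfinished so that the non-blocking read is predictable. `FutureResultDemo` is a hypothetical name.

```java
import java.util.concurrent.*;

// JDK analogy (not Netty): a task submitted to a single-thread executor, read in two ways.
class FutureResultDemo {

    static Integer[] demo() throws InterruptedException, ExecutionException {
        ExecutorService loop = Executors.newSingleThreadExecutor();
        CountDownLatch release = new CountDownLatch(1);
        CompletableFuture<Integer> future = CompletableFuture.supplyAsync(() -> {
            try {
                release.await(); // keep the task unfinished until we say so
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return 50;
        }, loop);

        // Like Netty's getNow(): non-blocking, null while the task is still running.
        Integer before = future.getNow(null);
        release.countDown();
        // Like get(): blocks the caller until the result is available.
        Integer blocking = future.get();
        // Once done, getNow returns the result immediately.
        Integer after = future.getNow(null);

        loop.shutdown();
        return new Integer[] {before, blocking, after};
    }
}
```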

2.3.3 Netty Promise

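- Basic methods (a Promise is a Netty Future whose result is set explicitly, via setSuccess/setFailure, by whoever produces it)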

public interface Promise<V> extends Future<V> {

Promise<V> setSuccess(V result);

boolean trySuccess(V result);

Promise<V> setFailure(Throwable cause);

boolean tryFailure(Throwable cause);

boolean setUncancellable();

@Override

Promise<V> addListener(GenericFutureListener<? extends Future<? super V>> listener);

@Override

Promise<V> addListeners(GenericFutureListener<? extends Future<? super V>>... listeners);

@Override

Promise<V> removeListener(GenericFutureListener<? extends Future<? super V>> listener);

@Override

Promise<V> removeListeners(GenericFutureListener<? extends Future<? super V>>... listeners);

@Override

Promise<V> await() throws InterruptedException;

@Override

Promise<V> awaitUninterruptibly();

@Override

Promise<V> sync() throws InterruptedException;

@Override

Promise<V> syncUninterruptibly();

}

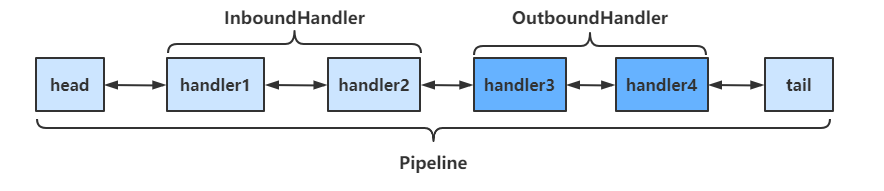
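A `Promise` is a future whose result the producer fills in explicitly. In the JDK, `CompletableFuture.complete` and `completeExceptionally` play a similar role. They return `false` if the future was already completed, like `trySuccess`/`tryFailure`. Netty's `setSuccess`/`setFailure` instead throw an `IllegalStateException` in that case. The sketch below is a JDK analogy with hypothetical method names.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

// JDK analogy (not Netty): CompletableFuture used as a "promise" that a producer fills in.
class PromiseDemo {

    // The consumer creates an empty container, a worker thread fills it, the consumer waits.
    static int produceOnWorker() throws InterruptedException, ExecutionException {
        CompletableFuture<Integer> promise = new CompletableFuture<>();
        new Thread(() -> {
            // ... compute something ...
            promise.complete(80);  // roughly promise.trySuccess(80)
        }, "worker").start();
        return promise.get();      // the consumer blocks until the producer sets the result
    }

    // complete() behaves like trySuccess(): only the first completion wins.
    static boolean[] firstWins() {
        CompletableFuture<Integer> promise = new CompletableFuture<>();
        boolean first = promise.complete(1);
        boolean second = promise.complete(2);
        boolean lateFailure = promise.completeExceptionally(new IllegalStateException("late")); // ~tryFailure
        return new boolean[] {first, second, lateFailure, promise.join() == 1};
    }
}
```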

2.4 Handler&Pipeline

2.4.1 basic knowledge

- A ChannelHandler handles the various events on a Channel, both inbound and outbound. All ChannelHandlers are chained together, and this chain is the Pipeline

- An inbound handler is usually a subclass of ChannelInboundHandlerAdapter and is mainly used to read client data and write back results.

- An outbound handler is usually a subclass of ChannelOutboundHandlerAdapter and mainly processes the results being written back.

- As an analogy, each Channel is a product processing workshop, the Pipeline is the assembly line in the workshop, and each ChannelHandler is a step on that line. ByteBuf, covered later, is the raw material: it goes through a series of inbound steps, then a series of outbound steps, and finally becomes a product.

public class PipeLineServer {

public static void main(String[] args) {

new ServerBootstrap()

.group(new NioEventLoopGroup())

.channel(NioServerSocketChannel.class)

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

// Add handler in the pipeline of socketChannel

// The handlers in the pipeline form a doubly linked list with head and tail nodes. The actual structure is

// head <-> handler1 <-> ... <-> handler4 <-> tail

// Inbound handlers deal with inbound operations, generally reads; their methods are triggered when an inbound operation occurs

// For inbound operations, handlers are invoked from head towards tail.

socketChannel.pipeline().addLast("handler1" ,new ChannelInboundHandlerAdapter() {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

System.out.println(Thread.currentThread().getName() + " Inbound handler 1");

// The method of the parent class will call fireChannelRead internally

// Pass data to the next handler

super.channelRead(ctx, msg);

}

});

socketChannel.pipeline().addLast("handler2", new ChannelInboundHandlerAdapter() {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

System.out.println(Thread.currentThread().getName() + " Inbound handler 2");

// Execute the write operation so that the Outbound method can be called

socketChannel.writeAndFlush(ctx.alloc().buffer().writeBytes("Server...".getBytes(StandardCharsets.UTF_8)));

super.channelRead(ctx, msg);

}

});

// Outbound handlers deal with outbound operations, generally writes; their methods are triggered when an outbound operation occurs

// For outbound operations, handlers are invoked from tail towards head.

socketChannel.pipeline().addLast("handler3" ,new ChannelOutboundHandlerAdapter(){

@Override

public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) throws Exception {

System.out.println(Thread.currentThread().getName() + " Outbound handler 1");

super.write(ctx, msg, promise);

}

});

socketChannel.pipeline().addLast("handler4" ,new ChannelOutboundHandlerAdapter(){

@Override

public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) throws Exception {

System.out.println(Thread.currentThread().getName() + " Outbound handler 2");

super.write(ctx, msg, promise);

}

});

}

})

.bind(8080);

}

}

- The pipeline is a doubly linked list with a head node and a tail node; every node in between wraps a handler

- Call ctx.fireChannelRead(msg) (or the corresponding fire/write method) to pass the current handler's result on to the next handler

- When an inbound operation occurs, the inbound handlers are called one after another starting from head; handlers that do not handle inbound operations are skipped

- When an outbound operation is issued through the channel (e.g. socketChannel.writeAndFlush), the outbound handlers are called one after another starting from tail and moving toward head; handlers that do not handle outbound operations are skipped. An outbound operation issued through ctx starts from the current handler instead of tail
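You can check the traversal order described above without opening a socket. The sketch below illustrates the idea and is not the author's code. It assumes Netty 4.1 on the classpath and uses EmbeddedChannel (Netty's in-memory test channel) in place of a real server. The handler names in1/in2/out1/out2 are made up. It records the order in which the handlers see one inbound message and the write that message triggers.

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelOutboundHandlerAdapter;
import io.netty.channel.ChannelPromise;
import io.netty.channel.embedded.EmbeddedChannel;

import java.util.ArrayList;
import java.util.List;

public class PipelineOrderDemo {

    // Inbound handler that records its name, then either passes the message on or writes it back
    static ChannelInboundHandlerAdapter inbound(String name, List<String> order, boolean writeBack) {
        return new ChannelInboundHandlerAdapter() {
            @Override
            public void channelRead(ChannelHandlerContext ctx, Object msg) {
                order.add(name);
                if (writeBack) {
                    // Writing through the channel starts at tail
                    ctx.channel().writeAndFlush(msg);
                } else {
                    ctx.fireChannelRead(msg); // pass to the next inbound handler
                }
            }
        };
    }

    // Outbound handler that records its name and passes the write toward head
    static ChannelOutboundHandlerAdapter outbound(String name, List<String> order) {
        return new ChannelOutboundHandlerAdapter() {
            @Override
            public void write(ChannelHandlerContext ctx, Object msg, ChannelPromise promise) {
                order.add(name);
                ctx.write(msg, promise);
            }
        };
    }

    public static List<String> run() {
        List<String> order = new ArrayList<>();
        // Handlers are added in the order in1, in2, out1, out2
        EmbeddedChannel ch = new EmbeddedChannel(
                inbound("in1", order, false),
                inbound("in2", order, true),
                outbound("out1", order),
                outbound("out2", order));
        ch.writeInbound("hello"); // simulate data arriving from the peer
        ch.finishAndReleaseAll();
        return order;
    }

    public static void main(String[] args) {
        System.out.println(run()); // [in1, in2, out2, out1]
    }
}
```

Suppose in2 called ctx.writeAndFlush(msg) instead. The write would then start at in2's position and move toward head, so out1 and out2, which were added after in2, would be skipped.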

2.5 ByteBuf

2.5.1 basic creation

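- A ByteBuf is created through a ByteBufAllocator, by calling the appropriate buffer() method on the chosen allocator. By default (on most platforms) the buffer uses direct memory and has an initial capacity of 256 bytes. You can also specify the initial capacity

- When a ByteBuf's capacity cannot hold all the data, the ByteBuf expands automatically. Inside a handler, it is recommended to create ByteBufs with ctx.alloc().buffer()

- ByteBufUtil.log(buffer) in the examples below appears to be a custom debugging helper that is not shown here. Netty's io.netty.buffer.ByteBufUtil has no log method, but ByteBufUtil.prettyHexDump(buffer) returns a similar hex dump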

public class ByteBufStudy {

public static void main(String[] args) {

// Create ByteBuf

ByteBuf buffer = ByteBufAllocator.DEFAULT.buffer(16);

ByteBufUtil.log(buffer);

// Write data to buffer

StringBuilder sb = new StringBuilder();

for(int i = 0; i < 20; i++) {

sb.append("a");

}

buffer.writeBytes(sb.toString().getBytes(StandardCharsets.UTF_8));

// View write results

ByteBufUtil.log(buffer);

}

}

2.5.2 direct memory and heap memory

package com.shu.NettyByteBuf;

import io.netty.buffer.ByteBuf;

import io.netty.buffer.ByteBufAllocator;

import java.nio.ByteBuffer;

/**

* @Author shu

* @Date: 2022/03/02/ 15:53

* @Description

**/

public class CreatByteBuf {

public static void main(String[] args) {

// Heap memory allocation

ByteBuf heapBuffer = ByteBufAllocator.DEFAULT.heapBuffer();

// Direct memory allocation

ByteBuf directBuffer = ByteBufAllocator.DEFAULT.directBuffer();

}

}

- Allocating and freeing direct memory is expensive, but reads and writes perform well because I/O needs fewer memory copies. Direct memory is therefore best used together with pooling

- Direct memory puts little pressure on the GC because the JVM garbage collector does not manage it. For the same reason, it must be released promptly
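The sketch below assumes Netty 4.1 and ByteBufAllocator.DEFAULT. It checks which kind of memory each allocation method returns. It then releases both buffers explicitly in a finally block instead of leaving them to the GC.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.ByteBufAllocator;

public class DirectHeapDemo {

    // Returns "heapIsDirect,heapHasArray,directIsDirect"
    public static String describe() {
        ByteBuf heap = ByteBufAllocator.DEFAULT.heapBuffer(16);
        ByteBuf direct = ByteBufAllocator.DEFAULT.directBuffer(16);
        try {
            // A heap buffer is backed by a byte[] on the Java heap;
            // a direct buffer lives outside the Java heap
            return heap.isDirect() + "," + heap.hasArray() + "," + direct.isDirect();
        } finally {
            // Release both explicitly instead of waiting for the GC
            heap.release();
            direct.release();
        }
    }

    public static void main(String[] args) {
        System.out.println(describe()); // false,true,true
    }
}
```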

2.5.3 basic structure of ByteBuf

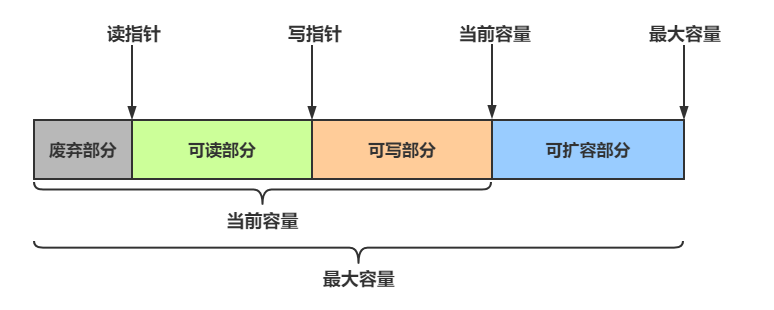

- ByteBuf provides two pointer variables to support sequential reads and writes: readerIndex for read operations and writerIndex for write operations. The diagram below shows how the two pointers divide the buffer into three areas:

      +-------------------+------------------+------------------+
      | discardable bytes |  readable bytes  |  writable bytes  |
      |                   |     (CONTENT)    |                  |
      +-------------------+------------------+------------------+
      |                   |                  |                  |
      0      <=      readerIndex   <=   writerIndex    <=    capacity

- Readable bytes (the actual content): this area holds the actual data. Any operation whose name starts with read or skip gets or skips the data at the current readerIndex and increases readerIndex by the number of bytes read. If the argument of a read operation is also a ByteBuf and no destination index is specified, the destination buffer's writerIndex increases by the same amount.

- Writable bytes: the undefined space still to be filled. Any operation whose name starts with write writes data at the current writerIndex and increases writerIndex by the number of bytes written. If the argument of a write operation is also a ByteBuf and no source index is specified, the source buffer's readerIndex increases by the same amount. If there are not enough writable bytes, an IndexOutOfBoundsException is thrown. A newly allocated buffer starts with writerIndex 0, and a wrapped or copied buffer starts with writerIndex equal to the buffer's capacity.
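The sketch below assumes Netty 4.1's Unpooled helper and follows the two pointers through a few operations. It also shows discardReadBytes(), which drops the discardable area, and checks that a wrapped buffer starts with writerIndex equal to its capacity.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;

import java.util.ArrayList;
import java.util.List;

public class IndexDemo {

    // Formats the two pointers as "readerIndex/writerIndex"
    private static String idx(ByteBuf buf) {
        return buf.readerIndex() + "/" + buf.writerIndex();
    }

    public static String run() {
        List<String> steps = new ArrayList<>();
        ByteBuf buf = Unpooled.buffer(16); // newly allocated: both pointers start at 0
        try {
            steps.add(idx(buf));           // 0/0
            buf.writeInt(0x250);           // write moves writerIndex by 4
            buf.writeByte(1);              // and by 1 more
            steps.add(idx(buf));           // 0/5
            buf.readInt();                 // read moves readerIndex by 4; bytes 0..3 become discardable
            steps.add(idx(buf));           // 4/5
            buf.discardReadBytes();        // drop the discardable bytes, move the readable byte to index 0
            steps.add(idx(buf));           // 0/1
        } finally {
            buf.release();
        }
        return String.join(" ", steps);
    }

    // A wrapped buffer starts with writerIndex == capacity
    public static int wrappedWriterIndex() {
        ByteBuf wrapped = Unpooled.wrappedBuffer(new byte[8]);
        try {
            return wrapped.writerIndex();
        } finally {
            wrapped.release();
        }
    }

    public static void main(String[] args) {
        System.out.println(run());                // 0/0 0/5 4/5 0/1
        System.out.println(wrappedWriterIndex()); // 8
    }
}
```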

2.5.4 composition

ByteBuf mainly has the following components

- Maximum and current capacity

- When constructing a ByteBuf, you can pass two parameters: the initial capacity and the maximum capacity. If the maximum capacity is not given, it defaults to Integer.MAX_VALUE

- When the ByteBuf's capacity cannot hold all the data, the ByteBuf is expanded. If the expansion would exceed the maximum capacity, java.lang.IndexOutOfBoundsException is thrown

- ByteBuffer uses a single position for both reading and writing. ByteBuf instead uses separate read and write pointers, so there is no need to switch between read and write modes

- The part before the read pointer is called the discardable part: content that has already been read

- The space between the read pointer and the write pointer is called the readable part

- The space between the write pointer and the current capacity is called the writable part

2.5.5 writing method

Common methods are as follows

| Method signature | Meaning | Remarks |
|---|---|---|
| writeBoolean(boolean value) | Write a boolean value | One byte: 01 represents true, 00 represents false |
| writeByte(int value) | Write a byte value | |
| writeShort(int value) | Write a short value | |
| writeInt(int value) | Write an int value | Big-endian: 0x250 is written as 00 00 02 50 |
| writeIntLE(int value) | Write an int value | Little-endian: 0x250 is written as 50 02 00 00 |
| writeLong(long value) | Write a long value | |
| writeChar(int value) | Write a char value | |
| writeFloat(float value) | Write a float value | |
| writeDouble(double value) | Write a double value | |
| writeBytes(ByteBuf src) | Write a Netty ByteBuf | |
| writeBytes(byte[] src) | Write a byte[] | |
| writeBytes(ByteBuffer src) | Write an NIO ByteBuffer | |
| int writeCharSequence(CharSequence sequence, Charset charset) | Write a string | CharSequence is an interface implemented by String; the second parameter is the character set; returns the number of bytes written |

Notes

- Unless a return type is shown, these methods return the ByteBuf itself, so you can chain calls to write different data

- Network protocols conventionally use big-endian byte order, so writeInt(int value) is the usual choice

public class ByteBufStudy {

public static void main(String[] args) {

// Create ByteBuf

ByteBuf buffer = ByteBufAllocator.DEFAULT.buffer(16, 20);

ByteBufUtil.log(buffer);

// Write data to buffer

buffer.writeBytes(new byte[]{1, 2, 3, 4});

ByteBufUtil.log(buffer);

buffer.writeInt(5);

ByteBufUtil.log(buffer);

buffer.writeIntLE(6);

ByteBufUtil.log(buffer);

buffer.writeLong(7);

ByteBufUtil.log(buffer);

}

}
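The byte layouts in the writeInt/writeIntLE rows of the table can be reproduced with the plain JDK. The sketch below uses java.nio.ByteBuffer with an explicit ByteOrder, not Netty's ByteBuf. It only illustrates what big-endian and little-endian mean for the value 0x250.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class EndianDemo {

    // Encodes an int as 4 bytes in the given byte order
    public static byte[] encode(int value, ByteOrder order) {
        return ByteBuffer.allocate(4).order(order).putInt(value).array();
    }

    // Formats bytes as two-digit hex, e.g. "00 00 02 50"
    public static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) {
                sb.append(' ');
            }
            sb.append(String.format("%02x", b & 0xff));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hex(encode(0x250, ByteOrder.BIG_ENDIAN)));    // 00 00 02 50, like writeInt
        System.out.println(hex(encode(0x250, ByteOrder.LITTLE_ENDIAN))); // 50 02 00 00, like writeIntLE
    }
}
```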

2.5.6 capacity expansion

- When the capacity in ByteBuf cannot accommodate the written data, the capacity expansion operation will be carried out

- If the data size after writing does not exceed 512 bytes, the new capacity is the next multiple of 16

- For example, if the size after writing is 12 bytes, the capacity after expansion is 16 bytes

- If the data size after writing exceeds 512 bytes, the new capacity is the next power of two, 2^n

- For example, if the size after writing is 513 bytes, the capacity after expansion is 2^10 = 1024 bytes (2^9 = 512 is not enough)

- Expansion cannot exceed maxCapacity; otherwise java.lang.IndexOutOfBoundsException is thrown
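The 16/512 rule above is the one this tutorial states. As far as I can tell from the Netty 4.1 sources, AbstractByteBufAllocator.calculateNewCapacity works differently:

- Below a 4 MiB threshold, the required size is rounded up to a power of two, with a minimum of 64.
- Above the threshold, the capacity grows in 4 MiB steps.
- The result is always capped at maxCapacity.

Under that reading, writing 12 bytes into an empty expanding buffer would give 64, not 16. Pooled buffers may also first use spare room in their memory chunk. The plain-Java sketch below re-implements the rule for illustration only. It is not Netty's code, and details may differ between versions, so compare it with the capacity your own version reports.

```java
public class CapacityCalc {

    // Assumption: mirrors Netty 4.1's 4 MiB threshold
    static final int THRESHOLD = 4 * 1024 * 1024;

    // minNewCapacity = writerIndex + number of bytes about to be written
    public static int newCapacity(int minNewCapacity, int maxCapacity) {
        if (minNewCapacity > maxCapacity) {
            // What the caller of a write sees when maxCapacity would be exceeded
            throw new IndexOutOfBoundsException(
                    "minNewCapacity " + minNewCapacity + " exceeds maxCapacity " + maxCapacity);
        }
        if (minNewCapacity == THRESHOLD) {
            return THRESHOLD;
        }
        if (minNewCapacity > THRESHOLD) {
            // Above the threshold: grow in 4 MiB steps instead of doubling
            int newCapacity = minNewCapacity / THRESHOLD * THRESHOLD;
            return newCapacity > maxCapacity - THRESHOLD ? maxCapacity : newCapacity + THRESHOLD;
        }
        // Below the threshold: smallest power of two >= minNewCapacity, but at least 64
        int newCapacity = 64;
        while (newCapacity < minNewCapacity) {
            newCapacity <<= 1;
        }
        return Math.min(newCapacity, maxCapacity);
    }

    public static void main(String[] args) {
        System.out.println(newCapacity(20, Integer.MAX_VALUE));  // 64
        System.out.println(newCapacity(513, Integer.MAX_VALUE)); // 1024
        System.out.println(newCapacity(20, 20));                 // 20, capped at maxCapacity
    }
}
```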

2.5.7 reading method

- Reading is mainly carried out through a series of read methods. During reading, the read pointer will be moved according to the number of bytes of the read data

- To read the same data again, call buffer.markReaderIndex() to mark the read pointer, then call buffer.resetReaderIndex() to move the read pointer back to the marked position

public class ByteBufStudy {

public static void main(String[] args) {

// Create ByteBuf

ByteBuf buffer = ByteBufAllocator.DEFAULT.buffer(16, 20);

// Write data to buffer

buffer.writeBytes(new byte[]{1, 2, 3, 4});

buffer.writeInt(5);

// Read 4 bytes

System.out.println(buffer.readByte());

System.out.println(buffer.readByte());

System.out.println(buffer.readByte());

System.out.println(buffer.readByte());

ByteBufUtil.log(buffer);

// Repeat reading through mark and reset

buffer.markReaderIndex();

System.out.println(buffer.readInt());

ByteBufUtil.log(buffer);

// Restore the read pointer to the marked position

buffer.resetReaderIndex();

ByteBufUtil.log(buffer);

}

}

2.5.8 memory release

Netty has ByteBuf implementations backed by off-heap (direct) memory, so it is better to release that memory manually rather than wait for garbage collection.

- UnpooledHeapByteBuf uses JVM memory, so you just need to wait for GC to reclaim memory

- UnpooledDirectByteBuf uses direct memory and requires special methods to reclaim memory

- PooledByteBuf and its subclasses use pooling mechanisms and require more complex rules to reclaim memory

Netty uses reference counting to control memory reclamation; every ByteBuf implements the ReferenceCounted interface

- Every ByteBuf object starts with a count of 1

- Calling release() decrements the count by 1; when the count reaches 0, the ByteBuf's memory is reclaimed. Calling retain() increments the count by 1
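The sketch below assumes Netty 4.1 and shows the counting rules in action. release() returns true only when the count reaches 0 and the memory is reclaimed.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.ByteBufAllocator;

public class RefCntDemo {

    public static String run() {
        StringBuilder log = new StringBuilder();
        ByteBuf buf = ByteBufAllocator.DEFAULT.buffer(16);
        log.append(buf.refCnt());              // 1: a new ByteBuf starts at 1
        buf.retain();                          // e.g. a second user keeps a reference
        log.append(',').append(buf.refCnt());  // 2
        log.append(',').append(buf.release()); // false: 2 -> 1, memory still in use
        log.append(',').append(buf.release()); // true: 1 -> 0, memory reclaimed
        return log.toString();
    }

    public static void main(String[] args) {
        System.out.println(run()); // 1,2,false,true
    }
}
```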

Release rule

Because of the pipeline, a ByteBuf usually needs to be passed on to the next ChannelHandler. If every ChannelHandler called release, the ByteBuf could no longer be passed along. Release it only once it has served its purpose in a ChannelHandler and does not need to be passed on

The basic rule: whoever uses the ByteBuf last is responsible for releasing it

- Starting point: for the NIO transport, the ByteBuf is first created in the read method of io.netty.channel.nio.AbstractNioByteChannel.NioByteUnsafe and put into the pipeline (line 163: pipeline.fireChannelRead(byteBuf))

- Inbound ByteBuf handling rules

- If the original ByteBuf is not processed and is passed on with ctx.fireChannelRead(msg), it does not need to be released here

- If the original ByteBuf is converted into another kind of Java object, the ByteBuf is no longer needed and must be released

- If ctx.fireChannelRead(msg) is not called to pass it on, it must also be released

- Watch out for exceptions: if the ByteBuf is not successfully passed to the next ChannelHandler, it must be released

- If the message is passed all the way along the pipeline, TailContext is responsible for releasing the unprocessed message (the original ByteBuf)

- Outbound ByteBuf handling rules

- Outbound messages are eventually converted to a ByteBuf for output. They travel toward the head and are released by HeadContext after flush

- Exception handling rules

- Sometimes it is unclear how many times a ByteBuf has been referenced, yet it must be released completely. In that case, call release repeatedly until it returns true: while (!buffer.release()) {}

When a ByteBuf reaches the head or tail of the pipeline, the methods there release it completely. This only happens if the ByteBuf is actually passed all the way to the head or tail. For example, TailContext handles an unprocessed inbound message like this:

protected void onUnhandledInboundMessage(Object msg) {

try {

logger.debug("Discarded inbound message {} that reached at the tail of the pipeline. Please check your pipeline configuration.", msg);

} finally {

// Specific release method

ReferenceCountUtil.release(msg);

}

}
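The "convert, then release" rule for inbound handlers can be sketched as follows. This assumes Netty 4.1; ToStringHandler is a made-up name, and EmbeddedChannel stands in for a real connection. The handler turns the ByteBuf into a String, passes the String on, and releases the ByteBuf in a finally block so it is freed even if an exception occurs. Netty's SimpleChannelInboundHandler offers a shortcut: by default it releases the message after channelRead0 returns.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.util.ReferenceCountUtil;

import java.nio.charset.StandardCharsets;

public class ReleaseDemo {

    // Turns the ByteBuf into a String; after that, this handler is the ByteBuf's last user
    static class ToStringHandler extends ChannelInboundHandlerAdapter {
        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) {
            if (!(msg instanceof ByteBuf)) {
                ctx.fireChannelRead(msg); // not ours: pass on unchanged, no release here
                return;
            }
            ByteBuf buf = (ByteBuf) msg;
            try {
                ctx.fireChannelRead(buf.toString(StandardCharsets.UTF_8)); // pass on the new object
            } finally {
                ReferenceCountUtil.release(buf); // released even if fireChannelRead throws
            }
        }
    }

    public static String run() {
        ByteBuf in = Unpooled.copiedBuffer("hello", StandardCharsets.UTF_8);
        EmbeddedChannel ch = new EmbeddedChannel(new ToStringHandler());
        ch.writeInbound(in);
        String out = ch.readInbound();
        ch.finishAndReleaseAll();
        return out + " refCnt=" + in.refCnt();
    }

    public static void main(String[] args) {
        System.out.println(run()); // hello refCnt=0
    }
}
```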

2.5.9 zero copy

- Slicing is one form of zero copy. The original ByteBuf is split into several ByteBufs without copying any memory: the slices still use the original ByteBuf's memory, but each slice maintains its own read and write pointers

- After obtaining a slice, call its retain method to increment the reference count (which it shares with the original). This way, releasing the original ByteBuf does not make the slice unusable. Because the memory is shared, modifying a value in the original ByteBuf also changes the corresponding value in the slice

public class TestSlice {

public static void main(String[] args) {

// Create ByteBuf

ByteBuf buffer = ByteBufAllocator.DEFAULT.buffer(16, 20);

// Write data to buffer

buffer.writeBytes(new byte[]{1, 2, 3, 4, 5, 6, 7, 8, 9, 10});

// Divide the buffer into two parts

ByteBuf slice1 = buffer.slice(0, 5);

ByteBuf slice2 = buffer.slice(5, 5);

// You need to add one to the buffer reference count of the partition

// Avoid that the original buffer is released, which makes the partition buffer unusable

slice1.retain();

slice2.retain();

ByteBufUtil.log(slice1);

ByteBufUtil.log(slice2);

// Change the value in the original buffer

System.out.println("=========== Modify a value in the original buffer ===========");

buffer.setByte(0,5);

System.out.println("===========Print slice1===========");

ByteBufUtil.log(slice1);

}

}

Advantages of ByteBuf

- Pooling: ByteBuf instances in the pool can be reused, which saves memory and reduces the chance of running out of memory

- Separate read and write pointers, so there is no need to switch between read and write modes as with ByteBuffer

- Automatic capacity expansion

- Supports chained calls, which makes code more fluent

- Zero copy appears in many places, such as

- slice, duplicate, CompositeByteBuf
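Slicing is covered by the TestSlice example above. The sketch below, assuming Netty 4.1, covers the other two forms in the list:

- CompositeByteBuf presents two buffers as one logical buffer without copying their bytes.
- duplicate() shares all of the memory but keeps its own pointers.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.ByteBufAllocator;
import io.netty.buffer.CompositeByteBuf;

import java.nio.charset.StandardCharsets;

public class ZeroCopyDemo {

    public static String run() {
        ByteBuf header = ByteBufAllocator.DEFAULT.buffer();
        header.writeBytes("head-".getBytes(StandardCharsets.UTF_8));
        ByteBuf body = ByteBufAllocator.DEFAULT.buffer();
        body.writeBytes("body".getBytes(StandardCharsets.UTF_8));

        // One logical buffer over both components; their bytes are not copied.
        // true = advance the composite's writerIndex so the added bytes become readable
        CompositeByteBuf composite = ByteBufAllocator.DEFAULT.compositeBuffer();
        composite.addComponents(true, header, body);
        String combined = composite.toString(StandardCharsets.UTF_8);

        // duplicate(): shares all of the memory, but has its own read/write pointers
        ByteBuf dup = composite.duplicate();
        dup.readerIndex(5);
        String rest = dup.toString(StandardCharsets.UTF_8);
        int compositeReaderIndex = composite.readerIndex(); // unaffected by dup

        // The composite owns its components, so one release frees header and body too
        composite.release();
        return combined + "|" + rest + "|" + compositeReaderIndex;
    }

    public static void main(String[] args) {
        System.out.println(run()); // head-body|body|0
    }
}
```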

III. Advanced Part

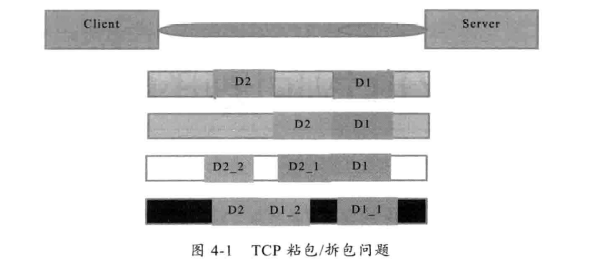

3.1 half packets and sticky packets

3.1.1 phenomena

- (1) The server reads D1 and D2 as two separate, complete packets; no sticking or unpacking occurs.

- (2) The server receives both packets in a single read, with D1 and D2 stuck together. This is called a TCP sticky packet.

- (3) The server reads the two packets in two reads: the first read gets all of D1 plus part of D2, and the second read gets the rest of D2. This is called TCP unpacking.

- (4) The server reads the two packets in two reads: the first read gets part of D1 (D1_1), and the second read gets the rest of D1 (D1_2) together with all of D2.

- If the server's TCP receive sliding window is very small and D1 and D2 are relatively large, a fifth case is likely: the server needs several reads to receive D1 and D2 completely, with multiple unpackings along the way.

Server

package com.shu.Package;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.*;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* @Author shu

* @Date: 2022/03/03/ 10:27

* @Description Sticky packet and half packet phenomena

**/

public class PackageServer {

static final Logger log = LoggerFactory.getLogger(PackageServer.class);

void start() {

NioEventLoopGroup boss = new NioEventLoopGroup(1);

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

ServerBootstrap serverBootstrap = new ServerBootstrap();

// Half package phenomenon

serverBootstrap.option(ChannelOption.SO_RCVBUF,10);

serverBootstrap.channel(NioServerSocketChannel.class);

serverBootstrap.group(boss, worker);

serverBootstrap.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) {

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

// This method is executed when the connection is established

log.debug("connected {}", ctx.channel());

super.channelActive(ctx);

}

@Override

public void channelInactive(ChannelHandlerContext ctx) throws Exception {

// This method is executed when the connection is disconnected

log.debug("disconnect {}", ctx.channel());

super.channelInactive(ctx);

}

});

}

});

ChannelFuture channelFuture = serverBootstrap.bind(8080);

log.debug("{} binding...", channelFuture.channel());

channelFuture.sync();

log.debug("{} bound...", channelFuture.channel());

// Close channel

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("server error", e);

} finally {

boss.shutdownGracefully();

worker.shutdownGracefully();

log.debug("stopped");

}

}

public static void main(String[] args) {

new PackageServer().start();

}

}

Client

package com.shu.Package;

import io.netty.bootstrap.Bootstrap;

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* @Author shu

* @Date: 2022/03/03/ 10:29

* @Description Sticky packet and half packet phenomena

**/

public class PackageClient {

static final Logger log = LoggerFactory.getLogger(PackageClient.class);

public static void main(String[] args) {

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class);

bootstrap.group(worker);

bootstrap.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

log.debug("connected...");

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

log.debug("sending...");

// Send 16 bytes of data each time, 10 times in total

for (int i = 0; i < 10; i++) {

ByteBuf buffer = ctx.alloc().buffer();

buffer.writeBytes(new byte[]{0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15});

ctx.writeAndFlush(buffer);

}

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 8080).sync();

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("client error", e);

} finally {

worker.shutdownGracefully();

}

}

}

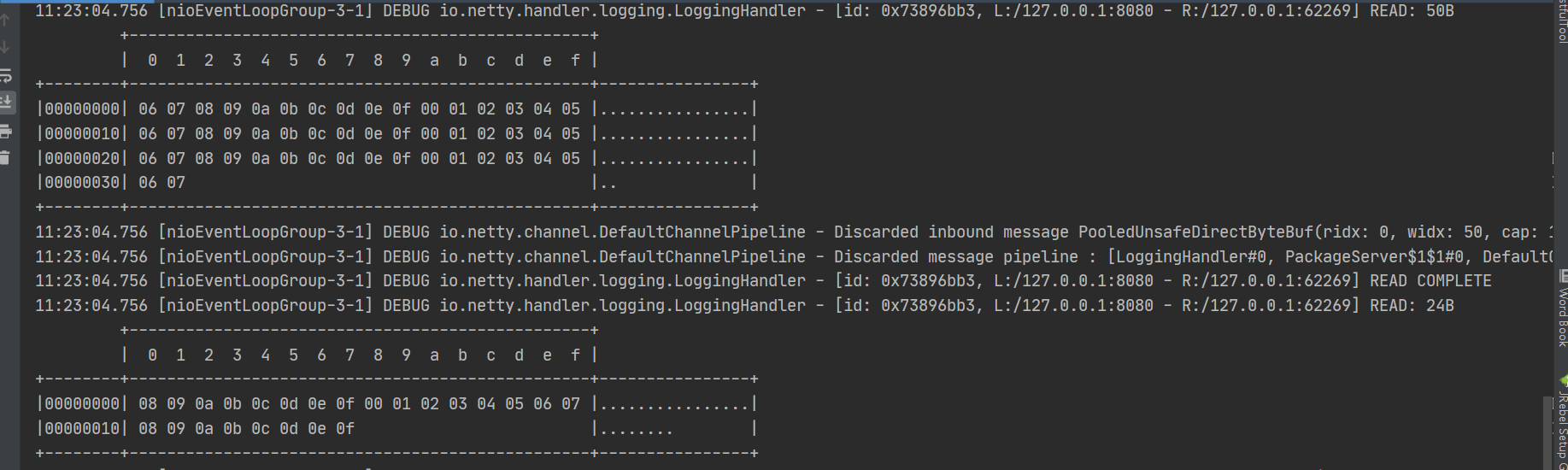

Sticky packet phenomenon

- The client sends data ten times, but the server receives it all in a single read

Half packet phenomenon

- A read receives only part of a message

3.1.2 reasons

- (1) The application writes more bytes than the socket send buffer can hold.

- (2) TCP splits the data into segments no larger than the MSS.

- (3) When the payload exceeds the Ethernet MTU, IP fragmentation occurs.

3.1.3 solutions

- The underlying TCP layer does not understand the upper layer's business data, so it cannot guarantee that packets will not be split or reassembled. The problem can only be solved through the design of the upper-layer application protocol. The solutions used by mainstream industry protocols can be summarized as follows.

- (1) Fixed-length messages. For example, every message is exactly 200 bytes, and shorter messages are padded with spaces;

- (2) A carriage return/line feed is appended to the end of each packet as a delimiter, as in the FTP protocol;

- (3) the message is divided into a message header and a message body. The message header contains a field representing the total length of the message (or the length of the message body). The general design idea is that the first field of the message header uses int32 to represent the total length of the message;

- (4) More complex application layer protocols.

3.1.3.1 short connection

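- Idea: open a connection, send the data once, then close the connection; repeat for each message. The closed connection marks the message boundary. This prevents sticky packets, but a half packet can still occur if a single message is larger than the receive buffer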
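The "send once, then close" idea can be sketched as a handler. This assumes Netty 4.1; SendOnceHandler is a made-up name. EmbeddedChannel stands in for a real connection so the sketch runs without a server. In a real client, each message would need a fresh connection, for example one bootstrap.connect(...) per message with this handler installed (that loop is not shown).

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.embedded.EmbeddedChannel;

import java.nio.charset.StandardCharsets;

public class ShortConnectionDemo {

    // Sends exactly one message once the connection is active, then closes the connection,
    // so the end of the connection marks the end of the message
    static class SendOnceHandler extends ChannelInboundHandlerAdapter {
        private final String message;

        SendOnceHandler(String message) {
            this.message = message;
        }

        @Override
        public void channelActive(ChannelHandlerContext ctx) {
            ctx.writeAndFlush(Unpooled.copiedBuffer(message, StandardCharsets.UTF_8))
               .addListener(ChannelFutureListener.CLOSE); // close once the write completes
        }
    }

    public static String run() {
        // EmbeddedChannel becomes active as soon as it is created
        EmbeddedChannel ch = new EmbeddedChannel(new SendOnceHandler("hello"));
        ByteBuf sent = ch.readOutbound();
        String text = sent.toString(StandardCharsets.UTF_8);
        sent.release();
        return text + " open=" + ch.isOpen();
    }

    public static void main(String[] args) {
        System.out.println(run()); // hello open=false
    }
}
```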

3.1.3.2 fixed length decoder

- The client and server agree on a fixed message length, and every message the client sends is no longer than this length.

- If the data to be sent is shorter, it is padded to this length.

- When receiving data, the server splits the received data according to the agreed maximum length. Even if sticky packets are generated in the sending process, the data can be split correctly through the fixed length decoder.

- The server needs to use FixedLengthFrameDecoder to decode the data with fixed length.

Server

package com.shu.Package;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.*;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.handler.codec.FixedLengthFrameDecoder;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* @Author shu

* @Date: 2022/03/03/ 10:27

* @Description Sticky packet and half packet phenomena

**/

public class PackageServer {

static final Logger log = LoggerFactory.getLogger(PackageServer.class);

void start() {

NioEventLoopGroup boss = new NioEventLoopGroup(1);

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

ServerBootstrap serverBootstrap = new ServerBootstrap();

serverBootstrap.channel(NioServerSocketChannel.class);

serverBootstrap.option(ChannelOption.SO_RCVBUF,10);

serverBootstrap.group(boss, worker);

serverBootstrap.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) {

// Fixed length decoder

ch.pipeline().addLast(new FixedLengthFrameDecoder(16));

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

// This method is executed when the connection is established

log.debug("connected {}", ctx.channel());

super.channelActive(ctx);

}

@Override

public void channelInactive(ChannelHandlerContext ctx) throws Exception {

// This method is executed when the connection is disconnected

log.debug("disconnect {}", ctx.channel());

super.channelInactive(ctx);

}

});

}

});

ChannelFuture channelFuture = serverBootstrap.bind(8080);

log.debug("{} binding...", channelFuture.channel());

channelFuture.sync();

log.debug("{} bound...", channelFuture.channel());

// Close channel

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("server error", e);

} finally {

boss.shutdownGracefully();

worker.shutdownGracefully();

log.debug("stopped");

}

}

public static void main(String[] args) {

new PackageServer().start();

}

}

Client

package com.shu.Package;

import io.netty.bootstrap.Bootstrap;

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* @Author shu

* @Date: 2022/03/03/ 10:29

* @Description Sticky packet and half packet phenomena

**/

public class PackageClient {

static final Logger log = LoggerFactory.getLogger(PackageClient.class);

public static void main(String[] args) {

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class);

bootstrap.group(worker);

bootstrap.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

log.debug("connected...");

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

log.debug("sending...");

// The agreed maximum length is 16

final int maxLength = 16;

// Data sent

char c = 'a';

// Send 10 messages to the server

for (int i = 0; i < 10; i++) {

ByteBuf buffer = ctx.alloc().buffer(maxLength);

// Fixed length byte array. Unused parts will be filled with 0

byte[] bytes = new byte[maxLength];

// Generate data with length of 0 ~ 15 (the length is chosen once, before the loop)

int length = (int) (Math.random() * maxLength);

for (int j = 0; j < length; j++) {

bytes[j] = (byte) c;

}

buffer.writeBytes(bytes);

c++;

// Send data to server

ctx.writeAndFlush(buffer);

}

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 8080).sync();

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("client error", e);

} finally {

worker.shutdownGracefully();

}

}

}
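The decoder's behavior can also be checked without a network. The sketch below assumes Netty 4.1 and uses EmbeddedChannel to feed FixedLengthFrameDecoder(4) two reads. The first read contains two whole frames stuck together plus half of a third; the second read completes the third frame.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.FixedLengthFrameDecoder;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class FixedLengthDemo {

    public static List<String> run() {
        EmbeddedChannel ch = new EmbeddedChannel(new FixedLengthFrameDecoder(4));
        // First read: "abcd" and "efgh" stuck together, plus half of the third frame ("ij")
        ch.writeInbound(Unpooled.copiedBuffer("abcdefghij", StandardCharsets.US_ASCII));
        // Second read: the rest of the third frame
        ch.writeInbound(Unpooled.copiedBuffer("kl", StandardCharsets.US_ASCII));

        List<String> frames = new ArrayList<>();
        ByteBuf frame;
        while ((frame = ch.<ByteBuf>readInbound()) != null) {
            frames.add(frame.toString(StandardCharsets.US_ASCII));
            frame.release(); // we are the last user of each decoded frame
        }
        ch.finishAndReleaseAll();
        return frames;
    }

    public static void main(String[] args) {
        System.out.println(run()); // [abcd, efgh, ijkl]
    }
}
```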

3.1.3.3 line decoder

- The line decoder splits data on a delimiter to solve the sticky packet and half packet problems.

- LineBasedFrameDecoder(int maxLength) splits data using the newline character (\n, or \r\n) as the delimiter. DelimiterBasedFrameDecoder(int maxFrameLength, ByteBuf... delimiters) lets you specify which delimiter(s) to split on; multiple delimiters can be passed in.

- Both decoders require a maximum frame length; if no delimiter is found within that length, a TooLongFrameException is thrown. The example below uses the newline character \n as the delimiter.

Server

package com.shu.Package;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.*;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.handler.codec.DelimiterBasedFrameDecoder;

import io.netty.handler.codec.FixedLengthFrameDecoder;

import io.netty.handler.codec.LineBasedFrameDecoder;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* @Author shu

* @Date: 2022/03/03/ 10:27

* @Description Sticky packet and half packet phenomena

**/

public class PackageServer {

static final Logger log = LoggerFactory.getLogger(PackageServer.class);

void start() {

NioEventLoopGroup boss = new NioEventLoopGroup(1);

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

ServerBootstrap serverBootstrap = new ServerBootstrap();

serverBootstrap.channel(NioServerSocketChannel.class);

serverBootstrap.group(boss, worker);

serverBootstrap.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) {

// Frame decoder based on \n (maximum frame length 64)

ch.pipeline().addLast(new LineBasedFrameDecoder(64));

// Alternative: a decoder based on a custom delimiter (\c here). Use it instead of the line decoder above,

// not together with it: chained after the line decoder it would never find \c and would fail with TooLongFrameException.

// It also needs imports for io.netty.buffer.ByteBuf and java.nio.charset.StandardCharsets

// ByteBuf delimiter = ch.alloc().buffer().writeBytes("\\c".getBytes(StandardCharsets.UTF_8));

// ch.pipeline().addLast(new DelimiterBasedFrameDecoder(64, delimiter));

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

// This method is executed when the connection is established

log.debug("connected {}", ctx.channel());

super.channelActive(ctx);

}

@Override

public void channelInactive(ChannelHandlerContext ctx) throws Exception {

// This method is executed when the connection is disconnected

log.debug("disconnect {}", ctx.channel());

super.channelInactive(ctx);

}

});

}

});

ChannelFuture channelFuture = serverBootstrap.bind(8080);

log.debug("{} binding...", channelFuture.channel());

channelFuture.sync();

log.debug("{} bound...", channelFuture.channel());

// Close channel

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("server error", e);

} finally {

boss.shutdownGracefully();

worker.shutdownGracefully();

log.debug("stopped");

}

}

public static void main(String[] args) {

new PackageServer().start();

}

}

Client

package com.shu.Package;

import io.netty.bootstrap.Bootstrap;

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.nio.charset.StandardCharsets;

import java.util.Random;

/**

* @Author shu

* @Date: 2022/03/03/ 10:29

* @Description Sticky packet and half packet phenomena

**/

public class PackageClient {

static final Logger log = LoggerFactory.getLogger(PackageClient.class);

public static void main(String[] args) {

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class);

bootstrap.group(worker);

bootstrap.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

log.debug("connected...");

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

log.debug("sending...");

// The agreed maximum length is 64

final int maxLength = 64;

// Data sent

char c = 'a';

for (int i = 0; i < 10; i++) {

ByteBuf buffer = ctx.alloc().buffer(maxLength);

// Generate data with length of 0 ~ 62 (the length is chosen once, before the loop)

Random random = new Random();

int length = random.nextInt(maxLength - 1);

StringBuilder sb = new StringBuilder();

for (int j = 0; j < length; j++) {

sb.append(c);

}

// Data ends with \n

sb.append("\n");

buffer.writeBytes(sb.toString().getBytes(StandardCharsets.UTF_8));

c++;

// Send data to server

ctx.writeAndFlush(buffer);

}

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 8080).sync();

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("client error", e);

} finally {

worker.shutdownGracefully();

}

}

}

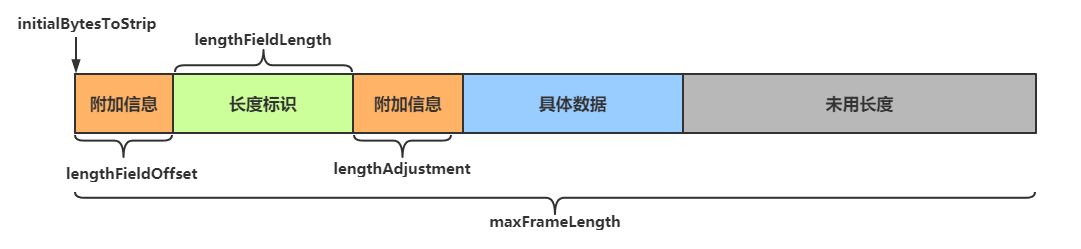

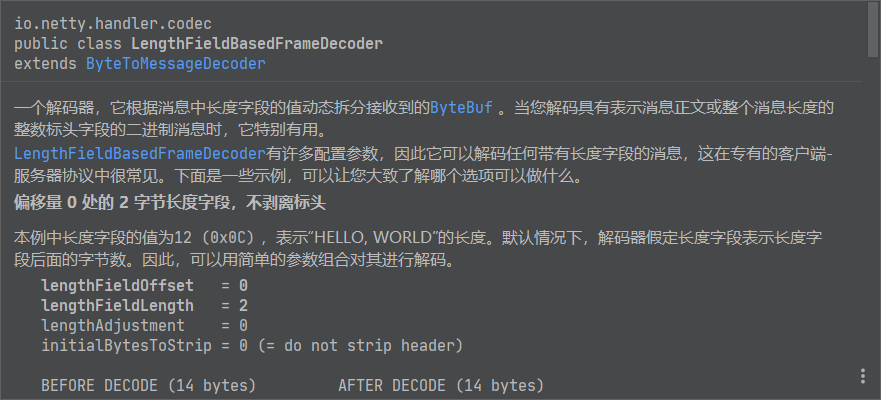
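Both delimiter-based decoders can be exercised in memory. The sketch below assumes Netty 4.1 and uses EmbeddedChannel; the helper name decode is made up. It feeds each decoder two reads in which a message is split across the reads. Delimiters are stripped from the decoded frames by default.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandler;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.DelimiterBasedFrameDecoder;
import io.netty.handler.codec.LineBasedFrameDecoder;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class LineDecoderDemo {

    // Feeds the chunks to the decoder one read at a time and collects the decoded frames
    static List<String> decode(ChannelHandler decoder, String... chunks) {
        EmbeddedChannel ch = new EmbeddedChannel(decoder);
        for (String chunk : chunks) {
            ch.writeInbound(Unpooled.copiedBuffer(chunk, StandardCharsets.UTF_8));
        }
        List<String> frames = new ArrayList<>();
        ByteBuf frame;
        while ((frame = ch.<ByteBuf>readInbound()) != null) {
            frames.add(frame.toString(StandardCharsets.UTF_8));
            frame.release();
        }
        ch.finishAndReleaseAll();
        return frames;
    }

    public static List<String> lines() {
        // "\n" and "\r\n" are both accepted as line endings
        return decode(new LineBasedFrameDecoder(64), "hello\nwor", "ld\r\n");
    }

    public static List<String> delimited() {
        // Custom two-byte delimiter \c, matching the server example above
        ByteBuf delimiter = Unpooled.copiedBuffer("\\c", StandardCharsets.UTF_8);
        return decode(new DelimiterBasedFrameDecoder(64, delimiter), "one\\ctw", "o\\c");
    }

    public static void main(String[] args) {
        System.out.println(lines());     // [hello, world]
        System.out.println(delimited()); // [one, two]
    }
}
```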

3.1.3.4 length field decoder

When transmitting data, you can add a field that states the length of the useful data. During decoding, the decoder reads this length field, together with the other related parameters, to determine exactly which bytes make up the message.

The LengthFieldBasedFrameDecoder offers richer splitting options; the constructor used here has five parameters

public LengthFieldBasedFrameDecoder( int maxFrameLength, int lengthFieldOffset, int lengthFieldLength, int lengthAdjustment, int initialBytesToStrip)

Parameter analysis

- maxFrameLength: maximum frame length

- The maximum length of a frame, including any additional information, the length field, etc.

- lengthFieldOffset: starting offset of the length field

- The number of bytes before the length field, since there may be other header information in front of it

- lengthFieldLength: number of bytes occupied by the length field

- For example, 4 when the length is written as an int

- lengthAdjustment: compensation added to the value of the length field

- Used when the length field's value does not cover exactly the bytes that follow it. For example, if there are extra header bytes between the length field and the content, it equals the number of those bytes

- initialBytesToStrip: number of bytes to strip from the start of the frame

- Reading starts at this offset; bytes 0 ~ initialBytesToStrip - 1 are dropped

Parameter diagram

For diagrams and worked examples of each parameter, see the Javadoc of LengthFieldBasedFrameDecoder in the source code

public class EncoderStudy {

public static void main(String[] args) {

// Simulation server

// Test using channel handler

EmbeddedChannel channel = new EmbeddedChannel(

// The maximum length of data is 1KB. There is 1 byte of additional information before and after the length identifier. The length of the length identifier is 4 bytes (int)

new LengthFieldBasedFrameDecoder(1024, 1, 4, 1, 0),

new LoggingHandler(LogLevel.DEBUG)

);

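// Simulate the client writing data. Each message gets its own buffer, because the decoder

// takes ownership of (and releases) each ByteBuf passed to writeInbound

ByteBuf hello = ByteBufAllocator.DEFAULT.buffer();

send(hello, "Hello");

channel.writeInbound(hello);

ByteBuf world = ByteBufAllocator.DEFAULT.buffer();

send(world, "World");

channel.writeInbound(world);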

}

private static void send(ByteBuf buf, String msg) {

// Get the length of the data

int length = msg.length();

byte[] bytes = msg.getBytes(StandardCharsets.UTF_8);

// Write data information to buf

// Write other information before length identification

buf.writeByte(0xCA);

// Write data length identifier

buf.writeInt(length);

// Write other information after length identification

buf.writeByte(0xFE);

// Write specific data

buf.writeBytes(bytes);

}

}
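EncoderStudy keeps the extra header bytes in every frame because initialBytesToStrip is 0. As a sketch, assuming Netty 4.1 on the classpath, the variant below sets initialBytesToStrip to 6 (the 1 + 4 + 1 header bytes), so only the content reaches the next handler. It also feeds one frame in two pieces to show that the decoder waits for the rest. `StripHeaderDemo`, `frame` and `decode` are illustrative names, not part of Netty.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.LengthFieldBasedFrameDecoder;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class StripHeaderDemo {

    // Same layout as EncoderStudy: 0xCA | 4-byte length | 0xFE | content
    static byte[] frame(String msg) {
        byte[] bytes = msg.getBytes(StandardCharsets.UTF_8);
        ByteBuf buf = Unpooled.buffer();
        buf.writeByte(0xCA);
        buf.writeInt(bytes.length);
        buf.writeByte(0xFE);
        buf.writeBytes(bytes);
        byte[] out = new byte[buf.readableBytes()];
        buf.readBytes(out);
        buf.release();
        return out;
    }

    // Writes the chunks one after another and returns the content of every decoded frame
    static List<String> decode(byte[]... chunks) {
        EmbeddedChannel channel = new EmbeddedChannel(
                // initialBytesToStrip = 6 drops 0xCA, the 4-byte length and 0xFE
                new LengthFieldBasedFrameDecoder(1024, 1, 4, 1, 6));
        for (byte[] chunk : chunks) {
            channel.writeInbound(Unpooled.wrappedBuffer(chunk));
        }
        List<String> result = new ArrayList<>();
        ByteBuf msg;
        while ((msg = channel.readInbound()) != null) {
            result.add(msg.toString(StandardCharsets.UTF_8));
            msg.release();
        }
        channel.finish();
        return result;
    }

    public static void main(String[] args) {
        byte[] hello = frame("Hello");
        byte[] world = frame("World");
        // Sticky packet: both frames arrive in one chunk
        byte[] sticky = new byte[hello.length + world.length];
        System.arraycopy(hello, 0, sticky, 0, hello.length);
        System.arraycopy(world, 0, sticky, hello.length, world.length);
        System.out.println(decode(sticky)); // [Hello, World]
        // Half packet: one frame split across two chunks
        System.out.println(decode(Arrays.copyOfRange(hello, 0, 3),
                Arrays.copyOfRange(hello, 3, hello.length))); // [Hello]
    }
}
```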

3.2 protocol design

In TCP/IP, data is transmitted as a byte stream with no message boundaries

The purpose of a protocol is to mark where each message begins and ends, and to define the communication rules that both sides must follow

3.2.1 Redis protocol

package com.shu.Protocol;

import io.netty.bootstrap.Bootstrap;

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.net.InetSocketAddress;

/**

* @Author shu

* @Date: 2022/03/03/ 14:16

* @Description

**/

public class RedisProtocol {

static final Logger log = LoggerFactory.getLogger(RedisProtocol.class);

public static void main(String[] args) {

NioEventLoopGroup group = new NioEventLoopGroup();

try {

ChannelFuture channelFuture = new Bootstrap()

.group(group)

.channel(NioSocketChannel.class)

.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) {

// Print log

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

// Carriage return and line feed

final byte[] LINE = {'\r','\n'};

// Get ByteBuf

ByteBuf buffer = ctx.alloc().buffer();

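// After the connection is established, send a command to Redis in RESP format; every element must end with a carriage return and line feed

// set name Nyima: *3 means an array of 3 elements, and each $n gives the byte length of the element that follows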

buffer.writeBytes("*3".getBytes());

buffer.writeBytes(LINE);

buffer.writeBytes("$3".getBytes());

buffer.writeBytes(LINE);

buffer.writeBytes("set".getBytes());

buffer.writeBytes(LINE);

buffer.writeBytes("$4".getBytes());

buffer.writeBytes(LINE);

buffer.writeBytes("name".getBytes());

buffer.writeBytes(LINE);

buffer.writeBytes("$5".getBytes());

buffer.writeBytes(LINE);

buffer.writeBytes("Nyima".getBytes());

buffer.writeBytes(LINE);

ctx.writeAndFlush(buffer);

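}

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) {

// The LoggingHandler above has already printed Redis's reply (e.g. +OK); release the buffer and close the connection

((ByteBuf) msg).release();

ctx.close();

}

});

}

})

.connect(new InetSocketAddress("localhost", 6379));

channelFuture.sync();

// Wait until the channel is closed (after the reply has been handled) instead of closing it right away

channelFuture.channel().closeFuture().sync();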

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

// Close group

group.shutdownGracefully();

}

}

}

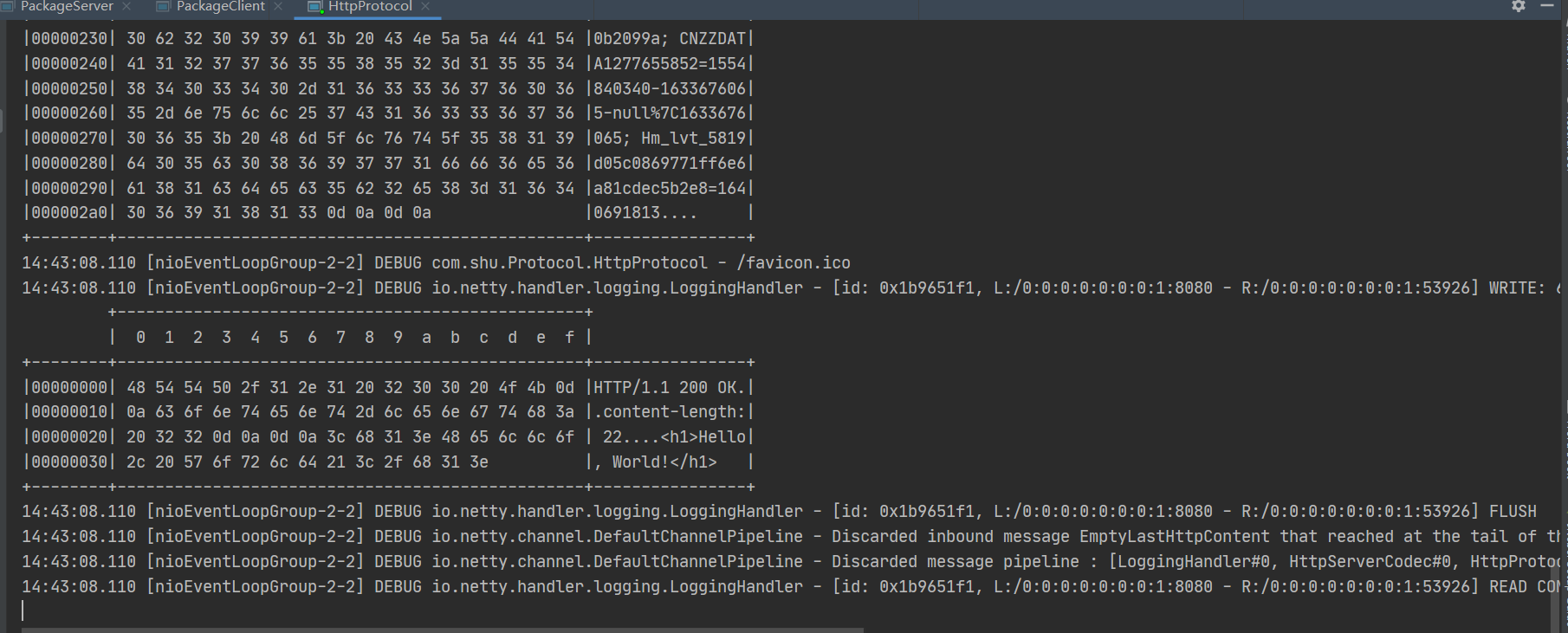
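The hand-written writeBytes calls above follow RESP (REdis Serialization Protocol): a command is an array (*n) of bulk strings, each written as $<byte length> followed by the bytes, and every part ends with \r\n. A small helper can build any command this way. The sketch below is plain Java; `RespCommand.encode` is a hypothetical name, and its result could be written into the ByteBuf in channelActive with buffer.writeBytes(...).

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

public class RespCommand {

    // Encodes a command as a RESP array of bulk strings:
    // *<number of elements>\r\n, then for each element $<byte length>\r\n<bytes>\r\n
    static byte[] encode(String... args) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeLine(out, "*" + args.length);
        for (String arg : args) {
            byte[] bytes = arg.getBytes(StandardCharsets.UTF_8);
            writeLine(out, "$" + bytes.length); // byte length, not character count
            out.write(bytes, 0, bytes.length);
            out.write('\r');
            out.write('\n');
        }
        return out.toByteArray();
    }

    // Writes an ASCII line terminated by CR LF
    private static void writeLine(ByteArrayOutputStream out, String line) {
        byte[] bytes = line.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        out.write('\r');
        out.write('\n');
    }

    public static void main(String[] args) {
        String wire = new String(encode("set", "name", "Nyima"), StandardCharsets.UTF_8);
        // Make CR LF visible when printing
        System.out.println(wire.replace("\r\n", "\\r\\n"));
        // *3\r\n$3\r\nset\r\n$4\r\nname\r\n$5\r\nNyima\r\n
    }
}
```

Using the byte length (rather than String.length()) matters as soon as a value contains non-ASCII characters, since RESP counts bytes.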

3.2.2 Http protocol

package com.shu.Protocol;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.SimpleChannelInboundHandler;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.handler.codec.http.DefaultFullHttpResponse;

import io.netty.handler.codec.http.HttpRequest;

import io.netty.handler.codec.http.HttpResponseStatus;

import io.netty.handler.codec.http.HttpServerCodec;

import io.netty.handler.logging.LogLevel;

import io.netty.handler.logging.LoggingHandler;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.nio.charset.StandardCharsets;

import static io.netty.handler.codec.http.HttpHeaderNames.CONTENT_LENGTH;

/**

* @Author shu

* @Date: 2022/03/03/ 14:18

* @Description HTTP protocol

**/

public class HttpProtocol {

static final Logger log = LoggerFactory.getLogger(HttpProtocol.class);

public static void main(String[] args) {

NioEventLoopGroup group = new NioEventLoopGroup();

new ServerBootstrap()

.group(group)

.channel(NioServerSocketChannel.class)

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) {

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

// As a server, HttpServerCodec is used as encoder and decoder

ch.pipeline().addLast(new HttpServerCodec());

// This handler only receives HttpRequest messages; anything else (such as HttpContent) is passed on to the next handler

ch.pipeline().addLast(new SimpleChannelInboundHandler<HttpRequest>() {

@Override

protected void channelRead0(ChannelHandlerContext ctx, HttpRequest msg) {

// Get request uri

log.debug(msg.uri());

// Create a full response with the request's protocol version and the status code 200 OK

DefaultFullHttpResponse response = new DefaultFullHttpResponse(msg.protocolVersion(), HttpResponseStatus.OK);

// Set response content

byte[] bytes = "<h1>Hello, World!</h1>".getBytes(StandardCharsets.UTF_8);

// Set Content-Length so the browser knows where the response body ends; otherwise it keeps waiting for more content

response.headers().setInt(CONTENT_LENGTH, bytes.length);

// Set response body

response.content().writeBytes(bytes);

// Write the response back to the client

ctx.writeAndFlush(response);

}

});

}

})

.bind(8080);

}

}
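The Content-Length header set above is the same framing idea as LengthFieldBasedFrameDecoder, only expressed as a text header. As a simplified sketch in plain Java (it ignores chunked transfer encoding and other HTTP details; `ContentLengthReader` and its methods are hypothetical), a client on a keep-alive connection can use the header to find exactly where one body ends and the next response begins.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

public class ContentLengthReader {

    // Reads one HTTP message: header lines up to the empty line, then exactly
    // Content-Length bytes of body. Anything after the body stays in the stream.
    static String readBody(InputStream in) throws IOException {
        int contentLength = 0;
        String line;
        while (!(line = readLine(in)).isEmpty()) {
            int colon = line.indexOf(':');
            // Header names are case-insensitive (Netty's HttpHeaderNames.CONTENT_LENGTH is "content-length")
            if (colon > 0 && line.substring(0, colon).trim().equalsIgnoreCase("Content-Length")) {
                contentLength = Integer.parseInt(line.substring(colon + 1).trim());
            }
        }
        byte[] body = new byte[contentLength];
        new DataInputStream(in).readFully(body); // blocks until all body bytes have arrived
        return new String(body, StandardCharsets.UTF_8);
    }

    // Reads one CRLF-terminated line (simplified: header lines are assumed to be ASCII)
    private static String readLine(InputStream in) throws IOException {
        ByteArrayOutputStream line = new ByteArrayOutputStream();
        int b;
        while ((b = in.read()) != -1 && b != '\n') {
            if (b != '\r') {
                line.write(b);
            }
        }
        return new String(line.toByteArray(), StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) throws IOException {
        // Two responses back to back on one connection
        String wire = "HTTP/1.1 200 OK\r\ncontent-length: 22\r\n\r\n<h1>Hello, World!</h1>"
                + "HTTP/1.1 200 OK\r\ncontent-length: 2\r\n\r\nhi";
        InputStream in = new ByteArrayInputStream(wire.getBytes(StandardCharsets.US_ASCII));
        System.out.println(readBody(in)); // <h1>Hello, World!</h1>
        System.out.println(readBody(in)); // hi
    }
}
```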

3.2.3 user defined protocol

3.2.3.1 elements

- Magic number: lets the receiver tell at first glance whether a received packet is invalid

- Version number: allows the protocol to be upgraded

- Serialization algorithm: which serialization and deserialization method the message body uses, such as JSON, Protobuf, Hessian or JDK serialization

- Instruction type: business-specific, e.g. login, registration, private chat, group chat...

- Request sequence number: lets full-duplex communication be asynchronous, because a response can be matched to its request

- Body length

- Message body
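One possible fixed-size header for these elements uses the field sizes written by the MessageCodec in 3.2.3.2: 4 + 1 + 1 + 1 + 4 + 1 + 4 = 16 bytes, with the body length at offset 12. The plain-Java sketch below (hypothetical class `FrameLayout`; the body is assumed to be serialized already) derives those offsets and builds a frame with java.nio.ByteBuffer.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class FrameLayout {

    // Field sizes in bytes, in the order the header is written
    static final int MAGIC = 4, VERSION = 1, SERIALIZER = 1, TYPE = 1, SEQUENCE_ID = 4, PADDING = 1, LENGTH = 4;

    // Offset of the length field = sum of all fields before it (12)
    static final int LENGTH_FIELD_OFFSET = MAGIC + VERSION + SERIALIZER + TYPE + SEQUENCE_ID + PADDING;
    // Full header size (16)
    static final int HEADER_SIZE = LENGTH_FIELD_OFFSET + LENGTH;

    // Hypothetical helper: wraps an already-serialized body in the header
    static byte[] encode(byte messageType, int sequenceId, byte[] body) {
        return ByteBuffer.allocate(HEADER_SIZE + body.length)
                .put(new byte[]{1, 2, 3, 4}) // magic number
                .put((byte) 1)               // version
                .put((byte) 0)               // serialization algorithm: 0 = JDK
                .put(messageType)            // instruction type
                .putInt(sequenceId)          // request sequence number
                .put((byte) 0xff)            // padding
                .putInt(body.length)         // body length
                .put(body)                   // message body
                .array();
    }

    public static void main(String[] args) {
        byte[] frame = encode((byte) 0, 7, "hi".getBytes(StandardCharsets.UTF_8));
        System.out.println(frame.length);                                      // 18
        System.out.println(ByteBuffer.wrap(frame).getInt(LENGTH_FIELD_OFFSET)); // 2
    }
}
```

A fixed header whose total size is a power of two (16 bytes here) is the reason for the single padding byte.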

3.2.3.2 code

package com.shu.Message;

import io.netty.buffer.ByteBuf;

import io.netty.channel.ChannelHandlerContext;

import io.netty.handler.codec.ByteToMessageCodec;

import lombok.extern.slf4j.Slf4j;

import java.io.ByteArrayInputStream;

import java.io.ByteArrayOutputStream;

import java.io.ObjectInputStream;

import java.io.ObjectOutputStream;

import java.util.List;

/**

* @Author shu

* @Date: 2022/03/03/ 16:35

* @Description Message encoding and decoding

**/

@Slf4j

public class MessageCodec extends ByteToMessageCodec<Message> {

@Override

public void encode(ChannelHandlerContext ctx, Message msg, ByteBuf out) throws Exception {

// 1. Magic number of 4 bytes

out.writeBytes(new byte[]{1, 2, 3, 4});

// 2. 1-byte version

out.writeByte(1);

// 3. 1-byte serialization algorithm: 0 = JDK, 1 = JSON

out.writeByte(0);

// 4. 1-byte instruction type

out.writeByte(msg.getMessageType());

// 5. 4-byte request sequence number

out.writeInt(msg.getSequenceId());

// 1 meaningless padding byte, so that the header is 16 bytes long

out.writeByte(0xff);

// 6. Serialize the message (JDK serialization) into a byte array

ByteArrayOutputStream bos = new ByteArrayOutputStream();

ObjectOutputStream oos = new ObjectOutputStream(bos);

oos.writeObject(msg);

byte[] bytes = bos.toByteArray();

// 7. 4-byte length of the body

out.writeInt(bytes.length);

// 8. Write content

out.writeBytes(bytes);

}

@Override

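protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {

// Assumes in holds a complete frame, e.g. because a LengthFieldBasedFrameDecoder(1024, 12, 4, 0, 0) is placed before this codec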

int magicNum = in.readInt();

byte version = in.readByte();

byte serializerType = in.readByte();

byte messageType = in.readByte();

int sequenceId = in.readInt();

in.readByte();

int length = in.readInt();

byte[] bytes = new byte[length];

in.readBytes(bytes, 0, length);

ObjectInputStream ois = new ObjectInputStream(new ByteArrayInputStream(bytes));

Message message = (Message) ois.readObject();

log.debug("{}, {}, {}, {}, {}, {}", magicNum, version, serializerType, messageType, sequenceId, length);

log.debug("{}", message);

out.add(message);

}

}
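Because decode() above reads the header and body unconditionally, it only works when each call receives a complete frame. A common way to guarantee that is to put a LengthFieldBasedFrameDecoder in front of MessageCodec; with this layout the length field starts at offset 12, is 4 bytes long and counts only the body, so lengthAdjustment and initialBytesToStrip are both 0. The sketch below (Netty 4.1 assumed; `CodecFramingDemo`, `frame` and `feed` are illustrative names) feeds one frame in two chunks to the frame decoder alone and records how many bytes it passes on after each chunk.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.LengthFieldBasedFrameDecoder;

import java.util.ArrayList;
import java.util.List;

public class CodecFramingDemo {

    // Builds a frame with MessageCodec's header layout; the body is arbitrary bytes here
    static ByteBuf frame(byte[] body) {
        ByteBuf buf = Unpooled.buffer();
        buf.writeBytes(new byte[]{1, 2, 3, 4}); // magic number
        buf.writeByte(1);                       // version
        buf.writeByte(0);                       // serialization algorithm
        buf.writeByte(0);                       // instruction type
        buf.writeInt(1);                        // request sequence number
        buf.writeByte(0xff);                    // padding
        buf.writeInt(body.length);              // length field at offset 12
        buf.writeBytes(body);
        return buf;
    }

    // Writes the chunks one by one and records how many bytes the decoder emitted after each (0 = nothing yet)
    static List<Integer> feed(ByteBuf... chunks) {
        EmbeddedChannel channel = new EmbeddedChannel(new LengthFieldBasedFrameDecoder(1024, 12, 4, 0, 0));
        List<Integer> emitted = new ArrayList<>();
        for (ByteBuf chunk : chunks) {
            channel.writeInbound(chunk);
            int total = 0;
            ByteBuf frame;
            while ((frame = channel.readInbound()) != null) {
                total += frame.readableBytes();
                frame.release();
            }
            emitted.add(total);
        }
        channel.finish();
        return emitted;
    }

    public static void main(String[] args) {
        // One 116-byte frame (16-byte header + 100-byte body) split into two chunks
        ByteBuf whole = frame(new byte[100]);
        ByteBuf part1 = whole.copy(0, 50);
        ByteBuf part2 = whole.copy(50, whole.readableBytes() - 50);
        whole.release();
        System.out.println(feed(part1, part2)); // [0, 116]
    }
}
```

In a real pipeline the frame decoder would be added first and MessageCodec after it, so decode() always sees whole frames.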

3.3 important parameters

CONNECT_TIMEOUT_MILLIS

- A parameter of SocketChannel