1, Introduction

1 Overview

The BP (back propagation) neural network was proposed in 1986 by a research group led by Rumelhart and McClelland. See the paper "Learning representations by back-propagating errors", published in Nature.

A BP neural network is a multilayer feedforward network trained by the error back propagation algorithm, and it is one of the most widely used neural network models. A BP network can learn and store a large number of input-output mappings without the mathematical equations describing those mappings being given in advance. Its learning rule is steepest descent: the weights and thresholds of the network are adjusted continuously through back propagation to minimize the sum of squared errors of the network.

2 Basic idea of the BP algorithm

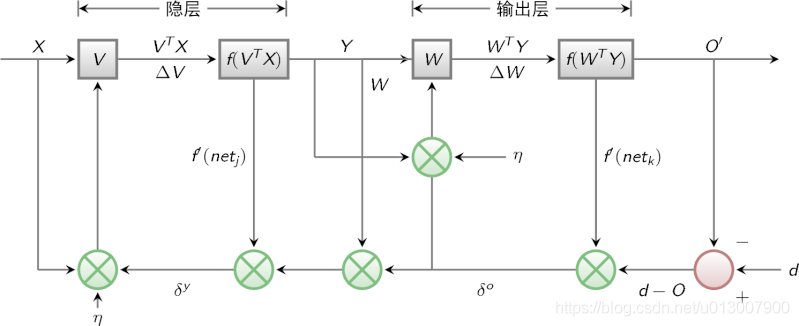

Last time, we said that the multilayer perceptron ran into a bottleneck: how to obtain the weights of the hidden layer. Since we can't get the hidden-layer weights directly, can we adjust them indirectly by first taking the error between the actual output and the expected output at the output layer? The BP algorithm is designed around this idea. The learning process consists of two phases: forward propagation of the signal and back propagation of the error.

During forward propagation, the input sample enters at the input layer, is processed layer by layer by the hidden layers, and is then passed to the output layer. If the actual output of the output layer does not match the expected output (the teacher signal), the process switches to the error back propagation phase.

During back propagation, the output error is passed back toward the input layer, layer by layer through the hidden layers, in some form, and is apportioned to all units of each layer. This gives an error signal for each unit in each layer, which serves as the basis for correcting that unit's weights.

The details of these two phases will be introduced later.

The signal flow diagram of the BP algorithm is shown in the figure below.

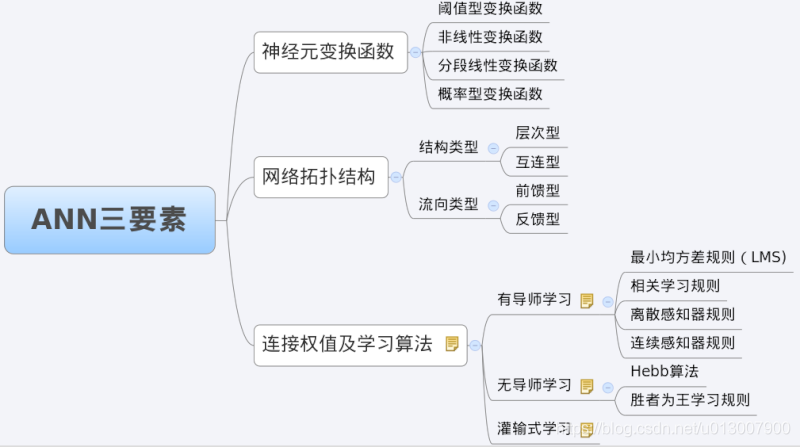

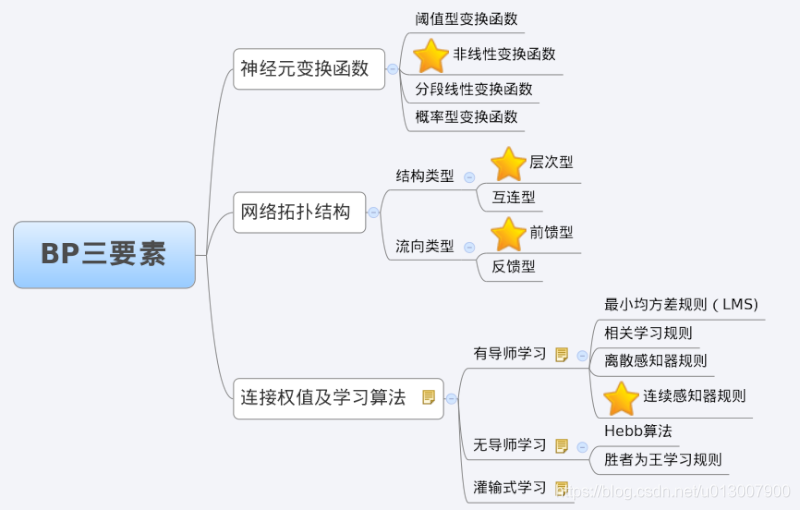

3 Characteristic analysis of the BP network: the three elements of BP

When we analyze an ANN, we usually start with its three elements, namely

1) Network topology;

2) Transfer function;

3) Learning algorithm.

The characteristics of these elements together determine the functional characteristics of the ANN, so we also study the BP network through these three elements.

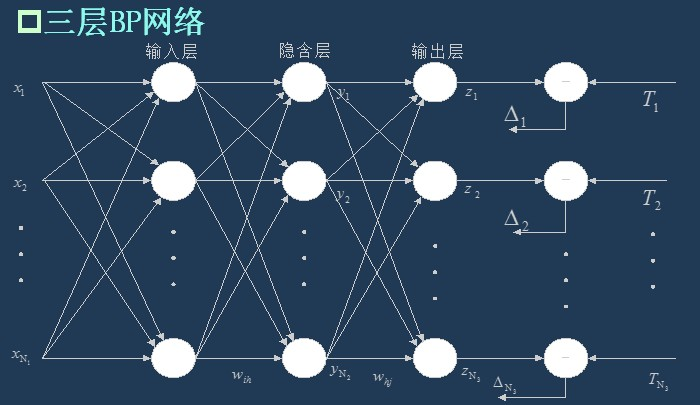

3.1 Topology of the BP network

As I said last time, a BP network is actually a multilayer perceptron, so its topology is the same as that of a multilayer perceptron. Because a single-hidden-layer (three-layer) perceptron can already solve simple nonlinear problems, it is the most widely used. The topology of the three-layer perceptron is shown in the figure below.

The simplest three-layer BP network:

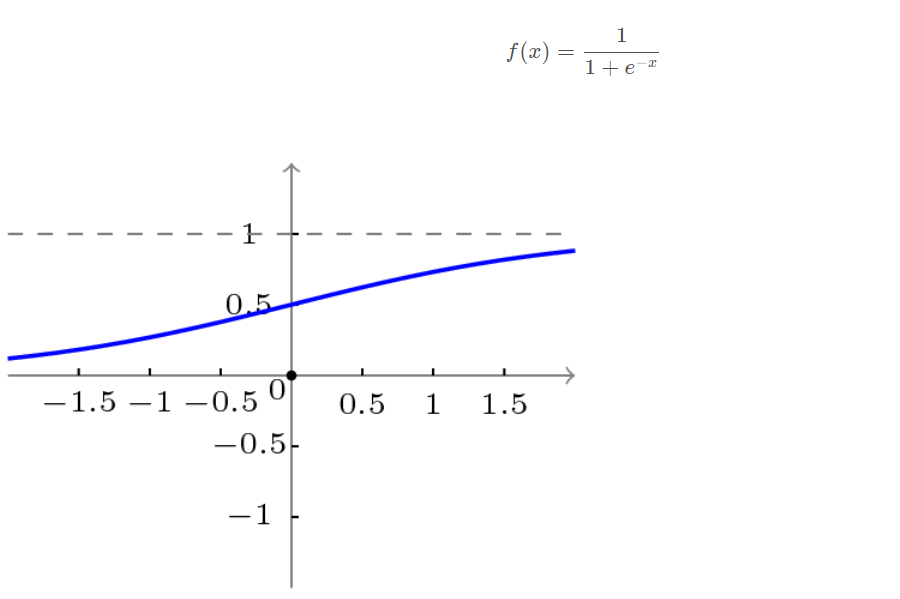

3.2 Transfer function of the BP network

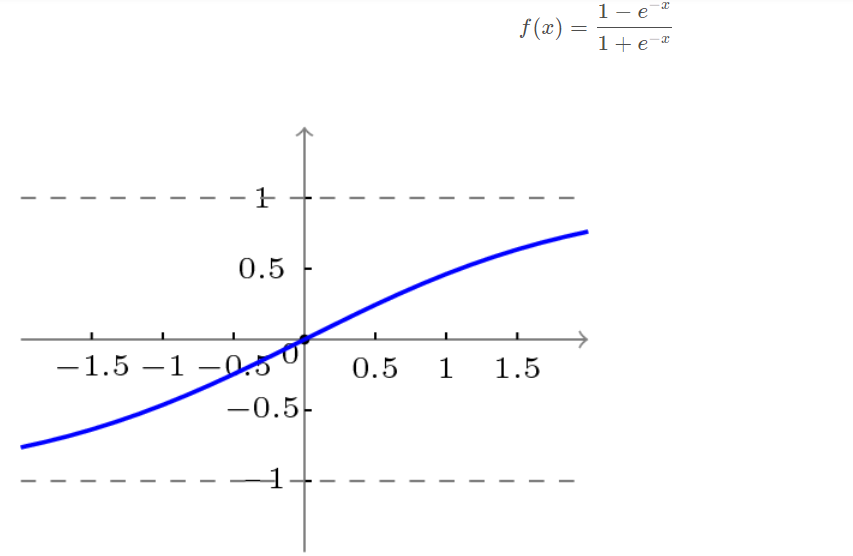

The transfer function adopted by the BP network is a nonlinear transformation function, the Sigmoid function (also known as the S function). Its characteristic is that the function itself and its derivative are both continuous, which makes it very convenient to work with. Why choose this function? I will explain further when introducing the learning algorithm of the BP network.

The unipolar S-type function curve is shown in the figure below.

The bipolar S-type function curve is shown in the figure below.
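As a rough illustration of the two S-type functions, here is a minimal MATLAB sketch. It assumes the usual textbook forms: the unipolar sigmoid 1/(1+e^(-x)) with outputs in (0, 1) and the bipolar sigmoid (1-e^(-x))/(1+e^(-x)) with outputs in (-1, 1). The function handle names are made up for this example.

```matlab
% Unipolar sigmoid: output in (0, 1)
unipolar  = @(x) 1 ./ (1 + exp(-x));
% Bipolar sigmoid: output in (-1, 1); algebraically equal to tanh(x/2)
bipolar   = @(x) (1 - exp(-x)) ./ (1 + exp(-x));

% Both derivatives can be written in terms of the function value y = f(x)
dUnipolar = @(y) y .* (1 - y);       % f'(x) = f(x) * (1 - f(x))
dBipolar  = @(y) 0.5 * (1 - y.^2);   % f'(x) = (1 - f(x)^2) / 2

x  = [-2 0 2];
yu = unipolar(x);
yb = bipolar(x);
disp([yu; dUnipolar(yu)]);   % function values and slopes, unipolar
disp([yb; dBipolar(yb)]);    % function values and slopes, bipolar
```

Because the derivative is available directly from the function value, the backward pass can reuse the outputs already computed in the forward pass, which is one practical reason this kind of function is convenient.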

3.3 Learning algorithm of the BP network

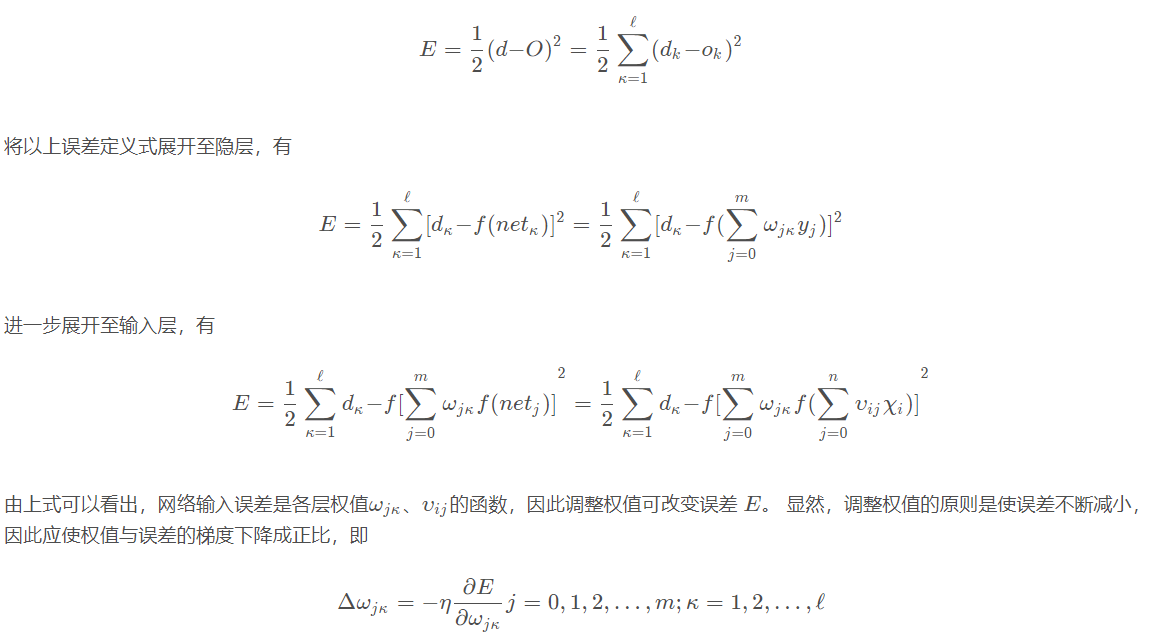

The learning algorithm of the BP network is the BP algorithm, also known as the δ algorithm (in learning about ANNs, we find many terms with multiple names). Taking the three-layer perceptron as an example, when the network output differs from the expected output, there is an output error E, which is defined as follows.

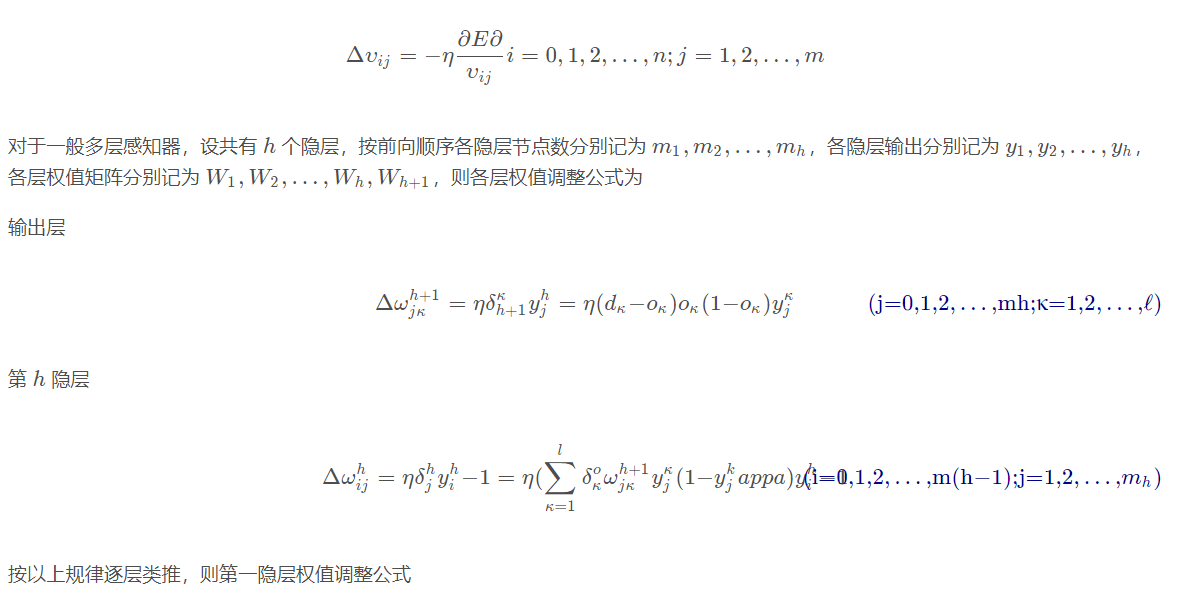

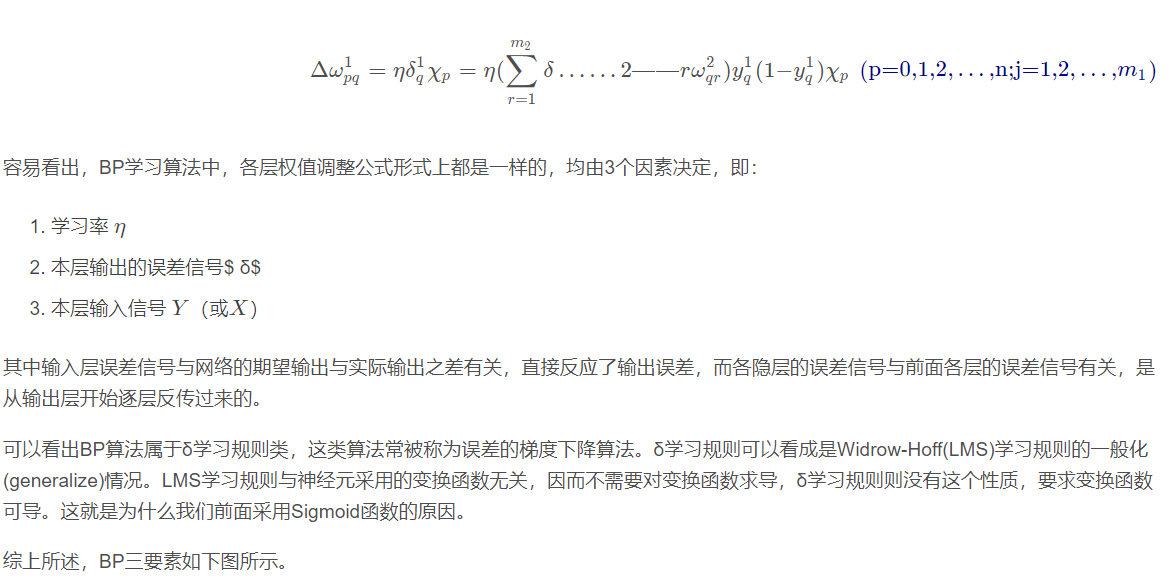

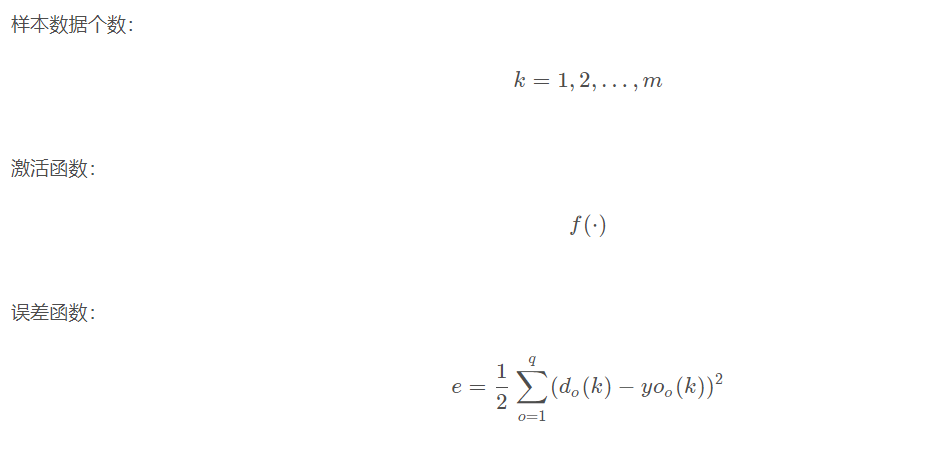

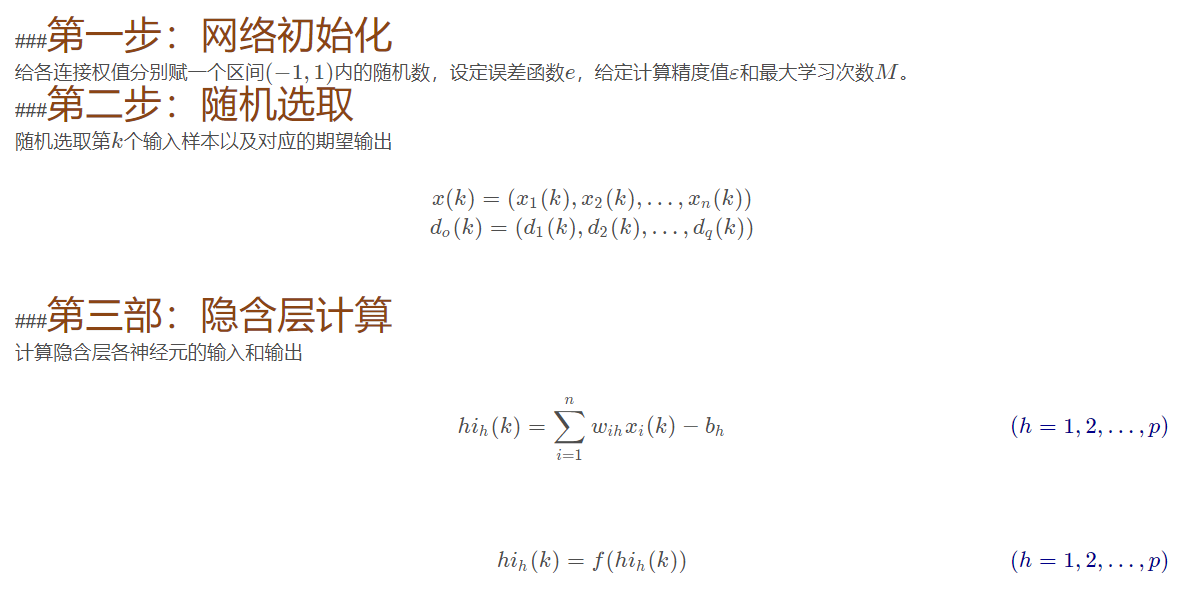
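In text form, a common way to write the output error of a three-layer network and the steepest-descent weight correction that follows from it is sketched here. The notation is assumed for this sketch: $d_k$ is the expected output of output unit $k$, $o_k$ its actual output, $q$ the number of output units, $w$ any weight of the network, and $\eta$ the learning rate.

$$E = \frac{1}{2}\sum_{k=1}^{q}\left(d_k - o_k\right)^2, \qquad \Delta w = -\eta\,\frac{\partial E}{\partial w}$$

The minus sign means each weight moves against the gradient of the error, which is what "steepest descent" refers to.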

Next, we will introduce the specific process of training a BP network.

4 Training decomposition of the BP network

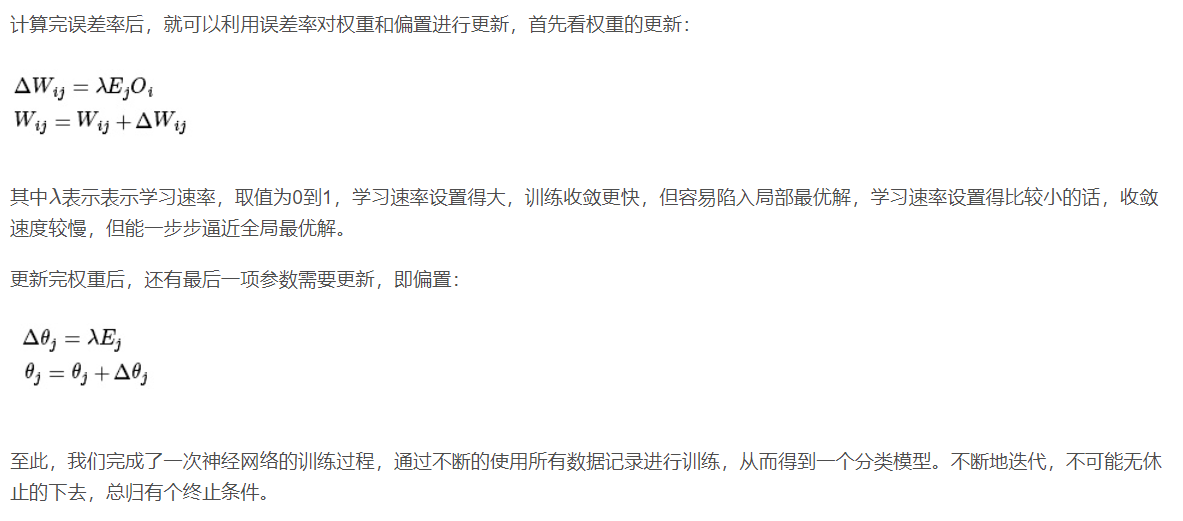

Training a BP neural network really means adjusting the weights and biases of the network. The training process is divided into two parts:

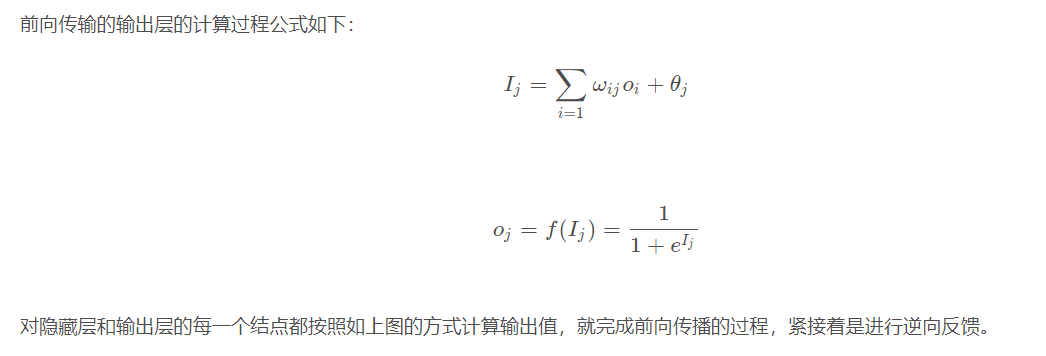

Forward transmission: propagate the output values forward, layer by layer;

Reverse feedback: adjust the weights and biases layer by layer, in reverse.

Let's first look at forward transmission.

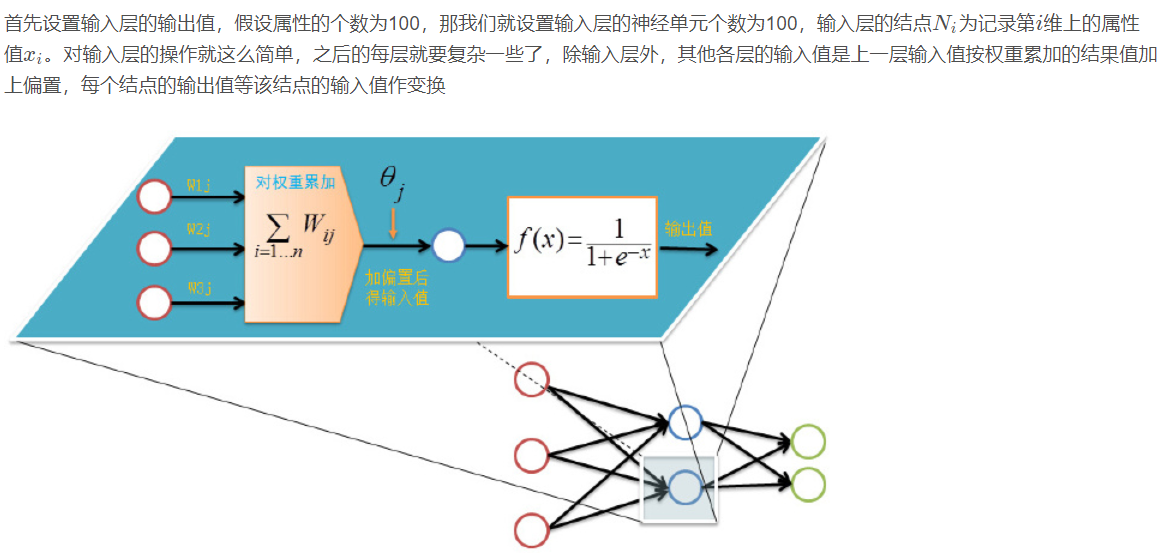

Forward transmission (feed forward)

Before training the network, we need to initialize the weights and biases randomly: take a random real number in [−1, 1] for each weight and a random real number in [0, 1] for each bias. Then forward transmission can start.
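The initialization and one forward pass could look roughly like the following MATLAB sketch. The layer sizes, the input record, and the use of a unipolar sigmoid in both layers are assumptions made for illustration.

```matlab
rng(0);                        % fixed seed so the sketch is repeatable
n = 3; p = 8; q = 1;           % assumed layer sizes (input, hidden, output)

% Weights uniform in [-1, 1], biases uniform in [0, 1]
W1 = 2*rand(p, n) - 1;   b1 = rand(p, 1);
W2 = 2*rand(q, p) - 1;   b2 = rand(q, 1);

sigmoid = @(z) 1 ./ (1 + exp(-z));

x = [0.2; -0.5; 0.9];          % one made-up input record
h = sigmoid(W1*x + b1);        % hidden-layer output
o = sigmoid(W2*h + b2);        % network output
```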

Training a neural network takes multiple iterations. Each iteration uses all records of the training set, while each call to train the network uses only one record. In abstract form:

    while termination conditions not met:
        for record in dataset:
            trainModel(record)

4.1 Back propagation
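A minimal sketch of one back propagation step for a single record, assuming a three-layer network with unipolar sigmoid units in both the hidden and output layers and the squared error E = (d − o)²/2. The layer sizes, the numeric values, the learning rate `lr`, and all variable names are made up for illustration.

```matlab
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Assumed toy network: 2 inputs, 2 hidden units, 1 output
W1 = [0.1 -0.2; 0.4 0.3];  b1 = [0.1; 0.2];
W2 = [0.5 -0.6];           b2 = 0.3;
x  = [1; 0];  d = 1;       % one made-up record and its expected output
lr = 0.5;                  % assumed learning rate

% Forward pass
h = sigmoid(W1*x + b1);
o = sigmoid(W2*h + b2);
E_before = 0.5 * sum((d - o).^2);

% Backward pass: error signals (deltas) of each layer
delta2 = (d - o) .* o .* (1 - o);           % output layer
delta1 = (W2' * delta2) .* h .* (1 - h);    % hidden layer, uses the old W2

% Gradient-descent update of weights and biases
W2 = W2 + lr * delta2 * h';   b2 = b2 + lr * delta2;
W1 = W1 + lr * delta1 * x';   b1 = b1 + lr * delta1;

% Recompute the error on the same record after the update
o_after = sigmoid(W2*sigmoid(W1*x + b1) + b2);
E_after = 0.5 * sum((d - o_after).^2);
fprintf('E before: %.4f, after: %.4f\n', E_before, E_after);
```

The hidden-layer error signal is computed before `W2` is changed, so both layers are corrected against the same forward pass.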

4.2 Training termination conditions

Each round of training uses all records of the dataset, but when should training stop? There are two stopping conditions:

(1) Set a maximum number of iterations, for example stop after 100 passes over the dataset.

(2) Compute the prediction accuracy of the network on the training set, and stop once it reaches a certain threshold.
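The two stopping conditions can be combined in one loop, as in the following sketch. It trains a small sigmoid network on a made-up logical-OR dataset, one record per update; the dataset, layer sizes, `maxEpochs`, `targetAcc`, and `lr` are all assumptions for illustration, and the loop is not guaranteed to reach the accuracy threshold before the epoch limit.

```matlab
rng(0);
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [0 0 1 1; 0 1 0 1];          % made-up dataset: logical OR, one record per column
D = [0 1 1 1];
W1 = 2*rand(3, 2) - 1;  b1 = rand(3, 1);
W2 = 2*rand(1, 3) - 1;  b2 = rand(1, 1);

maxEpochs = 100;  targetAcc = 1.0;  lr = 0.5;   % assumed settings
acc = 0;  epoch = 0;
while epoch < maxEpochs && acc < targetAcc
    epoch = epoch + 1;
    for k = 1:size(X, 2)                         % one record per update
        x = X(:, k);  d = D(k);
        h = sigmoid(W1*x + b1);  o = sigmoid(W2*h + b2);
        delta2 = (d - o) .* o .* (1 - o);
        delta1 = (W2' * delta2) .* h .* (1 - h);
        W2 = W2 + lr*delta2*h';  b2 = b2 + lr*delta2;
        W1 = W1 + lr*delta1*x';  b1 = b1 + lr*delta1;
    end
    % Training-set accuracy after this pass, thresholding the output at 0.5
    H = sigmoid(W1*X + repmat(b1, 1, size(X, 2)));
    O = sigmoid(W2*H + repmat(b2, 1, size(X, 2)));
    acc = mean((O > 0.5) == D);
end
fprintf('stopped after %d epochs, accuracy %.2f\n', epoch, acc);
```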

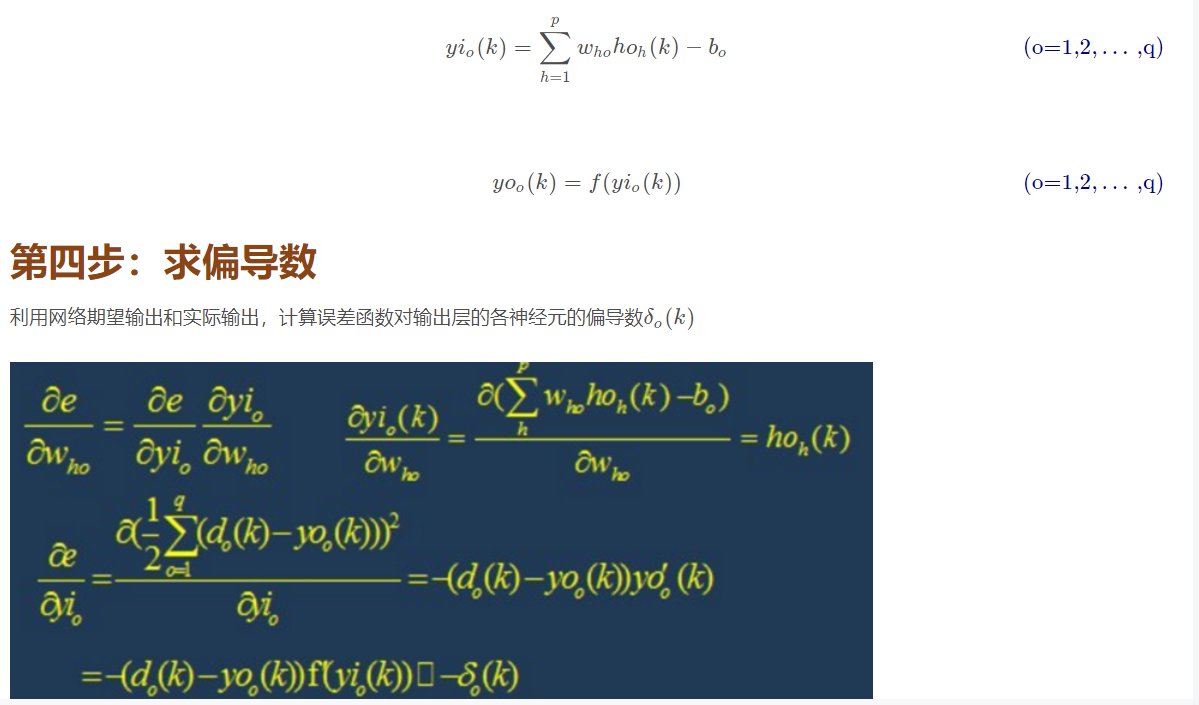

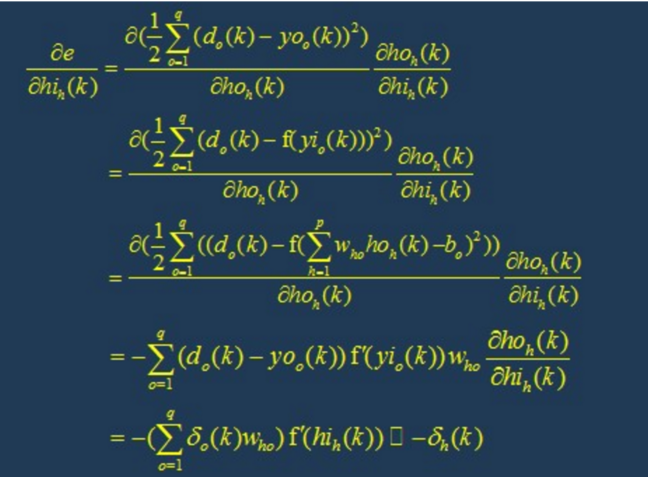

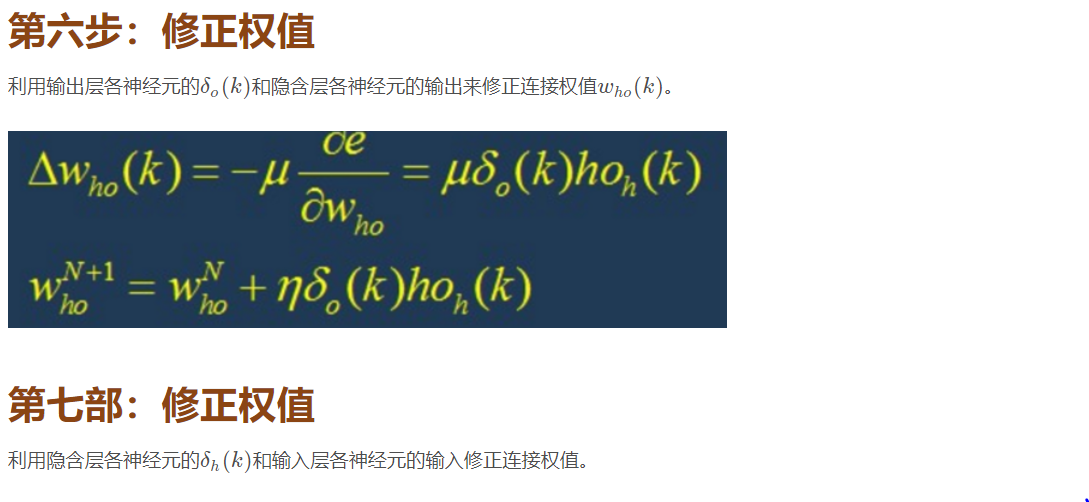

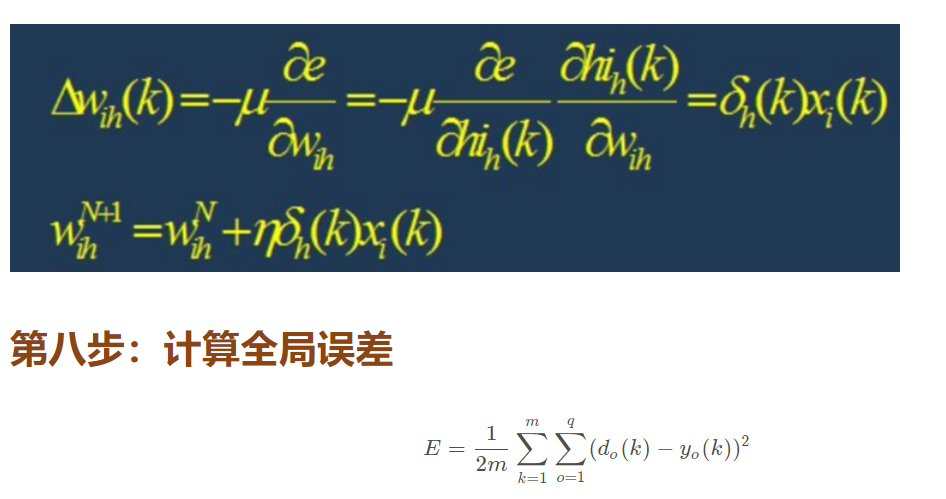

5 Specific process of BP network operation

5.1 Network structure

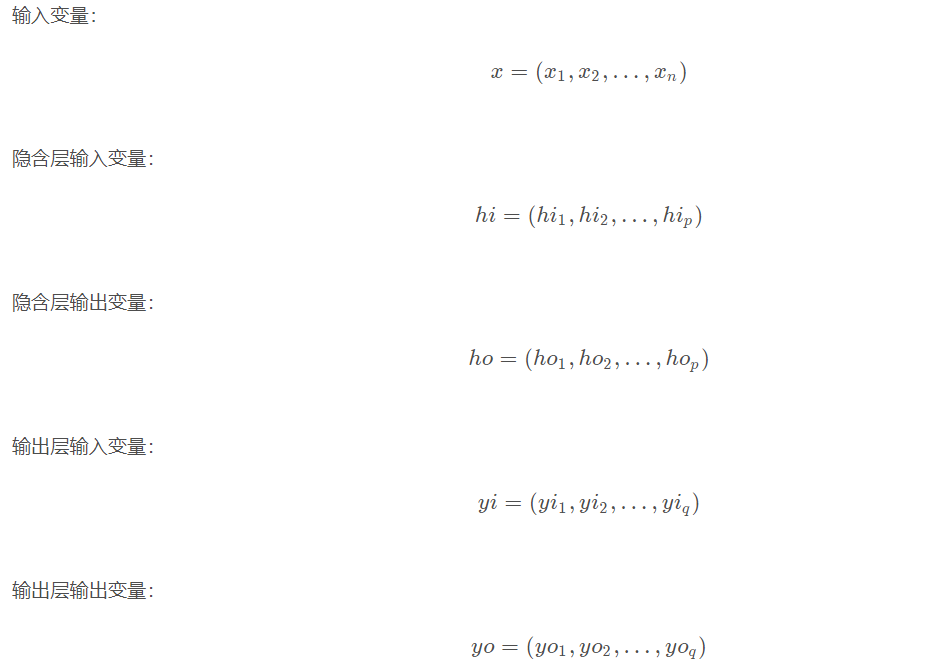

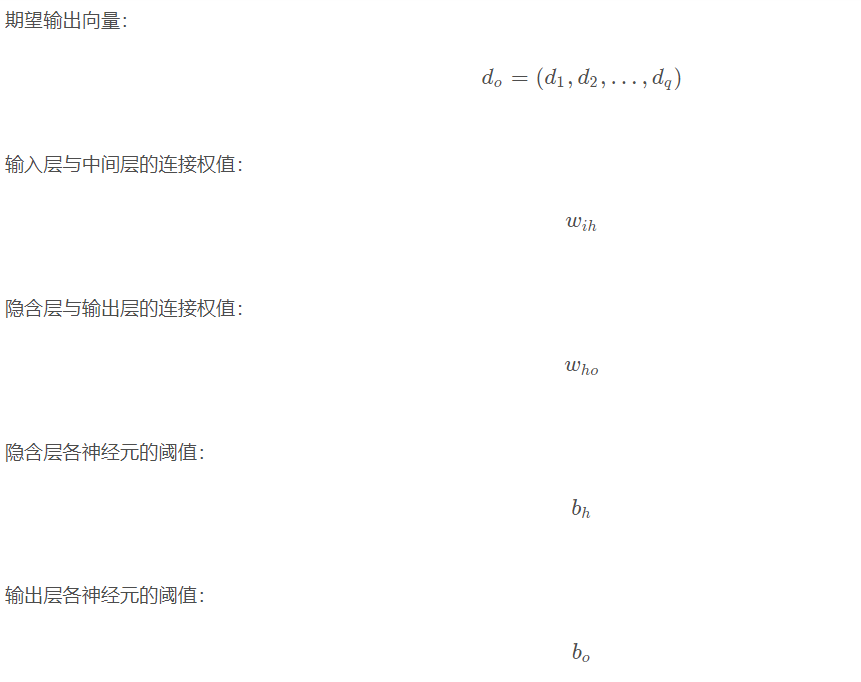

There are n neurons in the input layer, p neurons in the hidden layer and q neurons in the output layer.

5.2 Variable definition

Step 9: judge the rationality of the model

Judge whether the network error meets the requirements.

When the error reaches the preset accuracy, or the number of learning iterations exceeds the designed maximum, the algorithm ends.

Otherwise, select the next learning sample and its corresponding expected output, return to step 3, and enter the next round of learning.

6 Design of the BP network

In designing a BP network, we generally need to consider the number of layers, the number of neurons and the activation function in each layer, the initial values, and the learning rate. Some selection principles follow.

6.1 Number of network layers

It has been proved in theory that a network with biases, at least one S-type hidden layer, and a linear output layer can approximate any rational function. Increasing the number of layers can further reduce the error and improve accuracy, but it also makes the network more complex. In addition, there is no need to use a single-layer network that has only a nonlinear activation function: any problem that such a network can solve can also be solved by an adaptive linear network, which runs faster. For problems that can only be solved with nonlinear functions, a single layer is not accurate enough, and only adding layers achieves the desired result.

6.2 Number of neurons in the hidden layer

Training accuracy can also be improved by keeping a single hidden layer and increasing its number of neurons, which is structurally much simpler than adding layers. Generally speaking, we judge the quality of a neural network design by its accuracy and its training time:

(1) When there are too few neurons, the network cannot learn well, many training iterations are needed, and the training accuracy is low.

(2) When there are too many neurons, the network's fitting ability is stronger, but more training iterations are needed and overfitting may occur.

This gives the selection principle for the number of hidden-layer neurons: starting from the number that is just able to solve the problem, add one or two more neurons to speed up the decline of the error.

6.3 Selection of initial weights

Generally, the initial weights are random numbers in (−1, 1). In addition, after analyzing how a two-layer network trains a function, Widrow et al. proposed choosing initial weights with a magnitude on the order of the r-th root of S, where r is the number of inputs and S is the number of neurons in the first layer.
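One way this magnitude rule is commonly put into practice is the Nguyen-Widrow style initialization sketched here. This is an assumption about the method meant in the text, not something the text spells out: the factor 0.7, the row-wise rescaling, and the bias range are taken from the usual description of that method, and the sizes are made up.

```matlab
rng(0);
r = 3;  S = 8;                          % inputs and first-layer neurons (assumed sizes)
beta = 0.7 * S^(1/r);                   % target magnitude; 0.7 is the commonly quoted factor

W = 2*rand(S, r) - 1;                   % start from uniform values in [-1, 1]
rowNorm = sqrt(sum(W.^2, 2));           % Euclidean norm of each neuron's weight vector
W = beta * W ./ repmat(rowNorm, 1, r);  % rescale every row to length beta
b = beta * (2*rand(S, 1) - 1);          % biases spread over [-beta, beta]
```

With r = 3 and S = 8 the cube root of S is 2, so every neuron's weight vector ends up with length 1.4.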

6.4 Learning rate

The learning rate is generally chosen between 0.01 and 0.8. A large learning rate may make the system unstable, while a small learning rate makes convergence too slow and training time long. For more complex networks, different learning rates may be needed at different positions on the error surface. To reduce the number of training runs and the time spent searching for a learning rate, a more suitable approach is an adaptive learning rate, which sets different learning rates at different stages.
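A minimal sketch of one simple adaptive scheme: grow the learning rate while the error keeps falling, and shrink it (rejecting the step) when the error rises. The toy one-dimensional error function and the factors `incr` and `decr` are assumptions chosen for illustration, not values from the text.

```matlab
f    = @(w) (w - 3).^2;      % toy error surface (assumed for illustration)
grad = @(w) 2*(w - 3);

w = 0;  lr = 0.01;           % start from the low end of the usual range
incr = 1.05;  decr = 0.7;    % assumed adjustment factors
E = f(w);
for it = 1:200
    wNew = w - lr * grad(w);
    ENew = f(wNew);
    if ENew < E              % error fell: accept the step and speed up
        w = wNew;  E = ENew;
        lr = lr * incr;
    else                     % error did not fall: reject the step and slow down
        lr = lr * decr;
    end
end
fprintf('w = %.4f, lr = %.4f, E = %.2e\n', w, lr, E);
```

Because a step is only accepted when it lowers the error, the error never increases from one accepted step to the next, while the learning rate settles near the largest value the error surface tolerates.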

6.5 Selection of expected error

When designing the network, an appropriate expected error value should also be settled on through comparative training; what counts as appropriate is relative to the number of hidden-layer nodes required. In general, two networks with different expected error values can be trained at the same time, and one of them is finally chosen by weighing all factors.

7 Limitations of the BP network

The BP network has the following problems:

(1) Long training time: this is mainly caused by a learning rate that is too small, and can be improved with a varying or adaptive learning rate.

(2) Complete failure to train: this shows up mainly as network paralysis. To avoid it, one option is to choose smaller initial weights; another is to use a smaller learning rate.

(3) Local minima: the gradient descent method used here may converge to a local minimum. Better results may be obtained by using a multilayer network or more neurons.

8 Improvement of the BP network

The main goals of improving the BP algorithm are to speed up training and to avoid getting stuck in local minima. Common improvements include the momentum factor method, the adaptive learning rate, the varying learning rate, and the activation function contraction method. The basic idea of the momentum factor method is to add to each weight change, on top of back propagation, a value proportional to the previous weight change, and to generate the new weight change according to the back propagation method. The adaptive learning rate method targets certain specific problems. The principle of the varying learning rate method is that if the derivative of the objective function with respect to a weight keeps the same sign over several consecutive iterations, the learning rate of that weight is increased; if the sign flips, the learning rate is decreased. The activation function contraction method translates the activation function, that is, adds a constant to it.
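The momentum factor idea can be sketched on a single weight as follows. The toy error (w − 3)², the learning rate `lr`, and the momentum factor `mc` are assumptions for illustration; in a real network the same update would be applied to every weight matrix using its back-propagated gradient.

```matlab
grad = @(w) 2*(w - 3);        % gradient of a toy error (w - 3)^2, assumed for illustration
w = 0;  lr = 0.1;  mc = 0.9;  % mc: momentum factor (assumed value)
dw = 0;                       % previous weight change
for it = 1:300
    dw = mc * dw - lr * grad(w);   % new change = momentum term + gradient step
    w  = w + dw;
end
fprintf('w = %.6f\n', w);
```

The term `mc * dw` carries part of the previous change into the current one, which smooths the trajectory and can help the weight move through flat regions and shallow local minima.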

2, Source code

function varargout = untitled1(varargin)

% UNTITLED1 MATLAB code for untitled1.fig

% UNTITLED1, by itself, creates a new UNTITLED1 or raises the existing

% singleton*.

%

% H = UNTITLED1 returns the handle to a new UNTITLED1 or the handle to

% the existing singleton*.

%

% UNTITLED1('CALLBACK',hObject,eventData,handles,...) calls the local

% function named CALLBACK in UNTITLED1.M with the given input arguments.

%

% UNTITLED1('Property','Value',...) creates a new UNTITLED1 or raises the

% existing singleton*. Starting from the left, property value pairs are

% applied to the GUI before untitled1_OpeningFcn gets called. An

% unrecognized property name or invalid value makes property application

% stop. All inputs are passed to untitled1_OpeningFcn via varargin.

%

% *See GUI Options on GUIDE's Tools menu. Choose "GUI allows only one

% instance to run (singleton)".

%

% See also: GUIDE, GUIDATA, GUIHANDLES

% Edit the above text to modify the response to help untitled1

% Last Modified by GUIDE v2.5 03-Jun-2021 17:08:32

% Begin initialization code - DO NOT EDIT

gui_Singleton = 1;

gui_State = struct('gui_Name', mfilename, ...

'gui_Singleton', gui_Singleton, ...

'gui_OpeningFcn', @untitled1_OpeningFcn, ...

'gui_OutputFcn', @untitled1_OutputFcn, ...

'gui_LayoutFcn', [] , ...

'gui_Callback', []);

if nargin && ischar(varargin{1})

gui_State.gui_Callback = str2func(varargin{1});

end

if nargout

[varargout{1:nargout}] = gui_mainfcn(gui_State, varargin{:});

else

gui_mainfcn(gui_State, varargin{:});

end

% End initialization code - DO NOT EDIT

% --- Executes just before untitled1 is made visible.

function untitled1_OpeningFcn(hObject, eventdata, handles, varargin)

% This function has no output args, see OutputFcn.

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% varargin command line arguments to untitled1 (see VARARGIN)

% Choose default command line output for untitled1

handles.output = hObject;

% Update handles structure

guidata(hObject, handles);

% UIWAIT makes untitled1 wait for user response (see UIRESUME)

% uiwait(handles.figure1);

% --- Outputs from this function are returned to the command line.

function varargout = untitled1_OutputFcn(hObject, eventdata, handles)

% varargout cell array for returning output args (see VARARGOUT);

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% Get default command line output from handles structure

varargout{1} = handles.output;

% --- Executes during object creation, after setting all properties.

function wt_tu_CreateFcn(hObject, eventdata, handles)

% hObject handle to wt_tu (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles empty - handles not created until after all CreateFcns called

% Hint: place code in OpeningFcn to populate wt_tu

% --- Executes on button press in wt_buton.

function wt_buton_Callback(hObject, eventdata, handles)

% hObject handle to wt_buton (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

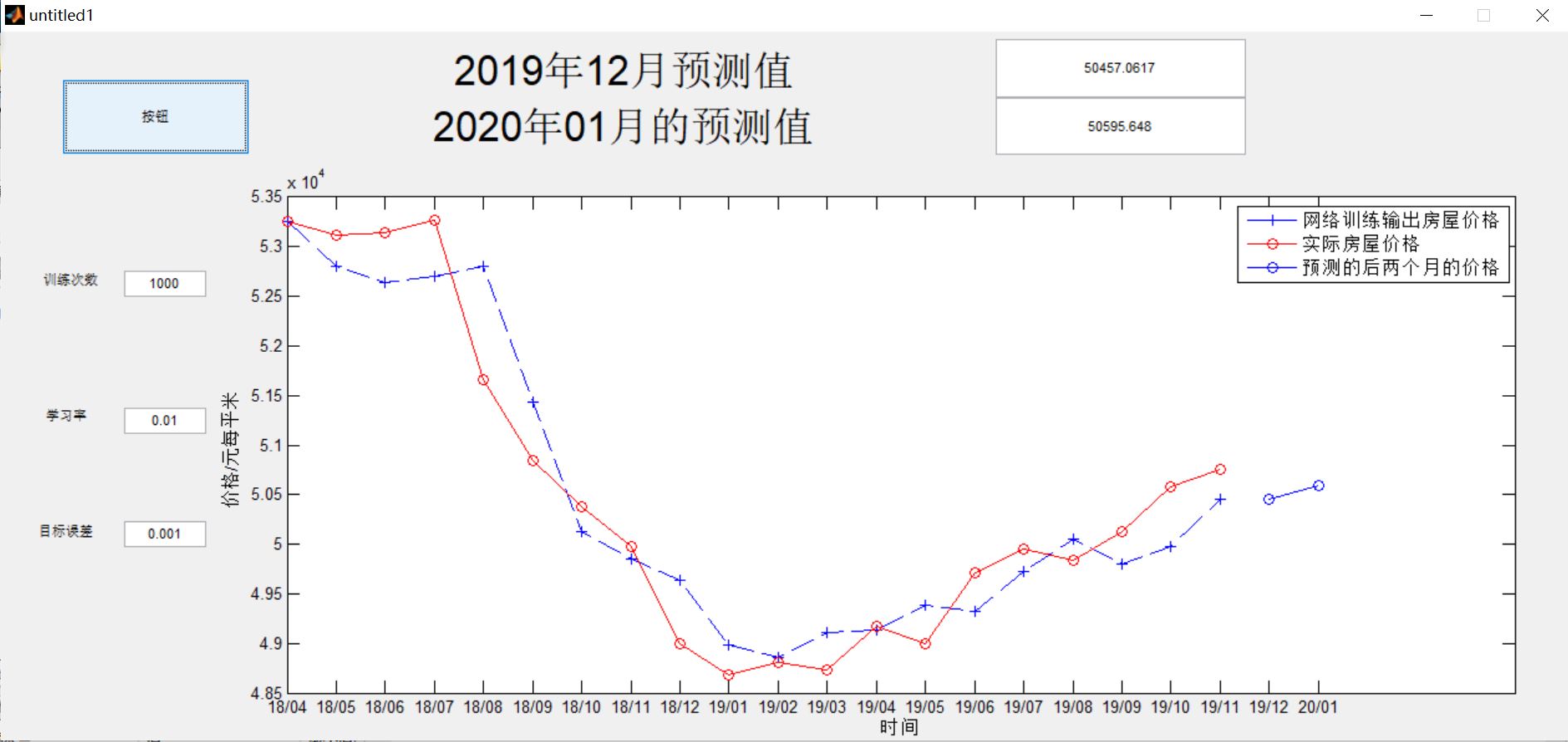

TestSamNum = 20; % Number of training samples

ForcastSamNum = 2; % Number of samples to forecast

HiddenUnitNum = 8; % Number of hidden-layer units

InDim = 3; % Input dimension (number of input-layer units)

OutDim = 1; % Output dimension (number of output-layer units)

% raw data

% Average house price in Pudong, January 2018 to August 2019 (unit: yuan per square metre)

s1 = [54344 54310 53949 53250 53122 53148 53266 51665 50844 50379 49971 48993 48678 48809 48732 49166 48996 49714 49955 49841 ];

% Average house price in Pudong, February 2018 to September 2019 (unit: yuan per square metre)

s2 = [54310 53949 53250 53122 53148 53266 51665 50844 50379 49971 48993 48678 48809 48732 49166 48996 49714 49955 49841 50133];

% Average house price in Pudong, March 2018 to October 2019 (unit: yuan per square metre)

s3 = [53949 53250 53122 53148 53266 51665 50844 50379 49971 48993 48678 48809 48732 49166 48996 49714 49955 49841 50133 50585];

% Average house price in Pudong, April 2018 to November 2019 (unit: yuan per square metre)

gl = [ 53250 53122 53148 53266 51665 50844 50379 49971 48993 48678 48809 48732 49166 48996 49714 49955 49841 50133 50585 50749];

p = [s1; s2; s3]; % Input data matrix

t = gl; % Target data matrix

[SamIn, minp, maxp, tn, mint, maxt] = premnmx(p, t); % Normalize the input and target samples to [-1, 1]

SamOut = tn; % Output sample

MaxEpochs = str2double(get(handles.edit2,'String')); % Maximum number of training epochs

lr =str2double(get(handles.edit3,'String')); % Learning rate

E0 = str2double(get(handles.edit4,'String')); % Target error

rng('default');

W1 = rand(HiddenUnitNum, InDim); % Initial weights between the input layer and the hidden layer

B1 = rand(HiddenUnitNum, 1); % Initial thresholds (biases) of the hidden layer

W2 = rand(OutDim, HiddenUnitNum); % Initial weights between the hidden layer and the output layer

B2 = rand(OutDim, 1); % Initial thresholds (biases) of the output layer

ErrHistory = zeros(MaxEpochs, 1);

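for i = 1 : MaxEpochs
    HiddenOut = logsig(W1*SamIn + repmat(B1, 1, TestSamNum)); % Hidden-layer output
    NetworkOut = W2*HiddenOut + repmat(B2, 1, TestSamNum); % Output-layer (linear) output
    Error = SamOut - NetworkOut; % Difference between target output and network output
    SSE = sumsqr(Error); % Energy function (sum of squared errors)
    ErrHistory(i) = SSE;
    if SSE < E0
        break; % Target error reached
    end
    % Assumed continuation (the listing breaks off here): a standard batch
    % gradient-descent update for this logsig-hidden, linear-output network.
    Delta2 = Error; % Output-layer error signal
    Delta1 = (W2' * Delta2) .* HiddenOut .* (1 - HiddenOut); % Hidden-layer error signal
    W2 = W2 + lr * Delta2 * HiddenOut';
    B2 = B2 + lr * sum(Delta2, 2);
    W1 = W1 + lr * Delta1 * SamIn';
    B1 = B1 + lr * sum(Delta1, 2);
end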

3, Operation results

4, Remarks

Version: 2014a