In the previous version, Netty was used to implement a simple point-to-point RPC. The service address had to be configured manually, which made it quite restrictive.

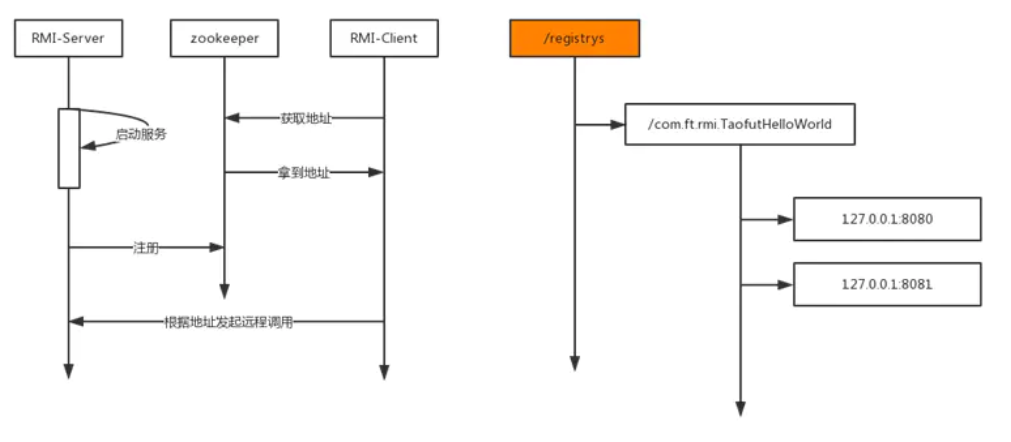

In this article, ZooKeeper serves as the service registry: each server registers its local service information in ZooKeeper at startup. When a client makes a remote call, it looks up the address of the service in ZooKeeper and then uses Netty to communicate with that address.

The connection between the server and the registry has to be monitored, and the registry's service information must be updated when a service changes or the network connection fails. This article adds a service registration monitoring center that uses a heartbeat mechanism to decide whether the connection to a server is stable. When the connection becomes unstable, the services belonging to that server are deleted from the registry. In this project, a server is treated as unstable if it misses a heartbeat three times within five minutes.

The heartbeat mechanism was described in a previous article: Dubbo Heartbeat Mechanism

ZooKeeper registry

ZooKeeper is an important component of the Hadoop ecosystem and serves primarily as a distributed coordination service.

ZooKeeper uses a tree-shaped node data model, similar to the Linux file system.

Each node is called a ZNode. A ZNode is uniquely identified by its path and can store a small amount of data.

The registry in this project is modeled on the Dubbo registry.

Overall, a four-level node tree is designed. The first-level node is the persistent node /register, which represents the area where registered services are recorded. The second-level node is the service interface name. The third-level node is the IP address of the remote service; it is an ephemeral node, and the data it stores is the name of the concrete implementation class.
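
The layout described above can be sketched in plain Java. This is only an illustration, not the project's code: the class name `RegistryPaths`, the literal root `/register`, and the `ip:port` form of the address node are assumptions (the port is inferred from the lookup code in this article, which splits the node name on ":").

```java
// Hypothetical helper; the names and the "/register" root are assumptions for illustration.
final class RegistryPaths {
    static final String ROOT = "/register";

    private RegistryPaths() {}

    /** Path of the node that groups all providers of one service interface. */
    static String servicePath(String interfaceName) {
        return ROOT + "/" + interfaceName;
    }

    /** Path of the ephemeral node that represents one provider of that interface. */
    static String providerPath(String interfaceName, String host, int port) {
        return servicePath(interfaceName) + "/" + host + ":" + port;
    }

    /** Splits an "ip:port" node name back into host and port strings. */
    static String[] parseAddress(String nodeName) {
        int idx = nodeName.lastIndexOf(':');
        if (idx <= 0 || idx == nodeName.length() - 1) {
            throw new IllegalArgumentException("Expected ip:port but got: " + nodeName);
        }
        return new String[] { nodeName.substring(0, idx), nodeName.substring(idx + 1) };
    }
}
```

For example, `RegistryPaths.providerPath("com.demo.HelloService", "192.168.1.10", 8080)` yields `/register/com.demo.HelloService/192.168.1.10:8080`, where the interface name and address are made-up values.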

On the client side, the service interface name is looked up in the registry to obtain the IP address of the remote service, and the implementation class name stored in that node is used to invoke the service through reflection.
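
How an implementation class name can be turned into a method call through reflection is sketched below. The class `ReflectiveInvoker` and its method signature are hypothetical, and the sketch assumes the implementation class has a public no-argument constructor; the project's actual invocation code may differ.

```java
import java.lang.reflect.Method;

// Hypothetical sketch: instantiate a class by name and invoke one of its public methods.
final class ReflectiveInvoker {
    private ReflectiveInvoker() {}

    static Object invoke(String implClassName, String methodName,
                         Class<?>[] paramTypes, Object[] args) throws Exception {
        // Load the class named in the registry node data
        Class<?> implClass = Class.forName(implClassName);
        // Assumes a public no-argument constructor
        Object target = implClass.getDeclaredConstructor().newInstance();
        // Look up the public method by name and parameter types, then call it
        Method method = implClass.getMethod(methodName, paramTypes);
        return method.invoke(target, args);
    }
}
```

As a stand-in for a real service class, `ReflectiveInvoker.invoke("java.lang.StringBuilder", "append", new Class<?>[] { String.class }, new Object[] { "hi" })` creates a `StringBuilder` and appends the string to it.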

ZooKeeper is initialized using the CuratorFramework classes

    private static void init() {
        // Retry a fixed number of times, sleeping between attempts
        RetryPolicy retryPolicy = new RetryNTimes(ZKConsts.RETRYTIME, ZKConsts.SLEEP_MS_BEWTEENR_RETRY);
        client = CuratorFrameworkFactory.builder()
                .connectString(ZKConsts.ZK_SERVER_PATH)
                .sessionTimeoutMs(ZKConsts.SESSION_TIMEOUT_MS)
                .retryPolicy(retryPolicy)
                // Paths used with this client are relative to this namespace
                .namespace(ZKConsts.WORK_SPACE)
                .build();
        client.start();
    }

Service registration code

    public static void register(URL url) {
        try {
            String interfaceName = url.getInterfaceName();
            String implClassName = url.getImplClassName();
            String path = getPath(interfaceName, url.toString());
            // Delete the node first if it is already registered
            Stat stat = client.checkExists().forPath(path);
            if (stat != null) {
                System.out.println("The node already exists!");
                client.delete().forPath(path);
            }
            client.create()
                    .creatingParentsIfNeeded()
                    // Ephemeral node: removed when the session that created it ends
                    .withMode(CreateMode.EPHEMERAL)
                    // Open ACL: any connected client can operate on the node
                    .withACL(ZooDefs.Ids.OPEN_ACL_UNSAFE)
                    // The node data is the implementation class name
                    .forPath(path, implClassName.getBytes());
            System.out.println(path);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

Get the remote service connection address based on the service interface name

    public static URL random(String interfaceName) {
        try {
            System.out.println("Start looking for service nodes:" + getPath(interfaceName));
            // Each child node name is a provider address in ip:port form
            List<String> urlList = client.getChildren().forPath("/" + interfaceName);
            System.out.println("Result:" + urlList);
            if (urlList.isEmpty()) {
                // No provider is registered for this interface
                return null;
            }
            // This version always uses the first provider in the list
            String serviceUrl = urlList.get(0);
            String[] urls = serviceUrl.split(":");
            String implClassName = get(interfaceName, serviceUrl);
            return new URL(urls[0], Integer.parseInt(urls[1]), interfaceName, implClassName);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }

Once connected to a server, the registry needs to determine whether the connection remains stable, and delete that server's services from the registry if the server goes down.

The existing mechanisms for this are sessions and watchers.

session mechanism

The ZooKeeper registry creates a session for each server that connects to it. Within the configured session timeout, the server and the registry exchange heartbeat packets periodically, so that the registry can detect whether a client has gone down. When the session that created an ephemeral ZNode is destroyed, the registry deletes that ephemeral node.

watcher mechanism

A watcher can be registered on a node to monitor operations on it, and when a monitored node changes, a watcher event is triggered. A registry watcher is one-time: it is destroyed once it has been triggered, so it must be registered again before the next change can be observed. A watcher can be triggered by additions and deletions of both the parent node and its child nodes.
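
The one-time behavior can be illustrated with a small plain-Java simulation. This is not the ZooKeeper or Curator API; `OneShotWatchers` and its methods are hypothetical names used only to show why a watcher has to be registered again after it fires.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Plain-Java simulation of one-time watchers; this is NOT the ZooKeeper API.
final class OneShotWatchers {
    private final Map<String, List<Consumer<String>>> watchers = new HashMap<>();

    /** Registers a watcher that fires at most once for the given path. */
    void watch(String path, Consumer<String> watcher) {
        watchers.computeIfAbsent(path, k -> new ArrayList<>()).add(watcher);
    }

    /**
     * Simulates a change on the path: the registered watchers are removed first
     * and then notified. Returns the number of watchers that were notified.
     */
    int fireChange(String path) {
        List<Consumer<String>> fired = watchers.remove(path);
        if (fired == null) {
            return 0;
        }
        for (Consumer<String> watcher : fired) {
            watcher.accept(path);
        }
        return fired.size();
    }
}
```

After one call to `fireChange` for a path, a second call notifies nobody until `watch` is called again. Because the watchers are removed before they are notified, a callback can re-register itself if it wants to keep listening.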

Heartbeat mechanism in this article

The server periodically sends its local address to the registry as a heartbeat packet. The registry monitor maintains a map from channelId to address and listens for idle events through the IdleHandler; a channel that reaches a certain number of idle events is treated as inactive. When a channel is inactive, the corresponding url node is deleted from ZooKeeper. This version implements the above; further steps are left for a later version.
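
The bookkeeping on the monitor side might look like the following sketch. It is an assumption-laden illustration: `HeartbeatTable` is a hypothetical class, channel ids are represented as plain strings instead of Netty channel ids, and the actual deletion of the ZooKeeper node is left to the caller.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of the monitor's channelId -> address bookkeeping.
final class HeartbeatTable {
    private final Map<String, String> addressByChannel = new ConcurrentHashMap<>();

    /** Records the address carried by a heartbeat received on the given channel. */
    void onHeartbeat(String channelId, String address) {
        addressByChannel.put(channelId, address);
    }

    /**
     * Forgets an inactive channel and returns the address whose registry node
     * should be deleted, or null if the channel never sent a heartbeat.
     */
    String onInactive(String channelId) {
        return addressByChannel.remove(channelId);
    }

    /** Number of channels currently tracked. */
    int size() {
        return addressByChannel.size();
    }
}
```

A `ConcurrentHashMap` is used here because heartbeats and idle events for different channels may be handled on different threads.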

If no read is triggered within 10 seconds, the userEventTriggered method is executed. If three idle events occur within five minutes, the connection is considered unstable and the registry removes the services belonging to that server. The criteria for instability can be adjusted to suit actual conditions.
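
The counting rule above can be isolated from Netty and tested on its own. The sketch below is a simplified variant, not the handler used in this project: `InactivityWindow` is a hypothetical class, the threshold and window length are constructor parameters, and the current time is passed in so that the logic can be exercised without waiting.

```java
// Hypothetical sketch of an "N idle events within a time window" rule.
final class InactivityWindow {
    private final int maxIdleEvents;
    private final long windowMillis;
    private int idleCount;
    private long windowStart;

    InactivityWindow(int maxIdleEvents, long windowMillis) {
        this.maxIdleEvents = maxIdleEvents;
        this.windowMillis = windowMillis;
    }

    /**
     * Records one idle event observed at nowMillis. Returns true when the
     * number of idle events in the current window reaches the threshold.
     */
    boolean onIdle(long nowMillis) {
        if (idleCount == 0 || nowMillis - windowStart >= windowMillis) {
            // First event, or the previous window has expired: start a new window
            windowStart = nowMillis;
            idleCount = 0;
        }
        idleCount++;
        return idleCount >= maxIdleEvents;
    }
}
```

With `new InactivityWindow(3, 5 * 60 * 1000L)`, the third idle event inside a five-minute window returns `true`, while an event that arrives after the window has expired starts a fresh count.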

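    // Runs every 60 seconds after an initial 60-second delay
    service.scheduleAtFixedRate(() -> {
        if (future.channel().isActive()) {
            // Draws 0..4; the heartbeat is sent only when the value is 4
            int time = new Random().nextInt(5);
            log.info("Random number for this timed task:{}", time);
            if (time > 3) {
                log.info("Send the local address to the registry:{}", url);
                future.channel().writeAndFlush(url);
            }
        }
    }, 60, 60, TimeUnit.SECONDS);

Idle event handling on the registry monitor side

    @Override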
    public void userEventTriggered(ChannelHandlerContext ctx, Object evt) throws Exception {
        if (evt instanceof IdleStateEvent) {
            IdleStateEvent state = (IdleStateEvent) evt;
            if (state.state() == IdleState.READER_IDLE) {
                log.info("Read idle");
            } else if (state.state() == IdleState.WRITER_IDLE) {
                log.info("Write idle");
            } else if (state.state() == IdleState.ALL_IDLE) {
                // Only events where both reading and writing are idle count toward closing the connection
                if (++inActiveCount == 1) {
                    // First idle event of a new counting window
                    start = System.currentTimeMillis();
                }
                // 1-based minute within the current window
                int minute = (int) ((System.currentTimeMillis() - start) / (60 * 1000)) + 1;
                log.info("Read/write idle event no. {}, minute {} of the window", inActiveCount, minute);
                if (inActiveCount > 2 && minute <= 5) {
                    // Third idle event within five minutes: treat the server as unstable
                    log.info("Remove inactive ip");
                    removeAndClose(ctx);
                } else if (minute > 5) {
                    // The window has elapsed without reaching the limit: start counting again
                    log.info("New cycle start");
                    start = 0;
                    inActiveCount = 0;
                }
            }
        }
    }

Specific implementation code: RPC Second Edition