4. Network Programming (Netty: Getting Started and Advanced, Part 4) ❤❤❤

4.1 Non-blocking vs. blocking

Blocking

- In blocking mode, the relevant methods suspend the calling thread

- ServerSocketChannel.accept suspends the thread when no connection has been established

- SocketChannel.read suspends the thread when there is no data to read

- Blocking shows up as a suspended thread: while suspended it does not occupy the CPU, but it is effectively idle

- In a single thread, blocking methods interfere with each other (for example, while blocked in read on one connection, the thread cannot accept new ones), so the server can hardly work correctly and needs multiple threads

- However, multithreading brings new problems of its own:

- Each thread's stack takes 320 KB on a 32-bit JVM and 1024 KB on a 64-bit JVM, so too many connections inevitably lead to OOM; and with too many threads, frequent context switching degrades performance instead

- A thread pool can reduce the number of threads and context switches, but it treats the symptom rather than the cause. If many connections are established but stay inactive for a long time, every thread in the pool ends up blocked. A pool therefore suits short-lived connections, not long-lived ones

Server side

// Using nio to understand blocking mode, single thread

// 0. ByteBuffer

ByteBuffer buffer = ByteBuffer.allocate(16);

// 1. Create the server

ServerSocketChannel ssc = ServerSocketChannel.open();

// 2. Bind listening port

ssc.bind(new InetSocketAddress(8080));

// 3. List of connections

List<SocketChannel> channels = new ArrayList<>();

while (true) {

// 4. accept establishes the connection; the returned SocketChannel is used to communicate with the client

log.debug("connecting...");

SocketChannel sc = ssc.accept(); // Blocking method, thread stops running

log.debug("connected... {}", sc);

channels.add(sc);

for (SocketChannel channel : channels) {

// 5. Receive the data sent by the client

log.debug("before read... {}", channel);

channel.read(buffer); // Blocking method, thread stops running

buffer.flip();

debugRead(buffer);

buffer.clear();

log.debug("after read...{}", channel);

}

}

Client

SocketChannel sc = SocketChannel.open();

sc.connect(new InetSocketAddress("localhost", 8080));

System.out.println("waiting...");

Non-blocking

- In non-blocking mode, the relevant methods do not suspend the thread

- When no connection has been established, ServerSocketChannel.accept returns null and the thread keeps running

- SocketChannel.read returns 0 when there is no data to read, and the thread is not blocked, so it can go on to read other SocketChannels or call ServerSocketChannel.accept

- When writing, the thread only waits for the data to be copied into the Channel; it does not wait for the Channel to send it over the network

- However, in non-blocking mode the thread keeps running even when there are no connections and no readable data, wasting CPU for nothing

- While data is actually being copied, the thread is still blocked (this is what AIO improves on)
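A minimal sketch of these return values, assuming an ephemeral port on localhost (port 0, so it does not clash with 8080) and a hypothetical class name `NonBlockingDemo`: `accept()` returns null before any client exists, and `read()` on an idle connection returns 0. The polling loop that waits for the connection is exactly the kind of CPU-burning work described above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class NonBlockingDemo {
    // Returns {1 if accept() returned null before any client connected, value returned by read() on an idle connection}
    static int[] run() {
        try (ServerSocketChannel ssc = ServerSocketChannel.open()) {
            ssc.bind(new InetSocketAddress("localhost", 0)); // port 0: let the OS pick a free port
            ssc.configureBlocking(false);

            // No client yet: accept returns null instead of suspending the thread
            boolean acceptWasNull = ssc.accept() == null;

            try (SocketChannel client = SocketChannel.open(ssc.getLocalAddress())) {
                SocketChannel sc;
                // Busy-wait until the connection shows up: the wasted CPU described above
                while ((sc = ssc.accept()) == null) {
                    Thread.yield();
                }
                try (SocketChannel accepted = sc) {
                    accepted.configureBlocking(false);
                    // The client has sent nothing, so read returns 0 immediately
                    int read = accepted.read(ByteBuffer.allocate(16));
                    return new int[] {acceptWasNull ? 1 : 0, read};
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] result = run();
        System.out.println("accept() returned null: " + (result[0] == 1));
        System.out.println("read() returned: " + result[1]);
    }
}
```

The busy-wait loop is deliberate here; it is the cost that the Selector removes.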

Server side (the client code is unchanged)

// Use nio to understand non blocking mode, single thread

// 0. ByteBuffer

ByteBuffer buffer = ByteBuffer.allocate(16);

// 1. Create the server

ServerSocketChannel ssc = ServerSocketChannel.open();

ssc.configureBlocking(false); // Non blocking mode

// 2. Bind listening port

ssc.bind(new InetSocketAddress(8080));

// 3. List of connections

List<SocketChannel> channels = new ArrayList<>();

while (true) {

// 4. accept establishes a connection with the client, and the SocketChannel is used to communicate with the client

SocketChannel sc = ssc.accept(); // Non blocking, the thread will continue to run if no connection is established, but sc is null

if (sc != null) {

log.debug("connected... {}", sc);

sc.configureBlocking(false); // Non blocking mode

channels.add(sc);

}

for (SocketChannel channel : channels) {

// 5. Receive the data sent by the client

int read = channel.read(buffer);// Non blocking, the thread will continue to run. If no data is read, read returns 0

if (read > 0) {

buffer.flip();

debugRead(buffer);

buffer.clear();

log.debug("after read...{}", channel);

}

}

}

Multiplexing

A single thread can use a Selector to monitor read and write events on multiple Channels; this is called multiplexing

- Multiplexing applies only to network IO; ordinary file IO cannot use it

- In non-blocking mode without a Selector, the thread spends most of its time doing useless work. A Selector ensures that the thread:

- Connects only when there is a connectable event

- Reads only when there is a readable event

- Writes only when there is a writable event

- Because network transmission capacity is limited, a Channel is not always writable; as soon as it becomes writable, the Selector fires a writable event
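One way to watch multiplexing without writing a client is `java.nio.channels.Pipe`, whose source end is a `SelectableChannel`. In this sketch (the class name `MultiplexDemo` and the "a"/"b" labels are hypothetical), one Selector watches two pipes, data is written to only one of them, and select reports just that channel.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

class MultiplexDemo {
    // Returns "<select count>:<labels of the readable channels>"
    static String readyChannels() {
        try (Selector selector = Selector.open()) {
            Pipe a = Pipe.open();
            Pipe b = Pipe.open();
            try {
                a.source().configureBlocking(false); // must be non-blocking to register
                b.source().configureBlocking(false);
                // The attachment is only a label to tell the channels apart
                a.source().register(selector, SelectionKey.OP_READ, "a");
                b.source().register(selector, SelectionKey.OP_READ, "b");

                // Only pipe "b" receives data
                b.sink().write(StandardCharsets.UTF_8.encode("hi"));

                // One thread waits on both channels at once
                int count = selector.select(1000);
                StringBuilder names = new StringBuilder();
                for (SelectionKey key : selector.selectedKeys()) {
                    if (key.isReadable()) {
                        names.append(key.attachment());
                    }
                }
                selector.selectedKeys().clear();
                return count + ":" + names;
            } finally {
                a.source().close();
                a.sink().close();
                b.source().close();
                b.sink().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readyChannels()); // 1:b
    }
}
```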

4.2 Selector

Benefits

- When a thread works with a Selector, it can monitor events on multiple channels and handles them only after they occur, avoiding the useless work of plain non-blocking mode

- The thread is fully utilized

- Fewer threads are needed

- Thread context switching is reduced

Creating a Selector

Selector selector = Selector.open();

Registering Channel events

This is also called registering an event; the selector only cares about events that have been registered

channel.configureBlocking(false);

SelectionKey key = channel.register(selector, ops); // ops: the event(s) to register, e.g. SelectionKey.OP_READ

- The channel must be in non-blocking mode

- FileChannel has no non-blocking mode, so it cannot be used with a Selector

- The event types that can be registered are:

- connect: fires on the client when the connection has been established

- accept: fires on the server when a connection is ready to be accepted

- read: fires when data can be read in (data cannot always be read right away, because the receiving capacity is limited)

- write: fires when data can be written out (data cannot always be written right away, because the sending capacity is limited)
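The two registration rules above can be checked directly. This sketch (class name hypothetical) shows that `register` throws `IllegalBlockingModeException` for a channel still in blocking mode, and uses `validOps()` to list which of the events each channel type supports; registering an event outside `validOps()` throws `IllegalArgumentException`.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.IllegalBlockingModeException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class RegisterRulesDemo {
    // true if registering a channel that is still in blocking mode is rejected
    static boolean blockingRegisterRejected() {
        try (Selector selector = Selector.open();
             ServerSocketChannel ssc = ServerSocketChannel.open()) {
            try {
                ssc.register(selector, SelectionKey.OP_ACCEPT); // channels start in blocking mode
                return false;
            } catch (IllegalBlockingModeException expected) {
                return true;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // validOps() reports which events a channel type can be registered for
    static int[] validOps() {
        try (ServerSocketChannel ssc = ServerSocketChannel.open();
             SocketChannel sc = SocketChannel.open()) {
            return new int[] {ssc.validOps(), sc.validOps()};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("blocking register rejected: " + blockingRegisterRejected());
        int[] ops = validOps();
        System.out.println("server: only OP_ACCEPT? " + (ops[0] == SelectionKey.OP_ACCEPT));
        System.out.println("socket: CONNECT|READ|WRITE? "
                + (ops[1] == (SelectionKey.OP_CONNECT | SelectionKey.OP_READ | SelectionKey.OP_WRITE)));
    }
}
```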

Listening for Channel events

You can listen for events with any of the following three methods. The return value is the number of channels on which events occurred

Method 1: block until a registered event occurs

int count = selector.select();

Method 2: block until a registered event occurs or the timeout expires (in milliseconds)

int count = selector.select(timeout); // timeout is a long

Method 3: do not block; return immediately whether or not an event occurred, and check the return value to see if there was one

int count = selector.selectNow();
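A quick sketch of how the variants differ when nothing can happen (no channels are registered; the class name and the 200 ms timeout are arbitrary). Note that `select(0)` is not a non-blocking call: per the Javadoc a timeout of 0 blocks indefinitely, which is why `selectNow()` exists.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Selector;

class SelectVariantsDemo {
    // Returns {selectNow() result, select(200) result, milliseconds that select(200) waited}
    static long[] run() {
        try (Selector selector = Selector.open()) {
            int now = selector.selectNow();   // returns immediately
            long start = System.nanoTime();
            int timed = selector.select(200); // nothing registered, so it waits out the timeout
            long waitedMs = (System.nanoTime() - start) / 1_000_000;
            return new long[] {now, timed, waitedMs};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        long[] r = run();
        System.out.printf("selectNow=%d select(200)=%d waited about %d ms%n", r[0], r[1], r[2]);
    }
}
```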

💡 When select does not block

- When an event occurs:

- When a client initiates a connection request, an accept event fires

- A read event fires when the client sends data, and also when the client closes normally or abnormally; if the data sent is larger than the buffer, multiple read events fire

- If the channel is writable, a write event fires

- When the JDK NIO epoll bug occurs on Linux (select returns immediately even though no event occurred)

- When selector.wakeup() is called

- When selector.close() is called

- When the thread running the selector is interrupted
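The `wakeup()` case is the one the multi-threaded example in 4.6 relies on. In this sketch (class name hypothetical) no channel is registered, so each `select()` can only return because of `wakeup()`: once when `wakeup()` was called beforehand, and once when another thread calls it after 100 ms (an arbitrary delay).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Selector;

class WakeupDemo {
    // Returns the results of two select() calls that can only return because of wakeup()
    static int[] run() {
        try (Selector selector = Selector.open()) {
            // 1. wakeup() before select(): the next select() returns immediately
            selector.wakeup();
            int first = selector.select();

            // 2. wakeup() from another thread while select() is blocked
            Thread waker = new Thread(() -> {
                try {
                    Thread.sleep(100);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                selector.wakeup();
            });
            waker.start();
            int second = selector.select(); // without wakeup() this would block forever
            return new int[] {first, second};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("both select() calls returned: " + r[0] + ", " + r[1]);
    }
}
```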

4.3 Handling accept events

Client code:

public class Client {

public static void main(String[] args) {

try (Socket socket = new Socket("localhost", 8080)) {

System.out.println(socket);

socket.getOutputStream().write("world".getBytes());

System.in.read();

} catch (IOException e) {

e.printStackTrace();

}

}

}

Server code:

@Slf4j

public class ChannelDemo6 {

public static void main(String[] args) {

try (ServerSocketChannel channel = ServerSocketChannel.open()) {

channel.bind(new InetSocketAddress(8080));

System.out.println(channel);

Selector selector = Selector.open();

channel.configureBlocking(false);

channel.register(selector, SelectionKey.OP_ACCEPT);

while (true) {

int count = selector.select();

// int count = selector.selectNow();

log.debug("select count: {}", count);

// if(count <= 0) {

// continue;

// }

// Get all events

Set<SelectionKey> keys = selector.selectedKeys();

// Traverse all events and handle them one by one

Iterator<SelectionKey> iter = keys.iterator();

while (iter.hasNext()) {

SelectionKey key = iter.next();

// Judge event type

if (key.isAcceptable()) {

ServerSocketChannel c = (ServerSocketChannel) key.channel();

// Must handle

SocketChannel sc = c.accept();

log.debug("{}", sc);

}

// After processing, the event must be removed

iter.remove();

}

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

💡 Can an event be left unhandled?

After an event occurs, you must either handle it or cancel it; you cannot simply ignore it. Otherwise the same event fires again on the next select, because NIO uses level triggering underneath
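Level triggering can be observed with a `Pipe` (sketch, class name hypothetical): data is written but never read, the selected key is cleared without handling it, and the next select reports the same channel again. Cancelling the key is the other legitimate choice, and it makes the event disappear.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

class LevelTriggerDemo {
    // Returns {first select count, second select count after ignoring the data, selectNow count after cancel}
    static int[] run() {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try {
                pipe.source().configureBlocking(false);
                SelectionKey key = pipe.source().register(selector, SelectionKey.OP_READ);
                pipe.sink().write(StandardCharsets.UTF_8.encode("data"));

                int first = selector.select(1000);
                selector.selectedKeys().clear(); // "processed" the key but never read the data

                // The unread data keeps the channel readable, so the same event is reported again
                int second = selector.select(1000);
                selector.selectedKeys().clear();

                // Cancelling is the other legitimate choice: the key is dropped at the next selection
                key.cancel();
                int afterCancel = selector.selectNow();
                return new int[] {first, second, afterCancel};
            } finally {
                pipe.source().close();
                pipe.sink().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println(r[0] + " " + r[1] + " " + r[2]); // 1 1 0
    }
}
```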

4.4 Handling read events

@Slf4j

public class ChannelDemo6 {

public static void main(String[] args) {

try (ServerSocketChannel channel = ServerSocketChannel.open()) {

channel.bind(new InetSocketAddress(8080));

System.out.println(channel);

Selector selector = Selector.open();

channel.configureBlocking(false);

channel.register(selector, SelectionKey.OP_ACCEPT);

while (true) {

int count = selector.select();

// int count = selector.selectNow();

log.debug("select count: {}", count);

// if(count <= 0) {

// continue;

// }

// Get all events

Set<SelectionKey> keys = selector.selectedKeys();

// Traverse all events and handle them one by one

Iterator<SelectionKey> iter = keys.iterator();

while (iter.hasNext()) {

SelectionKey key = iter.next();

// Judge event type

if (key.isAcceptable()) {

ServerSocketChannel c = (ServerSocketChannel) key.channel();

// Must handle

SocketChannel sc = c.accept();

sc.configureBlocking(false);

sc.register(selector, SelectionKey.OP_READ);

log.debug("Connection established: {}", sc);

} else if (key.isReadable()) {

SocketChannel sc = (SocketChannel) key.channel();

ByteBuffer buffer = ByteBuffer.allocate(128);

int read = sc.read(buffer);

if(read == -1) {

key.cancel();

sc.close();

} else {

buffer.flip();

debug(buffer);

}

}

// After processing, the event must be removed

iter.remove();

}

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

Start two clients and change the text each one sends; the output is:

sun.nio.ch.ServerSocketChannelImpl[/0:0:0:0:0:0:0:0:8080]

21:16:39 [DEBUG] [main] c.i.n.ChannelDemo6 - select count: 1

21:16:39 [DEBUG] [main] c.i.n.ChannelDemo6 - Connection established: java.nio.channels.SocketChannel[connected local=/127.0.0.1:8080 remote=/127.0.0.1:60367]

21:16:39 [DEBUG] [main] c.i.n.ChannelDemo6 - select count: 1

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 68 65 6c 6c 6f |hello |

+--------+-------------------------------------------------+----------------+

21:16:59 [DEBUG] [main] c.i.n.ChannelDemo6 - select count: 1

21:16:59 [DEBUG] [main] c.i.n.ChannelDemo6 - Connection established: java.nio.channels.SocketChannel[connected local=/127.0.0.1:8080 remote=/127.0.0.1:60378]

21:16:59 [DEBUG] [main] c.i.n.ChannelDemo6 - select count: 1

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 77 6f 72 6c 64 |world |

+--------+-------------------------------------------------+----------------+

💡 Why iter.remove()

When select detects events, it adds the corresponding keys to the selectedKeys set, but it never removes them after they are processed; we have to remove them ourselves. For example:

- The first select triggers an accept event on sscKey, and sscKey is not removed

- The second select triggers a read event on scKey, but selectedKeys still contains the sscKey from last time. Handling it again calls accept, which returns null because no connection is actually pending, and the code then throws a NullPointerException

💡 What cancel does

cancel unregisters the channel from the selector: the key is removed from the selector's key sets (the removal takes effect during the next selection operation), and events on that channel are no longer monitored
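This sketch (hypothetical class name, ephemeral localhost port) combines the `read == -1` case from the server above with `cancel`: after the client closes, the selector reports a read event, `read` returns -1, and once the key is cancelled it is invalid and has left `selector.keys()` after the next selection.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class PeerCloseDemo {
    // Returns {read() result after the peer closed, key.isValid() after cancel (1/0),
    //          key still in selector.keys() after the next selectNow (1/0)}
    static int[] run() {
        try (Selector selector = Selector.open();
             ServerSocketChannel ssc = ServerSocketChannel.open()) {
            ssc.bind(new InetSocketAddress("localhost", 0));
            try (SocketChannel client = SocketChannel.open(ssc.getLocalAddress());
                 SocketChannel sc = ssc.accept()) { // ssc is still blocking, so accept waits for the client
                sc.configureBlocking(false);
                SelectionKey key = sc.register(selector, SelectionKey.OP_READ);

                client.close();                              // a normal close also produces a read event
                selector.select(1000);
                selector.selectedKeys().clear();
                int read = sc.read(ByteBuffer.allocate(16)); // -1 means end of stream

                key.cancel();                                // stop monitoring this channel
                boolean valid = key.isValid();
                selector.selectNow();                        // cancelled keys leave the key set here
                boolean registered = selector.keys().contains(key);
                return new int[] {read, valid ? 1 : 0, registered ? 1 : 0};
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("read=" + r[0] + " valid=" + (r[1] == 1) + " registered=" + (r[2] == 1));
    }
}
```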

⚠️ Not handling message boundaries

Some students have written code like the following; consider the two questions marked in the comments. The example uses BIO, but NIO has the same problem

public class Server {

public static void main(String[] args) throws IOException {

ServerSocket ss=new ServerSocket(9000);

while (true) {

Socket s = ss.accept();

InputStream in = s.getInputStream();

// Is there a problem here?

byte[] arr = new byte[4];

while(true) {

int read = in.read(arr);

// Is there a problem here?

if(read == -1) {

break;

}

System.out.println(new String(arr, 0, read));

}

}

}

}

Client

public class Client {

public static void main(String[] args) throws IOException {

Socket socket = new Socket("localhost", 9000);

OutputStream out = socket.getOutputStream();

out.write("hello".getBytes());

out.write("world".getBytes());

out.write("你好".getBytes()); // "hello" in Chinese: 6 bytes in UTF-8 (assuming UTF-8 is the default charset)

socket.close();

}

}

Output

hell

owor

ld�

�好

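Why? TCP is a byte stream with no message boundaries, and the server reads 4 bytes at a time, so messages are cut at arbitrary points; a multi-byte character split across two reads decodes as �.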

Handling message boundaries

- One approach is fixed-length messages: every packet has the same size and the server reads a predetermined number of bytes. The drawback is wasted bandwidth

- Another approach is to split on a delimiter; the drawback is low efficiency, since every byte has to be checked for the delimiter

- TLV format, i.e. Type, Length, Value: once Type and Length are known, the message size is easy to obtain and a buffer of the right size can be allocated. The drawback is that the buffer has to be allocated in advance, and very large content hurts server throughput

- HTTP 1.1 uses a TLV format

- HTTP 2.0 uses an LTV format
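As an illustration of the length-prefixed idea, here is a minimal LTV-style codec. The frame layout (a 4-byte big-endian length followed by a UTF-8 payload) and the class name are assumptions for this sketch, not a protocol from the original text. The decoder leaves a partial frame in the buffer, so it copes with TCP delivering bytes in arbitrary pieces.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class LengthPrefixCodec {
    // Frame layout assumed here: [4-byte big-endian length][UTF-8 payload]
    static ByteBuffer encode(String message) {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        ByteBuffer frame = ByteBuffer.allocate(4 + payload.length);
        frame.putInt(payload.length).put(payload);
        frame.flip(); // ready to be written to a channel
        return frame;
    }

    // Decodes every complete frame in acc, which is in write mode (as after channel.read).
    // A partial trailing frame is kept for the next call.
    static List<String> decode(ByteBuffer acc) {
        List<String> messages = new ArrayList<>();
        acc.flip();
        while (acc.remaining() >= 4) {
            int length = acc.getInt(acc.position()); // peek at the header without consuming it
            if (acc.remaining() < 4 + length) {
                break; // payload has not fully arrived yet
            }
            acc.getInt(); // consume the header
            byte[] payload = new byte[length];
            acc.get(payload);
            messages.add(new String(payload, StandardCharsets.UTF_8));
        }
        acc.compact(); // move leftover bytes to the front and switch back to write mode
        return messages;
    }

    // Simulates TCP delivering back-to-back frames in small pieces that straddle frame boundaries
    static List<String> feedInChunks(int chunkSize, String... messages) {
        ByteBuffer wire = ByteBuffer.allocate(256);
        for (String m : messages) {
            wire.put(encode(m));
        }
        wire.flip();
        ByteBuffer acc = ByteBuffer.allocate(64);
        List<String> received = new ArrayList<>();
        while (wire.hasRemaining()) {
            int n = Math.min(chunkSize, wire.remaining());
            for (int i = 0; i < n; i++) {
                acc.put(wire.get());
            }
            received.addAll(decode(acc));
        }
        return received;
    }

    public static void main(String[] args) {
        System.out.println(feedInChunks(4, "hello", "world")); // [hello, world]
    }
}
```

A real server would also reject lengths that are negative or larger than the buffer it is willing to allocate; in this sketch such a frame would simply never be decoded.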

Server side

private static void split(ByteBuffer source) {

source.flip();

for (int i = 0; i < source.limit(); i++) {

// Found a complete message

if (source.get(i) == '\n') {

int length = i + 1 - source.position();

// Store this complete message in a new ByteBuffer

ByteBuffer target = ByteBuffer.allocate(length);

// Read from source and write to target

for (int j = 0; j < length; j++) {

target.put(source.get());

}

debugAll(target);

}

}

source.compact(); // 0123456789abcdef position 16 limit 16

}

public static void main(String[] args) throws IOException {

// 1. Create a selector to manage multiple channels

Selector selector = Selector.open();

ServerSocketChannel ssc = ServerSocketChannel.open();

ssc.configureBlocking(false);

// 2. Establish the connection between selector and channel (Registration)

// When an event occurs later, the SelectionKey tells us which event it is and which channel it came from

SelectionKey sscKey = ssc.register(selector, 0, null);

// This key is only interested in accept events

sscKey.interestOps(SelectionKey.OP_ACCEPT);

log.debug("sscKey:{}", sscKey);

ssc.bind(new InetSocketAddress(8080));

while (true) {

// 3. select blocks the thread while no event occurs and resumes it when one does

// select does not block while an event is left unhandled: once an event occurs it must be handled or cancelled, not ignored

selector.select();

// 4. Handle events. selectedKeys contains all the events that occur

Iterator<SelectionKey> iter = selector.selectedKeys().iterator(); // accept, read

while (iter.hasNext()) {

SelectionKey key = iter.next();

// When processing keys, delete them from the selectedKeys collection, otherwise there will be problems in the next processing

iter.remove();

log.debug("key: {}", key);

// 5. Distinguish event types

if (key.isAcceptable()) { // If accept

ServerSocketChannel channel = (ServerSocketChannel) key.channel();

SocketChannel sc = channel.accept();

sc.configureBlocking(false);

ByteBuffer buffer = ByteBuffer.allocate(16); // attachment

// Associate a byteBuffer as an attachment to the selectionKey

SelectionKey scKey = sc.register(selector, 0, buffer);

scKey.interestOps(SelectionKey.OP_READ);

log.debug("{}", sc);

log.debug("scKey:{}", scKey);

} else if (key.isReadable()) { // If read

try {

SocketChannel channel = (SocketChannel) key.channel(); // Get the channel that triggered the event

// Gets the associated attachment on the selectionKey

ByteBuffer buffer = (ByteBuffer) key.attachment();

int read = channel.read(buffer); // On a normal disconnect, read returns -1

if(read == -1) {

key.cancel();

channel.close(); // also close the channel to release the socket

} else {

split(buffer);

// The buffer is full but holds no complete message: expand it

if (buffer.position() == buffer.limit()) {

ByteBuffer newBuffer = ByteBuffer.allocate(buffer.capacity() * 2);

buffer.flip();

newBuffer.put(buffer); // 0123456789abcdef3333\n

key.attach(newBuffer);

}

}

} catch (IOException e) {

e.printStackTrace();

key.cancel(); // Because the client is disconnected, you need to cancel the key (actually delete the key from the keys collection of the selector)

}

}

}

}

}

Client

SocketChannel sc = SocketChannel.open();

sc.connect(new InetSocketAddress("localhost", 8080));

SocketAddress address = sc.getLocalAddress();

// sc.write(Charset.defaultCharset().encode("hello\nworld\n"));

sc.write(Charset.defaultCharset().encode("0123\n456789abcdef"));

sc.write(Charset.defaultCharset().encode("0123456789abcdef3333\n"));

System.in.read();

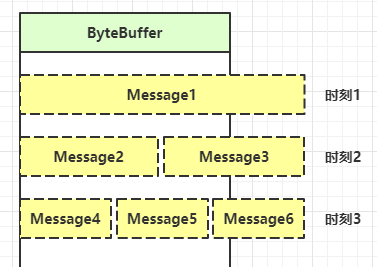
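The `split()` logic can be exercised without any sockets. This sketch (hypothetical class name) keeps the same loop but returns the messages, and feeds it the two writes from the client above. The buffer is 64 bytes here, an assumption so the 33-byte second message fits without the expansion step.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class DelimiterSplitDemo {
    // Same loop as split() above, but collects the messages instead of printing them
    static List<String> split(ByteBuffer source) {
        List<String> messages = new ArrayList<>();
        source.flip();
        for (int i = 0; i < source.limit(); i++) {
            if (source.get(i) == '\n') {                // absolute get: does not move position
                int length = i + 1 - source.position();
                byte[] message = new byte[length];
                source.get(message);                     // relative get: advances past this message
                messages.add(new String(message, StandardCharsets.UTF_8));
            }
        }
        source.compact(); // keep the incomplete tail for the next read
        return messages;
    }

    // Puts each chunk into one buffer, as successive channel.read calls would
    static List<String> feed(String... chunks) {
        ByteBuffer buffer = ByteBuffer.allocate(64);
        List<String> messages = new ArrayList<>();
        for (String chunk : chunks) {
            buffer.put(StandardCharsets.UTF_8.encode(chunk));
            messages.addAll(split(buffer));
        }
        return messages;
    }

    public static void main(String[] args) {
        for (String m : feed("0123\n456789abcdef", "0123456789abcdef3333\n")) {
            System.out.print(m);
        }
    }
}
```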

ByteBuffer size allocation

- Each channel must keep track of messages that may arrive split across reads. Because a ByteBuffer cannot be shared by multiple channels, each channel needs its own ByteBuffer

- A ByteBuffer cannot be too large: at 1 MB per ByteBuffer, supporting a million connections would need 1 TB of memory, so the ByteBuffer has to be resizable

- One approach is to allocate a small buffer first, e.g. 4 KB; if the data does not fit, allocate an 8 KB buffer and copy the 4 KB contents into it. The advantage is that each message stays contiguous and easy to process; the disadvantage is the cost of copying. For a reference implementation see http://tutorials.jenkov.com/java-performance/resizable-array.html

- Another approach is to build a buffer from multiple arrays: when one array is full, the extra content goes into a new array. Unlike the previous approach, messages are stored non-contiguously, which makes parsing more complex; the advantage is avoiding the cost of copying
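The second approach might look like the following sketch (hypothetical class; the tiny 4-byte arrays only make the splitting visible). Writing never copies existing data, but every read has to translate a logical index into an array and an offset.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class ChunkedBuffer {
    private final int chunkSize;
    private final List<byte[]> chunks = new ArrayList<>();
    private int size; // total bytes stored

    ChunkedBuffer(int chunkSize) {
        this.chunkSize = chunkSize;
    }

    // Appends all remaining bytes of src; a full array is never copied, a new one is added instead
    void write(ByteBuffer src) {
        while (src.hasRemaining()) {
            int offset = size % chunkSize;
            if (offset == 0) {
                chunks.add(new byte[chunkSize]); // current array is full (or none exists yet)
            }
            int n = Math.min(chunkSize - offset, src.remaining());
            src.get(chunks.get(chunks.size() - 1), offset, n);
            size += n;
        }
    }

    int size() {
        return size;
    }

    int chunkCount() {
        return chunks.size();
    }

    // Reads the byte at a logical index: non-contiguous storage costs this extra index math
    byte get(int index) {
        if (index < 0 || index >= size) {
            throw new IndexOutOfBoundsException("index " + index + ", size " + size);
        }
        return chunks.get(index / chunkSize)[index % chunkSize];
    }

    public static void main(String[] args) {
        ChunkedBuffer buffer = new ChunkedBuffer(4);
        buffer.write(StandardCharsets.UTF_8.encode("0123456789"));
        System.out.println(buffer.size() + " bytes in " + buffer.chunkCount() + " arrays"); // 10 bytes in 3 arrays
        System.out.println((char) buffer.get(9)); // 9
    }
}
```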

4.5 Handling write events

Example: data that cannot be written in one go

- In non-blocking mode, there is no guarantee that all the data in the buffer is written to the channel in one call, so the return value of write (the number of bytes actually written) must be tracked

- Use the selector to listen for writable events on channels. Having every channel keep a key that tracks a buffer would consume too much memory, so a two-stage strategy is used:

- Only when the message handler first writes a message (and data is left over) is the channel registered with the selector for write events

- The selector checks the channel for writable events; once all the data has been written, the channel's write registration is cancelled

- If it is not cancelled, a write event fires every time the channel is writable
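The strategy can be sketched on a loopback connection whose peer never reads. The 32 MB buffer size is an assumption, chosen to exceed typical socket buffer sizes so that one non-blocking write returns a partial count. The helper names `addInterest`/`removeInterest` are hypothetical; using `|` and `& ~` instead of `+` and `-` keeps the interest set correct even if an event is added or removed twice.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class PartialWriteDemo {
    // Bitwise helpers: | adds an event, & ~ removes it
    static void addInterest(SelectionKey key, int op) {
        key.interestOps(key.interestOps() | op);
    }

    static void removeInterest(SelectionKey key, int op) {
        key.interestOps(key.interestOps() & ~op);
    }

    // Returns {bytes written by one non-blocking write, bytes offered,
    //          interest set while data is pending, interest set after the write finished}
    static int[] run() {
        try (Selector selector = Selector.open();
             ServerSocketChannel ssc = ServerSocketChannel.open()) {
            ssc.bind(new InetSocketAddress("localhost", 0));
            try (SocketChannel client = SocketChannel.open(ssc.getLocalAddress()); // never reads
                 SocketChannel sc = ssc.accept()) {
                sc.configureBlocking(false);
                SelectionKey key = sc.register(selector, SelectionKey.OP_READ);

                // Much more than the socket buffers can hold while the peer is not reading
                ByteBuffer data = ByteBuffer.allocateDirect(32 * 1024 * 1024);
                int written = sc.write(data); // returns at once with a partial count
                int pendingOps = key.interestOps();
                if (data.hasRemaining()) {
                    addInterest(key, SelectionKey.OP_WRITE); // wait until the channel is writable
                    key.attach(data);                        // remember the unsent bytes
                    pendingOps = key.interestOps();
                }
                // Simulated final stage: once the writable handler has sent everything, drop OP_WRITE
                removeInterest(key, SelectionKey.OP_WRITE);
                key.attach(null);
                return new int[] {written, data.capacity(), pendingOps, key.interestOps()};
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("wrote " + r[0] + " of " + r[1] + " bytes in one call");
    }
}
```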

public class WriteServer {

public static void main(String[] args) throws IOException {

ServerSocketChannel ssc = ServerSocketChannel.open();

ssc.configureBlocking(false);

ssc.bind(new InetSocketAddress(8080));

Selector selector = Selector.open();

ssc.register(selector, SelectionKey.OP_ACCEPT);

while(true) {

selector.select();

Iterator<SelectionKey> iter = selector.selectedKeys().iterator();

while (iter.hasNext()) {

SelectionKey key = iter.next();

iter.remove();

if (key.isAcceptable()) {

SocketChannel sc = ssc.accept();

sc.configureBlocking(false);

SelectionKey sckey = sc.register(selector, SelectionKey.OP_READ);

// 1. Send content to client

StringBuilder sb = new StringBuilder();

for (int i = 0; i < 3000000; i++) {

sb.append("a");

}

ByteBuffer buffer = Charset.defaultCharset().encode(sb.toString());

int write = sc.write(buffer);

// 3. write indicates how many bytes are actually written

System.out.println("Actual write byte:" + write);

// 4. If some bytes are still unwritten, register interest in write events

if (buffer.hasRemaining()) {

// OP_READ is 1 and OP_WRITE is 4, so each event is one bit

// Add write events to the existing interest set (bitwise OR)

sckey.interestOps(sckey.interestOps() | SelectionKey.OP_WRITE);

// Add buffer to sckey as an attachment

sckey.attach(buffer);

}

} else if (key.isWritable()) {

ByteBuffer buffer = (ByteBuffer) key.attachment();

SocketChannel sc = (SocketChannel) key.channel();

int write = sc.write(buffer);

System.out.println("Actual write byte:" + write);

if (!buffer.hasRemaining()) { // Finished

key.interestOps(key.interestOps() & ~SelectionKey.OP_WRITE); // remove write events, keep the rest

key.attach(null);

}

}

}

}

}

}

Client

public class WriteClient {

public static void main(String[] args) throws IOException {

Selector selector = Selector.open();

SocketChannel sc = SocketChannel.open();

sc.configureBlocking(false);

sc.register(selector, SelectionKey.OP_CONNECT | SelectionKey.OP_READ);

sc.connect(new InetSocketAddress("localhost", 8080));

int count = 0;

while (true) {

selector.select();

Iterator<SelectionKey> iter = selector.selectedKeys().iterator();

while (iter.hasNext()) {

SelectionKey key = iter.next();

iter.remove();

if (key.isConnectable()) {

System.out.println(sc.finishConnect());

} else if (key.isReadable()) {

ByteBuffer buffer = ByteBuffer.allocate(1024 * 1024);

count += sc.read(buffer);

buffer.clear();

System.out.println(count);

}

}

}

}

}

💡 Why the write interest should be cancelled

As long as the socket send buffer has free space, the channel is writable and this event fires, i.e. almost all the time. So register interest in writable events only when the socket buffer cannot take more data, and cancel that interest once all the data has been written
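The "fires all the time" behaviour is easy to see with the sink end of a `Pipe`, which is writable whenever the pipe has buffer space (sketch, hypothetical class name): with write interest registered and nothing to send, every select returns at once; after the interest is dropped, nothing is reported.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;

class WritableSpinDemo {
    // Returns {rounds (out of 5) in which select reported the sink writable, selectNow() after dropping OP_WRITE}
    static int[] run() {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try {
                pipe.sink().configureBlocking(false);
                SelectionKey key = pipe.sink().register(selector, SelectionKey.OP_WRITE);
                int fired = 0;
                for (int round = 0; round < 5; round++) {
                    // Nothing is being sent, yet the sink has free space, so select returns at once
                    if (selector.select(1000) > 0 && key.isWritable()) {
                        fired++;
                    }
                    selector.selectedKeys().clear();
                }
                key.interestOps(0); // stop caring about writability
                int afterDrop = selector.selectNow();
                return new int[] {fired, afterDrop};
            } finally {
                pipe.source().close();
                pipe.sink().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("writable in " + r[0] + " of 5 rounds; after dropping OP_WRITE: " + r[1]);
    }
}
```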

4.6 Going further

💡 Optimizing with multiple threads

CPUs are multi-core nowadays, and a design should take this into account instead of leaving CPU capacity unused

The previous code has only one selector and does not make full use of a multi-core CPU. How can it be improved?

Two groups of selectors

- A single thread with its own selector handles accept events

- Create as many threads as there are CPU cores, each with its own selector, and hand new connections to them in turn to process read events
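One small detail in the boss loop below: `index.getAndIncrement() % workers.length` becomes negative once the `AtomicInteger` wraps past `Integer.MAX_VALUE` (after about 2.1 billion connections), which would throw `ArrayIndexOutOfBoundsException`. A hedged alternative is `Math.floorMod`, sketched here with a hypothetical `RoundRobin` class that starts near the overflow point.

```java
import java.util.concurrent.atomic.AtomicInteger;

class RoundRobin {
    private final AtomicInteger counter;
    private final int size;

    RoundRobin(int size, int start) {
        this.size = size;
        this.counter = new AtomicInteger(start);
    }

    // floorMod stays in [0, size) even after the counter wraps around to negative values
    int next() {
        return Math.floorMod(counter.getAndIncrement(), size);
    }

    public static void main(String[] args) {
        // Start just below the overflow point to show the wrap-around
        RoundRobin rr = new RoundRobin(2, Integer.MAX_VALUE - 1);
        for (int i = 0; i < 4; i++) {
            System.out.println(rr.next()); // the four indices are 0, 1, 0, 1
        }
        System.out.println(-2147483647 % 2); // -1: what a plain % gives at the same point
    }
}
```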

public class ChannelDemo7 {

public static void main(String[] args) throws IOException {

new BossEventLoop().register();

}

@Slf4j

static class BossEventLoop implements Runnable {

private Selector boss;

private WorkerEventLoop[] workers;

private volatile boolean start = false;

AtomicInteger index = new AtomicInteger();

public void register() throws IOException {

if (!start) {

ServerSocketChannel ssc = ServerSocketChannel.open();

ssc.bind(new InetSocketAddress(8080));

ssc.configureBlocking(false);

boss = Selector.open();

SelectionKey ssckey = ssc.register(boss, 0, null);

ssckey.interestOps(SelectionKey.OP_ACCEPT);

workers = initEventLoops();

new Thread(this, "boss").start();

log.debug("boss start...");

start = true;

}

}

public WorkerEventLoop[] initEventLoops() {

// WorkerEventLoop[] workerEventLoops = new WorkerEventLoop[Runtime.getRuntime().availableProcessors()];

WorkerEventLoop[] workerEventLoops = new WorkerEventLoop[2];

for (int i = 0; i < workerEventLoops.length; i++) {

workerEventLoops[i] = new WorkerEventLoop(i);

}

return workerEventLoops;

}

@Override

public void run() {

while (true) {

try {

boss.select();

Iterator<SelectionKey> iter = boss.selectedKeys().iterator();

while (iter.hasNext()) {

SelectionKey key = iter.next();

iter.remove();

if (key.isAcceptable()) {

ServerSocketChannel c = (ServerSocketChannel) key.channel();

SocketChannel sc = c.accept();

sc.configureBlocking(false);

log.debug("{} connected", sc.getRemoteAddress());

workers[index.getAndIncrement() % workers.length].register(sc);

}

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

@Slf4j

static class WorkerEventLoop implements Runnable {

private Selector worker;

private volatile boolean start = false;

private int index;

private final ConcurrentLinkedQueue<Runnable> tasks = new ConcurrentLinkedQueue<>();

public WorkerEventLoop(int index) {

this.index = index;

}

public void register(SocketChannel sc) throws IOException {

if (!start) {

worker = Selector.open();

new Thread(this, "worker-" + index).start();

start = true;

}

tasks.add(() -> {

try {

SelectionKey sckey = sc.register(worker, 0, null);

sckey.interestOps(SelectionKey.OP_READ);

worker.selectNow();

} catch (IOException e) {

e.printStackTrace();

}

});

worker.wakeup();

}

@Override

public void run() {

while (true) {

try {

worker.select();

Runnable task;

while ((task = tasks.poll()) != null) { // run every queued registration, not just one

task.run();

}

Set<SelectionKey> keys = worker.selectedKeys();

Iterator<SelectionKey> iter = keys.iterator();

while (iter.hasNext()) {

SelectionKey key = iter.next();

if (key.isReadable()) {

SocketChannel sc = (SocketChannel) key.channel();

ByteBuffer buffer = ByteBuffer.allocate(128);

try {

int read = sc.read(buffer);

if (read == -1) {

key.cancel();

sc.close();

} else {

buffer.flip();

log.debug("{} message:", sc.getRemoteAddress());

debugAll(buffer);

}

} catch (IOException e) {

e.printStackTrace();

key.cancel();

sc.close();

}

}

iter.remove();

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

}
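The reason `register()` queues a task and calls `wakeup()` instead of registering directly: on older JDKs such as JDK 8, `SelectableChannel.register` blocks while another thread is inside `select()` on the same selector, and on newer JDKs a registration made during a selection operation only takes effect at the next one. This sketch (hypothetical class and method names, with a `Pipe` standing in for a client socket) isolates that pattern; the worker runs every queued task after each wakeup.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.ClosedSelectorException;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

class WakeupQueueDemo {
    // Worker side: select, run queued registrations on this thread, then handle events
    static void workerLoop(Selector selector, Queue<Runnable> tasks,
                           AtomicReference<String> received, CountDownLatch done) {
        try {
            while (done.getCount() > 0) {
                selector.select(); // blocks; initially nothing is registered
                Runnable task;
                while ((task = tasks.poll()) != null) {
                    task.run(); // registration happens on the selector's own thread
                }
                Iterator<SelectionKey> iter = selector.selectedKeys().iterator();
                while (iter.hasNext()) {
                    SelectionKey key = iter.next();
                    iter.remove();
                    if (key.isReadable()) {
                        ByteBuffer buf = ByteBuffer.allocate(16);
                        ((Pipe.SourceChannel) key.channel()).read(buf);
                        buf.flip();
                        received.set(StandardCharsets.UTF_8.decode(buf).toString());
                        done.countDown();
                    }
                }
            }
        } catch (IOException | ClosedSelectorException e) {
            done.countDown(); // give up; run() will report null
        }
    }

    // Boss side: returns what the worker read, or null if nothing arrived within 5 seconds
    static String run() {
        AtomicReference<String> received = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1);
        Queue<Runnable> tasks = new ConcurrentLinkedQueue<>();
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            new Thread(() -> workerLoop(selector, tasks, received, done), "worker-0").start();

            pipe.source().configureBlocking(false);
            // Like WorkerEventLoop.register: queue the registration, then wake the worker up
            tasks.add(() -> {
                try {
                    pipe.source().register(selector, SelectionKey.OP_READ);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            selector.wakeup();

            pipe.sink().write(StandardCharsets.UTF_8.encode("hello"));
            done.await(5, TimeUnit.SECONDS);
            pipe.sink().close();
            pipe.source().close();
            return received.get();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // hello
    }
}
```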

💡 How to get the number of CPUs

- When Runtime.getRuntime().availableProcessors() runs inside a Docker container, the container is not physically isolated, so it returns the number of physical CPUs on the host rather than the number allocated to the container

- This was not fixed until JDK 10, through the JVM option UseContainerSupport, which is enabled by default

4.7 UDP

- UDP is connectionless: the client sends data whether or not the server is running

- The server's receive method stores the received data in a ByteBuffer; if the datagram is larger than the buffer, the excess is silently discarded
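The truncation rule can be demonstrated on localhost (sketch; hypothetical class name, ephemeral port): an 11-byte datagram is received into a 4-byte buffer, and a second non-blocking receive finds nothing, because the remaining 7 bytes were discarded rather than kept for a later read.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.DatagramChannel;
import java.nio.charset.StandardCharsets;

class UdpTruncationDemo {
    // Returns {what fit into a 4-byte buffer, "discarded" if nothing of that datagram remains}
    static String[] run() {
        try (DatagramChannel server = DatagramChannel.open();
             DatagramChannel client = DatagramChannel.open()) {
            server.bind(new InetSocketAddress("localhost", 0)); // port 0: any free port
            client.send(StandardCharsets.UTF_8.encode("hello world"), server.getLocalAddress());

            ByteBuffer small = ByteBuffer.allocate(4);
            server.receive(small); // blocking: waits for the datagram
            small.flip();
            String kept = StandardCharsets.UTF_8.decode(small).toString();

            // Try again without blocking: the other 7 bytes are gone
            server.configureBlocking(false);
            boolean nothingLeft = server.receive(ByteBuffer.allocate(64)) == null;
            return new String[] {kept, nothingLeft ? "discarded" : "still queued"};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String[] r = run();
        System.out.println("kept \"" + r[0] + "\", rest " + r[1]);
    }
}
```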

Start the server first

public class UdpServer {

public static void main(String[] args) {

try (DatagramChannel channel = DatagramChannel.open()) {

channel.socket().bind(new InetSocketAddress(9999));

System.out.println("waiting...");

ByteBuffer buffer = ByteBuffer.allocate(32);

channel.receive(buffer);

buffer.flip();

debug(buffer);

} catch (IOException e) {

e.printStackTrace();

}

}

}

Output

waiting...

Then run the client

public class UdpClient {

public static void main(String[] args) {

try (DatagramChannel channel = DatagramChannel.open()) {

ByteBuffer buffer = StandardCharsets.UTF_8.encode("hello");

InetSocketAddress address = new InetSocketAddress("localhost", 9999);

channel.send(buffer, address);

} catch (Exception e) {

e.printStackTrace();

}

}

}

The server then prints

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 68 65 6c 6c 6f |hello |

+--------+-------------------------------------------------+----------------+