
Reactor Model

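Netty's threading design is based on the Reactor model, which is mainly composed of three roles: the Acceptor, the Dispatcher and the Handler. Depending on how threads are assigned to these roles, the Reactor model comes in three variants: the single-threaded model, the multithreading model and the master-slave multithreading model.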

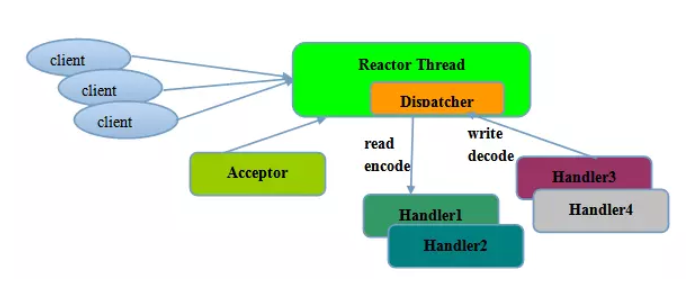

Single Thread Model

All I/O operations are performed by a single thread: I/O multiplexing, event dispatching and event handling all happen on one Reactor thread.
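To make the three roles concrete, here is a minimal sketch of a single-threaded Reactor written against plain JDK NIO rather than Netty. The class name SingleThreadReactor and its echo behavior are assumptions for illustration only. One thread calls Selector.select() (multiplexing), then dispatches each ready key by running the Runnable attached to it (the Dispatcher); the attachments are the Acceptor for the listening socket and an echo Handler for each accepted connection.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;

/** Hypothetical single-threaded Reactor built on JDK NIO; not Netty code. */
class SingleThreadReactor implements Runnable {
    private final Selector selector;
    private final ServerSocketChannel server;

    SingleThreadReactor(int port) throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", port)); // port 0 picks any free port
        server.configureBlocking(false);
        // The Acceptor is attached to the listening socket's key.
        server.register(selector, SelectionKey.OP_ACCEPT, (Runnable) this::accept);
    }

    int localPort() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    /** Reactor loop: select (multiplexing), then dispatch every ready key to its handler. */
    @Override
    public void run() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                selector.select();
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    ((Runnable) key.attachment()).run(); // Dispatcher: run the attached handler
                }
            }
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    /** Acceptor: accepts a connection and attaches an echo Handler to its key. */
    private void accept() {
        try {
            SocketChannel ch = server.accept();
            if (ch == null) return;
            ch.configureBlocking(false);
            ByteBuffer buf = ByteBuffer.allocate(1024);
            SelectionKey key = ch.register(selector, SelectionKey.OP_READ);
            key.attach((Runnable) () -> echo(key, ch, buf));
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    /** Handler: reads bytes and writes them back (decoding and encoding omitted). */
    private static void echo(SelectionKey key, SocketChannel ch, ByteBuffer buf) {
        try {
            if (ch.read(buf) < 0) { key.cancel(); ch.close(); return; }
            buf.flip();
            while (buf.hasRemaining()) ch.write(buf); // spins if the send buffer is full; fine for a sketch
            buf.clear();
        } catch (IOException e) {
            key.cancel();
            try { ch.close(); } catch (IOException ignored) { }
        }
    }
}
```

Everything, including accepting, reading and writing for every client, runs on the one thread that calls run(), which is exactly what limits this model.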

The single-threaded model can be used for small-capacity scenarios, but it is not suitable for high-load, high-concurrency applications, for the following reasons:

- A single thread handling hundreds of connections at the same time cannot keep up: even at 100% CPU load it cannot decode, encode, read and send massive numbers of messages.

- When the thread is overloaded, processing slows down and many client connections time out. Clients then often retransmit, which adds even more load, until large numbers of messages are backlogged and time out. The single thread becomes the performance bottleneck of the system.

- If that single thread crashes unexpectedly or enters an infinite loop, the whole communication module becomes unavailable: it can no longer receive or process external messages, so the node fails. Reliability is therefore low.
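The last two points come down to head-of-line blocking: every connection shares one thread, so one slow or stuck handler delays all the others. The hypothetical HeadOfLineDemo below makes this measurable with a single-thread ExecutorService standing in for the Reactor thread (an assumption for illustration, not how Netty is built): connection A's handler is slow, and connection B's trivial handler has to wait behind it.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

/** Hypothetical demo: one thread serves two connections, so A's slow handler delays B. */
class HeadOfLineDemo {
    /** Returns how many milliseconds passed between submitting both handlers and B actually running. */
    static long waitOfB(long slowHandlerMillis) throws Exception {
        ExecutorService reactorThread = Executors.newSingleThreadExecutor();
        try {
            long submitted = System.nanoTime();
            Runnable handlerA = () -> pause(slowHandlerMillis); // e.g. expensive decoding for connection A
            Callable<Long> handlerB = System::nanoTime;         // connection B only records when it ran
            reactorThread.submit(handlerA);
            Future<Long> ranAt = reactorThread.submit(handlerB);
            return TimeUnit.NANOSECONDS.toMillis(ranAt.get() - submitted);
        } finally {
            reactorThread.shutdownNow();
        }
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Calling waitOfB(300) returns a value of roughly 300 or more, although B's own work takes almost no time. If handler A never returned (an infinite loop), B would never run at all.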

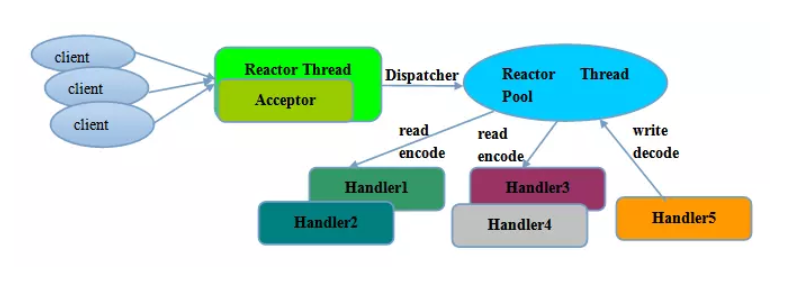

Multithreading Model

To solve these problems of the single-threaded model, an evolved Reactor thread model was proposed: the Reactor multithreading model.

Its characteristics are as follows:

- A dedicated Acceptor thread listens on the server port and accepts TCP connection requests from clients.

- Network I/O reads and writes are performed by a pool of NIO threads. The pool can be built on a standard JDK thread pool, consisting of a task queue and N available threads; these NIO threads read, decode, encode and send messages.

- One NIO thread can handle many connections at the same time, but each connection is bound to exactly one NIO thread, which prevents concurrency problems on that connection.
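The last point is what separates a Reactor I/O pool from an ordinary worker pool. With Executors.newFixedThreadPool(n), any idle thread may pick up the next event of any connection, so two events of one connection could run concurrently. A minimal sketch of the pinned alternative, using N single-thread executors, is shown below; PinnedIoPool, bind() and fire() are hypothetical names, and connections are represented only by the loop index they are bound to. Netty reaches the same guarantee by registering each Channel with one EventLoop for its whole lifetime.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/**
 * Hypothetical NIO thread pool for the multithreading model: N single-thread loops.
 * The Acceptor binds each new connection to one loop, and every later event of that
 * connection runs on the same loop, so no two threads ever touch one connection at once.
 */
class PinnedIoPool {
    private final ExecutorService[] loops;
    private int next; // only the single Acceptor thread calls bind(), so no synchronization

    PinnedIoPool(int threads) {
        loops = new ExecutorService[threads];
        for (int i = 0; i < threads; i++) {
            String name = "nio-" + i;
            loops[i] = Executors.newSingleThreadExecutor(r -> new Thread(r, name));
        }
    }

    /** Called by the Acceptor for each accepted connection; returns the loop it is bound to. */
    int bind() {
        int loop = next;
        next = (next + 1) % loops.length;
        return loop;
    }

    /** Runs one I/O event (read, decode, encode, send) of a connection on its bound loop. */
    <T> Future<T> fire(int loop, Callable<T> event) {
        return loops[loop].submit(event);
    }

    void shutdown() {
        for (ExecutorService loop : loops) loop.shutdown();
    }
}
```

With two loops, three accepted connections are bound to loops 0, 1 and 0; all events of the first connection then run on thread "nio-0".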

The Reactor multithreading model meets the performance requirements of most scenarios. In some special scenarios, however, the single Acceptor thread that listens for and accepts all client connections can become a bottleneck. Examples are millions of concurrent client connections, or a server that must perform security authentication on every client's handshake messages, where the authentication itself is expensive. In such scenarios a single Acceptor thread may not keep up. To solve this, a third Reactor thread model was proposed: the master-slave Reactor multithreading model.

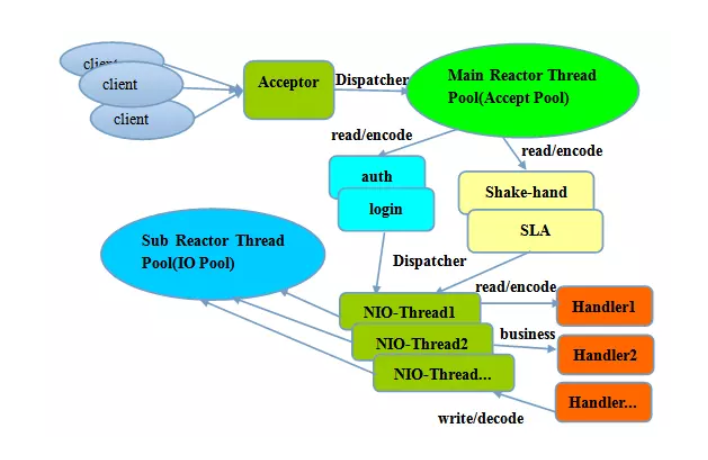

Master-Slave Multithreading Model

On the server side, client connections are no longer accepted by a single NIO thread but by an independent pool of NIO threads.

There are multiple Reactors, and each Reactor runs in its own thread, so on a multi-core machine requests from many clients can be handled at the same time. The main Reactor accepts a connection and performs any access checks such as authentication; once the connection is successfully established, it is registered with a thread of the SubReactor pool, which is responsible for its I/O reads and writes.
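The main/sub split can also be sketched with plain JDK NIO. In the hypothetical MainSubReactor below (illustrative names, not Netty's implementation), a main-reactor thread only accepts connections and hands each accepted SocketChannel, round-robin, to one of several sub-reactors; each sub-reactor owns its own Selector and thread and performs all reads and writes for its channels. For brevity there is a single main-reactor thread, which is also all that a single listening port can keep busy, as the paragraphs below explain for Netty's bossGroup. The hand-off goes through a queue plus Selector.wakeup() so that register() always runs on the sub-reactor's own thread.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

/** Hypothetical main/sub Reactor sketch on plain JDK NIO; names are illustrative, not Netty's. */
class MainSubReactor {
    private final ServerSocketChannel server = ServerSocketChannel.open();
    private final Selector mainSelector = Selector.open();
    private final SubReactor[] subs;
    private int next; // round-robin index, touched only by the main reactor thread

    MainSubReactor(int port, int subCount) throws IOException {
        server.bind(new InetSocketAddress("127.0.0.1", port)); // port 0 picks a free port
        server.configureBlocking(false);
        server.register(mainSelector, SelectionKey.OP_ACCEPT);
        subs = new SubReactor[subCount];
        for (int i = 0; i < subCount; i++) subs[i] = new SubReactor();
    }

    int localPort() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    /** Starts one daemon thread per sub-reactor, then the main reactor thread. */
    void start() {
        for (int i = 0; i < subs.length; i++) daemon(subs[i], "sub-reactor-" + i);
        daemon(this::acceptLoop, "main-reactor");
    }

    /** Main reactor: only accepts connections, then hands each one to a sub-reactor. */
    private void acceptLoop() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                mainSelector.select();
                mainSelector.selectedKeys().clear(); // OP_ACCEPT is the only registered interest
                SocketChannel ch;
                while ((ch = server.accept()) != null) {
                    // A handshake or authentication step would run here, before the hand-off.
                    subs[next].assign(ch);
                    next = (next + 1) % subs.length;
                }
            }
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    private static void daemon(Runnable task, String name) {
        Thread t = new Thread(task, name);
        t.setDaemon(true);
        t.start();
    }

    /** Sub-reactor: one thread and one Selector doing all reads and writes for its channels. */
    static final class SubReactor implements Runnable {
        private final Selector selector = Selector.open();
        private final Queue<SocketChannel> pending = new ConcurrentLinkedQueue<>();

        SubReactor() throws IOException { }

        /** Called from the main reactor; the actual register() runs on the sub-reactor thread. */
        void assign(SocketChannel ch) {
            pending.add(ch);
            selector.wakeup();
        }

        @Override
        public void run() {
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    selector.select();
                    SocketChannel ch;
                    while ((ch = pending.poll()) != null) {
                        ch.configureBlocking(false);
                        ch.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
                    }
                    for (SelectionKey key : selector.selectedKeys()) echo(key);
                    selector.selectedKeys().clear();
                }
            } catch (IOException e) {
                throw new RuntimeException(e);
            }
        }

        /** Echo handler: writes back whatever was read (decoding and encoding omitted). */
        private static void echo(SelectionKey key) {
            SocketChannel ch = (SocketChannel) key.channel();
            ByteBuffer buf = (ByteBuffer) key.attachment();
            try {
                if (ch.read(buf) < 0) { key.cancel(); ch.close(); return; }
                buf.flip();
                while (buf.hasRemaining()) ch.write(buf); // spins if the send buffer is full
                buf.clear();
            } catch (IOException e) {
                key.cancel();
                try { ch.close(); } catch (IOException ignored) { }
            }
        }
    }
}
```

Usage: new MainSubReactor(0, 2).start() serves clients on localPort(); successive connections alternate between sub-reactor-0 and sub-reactor-1, while the main reactor never reads or writes application data.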

In fact, Netty's thread model is not fixed. By creating different EventLoopGroup instances in the bootstrap classes and configuring appropriate parameters, Netty can support all three Reactor thread models described above. For example, passing a single NioEventLoopGroup with one thread to ServerBootstrap.group() gives the single-threaded model, while separate boss and worker groups give the multithreading or master-slave model. This flexible customization is what allows Netty to meet the performance requirements of different business scenarios.

Note, however, that Netty's server-side accept phase does not actually use multiple threads: for a single listening port, setting the bossGroup thread count n to 1 or to 10 makes no difference.

The server-side ServerSocketChannel is bound to only one thread (EventLoop) of the bossGroup, so the Java NIO Selector.select() calls that process client connection requests all run in that one thread. For an application that listens on only one port, configuring multiple bossGroup threads is therefore useless and only wastes resources.
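The arithmetic behind this can be modelled in a few lines. The hypothetical LoopGroupModel below (a simplified stand-in, not Netty's class) binds each registered channel for life to one loop picked round-robin, roughly the way an EventLoopGroup hands out loops through next(), and then counts how many loops ever received a channel. Each bound port contributes exactly one ServerSocketChannel to the boss group, while every accepted connection contributes one SocketChannel to the worker group.

```java
/**
 * Hypothetical model of an event-loop group: each registered channel is bound to one loop
 * picked round-robin, and only loops that own at least one channel ever do any work.
 */
class LoopGroupModel {
    private final int[] channelsPerLoop;
    private int next;

    LoopGroupModel(int threads) {
        channelsPerLoop = new int[threads];
    }

    /** Registers a channel and returns the index of the loop (thread) it stays bound to. */
    int register() {
        int loop = next;
        next = (next + 1) % channelsPerLoop.length;
        channelsPerLoop[loop]++;
        return loop;
    }

    /** Number of threads that own at least one channel, i.e. that will ever call select(). */
    int busyThreads() {
        int busy = 0;
        for (int n : channelsPerLoop) {
            if (n > 0) busy++;
        }
        return busy;
    }
}
```

A boss group of 10 threads with one registered server channel reports busyThreads() == 1, so nine threads sit idle; binding three ports would make three of them busy. A worker group of 4 threads with 100 accepted connections uses all 4.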

Sample code

The following example server and client use Netty 4.x. Let's first see how they are implemented; each module will then be analyzed in depth.

Server code implementation

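```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

public class EchoServer {

    private final int port;

    public EchoServer(int port) {
        this.port = port;
    }

    public void run() throws Exception {
        // Configure the server.
        // One boss thread is enough: a single listening port is served by one event loop.
        EventLoopGroup bossGroup = new NioEventLoopGroup(1); // (1)
        // By default, twice the number of available processors.
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap(); // (2)
            b.group(bossGroup, workerGroup)
             .channel(NioServerSocketChannel.class) // (3)
             .option(ChannelOption.SO_BACKLOG, 100) // queue length for pending connections
             .handler(new LoggingHandler(LogLevel.INFO)) // logs events of the listening channel
             .childHandler(new ChannelInitializer<SocketChannel>() { // (4)
                 @Override
                 public void initChannel(SocketChannel ch) throws Exception {
                     ch.pipeline().addLast(
                             //new LoggingHandler(LogLevel.INFO),
                             new EchoServerHandler());
                 }
             });

            // Start the server.
            ChannelFuture f = b.bind(port).sync(); // (5)

            // Wait until the server socket is closed.
            f.channel().closeFuture().sync();
        } finally {
            // Shut down all event loops to terminate all threads.
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }

    public static void main(String[] args) throws Exception {
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 8080;
        new EchoServer(port).run();
    }
}
```

EchoServerHandler implementation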

```java
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

import java.util.logging.Level;
import java.util.logging.Logger;

public class EchoServerHandler extends ChannelInboundHandlerAdapter {

    private static final Logger logger = Logger.getLogger(
            EchoServerHandler.class.getName());

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        // Echo the received message; write() only queues it, nothing is sent yet.
        ctx.write(msg);
    }

    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
        // Flush all queued writes once the current batch of reads has been processed.
        ctx.flush();
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        // Close the connection when an exception is raised.
        logger.log(Level.WARNING, "Unexpected exception from downstream.", cause);
        ctx.close();
    }
}
```

1. NioEventLoopGroup: Netty provides different implementations of the EventLoopGroup interface for different transports. In this example two NioEventLoopGroup instances are created: the first, usually called the "boss", accepts incoming client connections; the second, called the "worker", handles reads and writes on the accepted connections after the boss has registered them with it.

2. ServerBootstrap: a helper class that sets up the server. Socket parameters can be set through it, with option() for the listening socket and childOption() for accepted connections.

3. NioServerSocketChannel is specified as the channel class used to accept incoming client connections.

4. The ChannelPipeline of each new SocketChannel is usually set up by adding handlers to it. ChannelInitializer is a special handler whose initChannel method adds the specified handlers to the pipeline of each newly accepted SocketChannel.

5. Binding the port (8080 by default, or the port given as the first command-line argument) makes the service available to clients; sync() waits until the bind has completed.

Client code implementation

```java
import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

public class EchoClient {

    private final String host;
    private final int port;
    private final int firstMessageSize;

    public EchoClient(String host, int port, int firstMessageSize) {
        this.host = host;
        this.port = port;
        this.firstMessageSize = firstMessageSize;
    }

    public void run() throws Exception {
        // Configure the client: one group is enough, since there is no accept phase.
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            Bootstrap b = new Bootstrap();
            b.group(group)
             .channel(NioSocketChannel.class)
             .option(ChannelOption.TCP_NODELAY, true) // disable Nagle's algorithm
             .handler(new ChannelInitializer<SocketChannel>() {
                 @Override
                 public void initChannel(SocketChannel ch) throws Exception {
                     ch.pipeline().addLast(
                             //new LoggingHandler(LogLevel.INFO),
                             new EchoClientHandler(firstMessageSize));
                 }
             });

            // Start the client.
            ChannelFuture f = b.connect(host, port).sync();

            // Wait until the connection is closed.
            f.channel().closeFuture().sync();
        } finally {
            // Shut down the event loop to terminate all threads.
            group.shutdownGracefully();
        }
    }

    public static void main(String[] args) throws Exception {
        // Host and port are required; without this check args[0] would throw.
        if (args.length < 2) {
            System.err.println("Usage: EchoClient <host> <port> [firstMessageSize]");
            return;
        }
        final String host = args[0];
        final int port = Integer.parseInt(args[1]);
        final int firstMessageSize = args.length >= 3 ? Integer.parseInt(args[2]) : 256;
        new EchoClient(host, port, firstMessageSize).run();
    }
}
```

Implementation of EchoClientHandler

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

import java.util.logging.Level;
import java.util.logging.Logger;

public class EchoClientHandler extends ChannelInboundHandlerAdapter {

    private static final Logger logger = Logger.getLogger(
            EchoClientHandler.class.getName());

    private final ByteBuf firstMessage;

    /**
     * Creates a client-side handler that sends a firstMessageSize-byte message on connect.
     */
    public EchoClientHandler(int firstMessageSize) {
        if (firstMessageSize <= 0) {
            throw new IllegalArgumentException("firstMessageSize: " + firstMessageSize);
        }
        firstMessage = Unpooled.buffer(firstMessageSize);
        for (int i = 0; i < firstMessage.capacity(); i++) {
            firstMessage.writeByte((byte) i);
        }
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) {
        // Send the first message as soon as the connection is established.
        ctx.writeAndFlush(firstMessage);
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        // Send back whatever the server echoed, so the data keeps bouncing between both sides.
        ctx.write(msg);
    }

    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
        ctx.flush();
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        // Close the connection when an exception is raised.
        logger.log(Level.WARNING, "Unexpected exception from downstream.", cause);
        ctx.close();
    }
}
```