Preface

These notes are based on Black Horse's Netty teaching handout plus some of my own understanding. Of the video courses I have watched, I think this one is very good: there is basically no filler. Video address: Black Horse Netty

Sticky packets and half packets

1. Sticky packet phenomenon

Sticky packets were already introduced in the earlier NIO notes, so I won't repeat the concept here. Let's go straight to a test: the client below sends 10 messages of 16 bytes each.

//Server

@Slf4j

public class HelloWorldServer {

public static void main(String[] args) {

start();

}

static void start(){

NioEventLoopGroup boss = new NioEventLoopGroup();

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

ServerBootstrap serverBootstrap = new ServerBootstrap();

serverBootstrap.channel(NioServerSocketChannel.class).group(boss, worker)

.childHandler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

//Log processor

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

}

});

ChannelFuture channelFuture = serverBootstrap.bind(8080).sync();

channelFuture.channel().closeFuture().sync();

} catch (Exception e) {

log.error("server error", e);

e.printStackTrace();

} finally {

//Close gracefully

boss.shutdownGracefully();

worker.shutdownGracefully();

}

}

}

//client

public class HelloWorldClient {

static final Logger log = LoggerFactory.getLogger(HelloWorldClient.class);

public static void main(String[] args) {

NioEventLoopGroup worker = new NioEventLoopGroup();

try{

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class).group(worker);

bootstrap.handler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

ch.pipeline().addLast(new ChannelInboundHandlerAdapter(){

//After connecting to the channel, the channelActive event will be triggered

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

for (int i = 0; i < 10; i++) {

//Allocate one 16-byte buffer per iteration, 10 in total

ByteBuf buf = ctx.alloc().buffer(16);

buf.writeBytes(new byte[]{0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,});

ctx.writeAndFlush(buf);

}

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect(new InetSocketAddress("localhost", 8080)).sync();

channelFuture.channel().closeFuture().sync();

}catch (Exception e){

log.error("client error", e);

}finally {

//Close gracefully

worker.shutdownGracefully();

}

}

}

//result

DEBUG [nioEventLoopGroup-3-1] (12:53:38,710) (AbstractInternalLogger.java:145) - [id: 0x14bde79c, L:/127.0.0.1:8080 - R:/127.0.0.1:61860] READ: 160B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000010| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000020| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000030| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000040| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000050| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000060| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000070| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000080| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000090| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

+--------+-------------------------------------------------+----------------+

DEBUG [nioEventLoopGroup-3-1] (12:53:38,710) (DefaultChannelPipeline.java:1182) - Discarded inbound message PooledUnsafeDirectByteBuf(ridx: 0, widx: 160, cap: 1024) that reached at the tail of the pipeline. Please check your pipeline configuration.

DEBUG [nioEventLoopGroup-3-1] (12:53:38,712) (DefaultChannelPipeline.java:1198) - Discarded message pipeline : [LoggingHandler#0, DefaultChannelPipeline$TailContext#0]. Channel : [id: 0x14bde79c, L:/127.0.0.1:8080 - R:/127.0.0.1:61860].

DEBUG [nioEventLoopGroup-3-1] (12:53:38,712) (AbstractInternalLogger.java:145) - [id: 0x14bde79c, L:/127.0.0.1:8080 - R:/127.0.0.1:61860] READ COMPLETE

READ: 160B appears in the result, which means 160 bytes were read in a single read. Instead of receiving 16 bytes at a time in 10 separate reads as expected, the server received all 160 bytes at once: the packets stuck together.

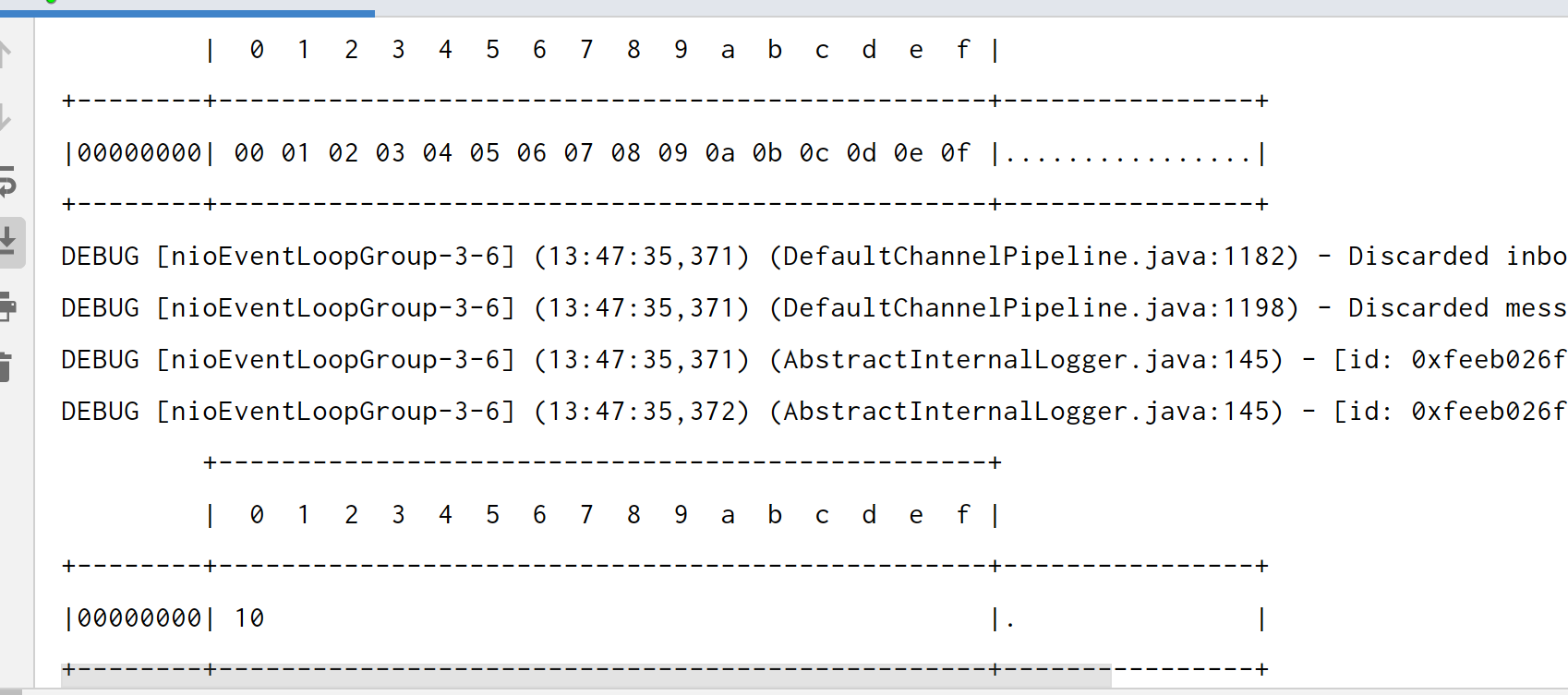

2. Half packet phenomenon

Calling serverBootstrap.option(ChannelOption.SO_RCVBUF, 10) makes the server's receive buffer smaller.

result:

DEBUG [nioEventLoopGroup-3-1] (13:02:59,781) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ: 36B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000010| 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f |................|

|00000020| 00 01 02 03 |.... |

+--------+-------------------------------------------------+----------------+

DEBUG [nioEventLoopGroup-3-1] (13:02:59,781) (DefaultChannelPipeline.java:1182) - Discarded inbound message PooledUnsafeDirectByteBuf(ridx: 0, widx: 36, cap: 1024) that reached at the tail of the pipeline. Please check your pipeline configuration.

DEBUG [nioEventLoopGroup-3-1] (13:02:59,782) (DefaultChannelPipeline.java:1198) - Discarded message pipeline : [LoggingHandler#0, DefaultChannelPipeline$TailContext#0]. Channel : [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092].

DEBUG [nioEventLoopGroup-3-1] (13:02:59,782) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ COMPLETE

DEBUG [nioEventLoopGroup-3-1] (13:02:59,783) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ: 50B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 |................|

|00000010| 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 |................|

|00000020| 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 |................|

|00000030| 04 05 |.. |

+--------+-------------------------------------------------+----------------+

DEBUG [nioEventLoopGroup-3-1] (13:02:59,783) (DefaultChannelPipeline.java:1182) - Discarded inbound message PooledUnsafeDirectByteBuf(ridx: 0, widx: 50, cap: 1024) that reached at the tail of the pipeline. Please check your pipeline configuration.

DEBUG [nioEventLoopGroup-3-1] (13:02:59,783) (DefaultChannelPipeline.java:1198) - Discarded message pipeline : [LoggingHandler#0, DefaultChannelPipeline$TailContext#0]. Channel : [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092].

DEBUG [nioEventLoopGroup-3-1] (13:02:59,783) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ COMPLETE

DEBUG [nioEventLoopGroup-3-1] (13:02:59,783) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ: 50B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 06 07 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 04 05 |................|

|00000010| 06 07 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 04 05 |................|

|00000020| 06 07 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 04 05 |................|

|00000030| 06 07 |.. |

+--------+-------------------------------------------------+----------------+

DEBUG [nioEventLoopGroup-3-1] (13:02:59,784) (DefaultChannelPipeline.java:1182) - Discarded inbound message PooledUnsafeDirectByteBuf(ridx: 0, widx: 50, cap: 512) that reached at the tail of the pipeline. Please check your pipeline configuration.

DEBUG [nioEventLoopGroup-3-1] (13:02:59,784) (DefaultChannelPipeline.java:1198) - Discarded message pipeline : [LoggingHandler#0, DefaultChannelPipeline$TailContext#0]. Channel : [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092].

DEBUG [nioEventLoopGroup-3-1] (13:02:59,784) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ COMPLETE

DEBUG [nioEventLoopGroup-3-1] (13:02:59,784) (AbstractInternalLogger.java:145) - [id: 0x13b94382, L:/127.0.0.1:8080 - R:/127.0.0.1:62092] READ: 24B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 08 09 0a 0b 0c 0d 0e 0f 00 01 02 03 04 05 06 07 |................|

|00000010| 08 09 0a 0b 0c 0d 0e 0f |........ |

+--------+-------------------------------------------------+----------------+

The 160 bytes that previously arrived in one read are now split into four reads: 36 - 50 - 50 - 24, and the reads cut through the 16-byte messages. This is the half packet phenomenon. serverBootstrap.option(ChannelOption.SO_RCVBUF, 10) affects the size of the underlying receive buffer (i.e. the sliding window); it only determines the minimum unit that Netty reads, and in practice Netty usually reads an integer multiple of it each time. In fact, both half packets and sticky packets are bound to occur whenever data is processed over TCP.
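To make the two phenomena easier to reproduce without a network, here is a minimal sketch in plain Java. It is only a toy model: an in-memory stream stands in for the TCP connection, and the class name StreamReadDemo and the method readSizes are made up for this illustration. It is not how Netty or the kernel actually schedules reads, so the sizes it prints will not match the 36 - 50 - 50 - 24 split above; it only shows that the size of each read is decided by the reader's buffer, not by the sender's message boundaries.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Toy model only: an in-memory byte stream stands in for the TCP connection.
class StreamReadDemo {

    // Writes messageCount messages of messageSize bytes into one stream,
    // then drains it with reads of at most readBufferSize bytes.
    // Returns the number of bytes delivered by each read.
    static List<Integer> readSizes(int messageCount, int messageSize, int readBufferSize)
            throws IOException {
        ByteArrayOutputStream wire = new ByteArrayOutputStream();
        for (int i = 0; i < messageCount; i++) {
            byte[] message = new byte[messageSize];
            for (int j = 0; j < messageSize; j++) {
                message[j] = (byte) j;
            }
            wire.write(message); // the stream keeps no record of where a message ends
        }

        ByteArrayInputStream in = new ByteArrayInputStream(wire.toByteArray());
        byte[] readBuffer = new byte[readBufferSize];
        List<Integer> sizes = new ArrayList<>();
        int n;
        while ((n = in.read(readBuffer)) != -1) {
            sizes.add(n);
        }
        return sizes;
    }

    public static void main(String[] args) throws IOException {
        // Large read buffer: all 10 messages come back in one read (sticky packets).
        System.out.println(readSizes(10, 16, 1024)); // [160]
        // Small read buffer: reads cut through the 16-byte messages (half packets).
        System.out.println(readSizes(10, 16, 50));   // [50, 50, 50, 10]
    }
}
```

In both runs the receiver gets the same 160 bytes; what changes is only where the reads happen to cut the stream.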

3. Phenomenon analysis (watch video)

Sticky packet: messages are merged

- Phenomenon: send abc and def, receive abcdef

- Reasons:

- Application layer: the receiver's ByteBuf is set too large (Netty's default is 1024), so the lower layer hands us several messages merged together

- Sliding window: suppose 256 bytes from the sender represent one complete message. If the receiver does not process it in time and the window is large enough, those 256 bytes are buffered in the receiver's sliding window. When several messages are buffered in the window, they stick together

- Nagle's algorithm: it combines small writes so that each segment carries as much data as possible, which causes sticky packets (TCP level, computer network knowledge)

Half packet: a message is split

- Phenomenon: send abcdef, receive abc and def

- Reasons:

- Application layer: the receiver's ByteBuf is smaller than the amount of data actually sent, so one message has to be read in several pieces

- Sliding window: suppose the receiver's window has only 128 bytes left and the sender's message is 256 bytes. The message does not fit, so the sender can only send the first 128 bytes and has to wait for the ack before sending the rest, which results in a half packet

- MSS limit: when the data to send exceeds the MSS, it is split into several segments, which results in half packets (transport layer, computer network knowledge)

The essence is that TCP is a stream-oriented protocol: messages have no boundaries, so we must find the boundaries ourselves

- TCP works in units of segments, and every segment sent has to be acknowledged (ack). If the sender waited for the ack of each segment before sending the next one, the longer the round-trip time of a packet, the worse the performance would be

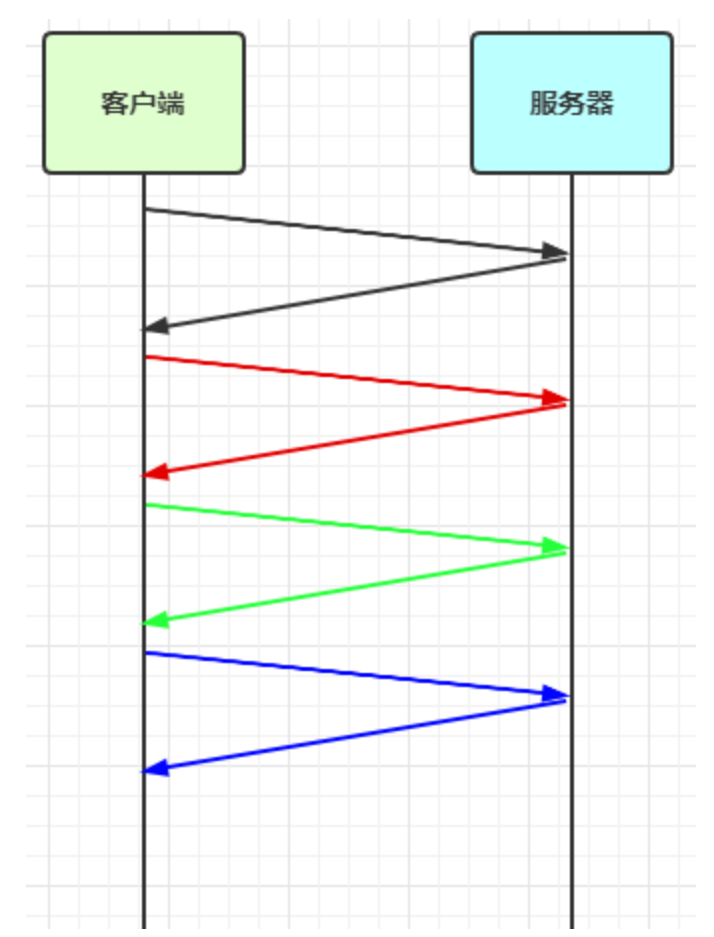

- As can be seen from the following figure, every time the client sends a request, it has to wait for the server's response before it can continue. The disadvantage is poor performance: if one request carries a lot of data to process, everything after it is held up

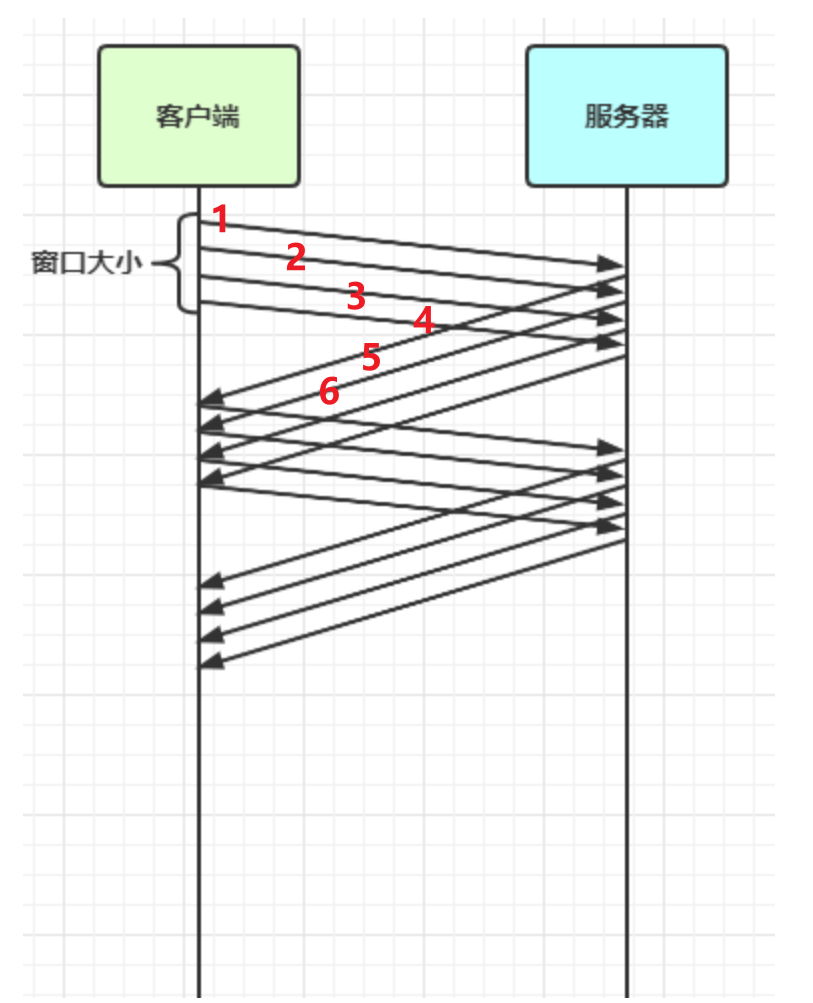

- To solve this problem, TCP uses a sliding window

- Sliding window: a window of a certain size is defined at the beginning. The window size determines how much data can be sent immediately without waiting for the server's response

- For example, suppose the window size is 4, so the window initially covers segments 1, 2, 3 and 4. Once the response for segment 1 comes back, the window slides forward by one and covers 2, 3, 4 and 5, so segment 5 can now be sent

- The window actually acts as a buffer and also provides flow control

- The window cannot slide forward until the response for the data at its start has come back

- The receiver also maintains a window. Only data that falls inside the window can be received
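The sliding behaviour in the list above can be sketched with a few lines of plain Java. This is a deliberately simplified model that I made up for illustration (the class SlidingWindow and its methods are hypothetical): it numbers whole segments instead of bytes, uses a fixed window size and ignores retransmission, so it only shows the "window 1-4 becomes 2-5 after segment 1 is acknowledged" step.

```java
// Toy model of a sender-side sliding window over numbered segments.
// The class and method names are invented for this illustration.
class SlidingWindow {
    private int base;       // oldest segment that has not been acknowledged yet
    private final int size; // how many segments may be in flight at once

    SlidingWindow(int firstSegment, int size) {
        this.base = firstSegment;
        this.size = size;
    }

    // A segment may be sent without waiting only if it falls inside the window.
    boolean canSend(int segment) {
        return segment >= base && segment < base + size;
    }

    // Cumulative acknowledgement: everything up to and including 'segment'
    // has arrived, so the window slides forward past it.
    void ack(int segment) {
        if (segment >= base) {
            base = segment + 1;
        }
    }

    int first() {
        return base;
    }

    int last() {
        return base + size - 1;
    }

    public static void main(String[] args) {
        SlidingWindow window = new SlidingWindow(1, 4);
        System.out.println(window.first() + ".." + window.last()
                + ", can send 5? " + window.canSend(5)); // 1..4, can send 5? false
        window.ack(1); // the response for segment 1 comes back
        System.out.println(window.first() + ".." + window.last()
                + ", can send 5? " + window.canSend(5)); // 2..5, can send 5? true
    }
}
```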

4. Sticky packet solution - short connection

A short connection can only solve sticky packets, not half packets. The idea is that the client sends one message per connection: after the data has been written, it calls ctx.channel().close() to disconnect. When the server sees the connection close, it knows the message is complete and reads out whatever is in the buffer. So as long as the receive buffer is large enough, this approach solves the sticky packet problem.

- One connection is established per message, so the span from connection establishment to disconnection is the message boundary. The disadvantage is that efficiency is very low. We only need to modify the client and add ctx.channel().close();

private static void send(){

NioEventLoopGroup worker = new NioEventLoopGroup();

try{

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class).group(worker);

bootstrap.handler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

ch.pipeline().addLast(new ChannelInboundHandlerAdapter(){

//After connecting to the channel, the channelActive event will be triggered

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

//Send a single message on this connection; call send() once per message (e.g. 10 times in a loop)

ByteBuf buf = ctx.alloc().buffer(16);

buf.writeBytes(new byte[]{0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,});

ctx.writeAndFlush(buf);

//Close after sending: the disconnect marks the message boundary

ctx.channel().close();

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect(new InetSocketAddress("localhost", 8080)).sync();

channelFuture.channel().closeFuture().sync();

}catch (Exception e){

log.error("client error", e);

}finally {

//Close gracefully

worker.shutdownGracefully();

}

}

- For the server, set serverBootstrap.option(ChannelOption.SO_RCVBUF, 10), because this line of code sets the size of the system receive buffer (sliding window)

We test the half packet: adjust the receiver buffer of netty to 16, and the client sends 17 bytes at a time

//Adjust Netty's receive buffer

//AdaptiveRecvByteBufAllocator: parameter 1: minimum capacity, parameter 2: initial capacity, parameter 3: maximum capacity

serverBootstrap.childOption(ChannelOption.RCVBUF_ALLOCATOR, new AdaptiveRecvByteBufAllocator(16, 16, 16));

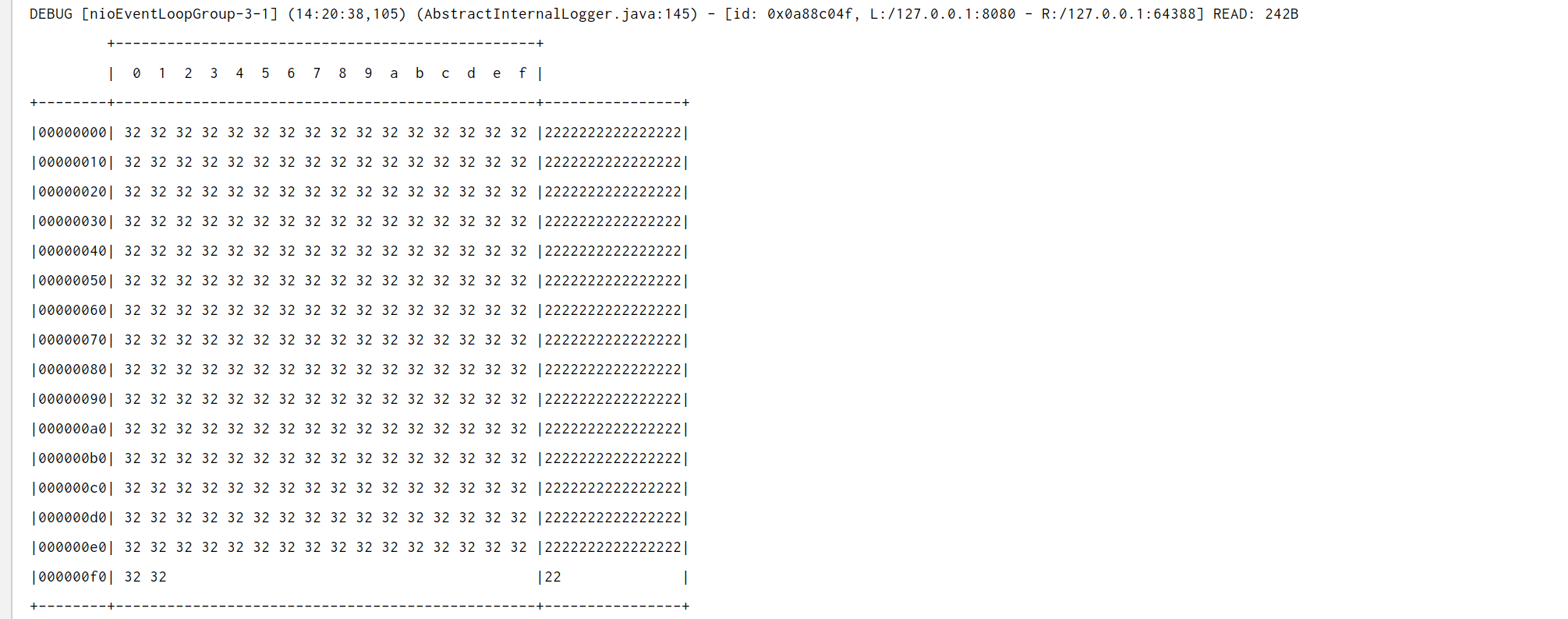

Phenomenon:

5. Half packet solution - fixed length decoder

The length of every packet is fixed (say 8 bytes), and a fixed-length decoder is added on the server

- Note that this decoder must be placed before the other handlers, such as LoggingHandler, because messages can only be processed after they have been decoded

- FixedLengthFrameDecoder takes the frame length as its argument, so we have to work out the maximum length of any message that may be sent ourselves, to make sure every message fits into one frame

- This is similar to a CPU using a fixed-length cycle, where the longest cycle is used as the length of all cycles

- For example, there are three messages to send: 111, 2222 and 33333. We set the frame length to 5, then pad 111 to 111-- and 2222 to 2222-. 111--, 2222- and 33333 are sent to the server together. The server decodes in units of 5 bytes and treats every 5 bytes as one message, which solves the half packet problem. Although the messages stick together when the client sends them, this does not affect how the server receives them

ch.pipeline().addLast(new FixedLengthFrameDecoder(8));

In other words, the length really is fixed. For example, if the fixed packet length is 10, then however the client sends the data, the server only considers a message complete once it has received 10 bytes.
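To show what fixed-length framing does with the 111 / 2222 / 33333 example, here is a rough sketch in plain Java. It is not Netty's FixedLengthFrameDecoder; the class FixedLengthFramer and its pad and feed methods are names I made up, and using '-' as the padding character is just the convention from the example above.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of fixed-length framing; not Netty's implementation.
class FixedLengthFramer {
    private final int frameLength;
    // Bytes received so far that do not yet make up a complete frame.
    private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

    FixedLengthFramer(int frameLength) {
        this.frameLength = frameLength;
    }

    // Sender side: pad a short message with '-' up to the fixed length.
    static String pad(String message, int frameLength) {
        if (message.length() > frameLength) {
            throw new IllegalArgumentException("message longer than frame length");
        }
        StringBuilder sb = new StringBuilder(message);
        while (sb.length() < frameLength) {
            sb.append('-');
        }
        return sb.toString();
    }

    // Receiver side: accept whatever one read delivered and return every
    // complete frame; leftover bytes wait for the next read.
    List<String> feed(byte[] chunk) {
        pending.write(chunk, 0, chunk.length);
        byte[] all = pending.toByteArray();
        List<String> frames = new ArrayList<>();
        int offset = 0;
        while (all.length - offset >= frameLength) {
            frames.add(new String(all, offset, frameLength, StandardCharsets.US_ASCII));
            offset += frameLength;
        }
        pending.reset();
        pending.write(all, offset, all.length - offset);
        return frames;
    }

    public static void main(String[] args) {
        String stream = pad("111", 5) + pad("2222", 5) + pad("33333", 5);
        FixedLengthFramer framer = new FixedLengthFramer(5);
        // The 15 bytes arrive in two arbitrary pieces: 7 bytes, then 8 bytes.
        System.out.println(framer.feed(stream.substring(0, 7).getBytes(StandardCharsets.US_ASCII)));
        System.out.println(framer.feed(stream.substring(7).getBytes(StandardCharsets.US_ASCII)));
    }
}
```

The first call returns only 111--, because the trailing 22 is half a frame; the second call completes 2222- and then returns 33333 as well. The receiver still has to trim the padding itself.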

public class HelloWorldClient {

static final Logger log = LoggerFactory.getLogger(HelloWorldClient.class);

public static void main(String[] args) {

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class);

bootstrap.group(worker);

bootstrap.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

log.debug("connected...");

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

log.debug("sending...");

// Send packets with random contents

Random r = new Random();

char c = 'a';

ByteBuf buffer = ctx.alloc().buffer();

for (int i = 0; i < 10; i++) {

byte[] bytes = new byte[8];

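//Pick the random length once; calling r.nextInt(8) in the loop condition would re-roll it on every iteration

int len = r.nextInt(8);

for (int j = 0; j < len; j++) {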

bytes[j] = (byte) c;

}

c++;

buffer.writeBytes(bytes);

}

ctx.writeAndFlush(buffer);

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect("192.168.0.103", 9090).sync();

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("client error", e);

} finally {

worker.shutdownGracefully();

}

}

}

client:

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 61 61 61 61 00 00 00 00 62 00 00 00 00 00 00 00 |aaaa....b.......|

|00000010| 63 63 00 00 00 00 00 00 64 00 00 00 00 00 00 00 |cc......d.......|

|00000020| 00 00 00 00 00 00 00 00 66 66 66 66 00 00 00 00 |........ffff....|

|00000030| 67 67 67 00 00 00 00 00 68 00 00 00 00 00 00 00 |ggg.....h.......|

|00000040| 69 69 69 69 69 00 00 00 6a 6a 6a 6a 00 00 00 00 |iiiii...jjjj....|

+--------+-------------------------------------------------+----------------+

Server:

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 61 61 61 61 00 00 00 00 |aaaa.... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 62 00 00 00 00 00 00 00 |b....... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 63 63 00 00 00 00 00 00 |cc...... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 64 00 00 00 00 00 00 00 |d....... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 00 00 00 00 00 00 00 00 |........ |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 66 66 66 66 00 00 00 00 |ffff.... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 67 67 67 00 00 00 00 00 |ggg..... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 68 00 00 00 00 00 00 00 |h....... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 69 69 69 69 69 00 00 00 |iiiii... |

+--------+-------------------------------------------------+----------------+

12:07:00 [DEBUG] [nioEventLoopGroup-3-1] i.n.h.l.LoggingHandler - [id: 0xd739f137, L:/192.168.0.103:9090 - R:/192.168.0.103:53155] READ: 8B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 6a 6a 6a 6a 00 00 00 00 |jjjj.... |

+--------+-------------------------------------------------+----------------+

Disadvantages: it is hard to choose the packet size

- If the length is too large, space is wasted

- If the length is too small, some messages do not fit

- We have to work out the maximum length across all messages ourselves

6. Sticky and half packet solution - line decoder

The decoder is added on the server and uses \n or \r\n as the separator by default. We have to specify a maximum length when constructing it, to guard against messages that never contain a newline: if no separator appears within the specified length, an exception is thrown.

//Set the maximum length to 1024 and use the newline character as the end of the message

ch.pipeline().addLast(new LineBasedFrameDecoder(1024));

Server: the maximum length is set to 1024; if a message exceeds 1024 bytes without a newline character, an exception is thrown

@Slf4j

public class HelloWorldServer {

public static void main(String[] args) {

start();

}

static void start(){

NioEventLoopGroup boss = new NioEventLoopGroup();

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

ServerBootstrap serverBootstrap = new ServerBootstrap();

serverBootstrap.channel(NioServerSocketChannel.class).group(boss, worker)

//We set the buffer (sliding window) received by the system to be smaller

//serverBootstrap.option(ChannelOption.SO_RCVBUF, 10)

//We don't have to deliberately adjust the sliding window size. After the connection is established, the server and client will automatically help us set it according to the throughput

//Adjust the receiver buffer of netty

.childOption(ChannelOption.RCVBUF_ALLOCATOR, new AdaptiveRecvByteBufAllocator(16, 16, 16))

.childHandler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

//Line decoder first, then the log handler, so that the log shows one decoded line per read

ch.pipeline().addLast(new LineBasedFrameDecoder(1024));

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

}

});

ChannelFuture channelFuture = serverBootstrap.bind(8080).sync();

channelFuture.channel().closeFuture().sync();

} catch (Exception e) {

log.error("server error", e);

e.printStackTrace();

} finally {

//Close gracefully

boss.shutdownGracefully();

worker.shutdownGracefully();

}

}

}

Client: send 10 messages of random length, each ending with \n

public class HelloWorldClient {

static final Logger log = LoggerFactory.getLogger(HelloWorldClient.class);

public static StringBuilder makeString(char c, int len){

StringBuilder sb = new StringBuilder(len + 2);

for (int i = 0; i < len; i++) {

sb.append(c);

}

sb.append("\n");

return sb;

}

public static void main(String[] args) {

NioEventLoopGroup worker = new NioEventLoopGroup();

try {

Bootstrap bootstrap = new Bootstrap();

bootstrap.channel(NioSocketChannel.class);

bootstrap.group(worker);

bootstrap.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

log.debug("connected...");

ch.pipeline().addLast(new LoggingHandler(LogLevel.DEBUG));

ch.pipeline().addLast(new ChannelInboundHandlerAdapter() {

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

ByteBuf buf = ctx.alloc().buffer();

char c = '0';

Random r = new Random();

for (int i = 0; i < 10; i++) {

//Ends with a newline character

StringBuilder stringBuilder = makeString(c, r.nextInt(256) + 1);

c++;

buf.writeBytes(stringBuilder.toString().getBytes());

}

ctx.writeAndFlush(buf);

}

});

}

});

ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 8080).sync();

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

log.error("client error", e);

} finally {

worker.shutdownGracefully();

}

}

}

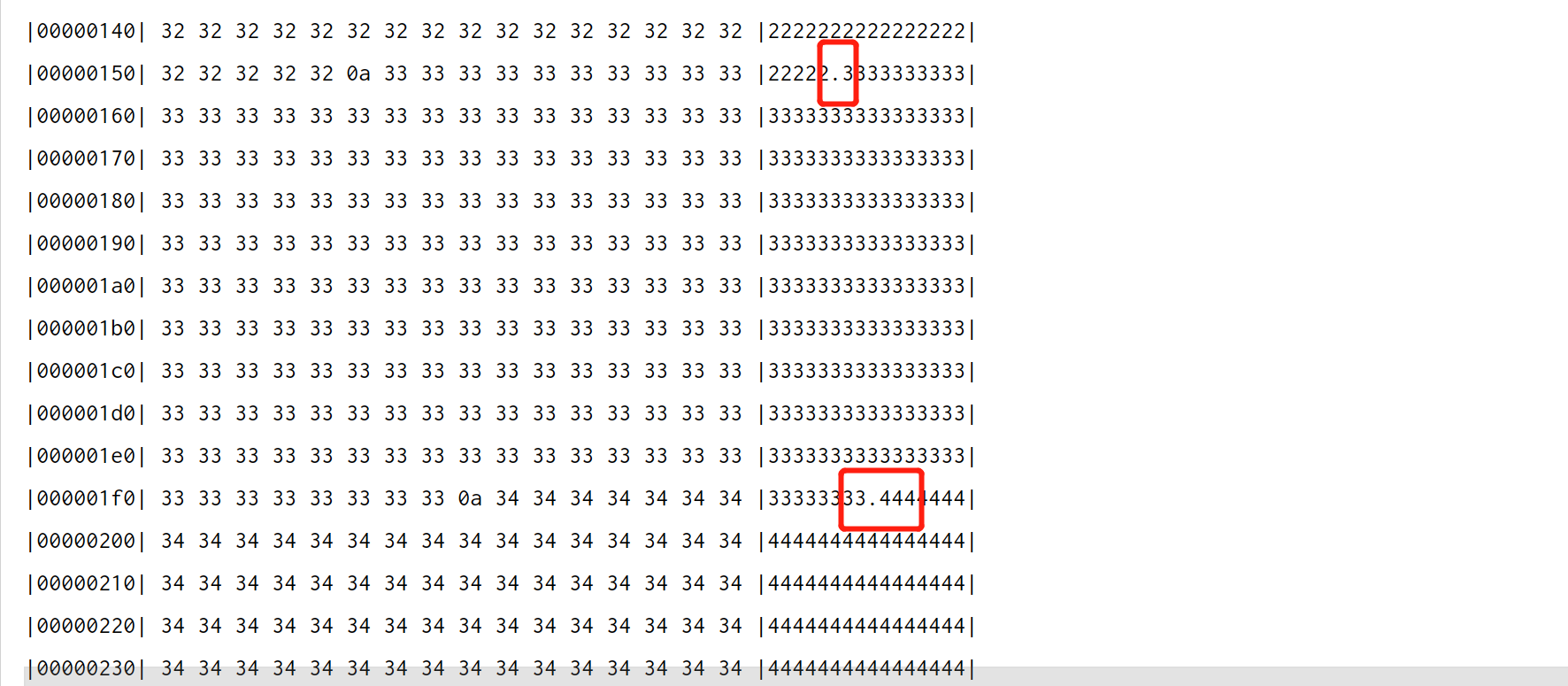

Result: client output; a dot indicates a line feed

Received by the server: split at \n

Disadvantages:

- It is appropriate to handle character data, but if the content itself contains separators (as is often the case with byte data), there will be parsing errors

- It is inefficient because it looks for line breaks one by one according to the transmitted bytes
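As a rough illustration of the line-based approach and of the byte-by-byte scan behind the second disadvantage, here is a plain-Java sketch. It is not Netty's LineBasedFrameDecoder: the class LineFramer is a made-up name, and throwing IllegalStateException when the limit is exceeded is my own simplification (Netty reports this case with its own exception type).

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of line-based framing; not Netty's implementation.
class LineFramer {
    private final int maxLength;
    // Bytes of the current, still unfinished line.
    private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

    LineFramer(int maxLength) {
        this.maxLength = maxLength;
    }

    // Accepts the bytes of one read and returns every complete line,
    // without the trailing \n or \r\n. An unfinished line stays buffered.
    List<String> feed(byte[] chunk) {
        List<String> lines = new ArrayList<>();
        for (byte b : chunk) { // every byte has to be inspected
            if (b == '\n') {
                byte[] line = pending.toByteArray();
                int len = line.length;
                if (len > 0 && line[len - 1] == '\r') {
                    len--; // treat \r\n like \n
                }
                lines.add(new String(line, 0, len, StandardCharsets.UTF_8));
                pending.reset();
            } else {
                pending.write(b);
                if (pending.size() > maxLength) {
                    pending.reset();
                    throw new IllegalStateException(
                            "no line separator within " + maxLength + " bytes");
                }
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        LineFramer framer = new LineFramer(1024);
        // Two reads that cut the second line in half.
        System.out.println(framer.feed("00\n1".getBytes(StandardCharsets.UTF_8)));
        System.out.println(framer.feed("11\r\n22\n".getBytes(StandardCharsets.UTF_8)));
    }
}
```

The first call returns only 00 and keeps the lone 1 buffered; the second call completes 111 and then returns 22. If the content itself could contain \n, this scheme would split it in the wrong place, which is the first disadvantage listed above.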

7. Sticky and half packet solution - LTC decoder (LengthFieldBasedFrameDecoder)

1. Concept

Before a message is sent, both sides agree to use a fixed number of bytes to carry the length of the data that follows

// Maximum frame length, length field offset, length field size in bytes, length adjustment, number of leading bytes to strip

ch.pipeline().addLast(new LengthFieldBasedFrameDecoder(1024, 0, 1, 0, 1));

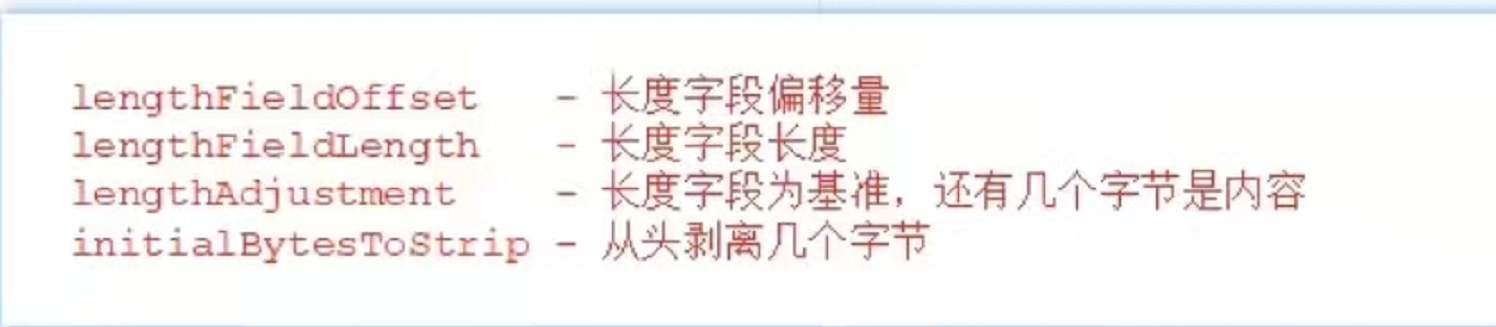

Parameters:

Let's look at the official examples in the source code and explain them:

-

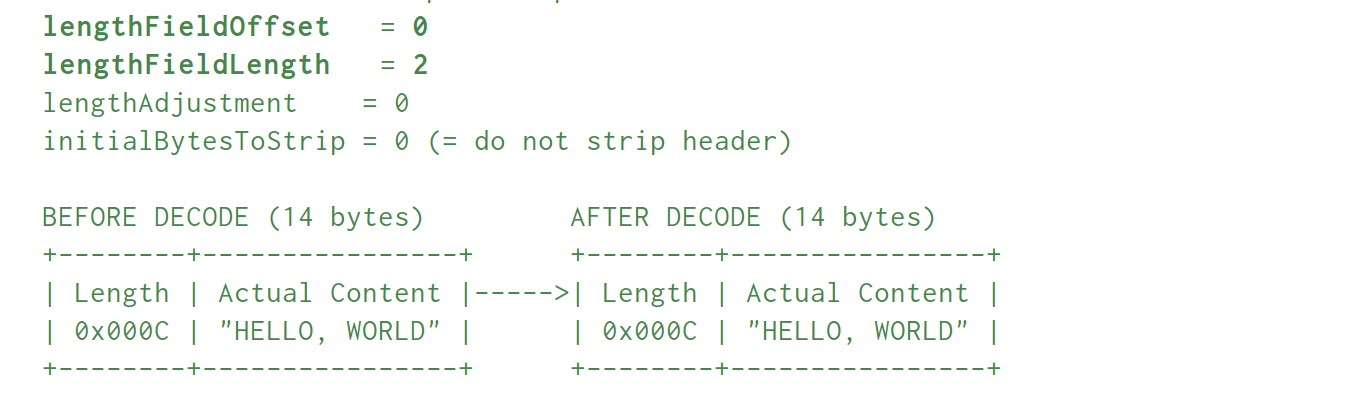

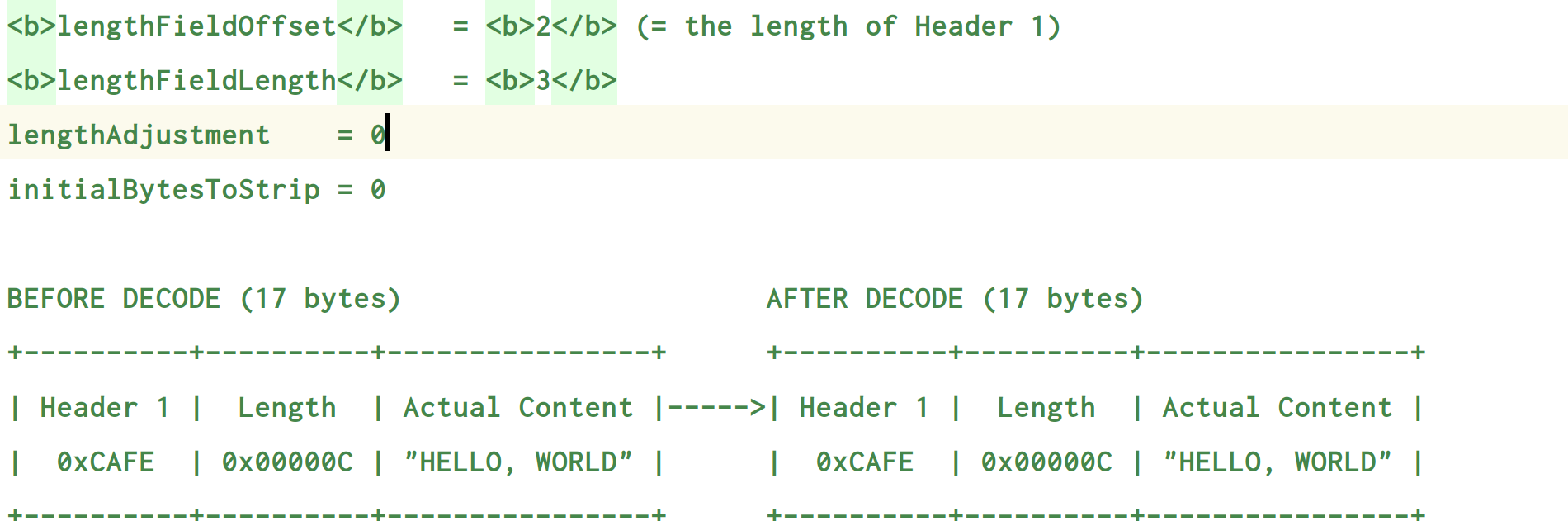

The length field starts at offset 0 and is 2 bytes long. The adjustment is 0, which means the content is everything after those 2 bytes and is exactly as long as the length field says. initialBytesToStrip = 0 means that 0 bytes are removed from the start of the result

-

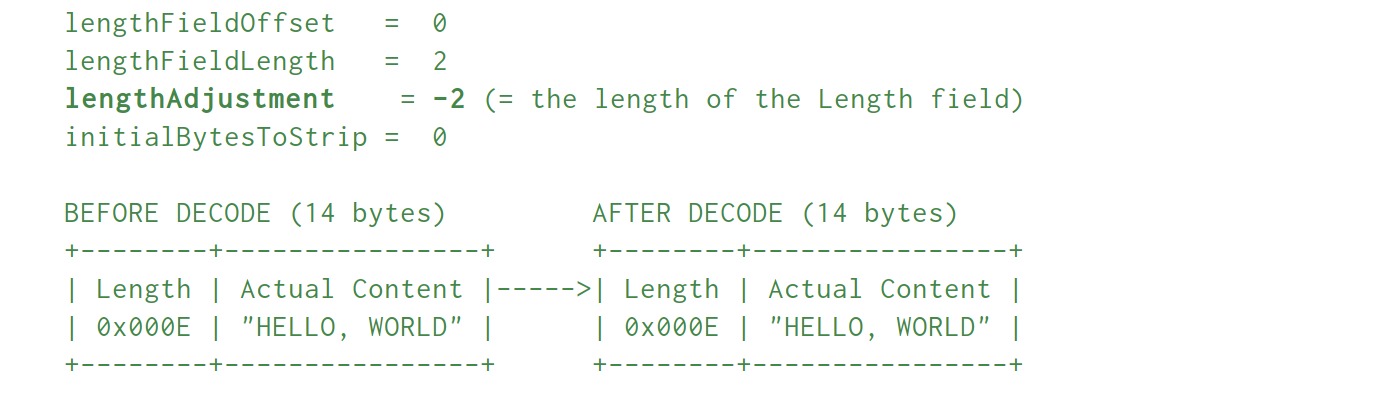

The length field starts at offset 0 and is 2 bytes long, and the adjustment is -2. initialBytesToStrip = 0 means that 0 bytes are removed from the start of the result. The adjustment is negative because sometimes the length field holds the length of the whole message. Here, for example, 0x0E means 14, which is the length of the whole message, not of the content. In that case the length is always 2 larger than the content length, so an adjustment of -2 corrects it.

-

The length field starts at offset 2 and occupies the next three bytes. The adjustment is 0, so the length is used unchanged, and nothing is stripped from the result

-

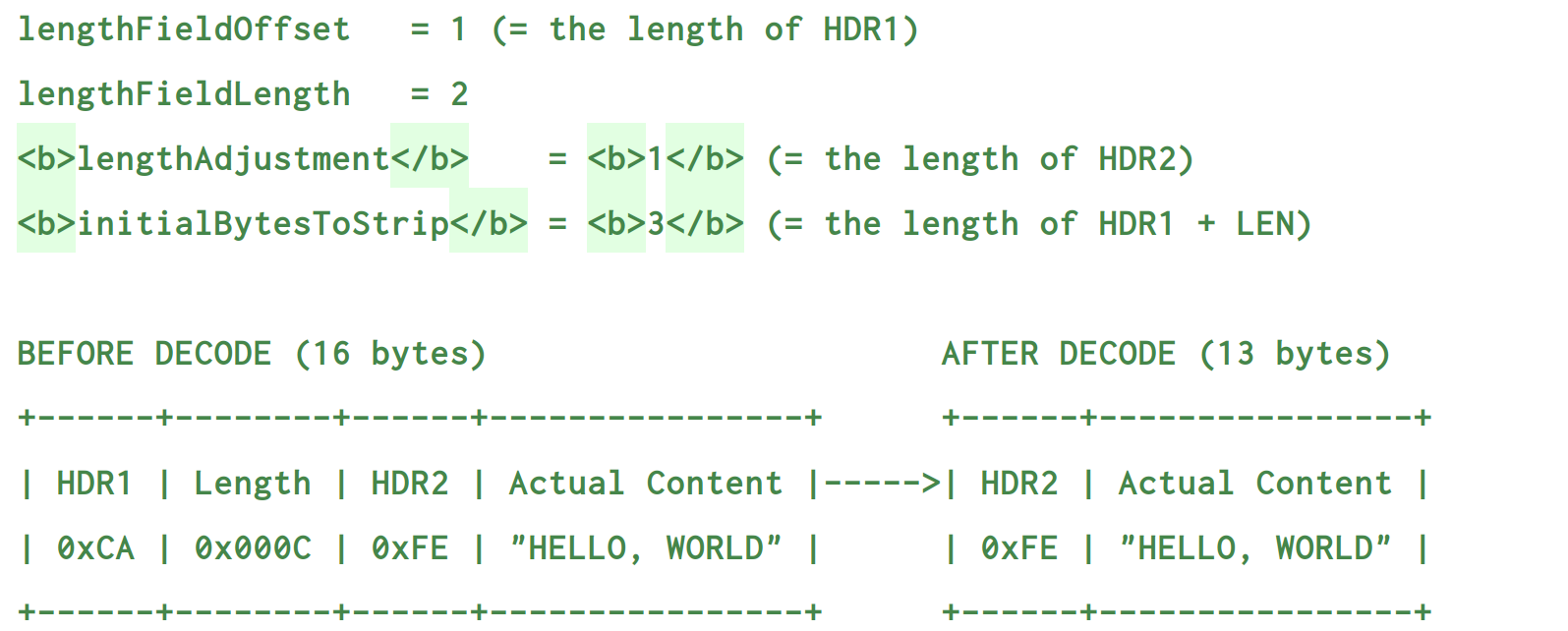

The length field starts at offset 1 and occupies the next two bytes. The positive adjustment of 1 accounts for the one extra byte (HDR2) that sits between the length field and the content. In the final result, the first three bytes (HDR1 and Length) are stripped, leaving 13 bytes: HDR2 and the content
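The four parameter combinations above can be checked with a small plain-Java sketch. It is written from the parameter descriptions in this section and is not Netty's code: the class LengthFieldDemo and its decode and message helpers are hypothetical names, the length field is assumed to be an unsigned big-endian number, and error cases such as a frame longer than the maximum are left out.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Sketch of how offset, length, adjustment and strip select a frame.
// Not Netty's implementation; names are invented for this illustration.
class LengthFieldDemo {

    // Returns the decoded frame at the start of 'in', or null if 'in'
    // does not hold a complete frame yet (half packet).
    static byte[] decode(byte[] in, int lengthFieldOffset, int lengthFieldLength,
                         int lengthAdjustment, int initialBytesToStrip) {
        int lengthFieldEnd = lengthFieldOffset + lengthFieldLength;
        if (in.length < lengthFieldEnd) {
            return null; // the length field itself is incomplete
        }
        // Read the length field as an unsigned big-endian number.
        int length = 0;
        for (int i = lengthFieldOffset; i < lengthFieldEnd; i++) {
            length = (length << 8) | (in[i] & 0xFF);
        }
        // Whole frame = bytes up to the end of the length field
        //             + value of the length field + adjustment.
        int frameLength = lengthFieldEnd + length + lengthAdjustment;
        if (in.length < frameLength) {
            return null; // wait for more bytes
        }
        return Arrays.copyOfRange(in, initialBytesToStrip, frameLength);
    }

    // Helper for the examples: the given header bytes followed by ASCII content.
    static byte[] message(String content, int... header) {
        byte[] body = content.getBytes(StandardCharsets.US_ASCII);
        byte[] out = new byte[header.length + body.length];
        for (int i = 0; i < header.length; i++) {
            out[i] = (byte) header[i];
        }
        System.arraycopy(body, 0, out, header.length, body.length);
        return out;
    }

    public static void main(String[] args) {
        String content = "HELLO, WORLD"; // 12 bytes
        byte[] a = decode(message(content, 0x00, 0x0C), 0, 2, 0, 0);
        byte[] b = decode(message(content, 0x00, 0x0E), 0, 2, -2, 0);
        byte[] c = decode(message(content, 0xCA, 0xFE, 0x00, 0x00, 0x0C), 2, 3, 0, 0);
        byte[] d = decode(message(content, 0xCA, 0x00, 0x0C, 0xFE), 1, 2, 1, 3);
        // Frame sizes for the four cases described above.
        System.out.println(a.length + " " + b.length + " " + c.length + " " + d.length); // 14 14 17 13
    }
}
```

In the last case the 13 remaining bytes start with the HDR2 byte (0xFE here), followed by the 12 bytes of content.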

2. Code

- When configuring LengthFieldBasedFrameDecoder, if the message carries something else besides the length and the content, such as a version number (as in the code below), the adjustment has to account for those extra bytes so that the content is read completely. In the example below, if the adjustment were not set to 1, the decoder would count the 1-byte version number as part of the content and still read only as many bytes as the length field says, so the last byte of the content would be cut off

public class TestLengthFieldDecoder {

public static void main(String[] args) {

EmbeddedChannel channel = new EmbeddedChannel(

//The length field is 4 bytes and starts at offset 0. The version number takes 1 byte after it, so the adjustment is set to 1 and the frame extends one byte further. The first 4 bytes (the length field) are stripped from the result

new LengthFieldBasedFrameDecoder(1024, 0, 4, 1, 4),

new LoggingHandler(LogLevel.DEBUG)

);

//Message layout: 4-byte length, 1-byte version number, actual content

ByteBuf buffer = ByteBufAllocator.DEFAULT.buffer();

send(buffer, "Hello world");

send(buffer, "Hi!");

channel.writeInbound(buffer);

/**

With initialBytesToStrip = 4 only the length field is stripped, so the version byte 01 stays at the front of each frame (strip 5 bytes to remove it as well). Frames are decoded in the order they were written.

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 01 48 65 6c 6c 6f 20 77 6f 72 6c 64 |.Hello world |

+--------+-------------------------------------------------+----------------+

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 01 48 69 21 |.Hi! |

+--------+-------------------------------------------------+----------------+

*/

}

private static void send(ByteBuf buffer, String content){

byte[] bytes = content.getBytes(); //Actual content

int length = bytes.length; //Actual content length

// Length - version number - content

buffer.writeInt(length); //Length field, written in big-endian byte order

buffer.writeByte(1); //Version number

buffer.writeBytes(bytes); //content

}

}
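For readers who want to try the length - version - content layout without Netty, here is a minimal sketch using java.nio.ByteBuffer. The class VersionedFrames and its methods are names I made up. Unlike the decoder configuration above, this hand-written loop skips both the length field and the version byte, so it returns only the content; that is a choice of this sketch, not something the original code does.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of the "length - version - content" layout used above.
class VersionedFrames {

    // Encodes one message: 4-byte big-endian length, 1-byte version, content.
    static byte[] encode(String content) {
        byte[] bytes = content.getBytes(StandardCharsets.UTF_8);
        ByteBuffer buf = ByteBuffer.allocate(4 + 1 + bytes.length); // big-endian by default
        buf.putInt(bytes.length); // length of the content only
        buf.put((byte) 1);        // version number
        buf.put(bytes);           // content
        return buf.array();
    }

    // Splits a buffer that holds several encoded messages back into their
    // contents. A trailing incomplete message is left out (half packet).
    static List<String> decodeAll(byte[] stream) {
        ByteBuffer buf = ByteBuffer.wrap(stream);
        List<String> contents = new ArrayList<>();
        while (buf.remaining() >= 5) { // length field + version byte
            buf.mark();
            int length = buf.getInt();
            buf.get(); // version byte, not used in this sketch
            if (buf.remaining() < length) {
                buf.reset(); // not all content bytes have arrived yet
                break;
            }
            byte[] content = new byte[length];
            buf.get(content);
            contents.add(new String(content, StandardCharsets.UTF_8));
        }
        return contents;
    }

    public static void main(String[] args) {
        byte[] first = encode("Hello world");
        byte[] second = encode("Hi!");
        // Both messages end up in one buffer, as with sticky packets.
        byte[] stream = ByteBuffer.allocate(first.length + second.length)
                .put(first).put(second).array();
        System.out.println(decodeAll(stream)); // [Hello world, Hi!]
    }
}
```

Because every message announces its own length, the receiver can cut the stream at the right places no matter how the bytes were grouped on the way.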

If there are any errors, please point them out!