First, a question: what is a Channel?

A Channel is the interface Netty uses to abstract network I/O reads and writes, similar to Channel in Java NIO.

So what are the main capabilities of a Channel?

- Reading and writing network I/O

- Initiating connections (client side)

- Actively closing connections

- Getting the local and remote addresses of the communication

Note:

Netty supports many protocols besides TCP. Connections with different protocols and blocking modes correspond to different Channel types.

Here are several common Channel types:

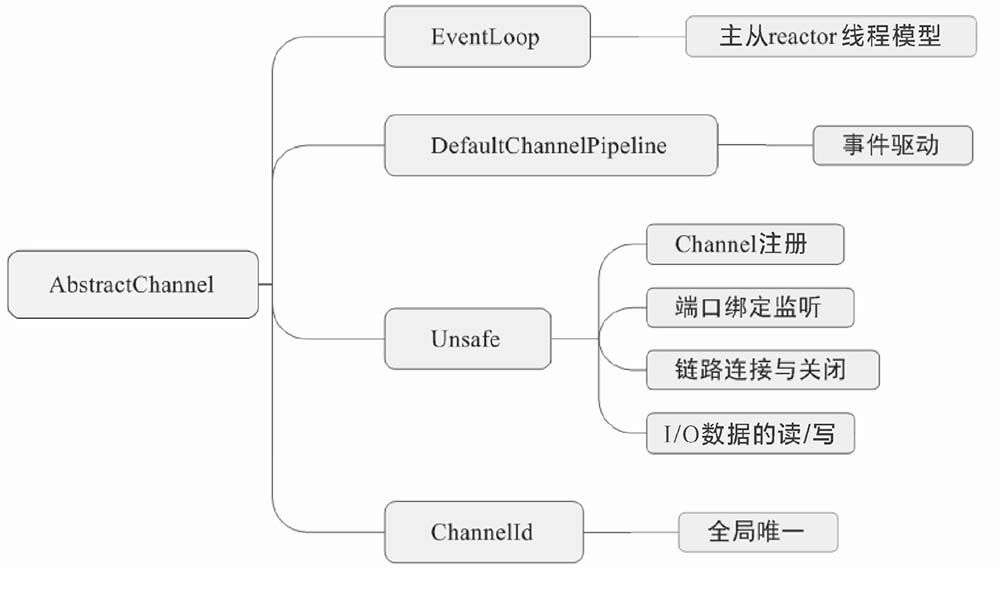

1. AbstractChannel

- First of all, it has several main attributes:

EventLoop: each Channel is registered with exactly one EventLoop, whose thread performs all of that Channel's I/O. (One EventLoop can serve many Channels.)

DefaultChannelPipeline: a container for handlers, which can also be understood as a handler chain. Handlers encode/decode data and carry out business logic.

Unsafe: performs the actual transport operations, such as network reads and writes, closing the link, and initiating connections. The name Unsafe means that it is not intended for use by application code, not that it is unsafe.
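To make the idea of a handler chain concrete, here is a minimal sketch in plain Java. It is not Netty's DefaultChannelPipeline: the names MiniPipeline, MiniContext and MiniHandler are hypothetical, and the sketch only shows how an inbound read event travels from one handler to the next until it reaches the end of the chain.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical handler: receives a message and may pass it on via the context.
interface MiniHandler {
    void channelRead(MiniContext ctx, Object msg);
}

// Hypothetical context: remembers a handler's position in the chain.
final class MiniContext {
    private final List<MiniHandler> handlers;
    private final int index;
    private final Consumer<Object> tail;

    MiniContext(List<MiniHandler> handlers, int index, Consumer<Object> tail) {
        this.handlers = handlers;
        this.index = index;
        this.tail = tail;
    }

    // Hands the message to the next handler, or to the tail at the end of the chain.
    void fireChannelRead(Object msg) {
        int next = index + 1;
        if (next < handlers.size()) {
            handlers.get(next).channelRead(new MiniContext(handlers, next, tail), msg);
        } else {
            tail.accept(msg);
        }
    }
}

// Hypothetical pipeline: an ordered list of handlers plus a tail consumer.
final class MiniPipeline {
    private final List<MiniHandler> handlers = new ArrayList<>();
    private final Consumer<Object> tail;

    MiniPipeline(Consumer<Object> tail) {
        this.tail = tail;
    }

    MiniPipeline addLast(MiniHandler handler) {
        handlers.add(handler);
        return this;
    }

    // Called by the "channel" when data arrives; starts at the first handler.
    void fireChannelRead(Object msg) {
        new MiniContext(handlers, -1, tail).fireChannelRead(msg);
    }
}
```

For example, a decoding handler followed by a business handler would be wired as `new MiniPipeline(sink).addLast(decoder).addLast(business)`. A handler that never calls `ctx.fireChannelRead(...)` stops the message at that point, which is how a handler can consume an event.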

Let's take a look at AbstractChannel's functional diagram:

Next let's look at the source code:

- First, look at its member fields:

```java
private final Channel parent;
private final ChannelId id;
// Performs the actual transport operations (network read/write, closing the link,
// initiating connections). Named "Unsafe" because it is not meant for application code.
private final Unsafe unsafe;
// The handler chain
private final DefaultChannelPipeline pipeline;
private final VoidChannelPromise unsafeVoidPromise = new VoidChannelPromise(this, false);
private final CloseFuture closeFuture = new CloseFuture(this);
private volatile SocketAddress localAddress;
private volatile SocketAddress remoteAddress;
// The EventLoop this channel is registered with; its thread runs all of this channel's I/O
private volatile EventLoop eventLoop;
private volatile boolean registered;
private boolean closeInitiated;
private Throwable initialCloseCause;
```

- AbstractUnsafe, an inner class of AbstractChannel, makes heavy use of the Template Method pattern: it defines the overall flow and leaves the transport-specific steps to subclasses. The bind() method is an example:

```java
@Override
public final void bind(final SocketAddress localAddress, final ChannelPromise promise) {
    assertEventLoop();

    if (!promise.setUncancellable() || !ensureOpen(promise)) {
        return;
    }

    // See: https://github.com/netty/netty/issues/576
    if (Boolean.TRUE.equals(config().getOption(ChannelOption.SO_BROADCAST)) &&
        localAddress instanceof InetSocketAddress &&
        !((InetSocketAddress) localAddress).getAddress().isAnyLocalAddress() &&
        !PlatformDependent.isWindows() && !PlatformDependent.maybeSuperUser()) {
        // Warn a user about the fact that a non-root user can't receive a
        // broadcast packet on *nix if the socket is bound on non-wildcard address.
        logger.warn(
                "A non-root user can't receive a broadcast packet if the socket " +
                "is not bound to a wildcard address; binding to a non-wildcard " +
                "address (" + localAddress + ") anyway as requested.");
    }

    boolean wasActive = isActive();
    try {
        // Template method: delegate to the subclass's doBind(),
        // e.g. NioServerSocketChannel.doBind()
        doBind(localAddress);
    } catch (Throwable t) {
        // Bind failed: fail the promise
        safeSetFailure(promise, t);
        closeIfClosed();
        return;
    }

    // Fire channelActive only on the transition from inactive to active
    if (!wasActive && isActive()) {
        invokeLater(new Runnable() {
            @Override
            public void run() {
                pipeline.fireChannelActive();
            }
        });
    }

    // Bind succeeded: notify the promise
    safeSetSuccess(promise);
}
```
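The shape of bind() (a final method that fixes the order of the steps and calls an abstract doBind() hook) can be shown without Netty. The following is a minimal sketch under stated assumptions: SketchChannel and SketchServerChannel are hypothetical names, a string stands in for the SocketAddress, a boolean return stands in for the promise, and a plain task list stands in for the EventLoop so that, as with invokeLater(), channelActive runs after the success notification.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical base class: bind() is final and fixes the order of the steps,
// while subclasses only supply the transport-specific doBind()/isActive().
abstract class SketchChannel {
    final List<String> events = new ArrayList<>();
    // Stands in for the EventLoop task queue used by invokeLater(...).
    private final List<Runnable> taskQueue = new ArrayList<>();

    final boolean bind(String localAddress) {
        boolean wasActive = isActive();
        try {
            doBind(localAddress); // the template-method hook
        } catch (Exception e) {
            events.add("bind failed: " + e.getMessage()); // ~ safeSetFailure(promise, t)
            return false;
        }
        // Fire "channelActive" only on the inactive -> active transition, deferred.
        if (!wasActive && isActive()) {
            taskQueue.add(() -> events.add("channelActive")); // ~ invokeLater(...)
        }
        events.add("bind succeeded"); // ~ safeSetSuccess(promise)
        return true;
    }

    // Runs deferred tasks, as the EventLoop would after the current task.
    final void runPendingTasks() {
        List<Runnable> tasks = new ArrayList<>(taskQueue);
        taskQueue.clear();
        tasks.forEach(Runnable::run);
    }

    protected abstract void doBind(String localAddress) throws Exception;

    protected abstract boolean isActive();
}

// Hypothetical subclass standing in for a server socket channel.
final class SketchServerChannel extends SketchChannel {
    private String boundTo;

    @Override
    protected void doBind(String localAddress) throws Exception {
        if (localAddress.isEmpty()) {
            throw new Exception("empty address");
        }
        boundTo = localAddress;
    }

    @Override
    protected boolean isActive() {
        return boundTo != null;
    }
}
```

The benefit of this design is that every transport gets the same bookkeeping (promise handling, the active-transition check) for free, and a new transport only has to implement the hooks.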

2. AbstractNioChannel

AbstractNioChannel extends AbstractChannel and adds NIO-specific fields and methods to it:

```java
// The underlying JDK NIO channel
private final SelectableChannel ch;
// The interest op used for reading (e.g. SelectionKey.OP_READ or SelectionKey.OP_ACCEPT)
protected final int readInterestOp;
// The key obtained after registering with the Selector
volatile SelectionKey selectionKey;
```

Let's look at one of the main methods, doRegister():

```java
/**
 * Called from the register0() method of AbstractUnsafe.
 * @throws Exception
 */
@Override
protected void doRegister() throws Exception {
    boolean selected = false;
    for (;;) {
        try {
            /*
             * javaChannel() returns the underlying JDK channel, which is registered
             * with the Selector of this channel's EventLoop. The interest set is 0
             * for now and this Netty channel is the attachment; the events of
             * interest are set on the returned selectionKey later.
             */
            selectionKey = javaChannel().register(eventLoop().unwrappedSelector(), 0, this);
            return;
        } catch (CancelledKeyException e) {
            if (!selected) {
                // Force the Selector to select now as the "canceled" SelectionKey may still be
                // cached and not removed because no Select.select(..) operation was called yet.
                eventLoop().selectNow();
                selected = true;
            } else {
                // We forced a select operation on the selector before but the SelectionKey is still cached
                // for whatever reason. JDK bug ?
                throw e;
            }
        }
    }
}
```
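The two-step pattern in doRegister() (register with an empty interest set and an attachment, then set the real interest ops later) can be reproduced with plain JDK NIO. This is a minimal sketch; the class and method names are hypothetical, and an arbitrary object plays the role of the Netty channel passed as `this`. The attachment is what lets the event loop get from a selected key back to the channel that owns it.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;

final class RegisterSketch {
    // Registers a non-blocking server channel with an empty interest set and an
    // attachment, checks the key, then switches the interest set to OP_ACCEPT.
    // Returns the final interest set.
    static int registerThenEnableAccept(Object attachment) {
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.configureBlocking(false);                     // register() requires non-blocking mode
            server.bind(new InetSocketAddress("127.0.0.1", 0));  // any free local port
            SelectionKey key = server.register(selector, 0, attachment);
            if (key.interestOps() != 0 || key.attachment() != attachment) {
                throw new IllegalStateException("unexpected key state");
            }
            // Later, when the channel should start accepting, set the real interest op.
            key.interestOps(SelectionKey.OP_ACCEPT);
            return key.interestOps();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```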

An important inner class of AbstractNioChannel is AbstractNioUnsafe, which extends AbstractUnsafe and implements methods such as connect(), finishConnect() and flush0().

Let's explore the connect method:

```java
@Override
public final void connect(
        final SocketAddress remoteAddress, final SocketAddress localAddress, final ChannelPromise promise) {
    // Make the promise uncancellable and make sure the channel is open
    if (!promise.setUncancellable() || !ensureOpen(promise)) {
        return;
    }

    try {
        // Make sure no connection attempt is already in progress
        if (connectPromise != null) {
            // Already a connect in process.
            throw new ConnectionPendingException();
        }

        // Remember whether the channel was active before connecting
        boolean wasActive = isActive();
        /*
         * doConnect() has three possible outcomes:
         * 1. Connected immediately: returns true.
         * 2. Not connected yet (e.g. the server has not replied with an ACK), so the
         *    result is still undetermined: returns false.
         * 3. Connection failed: throws an I/O exception.
         * Protocols and I/O models connect differently, so subclasses implement it.
         */
        if (doConnect(remoteAddress, localAddress)) {
            /*
             * Connected: fulfillConnectPromise() fires channelActive if the channel just
             * became active. For NioSocketChannel the selectionKey's interest set is
             * eventually set to SelectionKey.OP_READ so that network reads are monitored.
             */
            fulfillConnectPromise(promise, wasActive);
        } else {
            connectPromise = promise;
            requestedRemoteAddress = remoteAddress;

            // Schedule connect timeout.
            int connectTimeoutMillis = config().getConnectTimeoutMillis();
            if (connectTimeoutMillis > 0) {
                connectTimeoutFuture = eventLoop().schedule(new Runnable() {
                    @Override
                    public void run() {
                        // Timeout reached: fail the promise if the connection is still pending
                        ChannelPromise connectPromise = AbstractNioChannel.this.connectPromise;
                        if (connectPromise != null && !connectPromise.isDone()
                                && connectPromise.tryFailure(new ConnectTimeoutException(
                                        "connection timed out: " + remoteAddress))) {
                            // Close the channel, releasing resources and deregistering it
                            close(voidPromise());
                        }
                    }
                }, connectTimeoutMillis, TimeUnit.MILLISECONDS);
            }

            // Listen for the outcome of the connect promise
            promise.addListener(new ChannelFutureListener() {
                @Override
                public void operationComplete(ChannelFuture future) throws Exception {
                    // If the connect attempt was cancelled, cancel the timeout task
                    // and close the channel, releasing resources and deregistering it
                    if (future.isCancelled()) {
                        if (connectTimeoutFuture != null) {
                            connectTimeoutFuture.cancel(false);
                        }
                        connectPromise = null;
                        close(voidPromise());
                    }
                }
            });
        }
    } catch (Throwable t) {
        // Connect failed: fail the promise and clean up if the channel is no longer open
        promise.tryFailure(annotateConnectException(t, remoteAddress));
        closeIfClosed();
    }
}
```
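The timeout handling in connect() follows a common pattern: arm a timer when the operation starts, let the timer fail the promise only if it is still pending, and cancel the timer as soon as the promise completes some other way. Here is a minimal sketch of that pattern using JDK types, assuming a CompletableFuture in place of Netty's ChannelPromise and a ScheduledExecutorService in place of the EventLoop. The class and method names are hypothetical, and the sketch only prints where Netty would close the channel.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class ConnectTimeoutSketch {
    // Fails `promise` with a TimeoutException if it is still pending after
    // timeoutMillis; the timer is cancelled as soon as the promise completes.
    static void armTimeout(CompletableFuture<Void> promise, ScheduledExecutorService loop,
                           long timeoutMillis, String remote) {
        if (timeoutMillis <= 0) {
            return; // like connectTimeoutMillis <= 0: no timer at all
        }
        ScheduledFuture<?> timer = loop.schedule(() -> {
            // completeExceptionally() returns false if the promise is already done.
            if (promise.completeExceptionally(
                    new TimeoutException("connection timed out: " + remote))) {
                // Netty would close the channel here: close(voidPromise()).
                System.out.println("timed out, would close channel to " + remote);
            }
        }, timeoutMillis, TimeUnit.MILLISECONDS);
        // Whichever way the promise completes first, stop the timer.
        promise.whenComplete((ignored, error) -> timer.cancel(false));
    }

    public static void main(String[] args) {
        ScheduledExecutorService loop = Executors.newSingleThreadScheduledExecutor();
        CompletableFuture<Void> connect = new CompletableFuture<>(); // never completed here
        armTimeout(connect, loop, 100, "example.invalid:80");
        connect.handle((ok, error) -> error).thenAccept(System.out::println).join();
        loop.shutdownNow();
    }
}
```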

The finishConnect() method works as follows:

```java
@Override
public final void finishConnect() {
    // Note this method is invoked by the event loop only if the connection attempt was
    // neither cancelled nor timed out.
    // It is called from NioEventLoop.processSelectedKey(), so it always runs on the EventLoop thread.
    assert eventLoop().inEventLoop();

    try {
        boolean wasActive = isActive();
        /*
         * Check the connection result (implemented by subclasses), e.g. via
         * SocketChannel.finishConnect(). On success it returns normally; on failure,
         * including a closed or interrupted link, it throws.
         */
        doFinishConnect();
        // Complete the promise and fire channelActive; with auto-read enabled,
        // the channel then starts listening for network read events
        fulfillConnectPromise(connectPromise, wasActive);
    } catch (Throwable t) {
        // Connection failed: fail the promise and clean up the channel
        fulfillConnectPromise(connectPromise, annotateConnectException(t, requestedRemoteAddress));
    } finally {
        // Check for null as the connectTimeoutFuture is only created if a connectTimeoutMillis > 0 is used
        // See https://github.com/netty/netty/issues/1770
        if (connectTimeoutFuture != null) {
            connectTimeoutFuture.cancel(false);
        }
        connectPromise = null;
    }
}
```
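In plain NIO terms, the pending-connect path corresponds to a non-blocking SocketChannel.connect() that returns false, an OP_CONNECT readiness event, and a call to SocketChannel.finishConnect(). The sketch below walks through that sequence with JDK classes against a throwaway local server. The class and method names are hypothetical, and timeouts and promises are left out. Once the connection is established, the sketch drops OP_CONNECT and adds OP_READ (in Netty, OP_CONNECT is removed in NioEventLoop and the read op is added later, when reading begins).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

final class FinishConnectSketch {
    // Connects a non-blocking SocketChannel to a local server and returns the
    // interest set left on the key once the connection is established.
    static int connectAndSwitchToRead() {
        try (ServerSocketChannel server = ServerSocketChannel.open();
             Selector selector = Selector.open();
             SocketChannel client = SocketChannel.open()) {
            server.bind(new InetSocketAddress("127.0.0.1", 0)); // kernel completes the handshake
            client.configureBlocking(false);
            SelectionKey key = client.register(selector, 0);

            // true: connected immediately; false: handshake still in progress.
            if (client.connect(server.getLocalAddress())) {
                key.interestOps(SelectionKey.OP_READ);
                return key.interestOps();
            }
            // Pending: wait for OP_CONNECT, then let finishConnect() report the result.
            key.interestOps(SelectionKey.OP_CONNECT);
            while (!client.finishConnect()) { // throws if the connection failed
                selector.select(1000);
                selector.selectedKeys().clear();
            }
            // Connected: stop watching OP_CONNECT and start watching OP_READ.
            key.interestOps((key.interestOps() & ~SelectionKey.OP_CONNECT) | SelectionKey.OP_READ);
            return key.interestOps();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```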

3. AbstractNioByteChannel

AbstractNioByteChannel extends AbstractNioChannel and therefore inherits connecting and registration, but the actual byte reads and writes are still left to subclasses.

```java
public abstract class AbstractNioByteChannel extends AbstractNioChannel {
    private static final ChannelMetadata METADATA = new ChannelMetadata(false, 16);
    private static final String EXPECTED_TYPES =
            " (expected: " + StringUtil.simpleClassName(ByteBuf.class) + ", " +
            StringUtil.simpleClassName(FileRegion.class) + ')';

    // Task that flushes the outbound buffer. Data passed to write() is not written
    // to the socket directly; it is queued in the ChannelOutboundBuffer, and only
    // a flush writes it to the socket and sends it over the network.
    private final Runnable flushTask = new Runnable() {
        @Override
        public void run() {
            // Calling flush0 directly to ensure we not try to flush messages that were added via write(...) in the
            // meantime.
            ((AbstractNioUnsafe) unsafe()).flush0();
        }
    };
    private boolean inputClosedSeenErrorOnRead;

    // ... other members omitted
}
```

Let's look at its doWrite() method, which takes pending data out of the ChannelOutboundBuffer and writes it in a loop:

- The interesting part is the write spin count. One EventLoop thread serves many channels, so a single doWrite() call makes at most writeSpinCount write attempts (16 by default) instead of looping until everything is sent. If a write makes no progress because the socket send buffer is full, OP_WRITE is added to the key's interest set, so the selector reports when the socket becomes writable again. If all 16 attempts made progress but data remains, OP_WRITE is cleared and a flushTask is submitted to the EventLoop, so other tasks get a chance to run before the rest of the data is sent.

```java
@Override
protected void doWrite(ChannelOutboundBuffer in) throws Exception {
    // Maximum number of write attempts per call (default 16)
    int writeSpinCount = config().getWriteSpinCount();
    do {
        // The current flushed message in the ChannelOutboundBuffer
        Object msg = in.current();
        if (msg == null) {
            // Wrote all messages: clear OP_WRITE from the interest set.
            clearOpWrite();
            // Directly return here so incompleteWrite(...) is not called.
            return;
        }
        // Write the message; the return value is subtracted from the spin count
        writeSpinCount -= doWriteInternal(in, msg);
    } while (writeSpinCount > 0);

    /*
     * Not everything was written:
     * - If nothing could be written because the socket send buffer is full,
     *   doWriteInternal() returns Integer.MAX_VALUE, so writeSpinCount < 0.
     * - If all 16 attempts made progress but data remains, writeSpinCount == 0.
     */
    incompleteWrite(writeSpinCount < 0);
}

protected final void incompleteWrite(boolean setOpWrite) {
    // Did not write completely.
    if (setOpWrite) {
        // Add OP_WRITE to the interest set so we are told when the socket is writable again
        setOpWrite();
    } else {
        // It is possible that we have set the write OP, woken up by NIO because the socket is writable, and then
        // use our write quantum. In this case we no longer want to set the write OP because the socket is still
        // writable (as far as we know). We will find out next time we attempt to write if the socket is writable
        // and set the write OP if necessary.
        clearOpWrite();

        // Schedule flush again later so other tasks can be picked up in the meantime
        eventLoop().execute(flushTask);
    }
}

private int doWriteInternal(ChannelOutboundBuffer in, Object msg) throws Exception {
    if (msg instanceof ByteBuf) {
        ByteBuf buf = (ByteBuf) msg;
        if (!buf.isReadable()) {
            // No readable bytes: remove it from the outbound buffer
            in.remove();
            return 0;
        }

        // Write the bytes to the socket
        final int localFlushedAmount = doWriteBytes(buf);
        if (localFlushedAmount > 0) {
            // Record the write progress
            in.progress(localFlushedAmount);
            if (!buf.isReadable()) {
                // Fully written: remove it from the outbound buffer
                in.remove();
            }
            return 1;
        }
    } else if (msg instanceof FileRegion) {
        FileRegion region = (FileRegion) msg;
        // Already fully transferred: remove it
        if (region.transferred() >= region.count()) {
            in.remove();
            return 0;
        }

        // Transfer the file region to the socket
        long localFlushedAmount = doWriteFileRegion(region);
        if (localFlushedAmount > 0) {
            // Record the write progress
            in.progress(localFlushedAmount);
            if (region.transferred() >= region.count()) {
                // The whole region was sent: remove it from the outbound buffer
                in.remove();
            }
            return 1;
        }
    } else {
        // Should not reach here: other message types are not supported.
        throw new Error();
    }
    // Nothing was written (send buffer full): WRITE_STATUS_SNDBUF_FULL is Integer.MAX_VALUE
    return WRITE_STATUS_SNDBUF_FULL;
}
```
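The spin-count logic is easier to follow when it is run against a socket whose behavior we control. The following is a minimal simulation, not Netty code: WriteSpinSketch, FakeSocket and Outcome are hypothetical names, ByteBuffers stand in for ByteBufs, and the fake socket accepts at most `perCall` bytes per write and nothing once its total capacity is used up. It keeps the same accounting: an empty message costs 0, a productive write costs 1, and a write of 0 bytes costs Integer.MAX_VALUE.

```java
import java.nio.ByteBuffer;
import java.util.Deque;

final class WriteSpinSketch {
    static final int SNDBUF_FULL = Integer.MAX_VALUE; // same idea as WRITE_STATUS_SNDBUF_FULL

    enum Outcome { ALL_WRITTEN, SET_OP_WRITE, SCHEDULE_FLUSH }

    // Hypothetical socket: accepts at most perCall bytes per write,
    // and nothing at all once remainingCapacity reaches 0.
    static final class FakeSocket {
        final int perCall;
        int remainingCapacity;
        int written;

        FakeSocket(int perCall, int totalCapacity) {
            this.perCall = perCall;
            this.remainingCapacity = totalCapacity;
        }

        int write(ByteBuffer src) {
            int n = Math.min(Math.min(perCall, remainingCapacity), src.remaining());
            src.position(src.position() + n); // "consume" n bytes
            remainingCapacity -= n;
            written += n;
            return n;
        }
    }

    // One flush attempt over the pending buffers, following the spin-count logic.
    static Outcome doWrite(Deque<ByteBuffer> pending, FakeSocket socket, int writeSpinCount) {
        do {
            ByteBuffer msg = pending.peekFirst();
            if (msg == null) {
                return Outcome.ALL_WRITTEN; // clearOpWrite() in the original
            }
            writeSpinCount -= writeOne(pending, msg, socket);
        } while (writeSpinCount > 0);
        // Negative: the send buffer was full, so wait for OP_WRITE.
        // Zero: the spin budget is used up, so yield and flush again later.
        return writeSpinCount < 0 ? Outcome.SET_OP_WRITE : Outcome.SCHEDULE_FLUSH;
    }

    private static int writeOne(Deque<ByteBuffer> pending, ByteBuffer msg, FakeSocket socket) {
        if (!msg.hasRemaining()) {
            pending.removeFirst(); // an empty message costs nothing
            return 0;
        }
        int n = socket.write(msg);
        if (n > 0) {
            if (!msg.hasRemaining()) {
                pending.removeFirst(); // fully written
            }
            return 1;
        }
        return SNDBUF_FULL;
    }
}
```

With a 100-byte message and a socket that takes 5 bytes per call, 16 attempts write 80 bytes and the result is SCHEDULE_FLUSH. If the socket stops accepting data after 30 bytes, the next attempt writes nothing and the result is SET_OP_WRITE.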

Next, let's look at the read method:

The implementation of NioByteUnsafe's read() method is roughly divided into the following three steps.

(1) Obtain the Channel's configuration object, the memory allocator ByteBufAllocator, and the RecvByteBufAllocator.Handle, which works out how much memory to allocate for each read.

(2) Enter the read loop. Each iteration uses the allocator handle to obtain a ByteBuf, calls doReadBytes() to read data into it, and fires a channelRead event, which passes the data to the handlers' business logic. The loop exits when nothing was read, when the link is closed, or when the number of iterations reaches defaultMaxMessagesPerRead from METADATA (16 by default). Because TCP is a byte stream, one read does not necessarily correspond to one application message (the "sticky packet" problem), so splitting the data into messages is left to decoders in the pipeline.

(3) After the loop exits, the read is complete. allocHandle.readComplete() records how much was read so that the next allocation can be sized sensibly, and channelReadComplete is fired.

The source:

```java
@Override
public final void read() {
    final ChannelConfig config = config();
    if (shouldBreakReadReady(config)) {
        clearReadPending();
        return;
    }
    final ChannelPipeline pipeline = pipeline();
    // The ByteBuf allocator (PooledByteBufAllocator by default)
    final ByteBufAllocator allocator = config.getAllocator();
    // Decides how large each buffer should be and whether to keep reading
    final RecvByteBufAllocator.Handle allocHandle = recvBufAllocHandle();
    // Reset the counters left over from the previous read
    allocHandle.reset(config);

    ByteBuf byteBuf = null;
    boolean close = false;
    try {
        do {
            // Allocate a buffer
            byteBuf = allocHandle.allocate(allocator);
            // doReadBytes() reads data into the buffer
            allocHandle.lastBytesRead(doReadBytes(byteBuf));
            if (allocHandle.lastBytesRead() <= 0) {
                // nothing was read. release the buffer.
                byteBuf.release();
                byteBuf = null;
                close = allocHandle.lastBytesRead() < 0;
                if (close) {
                    // There is nothing left to read as we received an EOF.
                    readPending = false;
                }
                break;
            }

            // Count one more message read
            allocHandle.incMessagesRead(1);
            readPending = false;
            // Fire channelRead through the pipeline
            pipeline.fireChannelRead(byteBuf);
            byteBuf = null;
        } while (allocHandle.continueReading());

        // This read round is finished
        allocHandle.readComplete();
        // Fire channelReadComplete through the pipeline
        pipeline.fireChannelReadComplete();

        if (close) {
            // The peer closed the connection: handle the close
            closeOnRead(pipeline);
        }
    } catch (Throwable t) {
        // Handle exceptions thrown while reading
        handleReadException(pipeline, byteBuf, t, close, allocHandle);
    } finally {
        // Check if there is a readPending which was not processed yet.
        // This could be for two reasons:
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelRead(...) method
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelReadComplete(...) method
        //
        // See https://github.com/netty/netty/issues/2254
        // If no read is pending and auto-read is off, remove the read op from the interest set
        if (!readPending && !config.isAutoRead()) {
            removeReadOp();
        }
    }
}
```
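The loop structure of read() can be tried out with JDK channels. The sketch below is a simplification: ReadLoopSketch is a hypothetical name, a fresh fixed-size ByteBuffer stands in for allocHandle.allocate(), and the only stop conditions kept are "nothing read", "EOF (-1)" and "maxMessagesPerRead reached". Netty's continueReading() checks more than that, and the sketch does no adaptive sizing.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.ReadableByteChannel;
import java.util.ArrayList;
import java.util.List;

final class ReadLoopSketch {
    static final class Result {
        final List<byte[]> reads = new ArrayList<>(); // one entry per "channelRead"
        boolean eof;                                  // true if -1 was seen
        int readCompleteEvents;                       // "channelReadComplete" count
    }

    // One read cycle: keep reading into fresh buffers until nothing is read,
    // EOF (-1) is reached, or maxMessagesPerRead chunks have been delivered.
    static Result readCycle(ReadableByteChannel ch, int bufferSize, int maxMessagesPerRead) {
        Result r = new Result();
        try {
            int messages = 0;
            do {
                ByteBuffer buf = ByteBuffer.allocate(bufferSize); // ~ allocHandle.allocate(allocator)
                int n = ch.read(buf);
                if (n <= 0) {
                    r.eof = n < 0; // -1 means the other side closed the stream
                    break;
                }
                buf.flip();
                byte[] chunk = new byte[buf.remaining()];
                buf.get(chunk);
                r.reads.add(chunk); // ~ pipeline.fireChannelRead(byteBuf)
                messages++;
            } while (messages < maxMessagesPerRead);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        r.readCompleteEvents++; // fired once per cycle, after the loop
        return r;
    }

    public static void main(String[] args) {
        ReadableByteChannel ch = Channels.newChannel(new ByteArrayInputStream(new byte[10]));
        Result r = readCycle(ch, 4, 16);
        System.out.println(r.reads.size() + " reads, eof=" + r.eof);
    }
}
```

Note that one read event is delivered per chunk rather than per application message, which is why message framing has to happen further down the pipeline.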

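4. AbstractNioMessageChannel

AbstractNioMessageChannel works with message objects rather than a byte stream (for example, NioServerSocketChannel reads accepted connections as messages), so the packet-splitting and sticky-packet problems of byte streams do not arise. Take a look at its doWrite() and read() methods: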

```java
@Override
protected void doWrite(ChannelOutboundBuffer in) throws Exception {
    final SelectionKey key = selectionKey();
    // Current interest set of the selection key
    final int interestOps = key.interestOps();

    int maxMessagesPerWrite = maxMessagesPerWrite();
    while (maxMessagesPerWrite > 0) {
        Object msg = in.current();
        // No more messages: leave the loop (OP_WRITE is cleared below)
        if (msg == null) {
            break;
        }
        try {
            boolean done = false;
            // Try to write the message up to writeSpinCount times
            for (int i = config().getWriteSpinCount() - 1; i >= 0; i--) {
                // Subclass hook: returns true if msg was written
                if (doWriteMessage(msg, in)) {
                    done = true;
                    break;
                }
            }

            if (done) {
                // Written: remove it from the outbound buffer and continue with the next message
                maxMessagesPerWrite--;
                in.remove();
            } else {
                // Not written: leave the loop
                break;
            }
        } catch (Exception e) {
            // On error, either drop this message and continue, or rethrow
            if (continueOnWriteError()) {
                maxMessagesPerWrite--;
                in.remove(e);
            } else {
                throw e;
            }
        }
    }
    if (in.isEmpty()) {
        // Wrote all messages.
        if ((interestOps & SelectionKey.OP_WRITE) != 0) {
            key.interestOps(interestOps & ~SelectionKey.OP_WRITE);
        }
    } else {
        // Did not write all messages.
        if ((interestOps & SelectionKey.OP_WRITE) == 0) {
            key.interestOps(interestOps | SelectionKey.OP_WRITE);
        }
    }
}
```
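The end of doWrite() is plain bit manipulation on the key's interest set. The check before each update avoids calling key.interestOps(int) when the bit is already in the desired state. This is a minimal model of that logic; the class name InterestOpsSketch is hypothetical, a plain int field stands in for the SelectionKey, and a counter records how many updates would have been made.

```java
import java.nio.channels.SelectionKey;

final class InterestOpsSketch {
    int interestOps; // stands in for SelectionKey.interestOps()
    int updates;     // how many times key.interestOps(int) would have been called

    InterestOpsSketch(int initialOps) {
        interestOps = initialOps;
    }

    private void update(int ops) {
        interestOps = ops;
        updates++;
    }

    // Mirrors the "Did not write all messages" branch: add OP_WRITE if missing.
    void setOpWrite() {
        if ((interestOps & SelectionKey.OP_WRITE) == 0) {
            update(interestOps | SelectionKey.OP_WRITE);
        }
    }

    // Mirrors the "Wrote all messages" branch: remove OP_WRITE if present.
    void clearOpWrite() {
        if ((interestOps & SelectionKey.OP_WRITE) != 0) {
            update(interestOps & ~SelectionKey.OP_WRITE);
        }
    }
}
```

Other bits (such as OP_READ) are left untouched by both operations, and calling either one twice in a row costs only one update.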

Then its read() method:

```java
@Override
public void read() {
    assert eventLoop().inEventLoop();
    // Channel configuration
    final ChannelConfig config = config();
    final ChannelPipeline pipeline = pipeline();
    // Allocation handle, which tracks how many messages have been read
    final RecvByteBufAllocator.Handle allocHandle = unsafe().recvBufAllocHandle();
    // Reset the counters from the previous read
    allocHandle.reset(config);

    boolean closed = false;
    Throwable exception = null;
    try {
        try {
            do {
                /*
                 * Call the subclass's doReadMessages() to read messages into the
                 * readBuf list; it returns 1 when a message was read.
                 */
                int localRead = doReadMessages(readBuf);
                // Nothing read: leave the loop
                if (localRead == 0) {
                    break;
                }
                // Link closed: leave the loop
                if (localRead < 0) {
                    closed = true;
                    break;
                }
                // Record the number of messages read
                allocHandle.incMessagesRead(localRead);
                // Stops after maxMessagesPerRead messages (16 by default)
            } while (continueReading(allocHandle));
        } catch (Throwable t) {
            exception = t;
        }

        int size = readBuf.size();
        // Deliver each message that was read
        for (int i = 0; i < size; i ++) {
            readPending = false;
            // Fire channelRead
            pipeline.fireChannelRead(readBuf.get(i));
        }
        readBuf.clear();
        // Record this read so the next one can be sized sensibly
        allocHandle.readComplete();
        // Fire channelReadComplete
        pipeline.fireChannelReadComplete();

        if (exception != null) {
            // Decide whether the exception means the channel should be closed, then propagate it
            closed = closeOnReadError(exception);
            pipeline.fireExceptionCaught(exception);
        }

        if (closed) {
            inputShutdown = true;
            if (isOpen()) {
                close(voidPromise());
            }
        }
    } finally {
        // Check if there is a readPending which was not processed yet.
        // This could be for two reasons:
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelRead(...) method
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelReadComplete(...) method
        //
        // See https://github.com/netty/netty/issues/2254
        // If no read is pending and auto-read is off, remove the read op from the interest set
        if (!readPending && !config.isAutoRead()) {
            removeReadOp();
        }
    }
}
```

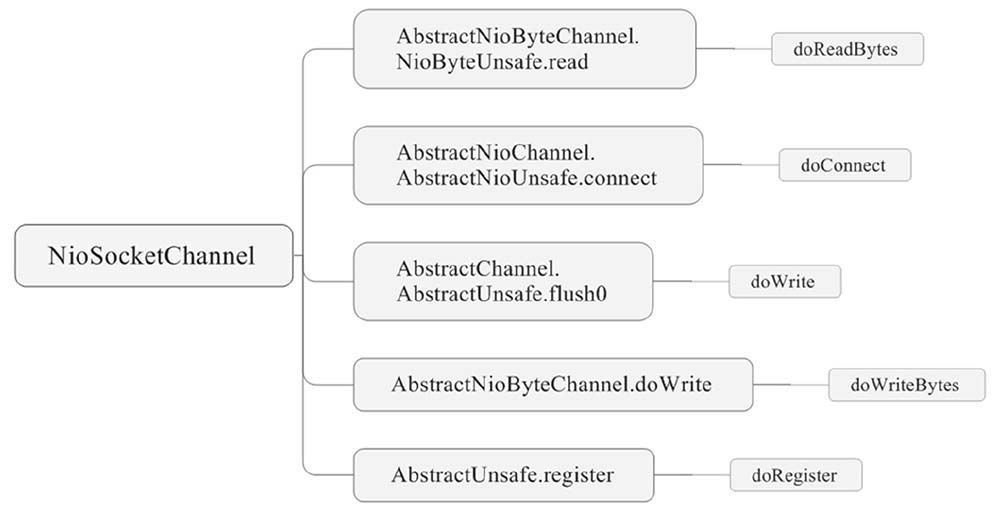

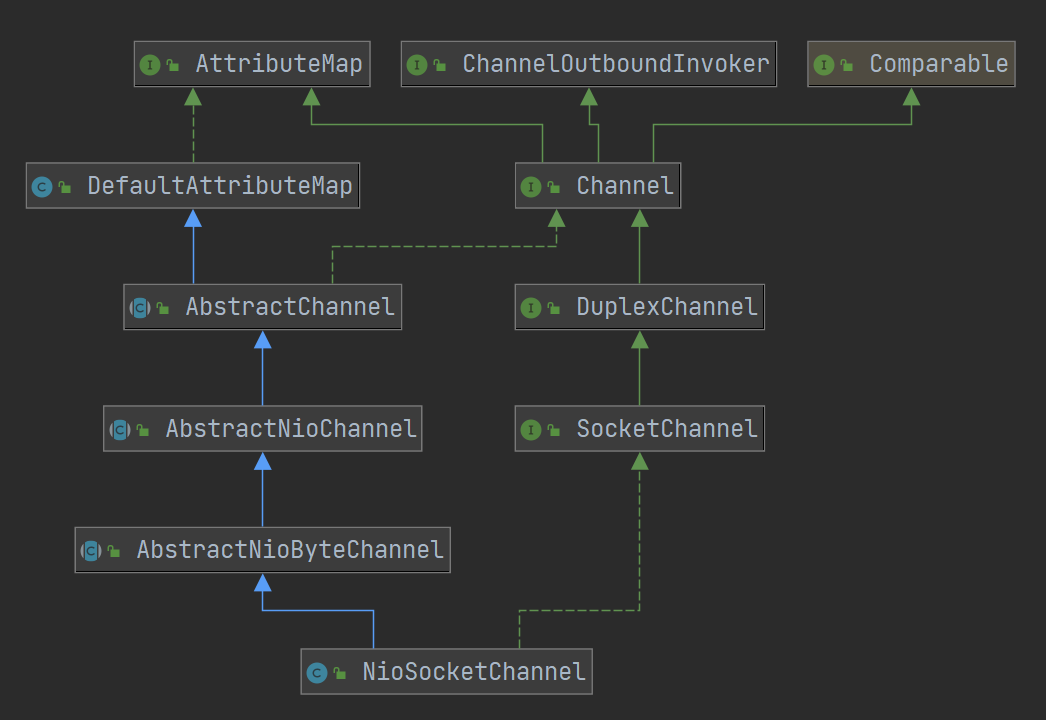
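Unlike the byte-channel version, the message read() above first collects everything into readBuf and only then fires one channelRead per message, followed by a single channelReadComplete. Here is a minimal sketch of that collect-then-deliver structure. MessageReadSketch is a hypothetical name, and an Iterator stands in for doReadMessages() (for a server channel, think of it as the queue of pending accepted connections). Error handling and channel closing are left out.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;

final class MessageReadSketch {
    // Reads up to maxMessagesPerRead items from `source` into readBuf first,
    // then delivers them one by one, then signals completion once.
    // Returns the number of messages delivered in this cycle.
    static <T> int readCycle(Iterator<T> source, int maxMessagesPerRead,
                             Consumer<T> onRead, Runnable onReadComplete) {
        List<T> readBuf = new ArrayList<>();
        while (readBuf.size() < maxMessagesPerRead && source.hasNext()) {
            readBuf.add(source.next()); // ~ doReadMessages(readBuf) returning 1
        }
        for (T msg : readBuf) {
            onRead.accept(msg);         // ~ pipeline.fireChannelRead(msg)
        }
        int delivered = readBuf.size();
        readBuf.clear();
        onReadComplete.run();           // ~ pipeline.fireChannelReadComplete()
        return delivered;
    }
}
```

Anything beyond maxMessagesPerRead simply stays in the source until the next read cycle, which keeps one busy server channel from monopolizing its EventLoop.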

5. NioSocketChannel Source

NioSocketChannel extends AbstractNioByteChannel and implements Netty's SocketChannel interface.

In Netty, each socket connection is represented by its own NioSocketChannel object.

Functional Diagram of NioSocketChannel:

Inheritance Diagram of NioSocketChannel:

Explanation:

* In the NioSocketChannel constructor, the JDK SocketChannel is created with SelectorProvider.provider().openSocketChannel(), and the javaChannel() method returns it.

* It implements the doReadBytes() method to read data from the SocketChannel.

* It overrides the doWrite() method and implements doWriteBytes() to write data to the socket.

* It implements the doConnect() method for client connections.

Here are a few core methods:

```java
@Override
protected int doReadBytes(ByteBuf byteBuf) throws Exception {
    // Allocation handle computed for this read
    final RecvByteBufAllocator.Handle allocHandle = unsafe().recvBufAllocHandle();
    // Try to read as many bytes as the buffer can still hold
    allocHandle.attemptedBytesRead(byteBuf.writableBytes());
    // Read from the channel into the buffer; returns the number of bytes read (-1 if the channel is closed)
    return byteBuf.writeBytes(javaChannel(), allocHandle.attemptedBytesRead());
}

@Override
protected int doWriteBytes(ByteBuf buf) throws Exception {
    // Number of readable bytes in buf
    final int expectedWrittenBytes = buf.readableBytes();
    // Write them to the socket send buffer; returns the number of bytes written
    return buf.readBytes(javaChannel(), expectedWrittenBytes);
}
```
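The two methods above just move bytes between a buffer and the JDK channel and report how many bytes moved. The following sketch does the same with a java.nio.channels.Pipe standing in for the socket. It writes several buffers with one gathering write (GatheringByteChannel.write(ByteBuffer[])), the kind of call the doWrite() below makes when several buffers are pending, and then reads the bytes back. PipeIoSketch is a hypothetical name. Because nothing reads concurrently, the inputs are assumed to be small enough to fit in the pipe's buffer.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

final class PipeIoSketch {
    // Writes all parts with a gathering write, then reads them back from the
    // other end of the pipe. Inputs must be small: nothing reads concurrently.
    static String roundTrip(String... parts) {
        try {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink(); Pipe.SourceChannel source = pipe.source()) {
                ByteBuffer[] bufs = new ByteBuffer[parts.length];
                long expected = 0;
                for (int i = 0; i < parts.length; i++) {
                    bufs[i] = ByteBuffer.wrap(parts[i].getBytes(StandardCharsets.UTF_8));
                    expected += bufs[i].remaining();
                }
                // A gathering write may write fewer bytes than requested, so loop.
                long written = 0;
                while (written < expected) {
                    written += sink.write(bufs);
                }
                // Each read returns the number of bytes transferred, or -1 at end of stream.
                ByteBuffer in = ByteBuffer.allocate((int) expected);
                while (in.hasRemaining()) {
                    if (source.read(in) < 0) {
                        break;
                    }
                }
                in.flip();
                return StandardCharsets.UTF_8.decode(in).toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```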

```java
@Override
protected void doWrite(ChannelOutboundBuffer in) throws Exception {
    // The underlying JDK SocketChannel
    SocketChannel ch = javaChannel();
    // Maximum number of write attempts (default 16)
    int writeSpinCount = config().getWriteSpinCount();
    do {
        if (in.isEmpty()) {
            // All written so clear OP_WRITE
            clearOpWrite();
            // Directly return here so incompleteWrite(...) is not called.
            return;
        }

        // Ensure the pending writes are made of ByteBufs only.
        // Maximum number of bytes per gathering write
        int maxBytesPerGatheringWrite = ((NioSocketChannelConfig) config).getMaxBytesPerGatheringWrite();
        /*
         * The outbound buffer holds multiple entries, and one write may cover several of them.
         * How much is sent at once is limited by the maximum number of ByteBuffers (1024)
         * and by maxBytesPerGatheringWrite.
         */
        ByteBuffer[] nioBuffers = in.nioBuffers(1024, maxBytesPerGatheringWrite);
        int nioBufferCnt = in.nioBufferCount();

        // Always use nioBuffers() to workaround data-corruption.
        // See https://github.com/netty/netty/issues/2761
        switch (nioBufferCnt) {
            case 0:
                // We have something else beside ByteBuffers to write so fallback to normal writes.
                // This delegates to AbstractNioByteChannel.doWrite0().
                writeSpinCount -= doWrite0(in);
                break;
            case 1: {
                // Only one ByteBuf so use non-gathering write
                // Zero length buffers are not added to nioBuffers by ChannelOutboundBuffer, so there is no need
                // to check if the total size of all the buffers is non-zero.
                ByteBuffer buffer = nioBuffers[0];
                // Bytes we are about to write
                int attemptedBytes = buffer.remaining();
                // Write to the socket send buffer
                final int localWrittenBytes = ch.write(buffer);
                if (localWrittenBytes <= 0) {
                    // Nothing written (send buffer full): register OP_WRITE and return
                    incompleteWrite(true);
                    return;
                }
                /*
                 * Adjust the limit for the next gathering write:
                 * - if everything was written and attemptedBytes * 2 exceeds the current limit,
                 *   the new limit is attemptedBytes * 2;
                 * - if fewer than attemptedBytes / 2 bytes were written and more than 4096 bytes
                 *   were attempted, the new limit is attemptedBytes / 2.
                 */
                adjustMaxBytesPerGatheringWrite(attemptedBytes, localWrittenBytes, maxBytesPerGatheringWrite);
                // Remove the written bytes from the outbound buffer
                in.removeBytes(localWrittenBytes);
                // One write attempt used
                --writeSpinCount;
                break;
            }
            default: {
                // Zero length buffers are not added to nioBuffers by ChannelOutboundBuffer, so there is no need
                // to check if the total size of all the buffers is non-zero.
                // We limit the max amount to int above so cast is safe
                long attemptedBytes = in.nioBufferSize();
                // Gathering write: bytes actually written to the socket send buffer
                final long localWrittenBytes = ch.write(nioBuffers, 0, nioBufferCnt);
                if (localWrittenBytes <= 0) {
                    // Nothing written: register OP_WRITE so that NioEventLoop
                    // triggers the write again once the socket is writable
                    incompleteWrite(true);
                    return;
                }
                // Casting to int is safe because we limit the total amount of data in the nioBuffers to int above.
                adjustMaxBytesPerGatheringWrite((int) attemptedBytes, (int) localWrittenBytes,
                        maxBytesPerGatheringWrite);
                // Remove the written bytes from the outbound buffer
                in.removeBytes(localWrittenBytes);
                // One write attempt used
                --writeSpinCount;
                break;
            }
        }
    } while (writeSpinCount > 0);

    /*
     * Not everything was sent:
     * - writeSpinCount < 0: the socket send buffer was full and a write made no progress.
     * - writeSpinCount == 0: there was too much pending data to finish within 16 attempts.
     */
    incompleteWrite(writeSpinCount < 0);
}
```
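The comment in doWrite() describes how adjustMaxBytesPerGatheringWrite() tunes the per-write limit. As a standalone sketch of that rule, it can be written as a pure function. The class and method names are hypothetical; the doubling, the halving and the 4096-byte threshold come from the comment above.

```java
final class GatheringWriteLimitSketch {
    // Below this many attempted bytes, a short write does not shrink the limit.
    static final int LOW_THRESHOLD = 4096;

    // Returns the new per-write byte limit, given how many bytes were attempted,
    // how many were actually written, and the current limit.
    static int adjust(int attempted, int written, int currentLimit) {
        if (attempted == written) {
            // Everything fit: grow to 2x the attempt if that exceeds the current limit.
            if (attempted << 1 > currentLimit) {
                return attempted << 1;
            }
        } else if (attempted > LOW_THRESHOLD && written < attempted >>> 1) {
            // Less than half of a large attempt went out: shrink to half the attempt.
            return attempted >>> 1;
        }
        return currentLimit; // otherwise keep the current limit
    }
}
```

For example, a fully written 1000-byte attempt with a 1500-byte limit raises the limit to 2000, while a 10000-byte attempt of which only 3000 bytes were written lowers it to 5000. The limit therefore grows while the socket keeps up and backs off when the OS send buffer is the bottleneck.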

Reference Book: Analysis and Application of Netty Source Code