Background

Following the previous article on the StreamTask data flow, which explained how data is read, transformed and written inside the task running on each executor, this article explains how data is transmitted between executors. It covers Flink's shuffle implementation and the details of how InputChannel and ResultSubpartition read and write data in StreamTask.

ShuffleMaster & ShuffleEnvironment

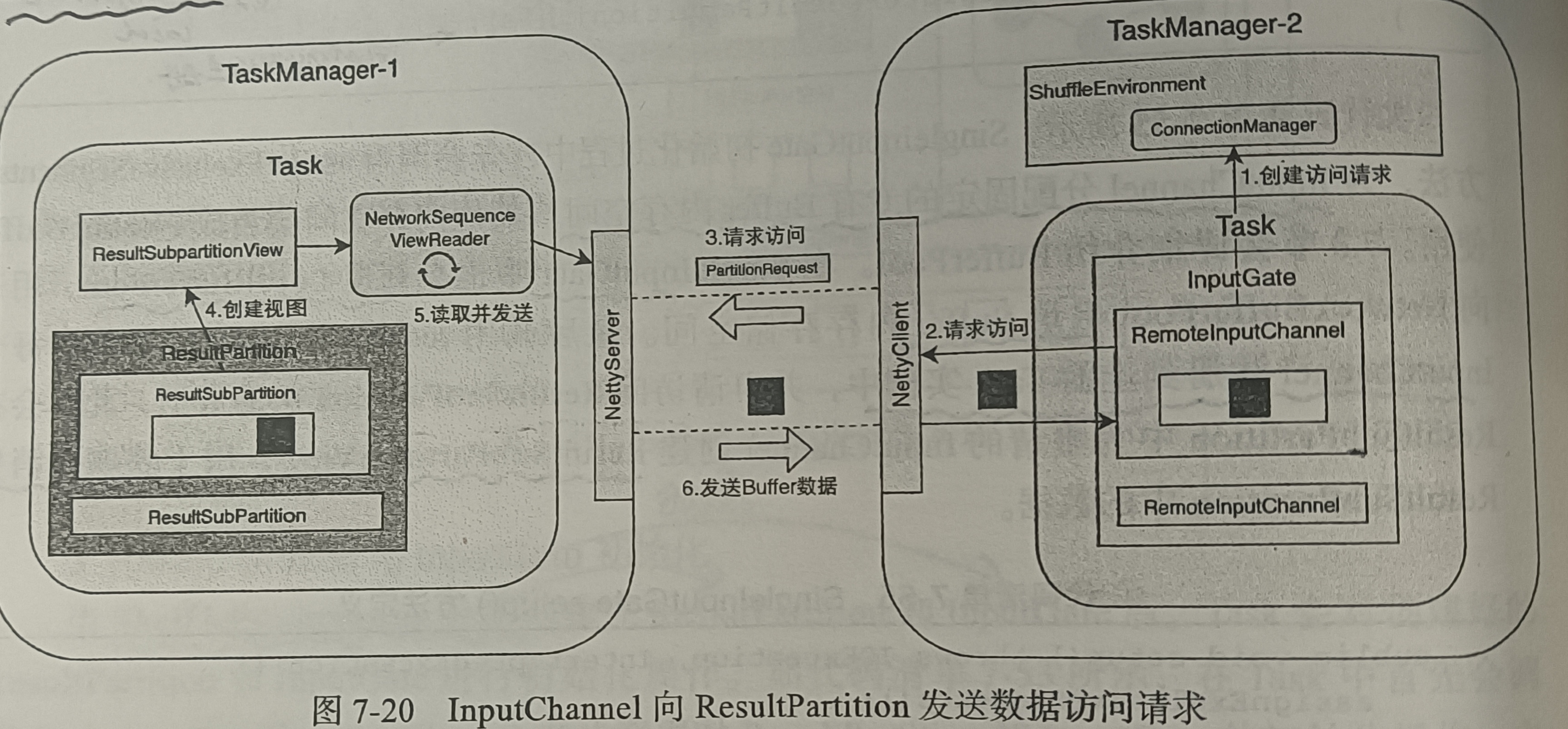

Data exchange between tasks is implemented as a shuffle. At its core is a TCP connection between the InputGate of the consuming StreamTask and the ResultPartition of the producing StreamTask, over which data is transmitted through the network. Each TaskManager creates a ShuffleEnvironment component during initialization, and all InputGate and ResultPartition components are created through that ShuffleEnvironment. The ShuffleMaster component, which runs on the JobManager side, manages and tracks the InputGate and ResultPartition components of the whole job.

ShuffleService

The ShuffleEnvironment and ShuffleMaster components are created by a ShuffleServiceFactory. Flink makes the ShuffleService pluggable in an SPI-like way: ShuffleServiceLoader reads the ShuffleServiceFactory implementation class name configured under shuffle-service-factory.class and instantiates that class with the context ClassLoader. Users can therefore provide their own ShuffleService by implementing ShuffleServiceFactory and configuring its class name.

```java
public class TaskManagerServices {

    private static ShuffleEnvironment<?, ?> createShuffleEnvironment(
            TaskManagerServicesConfiguration taskManagerServicesConfiguration,
            TaskEventDispatcher taskEventDispatcher,
            MetricGroup taskManagerMetricGroup) throws FlinkException {

        final ShuffleEnvironmentContext shuffleEnvironmentContext = new ShuffleEnvironmentContext(
            taskManagerServicesConfiguration.getConfiguration(),
            taskManagerServicesConfiguration.getResourceID(),
            taskManagerServicesConfiguration.getNetworkMemorySize(),
            taskManagerServicesConfiguration.isLocalCommunicationOnly(),
            taskManagerServicesConfiguration.getTaskManagerAddress(),
            taskEventDispatcher,
            taskManagerMetricGroup);

        // Load the ShuffleServiceFactory implementation class set in the configuration through
        // ShuffleServiceLoader, then create the ShuffleEnvironment with that factory
        return ShuffleServiceLoader
            .loadShuffleServiceFactory(taskManagerServicesConfiguration.getConfiguration())
            .createShuffleEnvironment(shuffleEnvironmentContext);
    }
}
```

When the ShuffleServiceFactory creates the ShuffleEnvironment, it initializes the components the ShuffleEnvironment needs: the ConnectionManager (sets up the network connections between InputChannels and ResultPartitions), the FileChannelManager (manages the temporary directories specified in the configuration, where batch jobs store temporary files), the NetworkBufferPool (allocates and manages MemorySegments), the ResultPartitionFactory (creates ResultPartitions, on which ResultSubpartitionViews are later created to consume buffer data) and the SingleInputGateFactory (creates InputGates).

Tip: the ResultPartitionFactory and the SingleInputGateFactory inside the ShuffleEnvironment use the same NetworkBufferPool to create ResultPartitions and InputGates, so all ResultPartitions and InputGates on one TaskManager share a single NetworkBufferPool.
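To make this sharing concrete, here is a simplified model, assuming fixed-size byte arrays as memory segments: one TaskManager-wide pool hands out segments, and each ResultPartition or InputGate borrows from it through its own bounded local pool. GlobalSegmentPool and LocalPool are hypothetical classes; Flink's real NetworkBufferPool and LocalBufferPool have more elaborate sizing and redistribution logic.

```java
import java.util.ArrayDeque;

// Hypothetical TaskManager-wide pool of fixed-size memory segments.
class GlobalSegmentPool {
    private final ArrayDeque<byte[]> available = new ArrayDeque<>();

    GlobalSegmentPool(int numSegments, int segmentSize) {
        for (int i = 0; i < numSegments; i++) {
            available.add(new byte[segmentSize]);
        }
    }

    // Returns null when every segment is in use.
    synchronized byte[] request() { return available.poll(); }

    synchronized void recycle(byte[] segment) { available.add(segment); }

    synchronized int availableSegments() { return available.size(); }
}

// Hypothetical per-partition / per-gate pool that borrows from the shared
// global pool, up to its own local limit.
class LocalPool {
    private final GlobalSegmentPool global;
    private final int maxSegments;
    private int borrowed;

    LocalPool(GlobalSegmentPool global, int maxSegments) {
        this.global = global;
        this.maxSegments = maxSegments;
    }

    // Returns null if the local limit is reached or the global pool is empty.
    synchronized byte[] request() {
        if (borrowed >= maxSegments) {
            return null;
        }
        byte[] segment = global.request();
        if (segment != null) {
            borrowed++;
        }
        return segment;
    }

    // Gives the segment back to the shared global pool.
    synchronized void recycle(byte[] segment) {
        borrowed--;
        global.recycle(segment);
    }
}
```

Because both local pools draw from the same global pool, a ResultPartition that holds many segments leaves fewer for the InputGates on the same TaskManager.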

```java
public enum ShuffleServiceLoader {
    ;

    public static final ConfigOption<String> SHUFFLE_SERVICE_FACTORY_CLASS = ConfigOptions
        .key("shuffle-service-factory.class")
        .defaultValue("org.apache.flink.runtime.io.network.NettyShuffleServiceFactory")
        .withDescription("The full class name of the shuffle service factory implementation to be used by the cluster. " +
            "The default implementation uses Netty for network communication and local memory as well disk space " +
            "to store results on a TaskExecutor.");

    public static ShuffleServiceFactory<?, ?, ?> loadShuffleServiceFactory(Configuration configuration) throws FlinkException {
        String shuffleServiceClassName = configuration.getString(SHUFFLE_SERVICE_FACTORY_CLASS);
        ClassLoader classLoader = Thread.currentThread().getContextClassLoader();
        return InstantiationUtil.instantiate(
            shuffleServiceClassName,
            ShuffleServiceFactory.class,
            classLoader);
    }
}
```
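Stripped of Flink types, the loader follows a simple pattern: read a class name from the configuration, fall back to a default, and instantiate that class reflectively through its no-argument constructor. The sketch below shows the pattern in plain Java. ShuffleFactory, NettyLikeFactory, CustomFactory and ShuffleFactoryLoader are hypothetical names and a Map stands in for Flink's Configuration; only the key shuffle-service-factory.class is taken from the code above.

```java
import java.util.Map;

// Hypothetical stand-in for Flink's ShuffleServiceFactory interface.
interface ShuffleFactory {
    String name();
}

// Hypothetical default implementation, playing the role of NettyShuffleServiceFactory.
class NettyLikeFactory implements ShuffleFactory {
    public NettyLikeFactory() {}
    public String name() { return "netty"; }
}

// Hypothetical user-provided implementation.
class CustomFactory implements ShuffleFactory {
    public CustomFactory() {}
    public String name() { return "custom"; }
}

class ShuffleFactoryLoader {
    static final String KEY = "shuffle-service-factory.class";
    static final String DEFAULT_CLASS = NettyLikeFactory.class.getName();

    static ShuffleFactory load(Map<String, String> config) {
        String className = config.getOrDefault(KEY, DEFAULT_CLASS);
        // Flink uses the thread context ClassLoader; this sketch uses its own
        // loader so that it also works when run standalone.
        ClassLoader classLoader = ShuffleFactoryLoader.class.getClassLoader();
        try {
            Class<?> clazz = Class.forName(className, true, classLoader);
            if (!ShuffleFactory.class.isAssignableFrom(clazz)) {
                throw new IllegalArgumentException(className + " does not implement ShuffleFactory");
            }
            // Instantiate through the public no-argument constructor.
            return (ShuffleFactory) clazz.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Could not instantiate " + className, e);
        }
    }
}
```

Swapping the implementation then only requires putting another class on the classpath and changing one configuration value, which is what makes the shuffle service pluggable.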

ShuffleEnvironment creates ResultPartition and InputGate

After receiving the task deployment description submitted by the JobManager, the TaskManager initializes a Task object. Inside the Task object, the ResultPartition and InputGate components are created through the ShuffleEnvironment according to that description.

Part of the Task constructor:

```java
// Create ResultPartitions
final ResultPartitionWriter[] resultPartitionWriters = shuffleEnvironment.createResultPartitionWriters(
    taskShuffleContext,
    resultPartitionDeploymentDescriptors).toArray(new ResultPartitionWriter[] {});

this.consumableNotifyingPartitionWriters = ConsumableNotifyingResultPartitionWriterDecorator.decorate(
    resultPartitionDeploymentDescriptors,
    resultPartitionWriters,
    this,
    jobId,
    resultPartitionConsumableNotifier);

// Create InputGates
final InputGate[] gates = shuffleEnvironment.createInputGates(
    taskShuffleContext,
    this,
    inputGateDeploymentDescriptors).toArray(new InputGate[] {});

// Wrap every InputGate so that the bytes it reads are counted in the task's IO metrics
this.inputGates = new InputGate[gates.length];
int counter = 0;
for (InputGate gate : gates) {
    inputGates[counter++] = new InputGateWithMetrics(gate, metrics.getIOMetricGroup().getNumBytesInCounter());
}
```
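InputGateWithMetrics works as a decorator: it forwards calls to the wrapped gate and adds the size of the data read to a byte counter shared by all gates of the task. A minimal sketch of that idea, using a hypothetical SimpleGate interface in place of Flink's InputGate and an AtomicLong in place of the metric Counter:

```java
import java.util.ArrayDeque;
import java.util.Optional;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical minimal gate: returns the next chunk of bytes, if any.
interface SimpleGate {
    Optional<byte[]> getNext();
}

// Hypothetical in-memory gate used only for the example.
class QueueGate implements SimpleGate {
    private final ArrayDeque<byte[]> queue = new ArrayDeque<>();

    void add(byte[] data) { queue.add(data); }

    @Override
    public Optional<byte[]> getNext() { return Optional.ofNullable(queue.poll()); }
}

// Decorator: forwards every call to the wrapped gate and adds the size of each
// returned chunk to a counter that may be shared by several gates.
class GateWithByteCounter implements SimpleGate {
    private final SimpleGate delegate;
    private final AtomicLong numBytesIn;

    GateWithByteCounter(SimpleGate delegate, AtomicLong numBytesIn) {
        this.delegate = delegate;
        this.numBytesIn = numBytesIn;
    }

    @Override
    public Optional<byte[]> getNext() {
        Optional<byte[]> next = delegate.getNext();
        next.ifPresent(bytes -> numBytesIn.addAndGet(bytes.length));
        return next;
    }
}
```

The consumer keeps talking to the SimpleGate interface, so metrics are added without changing either the gate or its callers.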

The Task instance wraps the InputGates and ResultPartitions into a RuntimeEnvironment object and passes it to StreamTask. StreamTask uses them to build the StreamTaskNetworkInput and RecordWriter components, which handle the input and output of the task's data.

ResultPartition & InputGate

A ResultPartition contains multiple ResultSubpartition instances and a LocalBufferPool. Each ResultSubpartition has a queue that stores Buffer data, and the LocalBufferPool provides the memory for that Buffer data. The ResultPartitionManager monitors and manages all produced and consumed partitions within one TaskManager, that is, ResultPartitions and ResultSubpartitionViews. A ResultSubpartitionView consumes the Buffer data cached in its ResultSubpartition and then pushes it to the network.
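The relationship between a ResultPartition, its ResultSubpartitions, the ResultPartitionManager and a ResultSubpartitionView can be sketched as a small in-memory model. All class names below are hypothetical simplifications (strings instead of Buffers, no LocalBufferPool, single-threaded queues); the point is only that the manager is a per-TaskManager registry from which views over individual subpartitions are created.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical partition: one FIFO queue of serialized buffers per subpartition.
class SimplePartition {
    final String id;
    final List<ArrayDeque<String>> subpartitions = new ArrayList<>();

    SimplePartition(String id, int numSubpartitions) {
        this.id = id;
        for (int i = 0; i < numSubpartitions; i++) {
            subpartitions.add(new ArrayDeque<>());
        }
    }

    // Producer side: append a buffer to one subpartition.
    void emit(int subpartitionIndex, String buffer) {
        subpartitions.get(subpartitionIndex).add(buffer);
    }
}

// Hypothetical view that consumes the queue of one subpartition.
class SimpleSubpartitionView {
    private final ArrayDeque<String> queue;

    SimpleSubpartitionView(ArrayDeque<String> queue) { this.queue = queue; }

    // Returns null when nothing is queued.
    String getNextBuffer() { return queue.poll(); }
}

// Hypothetical per-TaskManager registry of produced partitions.
class SimplePartitionManager {
    private final Map<String, SimplePartition> partitions = new HashMap<>();

    synchronized void register(SimplePartition partition) {
        if (partitions.putIfAbsent(partition.id, partition) != null) {
            throw new IllegalStateException("Partition already registered: " + partition.id);
        }
    }

    synchronized SimpleSubpartitionView createSubpartitionView(String partitionId, int subpartitionIndex) {
        SimplePartition partition = partitions.get(partitionId);
        if (partition == null) {
            throw new IllegalArgumentException("Unknown partition: " + partitionId);
        }
        return new SimpleSubpartitionView(partition.subpartitions.get(subpartitionIndex));
    }
}
```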

An InputGate contains multiple InputChannel instances and a LocalBufferPool. The InputGate is wrapped as a CheckpointedInputGate, which handles checkpoint-related operations. Each InputChannel connects to the upstream ResultPartition through the ConnectionManager to read data from the network. It requests Buffer memory from the NetworkBufferPool through the LocalBufferPool and uses these Buffers to cache the binary data read from the network. The data is then pushed to the operator chain for processing with the help of the DataOutput component.

Startup and initialization of ResultPartition & InputGate

As mentioned above, ResultPartition & InputGate are created through the ShuffleEnvironment during Task initialization. When the Task thread runs, it calls setupPartitionsAndGates to start them. At this point a LocalBufferPool is created for each InputGate and ResultPartition, each ResultPartition is registered with the TaskManager's ResultPartitionManager, and each InputChannel requests its subpartition from the corresponding upstream task, i.e., it creates a connection to the upstream ResultPartition through the ConnectionManager.

```java
public class Task implements Runnable, TaskSlotPayload, TaskActions, PartitionProducerStateProvider, CheckpointListener, BackPressureSampleableTask {

    private void doRun() {
        // ...
        setupPartitionsAndGates(consumableNotifyingPartitionWriters, inputGates);
        // ...

        // Start the StreamTask
        this.invokable = invokable;
        invokable.invoke();
    }

    @VisibleForTesting
    public static void setupPartitionsAndGates(
            ResultPartitionWriter[] producedPartitions, InputGate[] inputGates) throws IOException, InterruptedException {

        // Create a LocalBufferPool for each ResultPartition and register the ResultPartition
        // with the TaskManager's ResultPartitionManager
        for (ResultPartitionWriter partition : producedPartitions) {
            partition.setup();
        }

        // Create a LocalBufferPool for each InputGate; each InputChannel obtains a client for the
        // upstream ResultPartition through the ConnectionManager and sends it a PartitionRequest
        for (InputGate gate : inputGates) {
            gate.setup();
        }
    }
}
```

Startup process of InputGate

```java
public class SingleInputGate extends InputGate {

    @Override
    public void setup() throws IOException, InterruptedException {
        checkState(this.bufferPool == null, "Bug in input gate setup logic: Already registered buffer pool.");

        // assign exclusive buffers to input channels directly and use the rest for floating buffers
        assignExclusiveSegments();

        // Create the LocalBufferPool
        BufferPool bufferPool = bufferPoolFactory.get();
        setBufferPool(bufferPool);

        // Request the subpartitions from the upstream ResultPartitions
        requestPartitions();
    }

    @VisibleForTesting
    void requestPartitions() throws IOException, InterruptedException {
        synchronized (requestLock) {
            if (!requestedPartitionsFlag) {
                // ... sanity checks

                // Each InputChannel requests its subpartition from the upstream ResultPartition
                for (InputChannel inputChannel : inputChannels.values()) {
                    inputChannel.requestSubpartition(consumedSubpartitionIndex);
                }
            }

            requestedPartitionsFlag = true;
        }
    }
}
```
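assignExclusiveSegments and the comment above it split the gate's buffers into exclusive buffers owned by each channel and floating buffers shared by the whole gate. The sketch below models that split with counters only. GateBuffers and topUp are hypothetical names, and the rule that a channel borrows floating buffers until it holds a wanted amount is an assumption for illustration, not Flink's exact policy.

```java
import java.util.Arrays;

// Hypothetical counter-only model of one gate's buffers.
class GateBuffers {
    private final int[] held;   // buffers currently held by each channel
    private int floating;       // buffers left in the gate-wide floating pool

    GateBuffers(int numChannels, int exclusivePerChannel, int totalBuffers) {
        int required = numChannels * exclusivePerChannel;
        if (required > totalBuffers) {
            throw new IllegalArgumentException(
                "Not enough buffers: need " + required + " exclusive buffers, have " + totalBuffers);
        }
        held = new int[numChannels];
        // Every channel gets its exclusive buffers up front...
        Arrays.fill(held, exclusivePerChannel);
        // ...and the remainder is shared by all channels of the gate.
        floating = totalBuffers - required;
    }

    // Borrows floating buffers until the channel holds `wanted` buffers or the
    // floating pool is empty. Returns the number of buffers granted.
    int topUp(int channel, int wanted) {
        int granted = 0;
        while (held[channel] < wanted && floating > 0) {
            held[channel]++;
            floating--;
            granted++;
        }
        return granted;
    }

    int available(int channel) { return held[channel]; }

    int floating() { return floating; }
}
```

Exclusive buffers guarantee every channel can make progress, while floating buffers go to whichever channel currently has the most data waiting.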

```java
public class RemoteInputChannel extends InputChannel implements BufferRecycler, BufferListener {

    public void requestSubpartition(int subpartitionIndex) throws IOException, InterruptedException {
        if (partitionRequestClient == null) {
            // Create the client
            try {
                partitionRequestClient = connectionManager.createPartitionRequestClient(connectionId);
            } catch (IOException e) {
                // IOExceptions indicate that we could not open a connection to the remote TaskExecutor
                throw new PartitionConnectionException(partitionId, e);
            }

            // Send a PartitionRequest. When the NettyServer of the upstream ResultPartition receives it,
            // it creates a ResultSubpartitionView for the requested ResultSubpartition to read its data
            // (a NetworkSequenceViewReader is registered with the view as its listener)
            partitionRequestClient.requestSubpartition(partitionId, subpartitionIndex, this, 0);
        }
    }
}
```

At this point, ResultPartition & InputGate have been started and TCP connections have been established between them.
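In the code above, createPartitionRequestClient is keyed by a connectionId, which suggests that channels pointing to the same remote TaskManager can reuse one client instead of each opening its own TCP connection. The sketch below models such a cache with hypothetical names (RequestClient, RequestClientFactory) and strings instead of sockets; it assumes that a connection key consists of host, port and a connection index.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client; a real one would own a TCP channel.
class RequestClient {
    final String connectionKey;
    final List<String> requests = Collections.synchronizedList(new ArrayList<>());

    RequestClient(String connectionKey) { this.connectionKey = connectionKey; }

    // Records the request instead of sending a PartitionRequest over the network.
    void requestSubpartition(String partitionId, int subpartitionIndex) {
        requests.add(partitionId + "#" + subpartitionIndex);
    }
}

// Hypothetical factory that opens at most one client per (host, port, connection index).
class RequestClientFactory {
    private final ConcurrentMap<String, RequestClient> clients = new ConcurrentHashMap<>();
    final AtomicInteger connectionsOpened = new AtomicInteger();

    RequestClient create(String host, int port, int connectionIndex) {
        String key = host + ":" + port + "/" + connectionIndex;
        return clients.computeIfAbsent(key, k -> {
            connectionsOpened.incrementAndGet(); // stands in for opening a TCP connection
            return new RequestClient(k);
        });
    }
}
```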


ResultSubpartitionView reads Buffer data of ResultSubpartition

As described in the previous article, the RecordWriter serializes the data in the Task and writes it, in the form of BufferConsumer objects, into the Buffer queue of a ResultSubpartition, where it waits to be consumed. When the upstream NettyServer receives the PartitionRequest from an InputChannel, it creates a ResultSubpartitionView for the corresponding ResultSubpartition to read the data. At the same time, a NetworkSequenceViewReader is registered with the ResultSubpartitionView as its listener, and the Buffers in the ResultSubpartition queue are obtained through this listener.

```java
class PipelinedSubpartition extends ResultSubpartition {

    @Override
    public PipelinedSubpartitionView createReadView(BufferAvailabilityListener availabilityListener) throws IOException {
        final boolean notifyDataAvailable;
        synchronized (buffers) {
            // ...

            // availabilityListener is a CreditBasedSequenceNumberingViewReader. When a buffer in the queue
            // is available and there is enough credit, the CreditBasedSequenceNumberingViewReader calls
            // getNextBuffer of PipelinedSubpartitionView to read the data (which finally calls pollBuffer()
            // of the ResultSubpartition to read the Buffer data cached in the queue)
            readView = new PipelinedSubpartitionView(this, availabilityListener);
            notifyDataAvailable = !buffers.isEmpty();
        }

        if (notifyDataAvailable) {
            notifyDataAvailable();
        }

        return readView;
    }
}
```

```java
class PipelinedSubpartitionView implements ResultSubpartitionView {

    private final PipelinedSubpartition parent;

    // Called back through the listener to read the Buffer data cached in the ResultSubpartition
    @Nullable
    @Override
    public BufferAndBacklog getNextBuffer() {
        return parent.pollBuffer();
    }
}
```

```java
class CreditBasedSequenceNumberingViewReader implements BufferAvailabilityListener, NetworkSequenceViewReader {

    @Override
    public BufferAndAvailability getNextBuffer() throws IOException, InterruptedException {
        BufferAndBacklog next = subpartitionView.getNextBuffer();
        if (next != null) {
            sequenceNumber++;

            // Only data buffers consume credit; events are sent without credit
            if (next.buffer().isBuffer() && --numCreditsAvailable < 0) {
                throw new IllegalStateException("no credit available");
            }

            return new BufferAndAvailability(
                next.buffer(), isAvailable(next), next.buffersInBacklog());
        } else {
            return null;
        }
    }
}
```
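The credit check in getNextBuffer only charges data buffers: events pass through without consuming credit. The following sketch reproduces that rule together with an availability test that allows sending when the next item is an event, or a data buffer with credit left. CreditedReader and Message are hypothetical, and the isAvailable logic is an assumption modelled on the behaviour described here, not copied from Flink.

```java
import java.util.ArrayDeque;

// Hypothetical queued item: either a data buffer or an event.
class Message {
    final boolean isBuffer;
    final String payload;

    Message(boolean isBuffer, String payload) {
        this.isBuffer = isBuffer;
        this.payload = payload;
    }
}

class CreditedReader {
    private final ArrayDeque<Message> queue = new ArrayDeque<>();
    private int numCreditsAvailable;
    private int sequenceNumber;

    void add(Message message) { queue.add(message); }

    // The downstream announced `credit` more free buffers.
    void addCredit(int credit) { numCreditsAvailable += credit; }

    // Sendable if the head is an event, or a data buffer with at least one credit left.
    boolean isAvailable() {
        Message head = queue.peek();
        return head != null && (!head.isBuffer || numCreditsAvailable > 0);
    }

    // Mirrors getNextBuffer(): data buffers consume one credit, events consume none.
    Message getNextBuffer() {
        Message next = queue.poll();
        if (next == null) {
            return null;
        }
        sequenceNumber++;
        if (next.isBuffer && --numCreditsAvailable < 0) {
            throw new IllegalStateException("no credit available");
        }
        return next;
    }

    int credits() { return numCreditsAvailable; }

    int sequenceNumber() { return sequenceNumber; }
}
```

Letting events bypass credit means control messages such as checkpoint barriers are not held back just because the receiver has no free data buffers.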

That is how the ResultSubpartitionView reads the Buffer data in the ResultSubpartition. Next, let's see how the Buffer data is written to the network after being read.

On the upstream side, the ConnectionManager starts a NettyServer. Once it is running, PartitionRequestQueue, an implementation of Netty's ChannelHandler, calls back the getNextBuffer method of the NetworkSequenceViewReader, converts the returned Buffer into a NettyMessage and writes it to the network.

PartitionRequestQueue registers all NetworkSequenceViewReaders in allReaders, while availableReaders holds the currently active ones. PartitionRequestQueue loops over the active NetworkSequenceViewReaders to send their data.

```java
class PartitionRequestQueue extends ChannelInboundHandlerAdapter {

    // Context of the channel to the downstream client (assigned when the channel is registered)
    private ChannelHandlerContext ctx;

    // Called by Netty when the writability of the channel to the downstream client changes;
    // try to write the next message through that channel
    @Override
    public void channelWritabilityChanged(ChannelHandlerContext ctx) throws Exception {
        writeAndFlushNextMessageIfPossible(ctx.channel());
    }

    // Loops over the available readers and writes at most one buffer to the channel per call
    private void writeAndFlushNextMessageIfPossible(final Channel channel) throws IOException {
        if (fatalError || !channel.isWritable()) {
            return;
        }

        BufferAndAvailability next = null;
        try {
            while (true) {
                // Take the next available NetworkSequenceViewReader
                NetworkSequenceViewReader reader = pollAvailableReader();

                // No queue with available data. We allow this here, because
                // of the write callbacks that are executed after each write.
                if (reader == null) {
                    return;
                }

                // Read the Buffer data of the ResultSubpartition through the NetworkSequenceViewReader
                // (a data buffer consumes one credit)
                next = reader.getNextBuffer();
                if (next == null) {
                    if (!reader.isReleased()) {
                        continue;
                    }

                    Throwable cause = reader.getFailureCause();
                    if (cause != null) {
                        ErrorResponse msg = new ErrorResponse(
                            new ProducerFailedException(cause),
                            reader.getReceiverId());

                        ctx.writeAndFlush(msg);
                    }
                } else {
                    // If the reader still has data available, put it back into the available queue
                    // so that it is read again later
                    if (next.moreAvailable()) {
                        registerAvailableReader(reader);
                    }

                    // Wrap the Buffer data into a NettyMessage
                    BufferResponse msg = new BufferResponse(
                        next.buffer(),
                        reader.getSequenceNumber(),
                        reader.getReceiverId(),
                        next.buffersInBacklog());

                    // Write the data out through the Channel; writeListener runs after the write
                    channel.writeAndFlush(msg).addListener(writeListener);

                    return;
                }
            }
        } catch (Throwable t) {
            if (next != null) {
                next.buffer().recycleBuffer();
            }

            throw new IOException(t.getMessage(), t);
        }
    }
}
```
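The loop writes at most one buffer per call and puts a reader back at the tail of the queue if it has more data, so readers are served round-robin, and the write callback after each write drives the next call. A single-threaded sketch of that scheduling, with hypothetical QueuedReader and RoundRobinWriter classes and a list of strings standing in for the Netty channel:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Hypothetical reader holding the buffers of one subpartition for one receiver.
class QueuedReader {
    final String receiverId;
    private final ArrayDeque<String> buffers = new ArrayDeque<>();

    QueuedReader(String receiverId, String... data) {
        this.receiverId = receiverId;
        for (String d : data) {
            buffers.add(d);
        }
    }

    String getNextBuffer() { return buffers.poll(); }

    boolean moreAvailable() { return !buffers.isEmpty(); }
}

class RoundRobinWriter {
    private final ArrayDeque<QueuedReader> availableReaders = new ArrayDeque<>();
    final List<String> wire = new ArrayList<>(); // stands in for channel.writeAndFlush(...)

    void registerAvailable(QueuedReader reader) { availableReaders.add(reader); }

    // Writes at most one buffer per call; returns false when no reader has data.
    boolean writeNext() {
        while (true) {
            QueuedReader reader = availableReaders.poll();
            if (reader == null) {
                return false;
            }
            String next = reader.getNextBuffer();
            if (next == null) {
                continue; // nothing to send for this reader, try the next one
            }
            if (reader.moreAvailable()) {
                registerAvailable(reader); // re-queue at the tail: round-robin fairness
            }
            wire.add(reader.receiverId + ":" + next);
            return true;
        }
    }
}
```

Writing one buffer per call keeps a reader with a long backlog from starving the other subpartitions that share the same TCP connection.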

A NetworkSequenceViewReader is activated (added to availableReaders) in two ways:

1. When a new BufferConsumer is added to a ResultSubpartition, the notifyDataAvailable method of PipelinedSubpartitionView is called synchronously. This eventually triggers the userEventTriggered method of PartitionRequestQueue on the Netty event loop, which adds the corresponding NetworkSequenceViewReader to the queue of active readers.

```java
class PartitionRequestQueue extends ChannelInboundHandlerAdapter {

    @Override
    public void userEventTriggered(ChannelHandlerContext ctx, Object msg) throws Exception {
        // The user event triggered event loop callback is used for thread-safe
        // hand over of reader queues and cancelled producers.

        if (msg instanceof NetworkSequenceViewReader) {
            enqueueAvailableReader((NetworkSequenceViewReader) msg);
        } else if (msg.getClass() == InputChannelID.class) {
            // Release partition view that get a cancel request.
            InputChannelID toCancel = (InputChannelID) msg;

            // remove reader from queue of available readers
            availableReaders.removeIf(reader -> reader.getReceiverId().equals(toCancel));

            // remove reader from queue of all readers and release its resource
            final NetworkSequenceViewReader toRelease = allReaders.remove(toCancel);
            if (toRelease != null) {
                releaseViewReader(toRelease);
            }
        } else {
            ctx.fireUserEventTriggered(msg);
        }
    }
}
```
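The comment in userEventTriggered explains why the event loop is involved: producer threads never touch availableReaders directly, they hand the reader over to the Netty event loop, which is the only thread that mutates the queue. The sketch below models this with a single-threaded executor in place of the event loop. ViewReader, ReaderQueue and the registeredAsAvailable flag, which keeps a reader from being queued twice, are hypothetical names chosen for the illustration.

```java
import java.util.ArrayDeque;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical reader state.
class ViewReader {
    final String receiverId;
    volatile boolean hasData;          // written by the producer thread
    boolean registeredAsAvailable;     // only touched on the event-loop thread

    ViewReader(String receiverId) { this.receiverId = receiverId; }
}

class ReaderQueue {
    // A single daemon thread plays the role of the Netty event loop.
    private final ExecutorService eventLoop = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "event-loop");
        t.setDaemon(true);
        return t;
    });
    private final ArrayDeque<ViewReader> availableReaders = new ArrayDeque<>();

    // Called from any producer thread: hand the reader over to the event loop.
    void notifyReaderNonEmpty(ViewReader reader) {
        eventLoop.execute(() -> enqueueAvailableReader(reader));
    }

    // Runs only on the event-loop thread, so availableReaders needs no lock.
    private void enqueueAvailableReader(ViewReader reader) {
        if (reader.registeredAsAvailable || !reader.hasData) {
            return; // already queued, or nothing to send
        }
        reader.registeredAsAvailable = true;
        availableReaders.add(reader);
    }

    // Reads the queue size on the event-loop thread, after all earlier hand-overs ran.
    int queuedReaders() {
        Callable<Integer> size = availableReaders::size;
        try {
            return eventLoop.submit(size).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    void shutdown() { eventLoop.shutdown(); }
}
```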

2. When the credit changes. Credit represents the processing capacity of the downstream, i.e. how many buffers it can currently receive. The NetworkSequenceViewReader of a ResultSubpartition can be activated to read and send data only when the upstream holds credit for it, so when the downstream announces new credit, PartitionRequestQueue adds the credit to the reader and activates it.

```java
class PartitionRequestQueue extends ChannelInboundHandlerAdapter {

    void addCredit(InputChannelID receiverId, int credit) throws Exception {
        if (fatalError) {
            return;
        }

        NetworkSequenceViewReader reader = allReaders.get(receiverId);
        if (reader != null) {
            reader.addCredit(credit);

            enqueueAvailableReader(reader);
        } else {
            throw new IllegalStateException("No reader for receiverId = " + receiverId + " exists.");
        }
    }
}
```
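The effect of credit is that the sender never has more buffers in flight than the receiver has free buffers. The small simulation below assumes the receiver starts by announcing one credit per free buffer and returns one credit for every buffer it consumes; it shows that the number of buffers waiting at the receiver never exceeds its buffer count, however much data the sender has. CreditFlowSimulation is a hypothetical helper, not part of Flink.

```java
class CreditFlowSimulation {

    // Returns the largest number of buffers ever waiting at the receiver.
    static int run(int receiverBuffers, int buffersToSend, int consumedPerRound) {
        if (receiverBuffers <= 0 || consumedPerRound <= 0) {
            throw new IllegalArgumentException("receiverBuffers and consumedPerRound must be positive");
        }
        int credit = receiverBuffers; // initial credit: one per free receiver buffer
        int sent = 0;
        int queuedAtReceiver = 0;
        int maxQueued = 0;

        while (sent < buffersToSend || queuedAtReceiver > 0) {
            // Sender: send only while it has both data and credit.
            while (sent < buffersToSend && credit > 0) {
                credit--;
                sent++;
                queuedAtReceiver++;
            }
            maxQueued = Math.max(maxQueued, queuedAtReceiver);

            // Receiver: process buffers, recycle them and announce the freed credit.
            int consumed = Math.min(consumedPerRound, queuedAtReceiver);
            queuedAtReceiver -= consumed;
            credit += consumed;
        }
        return maxQueued;
    }
}
```

For example, run(2, 10, 1) returns 2: with two receiver buffers the sender can be at most two buffers ahead, which is how backpressure propagates upstream without dropping data.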

InputChannel reads Buffer data

The previous article explained how StreamTask consumes data from the Buffer queue of an InputChannel. Here we explain how the InputChannel reads data from the network.

The remote InputChannel (RemoteInputChannel) provides the onBuffer method, which caches Buffer data in the InputChannel's queue. onBuffer is called back by CreditBasedPartitionRequestClientHandler, an implementation of Netty's ChannelHandler: when a Buffer arrives at the InputChannel over Netty's TCP connection, it is written to the InputChannel's queue through onBuffer.

CreditBasedPartitionRequestClientHandler (a ChannelInboundHandlerAdapter) parses Buffer data from the network and writes it to the InputChannel

```java
class CreditBasedPartitionRequestClientHandler extends ChannelInboundHandlerAdapter implements NetworkClientHandler {

    // ctx and inputChannels are fields of the handler (declarations omitted)

    private void decodeMsg(Object msg) throws Throwable {
        final Class<?> msgClazz = msg.getClass();

        // ---- Buffer data ---------------------------------------------------
        if (msgClazz == NettyMessage.BufferResponse.class) {
            NettyMessage.BufferResponse bufferOrEvent = (NettyMessage.BufferResponse) msg;

            RemoteInputChannel inputChannel = inputChannels.get(bufferOrEvent.receiverId);
            if (inputChannel == null) {
                // The receiving channel no longer exists: release the data and cancel the request
                bufferOrEvent.releaseBuffer();
                cancelRequestFor(bufferOrEvent.receiverId);
                return;
            }

            decodeBufferOrEvent(inputChannel, bufferOrEvent);

        } else if (msgClazz == NettyMessage.ErrorResponse.class) {
            // ---- Error ---------------------------------------------------------
            NettyMessage.ErrorResponse error = (NettyMessage.ErrorResponse) msg;

            SocketAddress remoteAddr = ctx.channel().remoteAddress();

            // ...
        } else {
            throw new IllegalStateException("Received unknown message from producer: " + msg.getClass());
        }
    }

    private void decodeBufferOrEvent(RemoteInputChannel inputChannel, NettyMessage.BufferResponse bufferOrEvent) throws Throwable {
        try {
            ByteBuf nettyBuffer = bufferOrEvent.getNettyBuffer();
            final int receivedSize = nettyBuffer.readableBytes();
            if (bufferOrEvent.isBuffer()) {
                // ---- Buffer ------------------------------------------------

                // Early return for empty buffers. Otherwise Netty's readBytes() throws an
                // IndexOutOfBoundsException.
                if (receivedSize == 0) {
                    inputChannel.onEmptyBuffer(bufferOrEvent.sequenceNumber, bufferOrEvent.backlog);
                    return;
                }

                // Get Buffer memory from the InputChannel
                Buffer buffer = inputChannel.requestBuffer();
                if (buffer != null) {
                    // Copy the data from the Netty buffer into the Buffer
                    nettyBuffer.readBytes(buffer.asByteBuf(), receivedSize);
                    buffer.setCompressed(bufferOrEvent.isCompressed);

                    // Put the Buffer into the InputChannel's Buffer queue
                    inputChannel.onBuffer(buffer, bufferOrEvent.sequenceNumber, bufferOrEvent.backlog);
                } else if (inputChannel.isReleased()) {
                    cancelRequestFor(bufferOrEvent.receiverId);
                } else {
                    throw new IllegalStateException("No buffer available in credit-based input channel.");
                }
            } else {
                // ---- Event -------------------------------------------------
                // TODO We can just keep the serialized data in the Netty buffer and release it later at the reader
                byte[] byteArray = new byte[receivedSize];
                nettyBuffer.readBytes(byteArray);

                MemorySegment memSeg = MemorySegmentFactory.wrap(byteArray);
                Buffer buffer = new NetworkBuffer(memSeg, FreeingBufferRecycler.INSTANCE, false, receivedSize);

                inputChannel.onBuffer(buffer, bufferOrEvent.sequenceNumber, bufferOrEvent.backlog);
            }
        } finally {
            bufferOrEvent.releaseBuffer();
        }
    }
}
```
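Because the sender only transmits a data buffer when it holds credit, the receiver should always find a free buffer; that is why decodeBufferOrEvent treats a missing buffer on a live channel as an IllegalStateException. The sketch below mirrors the three cases (empty buffer, data buffer copied into a pooled buffer, event copied into a fresh array) using java.nio.ByteBuffer in place of Netty's ByteBuf. ReceivingChannel is a hypothetical class, and it assumes every incoming buffer fits into one pooled buffer.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Hypothetical receiving side of one channel.
class ReceivingChannel {
    private final ArrayDeque<ByteBuffer> pool = new ArrayDeque<>(); // buffers backing announced credit
    final List<ByteBuffer> received = new ArrayList<>();
    int emptyBuffers;

    ReceivingChannel(int pooledBuffers, int bufferSize) {
        for (int i = 0; i < pooledBuffers; i++) {
            pool.add(ByteBuffer.allocate(bufferSize));
        }
    }

    void decode(ByteBuffer wire, boolean isBuffer) {
        int receivedSize = wire.remaining();
        if (isBuffer) {
            if (receivedSize == 0) {
                emptyBuffers++; // counterpart of onEmptyBuffer: nothing to copy
                return;
            }
            ByteBuffer target = pool.poll();
            if (target == null) {
                // With correct credit accounting this should never happen.
                throw new IllegalStateException("No buffer available in credit-based input channel.");
            }
            target.clear();
            target.put(wire); // throws BufferOverflowException if the data does not fit
            target.flip();    // make the copied bytes readable
            received.add(target);
        } else {
            // Events are copied into a fresh array that does not come from the pool.
            byte[] bytes = new byte[receivedSize];
            wire.get(bytes);
            received.add(ByteBuffer.wrap(bytes));
        }
    }
}
```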

RemoteInputChannel provides the onBuffer method, which writes Buffer data to its cache queue:

```java
public class RemoteInputChannel extends InputChannel implements BufferRecycler, BufferListener {

    public void onBuffer(Buffer buffer, int sequenceNumber, int backlog) throws IOException {
        boolean recycleBuffer = true;

        try {
            final boolean wasEmpty;
            synchronized (receivedBuffers) {
                // ...

                // Put the Buffer into the receivedBuffers queue
                wasEmpty = receivedBuffers.isEmpty();
                receivedBuffers.add(buffer);
                recycleBuffer = false;
            }

            // Advance the sequence number expected for the next message to keep messages in order
            ++expectedSequenceNumber;

            // Notify the InputGate only when the queue goes from empty to non-empty
            if (wasEmpty) {
                notifyChannelNonEmpty();
            }

            // The backlog tells the channel how many more buffers are queued upstream
            if (backlog >= 0) {
                onSenderBacklog(backlog);
            }
        } finally {
            // Recycle the Buffer memory if it was not handed over to the queue
            if (recycleBuffer) {
                buffer.recycleBuffer();
            }
        }
    }
}
```
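The elided part of onBuffer is assumed here to compare sequenceNumber with expectedSequenceNumber before queuing the buffer. The sketch below shows such a check together with the empty-to-non-empty notification, which wakes the InputGate once per batch of arrivals instead of once per buffer. OrderedChannel is a hypothetical class, and a counter replaces the real notifyChannelNonEmpty call.

```java
import java.util.ArrayDeque;
import java.util.concurrent.atomic.AtomicInteger;

class OrderedChannel {
    private final ArrayDeque<String> receivedBuffers = new ArrayDeque<>();
    private int expectedSequenceNumber;   // guarded by receivedBuffers
    final AtomicInteger nonEmptyNotifications = new AtomicInteger();

    void onBuffer(String buffer, int sequenceNumber) {
        final boolean wasEmpty;
        synchronized (receivedBuffers) {
            // Reject out-of-order delivery before touching the queue (assumed check).
            if (sequenceNumber != expectedSequenceNumber) {
                throw new IllegalStateException(
                    "Expected sequence number " + expectedSequenceNumber + " but got " + sequenceNumber);
            }
            wasEmpty = receivedBuffers.isEmpty();
            receivedBuffers.add(buffer);
            expectedSequenceNumber++;
        }
        // Notify outside the lock, and only on the empty -> non-empty transition.
        if (wasEmpty) {
            nonEmptyNotifications.incrementAndGet();
        }
    }

    String poll() {
        synchronized (receivedBuffers) {
            return receivedBuffers.poll();
        }
    }
}
```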

This completes the data exchange between InputGate and ResultPartition, which forms the upper-level logic of the shuffle. The actual transmission of Buffer and Event data over the TCP network is handled by the ConnectionManager, which creates the underlying network components such as NettyServer and NettyClient.