I haven't implemented shadows myself before, though I understand them conceptually. This time I'm working through it with a demo.

In general there isn't much to optimize here. For something like a window, though, which can be represented by a flat quad, it seems the shadow can be replaced with a texture map; ARM did this before in its chess room demo.

Back to shadow mapping. The main idea is to recover world positions from depth maps: a camera placed at the light source renders a depth map of the scene, which gives the world position of each pixel as seen from the light.
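To make the reconstruction step concrete, here is a minimal sketch in plain C# (so it runs outside Unity) of turning a light-camera pixel back into a world position. It assumes an orthographic light camera described by a position, an orthonormal right/up/forward basis and a half-height `OrthoSize` (the same meaning as Unity's `Camera.orthographicSize`), and a depth buffer that is linear between the near and far planes, which holds for orthographic projections. `OrthoLightCamera`, `LinearDepth` and `ViewportToWorld` are hypothetical names, not Unity API, and the `reversedZ` flag is my assumption about how a given platform stores depth.

```csharp
using System;
using System.Numerics;

// Hypothetical stand-in for an orthographic light camera (not Unity API).
public readonly struct OrthoLightCamera
{
    public readonly Vector3 Position;
    public readonly Vector3 Right, Up, Forward; // orthonormal basis, looking along +Forward
    public readonly float OrthoSize;            // half of the view height
    public readonly float Aspect;               // width / height
    public readonly float Near, Far;

    public OrthoLightCamera(Vector3 position, Vector3 right, Vector3 up, Vector3 forward,
                            float orthoSize, float aspect, float near, float far)
    {
        Position = position; Right = right; Up = up; Forward = forward;
        OrthoSize = orthoSize; Aspect = aspect; Near = near; Far = far;
    }

    // Orthographic depth is linear between the near and far planes.
    // Assumption: reversedZ means the buffer stores 1 at near and 0 at far.
    public float LinearDepth(float rawDepth, bool reversedZ)
    {
        float t = reversedZ ? 1f - rawDepth : rawDepth;
        return Near + t * (Far - Near);
    }

    // Viewport (u, v) in [0,1], (0,0) at bottom-left, plus the distance along
    // Forward from the camera, mapped back to a world-space position.
    public Vector3 ViewportToWorld(float u, float v, float linearDepth)
    {
        float x = (u - 0.5f) * 2f * OrthoSize * Aspect;
        float y = (v - 0.5f) * 2f * OrthoSize;
        return Position + Right * x + Up * y + Forward * linearDepth;
    }
}
```

For example, with the camera at the origin looking down +Z, `OrthoSize = 5` and `Aspect = 1`, viewport (1, 0.5) at depth 3 maps to (5, 0, 3).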

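For a point on screen, compare the world position reconstructed from the main camera with the one reconstructed from the light's depth map. If the distance between them is below a small error tolerance, both cameras see the same point, so it is not in shadow; otherwise something blocks the light and the point is in the shadow area.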
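The comparison itself is tiny. A sketch, assuming both world positions have already been reconstructed (one from the main camera's depth, one from the light's depth map at the matching pixel); `ShadowTest.IsLit` and the `epsilon` tolerance are hypothetical names for illustration:

```csharp
using System.Numerics;

// Hypothetical helper illustrating the visibility test (not a Unity API).
public static class ShadowTest
{
    // fromMainCamera: world position reconstructed from the main camera's depth.
    // fromLightDepth: world position the light camera sees at that point's pixel.
    // If both cameras see (nearly) the same surface point, nothing sits between
    // the light and the point, so it is lit; otherwise it is in shadow.
    public static bool IsLit(Vector3 fromMainCamera, Vector3 fromLightDepth, float epsilon)
    {
        return Vector3.Distance(fromMainCamera, fromLightDepth) < epsilon;
    }
}
```

The tolerance has to absorb depth precision and the fact that one depth texel covers an area rather than a point: too small and lit surfaces shadow themselves, too large and thin occluders stop casting shadows.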

There are, of course, more efficient ways to do this. Mapping a pixel from camera A into camera B is just a projection transform.

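In Unity, `Camera.WorldToViewportPoint` (e.g. `Camera.main.WorldToViewportPoint`, or the same call on the light camera) converts a world position into viewport coordinates. x and y fall in the 0-1 range inside the view, so they can be used directly as texture coordinates for sampling.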
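For an orthographic light camera, the world-to-viewport step reduces to a few dot products. The sketch below is my own plain-C# stand-in, not Unity's implementation; it assumes an orthographic camera given by a position, an orthonormal right/up/forward basis and `orthoSize` as half the view height, and follows Unity's documented viewport convention ((0,0) bottom-left, (1,1) top-right, z in world units from the camera). `LightProjection` and `SampleNearest` are hypothetical names, and the lookup assumes the depth map is stored with row 0 at the bottom.

```csharp
using System;
using System.Numerics;

// Hypothetical helpers (not Unity API) for an orthographic light camera.
public static class LightProjection
{
    // Returns (u, v, z): u and v are in [0,1] for points inside the view,
    // bottom-left = (0,0); z is the distance along forward in world units.
    public static Vector3 WorldToViewport(Vector3 world, Vector3 camPos, Vector3 right,
                                          Vector3 up, Vector3 forward, float orthoSize, float aspect)
    {
        Vector3 d = world - camPos;
        float u = Vector3.Dot(d, right) / (2f * orthoSize * aspect) + 0.5f;
        float v = Vector3.Dot(d, up) / (2f * orthoSize) + 0.5f;
        return new Vector3(u, v, Vector3.Dot(d, forward));
    }

    // Nearest-texel lookup in a depth map stored as [row, column], row 0 = bottom.
    // Coordinates outside [0,1] are clamped to the border texel.
    public static float SampleNearest(float[,] depthMap, float u, float v)
    {
        int height = depthMap.GetLength(0);
        int width = depthMap.GetLength(1);
        int x = Math.Min(Math.Max((int)(u * width), 0), width - 1);
        int y = Math.Min(Math.Max((int)(v * height), 0), height - 1);
        return depthMap[y, x];
    }
}
```

Because an orthographic light's rays are parallel, the point the light sees at the same (u, v) lies on the same ray along `forward`, so up to texel quantization the world-space distance test reduces to comparing `z` with the sampled linear depth.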

The approach is relatively simple and straightforward. I've skipped some steps that are already well covered online, so let's go through the specific steps.

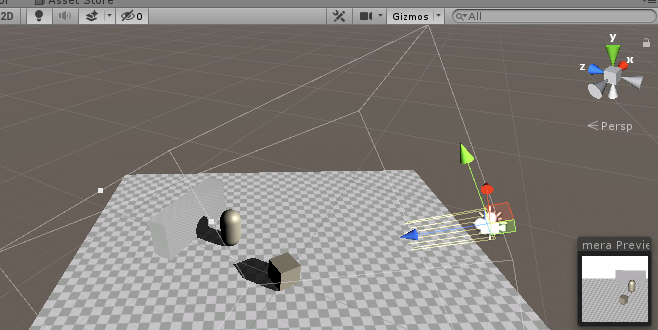

1. Attach a camera as a child of the light source and set it to orthographic projection. Make sure the light camera can see the objects that should cast shadows. Then attach a script that makes the camera render depth to the light camera. Here I was lazy and just used `OnRenderImage` directly.

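`LightShadowMapFilter.cs`:

```csharp
using UnityEngine;

// Attach to the camera under the light source (built-in render pipeline).
[RequireComponent(typeof(Camera))]
public class LightShadowMapFilter : MonoBehaviour
{
    public Material mat;

    void Awake()
    {
        // Make this camera also generate a depth texture (_CameraDepthTexture).
        GetComponent<Camera>().depthTextureMode |= DepthTextureMode.Depth;
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        // Full-screen pass: the material's shader decides what gets written.
        Graphics.Blit(source, destination, mat);
    }
}
```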