Packet sticking and unpacking (splitting) are unavoidable in TCP network programming. Whether on the server or the client, whenever we read or send messages we have to take into account how the underlying TCP layer may stick messages together or split them apart.

TCP sticking and unpacking

TCP is a "stream" protocol. A stream is a sequence of bytes with no message boundaries. TCP does not understand what the upper-layer business data means; it divides the data into segments according to the actual state of its buffers. So a message that the application considers complete may be split by TCP into several segments, or several small messages may be packed into one larger segment. This is the so-called TCP sticking and unpacking problem.

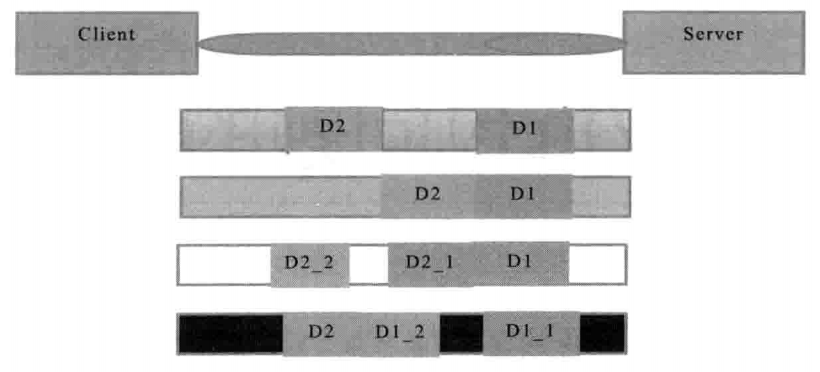

As shown in the figure, suppose the client sends two packets, D1 and D2, to the server. Because the number of bytes the server gets in a single read is not fixed, there are four possible situations:

- The server reads D1 and D2 in two separate reads, with no sticking or unpacking.

- The server receives D1 and D2 together in a single read; the two are stuck together. This is called TCP sticking.

- The server reads the data in two reads: the first contains all of D1 plus part of D2, and the second contains the rest of D2. This is called TCP unpacking.

- The server reads the data in two reads: the first contains part of D1 (D1_1), and the second contains the rest of D1 (D1_2) together with all of D2.

If the TCP receive window is very small and D1 and D2 are relatively large, a fifth situation is possible: the server needs several reads to receive D1 and D2 completely, and unpacking happens several times along the way.
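Because the stream carries no boundaries, what the server sees depends only on how many bytes each read happens to return. The following is a minimal, deterministic simulation rather than real socket code: D1 and D2 are stand-in byte strings ("AAAAAA" and "BBBB"), and the read sizes are arbitrary values chosen to reproduce the four cases above.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class StreamBoundaryDemo {

    // Slices the byte stream into consecutive reads of the given sizes,
    // mimicking how many bytes each read on the receiver might return.
    static List<String> simulateReads(byte[] stream, int... readSizes) {
        List<String> reads = new ArrayList<>();
        int offset = 0;
        for (int size : readSizes) {
            int end = Math.min(offset + size, stream.length);
            reads.add(new String(Arrays.copyOfRange(stream, offset, end), StandardCharsets.US_ASCII));
            offset = end;
        }
        return reads;
    }

    public static void main(String[] args) {
        // The sender writes D1 ("AAAAAA") and then D2 ("BBBB"); the receiver just sees 10 bytes.
        byte[] stream = "AAAAAABBBB".getBytes(StandardCharsets.US_ASCII);

        System.out.println(simulateReads(stream, 6, 4)); // [AAAAAA, BBBB]  no sticking or unpacking
        System.out.println(simulateReads(stream, 10));   // [AAAAAABBBB]    D1 and D2 stuck together
        System.out.println(simulateReads(stream, 8, 2)); // [AAAAAABB, BB]  all of D1 + part of D2, then the rest of D2
        System.out.println(simulateReads(stream, 3, 7)); // [AAA, AAABBBB]  part of D1, then the rest of D1 + all of D2
    }
}
```

The same 10 bytes produce four different views, which is why the receiver cannot rely on read boundaries to find message boundaries.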

Reasons for TCP sticking and unpacking

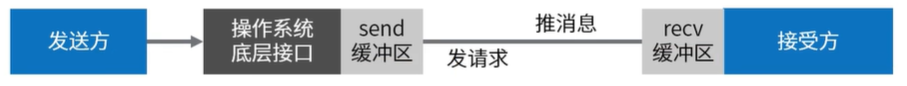

Data sent from the sender to the receiver passes through the operating system's socket buffers, and this is the main reason for sticking and unpacking. Sticking can be understood as data accumulating in the buffer, so that the data of several requests ends up stuck together; unpacking can be understood as sending more data than the buffer can take at once, so the data is split up.

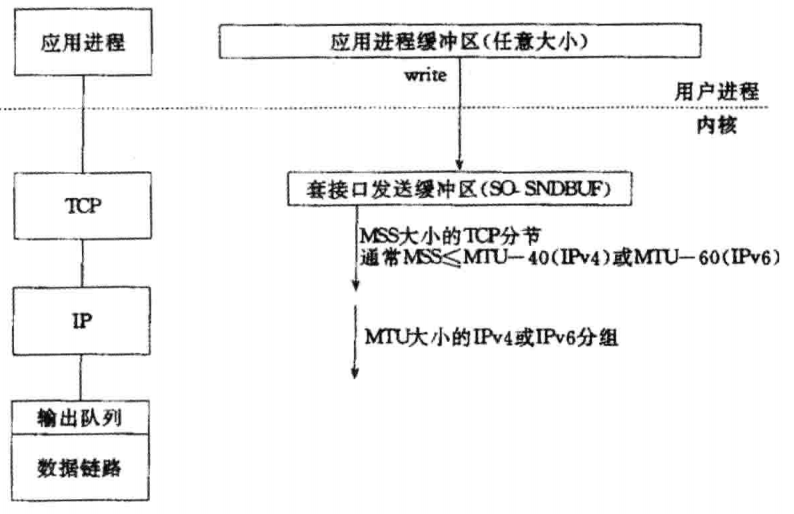

In detail, there are three main reasons for sticking and unpacking.

- The number of bytes the application writes in a single write call is larger than the socket's send buffer.

- TCP segments the data according to the MSS (Maximum Segment Size).

- The IP packet is larger than the MTU (the maximum payload of an Ethernet frame), so it undergoes IP fragmentation.
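The MSS reason can be made concrete with a little arithmetic. The sketch below assumes a typical Ethernet MTU of 1500 bytes and 20-byte IPv4 and TCP headers with no options, which gives an MSS of 1460 bytes. It is a simplified model: a real TCP stack may cut segments differently (for example, depending on its congestion window).

```java
import java.util.Arrays;

public class MssSegmentation {

    // Assumed values: Ethernet MTU, IPv4 header and TCP header without options.
    static final int MTU = 1500;
    static final int IP_HEADER = 20;
    static final int TCP_HEADER = 20;
    static final int MSS = MTU - IP_HEADER - TCP_HEADER; // 1460

    // Returns the payload size of each segment when one write of writeSize bytes
    // is cut into segments of at most mss bytes.
    static int[] segmentSizes(int writeSize, int mss) {
        int count = (writeSize + mss - 1) / mss; // ceiling division
        int[] sizes = new int[count];
        for (int i = 0; i < count; i++) {
            sizes[i] = Math.min(mss, writeSize - i * mss);
        }
        return sizes;
    }

    public static void main(String[] args) {
        // One 4000-byte application message leaves as three TCP segments.
        System.out.println(Arrays.toString(segmentSizes(4000, MSS))); // [1460, 1460, 1080]
    }
}
```

Each of these segments may reach the receiving application in a separate read, so one 4000-byte message can arrive in several pieces.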

Solutions to sticking and unpacking

Because TCP itself does not understand the business data of the upper layer, it cannot guarantee that packets will not be split and recombined at the lower layers. The problem can only be solved in the design of the upper-layer application protocol. The solutions used by mainstream industry protocols can be summarized as follows:

- Fixed-length messages: every message is exactly LEN bytes long, so once LEN bytes have been read we consider one complete message received.

- Use a line break (carriage return / line feed) as the message terminator.

- Use a special delimiter as the end marker of each message. The line break above is simply one particular choice of delimiter.

- Define a length field in the message header that gives the total length of the message.
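The length-field scheme is the most general of these. Below is a minimal plain-Java sketch, assuming a 4-byte big-endian length header; the class and method names are illustrative and not taken from any library. The decoder buffers incoming bytes and emits a message only once its header and its full body have arrived, so it copes with both sticking and unpacking.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LengthPrefixFraming {

    // Encodes one message as a 4-byte big-endian length header followed by the UTF-8 body.
    static byte[] encode(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + bytes.length).putInt(bytes.length).put(bytes).array();
    }

    // Accumulates incoming chunks and returns only complete messages;
    // bytes of an incomplete message stay buffered until more data arrives.
    static class Decoder {
        private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

        List<String> feed(byte[] chunk) {
            pending.write(chunk, 0, chunk.length);
            ByteBuffer buf = ByteBuffer.wrap(pending.toByteArray());
            List<String> messages = new ArrayList<>();
            while (buf.remaining() >= 4) {
                buf.mark();
                int length = buf.getInt();
                if (length < 0) {
                    throw new IllegalStateException("negative length field: " + length);
                }
                if (buf.remaining() < length) {
                    buf.reset(); // header seen but body incomplete: wait for more bytes
                    break;
                }
                byte[] body = new byte[length];
                buf.get(body);
                messages.add(new String(body, StandardCharsets.UTF_8));
            }
            // Keep only the unconsumed tail for the next call.
            pending.reset();
            pending.write(buf.array(), buf.position(), buf.remaining());
            return messages;
        }
    }

    public static void main(String[] args) {
        byte[] hello = encode("hello"); // 4-byte header + 5 bytes
        byte[] world = encode("world");
        byte[] stream = new byte[hello.length + world.length];
        System.arraycopy(hello, 0, stream, 0, hello.length);
        System.arraycopy(world, 0, stream, hello.length, world.length);

        Decoder decoder = new Decoder();
        // First read: header of "hello" plus "hel", not complete yet
        System.out.println(decoder.feed(Arrays.copyOfRange(stream, 0, 7)));             // []
        // Second read: the rest of "hello" with all of "world" stuck on
        System.out.println(decoder.feed(Arrays.copyOfRange(stream, 7, stream.length))); // [hello, world]
    }
}
```

A production decoder would also cap the allowed length so that a corrupt header cannot make it buffer without limit.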

Solutions for sticking and unpacking in Netty

Of the solutions described in the previous section, the sending side (adding the framing to outgoing messages) is relatively simple, and users can write their own encoders for it; Netty's built-in help there is limited (for example, LengthFieldPrepender for the length-field scheme). The receiving side, which has to split the stream back into messages, is more complex and needs more involved code, so Netty provides four decoders for it:

- FixedLengthFrameDecoder: a fixed-length decoder that splits the stream into application-layer packets of a fixed size.

- LineBasedFrameDecoder: a line-based decoder that uses line breaks as the separator between application-layer packets.

- DelimiterBasedFrameDecoder: a delimiter-based decoder that splits application-layer packets on a custom delimiter.

- LengthFieldBasedFrameDecoder: a length-based decoder that reads the length of each application-layer packet from the data itself and splits the stream accordingly. It requires the application-layer protocol to carry the packet length in each packet.
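As an illustration of the last decoder, the sketch below feeds two length-prefixed messages into a LengthFieldBasedFrameDecoder through Netty's EmbeddedChannel, deliberately cut at a point that splits the first message. It assumes Netty 4.1 on the classpath; the 4-byte header, the 1024-byte maximum frame length and the helper names `frame` and `decode` are illustrative choices.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.LengthFieldBasedFrameDecoder;

import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LengthFieldDemo {

    // Encodes one message as [4-byte big-endian length][UTF-8 body].
    static byte[] frame(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + bytes.length).putInt(bytes.length).put(bytes).array();
    }

    // Feeds the chunks into a LengthFieldBasedFrameDecoder and collects the decoded frames.
    static List<String> decode(byte[]... chunks) {
        EmbeddedChannel ch = new EmbeddedChannel(
                // maxFrameLength, lengthFieldOffset, lengthFieldLength, lengthAdjustment, initialBytesToStrip
                new LengthFieldBasedFrameDecoder(1024, 0, 4, 0, 4));
        for (byte[] chunk : chunks) {
            ch.writeInbound(Unpooled.wrappedBuffer(chunk));
        }
        List<String> frames = new ArrayList<>();
        ByteBuf frame;
        while ((frame = ch.readInbound()) != null) {
            frames.add(frame.toString(StandardCharsets.UTF_8)); // header already stripped
            frame.release();
        }
        ch.finish();
        return frames;
    }

    public static void main(String[] args) {
        byte[] hello = frame("hello");
        byte[] world = frame("world");
        byte[] all = new byte[hello.length + world.length];
        System.arraycopy(hello, 0, all, 0, hello.length);
        System.arraycopy(world, 0, all, hello.length, world.length);
        // First read: header of "hello" plus "hel"; second read: the rest, with "world" stuck on.
        System.out.println(decode(Arrays.copyOfRange(all, 0, 7),
                Arrays.copyOfRange(all, 7, all.length))); // [hello, world]
    }
}
```

The other three decoders are added to a pipeline the same way, for example `new FixedLengthFrameDecoder(220)`, `new LineBasedFrameDecoder(1024)` or `new DelimiterBasedFrameDecoder(1024, Unpooled.copiedBuffer("$_", StandardCharsets.UTF_8))`, where the sizes and the `$_` delimiter are arbitrary example values.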

Using any of these decoders only requires adding it to Netty's handler chain (the ChannelPipeline). These four decoders cover most cases; beyond them, users can also write their own decoders. The following example shows a custom decoder, XDecoder, that splits the incoming stream into fixed 220-byte messages:

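```java
// Server main program
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class XNettyServer {

    public static void main(String[] args) throws Exception {
        // "Boss" thread pool: accepts incoming connections
        NioEventLoopGroup acceptGroup = new NioEventLoopGroup();
        // "Worker" thread pool: performs the I/O (reading and processing data) of accepted connections
        NioEventLoopGroup readGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap serverBootstrap = new ServerBootstrap();
            serverBootstrap
                    .group(acceptGroup, readGroup)
                    .channel(NioServerSocketChannel.class)
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            // Add the decoder; a new instance per channel, because it keeps per-connection state
                            pipeline.addLast(new XDecoder());
                            // Add the handler that prints the decoded content
                            pipeline.addLast(new XHandler());
                        }
                    });

            // Bind first, then report success
            ChannelFuture future = serverBootstrap.bind(7777).sync();
            System.out.println("Successful startup, port 7777");
            future.channel().closeFuture().sync();
        } finally {
            acceptGroup.shutdownGracefully();
            readGroup.shutdownGracefully();
        }
    }
}
```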

```java
// Decoder
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ByteToMessageDecoder;

import java.util.List;

public class XDecoder extends ByteToMessageDecoder {

    static final int PACKET_SIZE = 220;

    // Temporarily holds trailing bytes that do not yet form a complete message.
    // This is per-connection state, so the server creates one XDecoder per channel.
    private final ByteBuf tempMsg = Unpooled.buffer();

    /**
     * @param ctx the handler context
     * @param in  the newly received request data
     * @param out receives the complete messages split out of the (possibly glued) data
     * @throws Exception on decoding failure
     */
    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        System.out.println(Thread.currentThread() + " A packet was received, the length of which is: " + in.readableBytes());

        // The data to split: leftover bytes from the previous call (if any) followed by the new data
        ByteBuf message;
        int tmpMsgSize = tempMsg.readableBytes();
        boolean merged = tmpMsgSize > 0;
        if (merged) {
            message = Unpooled.buffer(tmpMsgSize + in.readableBytes());
            message.writeBytes(tempMsg); // consumes all of tempMsg
            message.writeBytes(in);      // consumes all of in
            System.out.println("Merge: the remaining length of the previous packet is " + tmpMsgSize
                    + ", the merged length is " + message.readableBytes());
        } else {
            message = in;
        }

        // Split off as many complete PACKET_SIZE-byte messages as are available
        int counter = message.readableBytes() / PACKET_SIZE;
        for (int i = 0; i < counter; i++) {
            byte[] request = new byte[PACKET_SIZE];
            message.readBytes(request);
            // Put each complete message in out for the business handlers that follow
            out.add(Unpooled.copiedBuffer(request));
        }

        // Whatever remains (fewer than PACKET_SIZE bytes) is the start of the next message:
        // keep it so it can be prefixed to the next chunk of data.
        tempMsg.clear();
        int size = message.readableBytes();
        if (size != 0) {
            System.out.println("Redundant data length: " + size);
            // Copy directly; message.readBytes(size) would allocate a new buffer that is never released
            tempMsg.writeBytes(message, size);
        }

        // The merged buffer was allocated here, so release it; `in` is managed by ByteToMessageDecoder
        if (merged) {
            message.release();
        }
    }

    @Override
    protected void handlerRemoved0(ChannelHandlerContext ctx) throws Exception {
        // Free the leftover buffer when the handler is removed (e.g. the channel is closed)
        tempMsg.release();
    }
}
```
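XDecoder manages its own tempMsg buffer, but ByteToMessageDecoder already does this work: any bytes that decode() leaves unread stay in its internal cumulation buffer and are prepended to the next chunk. A shorter variant can rely on that. The sketch below (the class name SimpleFixedDecoder and the frameSizes helper are hypothetical, and Netty 4.1 is assumed) emits fixed-size frames and uses Netty's EmbeddedChannel to feed it chunks that do not line up with message boundaries.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.handler.codec.ByteToMessageDecoder;

import java.util.ArrayList;
import java.util.List;

public class SimpleFixedDecoder extends ByteToMessageDecoder {

    private final int packetSize;

    public SimpleFixedDecoder(int packetSize) {
        this.packetSize = packetSize;
    }

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) {
        // Emit every complete packet; bytes of an incomplete packet stay unread in `in`,
        // and ByteToMessageDecoder keeps them and prepends them to the next chunk.
        while (in.readableBytes() >= packetSize) {
            out.add(in.readRetainedSlice(packetSize));
        }
    }

    // Feeds chunks of the given sizes and returns the size of each decoded frame.
    static List<Integer> frameSizes(int packetSize, int... chunkSizes) {
        EmbeddedChannel ch = new EmbeddedChannel(new SimpleFixedDecoder(packetSize));
        for (int size : chunkSizes) {
            ch.writeInbound(Unpooled.wrappedBuffer(new byte[size]));
        }
        List<Integer> sizes = new ArrayList<>();
        ByteBuf frame;
        while ((frame = ch.readInbound()) != null) {
            sizes.add(frame.readableBytes());
            frame.release();
        }
        ch.finish();
        return sizes;
    }

    public static void main(String[] args) {
        // 100 + 300 + 260 = 660 bytes, exactly three 220-byte packets arriving out of step.
        System.out.println(frameSizes(220, 100, 300, 260)); // [220, 220, 220]
    }
}
```

This behaviour is essentially what Netty's built-in FixedLengthFrameDecoder provides, so `new FixedLengthFrameDecoder(220)` could take XDecoder's place in the pipeline.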

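```java
// Processor
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;

import java.nio.charset.StandardCharsets;

public class XHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
        ctx.flush();
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        ByteBuf byteBuf = (ByteBuf) msg;
        try {
            byte[] content = new byte[byteBuf.readableBytes()];
            byteBuf.readBytes(content);
            // Assumes the client sends UTF-8 text
            System.out.println(Thread.currentThread() + ": Final printing " + new String(content, StandardCharsets.UTF_8));
        } finally {
            // This is the last handler to use the message, so it releases it even if printing fails
            byteBuf.release();
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```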