TCP sticking / unpacking

TCP is a "stream" protocol. A stream is a sequence of bytes with no boundaries, like water flowing in a river: it is one continuous piece with no dividing lines. The TCP layer knows nothing about the meaning of the application data above it, and it divides the data into segments according to the state of the TCP buffers. As a result, what the application regards as one complete packet may be split by TCP into several segments for transmission, and several small packets may be merged into one larger segment. This is the so-called TCP packet sticking / unpacking problem.

Explanation of sticking / unpacking problems

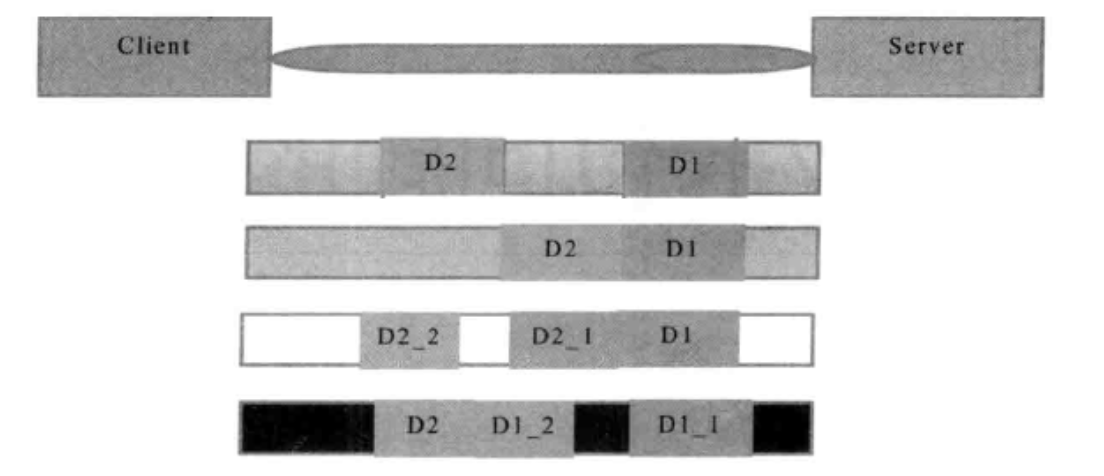

Suppose the client sends two packets, D1 and D2, to the server one after the other. Because the number of bytes the server reads at a time is not fixed, the following four situations may occur.

- The server reads twice and gets two independent packets, D1 and D2, with no sticking or unpacking;

- The server reads both packets in a single read, with D1 and D2 stuck together; this is called TCP packet sticking;

- The server reads twice: the first read returns the complete D1 and part of D2, and the second read returns the rest of D2; this is called TCP unpacking;

- The server reads twice: the first read returns part of D1 (D1_1), and the second read returns the rest of D1 (D1_2) together with D2.

If the server's TCP receive window is very small and D1 and D2 are relatively large, a fifth case is likely: the server needs several reads to receive D1 and D2 completely, and the packets are split multiple times along the way.
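The four situations can be reproduced without a network. The following is a minimal sketch in plain Java, assuming a `ByteArrayInputStream` as a stand-in for the socket and a hypothetical helper `readInChunks` whose chunk sizes play the role of the unpredictable amount each read returns; D1 is "AAAA" and D2 is "BBBB".

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class ReadBoundaryDemo {

    // Hypothetical helper: reads the byte stream in chunks of the given sizes.
    // Each chunk stands in for whatever one socket read happens to return.
    static List<String> readInChunks(byte[] wire, int... chunkSizes) {
        ByteArrayInputStream in = new ByteArrayInputStream(wire);
        List<String> reads = new ArrayList<>();
        for (int size : chunkSizes) {
            byte[] buf = new byte[size];
            int n = in.read(buf, 0, size);
            if (n <= 0) {
                break; // nothing left in the stream
            }
            reads.add(new String(buf, 0, n, StandardCharsets.US_ASCII));
        }
        return reads;
    }

    public static void main(String[] args) {
        // D1 = "AAAA" and D2 = "BBBB", written back to back by the sender
        byte[] wire = "AAAABBBB".getBytes(StandardCharsets.US_ASCII);
        System.out.println(readInChunks(wire, 4, 4)); // case 1: [AAAA, BBBB]
        System.out.println(readInChunks(wire, 8));    // case 2: [AAAABBBB]
        System.out.println(readInChunks(wire, 6, 2)); // case 3: [AAAABB, BB]
        System.out.println(readInChunks(wire, 2, 6)); // case 4: [AA, AABBBB]
    }
}
```

The sender's bytes are identical in all four runs; only the read sizes differ, which is why the receiver cannot infer message boundaries from the reads themselves.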

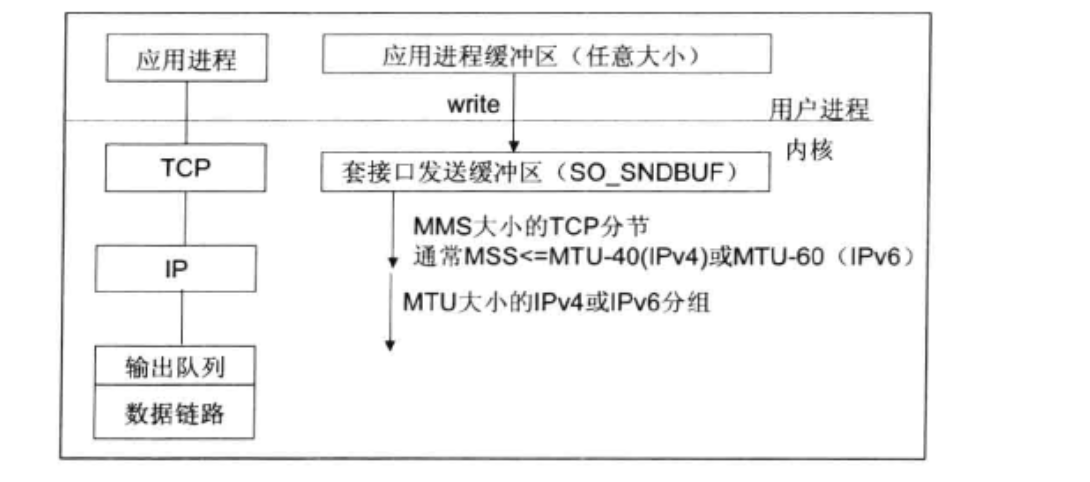

Causes of TCP packet sticking / unpacking:

- The number of bytes the application writes is larger than the send buffer of the socket;

- TCP segmentation is performed according to the MSS;

- The payload of an Ethernet frame is larger than the MTU, so IP fragmentation is performed.
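To give a feel for the numbers behind MSS-based segmentation, here is a small arithmetic sketch. It assumes a 1500-byte MTU, a 20-byte IPv4 header and a 20-byte TCP header with no options; the class and method names are made up for illustration, and real stacks may use different values.

```java
class SegmentCount {

    // Assumption: 20-byte IPv4 header + 20-byte TCP header, no options.
    static int mss(int mtu) {
        return mtu - 20 - 20;
    }

    // Number of TCP segments needed to carry payloadBytes (ceiling division).
    static int segments(int payloadBytes, int mss) {
        return (payloadBytes + mss - 1) / mss;
    }

    public static void main(String[] args) {
        int mss = mss(1500);
        System.out.println(mss);                 // 1460
        System.out.println(segments(4000, mss)); // 3
    }
}
```

Under these assumptions, a single 4000-byte application write leaves the host as three segments, so the receiver may see it arrive in several pieces.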

Solutions to sticking problems:

Because the underlying TCP layer cannot understand the application data above it, it cannot guarantee that packets will not be split and recombined at the lower layer. The problem can only be solved in the design of the upper-layer application protocol. The solutions used by mainstream protocols in the industry can be summarized as follows.

- Fixed-length messages: for example, every message is fixed at 200 bytes, and shorter messages are padded with spaces;

- Add a carriage return and line feed at the end of each packet as a delimiter, as the FTP protocol does;

- Divide the message into a header and a body, where the header contains a field holding the total length of the message (or the length of the body). A common design is for the first field of the header to be an int32 holding the total message length;

- Use a more complex application-layer protocol.
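The header-plus-body approach can be sketched in plain Java as follows. This is only an illustration under stated assumptions: the length field is a 4-byte big-endian int that holds the body length (not the total length), and the class `LengthPrefixFraming` with its `encode` and `feed` methods is hypothetical, not part of any library.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class LengthPrefixFraming {

    // Bytes received so far that do not yet form a complete message.
    private byte[] pending = new byte[0];

    // Encode one message: 4-byte big-endian body length, then the body.
    static byte[] encode(String body) {
        byte[] payload = body.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + payload.length)
                .putInt(payload.length)
                .put(payload)
                .array();
    }

    // Feed the bytes of one read; returns every message that is now complete.
    List<String> feed(byte[] chunk) {
        byte[] merged = Arrays.copyOf(pending, pending.length + chunk.length);
        System.arraycopy(chunk, 0, merged, pending.length, chunk.length);
        ByteBuffer buf = ByteBuffer.wrap(merged);
        List<String> messages = new ArrayList<>();
        while (buf.remaining() >= 4) {
            buf.mark();
            int length = buf.getInt();
            if (length < 0) {
                throw new IllegalStateException("negative length field: " + length);
            }
            if (buf.remaining() < length) {
                buf.reset(); // half packet: wait for more bytes
                break;
            }
            byte[] body = new byte[length];
            buf.get(body);
            messages.add(new String(body, StandardCharsets.UTF_8));
        }
        pending = new byte[buf.remaining()];
        buf.get(pending);
        return messages;
    }

    public static void main(String[] args) {
        byte[] a = encode("hello");
        byte[] b = encode("world!");
        byte[] wire = Arrays.copyOf(a, a.length + b.length);
        System.arraycopy(b, 0, wire, a.length, b.length);

        LengthPrefixFraming decoder = new LengthPrefixFraming();
        // The first read ends in the middle of the first message
        System.out.println(decoder.feed(Arrays.copyOfRange(wire, 0, 6)));           // []
        // The second read carries the rest of it plus the whole second message
        System.out.println(decoder.feed(Arrays.copyOfRange(wire, 6, wire.length))); // [hello, world!]
    }
}
```

The decoder keeps unfinished bytes between reads, so the result is the same no matter where the stream happens to be cut.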

A case where ignoring TCP packet sticking causes a malfunction

Server:

    import io.netty.bootstrap.ServerBootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioServerSocketChannel;

    public class TimeServer {

        public void bind(int port) throws Exception {
            // Configure the server's NIO thread groups
            EventLoopGroup bossGroup = new NioEventLoopGroup();
            EventLoopGroup workerGroup = new NioEventLoopGroup();
            try {
                ServerBootstrap bootstrap = new ServerBootstrap();
                bootstrap.group(bossGroup, workerGroup)
                        .channel(NioServerSocketChannel.class)
                        .option(ChannelOption.SO_BACKLOG, 1024)
                        .childHandler(new ChildChannelHandler());
                // Bind the port and wait synchronously for the bind to succeed
                ChannelFuture future = bootstrap.bind(port).sync();
                // Wait until the server's listening port is closed
                future.channel().closeFuture().sync();
            } finally {
                // Exit gracefully and release the thread pool resources
                bossGroup.shutdownGracefully();
                workerGroup.shutdownGracefully();
            }
        }

        private class ChildChannelHandler extends ChannelInitializer<SocketChannel> {
            @Override
            protected void initChannel(SocketChannel ch) throws Exception {
                ch.pipeline().addLast(new TimeServerHanler());
            }
        }

        public static void main(String[] args) throws Exception {
            int port = 8080;
            if (args != null && args.length > 0) {
                try {
                    port = Integer.valueOf(args[0]);
                } catch (NumberFormatException e) {
                    port = 6666; // Fallback port when the argument is not a number
                }
            }
            new TimeServer().bind(port);
        }
    }

    import io.netty.buffer.ByteBuf;
    import io.netty.buffer.Unpooled;
    import io.netty.channel.ChannelHandlerAdapter;
    import io.netty.channel.ChannelHandlerContext;
    import java.util.Date;

    // Overriding channelRead on ChannelHandlerAdapter matches the Netty 5.0 alpha API;
    // on Netty 4.x, extend ChannelInboundHandlerAdapter instead.
    public class TimeServerHanler extends ChannelHandlerAdapter {

        private int counter = 0;

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            ByteBuf buf = (ByteBuf) msg;
            // readableBytes() returns the number of bytes available to read from the buffer
            byte[] req = new byte[buf.readableBytes()];
            // Copy the bytes in the buffer into the new byte array
            buf.readBytes(req);
            // Build the request string, dropping the trailing line separator
            String body = new String(req, "UTF-8")
                    .substring(0, req.length - System.getProperty("line.separator").length());
            System.out.println("The time server receive order :" + body + " ; the counter is :" + ++counter);
            // If the request is the time query instruction, reply with the current time
            String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body)
                    ? new Date(System.currentTimeMillis()).toString()
                    : "BAD ORDER";
            ByteBuf resp = Unpooled.copiedBuffer(currentTime.getBytes());
            ctx.writeAndFlush(resp);
        }

        @Override
        public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
            // Write the messages in the send queue to the SocketChannel and send them to the peer
            ctx.flush();
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
            // Release resources when an exception occurs
            ctx.close();
        }
    }

Each time the server reads a message, it increments a counter and then sends a reply to the client. By design, the total number of messages the server receives should equal the number the client sends, and each request, once the trailing line separator is removed, should be "QUERY TIME ORDER".

Client:

    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioSocketChannel;

    public class TimeClient {

        public void connect(int port, String host) throws Exception {
            // Configure the client's NIO thread group
            EventLoopGroup group = new NioEventLoopGroup();
            try {
                Bootstrap b = new Bootstrap();
                b.group(group).channel(NioSocketChannel.class)
                        .option(ChannelOption.TCP_NODELAY, true)
                        .handler(new ChannelInitializer<SocketChannel>() {
                            @Override
                            protected void initChannel(SocketChannel socketChannel) throws Exception {
                                socketChannel.pipeline().addLast(new TimeClientHandler());
                            }
                        });
                // Start the connection and wait for it to complete
                ChannelFuture f = b.connect(host, port).sync();
                // Wait until the client connection is closed
                f.channel().closeFuture().sync();
            } finally {
                // Exit gracefully and release the NIO thread group
                group.shutdownGracefully();
            }
        }

        public static void main(String[] args) throws Exception {
            int port = 8080;
            if (args != null && args.length > 0) {
                try {
                    port = Integer.valueOf(args[0]);
                } catch (NumberFormatException e) {
                    port = 6666; // Fallback port when the argument is not a number
                }
            }
            new TimeClient().connect(port, "127.0.0.1");
        }
    }

    import io.netty.buffer.ByteBuf;
    import io.netty.buffer.Unpooled;
    import io.netty.channel.ChannelHandlerAdapter;
    import io.netty.channel.ChannelHandlerContext;
    import java.util.logging.Logger;

    // Overriding channelRead on ChannelHandlerAdapter matches the Netty 5.0 alpha API;
    // on Netty 4.x, extend ChannelInboundHandlerAdapter instead.
    public class TimeClientHandler extends ChannelHandlerAdapter {

        private static final Logger logger = Logger.getLogger(TimeClientHandler.class.getName());

        private int counter;
        private byte[] req;

        public TimeClientHandler() {
            req = ("QUERY TIME ORDER" + System.getProperty("line.separator")).getBytes();
        }

        // Called after the TCP connection between client and server is established
        @Override
        public void channelActive(ChannelHandlerContext ctx) throws Exception {
            ByteBuf message = null;
            for (int i = 0; i < 100; i++) {
                message = Unpooled.buffer(req.length);
                message.writeBytes(req);
                ctx.writeAndFlush(message);
            }
        }

        // Called when the server returns a reply message
        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            ByteBuf buf = (ByteBuf) msg;
            byte[] resp = new byte[buf.readableBytes()];
            buf.readBytes(resp);
            String body = new String(resp, "UTF-8");
            System.out.println("Now is :" + body + " ; the counter is :" + ++counter);
        }

        // Called when an exception occurs
        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
            // Log the cause and release resources
            logger.warning("Unexpected exception from downstream :" + cause.getMessage());
            ctx.close();
        }
    }

After the connection between the client and the server is established, the client sends 100 messages in a loop and flushes after each one, so that every message is written to the Channel.

Each time the client receives a reply from the server, it prints the reply together with the counter. By design, the client should print the server's system time 100 times.

Run result:

Server output:

    The time server receive order :QUERY TIME ORDER
    ... (55 lines omitted)
    QUERY TIME ORDER
    QUE ; the counter is :1
    The time server receive order :Y TIME ORDER
    ... (42 lines omitted)
    QUERY TIME ORDER
    QUERY TIME ORDER ; the counter is :2

The server's output shows that it received only two messages. The first contains 57 "QUERY TIME ORDER" instructions and the second contains 43, for a total of exactly 100. We expected to receive 100 messages, each containing one "QUERY TIME ORDER" instruction. This indicates that TCP packet sticking has occurred.

Client output:

Now is :BAD ORDER BAD ORDER ; the counter is :1

By design, the client should receive 100 messages carrying the current system time, but it actually received only one. This is not hard to understand: the server received only two request messages, so it sent only two replies, and because neither request matched the query instruction, both replies were "BAD ORDER". The client, however, received just one message containing two "BAD ORDER" replies, which shows that the server's replies were also stuck together.

Because the above routine does not consider TCP packet sticking / unpacking, our program cannot work normally when TCP packet sticking occurs.
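The failure can be reproduced without Netty. The sketch below mirrors the handler's logic (one read is treated as exactly one request) in plain Java. It is an illustration under assumptions: the line separator is taken to be "\n", the string "TIME" is a placeholder for the real timestamp, and the split point between the two reads is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class NaiveHandlerDemo {

    static final String SEPARATOR = "\n"; // assumed line separator

    // Mirrors the handler logic: treat each read as exactly one request.
    static List<String> handleReads(List<byte[]> reads) {
        List<String> replies = new ArrayList<>();
        for (byte[] read : reads) {
            String text = new String(read, StandardCharsets.UTF_8);
            String body = text.substring(0, text.length() - SEPARATOR.length());
            // "TIME" is a placeholder for the server's real timestamp
            replies.add("QUERY TIME ORDER".equalsIgnoreCase(body) ? "TIME" : "BAD ORDER");
        }
        return replies;
    }

    // The bytes of `count` requests written back to back.
    static byte[] requests(int count) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < count; i++) {
            sb.append("QUERY TIME ORDER").append(SEPARATOR);
        }
        return sb.toString().getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] wire = requests(100);
        int split = 57 * 17 + 4; // hypothetical point where the first read ends
        List<byte[]> reads = Arrays.asList(
                Arrays.copyOfRange(wire, 0, split),
                Arrays.copyOfRange(wire, split, wire.length));
        System.out.println(handleReads(reads)); // [BAD ORDER, BAD ORDER]
    }
}
```

A hundred requests arriving in two reads produce two replies, neither of which matches the query instruction, which is the same symptom as in the run above.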

Using LineBasedFrameDecoder to solve the problem of TCP packet sticking

To solve the half-packet read/write problem caused by TCP packet sticking / unpacking, Netty provides a variety of codecs out of the box for handling half packets. Once you are familiar with these classes, TCP packet sticking becomes easy to handle, and you hardly need to think about it; this is an advantage over other NIO frameworks and the JDK's native NIO APIs.

TimeServer supporting TCP packet sticking:

    import io.netty.bootstrap.ServerBootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioServerSocketChannel;
    import io.netty.handler.codec.LineBasedFrameDecoder;
    import io.netty.handler.codec.string.StringDecoder;

    public class TimeServer {

        public void bind(int port) throws Exception {
            // Configure the server's NIO thread groups
            EventLoopGroup bossGroup = new NioEventLoopGroup();
            EventLoopGroup workerGroup = new NioEventLoopGroup();
            try {
                ServerBootstrap bootstrap = new ServerBootstrap();
                bootstrap.group(bossGroup, workerGroup)
                        .channel(NioServerSocketChannel.class)
                        .option(ChannelOption.SO_BACKLOG, 1024)
                        .childHandler(new ChildChannelHandler());
                // Bind the port and wait synchronously for the bind to succeed
                ChannelFuture future = bootstrap.bind(port).sync();
                // Wait until the server's listening port is closed
                future.channel().closeFuture().sync();
            } finally {
                // Exit gracefully and release the thread pool resources
                bossGroup.shutdownGracefully();
                workerGroup.shutdownGracefully();
            }
        }

        private class ChildChannelHandler extends ChannelInitializer<SocketChannel> {
            @Override
            protected void initChannel(SocketChannel ch) throws Exception {
                // Two new decoders, placed before the business handler
                ch.pipeline().addLast(new LineBasedFrameDecoder(1024));
                ch.pipeline().addLast(new StringDecoder());
                ch.pipeline().addLast(new TimeServerHanler());
            }
        }

        public static void main(String[] args) throws Exception {
            int port = 8080;
            if (args != null && args.length > 0) {
                try {
                    port = Integer.valueOf(args[0]);
                } catch (NumberFormatException e) {
                    port = 6666; // Fallback port when the argument is not a number
                }
            }
            new TimeServer().bind(port);
        }
    }

    import io.netty.buffer.ByteBuf;
    import io.netty.buffer.Unpooled;
    import io.netty.channel.ChannelHandlerAdapter;
    import io.netty.channel.ChannelHandlerContext;
    import java.util.Date;

    public class TimeServerHanler extends ChannelHandlerAdapter {

        private int counter = 0;

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            // The decoders in front have already produced one complete line as a String
            String body = (String) msg;
            System.out.println("The time server receive order :" + body + " ; the counter is : " + ++counter);
            String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body)
                    ? new Date(System.currentTimeMillis()).toString()
                    : "BAD ORDER";
            // End the reply with a line separator so the client can split it by line
            currentTime = currentTime + System.getProperty("line.separator");
            ByteBuf resp = Unpooled.copiedBuffer(currentTime.getBytes());
            ctx.writeAndFlush(resp);
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
            // Release resources when an exception occurs
            ctx.close();
        }
    }

TimeClient supporting TCP packet sticking:

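    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioSocketChannel;
    import io.netty.handler.codec.LineBasedFrameDecoder;
    import io.netty.handler.codec.string.StringDecoder;

    public class TimeClient {

        public void connect(int port, String host) throws Exception {
            // Configure the client's NIO thread group
            EventLoopGroup group = new NioEventLoopGroup();
            try {
                Bootstrap b = new Bootstrap();
                b.group(group).channel(NioSocketChannel.class)
                        .option(ChannelOption.TCP_NODELAY, true)
                        .handler(new ChannelInitializer<SocketChannel>() {
                            @Override
                            protected void initChannel(SocketChannel socketChannel) throws Exception {
                                // Split by line, then decode to String, before the business handler
                                socketChannel.pipeline().addLast(new LineBasedFrameDecoder(1024));
                                socketChannel.pipeline().addLast(new StringDecoder());
                                socketChannel.pipeline().addLast(new TimeClientHandler());
                            }
                        });
                // Start the connection and wait for it to complete
                ChannelFuture f = b.connect(host, port).sync();
                // Wait until the client connection is closed
                f.channel().closeFuture().sync();
            } finally {
                // Exit gracefully and release the NIO thread group
                group.shutdownGracefully();
            }
        }

        public static void main(String[] args) throws Exception {
            int port = 8080;
            if (args != null && args.length > 0) {
                try {
                    port = Integer.valueOf(args[0]);
                } catch (NumberFormatException e) {
                    port = 6666; // Fallback port when the argument is not a number
                }
            }
            new TimeClient().connect(port, "127.0.0.1");
        }
    }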

    import io.netty.buffer.ByteBuf;
    import io.netty.buffer.Unpooled;
    import io.netty.channel.ChannelHandlerAdapter;
    import io.netty.channel.ChannelHandlerContext;
    import java.util.logging.Logger;

    public class TimeClientHandler extends ChannelHandlerAdapter {

        private static final Logger logger = Logger.getLogger(TimeClientHandler.class.getName());

        private int counter;
        private byte[] req;

        public TimeClientHandler() {
            req = ("QUERY TIME ORDER" + System.getProperty("line.separator")).getBytes();
        }

        // Called after the TCP connection between client and server is established
        @Override
        public void channelActive(ChannelHandlerContext ctx) throws Exception {
            ByteBuf message = null;
            for (int i = 0; i < 100; i++) {
                message = Unpooled.buffer(req.length);
                message.writeBytes(req);
                ctx.writeAndFlush(message);
            }
        }

        // Called when the server returns a reply message
        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            // The decoders in front have already produced one complete line as a String
            String body = (String) msg;
            System.out.println("Now is : " + body + " ; the counter is : " + ++counter);
        }

        // Called when an exception occurs
        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
            // Log the cause and release resources
            logger.warning("Unexpected exception from downstream :" + cause.getMessage());
            ctx.close();
        }
    }

Run the time server program that supports TCP packet sticking:

Server output:

    The time server receive order :QUERY TIME ORDER ; the counter is : 1
    ... (98 lines omitted)
    The time server receive order :QUERY TIME ORDER ; the counter is : 100

Client output:

    Now is : Sun Jun 14 14:20:53 CST 2020 ; the counter is : 1
    ... (98 lines omitted)
    Now is : Sun Jun 14 14:20:53 CST 2020 ; the counter is : 100

The output fully meets expectations, which shows that LineBasedFrameDecoder and StringDecoder successfully solve the half-packet read problem caused by TCP packet sticking. For the user, it is enough to add the handlers that support half-packet decoding to the ChannelPipeline; no additional code is needed, which makes them very simple to use.

Principle:

LineBasedFrameDecoder works by scanning the readable bytes in the ByteBuf in order, looking for "\n" or "\r\n". If it finds one, it takes that position as the end, and the bytes from the reader index to the end position form one line. It is a decoder that uses the line break as the end marker, and it supports two decoding modes: keeping or stripping the end marker. It also supports a configurable maximum line length. If no line break is found after the maximum length has been read, it throws an exception and discards the invalid bytes read so far.
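The idea can be sketched in plain Java. This is not Netty's implementation, only a simplified illustration of the technique: the class `LineFramer` and its `feed` method are hypothetical, and on an over-long line it simply clears its buffer and throws, which is a cruder recovery than a production decoder would use.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class LineFramer {

    private final int maxLength;
    private final boolean stripDelimiter;
    // Bytes of the current, still incomplete line
    private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

    LineFramer(int maxLength, boolean stripDelimiter) {
        this.maxLength = maxLength;
        this.stripDelimiter = stripDelimiter;
    }

    // Feed the bytes of one read; returns every line completed by this read.
    List<String> feed(byte[] chunk) {
        List<String> lines = new ArrayList<>();
        for (byte b : chunk) {
            pending.write(b);
            if (b == '\n') {
                byte[] line = pending.toByteArray();
                pending.reset();
                int end = line.length;
                if (stripDelimiter) {
                    end--; // drop '\n'
                    if (end > 0 && line[end - 1] == '\r') {
                        end--; // drop the '\r' of "\r\n"
                    }
                }
                lines.add(new String(line, 0, end, StandardCharsets.UTF_8));
            } else if (pending.size() > maxLength) {
                // Simplification: discard what was buffered and report the error
                pending.reset();
                throw new IllegalStateException("line longer than " + maxLength + " bytes");
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        LineFramer framer = new LineFramer(1024, true);
        // The first read ends in the middle of the second request
        System.out.println(framer.feed("QUERY TIME ORDER\nQUE".getBytes(StandardCharsets.UTF_8)));
        // prints [QUERY TIME ORDER]
        System.out.println(framer.feed("RY TIME ORDER\r\n".getBytes(StandardCharsets.UTF_8)));
        // prints [QUERY TIME ORDER]
    }
}
```

Because the incomplete tail of one read is kept until the next read completes it, each emitted line corresponds to exactly one request regardless of how the bytes were chunked.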

StringDecoder is very simple: it converts the received object into a string and then passes it on to the next handler. The combination of LineBasedFrameDecoder + StringDecoder is a line-based text decoder, designed to handle TCP packet sticking and unpacking.

What if the messages do not end with a line break? Or what if there is no line break at all and the message boundary has to be determined from a length field in the message header? Do you need to write your own half-packet decoder? The answer is no. Netty provides a variety of decoders that handle TCP packet sticking / unpacking to meet different needs.