Introduction: We built the AlexNet network with the Paddle framework and tested how well it classifies Cifar10, running on the premium ("Supreme") version of AI Studio. With basic training, the accuracy on the test set did not exceed 60%, which is still some distance from the 80% reported in some articles.

Keywords: Cifar10, AlexNet

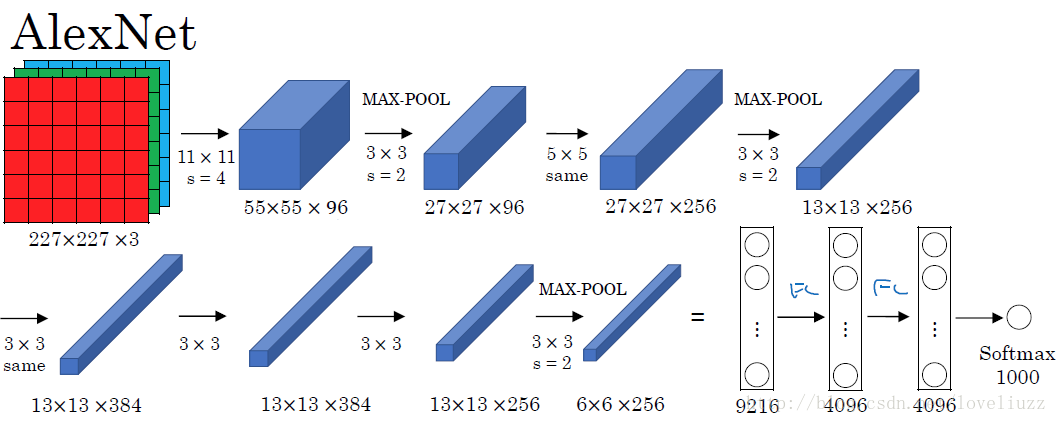

§01 AlexNet

1.1 Background

The requirements for the fourth assignment of the NN course are given in "Requirements for the fourth operation of artificial neural network in 2021". For the Cifar10 dataset, "The fourth assignment of artificial neural network in 2021 - question 3 Cifar10" tried training BP and LeNet networks; the accuracy on the test set could not exceed 30%, and it saturated quickly.

Simple modifications to the network structure, adjusting the learning rate, and adding Dropout layers had no noticeable effect on the results.

Here, the AlexNet network introduced in the blog "Implementation and comparison of deep learning recognition CIFAR10: pytorch training LeNet, AlexNet and VGG19 (II)" is rebuilt and tested on the Paddle platform.

1.2 Original code

Based on the structure of AlexNet and the image size given in The CIFAR-10 dataset (32 × 32 × 3), the original post fine-tunes the AlexNet network structure:

Network structure of AlexNet:

For CIFAR10 the images are 32 × 32, much smaller than AlexNet's 227 × 227 input, so the network structure and parameters need to be fine-tuned:

- Convolution layer 1: kernel size 7 × 7, stride 2, padding 2

- The last max-pooling layer is removed
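Why both changes are needed can be checked with the usual output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The plain-Python sketch below traces the feature-map size through the fine-tuned stack and through an "unmodified" stack; the latter assumes an 11 × 11, stride-4, padding-2 first layer as in common AlexNet implementations, which is an assumption and not taken from the original post.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, layers):
    """Return the feature-map size after each (kernel, stride, padding) layer."""
    sizes = []
    for kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


POOL = (3, 2, 0)   # 3x3 max pooling, stride 2, no padding
CONV3 = (3, 1, 1)  # 3x3 convolution, stride 1, padding 1 (keeps the size)

# Fine-tuned stack: conv1 7x7/s2/p2, pool, conv2 5x5/s1/p2, pool, conv3-conv5, last pool removed
tuned = [(7, 2, 2), POOL, (5, 1, 2), POOL, CONV3, CONV3, CONV3]

# Assumed unmodified stack: 11x11/s4/p2 first layer, final pooling layer kept
unmodified = [(11, 4, 2), POOL, (5, 1, 2), POOL, CONV3, CONV3, CONV3, POOL]

print(trace(32, tuned))       # [15, 7, 7, 3, 3, 3, 3]
print(trace(32, unmodified))  # [7, 3, 3, 1, 1, 1, 1, 0]
```

A size of 0 means the last layer has no valid output, which frameworks typically reject. The tuned stack instead ends at 3 × 3, which is where the 256 × 3 × 3 input of the first fully connected layer comes from.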

1.2.1 Network code

The network definition code is as follows:

```python
import torch.nn as nn


class AlexNet(nn.Module):
    def __init__(self):
        super(AlexNet, self).__init__()

        self.cnn = nn.Sequential(
            # Conv layer 1: 3 input channels, 96 kernels, kernel size 7x7, stride 2, padding 2
            # Output size: floor((32 - 7 + 2*2) / 2) + 1 = 15, i.e. 15x15
            # After 3x3 max pooling with stride 2: floor((15 - 3) / 2) + 1 = 7, i.e. 7x7
            nn.Conv2d(3, 96, 7, 2, 2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2, 0),

            # Conv layer 2: 96 input channels, 256 kernels, kernel size 5x5, stride 1, padding 2
            # Output size: (7 - 5 + 2*2) / 1 + 1 = 7, i.e. 7x7
            # After 3x3 max pooling with stride 2: floor((7 - 3) / 2) + 1 = 3, i.e. 3x3
            nn.Conv2d(96, 256, 5, 1, 2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2, 0),

            # Conv layer 3: 256 input channels, 384 kernels, kernel size 3x3, stride 1, padding 1
            # Output size: (3 - 3 + 2*1) / 1 + 1 = 3, i.e. 3x3
            nn.Conv2d(256, 384, 3, 1, 1),
            nn.ReLU(inplace=True),

            # Conv layer 4: 384 input channels, 384 kernels, kernel size 3x3, stride 1, padding 1
            # Output size stays 3x3
            nn.Conv2d(384, 384, 3, 1, 1),
            nn.ReLU(inplace=True),

            # Conv layer 5: 384 input channels, 256 kernels, kernel size 3x3, stride 1, padding 1
            # Output size stays 3x3
            nn.Conv2d(384, 256, 3, 1, 1),
            nn.ReLU(inplace=True)
        )

        self.fc = nn.Sequential(
            # 256 feature maps, each 3x3
            nn.Linear(256*3*3, 1024),
            nn.ReLU(),
            nn.Linear(1024, 512),
            nn.ReLU(),
            nn.Linear(512, 10)
        )

    def forward(self, x):
        x = self.cnn(x)

        # x.size()[0] is the batch size; flatten everything else
        x = x.view(x.size()[0], -1)
        x = self.fc(x)

        return x
```

1.3 Paddle model implementation

Use the neural network layers in Paddle to build AlexNet.

1.3.1 Build the AlexNet network

(1) Network code

```python
import paddle


class alexnet(paddle.nn.Layer):
    def __init__(self):
        super(alexnet, self).__init__()
        # Five convolution layers, adapted to 32 x 32 CIFAR10 images
        self.conv1 = paddle.nn.Conv2D(in_channels=3, out_channels=96, kernel_size=7, stride=2, padding=2)
        self.conv2 = paddle.nn.Conv2D(in_channels=96, out_channels=256, kernel_size=5, stride=1, padding=2)
        self.conv3 = paddle.nn.Conv2D(in_channels=256, out_channels=384, kernel_size=3, stride=1, padding=1)
        self.conv4 = paddle.nn.Conv2D(in_channels=384, out_channels=384, kernel_size=3, stride=1, padding=1)
        self.conv5 = paddle.nn.Conv2D(in_channels=384, out_channels=256, kernel_size=3, stride=1, padding=1)
        # Two 3 x 3 max-pooling layers with stride 2 (AlexNet's last pooling layer is removed)
        self.mp1 = paddle.nn.MaxPool2D(kernel_size=3, stride=2)
        self.mp2 = paddle.nn.MaxPool2D(kernel_size=3, stride=2)
        # Fully connected layers: 256 x 3 x 3 features -> 1024 -> 512 -> 10 classes
        self.L1 = paddle.nn.Linear(in_features=256*3*3, out_features=1024)
        self.L2 = paddle.nn.Linear(in_features=1024, out_features=512)
        self.L3 = paddle.nn.Linear(in_features=512, out_features=10)

    def forward(self, x):
        x = self.conv1(x)                    # [N, 96, 15, 15]
        x = paddle.nn.functional.relu(x)
        x = self.mp1(x)                      # [N, 96, 7, 7]
        x = self.conv2(x)                    # [N, 256, 7, 7]
        x = paddle.nn.functional.relu(x)
        x = self.mp2(x)                      # [N, 256, 3, 3]
        x = self.conv3(x)                    # [N, 384, 3, 3]
        x = paddle.nn.functional.relu(x)
        x = self.conv4(x)                    # [N, 384, 3, 3]
        x = paddle.nn.functional.relu(x)
        x = self.conv5(x)                    # [N, 256, 3, 3]
        x = paddle.nn.functional.relu(x)
        x = paddle.flatten(x, start_axis=1, stop_axis=-1)  # [N, 2304]
        x = self.L1(x)
        x = paddle.nn.functional.relu(x)
        x = self.L2(x)
        x = paddle.nn.functional.relu(x)
        x = self.L3(x)                       # [N, 10] raw class scores (logits)
        return x
```

(2) Network structure

Use paddle.summary to check whether the network structure is correct.

```python
model = alexnet()
paddle.summary(model, (100, 3, 32, 32))
```

```
---------------------------------------------------------------------------
 Layer (type)    Input Shape            Output Shape          Param #
===========================================================================
 Conv2D-16       [[100, 3, 32, 32]]     [100, 96, 15, 15]      14,208
 MaxPool2D-7     [[100, 96, 15, 15]]    [100, 96, 7, 7]             0
 Conv2D-17       [[100, 96, 7, 7]]      [100, 256, 7, 7]      614,656
 MaxPool2D-8     [[100, 256, 7, 7]]     [100, 256, 3, 3]            0
 Conv2D-18       [[100, 256, 3, 3]]     [100, 384, 3, 3]      885,120
 Conv2D-19       [[100, 384, 3, 3]]     [100, 384, 3, 3]    1,327,488
 Conv2D-20       [[100, 384, 3, 3]]     [100, 256, 3, 3]      884,992
 Linear-10       [[100, 2304]]          [100, 1024]         2,360,320
 Linear-11       [[100, 1024]]          [100, 512]            524,800
 Linear-12       [[100, 512]]           [100, 10]               5,130
===========================================================================
Total params: 6,616,714
Trainable params: 6,616,714
Non-trainable params: 0
---------------------------------------------------------------------------
Input size (MB): 1.17
Forward/backward pass size (MB): 39.61
Params size (MB): 25.24
Estimated Total Size (MB): 66.02
---------------------------------------------------------------------------

{'total_params': 6616714, 'trainable_params': 6616714}
```
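The Param # column can be reproduced by hand: a convolution layer has c_in × c_out × k × k weights plus c_out biases, and a linear layer has n_in × n_out weights plus n_out biases. The plain-Python check below assumes every layer has a bias (the Paddle default) and, for the size estimate, 4-byte float32 parameters.

```python
def conv_params(c_in, c_out, k):
    # One k x k x c_in kernel per output channel, plus one bias per output channel
    return c_in * c_out * k * k + c_out


def linear_params(n_in, n_out):
    # Weight matrix (n_in x n_out) plus one bias per output
    return n_in * n_out + n_out


params = {
    "conv1": conv_params(3, 96, 7),
    "conv2": conv_params(96, 256, 5),
    "conv3": conv_params(256, 384, 3),
    "conv4": conv_params(384, 384, 3),
    "conv5": conv_params(384, 256, 3),
    "L1": linear_params(256 * 3 * 3, 1024),
    "L2": linear_params(1024, 512),
    "L3": linear_params(512, 10),
}

total = sum(params.values())
for name, count in params.items():
    print(f"{name:>5}: {count:,}")
print(f"total: {total:,}")                           # total: 6,616,714
print(f"params size (MB): {total * 4 / 2**20:.2f}")  # params size (MB): 25.24
```

The first linear layer alone holds about a third of all parameters, so a mistake in its in_features (the flattened size) changes the model considerably.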

During network design, structural errors often occur at the junction between the convolutional layers and the fully connected layers: after flattening, the data dimensions do not match the input size of the first linear layer. When defining the network, first leave out the fully connected layers after the flatten step, use the paddle.summary output to confirm that the last convolutional layer produces 256 × 3 × 3, and only then attach the fully connected layers. If an error occurs, check the output shape of each layer in turn.
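The junction check above comes down to comparing the flattened size of the last convolutional output with the first Linear layer's in_features. A minimal plain-Python sketch; check_fc_input is a hypothetical helper for illustration, not a Paddle API.

```python
from math import prod


def check_fc_input(conv_out_shape, fc_in_features):
    """Hypothetical helper: compare the flattened size of a per-sample (C, H, W)
    conv output with the in_features of the first fully connected layer."""
    flat = prod(conv_out_shape)  # flatten(start_axis=1) merges C, H and W
    if flat != fc_in_features:
        raise ValueError(
            f"flatten gives {flat} features per sample, "
            f"but the first Linear layer expects {fc_in_features}"
        )
    return flat


print(check_fc_input((256, 3, 3), 256 * 3 * 3))  # 2304

try:
    # e.g. if the last max pool were kept, the conv output would be 256 x 1 x 1
    check_fc_input((256, 1, 1), 256 * 3 * 3)
except ValueError as err:
    print(err)  # flatten gives 256 features per sample, but the first Linear layer expects 2304
```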

1.4 Training AlexNet on Cifar10

1.4.1 Load data

```python
import sys, os, math, time
import matplotlib.pyplot as plt
from numpy import *
import paddle
from paddle.vision.transforms import Normalize
from paddle.vision.datasets import Cifar10

normalize = Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5], data_format='HWC')

cifar10_train = Cifar10(mode='train', transform=normalize)
cifar10_test = Cifar10(mode='test', transform=normalize)

# .data holds the raw (flat_image, label) pairs; each flat image has 3072 values in CHW order.
# Reading .data directly bypasses Cifar10.__getitem__, so the Normalize transform is not applied here.
train_dataset = [cifar10_train.data[id][0].reshape(3, 32, 32) for id in range(len(cifar10_train.data))]
train_labels = [cifar10_train.data[id][1] for id in range(len(cifar10_train.data))]


class Dataset(paddle.io.Dataset):
    def __init__(self, num_samples):
        super(Dataset, self).__init__()
        self.num_samples = num_samples

    def __getitem__(self, index):
        data = train_dataset[index]
        label = train_labels[index]
        return paddle.to_tensor(data, dtype='float32'), paddle.to_tensor(label, dtype='int64')

    def __len__(self):
        return self.num_samples


_dataset = Dataset(len(cifar10_train.data))
train_loader = paddle.io.DataLoader(_dataset, batch_size=100, shuffle=True)
```
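The reshape(3, 32, 32) call works without any transpose because, according to The CIFAR-10 dataset page, each raw row stores 3072 values channel by channel: the first 1024 are the red plane, then green, then blue, each in row-major order. That is the channel-first (C, H, W) layout Paddle's Conv2D expects by default (NCHW). A plain-Python sketch of the index mapping, using a synthetic row rather than real data:

```python
def chw_index(c, y, x, height=32, width=32):
    """Position of pixel (channel c, row y, column x) in a flat CIFAR-10 row."""
    return c * height * width + y * width + x


def reshape_chw(row, channels=3, height=32, width=32):
    """Pure-Python equivalent of numpy's row.reshape(channels, height, width)."""
    image = []
    for c in range(channels):
        plane = []
        for y in range(height):
            start = chw_index(c, y, 0, height, width)
            plane.append(row[start:start + width])
        image.append(plane)
    return image


row = list(range(3072))  # synthetic row where each value equals its flat index
image = reshape_chw(row)

print(len(image), len(image[0]), len(image[0][0]))  # 3 32 32
print(image[1][0][0])    # 1024: first green value
print(image[2][31][31])  # 3071: last blue value
```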

1.4.2 Build the network

The alexnet class is identical to the one defined in 1.3.1 (1) and is not repeated here; the training script only needs to instantiate it:

```python
model = alexnet()
```

1.4.3 Train the network

```python
test_dataset = [cifar10_test.data[id][0].reshape(3, 32, 32) for id in range(len(cifar10_test.data))]
test_label = [cifar10_test.data[id][1] for id in range(len(cifar10_test.data))]
test_input = paddle.to_tensor(test_dataset, dtype='float32')
test_l = paddle.to_tensor(array(test_label)[:, newaxis])  # labels as a [10000, 1] column

optimizer = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())


def train(model):
    model.train()
    epochs = 2
    accdim = []      # training accuracy per step
    lossdim = []     # training loss per step
    testaccdim = []  # test accuracy per step

    for epoch in range(epochs):
        for batch, data in enumerate(train_loader()):
            out = model(data[0])
            loss = paddle.nn.functional.cross_entropy(out, data[1])
            acc = paddle.metric.accuracy(out, data[1])
            loss.backward()
            optimizer.step()
            optimizer.clear_grad()

            accdim.append(acc.numpy())
            lossdim.append(loss.numpy())

            # Evaluate the whole test set after every step; no gradients are needed here
            with paddle.no_grad():
                predict = model(test_input)
            testacc = paddle.metric.accuracy(predict, test_l)
            testaccdim.append(testacc.numpy())

            if batch % 10 == 0 and batch > 0:
                print('Epoch:{}, Batch: {}, Loss:{}, Train acc:{}, Test acc:{}'.format(
                    epoch, batch, loss.numpy(), acc.numpy(), testacc.numpy()))

    plt.figure(figsize=(10, 6))
    plt.plot(accdim, label='Accuracy')
    plt.plot(testaccdim, label='Test')
    plt.xlabel('Step')
    plt.ylabel('Acc')
    plt.grid(True)
    plt.legend(loc='upper left')
    plt.tight_layout()
    plt.show()


train(model)
```
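The network's forward pass ends in a plain Linear layer with no softmax. That works because paddle.nn.functional.cross_entropy applies softmax to its input by default (use_softmax=True), while paddle.metric.accuracy (k=1 by default) counts a sample as correct when the highest score is at the label index. A plain-Python sketch of both quantities for a small hypothetical batch of logits; the numbers are made up for illustration and are not model output.

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(batch_logits, labels):
    # Mean negative log-probability of the true class (like reduction='mean')
    losses = [-math.log(softmax(z)[y]) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


def top1_accuracy(batch_logits, labels):
    # A sample counts as correct when its largest logit is at the label index
    hits = [max(range(len(z)), key=z.__getitem__) == y for z, y in zip(batch_logits, labels)]
    return sum(hits) / len(hits)


# Hypothetical logits for 3 samples over 4 classes
logits = [[2.0, 0.5, 0.1, -1.0],
          [0.2, 0.1, 3.0, 0.0],
          [1.0, 1.5, 0.3, 0.2]]
labels = [0, 2, 0]

print(round(cross_entropy(logits, labels), 4))
print(top1_accuracy(logits, labels))  # 0.6666666666666666 (2 of 3 correct)
```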

1.4.4 Training results

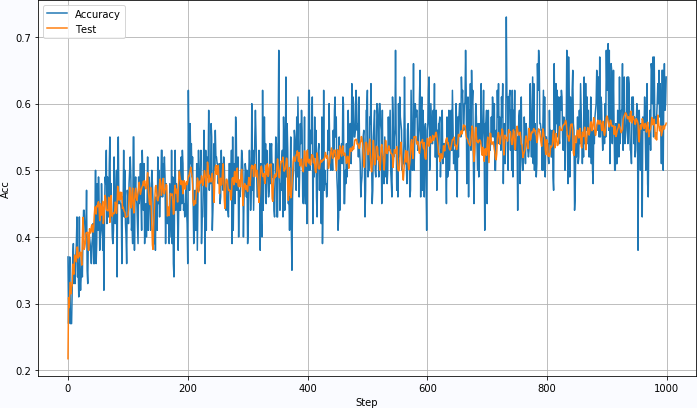

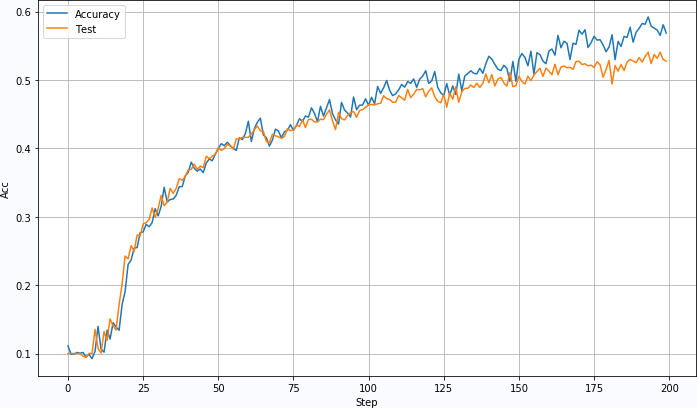

Training parameters: BatchSize: 100, LearningRate: 0.001

If the BatchSize is too small, training becomes slower.
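One plausible contributor in this particular script: the number of optimizer steps per epoch is ceil(50000 / BatchSize) for the 50,000 CIFAR-10 training images, and the training loop in 1.4.3 also runs the full 10,000-image test set after every step. Smaller batches therefore mean more (and smaller, likely less GPU-efficient) steps as well as more full test-set passes. A quick arithmetic sketch; batch sizes other than 100 are illustrative only.

```python
import math

TRAIN_IMAGES = 50_000  # CIFAR-10 training set
TEST_IMAGES = 10_000   # CIFAR-10 test set, evaluated after every step in train()


def per_epoch_cost(batch_size):
    # DataLoader keeps the last partial batch by default, hence ceil
    steps = math.ceil(TRAIN_IMAGES / batch_size)
    # Test images passed through the network per epoch in the script above
    test_images_evaluated = steps * TEST_IMAGES
    return steps, test_images_evaluated


for bs in (25, 100, 400):
    steps, evaluated = per_epoch_cost(bs)
    print(f"BatchSize {bs:>3}: {steps:>4} steps, {evaluated:,} test images evaluated")
```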

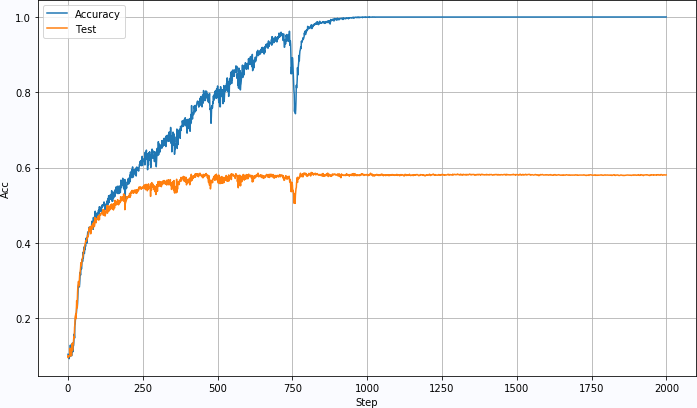

LearningRate: 0.0005

※ Conclusion ※

We built the AlexNet network with the Paddle framework and tested how well it classifies Cifar10 on the premium ("Supreme") version of AI Studio. With basic training, the accuracy on the test set did not exceed 60%, which is still some distance from the 80% reported in some articles.

■ Links to relevant literature:

- Requirements for the fourth operation of artificial neural network in 2021

- The fourth assignment of artificial neural network in 2021 - question 3 Cifar10

- Implementation and comparison of deep learning recognition CIFAR10: pytorch training LeNet, AlexNet and VGG19 (II)

- AlexNet

- The CIFAR-10 dataset

● Relevant chart links: