Java Network Programming: Netty

- Analysis of Netty core source code

- Source code analysis of Netty startup process

- Overview of the startup class of the Echo demo program

- Source code analysis of NioEventLoopGroup

- ServerBootstrap creation and build process

- Source code analysis of port binding

- Netty startup process

- Source code analysis of how Netty accepts requests

- Source code analysis of Pipeline, Handler and HandlerContext creation

- Relationship among the three

- Function and design of ChannelPipeline

- Function and design of ChannelHandler

- ChannelInboundHandler inbound event interface

- ChannelOutboundHandler outbound event interface

- ChannelDuplexHandler handles outbound and inbound events

- Function and design of ChannelHandlerContext

- ChannelPipeline | ChannelHandler | ChannelHandlerContext creation process

- Summary of the Pipeline, Handler and HandlerContext creation process

- Source code analysis of how ChannelPipeline schedules handlers

- Source code analysis of Netty heartbeat service

- IdleStateHandler analysis

- Analysis of the run method for read idle events (that is, the run method of ReaderIdleTimeoutTask)

- Analysis of the run method for write idle events (that is, the run method of WriterIdleTimeoutTask)

- Analysis of the run method for all idle events (that is, the run method of AllIdleTimeoutTask)

- Summary of the heartbeat mechanism of Netty

- Source code analysis of Netty core component NioEventLoop

- NioEventLoop inheritance relationship

- Source code analysis of the execute method of NioEventLoop

- Summary of how the Netty core component NioEventLoop operates

- Source code analysis of adding a thread pool in the handler versus in the Context

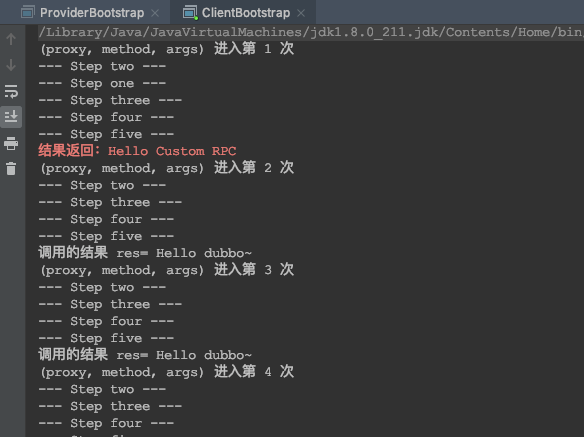

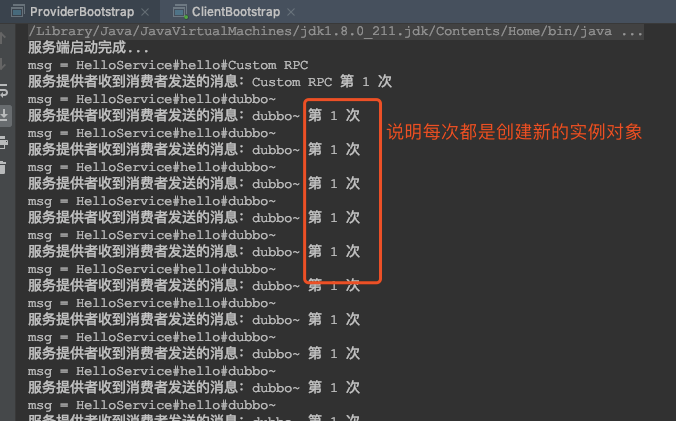

- Implementing a Dubbo-style RPC with Netty

Analysis of Netty core source code

- The Netty source version analyzed here is 4.1.36.

Source code analysis of Netty startup process

- Walk through the Netty server startup process in the source code to better understand Netty's overall design and operating mechanism.

- The analysis starts from Netty's bind call, goes through AbstractBootstrap.doBind, and traces down to the doBind method of NioServerSocketChannel.

- Then debug into the run method of the NioEventLoop class. This is the infinite loop that keeps running on the server side.

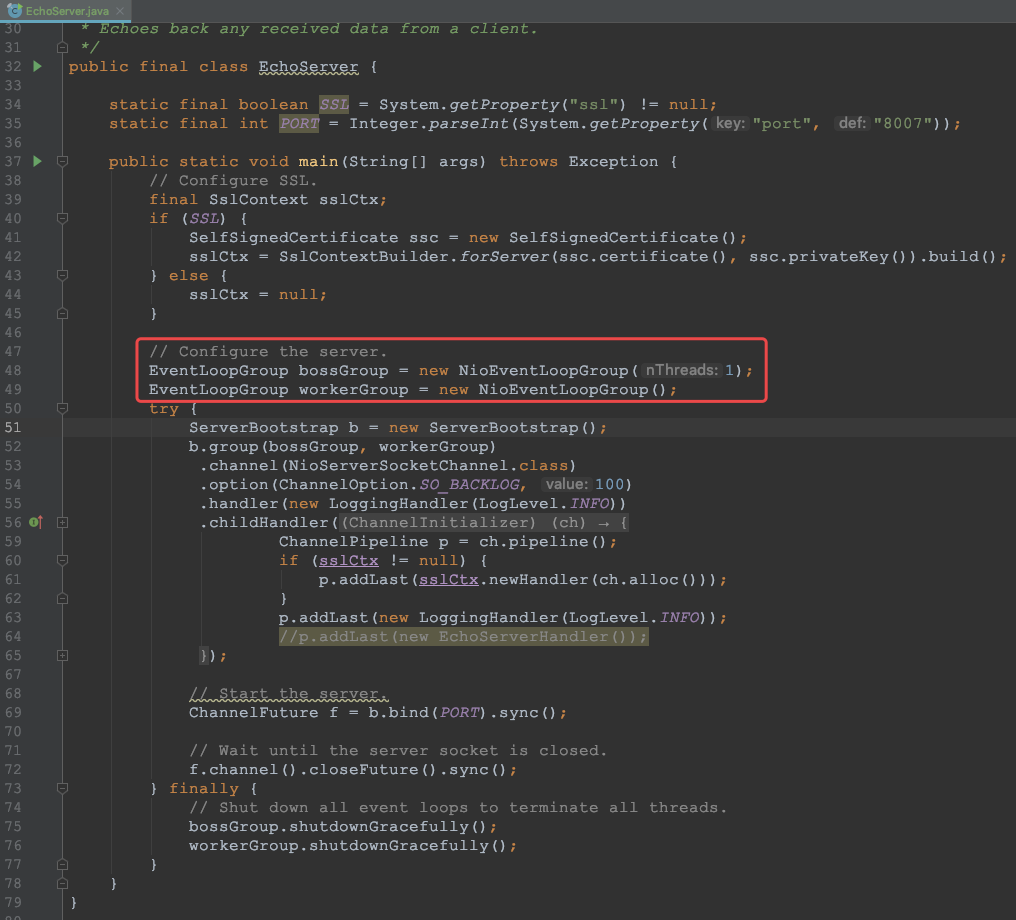

Overview of the startup class of the Echo demo program

- Start with the startup class. The main method first creates the SSL configuration (SslContext).

- Next, focus on the two EventLoopGroup objects it creates: EventLoopGroup bossGroup = new NioEventLoopGroup(1); and EventLoopGroup workerGroup = new NioEventLoopGroup();.

- ① These two objects are the core of Netty, and the whole framework runs on them. bossGroup accepts TCP connection requests and hands them to workerGroup. workerGroup takes over the actual connection and communicates over it, for example by reading, writing, decoding and encoding.

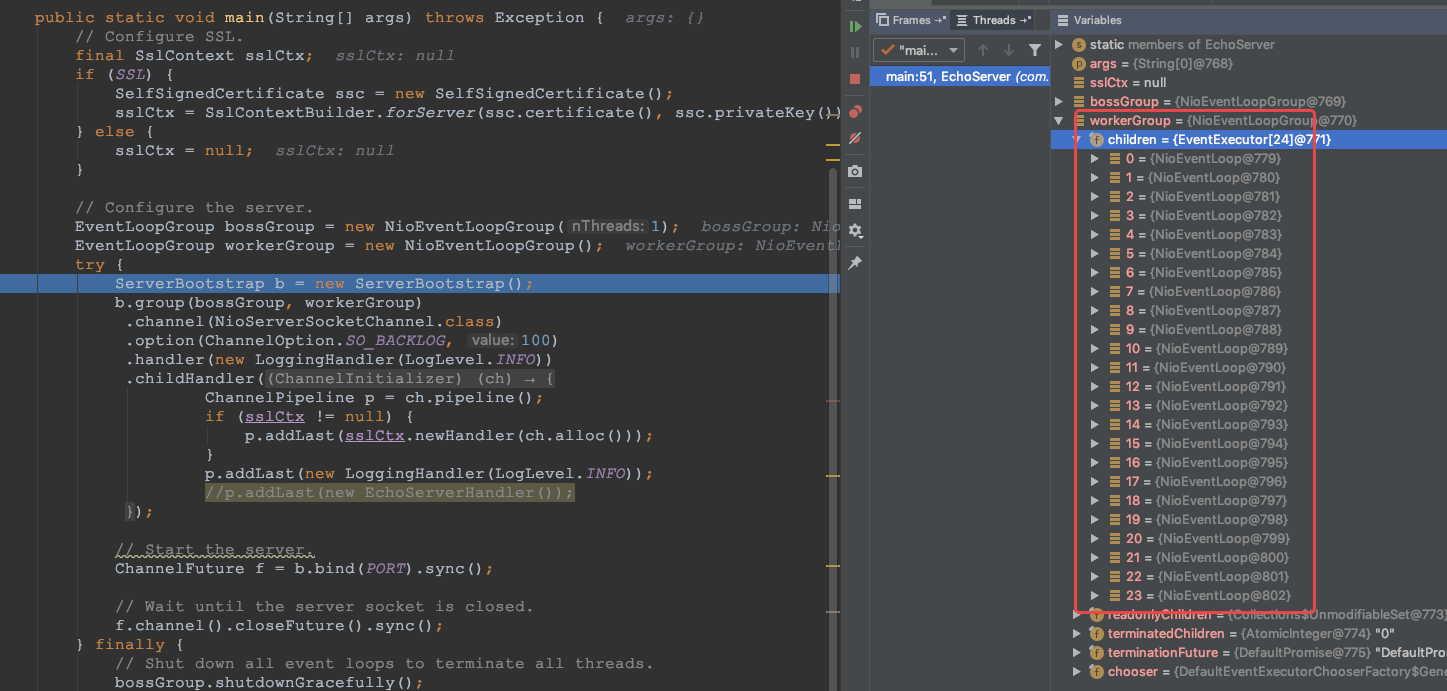

- ② NioEventLoopGroup is an event loop group (a thread group) that contains multiple NioEventLoops. When a channel is registered with the group, the group selects one of its event loops for it (each event loop owns a Selector). Debug:

- ③ In new NioEventLoopGroup(1), the argument 1 gives the bossGroup a single thread, and you can choose any count. Calling new NioEventLoopGroup() without an argument uses the default thread count of CPU cores * 2, which takes full advantage of multiple cores. Debug as follows:

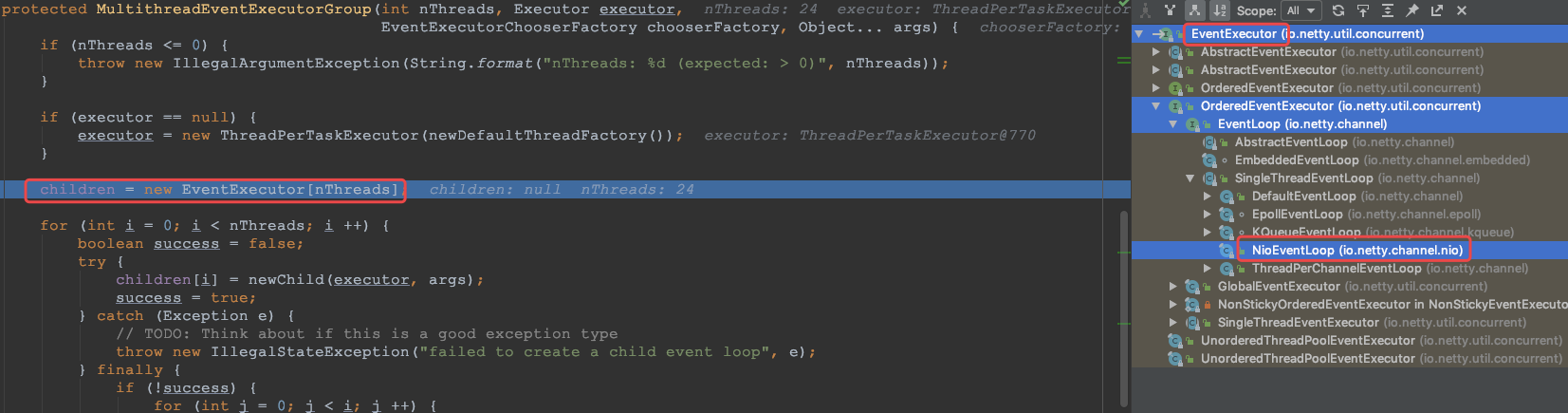

- An EventExecutor array, children = new EventExecutor[nThreads], is created. Each element is a NioEventLoop, which implements both the EventLoop and Executor interfaces.

- ④ In the try block, a ServerBootstrap object is created. This bootstrap class starts the server and drives the initialization of the whole program. It is associated with ServerChannel, which extends Channel and provides methods such as remoteAddress.

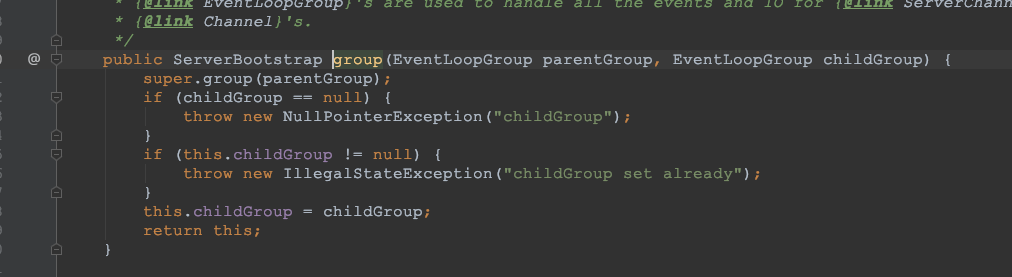

- Next, variable b calls the group method, which stores the two groups in its own fields for later use during bootstrapping. Debug:

- ⑤ Then the channel type is set with .channel(NioServerSocketChannel.class). From this Class object, the bootstrap creates a ChannelFactory that instantiates the channel through reflection. Some TCP parameters are then added with option(...). [Note: the channel itself is created in the bind method. While debugging, you can find it there at channel = channelFactory.newChannel();]

- ⑥ A logging handler (LoggingHandler) dedicated to the server channel is added with handler(...).

- ⑦ A handler for the SocketChannel (not the ServerSocketChannel) is added with childHandler(...).

- ⑧ Then the port is bound, and sync() blocks until the bind succeeds.

- ⑨ Finally, the main thread blocks and waits for the server channel to close.

- ⑩ When the server stops, the code in the finally block gracefully shuts down all resources.

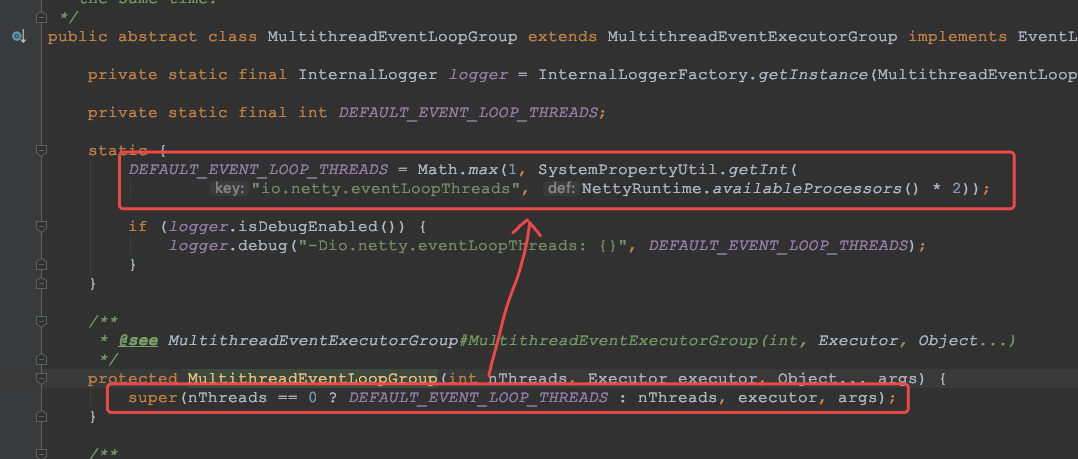
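Point ③ says that a NioEventLoopGroup created without arguments gets CPU cores * 2 threads. The following is a minimal sketch of how that count is resolved. It assumes the 4.1 behavior as we understand it: a thread count of 0 means "use DEFAULT_EVENT_LOOP_THREADS". That default is twice the available processors unless the io.netty.eventLoopThreads system property overrides it, and it is never less than 1. The class EventLoopSizing and its methods are hypothetical. The property value is passed in as a string so the logic can be checked without touching real system properties.

```java
/**
 * Hypothetical helper (not part of Netty) that mimics how a NioEventLoopGroup
 * thread count is resolved, assuming Netty 4.1's defaulting rules.
 */
final class EventLoopSizing {
    private EventLoopSizing() {}

    /** Default count: processors * 2, optionally overridden, clamped to at least 1. */
    static int defaultThreads(int availableProcessors, String eventLoopThreadsProperty) {
        int fallback = availableProcessors * 2;
        int configured = fallback;
        if (eventLoopThreadsProperty != null) {
            try {
                configured = Integer.parseInt(eventLoopThreadsProperty.trim());
            } catch (NumberFormatException e) {
                configured = fallback; // an unparsable value falls back to the default
            }
        }
        return Math.max(1, configured);
    }

    /** nThreads == 0 means "use the default"; any other value must be positive. */
    static int resolve(int nThreads, int availableProcessors, String eventLoopThreadsProperty) {
        int n = nThreads == 0 ? defaultThreads(availableProcessors, eventLoopThreadsProperty) : nThreads;
        if (n <= 0) {
            throw new IllegalArgumentException(String.format("nThreads: %d (expected: > 0)", n));
        }
        return n;
    }
}
```

For example, on an 8-core machine, resolve(1, 8, null) gives the bossGroup 1 thread, and resolve(0, 8, null) gives the workerGroup 16 threads. Those 16 threads become the length of the children array.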

Source code analysis of NioEventLoopGroup

- ① Analysis entry: EventLoopGroup bossGroup = new NioEventLoopGroup(1);

```java
public NioEventLoopGroup(int nThreads) {
    this(nThreads, (Executor) null);
}
// ↓↓↓↓↓
public NioEventLoopGroup(int nThreads, Executor executor) {
    this(nThreads, executor, SelectorProvider.provider());
}
// ↓↓↓↓↓
public NioEventLoopGroup(int nThreads, Executor executor, final SelectorProvider selectorProvider) {
    this(nThreads, executor, selectorProvider, DefaultSelectStrategyFactory.INSTANCE);
}
// ↓↓↓↓↓
public NioEventLoopGroup(int nThreads, Executor executor, final SelectorProvider selectorProvider,
                         final SelectStrategyFactory selectStrategyFactory) {
    super(nThreads, executor, selectorProvider, selectStrategyFactory, RejectedExecutionHandlers.reject());
}
```

- ② Stepping into super(), the parent class is MultithreadEventLoopGroup. Tracing further up leads to the abstract class MultithreadEventExecutorGroup, whose constructor is where a NioEventLoopGroup is actually built. This is the template method design pattern: the abstract parent fixes the construction steps, and the subclass supplies newChild.

```java
protected MultithreadEventLoopGroup(int nThreads, Executor executor, Object... args) {
    super(nThreads == 0 ? DEFAULT_EVENT_LOOP_THREADS : nThreads, executor, args);
}
// ↓↓↓↓↓
protected MultithreadEventExecutorGroup(int nThreads, Executor executor, Object... args) {
    this(nThreads, executor, DefaultEventExecutorChooserFactory.INSTANCE, args);
}
// ↓↓↓↓↓
/**
 * Create a new instance.
 *
 * @param nThreads       number of threads to use; CPU cores * 2 by default
 * @param executor       the executor to use; if null, Netty's default thread factory and the
 *                       default ThreadPerTaskExecutor are used
 * @param chooserFactory the singleton DefaultEventExecutorChooserFactory.INSTANCE
 * @param args           fixed arguments passed to each child executor when it is created
 */
protected MultithreadEventExecutorGroup(int nThreads, Executor executor,
                                        EventExecutorChooserFactory chooserFactory, Object... args) {
    if (nThreads <= 0) {
        throw new IllegalArgumentException(String.format("nThreads: %d (expected: > 0)", nThreads));
    }

    // If no executor is passed in, use the default thread factory and the default executor
    if (executor == null) {
        executor = new ThreadPerTaskExecutor(newDefaultThreadFactory());
    }

    // Create an executor array of the specified size
    children = new EventExecutor[nThreads];

    // Fill the array
    for (int i = 0; i < nThreads; i ++) {
        boolean success = false;
        try {
            // Create a NioEventLoop
            children[i] = newChild(executor, args);
            success = true;
        } catch (Exception e) {
            // TODO: Think about if this is a good exception type
            throw new IllegalStateException("failed to create a child event loop", e);
        } finally {
            // If creation failed, gracefully shut down the children created so far
            if (!success) {
                for (int j = 0; j < i; j ++) {
                    children[j].shutdownGracefully();
                }

                for (int j = 0; j < i; j ++) {
                    EventExecutor e = children[j];
                    try {
                        while (!e.isTerminated()) {
                            e.awaitTermination(Integer.MAX_VALUE, TimeUnit.SECONDS);
                        }
                    } catch (InterruptedException interrupted) {
                        // Let the caller handle the interruption.
                        Thread.currentThread().interrupt();
                        break;
                    }
                }
            }
        }
    }

    chooser = chooserFactory.newChooser(children);

    final FutureListener<Object> terminationListener = new FutureListener<Object>() {
        @Override
        public void operationComplete(Future<Object> future) throws Exception {
            if (terminatedChildren.incrementAndGet() == children.length) {
                terminationFuture.setSuccess(null);
            }
        }
    };

    // Add a termination listener to each single-threaded executor
    for (EventExecutor e: children) {
        e.terminationFuture().addListener(terminationListener);
    }

    // Put all single-threaded executors into a LinkedHashSet and expose it read-only
    Set<EventExecutor> childrenSet = new LinkedHashSet<EventExecutor>(children.length);
    Collections.addAll(childrenSet, children);
    readonlyChildren = Collections.unmodifiableSet(childrenSet);
}
```
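The constructor above is a template method. MultithreadEventExecutorGroup fixes the steps: validate nThreads, fill children via newChild, and build the chooser. NioEventLoopGroup only supplies newChild. Below is a minimal, self-contained sketch of that shape, and all class names in it are hypothetical. Its next() method imitates, as far as we understand DefaultEventExecutorChooserFactory in 4.1, the two round-robin choosers: a bit mask when the array length is a power of two, and a modulo otherwise.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical stand-in for an event loop; it only remembers its index. */
final class MiniLoop {
    final int index;

    MiniLoop(int index) {
        this.index = index;
    }
}

/** Template method: the base constructor fixes the algorithm, subclasses supply newChild. */
abstract class MiniMultithreadGroup {
    private final MiniLoop[] children;
    private final boolean powerOfTwo;
    private final AtomicInteger idx = new AtomicInteger();

    protected MiniMultithreadGroup(int nThreads) {
        if (nThreads <= 0) {
            throw new IllegalArgumentException(String.format("nThreads: %d (expected: > 0)", nThreads));
        }
        children = new MiniLoop[nThreads];
        for (int i = 0; i < nThreads; i++) {
            children[i] = newChild(i); // the step the subclass fills in
        }
        // Decided once, like picking one of the two chooser implementations
        powerOfTwo = (nThreads & -nThreads) == nThreads;
    }

    /** Hook implemented by the concrete group (a NioEventLoopGroup creates a NioEventLoop here). */
    protected abstract MiniLoop newChild(int index);

    /** Round-robin selection of the next child. */
    MiniLoop next() {
        if (powerOfTwo) {
            return children[idx.getAndIncrement() & (children.length - 1)];
        }
        return children[Math.abs(idx.getAndIncrement() % children.length)];
    }
}

/** Concrete group: only the hook is implemented. */
final class MiniNioGroup extends MiniMultithreadGroup {
    MiniNioGroup(int nThreads) {
        super(nThreads);
    }

    @Override
    protected MiniLoop newChild(int index) {
        return new MiniLoop(index);
    }
}
```

Calling an overridable method from a constructor is usually discouraged. It is safe here because the hook receives everything it needs as arguments, which is also why the real newChild takes executor and args as parameters.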

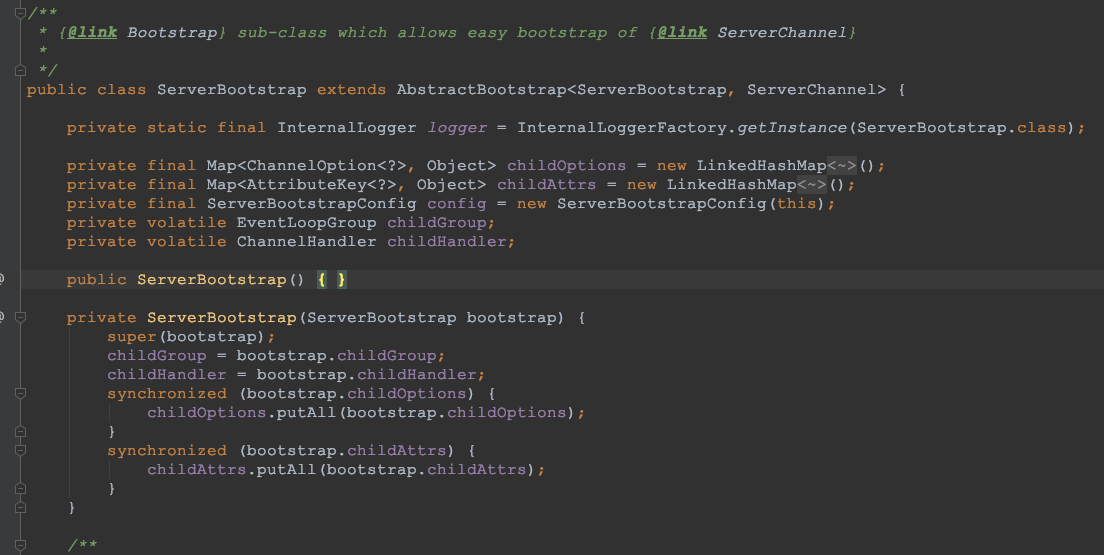

ServerBootstrap creation and build process

- ① Analysis entry: ServerBootstrap b = new ServerBootstrap(); basic usage of ServerBootstrap:

```java
ServerBootstrap b = new ServerBootstrap();
// Chained calls
// group: bossGroup becomes the parent group (the "group" field) and workerGroup becomes childGroup
b.group(bossGroup, workerGroup)
 // channel: the NioServerSocketChannel class, from which the channel object will be created
 .channel(NioServerSocketChannel.class)
 // option: a TCP parameter, stored in a LinkedHashMap
 .option(ChannelOption.SO_BACKLOG, 100)
 // handler: belongs only to the ServerSocketChannel, not to SocketChannels
 .handler(new LoggingHandler(LogLevel.INFO))
 // childHandler: invoked for each client connection and used by that connection's SocketChannel
 .childHandler(new ChannelInitializer<SocketChannel>() {
     @Override
     public void initChannel(SocketChannel ch) throws Exception {
         ChannelPipeline p = ch.pipeline();
         if (sslCtx != null) {
             p.addLast(sslCtx.newHandler(ch.alloc()));
         }
         p.addLast(new LoggingHandler(LogLevel.INFO));
         //p.addLast(new EchoServerHandler());
     }
 });
```
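Each builder method stores its argument in a field and returns this, which is what makes the chained style work. Here is a minimal sketch of that idea using a hypothetical MiniServerBootstrap. It is not Netty's API: plain Object and String stand in for EventLoopGroup, ChannelOption and ChannelHandler.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical, heavily simplified builder in the style of ServerBootstrap (not Netty's API). */
final class MiniServerBootstrap {
    private Object group;        // parent group (bossGroup)
    private Object childGroup;   // workerGroup
    private Class<?> channelClass;
    private Object handler;      // for the server channel only
    private Object childHandler; // for every accepted child channel
    // Keeps insertion order, like the LinkedHashMap that holds ServerBootstrap options
    private final Map<String, Object> options = new LinkedHashMap<>();

    MiniServerBootstrap group(Object parentGroup, Object childGroup) {
        this.group = parentGroup;
        this.childGroup = childGroup;
        return this; // returning this is what enables chained calls
    }

    MiniServerBootstrap channel(Class<?> channelClass) {
        this.channelClass = channelClass;
        return this;
    }

    MiniServerBootstrap option(String key, Object value) {
        synchronized (options) { // LinkedHashMap is not thread-safe
            options.put(key, value);
        }
        return this;
    }

    MiniServerBootstrap handler(Object handler) {
        this.handler = handler;
        return this;
    }

    MiniServerBootstrap childHandler(Object childHandler) {
        this.childHandler = childHandler;
        return this;
    }

    Object group() { return group; }
    Object childGroup() { return childGroup; }
    Class<?> channelClass() { return channelClass; }
    Object handler() { return handler; }
    Object childHandler() { return childHandler; }

    /** A read-only snapshot, copied under the same lock used for writes. */
    Map<String, Object> options() {
        synchronized (options) {
            return Collections.unmodifiableMap(new LinkedHashMap<>(options));
        }
    }
}
```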

- ② Enter the constructor of ServerBootstrap. The constructor is empty, but the class initializes some important member fields, notably config:

```java
public class ServerBootstrap extends AbstractBootstrap<ServerBootstrap, ServerChannel> {

    private static final InternalLogger logger = InternalLoggerFactory.getInstance(ServerBootstrap.class);

    private final Map<ChannelOption<?>, Object> childOptions = new LinkedHashMap<ChannelOption<?>, Object>();
    private final Map<AttributeKey<?>, Object> childAttrs = new LinkedHashMap<AttributeKey<?>, Object>();
    // This config object will be very useful later
    private final ServerBootstrapConfig config = new ServerBootstrapConfig(this);
    private volatile EventLoopGroup childGroup;
    private volatile ChannelHandler childHandler;

    public ServerBootstrap() { }

    private ServerBootstrap(ServerBootstrap bootstrap) {
        super(bootstrap);
        childGroup = bootstrap.childGroup;
        childHandler = bootstrap.childHandler;
        synchronized (bootstrap.childOptions) {
            childOptions.putAll(bootstrap.childOptions);
        }
        synchronized (bootstrap.childAttrs) {
            childAttrs.putAll(bootstrap.childAttrs);
        }
    }

    // Remaining code omitted
}
```

Source code analysis of port binding

- ① Analysis entry: ChannelFuture f = b.bind(PORT).sync(); the server is started inside the bind method. Tracing bind layer by layer shows that the core code is in AbstractBootstrap.doBind(… ).

```java
// public abstract class AbstractBootstrap<B extends AbstractBootstrap<B, C>, C extends Channel> implements Cloneable
public ChannelFuture bind(int inetPort) {
    // Create a socket address from the port
    return bind(new InetSocketAddress(inetPort));
}
// ↓↓↓↓↓
public ChannelFuture bind(SocketAddress localAddress) {
    // Validate the configuration and check for null
    validate();
    if (localAddress == null) {
        throw new NullPointerException("localAddress");
    }
    return doBind(localAddress);
}

private ChannelFuture doBind(final SocketAddress localAddress) {
    // Core method 1: create, initialize and register the NioServerSocketChannel
    final ChannelFuture regFuture = initAndRegister();
    final Channel channel = regFuture.channel();
    if (regFuture.cause() != null) {
        return regFuture;
    }

    if (regFuture.isDone()) {
        // At this point we know that the registration was complete and successful.
        ChannelPromise promise = channel.newPromise();
        // Core method 2: bind the port
        doBind0(regFuture, channel, localAddress, promise);
        return promise;
    } else {
        // Registration future is almost always fulfilled already, but just in case it's not.
        final PendingRegistrationPromise promise = new PendingRegistrationPromise(channel);
        regFuture.addListener(new ChannelFutureListener() {
            @Override
            public void operationComplete(ChannelFuture future) throws Exception {
                Throwable cause = future.cause();
                if (cause != null) {
                    // Registration on the EventLoop failed so fail the ChannelPromise directly to not cause an
                    // IllegalStateException once we try to access the EventLoop of the Channel.
                    promise.setFailure(cause);
                } else {
                    // Registration was successful, so set the correct executor to use.
                    // See https://github.com/netty/netty/issues/2586
                    promise.registered();

                    doBind0(regFuture, channel, localAddress, promise);
                }
            }
        });
        return promise;
    }
}
```

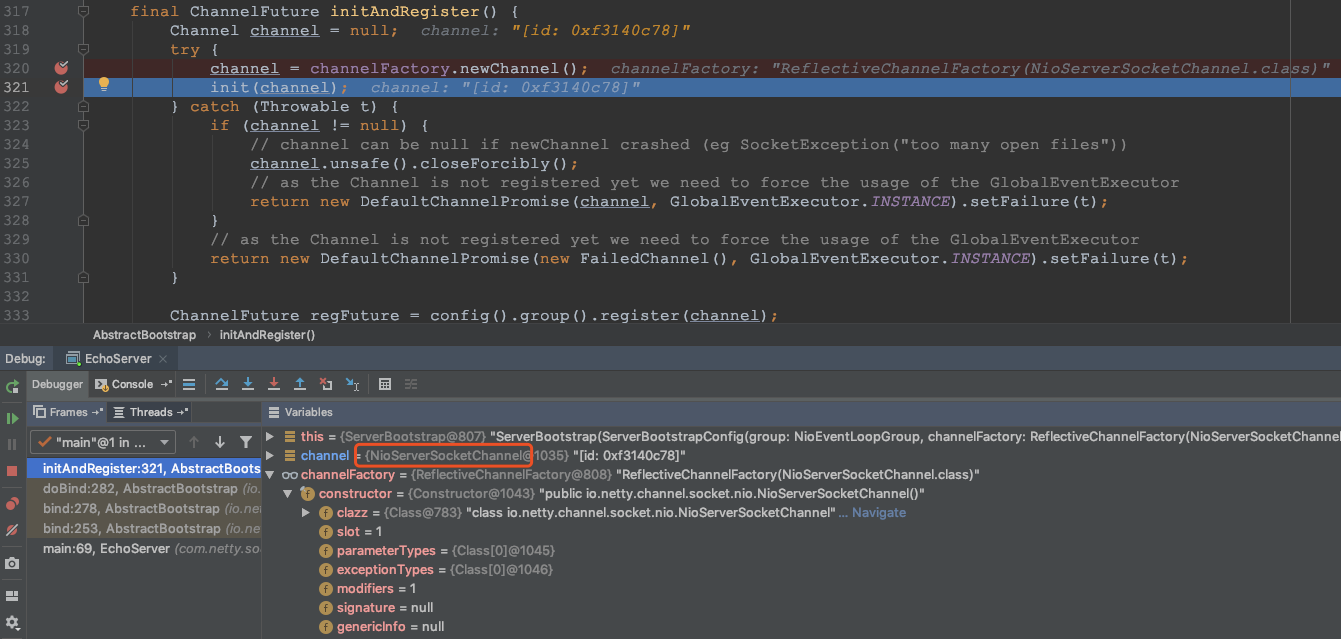
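doBind either binds immediately (registration is already done) or defers the bind to a listener on regFuture. The sketch below reproduces that control flow, with java.util.concurrent.CompletableFuture standing in for Netty's ChannelFuture and ChannelPromise. BindFlow and its log are hypothetical. Unlike the real doBind0, the sketch runs the bind inline instead of submitting it to the channel's event loop.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

/** Hypothetical sketch of doBind's "bind now or bind when registration completes" flow. */
final class BindFlow {
    final List<String> log = new ArrayList<>();

    CompletableFuture<Void> doBind(CompletableFuture<Void> regFuture, int port) {
        CompletableFuture<Void> promise = new CompletableFuture<>();
        if (regFuture.isDone() && !regFuture.isCompletedExceptionally()) {
            // Registration already finished successfully: bind right away
            doBind0(port, promise);
        } else {
            // Not finished (or failed): decide once the registration future completes
            regFuture.whenComplete((ignored, cause) -> {
                if (cause != null) {
                    promise.completeExceptionally(cause); // fail the bind promise directly
                } else {
                    doBind0(port, promise);
                }
            });
        }
        return promise;
    }

    /** Stand-in for the real bind; it only records the call and completes the promise. */
    private void doBind0(int port, CompletableFuture<Void> promise) {
        log.add("bind:" + port);
        promise.complete(null);
    }
}
```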

- ② First, take a look at the initAndRegister() method:

```java
final ChannelFuture initAndRegister() {
    Channel channel = null;
    try {
        // channelFactory.newChannel() creates a NioServerSocketChannel by reflection, using the channel
        // factory of ServerBootstrap. Tracing the source shows that:
        // - the JDK channel is obtained through the openServerSocketChannel method of NIO's SelectorProvider,
        //   so that Netty can wrap the JDK channel;
        // - a unique ChannelId is created, along with a NioMessageUnsafe (used to operate on messages) and a
        //   DefaultChannelPipeline, a doubly linked list that filters all inbound and outbound messages;
        // - a NioServerSocketChannelConfig object is created to expose the channel's configuration.
        channel = channelFactory.newChannel();
        // init initializes the NioServerSocketChannel
        init(channel);
    } catch (Throwable t) {
        if (channel != null) {
            // channel can be null if newChannel crashed (eg SocketException("too many open files"))
            channel.unsafe().closeForcibly();
            // as the Channel is not registered yet we need to force the usage of the GlobalEventExecutor
            return new DefaultChannelPromise(channel, GlobalEventExecutor.INSTANCE).setFailure(t);
        }
        // as the Channel is not registered yet we need to force the usage of the GlobalEventExecutor
        return new DefaultChannelPromise(new FailedChannel(), GlobalEventExecutor.INSTANCE).setFailure(t);
    }

    // Register the NioServerSocketChannel with the bossGroup of ServerBootstrap
    ChannelFuture regFuture = config().group().register(channel);
    if (regFuture.cause() != null) {
        if (channel.isRegistered()) {
            channel.close();
        } else {
            channel.unsafe().closeForcibly();
        }
    }

    // If we are here and the promise is not failed, it's one of the following cases:
    // 1) If we attempted registration from the event loop, the registration has been completed at this point.
    //    i.e. It's safe to attempt bind() or connect() now because the channel has been registered.
    // 2) If we attempted registration from the other thread, the registration request has been successfully
    //    added to the event loop's task queue for later execution.
    //    i.e. It's safe to attempt bind() or connect() now:
    //         because bind() or connect() will be executed *after* the scheduled registration task is executed
    //         because register(), bind(), and connect() are all bound to the same thread.

    // Return regFuture, the placeholder for the asynchronous result
    return regFuture;
}
```

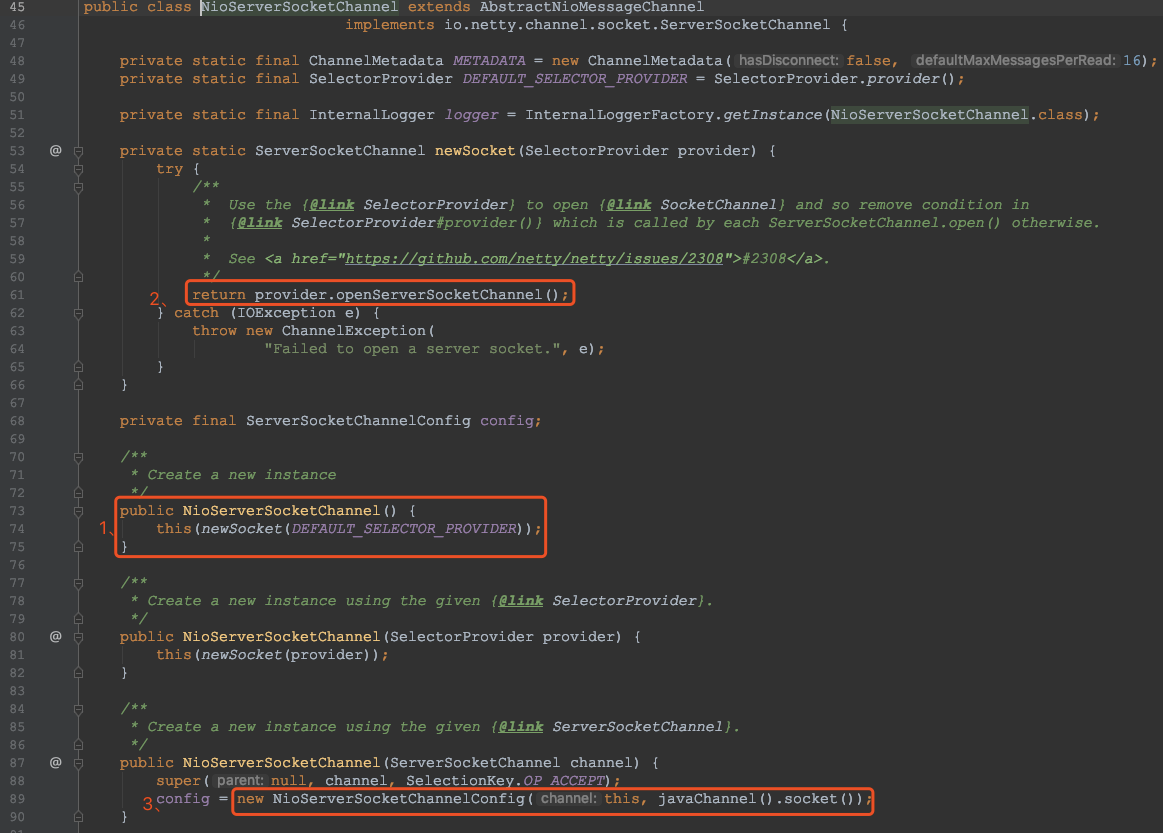

- Next, let's look at how NioServerSocketChannel is created. The JDK channel is obtained through the openServerSocketChannel method of NIO's SelectorProvider, so that Netty can wrap it.

```java
// public class NioServerSocketChannel extends AbstractNioMessageChannel
//         implements io.netty.channel.socket.ServerSocketChannel
public NioServerSocketChannel(ServerSocketChannel channel) {
    super(null, channel, SelectionKey.OP_ACCEPT);
    // A NioServerSocketChannelConfig object is created to expose the configuration
    config = new NioServerSocketChannelConfig(this, javaChannel().socket());
}
// ↓↓↓↓↓
// public abstract class AbstractNioMessageChannel extends AbstractNioChannel
protected AbstractNioMessageChannel(Channel parent, SelectableChannel ch, int readInterestOp) {
    super(parent, ch, readInterestOp);
}
// ↓↓↓↓↓
// public abstract class AbstractNioChannel extends AbstractChannel
protected AbstractNioChannel(Channel parent, SelectableChannel ch, int readInterestOp) {
    super(parent);
    this.ch = ch;
    this.readInterestOp = readInterestOp;
    try {
        ch.configureBlocking(false);
    } catch (IOException e) {
        try {
            ch.close();
        } catch (IOException e2) {
            if (logger.isWarnEnabled()) {
                logger.warn(
                        "Failed to close a partially initialized socket.", e2);
            }
        }

        throw new ChannelException("Failed to enter non-blocking mode.", e);
    }
}
// ↓↓↓↓↓
// public abstract class AbstractChannel extends DefaultAttributeMap implements Channel
protected AbstractChannel(Channel parent) {
    this.parent = parent;
    // Set the ChannelId
    id = newId();
    // Set the Unsafe
    unsafe = newUnsafe();
    // Set the pipeline
    pipeline = newChannelPipeline();
}
```

To summarize how NioServerSocketChannel is created: (1) the ReflectiveChannelFactory creates the channel through reflection; (2) during creation, four important objects are created: ChannelId, ChannelConfig, ChannelPipeline and Unsafe.
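The reflective creation step summarized above can be sketched with plain JDK reflection. This is a simplified stand-in for ReflectiveChannelFactory. It assumes, as in 4.1, that the channel class has a public no-arg constructor. MiniReflectiveFactory and FakeServerChannel are hypothetical. The fields of FakeServerChannel only label the four objects named in the summary.

```java
import java.lang.reflect.Constructor;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

/** Hypothetical, simplified reflective factory in the spirit of ReflectiveChannelFactory. */
final class MiniReflectiveFactory<T> {
    private final Constructor<? extends T> constructor;

    MiniReflectiveFactory(Class<? extends T> clazz) {
        try {
            this.constructor = clazz.getConstructor(); // requires a public no-arg constructor
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException(clazz.getSimpleName() + " has no public no-arg constructor", e);
        }
    }

    /** Each call creates a brand-new instance through reflection. */
    T newInstance() {
        try {
            return constructor.newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Unable to create instance of " + constructor.getDeclaringClass(), e);
        }
    }
}

/** Stand-in "channel" whose constructor creates placeholders for the four objects. */
class FakeServerChannel {
    final String id = UUID.randomUUID().toString();   // ~ ChannelId
    final Map<String, Object> config = new HashMap<>(); // ~ ChannelConfig
    final List<String> pipeline = new ArrayList<>(Arrays.asList("head", "tail")); // ~ ChannelPipeline
    final Object unsafe = new Object();               // ~ Unsafe

    public FakeServerChannel() {
    }
}
```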

- Next, look at the init method. It is declared abstract in AbstractBootstrap and implemented by the subclass ServerBootstrap:

```java
@Override
void init(Channel channel) throws Exception {
    final Map<ChannelOption<?>, Object> options = options0();
    synchronized (options) {
        setChannelOptions(channel, options, logger);
    }

    final Map<AttributeKey<?>, Object> attrs = attrs0();
    synchronized (attrs) {
        for (Entry<AttributeKey<?>, Object> e: attrs.entrySet()) {
            @SuppressWarnings("unchecked")
            AttributeKey<Object> key = (AttributeKey<Object>) e.getKey();
            channel.attr(key).set(e.getValue());
        }
    }

    ChannelPipeline p = channel.pipeline();

    final EventLoopGroup currentChildGroup = childGroup;
    final ChannelHandler currentChildHandler = childHandler;
    final Entry<ChannelOption<?>, Object>[] currentChildOptions;
    final Entry<AttributeKey<?>, Object>[] currentChildAttrs;
    synchronized (childOptions) {
        currentChildOptions = childOptions.entrySet().toArray(newOptionArray(0));
    }
    synchronized (childAttrs) {
        currentChildAttrs = childAttrs.entrySet().toArray(newAttrArray(0));
    }

    p.addLast(new ChannelInitializer<Channel>() {
        @Override
        public void initChannel(final Channel ch) throws Exception {
            final ChannelPipeline pipeline = ch.pipeline();
            ChannelHandler handler = config.handler();
            if (handler != null) {
                pipeline.addLast(handler);
            }

            ch.eventLoop().execute(new Runnable() {
                @Override
                public void run() {
                    pipeline.addLast(new ServerBootstrapAcceptor(
                            ch, currentChildGroup, currentChildHandler, currentChildOptions, currentChildAttrs));
                }
            });
        }
    });
}
```

Summary of the init process:

1. Set the TCP options and attributes of the NioServerSocketChannel. Because LinkedHashMap is not thread-safe, the maps are accessed inside synchronized blocks.
2. Add a ChannelInitializer handler to the ChannelPipeline of the NioServerSocketChannel.
3. In other words, init is mainly about the ChannelPipeline.
4. While the NioServerSocketChannel is being initialized, its pipeline, a doubly linked list, sets up its own head and tail nodes. The addLast call here inserts the handler in front of tail, because tail must always stay at the end, where it does some fixed framework-level work.

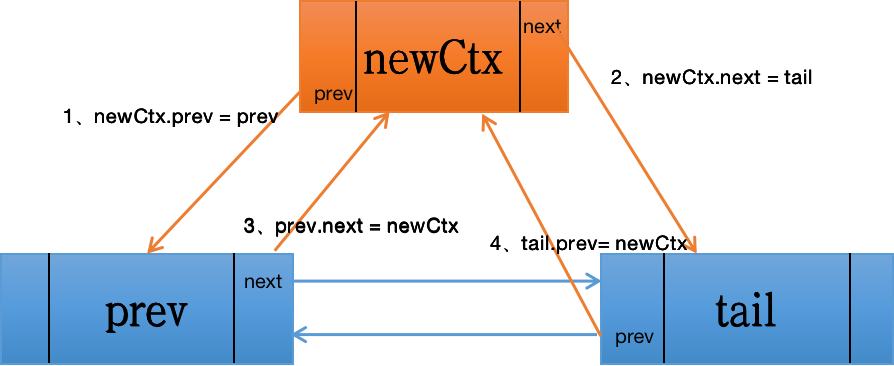

- init calls addLast, so let's look at that method. addLast is implemented in the DefaultChannelPipeline class and is the core method of the pipeline:

```java
// public class DefaultChannelPipeline implements ChannelPipeline
@Override
public final ChannelPipeline addLast(ChannelHandler... handlers) {
    return addLast(null, handlers);
}
// ↓↓↓↓↓
@Override
public final ChannelPipeline addLast(EventExecutorGroup executor, ChannelHandler... handlers) {
    if (handlers == null) {
        throw new NullPointerException("handlers");
    }

    for (ChannelHandler h: handlers) {
        if (h == null) {
            break;
        }
        addLast(executor, null, h);
    }

    return this;
}
// ↓↓↓↓↓
@Override
public final ChannelPipeline addLast(EventExecutorGroup group, String name, ChannelHandler handler) {
    final AbstractChannelHandlerContext newCtx;
    synchronized (this) {
        // Check that the handler may be added (a non-@Sharable handler cannot be added twice)
        checkMultiplicity(handler);

        // Create an AbstractChannelHandlerContext. A ChannelHandlerContext links a ChannelHandler to its
        // ChannelPipeline, and a new Context is created every time a ChannelHandler is added to the pipeline.
        // The Context mainly manages the interaction between its Handler and the other handlers in the same pipeline.
        newCtx = newContext(group, filterName(name, handler), handler);

        // Link the Context into the list, just in front of the tail node
        addLast0(newCtx);

        // If the registered is false it means that the channel was not registered on an eventLoop yet.
        // In this case we add the context to the pipeline and add a task that will call
        // ChannelHandler.handlerAdded(...) once the channel is registered.
        if (!registered) {
            newCtx.setAddPending();
            callHandlerCallbackLater(newCtx, true);
            return this;
        }

        EventExecutor executor = newCtx.executor();
        if (!executor.inEventLoop()) {
            callHandlerAddedInEventLoop(newCtx, executor);
            return this;
        }
    }
    // Call callHandlerAdded0 right away; the branches above defer it or run it asynchronously instead
    callHandlerAdded0(newCtx);
    return this;
}
// ↓↓↓↓↓
private void addLast0(AbstractChannelHandlerContext newCtx) {
    AbstractChannelHandlerContext prev = tail.prev;
    newCtx.prev = prev;
    newCtx.next = tail;
    prev.next = newCtx;
    tail.prev = newCtx;
}
```
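addLast0 is an ordinary insertion into a doubly linked list that always keeps head first and tail last. The sketch below isolates just that pointer manipulation. MiniPipeline and its Ctx nodes are hypothetical stand-ins for DefaultChannelPipeline and AbstractChannelHandlerContext, with no handlers, executors or callbacks.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, simplified pipeline: a doubly linked list between fixed head and tail nodes. */
final class MiniPipeline {
    static final class Ctx {
        final String name;
        Ctx prev;
        Ctx next;

        Ctx(String name) {
            this.name = name;
        }
    }

    final Ctx head = new Ctx("head");
    final Ctx tail = new Ctx("tail");

    MiniPipeline() {
        head.next = tail;
        tail.prev = head;
    }

    /** Same four pointer updates as addLast0: the new node always lands just before tail. */
    synchronized MiniPipeline addLast(String name) {
        Ctx newCtx = new Ctx(name);
        Ctx prev = tail.prev;
        newCtx.prev = prev;
        newCtx.next = tail;
        prev.next = newCtx;
        tail.prev = newCtx;
        return this;
    }

    /** Names from head to tail (the direction inbound events travel). */
    List<String> namesForward() {
        List<String> out = new ArrayList<>();
        for (Ctx c = head; c != null; c = c.next) {
            out.add(c.name);
        }
        return out;
    }

    /** Names from tail to head (the direction outbound operations travel). */
    List<String> namesBackward() {
        List<String> out = new ArrayList<>();
        for (Ctx c = tail; c != null; c = c.prev) {
            out.add(c.name);
        }
        return out;
    }
}
```

For example, new MiniPipeline().addLast("logging").addLast("acceptor") yields head, logging, acceptor, tail. No matter how many handlers are added, tail stays last.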

- ③ The doBind method has two important steps. initAndRegister has been covered, so next look at the doBind0 method. The code is as follows:

```java
// (1) public abstract class AbstractBootstrap<B extends AbstractBootstrap<B, C>, C extends Channel> implements Cloneable
/*
 * Parameters:
 * regFuture    - the future returned by initAndRegister
 * channel      - the NioServerSocketChannel
 * localAddress - the address (port) to bind
 * promise      - the promise of the NioServerSocketChannel
 */
private static void doBind0(
        final ChannelFuture regFuture, final Channel channel,
        final SocketAddress localAddress, final ChannelPromise promise) {

    // This method is invoked before channelRegistered() is triggered. Give user handlers a chance to set up
    // the pipeline in its channelRegistered() implementation.
    channel.eventLoop().execute(new Runnable() {
        @Override
        public void run() {
            if (regFuture.isSuccess()) {
                // !! Set a breakpoint here when debugging
                channel.bind(localAddress, promise).addListener(ChannelFutureListener.CLOSE_ON_FAILURE);
            } else {
                promise.setFailure(regFuture.cause());
            }
        }
    });
}
// ↓↓↓↓↓
// (2) public abstract class AbstractChannel extends DefaultAttributeMap implements Channel
@Override
public ChannelFuture bind(SocketAddress localAddress, ChannelPromise promise) {
    return pipeline.bind(localAddress, promise);
}
// ↓↓↓↓↓
// (3) public class DefaultChannelPipeline implements ChannelPipeline
@Override
public final ChannelFuture bind(SocketAddress localAddress, ChannelPromise promise) {
    return tail.bind(localAddress, promise);
}
// ↓↓↓↓↓
// (4) abstract class AbstractChannelHandlerContext implements ChannelHandlerContext, ResourceLeakHint
@Override
public ChannelFuture bind(final SocketAddress localAddress, final ChannelPromise promise) {
    if (localAddress == null) {
        throw new NullPointerException("localAddress");
    }
    if (isNotValidPromise(promise, false)) {
        // cancelled
        return promise;
    }

    final AbstractChannelHandlerContext next = findContextOutbound(MASK_BIND);
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) {
        next.invokeBind(localAddress, promise);
    } else {
        safeExecute(executor, new Runnable() {
            @Override
            public void run() {
                next.invokeBind(localAddress, promise);
            }
        }, promise, null);
    }
    return promise;
}
// ↓↓↓↓↓
private void invokeBind(SocketAddress localAddress, ChannelPromise promise) {
    if (invokeHandler()) {
        try {
            ((ChannelOutboundHandler) handler()).bind(this, localAddress, promise);
        } catch (Throwable t) {
            notifyOutboundHandlerException(t, promise);
        }
    } else {
        bind(localAddress, promise);
    }
}
// ↓↓↓↓↓
// (5) The bind method of the LoggingHandler added with handler(...)
@Override
public void bind(ChannelHandlerContext ctx, SocketAddress localAddress, ChannelPromise promise) throws Exception {
    if (logger.isEnabled(internalLevel)) {
        logger.log(internalLevel, format(ctx, "BIND", localAddress));
    }
    ctx.bind(localAddress, promise);
}
// ↓↓↓↓↓
// (6) DefaultChannelPipeline (its head node, HeadContext)
@Override
public void bind(
        ChannelHandlerContext ctx, SocketAddress localAddress, ChannelPromise promise) {
    unsafe.bind(localAddress, promise);
}
// ↓↓↓↓↓
// (7) AbstractChannel (its inner class AbstractUnsafe)
@Override
public final void bind(final SocketAddress localAddress, final ChannelPromise promise) {
    assertEventLoop();

    if (!promise.setUncancellable() || !ensureOpen(promise)) {
        return;
    }

    // See: https://github.com/netty/netty/issues/576
    if (Boolean.TRUE.equals(config().getOption(ChannelOption.SO_BROADCAST)) &&
        localAddress instanceof InetSocketAddress &&
        !((InetSocketAddress) localAddress).getAddress().isAnyLocalAddress() &&
        !PlatformDependent.isWindows() && !PlatformDependent.maybeSuperUser()) {
        // Warn a user about the fact that a non-root user can't receive a
        // broadcast packet on *nix if the socket is bound on non-wildcard address.
        logger.warn(
                "A non-root user can't receive a broadcast packet if the socket " +
                "is not bound to a wildcard address; binding to a non-wildcard " +
                "address (" + localAddress + ") anyway as requested.");
    }

    boolean wasActive = isActive();
    try {
        // !! The real work happens in doBind. Once it succeeds, the channel's fireChannelActive
        // is invoked to tell all handlers that the binding has succeeded.
        doBind(localAddress);
    } catch (Throwable t) {
        safeSetFailure(promise, t);
        closeIfClosed();
        return;
    }

    if (!wasActive && isActive()) {
        invokeLater(new Runnable() {
            @Override
            public void run() {
                pipeline.fireChannelActive();
            }
        });
    }

    // Last step: safeSetSuccess(promise) marks the promise as successful so its listeners can run.
    // This completes the whole startup process.
    safeSetSuccess(promise);
}
// ↓↓↓↓↓
// (8) NioServerSocketChannel: doBind finally ends up here, which shows that Netty uses NIO underneath
@Override
protected void doBind(SocketAddress localAddress) throws Exception {
    if (PlatformDependent.javaVersion() >= 7) {
        javaChannel().bind(localAddress, config.getBacklog());
    } else {
        javaChannel().socket().bind(localAddress, config.getBacklog());
    }
}
```
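Steps (3) to (6) show an outbound call entering at tail and moving toward head. Along the way it skips contexts that do not handle bind, until HeadContext hands the call to unsafe. The sketch below models only that direction of travel. OutboundDemo, its Ctx class and the trace strings are hypothetical, and a null handler stands for a context that does not handle outbound events. The real code also uses a mask (MASK_BIND) to decide which contexts to skip and checks inEventLoop before invoking each handler.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of an outbound bind() travelling from tail toward head. */
final class OutboundDemo {
    /** Stands in for ChannelOutboundHandler.bind(ctx, localAddress, promise). */
    interface OutboundHandler {
        void bind(Ctx ctx, int port);
    }

    static final class Ctx {
        final String name;
        final OutboundHandler handler; // null: this context does not handle outbound events
        Ctx prev;
        Ctx next;

        Ctx(String name, OutboundHandler handler) {
            this.name = name;
            this.handler = handler;
        }

        /** Like findContextOutbound: walk toward head until an outbound handler is found. */
        Ctx findContextOutbound() {
            Ctx ctx = this;
            do {
                ctx = ctx.prev;
            } while (ctx.handler == null);
            return ctx;
        }

        /** Like ChannelHandlerContext.bind: pass the call to the previous outbound handler. */
        void bind(int port) {
            Ctx target = findContextOutbound();
            target.handler.bind(target, port);
        }
    }

    final List<String> trace = new ArrayList<>();
    private final Ctx tail;

    OutboundDemo() {
        // head: delegates to "unsafe", which would perform the real socket bind
        Ctx head = new Ctx("head", (ctx, port) -> trace.add("head -> unsafe.bind(" + port + ")"));
        // LoggingHandler-like outbound handler: log, then forward with ctx.bind(...)
        Ctx logging = new Ctx("logging", (ctx, port) -> {
            trace.add("logging BIND " + port);
            ctx.bind(port);
        });
        // An inbound-only context (like ServerBootstrapAcceptor) is skipped by outbound calls
        Ctx acceptor = new Ctx("acceptor", null);
        tail = new Ctx("tail", null);
        link(head, logging, acceptor, tail);
    }

    private static void link(Ctx... ctxs) {
        for (int i = 0; i + 1 < ctxs.length; i++) {
            ctxs[i].next = ctxs[i + 1];
            ctxs[i + 1].prev = ctxs[i];
        }
    }

    /** Like DefaultChannelPipeline.bind: outbound operations start at tail. */
    void bind(int port) {
        tail.bind(port);
    }
}
```

Calling new OutboundDemo().bind(8007) records the logging step first and the head step second, which matches the order of (5) and (6) above.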

- ④ In the last step, the server enters the loop code in the NioEventLoop class and listens for events.

Netty startup process

- Two EventLoopGroup thread pool arrays are created. By default each array has CPU cores * 2 elements, and a chooser picks one element per channel to spread the load and improve performance.

- The ServerBootstrap stores boss as its group property and worker as its childGroup property.

- Everything starts from the bind method. Its important internal methods are initAndRegister and doBind0.

- initAndRegister creates the NioServerSocketChannel by reflection, together with its underlying NIO channel, its pipeline and its unsafe. It also initializes the pipeline's head and tail nodes.

- After register0 succeeds, doBind calls doBind0. doBind0 eventually calls NioServerSocketChannel's doBind method, which binds the JDK channel to the port. This completes the startup of the Netty server, which then starts listening for connection events.
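Stripped of Netty's abstractions, the startup summarized above comes down to a few JDK NIO calls. The sketch below is an approximation that uses only java.nio and is not Netty code. JdkServerStartup is hypothetical. It follows our understanding of 4.1: the channel is first registered with an empty interest set, and OP_ACCEPT is added only after the bind, when the channel becomes active (doBeginRead).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.spi.SelectorProvider;

/** Hypothetical plain-JDK approximation of the server startup steps (not Netty code). */
final class JdkServerStartup implements AutoCloseable {
    final Selector selector;
    final ServerSocketChannel server;
    final SelectionKey key;

    JdkServerStartup(int port, int backlog) {
        try {
            SelectorProvider provider = SelectorProvider.provider();
            // One selector per event loop (here, the single boss NioEventLoop)
            selector = provider.openSelector();
            // What NioServerSocketChannel wraps: openServerSocketChannel via SelectorProvider
            server = provider.openServerSocketChannel();
            // AbstractNioChannel's constructor switches the channel to non-blocking mode
            server.configureBlocking(false);
            // Registration: attach to the selector with no interest ops yet
            key = server.register(selector, 0);
            // doBind on Java 7+: bind(address, backlog); loopback keeps the sketch local
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), port), backlog);
            // Channel active -> read -> doBeginRead adds the read interest op (OP_ACCEPT for a server)
            key.interestOps(key.interestOps() | SelectionKey.OP_ACCEPT);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    int localPort() {
        return server.socket().getLocalPort();
    }

    @Override
    public void close() {
        try {
            selector.close();
            server.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Passing port 0 lets the OS choose a free port, which localPort() then reports.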

Source code analysis of how Netty accepts requests

Explanation

- Once started, the server must accept client requests and return the information clients want. The following source code analysis shows how Netty accepts a client request after startup.

- From the startup source, we know that the server ultimately registers for the Accept event and waits for client connections. We also know that NioServerSocketChannel registers itself on a single-threaded executor of the bossGroup (the reactor thread), that is, a NioEventLoop.

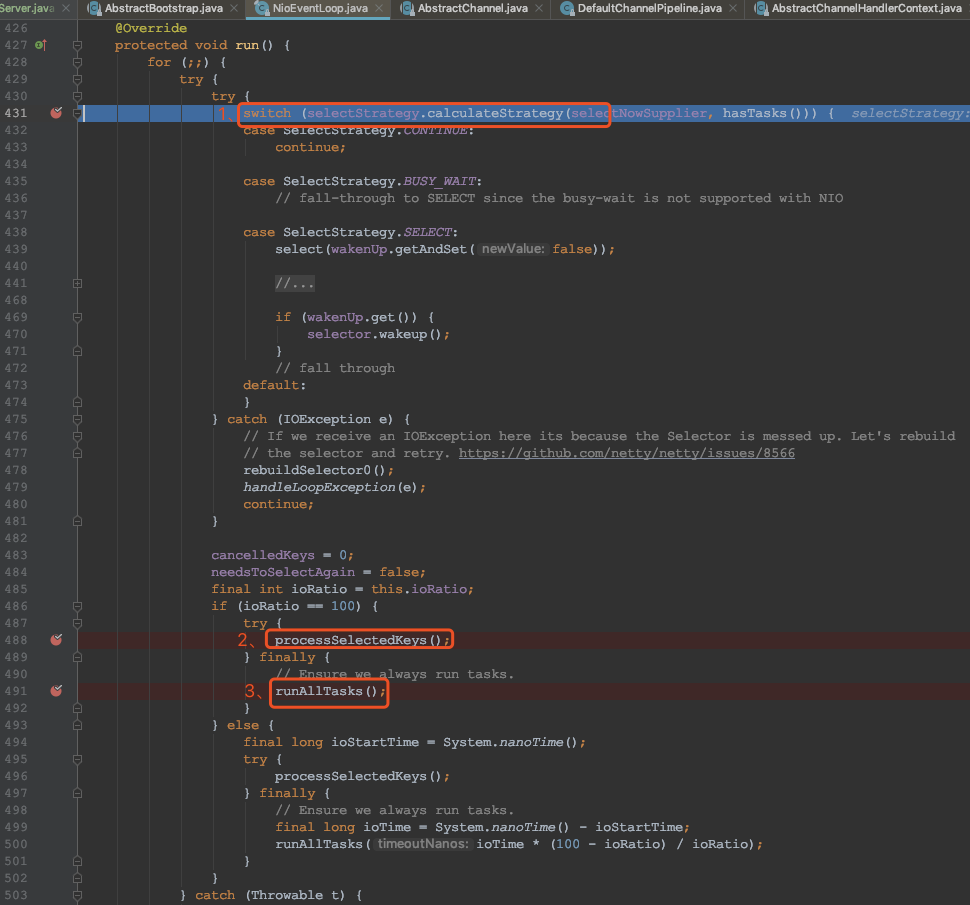

- Let's start with the logic of NioEventLoop:

The EventLoop runs an infinite loop, and each iteration does three things: it conditionally waits for NIO events, handles those NIO events, and processes the tasks in its task queue.

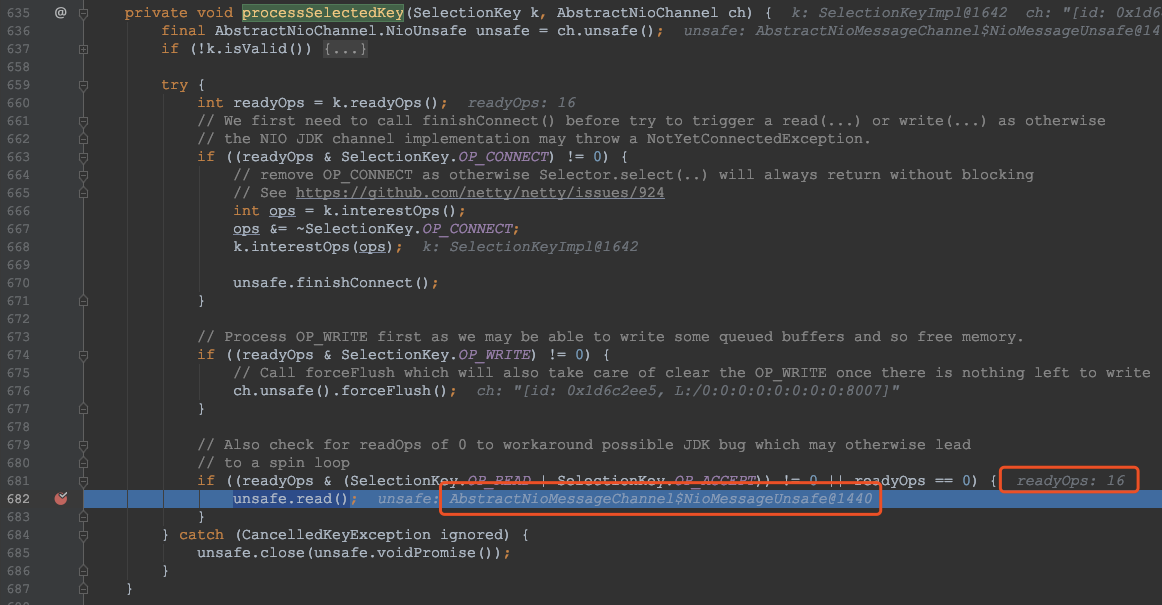
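These three steps can be sketched as one iteration of a single-threaded loop over a JDK Selector and a task queue. This is a simplified model under our own assumptions, not NioEventLoop's code. MiniEventLoop is hypothetical. It has no ioRatio-style balancing between I/O time and task time, no scheduled tasks, and none of the selector-rebuild workaround Netty applies for the JDK epoll spin bug.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.function.Consumer;

/** Hypothetical single-threaded loop body: wait for I/O, handle ready keys, then run queued tasks. */
final class MiniEventLoop implements AutoCloseable {
    private final Selector selector;
    private final Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<>();
    private final Consumer<SelectionKey> keyHandler;

    MiniEventLoop(Consumer<SelectionKey> keyHandler) {
        try {
            this.selector = Selector.open();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        this.keyHandler = keyHandler;
    }

    Selector selector() {
        return selector;
    }

    /** Any thread may submit work; wakeup() makes a blocked select() return so the task runs soon. */
    void execute(Runnable task) {
        taskQueue.add(task);
        selector.wakeup();
    }

    /** One iteration of the otherwise infinite loop; timeoutMillis must be > 0 (0 would block forever). */
    void runOnce(long timeoutMillis) {
        try {
            // 1. Conditional wait: block only when no task is pending
            if (taskQueue.isEmpty()) {
                selector.select(timeoutMillis);
            } else {
                selector.selectNow();
            }
            // 2. Handle I/O events; selected keys must be removed by hand
            Iterator<SelectionKey> it = selector.selectedKeys().iterator();
            while (it.hasNext()) {
                SelectionKey k = it.next();
                it.remove();
                keyHandler.accept(k);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // 3. Run the queued tasks
        Runnable task;
        while ((task = taskQueue.poll()) != null) {
            task.run();
        }
    }

    @Override
    public void close() {
        try {
            selector.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A real loop would call runOnce repeatedly on a dedicated thread. Because only that thread touches the selector and runs the tasks, handlers bound to the loop need no extra locking.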

- Continuing with the previous project, enter the NioEventLoop source and start debugging in the private void processSelectedKey(SelectionKey k, AbstractNioChannel ch) method.

```java
// public final class NioEventLoop extends SingleThreadEventLoop
private void processSelectedKeys() {
    if (selectedKeys != null) {
        processSelectedKeysOptimized();
    } else {
        processSelectedKeysPlain(selector.selectedKeys());
    }
}
// ↓↓↓↓↓
private void processSelectedKeysPlain(Set<SelectionKey> selectedKeys) {
    // check if the set is empty and if so just return to not create garbage by
    // creating a new Iterator every time even if there is nothing to process.
    // See https://github.com/netty/netty/issues/597
    if (selectedKeys.isEmpty()) {
        return;
    }

    Iterator<SelectionKey> i = selectedKeys.iterator();
    for (;;) {
        final SelectionKey k = i.next();
        final Object a = k.attachment();
        i.remove();

        if (a instanceof AbstractNioChannel) {
            // Execution goes in here
            processSelectedKey(k, (AbstractNioChannel) a);
        } else {
            @SuppressWarnings("unchecked")
            NioTask<SelectableChannel> task = (NioTask<SelectableChannel>) a;
            processSelectedKey(k, task);
        }

        if (!i.hasNext()) {
            break;
        }

        if (needsToSelectAgain) {
            selectAgain();
            selectedKeys = selector.selectedKeys();

            // Create the iterator again to avoid ConcurrentModificationException
            if (selectedKeys.isEmpty()) {
                break;
            } else {
                i = selectedKeys.iterator();
            }
        }
    }
}
```
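processSelectedKey then dispatches on the key's readyOps bits. The helper below is a hypothetical simplification of that dispatch, based on our reading of the 4.1 source. OP_CONNECT leads to finishConnect, OP_WRITE leads to a forced flush, and OP_READ or OP_ACCEPT (or an empty readyOps set) leads to unsafe.read(). The helper returns the names of the actions instead of performing them. SelectionKey.OP_ACCEPT has the value 16, which is the readyOps value seen in the debugger during the accept analysis.

```java
import java.nio.channels.SelectionKey;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, simplified version of the readyOps dispatch in processSelectedKey. */
final class ReadyOps {
    private ReadyOps() {}

    static List<String> actionsFor(int readyOps) {
        List<String> actions = new ArrayList<>();
        if ((readyOps & SelectionKey.OP_CONNECT) != 0) {
            actions.add("finishConnect()"); // client side: complete the pending connection first
        }
        if ((readyOps & SelectionKey.OP_WRITE) != 0) {
            actions.add("forceFlush()");    // socket writable again: flush pending data
        }
        if ((readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0) {
            actions.add("unsafe.read()");   // for a server channel, this accepts new connections
        }
        return actions;
    }
}
```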

- Finally, we will analyze the doBeginRead method of AbstractNioChannel. Once execution reaches this method, the connection with the client is established, and read events can be listened for.

Source code analysis

- ① Analysis entry: private void processSelectedKey(SelectionKey k, AbstractNioChannel ch) => unsafe.read(). Debugging shows that readyOps is 16, the Accept event, which means a connection request has arrived.

- This unsafe is the AbstractNioMessageChannel$NioMessageUnsafe object of the NioServerSocketChannel in the boss thread. Let's step into the read method of AbstractNioMessageChannel$NioMessageUnsafe:

```java
// public abstract class AbstractNioMessageChannel extends AbstractNioChannel
private final class NioMessageUnsafe extends AbstractNioUnsafe {

    private final List<Object> readBuf = new ArrayList<Object>();

    @Override
    public void read() {
        // Check that the current thread is the event loop's thread
        assert eventLoop().inEventLoop();
        final ChannelConfig config = config();
        final ChannelPipeline pipeline = pipeline();
        final RecvByteBufAllocator.Handle allocHandle = unsafe().recvBufAllocHandle();
        allocHandle.reset(config);

        boolean closed = false;
        Throwable exception = null;
        try {
            try {
                do {
                    // Core method 1: doReadMessages, given readBuf, a List container.
                    // It reads the connections accepted by the NioServerSocketChannel in the boss thread
                    // and puts them into the container.
                    int localRead = doReadMessages(readBuf);
                    if (localRead == 0) {
                        break;
                    }
                    if (localRead < 0) {
                        closed = true;
                        break;
                    }

                    allocHandle.incMessagesRead(localRead);
                } while (allocHandle.continueReading());
            } catch (Throwable t) {
                exception = t;
            }

            // Loop over readBuf and call fireChannelRead to handle each accepted connection (or other message)
            int size = readBuf.size();
            for (int i = 0; i < size; i ++) {
                readPending = false;
                // Core method 2
                pipeline.fireChannelRead(readBuf.get(i));
            }
            readBuf.clear();
            allocHandle.readComplete();
            pipeline.fireChannelReadComplete();

            if (exception != null) {
                closed = closeOnReadError(exception);

                pipeline.fireExceptionCaught(exception);
            }

            if (closed) {
                inputShutdown = true;
                if (isOpen()) {
                    close(voidPromise());
                }
            }
        } finally {
            // Check if there is a readPending which was not processed yet.
            // This could be for two reasons:
            // * The user called Channel.read() or ChannelHandlerContext.read() in channelRead(...) method
            // * The user called Channel.read() or ChannelHandlerContext.read() in channelReadComplete(...) method
            //
            // See https://github.com/netty/netty/issues/2254
            if (!readPending && !config.isAutoRead()) {
                removeReadOp();
            }
        }
    }
}
```

- ② First look at the doReadMessages method:

```java
// NioServerSocketChannel
@Override
protected int doReadMessages(List<Object> buf) throws Exception {
    // Through the SocketUtils helper, call accept() on the JDK ServerSocketChannel
    // wrapped by NioServerSocketChannel. This is plain NIO.
    SocketChannel ch = SocketUtils.accept(javaChannel());

    try {
        if (ch != null) {
            // Wrap the JDK SocketChannel in a NioSocketChannel and add it to buf
            buf.add(new NioSocketChannel(this, ch));
            // One message (connection) was read
            return 1;
        }
    } catch (Throwable t) {
        logger.warn("Failed to create a new channel from an accepted socket.", t);

        try {
            ch.close();
        } catch (Throwable t2) {
            logger.warn("Failed to close a socket.", t2);
        }
    }

    return 0;
}
```

- ③ Next, take a look at the fireChannelRead method:

```java
// readBuf is looped over, calling fireChannelRead on the server channel's pipeline,
// which starts executing channelRead on the handlers in that pipeline

// (1) DefaultChannelPipeline
@Override
public final ChannelPipeline fireChannelRead(Object msg) {
    AbstractChannelHandlerContext.invokeChannelRead(head, msg);
    return this;
}

// ↓↓↓↓↓
// (2) AbstractChannelHandlerContext
static void invokeChannelRead(final AbstractChannelHandlerContext next, Object msg) {
    final Object m = next.pipeline.touch(ObjectUtil.checkNotNull(msg, "msg"), next);
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) {
        next.invokeChannelRead(m);
    } else {
        executor.execute(new Runnable() {
            @Override
            public void run() {
                next.invokeChannelRead(m);
            }
        });
    }
}

// ↓↓↓↓↓
private void invokeChannelRead(Object msg) {
    if (invokeHandler()) {
        try {
            ((ChannelInboundHandler) handler()).channelRead(this, msg);
        } catch (Throwable t) {
            notifyHandlerException(t);
        }
    } else {
        fireChannelRead(msg);
    }
}

// ↓↓↓↓↓
// (3) public class ServerBootstrap extends AbstractBootstrap<ServerBootstrap, ServerChannel>
//     private static class ServerBootstrapAcceptor extends ChannelInboundHandlerAdapter
@Override
@SuppressWarnings("unchecked")
public void channelRead(ChannelHandlerContext ctx, Object msg) {
    // msg is cast to Channel; it is actually a NioSocketChannel
    final Channel child = (Channel) msg;
    // Add the childHandler set in our main method to the NioSocketChannel's pipeline
    child.pipeline().addLast(childHandler);
    // Apply the child options to the NioSocketChannel
    setChannelOptions(child, childOptions, logger);

    for (Entry<AttributeKey<?>, Object> e: childAttrs) {
        child.attr((AttributeKey<Object>) e.getKey()).set(e.getValue());
    }

    try {
        // Register the NioSocketChannel with an EventLoop from childGroup and add a listener
        childGroup.register(child).addListener(new ChannelFutureListener() {
            @Override
            public void operationComplete(ChannelFuture future) throws Exception {
                if (!future.isSuccess()) {
                    forceClose(child, future.cause());
                }
            }
        });
    } catch (Throwable t) {
        forceClose(child, t);
    }
}
```

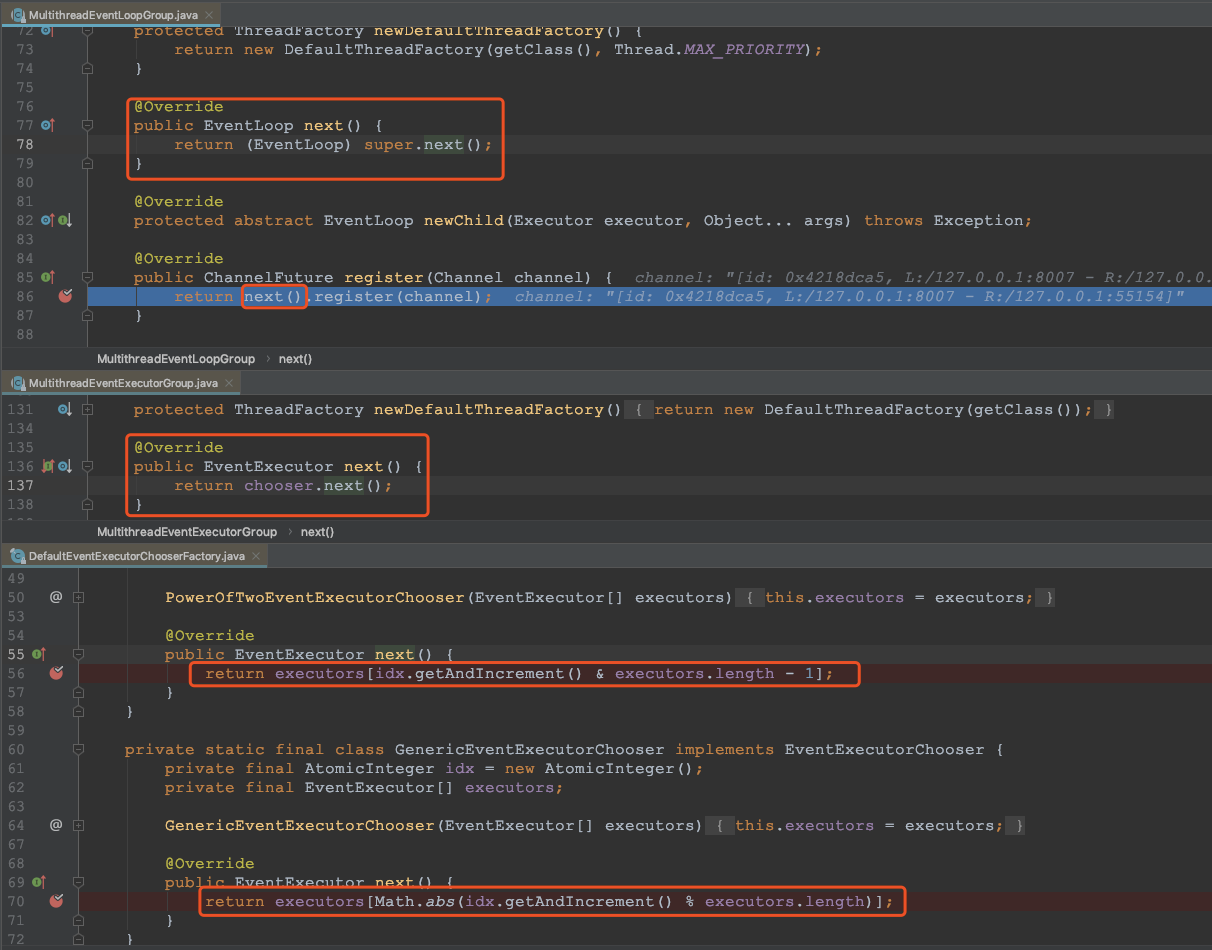

- ④ Step into the register method:

```java
// (1) MultithreadEventLoopGroup
@Override
public ChannelFuture register(Channel channel) {
    return next().register(channel);
}

// ↓↓↓↓↓
// (2) public abstract class SingleThreadEventLoop extends SingleThreadEventExecutor implements EventLoop
@Override
public ChannelFuture register(Channel channel) {
    return register(new DefaultChannelPromise(channel, this));
}

@Override
public ChannelFuture register(final ChannelPromise promise) {
    ObjectUtil.checkNotNull(promise, "promise");
    promise.channel().unsafe().register(this, promise);
    return promise;
}

// (3) protected abstract class AbstractUnsafe implements Unsafe
@Override
public final void register(EventLoop eventLoop, final ChannelPromise promise) {
    if (eventLoop == null) {
        throw new NullPointerException("eventLoop");
    }
    if (isRegistered()) {
        promise.setFailure(new IllegalStateException("registered to an event loop already"));
        return;
    }
    if (!isCompatible(eventLoop)) {
        promise.setFailure(
                new IllegalStateException("incompatible event loop type: " + eventLoop.getClass().getName()));
        return;
    }

    AbstractChannel.this.eventLoop = eventLoop;

    if (eventLoop.inEventLoop()) {
        // Core method
        register0(promise);
    } else {
        try {
            eventLoop.execute(new Runnable() {
                @Override
                public void run() {
                    // Core method
                    register0(promise);
                }
            });
        } catch (Throwable t) {
            logger.warn(
                    "Force-closing a channel whose registration task was not accepted by an event loop: {}",
                    AbstractChannel.this, t);
            closeForcibly();
            closeFuture.setClosed();
            safeSetFailure(promise, t);
        }
    }
}
```

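- next() method trace: MultithreadEventLoopGroup.next() delegates to MultithreadEventExecutorGroup.next(), which returns chooser.next(), i.e. one of the group's child EventLoops picked by its EventExecutorChooser.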
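The chooser is what spreads new channels across the worker EventLoops. The following is a minimal JDK-only sketch, assuming the two index strategies of Netty 4.1's DefaultEventExecutorChooserFactory: a bit mask when the number of EventLoops is a power of two, and a modulo otherwise. Plain strings stand in for EventLoop instances, and the class and method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical sketch of the two chooser strategies; strings stand in for EventLoops
public class ChooserSketch {
    // Power-of-two case: a bit mask replaces the (slower) modulo
    static final class PowerOfTwoChooser {
        private final AtomicInteger idx = new AtomicInteger();
        private final String[] executors;
        PowerOfTwoChooser(String[] executors) { this.executors = executors; }
        String next() { return executors[idx.getAndIncrement() & executors.length - 1]; }
    }

    // General case: modulo; Math.abs guards against a negative index after int overflow
    static final class GenericChooser {
        private final AtomicInteger idx = new AtomicInteger();
        private final String[] executors;
        GenericChooser(String[] executors) { this.executors = executors; }
        String next() { return executors[Math.abs(idx.getAndIncrement() % executors.length)]; }
    }

    static boolean isPowerOfTwo(int val) {
        return (val & -val) == val;
    }

    // Pick the strategy from the number of executors
    static Supplier<String> newChooser(String[] executors) {
        if (isPowerOfTwo(executors.length)) {
            return new PowerOfTwoChooser(executors)::next;
        }
        return new GenericChooser(executors)::next;
    }

    public static void main(String[] args) {
        Supplier<String> four = newChooser(new String[] {"loop-0", "loop-1", "loop-2", "loop-3"});
        Supplier<String> three = newChooser(new String[] {"loop-0", "loop-1", "loop-2"});
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 5; i++) {
            sb.append(four.get()).append(' ');
        }
        System.out.println(sb.toString().trim()); // loop-0 loop-1 loop-2 loop-3 loop-0
        sb.setLength(0);
        for (int i = 0; i < 4; i++) {
            sb.append(three.get()).append(' ');
        }
        System.out.println(sb.toString().trim()); // loop-0 loop-1 loop-2 loop-0
    }
}
```

Both strategies are plain round-robin; the mask is only an optimization for power-of-two group sizes.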

- ⑤ Finally, doBeginRead, a method of the AbstractNioChannel class, is called. By the time execution reaches this point, the connection to the client has been established, and the channel can start listening for read events:

```java
// AbstractNioChannel
@Override
protected void doBeginRead() throws Exception {
    // Channel.read() or ChannelHandlerContext.read() was called
    final SelectionKey selectionKey = this.selectionKey;
    if (!selectionKey.isValid()) {
        return;
    }

    readPending = true;

    final int interestOps = selectionKey.interestOps();
    // If the read interest bit is not set yet, add it to the key's interest set
    if ((interestOps & readInterestOp) == 0) {
        selectionKey.interestOps(interestOps | readInterestOp);
    }
}
```
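The interest-set update in doBeginRead is a plain bit operation. The small sketch below isolates it as a pure function, using the real java.nio.channels.SelectionKey constants; the class and method names are hypothetical. For a NioSocketChannel, readInterestOp is OP_READ (1); for a NioServerSocketChannel it is OP_ACCEPT (16).

```java
import java.nio.channels.SelectionKey;

// Hypothetical helper isolating the bit check performed by doBeginRead
public class InterestOpsDemo {
    // Add readInterestOp to the interest set only if it is not already present
    static int addInterest(int interestOps, int readInterestOp) {
        if ((interestOps & readInterestOp) == 0) {
            return interestOps | readInterestOp;
        }
        return interestOps;
    }

    public static void main(String[] args) {
        // A freshly registered channel starts with an interest set of 0
        int ops = addInterest(0, SelectionKey.OP_READ);
        System.out.println(ops);                                       // 1
        // Calling it again leaves the set unchanged
        System.out.println(addInterest(ops, SelectionKey.OP_READ));    // 1
        // A server channel uses OP_ACCEPT instead
        System.out.println(addInterest(0, SelectionKey.OP_ACCEPT));    // 16
    }
}
```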

Netty's request acceptance process

- Overall process: accept the connection ==> create a new NioSocketChannel ==> register it with a worker EventLoop ==> register the Read event with the selector.

- ① The server's event loop polls for the Accept event and, once it gets one, calls the read method of unsafe. This unsafe is an inner class of the server channel (NioServerSocketChannel), and its read method consists of two parts: doReadMessages and pipeline.fireChannelRead.

- ② doReadMessages creates a NioSocketChannel object that wraps the JDK NIO client channel (SocketChannel). As when the NioServerSocketChannel was created, constructing it also creates its pipeline, unsafe, and config.

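- ③ pipeline.fireChannelRead then passes each new channel to ServerBootstrapAcceptor, which registers it with an EventLoop in the workerGroup selected by the chooser. The channel is first registered with the selector with interest ops 0, meaning registration succeeded but no read event (OP_READ = 1) is registered yet; OP_READ is added later in doBeginRead.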

Source code analysis of Pipeline, Handler and HandlerContext creation

- ChannelPipeline, ChannelHandler and ChannelHandlerContext are core components of Netty. Let's analyze from the source code how Netty designs these three components, how they are created, and how they work together.

Relationship among the three

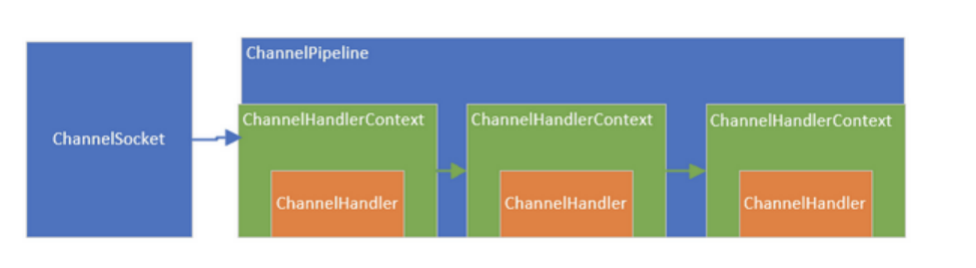

- Every time the ServerSocket accepts a new connection, a Socket is created for the corresponding client.

- Each newly created Socket is assigned a new ChannelPipeline.

- Each ChannelPipeline contains multiple ChannelHandlerContext (hereinafter referred to as Context).

- Together, they form a doubly linked list. These contexts wrap the ChannelHandlers (hereinafter referred to as handlers) that we added by calling the addLast method.

- A channel (socket) and its ChannelPipeline have a one-to-one relationship, the contexts inside the pipeline form a linked list, and a Context is only a wrapper around a handler.

- When a request comes in, it enters the pipeline of the corresponding Socket and passes through the pipeline's handlers in turn. This is the filter pattern from design patterns.

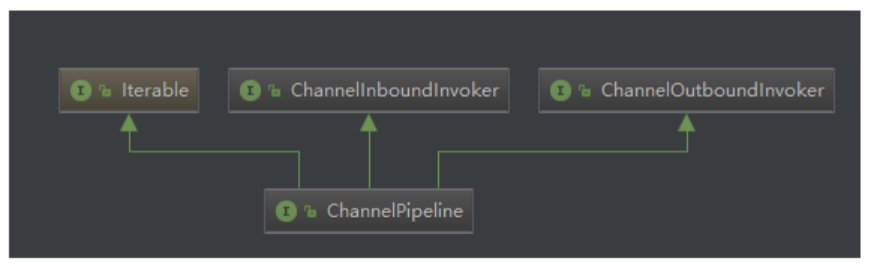

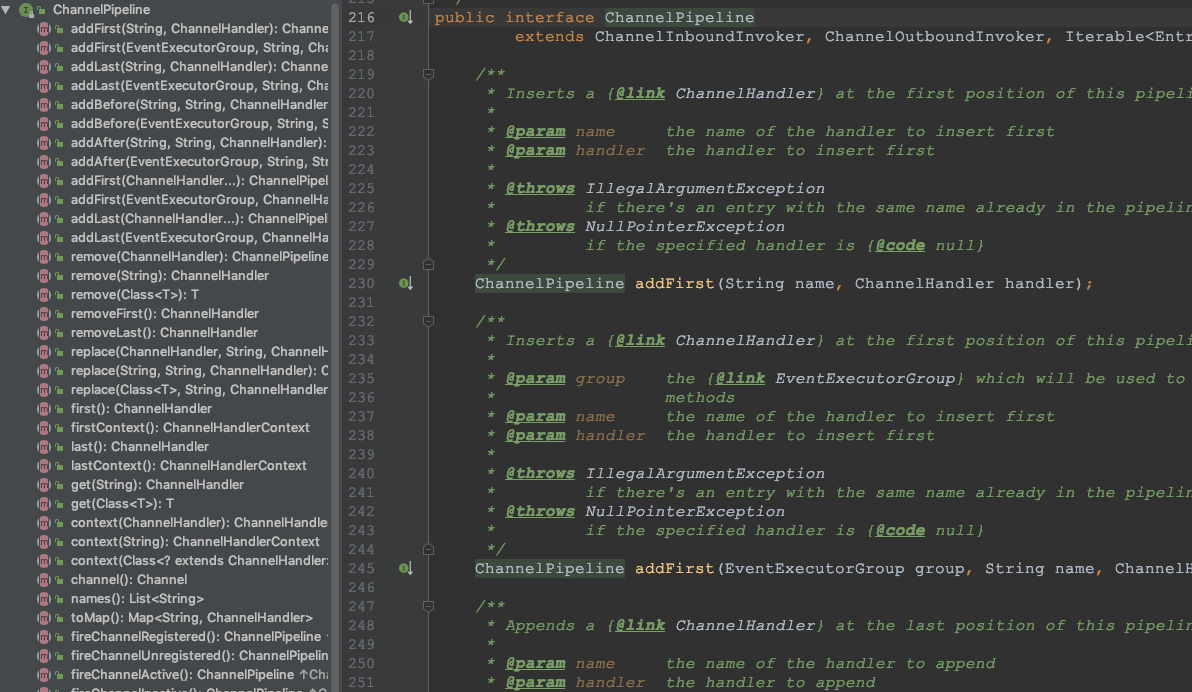

Function and design of ChannelPipeline

- Interface design of pipeline:

- Some methods:

- The interface extends ChannelInboundInvoker, ChannelOutboundInvoker and Iterable, which means it can invoke both inbound and outbound methods and can also iterate over its internal linked list. Most of its representative methods insert, add, remove, or replace handlers in the list, much like a LinkedList. It can also return the Channel (socket) it belongs to.

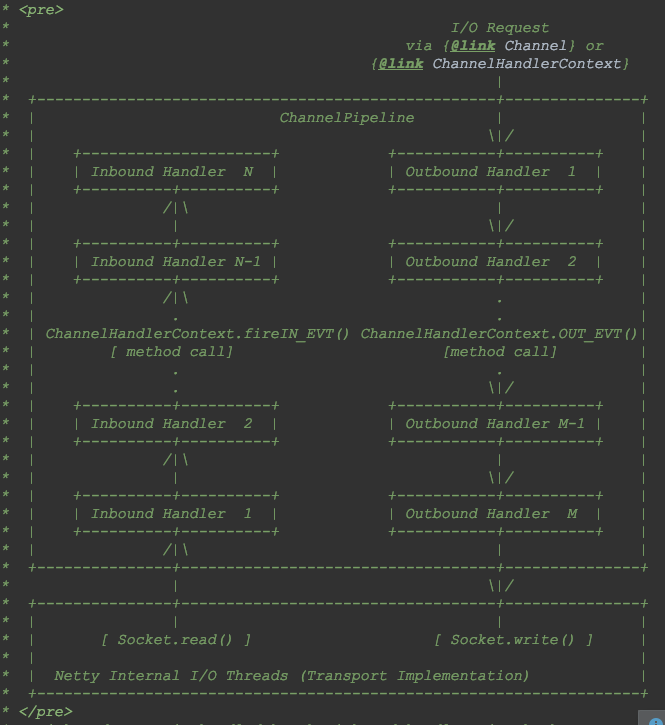

- The Javadoc of the ChannelPipeline interface provides a diagram:

Explanation of the figure above:

- This is a list of handlers that handle or intercept inbound and outbound events. The pipeline implements an advanced form of the Intercepting Filter pattern, giving users full control over how an event is handled and how the handlers in the pipeline interact with each other.

- The figure above shows how handlers in a pipeline typically process I/O events. An I/O event is handled by either an inbound handler or an outbound handler, and is forwarded to its closest handler by calling the event propagation methods of ChannelHandlerContext, such as ChannelHandlerContext.fireChannelRead.

- Inbound events are handled by inbound handlers in the bottom-up direction, as shown. An inbound handler usually processes the inbound data generated by the I/O thread at the bottom of the figure. Inbound data is usually read from the remote peer with an actual input operation such as SocketChannel.read(ByteBuffer).

- Generally, a pipeline has multiple handlers. For example, a typical server has the following handlers in each channel's pipeline: a protocol decoder (converts binary data to Java objects), a protocol encoder (converts Java objects to binary data), and a business logic handler (performs the actual business logic, such as database access).

- Your business logic must not block the I/O thread, because that slows down I/O and hurts the performance of the whole Netty program. If the business logic is fast, it can run in the I/O thread; otherwise, execute it asynchronously, or specify a thread pool when adding the handler, for example:

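```java
// Thread pool for the business handler (as in the ChannelPipeline Javadoc example)
static final EventExecutorGroup group = new DefaultEventExecutorGroup(16);

// MyBusinessLogicHandler's methods run on threads from group instead of the I/O thread,
// so a slow task does not block I/O
pipeline.addLast(group, "handler", new MyBusinessLogicHandler());
```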
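The handoff works because each context checks whether the current thread belongs to its executor, the same inEventLoop() test seen in invokeChannelRead above. Below is a JDK-only sketch of that check, assuming a single-threaded ExecutorService as a stand-in for the EventExecutorGroup; all names are hypothetical and this is not Netty code.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical model of "run inline if on the handler's executor, otherwise hand off"
public class OffloadDemo {
    // The business executor's thread, captured by its thread factory
    static final AtomicReference<Thread> businessThread = new AtomicReference<>();
    static final ExecutorService business = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "business-1");
        t.setDaemon(true); // lets the JVM exit without an explicit shutdown
        businessThread.set(t);
        return t;
    });

    // Stand-in for EventExecutor.inEventLoop()
    static boolean inBusinessThread() {
        return Thread.currentThread() == businessThread.get();
    }

    // Same shape as invokeChannelRead: inline on the right thread, otherwise submit and return at once
    static CompletableFuture<String> invokeChannelRead(String msg) {
        if (inBusinessThread()) {
            return CompletableFuture.completedFuture(handle(msg));
        }
        return CompletableFuture.supplyAsync(() -> handle(msg), business);
    }

    // Hypothetical slow business logic
    static String handle(String msg) {
        return msg + " on " + Thread.currentThread().getName();
    }

    public static void main(String[] args) {
        // main plays the I/O thread: invokeChannelRead returns without running handle() itself.
        // join() is only used here to print the result.
        System.out.println(invokeChannelRead("req-1").join()); // req-1 on business-1
    }
}
```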

Function and design of ChannelHandler

- Source code:

```java
public interface ChannelHandler {
    // Called after the ChannelHandler has been added to a pipeline
    void handlerAdded(ChannelHandlerContext ctx) throws Exception;

    // Called after the ChannelHandler has been removed from a pipeline
    void handlerRemoved(ChannelHandlerContext ctx) throws Exception;

    // Called when an exception is thrown during processing
    @Deprecated
    void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception;

    // Marks a handler instance that can be added to more than one pipeline
    @Inherited
    @Documented
    @Target(ElementType.TYPE)
    @Retention(RetentionPolicy.RUNTIME)
    @interface Sharable {
        // no value
    }
}
```

- A ChannelHandler processes or intercepts I/O events and forwards them to the next handler. Because handling differs between the inbound and outbound directions, Netty defines two sub-interfaces of ChannelHandler: ChannelInboundHandler and ChannelOutboundHandler.

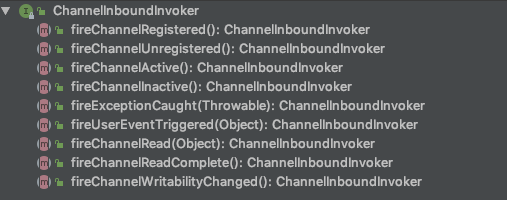

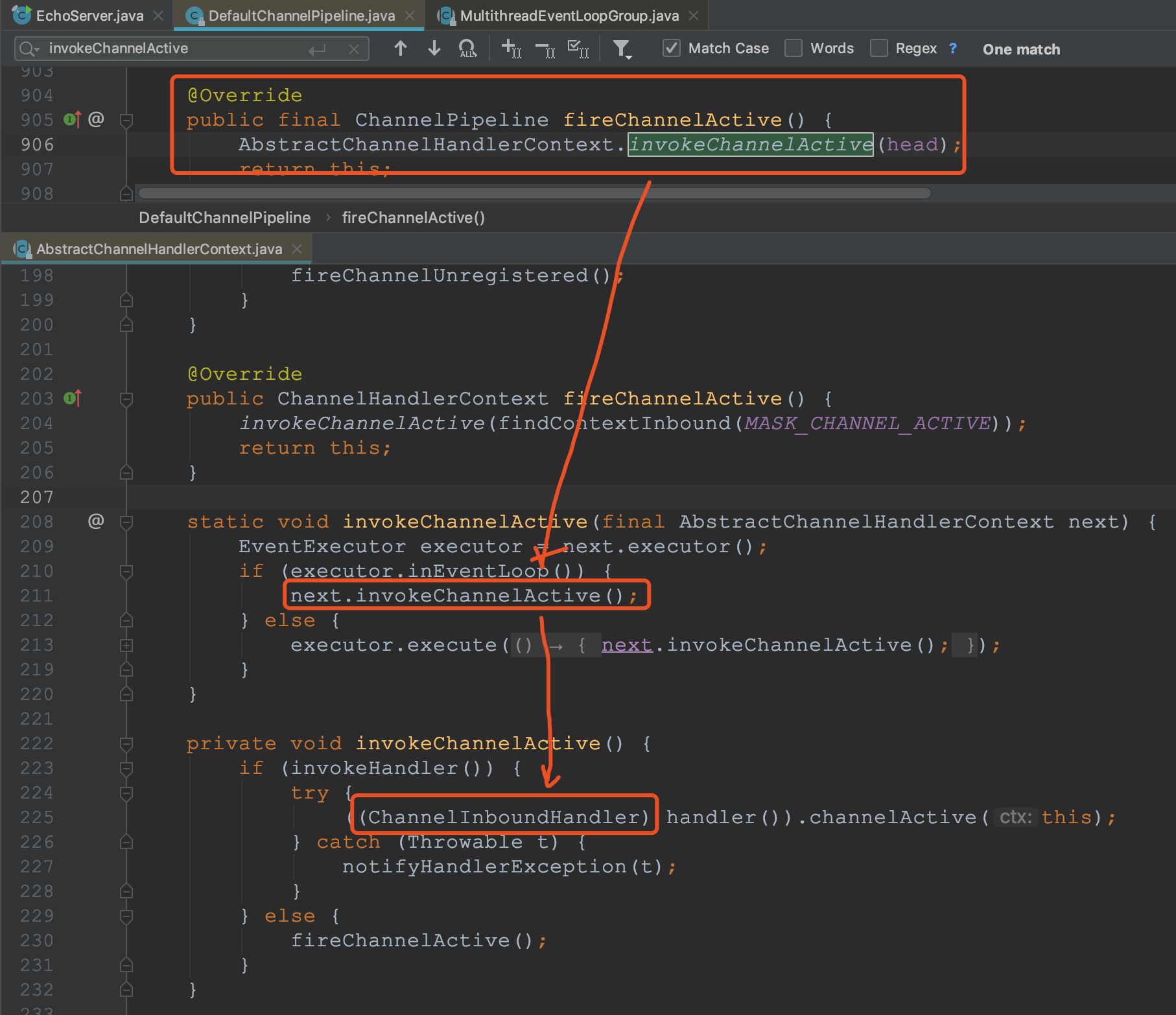

ChannelInboundHandler inbound event interface

- Programmers override the methods for the events they care about and put their business logic there, because when such an event occurs, Netty calls back the corresponding method. For example:

```java
// Called when the Channel becomes active
void channelActive(ChannelHandlerContext ctx) throws Exception;

// Called when data is read from the Channel
void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception;
```

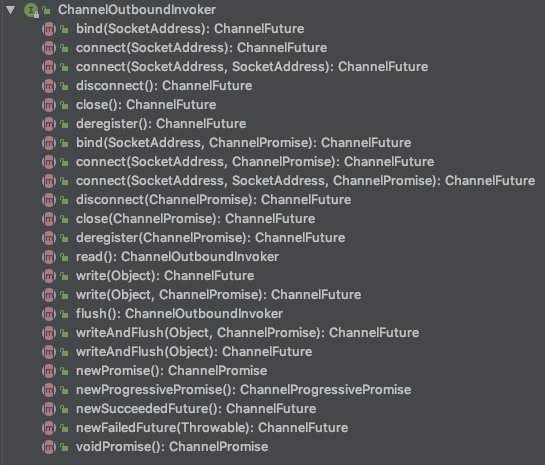

ChannelOutboundHandler outbound event interface

- Outbound operations are operations such as binding, connecting, writing data, and closing. For example:

```java
// Called when a request is made to bind the Channel to a local address
void bind(ChannelHandlerContext ctx, SocketAddress localAddress, ChannelPromise promise) throws Exception;

// Called when a request is made to close the Channel
void close(ChannelHandlerContext ctx, ChannelPromise promise) throws Exception;
```

ChannelDuplexHandler handles outbound and inbound events

- ChannelDuplexHandler implements the inbound interface indirectly (it extends ChannelInboundHandlerAdapter) and the outbound interface directly. It is a general-purpose class that can handle both inbound and outbound events.

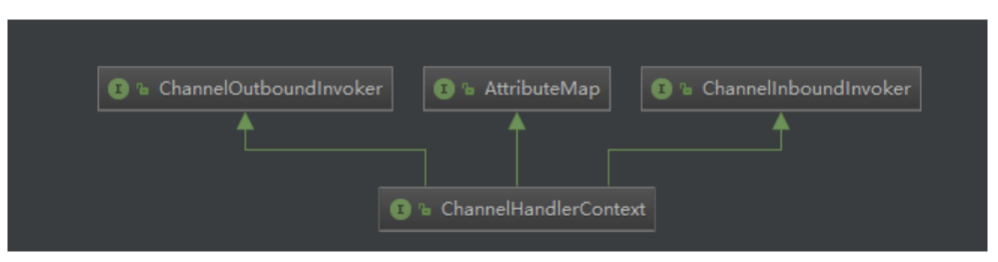

Function and design of ChannelHandlerContext

- ChannelHandlerContext extends both the outbound invoker interface (ChannelOutboundInvoker) and the inbound invoker interface (ChannelInboundInvoker):

```java
public interface ChannelHandlerContext extends AttributeMap, ChannelInboundInvoker, ChannelOutboundInvoker
```

- Part of the ChannelOutboundInvoker source code:

- Part of the ChannelInboundInvoker source code:

- ChannelOutboundInvoker and ChannelInboundInvoker are invokers for outbound and inbound methods respectively. They add a layer around the inbound or outbound handler, so that specific operations can be performed before and after the handler method is called.

- ChannelHandlerContext not only inherits the methods of these two interfaces but also defines its own. With them you can obtain the context's channel, executor, handler, pipeline, and memory allocator, and check whether the associated handler has been removed.

- In short, the Context wraps everything related to a handler, so that the handler can be operated on conveniently within the pipeline.

ChannelPipeline | ChannelHandler | ChannelHandlerContext creation process

The creation process is divided into three steps:

- When any channel (socket) is created, a pipeline is created for it.

- When the user or the system calls one of the pipeline's add* methods to add a handler, a Context is created to wrap that handler.

- These contexts form a doubly linked list in the pipeline.

Create Pipeline when SocketChannel is created

```java
// AbstractChannel
protected AbstractChannel(Channel parent) {
    this.parent = parent;
    id = newId();
    unsafe = newUnsafe();
    pipeline = newChannelPipeline();
}

// ↓↓↓↓↓
protected DefaultChannelPipeline newChannelPipeline() {
    return new DefaultChannelPipeline(this);
}

// ↓↓↓↓↓
// DefaultChannelPipeline
protected DefaultChannelPipeline(Channel channel) {
    // Keep the channel so the pipeline can operate on it
    this.channel = ObjectUtil.checkNotNull(channel, "channel");
    // Create a future and a promise used for asynchronous callbacks
    succeededFuture = new SucceededChannelFuture(channel, null);
    voidPromise =  new VoidChannelPromise(channel, true);

    // Create the TailContext (inbound) and the HeadContext (both inbound and outbound).
    // These two contexts are very important: every event in the pipeline flows through them
    tail = new TailContext(this);
    head = new HeadContext(this);

    // Link the two contexts to form a doubly linked list
    head.next = tail;
    tail.prev = head;
}
```

Create a Context when an add* method adds a handler

```java
// DefaultChannelPipeline
@Override
public final ChannelPipeline addLast(EventExecutorGroup executor, ChannelHandler... handlers) {
    if (handlers == null) {
        throw new NullPointerException("handlers");
    }

    for (ChannelHandler h: handlers) {
        if (h == null) {
            break;
        }
        addLast(executor, null, h);
    }

    return this;
}

// ↓↓↓↓↓
@Override
public final ChannelPipeline addLast(EventExecutorGroup group, String name, ChannelHandler handler) {
    final AbstractChannelHandlerContext newCtx;
    // Parameters: the thread pool (group), the name (null here), and the handler passed in by us
    // or by the system. Netty synchronizes this block to avoid concurrency problems.
    synchronized (this) {
        // Check whether the handler is shared. If it is not @Sharable and has already been
        // added to another pipeline, an exception is thrown
        checkMultiplicity(handler);

        // Every added handler gets its own associated Context;
        // addLast0 then appends the Context to the linked list
        newCtx = newContext(group, filterName(name, handler), handler);

        addLast0(newCtx);

        // If the registered is false it means that the channel was not registered on an eventLoop yet.
        // In this case we add the context to the pipeline and add a task that will call
        // ChannelHandler.handlerAdded(...) once the channel is registered.
        if (!registered) {
            // The channel is not registered with a selector yet: queue a pending task on the pipeline
            newCtx.setAddPending();
            callHandlerCallbackLater(newCtx, true);
            return this;
        }

        EventExecutor executor = newCtx.executor();
        if (!executor.inEventLoop()) {
            callHandlerAddedInEventLoop(newCtx, executor);
            return this;
        }
    }
    // Once registered, callHandlerAdded0 invokes the handler's handlerAdded method
    // (a no-op by default; users can override it)
    callHandlerAdded0(newCtx);
    return this;
}
```

Pipeline Handler HandlerContext creation process combing

- Every time a channel (socket) is created, a pipeline bound to it is created; the relationship is one-to-one. Creating the pipeline also creates a head node and a tail node, which form the initial linked list.

- When the pipeline's addLast method is called, a Context will be created according to the given handler, and then the Context will be inserted at the end of the list (before the tail).

- Each Context wraps a handler, and the contexts form a doubly linked list in the pipeline. Inbound events propagate starting from the head node; outbound operations propagate starting from the tail node.
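The list structure described above can be reproduced with a few lines of plain Java. This is a hypothetical, heavily simplified model (not Netty code): the head and tail sentinels mirror the DefaultChannelPipeline constructor, and addLast performs the same four pointer updates as Netty's addLast0, inserting just before tail.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the pipeline's doubly linked list of contexts
public class MiniPipeline {
    static final class Ctx {
        final String name;
        Ctx prev;
        Ctx next;
        Ctx(String name) { this.name = name; }
    }

    final Ctx head = new Ctx("head");
    final Ctx tail = new Ctx("tail");

    MiniPipeline() {
        // Initial list: head <-> tail
        head.next = tail;
        tail.prev = head;
    }

    // Insert the new context just before tail
    MiniPipeline addLast(String name) {
        Ctx newCtx = new Ctx(name);
        Ctx prev = tail.prev;
        newCtx.prev = prev;
        newCtx.next = tail;
        prev.next = newCtx;
        tail.prev = newCtx;
        return this;
    }

    // head -> tail: the inbound direction
    List<String> forward() {
        List<String> names = new ArrayList<>();
        for (Ctx c = head; c != null; c = c.next) {
            names.add(c.name);
        }
        return names;
    }

    // tail -> head: the outbound direction
    List<String> backward() {
        List<String> names = new ArrayList<>();
        for (Ctx c = tail; c != null; c = c.prev) {
            names.add(c.name);
        }
        return names;
    }

    public static void main(String[] args) {
        MiniPipeline p = new MiniPipeline().addLast("decoder").addLast("encoder").addLast("business");
        System.out.println(p.forward());  // [head, decoder, encoder, business, tail]
        System.out.println(p.backward()); // [tail, business, encoder, decoder, head]
    }
}
```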

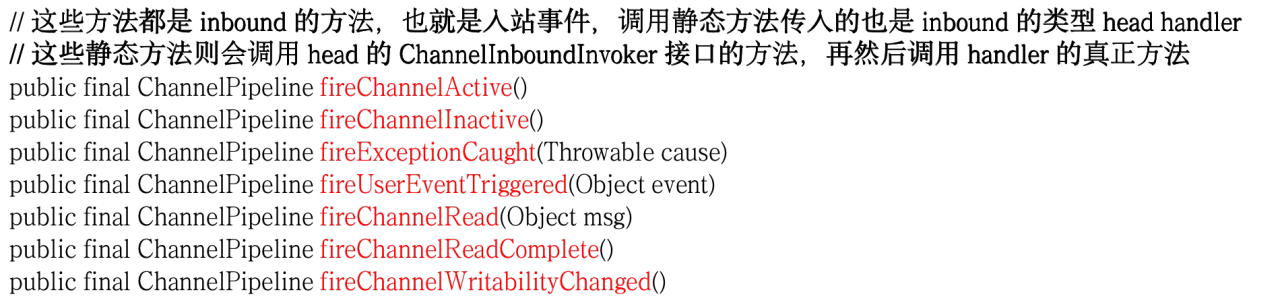

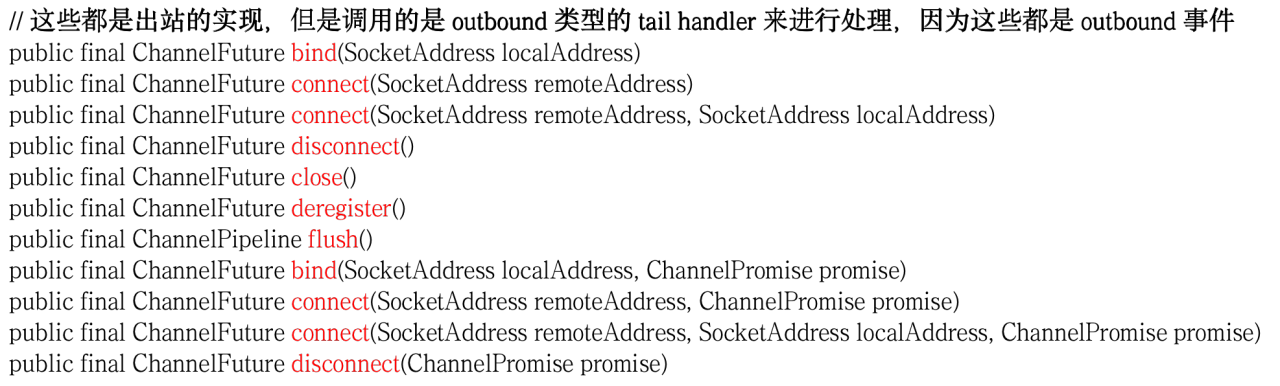

Source code analysis of channel pipeline scheduling handler

- How does ChannelPipeline call its internal handlers when a request comes in? Let's analyze it together.

- When a request comes in, the pipeline's corresponding method is called first. For inbound events, these methods start with fire, meaning they start the event flowing through the pipeline so that subsequent handlers can continue processing it.

Source code analysis

- Entry point of the analysis: DefaultChannelPipeline

- Take the public final ChannelPipeline fireChannelActive() method as an example to trace the source code

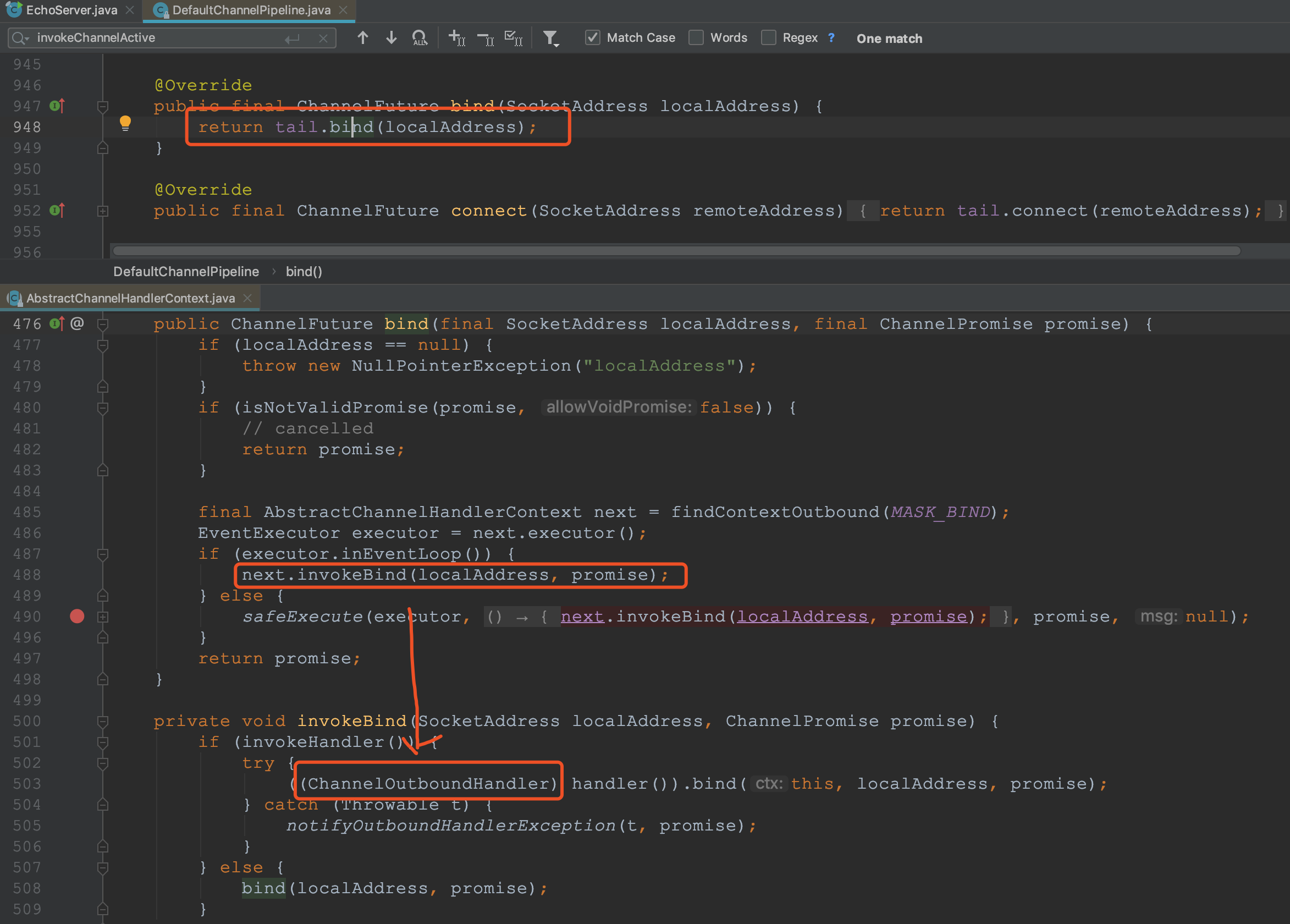

- Take the public final ChannelFuture bind(SocketAddress localAddress) method as an example to trace the source code

- Outbound operations start from tail and inbound events start from head. Outbound data is written from the inside out: starting at tail ensures that every handler in front of it (for example, an encoder) gets a chance to process the data and none is skipped. Inbound data, by contrast, enters at head and moves inward, so that later handlers can process the input (for example, after decoding). Therefore, although head also implements the outbound interface, outbound operations do not start from head; head is where they end, handing the operation to unsafe.

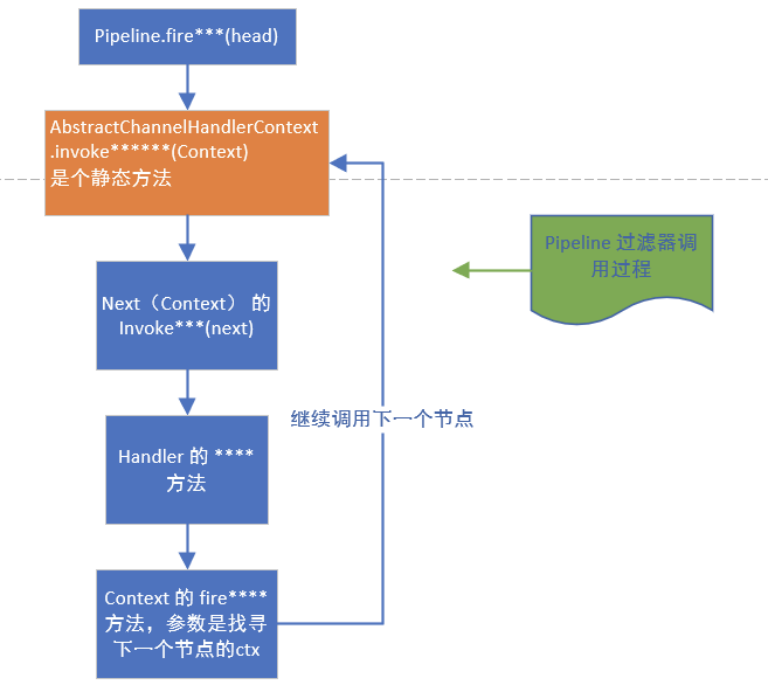

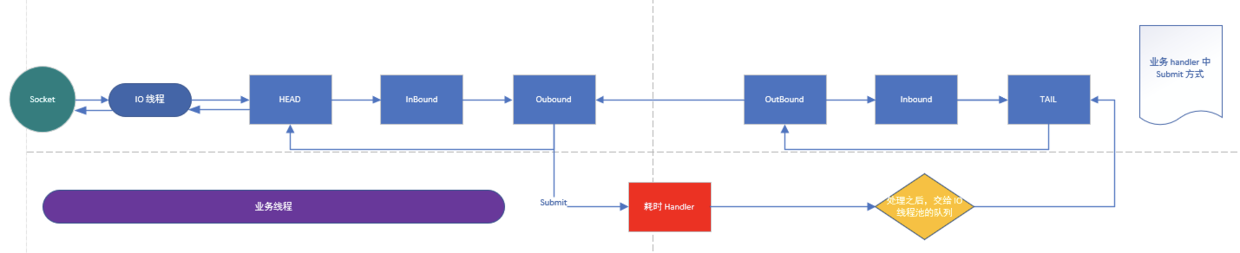

- The call flow is shown in the figure:

- ① The pipeline first calls the Context's static invokeXXX method (for example, AbstractChannelHandlerContext.invokeChannelRead(head, msg)), passing in a Context.

- ② The static method then calls the Context's instance invoke method, which calls the real XXX method of the handler wrapped by that Context. After the call, if the event needs to continue propagating, the Context's fireXXX method is called (for example, ctx.fireChannelRead(msg)), which finds the next Context and repeats the process.

ChannelPipeline scheduling handler combing

- Each Context wraps a handler, and the contexts form a doubly linked list in the pipeline. Inbound events propagate starting from the head node; outbound operations propagate starting from the tail node.

- Between nodes, the fire series of methods in AbstractChannelHandlerContext finds the node after the current one (findContextInbound / findContextOutbound). Handler scheduling is thus completed in the form of a filter chain.
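To make the direction rules concrete, here is a hypothetical JDK-only model of the scheduling (not Netty code). It assumes, as in Netty 4.1.36, that each context is flagged inbound and/or outbound, that HeadContext is both and TailContext is inbound only, and that every handler forwards the event; findContextInbound and findContextOutbound skip contexts of the wrong direction.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model: which contexts an inbound read and an outbound write visit
public class MiniDispatch {
    static final class Ctx {
        final String name;
        final boolean inbound;
        final boolean outbound;
        Ctx prev;
        Ctx next;

        Ctx(String name, boolean inbound, boolean outbound) {
            this.name = name;
            this.inbound = inbound;
            this.outbound = outbound;
        }
    }

    // head handles both directions; tail is inbound only
    final Ctx head = new Ctx("head", true, true);
    final Ctx tail = new Ctx("tail", true, false);

    MiniDispatch() {
        head.next = tail;
        tail.prev = head;
    }

    MiniDispatch addLast(String name, boolean inbound, boolean outbound) {
        Ctx c = new Ctx(name, inbound, outbound);
        c.prev = tail.prev;
        c.next = tail;
        tail.prev.next = c;
        tail.prev = c;
        return this;
    }

    // Step forward until an inbound context is found
    static Ctx findContextInbound(Ctx ctx) {
        do {
            ctx = ctx.next;
        } while (!ctx.inbound);
        return ctx;
    }

    // Step backward until an outbound context is found
    static Ctx findContextOutbound(Ctx ctx) {
        do {
            ctx = ctx.prev;
        } while (!ctx.outbound);
        return ctx;
    }

    // Inbound: starts at head and moves toward tail through inbound contexts
    List<String> fireChannelRead() {
        List<String> visited = new ArrayList<>();
        Ctx c = head;
        visited.add(c.name);
        while (c != tail) {
            c = findContextInbound(c);
            visited.add(c.name);
        }
        return visited;
    }

    // Outbound: searches backward from tail; head is the last stop
    List<String> write() {
        List<String> visited = new ArrayList<>();
        Ctx c = tail;
        do {
            c = findContextOutbound(c);
            visited.add(c.name);
        } while (c != head);
        return visited;
    }

    public static void main(String[] args) {
        MiniDispatch p = new MiniDispatch()
                .addLast("decoder", true, false)
                .addLast("encoder", false, true)
                .addLast("business", true, false);
        System.out.println(p.fireChannelRead()); // [head, decoder, business, tail]
        System.out.println(p.write());           // [encoder, head]
    }
}
```

In real Netty a handler may also stop propagation by not calling ctx.fireChannelRead; the sketch simply assumes every handler forwards.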

Source code analysis of Netty heartbeat service

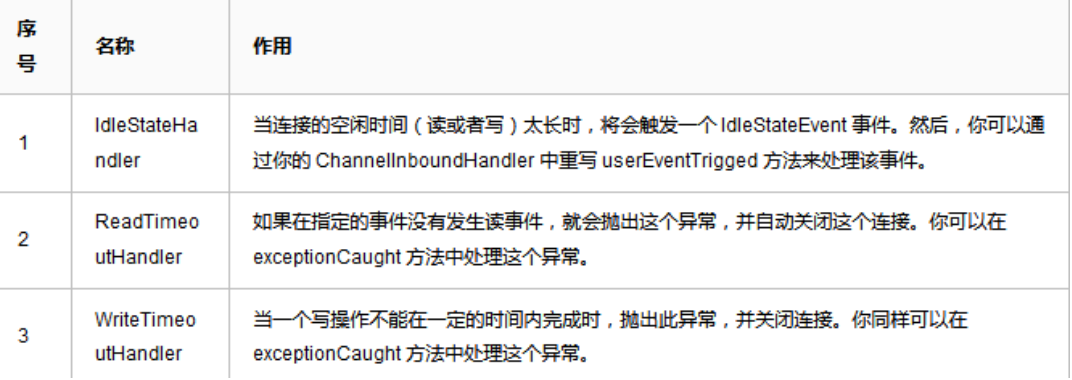

- As a network framework, Netty provides many features, such as encoding and decoding. It also provides a very important service: the heartbeat mechanism. Checking through heartbeats whether the peer is still alive is an essential feature of RPC frameworks.

- Netty provides three handlers for checking whether a connection is still valid: IdleStateHandler, ReadTimeoutHandler and WriteTimeoutHandler. ReadTimeoutHandler and WriteTimeoutHandler close the connection automatically and are part of exception handling, so they are only briefly introduced here. Let's focus on IdleStateHandler.

IdleStateHandler analysis

- Four attributes

```java
// Whether to take slow outbound writes into account. Defaults to false
private final boolean observeOutput;
// Read idle time; 0 disables the event
private final long readerIdleTimeNanos;
// Write idle time; 0 disables the event
private final long writerIdleTimeNanos;
// Read-or-write idle time; 0 disables the event
private final long allIdleTimeNanos;
```

- handlerAdded method: when the handler is added to the pipeline and the channel is already active and registered, initialize is called; otherwise initialization happens later in channelActive.

```java
@Override
public void handlerAdded(ChannelHandlerContext ctx) throws Exception {
    if (ctx.channel().isActive() && ctx.channel().isRegistered()) {
        // channelActive() event has been fired already, which means this.channelActive() will
        // not be invoked. We have to initialize here instead.
        initialize(ctx);
    } else {
        // channelActive() event has not been fired yet.  this.channelActive() will be invoked
        // and initialization will occur there.
    }
}

// ↓↓↓↓↓
private void initialize(ChannelHandlerContext ctx) {
    // Avoid the case where destroy() is called before scheduling timeouts.
    // See: https://github.com/netty/netty/issues/143
    switch (state) {
    case 1:
    case 2:
        return;
    }

    // Set state to 1 to prevent repeated initialization, and call initOutputChanged
    // to initialize the fields used to monitor outbound data
    state = 1;
    initOutputChanged(ctx);

    lastReadTime = lastWriteTime = ticksInNanos();
    // For each idle time greater than 0, a scheduled task is created. There are three task
    // classes, for read, write, and read-write idleness; their common parent class
    // (AbstractIdleTask) provides a template method
    if (readerIdleTimeNanos > 0) {
        readerIdleTimeout = schedule(ctx, new ReaderIdleTimeoutTask(ctx),
                readerIdleTimeNanos, TimeUnit.NANOSECONDS);
    }
    if (writerIdleTimeNanos > 0) {
        writerIdleTimeout = schedule(ctx, new WriterIdleTimeoutTask(ctx),
                writerIdleTimeNanos, TimeUnit.NANOSECONDS);
    }
    if (allIdleTimeNanos > 0) {
        allIdleTimeout = schedule(ctx, new AllIdleTimeoutTask(ctx),
                allIdleTimeNanos, TimeUnit.NANOSECONDS);
    }
}

// ↓↓↓↓↓
private abstract static class AbstractIdleTask implements Runnable {

    private final ChannelHandlerContext ctx;

    AbstractIdleTask(ChannelHandlerContext ctx) {
        this.ctx = ctx;
    }

    // If the channel is closed, do nothing; otherwise run the subclass's run(ctx)
    @Override
    public void run() {
        if (!ctx.channel().isOpen()) {
            return;
        }

        run(ctx);
    }

    protected abstract void run(ChannelHandlerContext ctx);
}
```

Analysis of the run method of the read event (that is, the run method of ReaderIdleTimeoutTask)

```java
// ReaderIdleTimeoutTask
@Override
protected void run(ChannelHandlerContext ctx) {
    // Start from the timeout configured by the user
    long nextDelay = readerIdleTimeNanos;
    // If no read is in progress (channelReadComplete has run), subtract the time elapsed since
    // the last read (recorded in channelReadComplete): nextDelay = timeout - (now - lastReadTime)
    if (!reading) {
        nextDelay -= ticksInNanos() - lastReadTime;
    }

    // nextDelay <= 0 means the reader has been idle too long: fire the event.
    // Otherwise, reschedule the task with the newly computed delay.
    if (nextDelay <= 0) {
        /*
         * Trigger logic: first re-schedule this task with the originally configured timeout;
         * the returned future can be used to cancel it. Then set firstReaderIdleEvent to false,
         * meaning the next idle event is no longer the first; channelRead sets it back to true.
         * In short, every read records a timestamp. When the scheduled task runs, it computes the
         * interval between now and the last read; if the interval exceeds the configured timeout,
         * the user's userEventTriggered method is triggered.
         */
        // Reader is idle - set a new timeout and notify the callback.
        readerIdleTimeout = schedule(ctx, this, readerIdleTimeNanos, TimeUnit.NANOSECONDS);

        boolean first = firstReaderIdleEvent;
        firstReaderIdleEvent = false;

        try {
            // Create a READER_IDLE IdleStateEvent and pass it on to the user's
            // userEventTriggered method
            IdleStateEvent event = newIdleStateEvent(IdleState.READER_IDLE, first);
            channelIdle(ctx, event);
        } catch (Throwable t) {
            ctx.fireExceptionCaught(t);
        }
    } else {
        // Read occurred before the timeout - set a new timeout with shorter delay.
        readerIdleTimeout = schedule(ctx, this, nextDelay, TimeUnit.NANOSECONDS);
    }
}
```
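The delay arithmetic is easier to see with concrete numbers. The helper below is a hypothetical extraction of the first lines of the run method above (not Netty code); it works in any time unit, and the example uses milliseconds for readability.

```java
// Hypothetical helper reproducing ReaderIdleTimeoutTask's delay computation
public class ReaderIdleMath {
    // Returns the delay until the next check; a value <= 0 means "reader idle: fire the event"
    static long nextDelay(long readerIdleTime, long now, long lastReadTime, boolean reading) {
        long nextDelay = readerIdleTime;
        if (!reading) {
            nextDelay -= now - lastReadTime;
        }
        return nextDelay;
    }

    public static void main(String[] args) {
        long timeout = 5_000; // 5 s
        // Last read 2 s ago: not idle, check again in 3 s
        System.out.println(nextDelay(timeout, 10_000, 8_000, false)); // 3000
        // Last read 6 s ago: idle, fire READER_IDLE and reschedule with the full 5 s
        System.out.println(nextDelay(timeout, 10_000, 4_000, false)); // -1000
        // A read is still in progress: not idle, wait the full timeout
        System.out.println(nextDelay(timeout, 10_000, 0, true));      // 5000
    }
}
```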

Analysis of the run method of the write event (that is, the run method of WriterIdleTimeoutTask)

- The run logic of the write task is basically the same as that of the read task. The differences are that there is no reading flag, and that it calls hasOutputChanged to detect slow outbound data.

```java
// WriterIdleTimeoutTask
@Override
protected void run(ChannelHandlerContext ctx) {

    long lastWriteTime = IdleStateHandler.this.lastWriteTime;
    long nextDelay = writerIdleTimeNanos - (ticksInNanos() - lastWriteTime);
    if (nextDelay <= 0) {
        // Writer is idle - set a new timeout and notify the callback.
        writerIdleTimeout = schedule(ctx, this, writerIdleTimeNanos, TimeUnit.NANOSECONDS);

        boolean first = firstWriterIdleEvent;
        firstWriterIdleEvent = false;

        try {
            // Slow outbound check: if the output is still changing, do not fire the idle event
            if (hasOutputChanged(ctx, first)) {
                return;
            }

            IdleStateEvent event = newIdleStateEvent(IdleState.WRITER_IDLE, first);
            channelIdle(ctx, event);
        } catch (Throwable t) {
            ctx.fireExceptionCaught(t);
        }
    } else {
        // Write occurred before the timeout - set a new timeout with shorter delay.
        writerIdleTimeout = schedule(ctx, this, nextDelay, TimeUnit.NANOSECONDS);
    }
}
```

Analysis of run method of all events (i.e. run method of AllIdleTimeoutTask)

- This task monitors all events: both reads and writes are recorded. Its logic is basically the same as that of the write task, except that the idle time is measured from the later of the last read and the last write.

```java
// AllIdleTimeoutTask
@Override
protected void run(ChannelHandlerContext ctx) {

    long nextDelay = allIdleTimeNanos;
    // !! Note
    if (!reading) {
        // Subtract the time elapsed since the most recent read or write (whichever is later).
        // A result <= 0 means the connection has timed out. Then, as with the write task,
        // check whether the output is merely slow
        nextDelay -= ticksInNanos() - Math.max(lastReadTime, lastWriteTime);
    }
    if (nextDelay <= 0) {
        // Both reader and writer are idle - set a new timeout and
        // notify the callback.
        allIdleTimeout = schedule(ctx, this, allIdleTimeNanos, TimeUnit.NANOSECONDS);

        boolean first = firstAllIdleEvent;
        firstAllIdleEvent = false;

        try {
            if (hasOutputChanged(ctx, first)) {
                return;
            }

            IdleStateEvent event = newIdleStateEvent(IdleState.ALL_IDLE, first);
            channelIdle(ctx, event);
        } catch (Throwable t) {
            ctx.fireExceptionCaught(t);
        }
    } else {
        // Either read or write occurred before the timeout - set a new
        // timeout with shorter delay.
        allIdleTimeout = schedule(ctx, this, nextDelay, TimeUnit.NANOSECONDS);
    }
}
```
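Because ALL_IDLE is measured from Math.max(lastReadTime, lastWriteTime), a connection can be reader-idle without being all-idle. The hypothetical model below (not Netty code) evaluates the three conditions at one instant; its IdleState enum only mirrors the names of Netty's, and it ignores the reading flag and observeOutput for simplicity.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of which idle events would fire at a given instant
public class IdleCheck {
    enum IdleState { READER_IDLE, WRITER_IDLE, ALL_IDLE }

    static Set<IdleState> idleStates(long readerIdle, long writerIdle, long allIdle,
                                     long now, long lastReadTime, long lastWriteTime) {
        Set<IdleState> result = EnumSet.noneOf(IdleState.class);
        // A timeout of 0 disables the corresponding check
        if (readerIdle > 0 && readerIdle - (now - lastReadTime) <= 0) {
            result.add(IdleState.READER_IDLE);
        }
        if (writerIdle > 0 && writerIdle - (now - lastWriteTime) <= 0) {
            result.add(IdleState.WRITER_IDLE);
        }
        // ALL_IDLE is measured from the later of the last read and the last write
        if (allIdle > 0 && allIdle - (now - Math.max(lastReadTime, lastWriteTime)) <= 0) {
            result.add(IdleState.ALL_IDLE);
        }
        return result;
    }

    public static void main(String[] args) {
        // 5 s timeouts; last read at t=2, last write at t=8, now t=10
        System.out.println(idleStates(5, 5, 5, 10, 2, 8)); // [READER_IDLE]
        // Nothing has happened since t=3
        System.out.println(idleStates(5, 5, 5, 10, 3, 3)); // [READER_IDLE, WRITER_IDLE, ALL_IDLE]
    }
}
```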

Summary of the heartbeat mechanism of Netty

- IdleStateHandler can be used to implement heartbeats. When there has been no read or write between the server and the client for longer than the given time, the userEventTriggered method of the user's handler is triggered. In that method, the user can try to send a message to the peer and close the connection if sending fails.

- IdleStateHandler is implemented with EventLoop scheduled tasks. Every read and write records a timestamp; when a scheduled task runs, it determines whether the connection is idle from the current time, the configured timeout, and the time of the last event.

- There are three internal scheduled tasks, corresponding to read, write, and read-write events. Usually it is enough for users to listen for read and write events.

- IdleStateHandler also considers an extreme case: the client receives data slowly, so that receiving a single batch of data takes longer than the configured idle time. The observeOutput constructor parameter determines whether Netty inspects the outbound buffer in this case.

- If outbound data is merely slow, Netty does not consider the connection idle and does not fire the idle event. The first idle event, however, is always fired, because at that point it is impossible to tell whether outbound is slow or truly idle. Of course, slow outbound can lead to an OOM, which is a bigger problem than idleness.

- Therefore, if your application runs out of memory (OOM) while write-idle events rarely occur (with observeOutput set to true), check whether outbound data is being sent too slowly.

- Another thing to note: ReadTimeoutHandler extends IdleStateHandler. When a reader-idle event occurs, it calls ctx.fireExceptionCaught with a ReadTimeoutException and then closes the socket.

- WriteTimeoutHandler, however, is not based on IdleStateHandler. Whenever write is called, it creates a scheduled task that uses the write's promise to decide whether the write finished in time. When the task runs at the specified time and the promise's isDone method returns false, the write has not completed, so it is treated as a timeout and an exception is fired. If the write completes first, the scheduled task is cancelled.
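The WriteTimeoutHandler approach described above can be modeled with the JDK alone. In this hypothetical sketch (not Netty code), a CompletableFuture stands in for the write's ChannelPromise and a ScheduledExecutorService stands in for the EventLoop's scheduler; instead of firing a WriteTimeoutException and closing the channel, it just sets a flag.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical model of a per-write timeout check
public class WriteTimeoutSketch {
    static final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread t = new Thread(r, "timer");
        t.setDaemon(true);
        return t;
    });

    // Returns a flag that becomes true if the write was not done when the timer fired
    static AtomicBoolean watchWrite(CompletableFuture<Void> promise, long timeoutMillis) {
        AtomicBoolean timedOut = new AtomicBoolean(false);
        ScheduledFuture<?> task = timer.schedule(() -> {
            // Not done yet means the write timed out
            // (WriteTimeoutHandler would fire WriteTimeoutException and close the channel)
            if (!promise.isDone()) {
                timedOut.set(true);
            }
        }, timeoutMillis, TimeUnit.MILLISECONDS);
        // When the write completes, cancel the pending check
        promise.whenComplete((v, err) -> task.cancel(false));
        return timedOut;
    }

    public static void main(String[] args) throws InterruptedException {
        CompletableFuture<Void> slowWrite = new CompletableFuture<>();
        CompletableFuture<Void> fastWrite = new CompletableFuture<>();
        AtomicBoolean slow = watchWrite(slowWrite, 50);
        AtomicBoolean fast = watchWrite(fastWrite, 50);
        fastWrite.complete(null); // finishes well before the 50 ms deadline
        Thread.sleep(200);
        System.out.println(slow.get()); // true
        System.out.println(fast.get()); // false
    }
}
```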

Source code analysis of Netty core component NioEventLoop

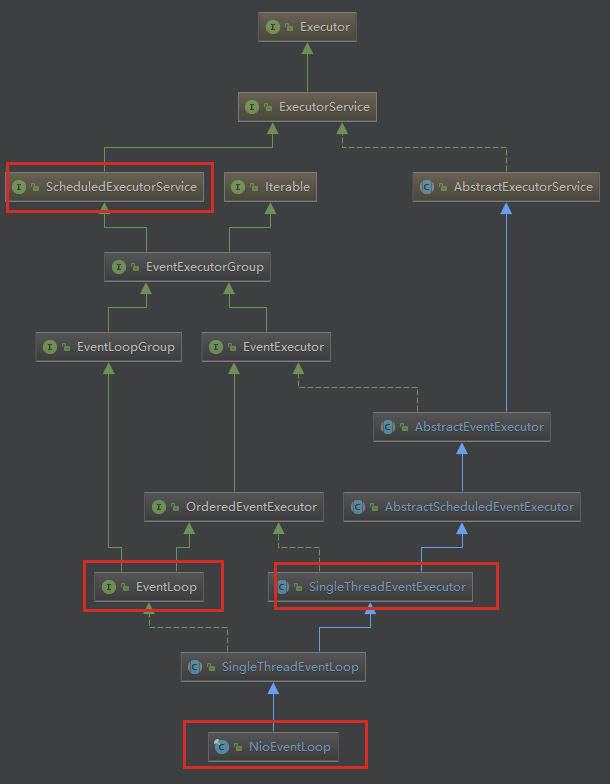

NioEventLoop inheritance relationship

- NioEventLoop implements the ScheduledExecutorService interface, the interface for scheduled tasks, so it can accept scheduled tasks.

- Extending SingleThreadEventExecutor indicates that it is a single-threaded executor.

- NioEventLoop is a single-threaded executor whose thread runs an infinite loop doing three things: selecting I/O events on its registered channels, processing those events, and running the tasks in its queue. Each NioEventLoop can be bound to multiple channels, while each channel is handled by only one NioEventLoop.

Source code analysis of the execute method of NioEventLoop

- ① In the SingleThreadEventExecutor class:

```java
// SingleThreadEventExecutor
@Override
public void execute(Runnable task) {
    if (task == null) {
        throw new NullPointerException("task");
    }

    // First, check whether the current thread is this NioEventLoop's thread
    boolean inEventLoop = inEventLoop();
    // Core method 1: add the task to the queue
    addTask(task);
    if (!inEventLoop) {
        // Core method 2: start the thread
        startThread();
        if (isShutdown()) {
            // The executor has shut down: if the task can be removed from the queue,
            // apply the rejection policy
            boolean reject = false;
            try {
                if (removeTask(task)) {
                    reject = true;
                }
            } catch (UnsupportedOperationException e) {
                // The task queue does not support removal so the best thing we can do is to just move on and
                // hope we will be able to pick-up the task before its completely terminated.
                // In worst case we will log on termination.
            }
            if (reject) {
                reject();
            }
        }
    }

    // If addTaskWakesUp is false and the task is not a NonWakeupRunnable, wake up the selector,
    // so a thread blocked in select() returns immediately
    if (!addTaskWakesUp && wakesUpForTask(task)) {
        wakeup(inEventLoop);
    }
}
```

- ② First look at the addTask method:

```java
protected void addTask(Runnable task) {
    if (task == null) {
        throw new NullPointerException("task");
    }
    if (!offerTask(task)) {
        reject(task);
    }
}

// ↓↓↓↓↓
final boolean offerTask(Runnable task) {
    if (isShutdown()) {
        reject();
    }
    return taskQueue.offer(task);
}
```

- ③ Next, the startThread method: when execute is called from a thread other than the NioEventLoop's own thread, it tries to start the loop thread by calling startThread.

```java
private void startThread() {
    // First check whether the thread has already been started, so that a NioEventLoop never owns more than one thread.
    // If not, use CAS to change state to ST_STARTED, then call doStartThread; if that fails, roll back.
    if (state == ST_NOT_STARTED) {
        if (STATE_UPDATER.compareAndSet(this, ST_NOT_STARTED, ST_STARTED)) {
            try {
                doStartThread();
            } catch (Throwable cause) {
                STATE_UPDATER.set(this, ST_NOT_STARTED);
                PlatformDependent.throwException(cause);
            }
        }
    }
}

// ↓ doStartThread, called by startThread

private void doStartThread() {
    assert thread == null;
    // Call execute on executor, the ThreadPerTaskExecutor created together with the EventLoopGroup.
    // Its execute method starts a new thread from the thread factory (a Netty FastThreadLocalThread) to run the Runnable.
    executor.execute(new Runnable() {
        @Override
        public void run() {
            // Record the new thread as this event loop's thread and re-apply any pending interrupt
            thread = Thread.currentThread();
            if (interrupted) {
                thread.interrupt();
            }

            boolean success = false;
            // Then set the last execution time
            updateLastExecutionTime();
            try {
                // !! Run the run method of the current NioEventLoop. It is an endless loop and the core of NioEventLoop
                SingleThreadEventExecutor.this.run();
                success = true;
            } catch (Throwable t) {
                logger.warn("Unexpected exception from an event executor: ", t);
            } finally {
                // Keep retrying the CAS until state is at least ST_SHUTTING_DOWN
                for (;;) {
                    int oldState = state;
                    if (oldState >= ST_SHUTTING_DOWN || STATE_UPDATER.compareAndSet(
                            SingleThreadEventExecutor.this, oldState, ST_SHUTTING_DOWN)) {
                        break;
                    }
                }

                // Check if confirmShutdown() was called at the end of the loop.
                if (success && gracefulShutdownStartTime == 0) {
                    if (logger.isErrorEnabled()) {
                        logger.error("Buggy " + EventExecutor.class.getSimpleName() + " implementation; " +
                                SingleThreadEventExecutor.class.getSimpleName() + ".confirmShutdown() must " +
                                "be called before run() implementation terminates.");
                    }
                }

                try {
                    // Run all remaining tasks and shutdown hooks.
                    // Keep calling confirmShutdown() until it reports that the shutdown can complete.
                    for (;;) {
                        if (confirmShutdown()) {
                            break;
                        }
                    }
                } finally {
                    try {
                        // Clean up (NioEventLoop closes its Selector here)
                        cleanup();
                    } finally {
                        // Lets remove all FastThreadLocals for the Thread as we are about to terminate and notify
                        // the future. The user may block on the future and once it unblocks the JVM may terminate
                        // and start unloading classes.
                        // See https://github.com/netty/netty/issues/6596.
                        FastThreadLocal.removeAll();

                        // Set the state to ST_TERMINATED and release threadLock
                        STATE_UPDATER.set(SingleThreadEventExecutor.this, ST_TERMINATED);
                        threadLock.release();
                        // If the task queue is not empty, log how many tasks were left unfinished
                        if (!taskQueue.isEmpty()) {
                            if (logger.isWarnEnabled()) {
                                logger.warn("An event executor terminated with " +
                                        "non-empty task queue (" + taskQueue.size() + ')');
                            }
                        }

                        // Complete terminationFuture so that anyone waiting on it is notified
                        terminationFuture.setSuccess(null);
                    }
                }
            }
        }
    });
}
```
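The CAS guard in startThread is what guarantees one thread per event loop even when many threads call execute at once. The JDK-only sketch below reproduces that idea under stated assumptions: `LazyStartLoop`, its state constants and its `threadsStarted()` counter are hypothetical, a plain daemon `Thread` replaces ThreadPerTaskExecutor, and there is no shutdown protocol.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of startThread()/doStartThread(); not Netty's API.
class LazyStartLoop {
    private static final int ST_NOT_STARTED = 1;
    private static final int ST_STARTED = 2;

    private final AtomicInteger state = new AtomicInteger(ST_NOT_STARTED);
    private final BlockingQueue<Runnable> taskQueue = new LinkedBlockingQueue<>();
    private final AtomicInteger threadsStarted = new AtomicInteger();
    private volatile Thread thread;

    boolean inEventLoop() {
        return Thread.currentThread() == thread;
    }

    void execute(Runnable task) {
        taskQueue.add(task);
        if (!inEventLoop()) {
            startThread();
        }
    }

    private void startThread() {
        // Cheap read first, then CAS: only the caller that wins the CAS starts a thread
        if (state.get() == ST_NOT_STARTED && state.compareAndSet(ST_NOT_STARTED, ST_STARTED)) {
            try {
                doStartThread();
            } catch (RuntimeException | Error cause) {
                // Roll back so that a later execute() can try again
                state.set(ST_NOT_STARTED);
                throw cause;
            }
        }
    }

    private void doStartThread() {
        Thread t = new Thread(() -> {
            thread = Thread.currentThread(); // record the loop thread, as doStartThread does
            runLoop();
        }, "lazy-loop");
        t.setDaemon(true); // sketch only: lets the JVM exit without a shutdown protocol
        threadsStarted.incrementAndGet();
        t.start();
    }

    private void runLoop() {
        for (;;) {
            try {
                taskQueue.take().run();
            } catch (InterruptedException e) {
                return;
            }
        }
    }

    int threadsStarted() {
        return threadsStarted.get();
    }
}
```

However many producers race on execute, only one of them wins the compareAndSet, so every task ends up running on the same "lazy-loop" thread.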

- ④ Now look at the core of the class, NioEventLoop's run method, which implements the abstract run method of SingleThreadEventExecutor:

```java
@Override
protected void run() {
    for (;;) {
        try {
            try {
                switch (selectStrategy.calculateStrategy(selectNowSupplier, hasTasks())) {
                case SelectStrategy.CONTINUE:
                    continue;

                case SelectStrategy.BUSY_WAIT:
                    // fall-through to SELECT since the busy-wait is not supported with NIO

                case SelectStrategy.SELECT:
                    select(wakenUp.getAndSet(false));

                    // ... (a long comment is omitted here)

                    if (wakenUp.get()) {
                        selector.wakeup();
                    }
                    // fall through
                default:
                }
            } catch (IOException e) {
                // If we receive an IOException here its because the Selector is messed up. Let's rebuild
                // the selector and retry. https://github.com/netty/netty/issues/8566
                rebuildSelector0();
                handleLoopException(e);
                continue;
            }

            cancelledKeys = 0;
            needsToSelectAgain = false;
            final int ioRatio = this.ioRatio;
            if (ioRatio == 100) {
                try {
                    processSelectedKeys();
                } finally {
                    // Ensure we always run tasks.
                    runAllTasks();
                }
            } else {
                final long ioStartTime = System.nanoTime();
                try {
                    processSelectedKeys();
                } finally {
                    // Ensure we always run tasks.
                    // Non-IO tasks get a time budget proportional to the time just spent on IO
                    final long ioTime = System.nanoTime() - ioStartTime;
                    runAllTasks(ioTime * (100 - ioRatio) / ioRatio);
                }
            }
        } catch (Throwable t) {
            handleLoopException(t);
        }
        // Always handle shutdown even if the loop processing threw an exception.
        try {
            if (isShuttingDown()) {
                closeAll();
                if (confirmShutdown()) {
                    return;
                }
            }
        } catch (Throwable t) {
            handleLoopException(t);
        }
    }
}
```

- As the code shows, each pass through the loop does three things: 1. select, to wait for the events of interest (calculateStrategy only chooses the blocking select when the task queue is empty; otherwise it calls selectNow so that queued tasks are not delayed); 2. processSelectedKeys, to handle the ready events; 3. runAllTasks, to run the tasks in the queue.
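The expression `ioTime * (100 - ioRatio) / ioRatio` decides how long runAllTasks may run after each IO phase. The small helper below isolates that arithmetic so it is easy to check; `IoRatioBudget` and `taskBudgetNanos` are hypothetical names, and returning `Long.MAX_VALUE` for `ioRatio == 100` is a convention of this sketch (run() instead calls runAllTasks() with no timeout). The range check mirrors the 0 < ioRatio <= 100 rule that setIoRatio enforces.

```java
// Hypothetical helper reproducing the ioRatio arithmetic in run(); not part of Netty's API.
final class IoRatioBudget {
    private IoRatioBudget() {}

    /** Nanoseconds that runAllTasks may spend after an IO phase that took ioTimeNanos. */
    static long taskBudgetNanos(long ioTimeNanos, int ioRatio) {
        if (ioRatio <= 0 || ioRatio > 100) {
            throw new IllegalArgumentException("ioRatio: " + ioRatio + " (expected: 0 < ioRatio <= 100)");
        }
        if (ioRatio == 100) {
            // Sketch convention: "no time limit", matching the runAllTasks() branch
            return Long.MAX_VALUE;
        }
        // Same formula as run(): the share of time given to tasks is (100 - ioRatio) : ioRatio
        return ioTimeNanos * (100 - ioRatio) / ioRatio;
    }
}
```

With 1 ms of IO, the default ioRatio of 50 gives tasks 1 ms, ioRatio 80 gives them 0.25 ms, and ioRatio 20 gives them 4 ms: lowering ioRatio gives non-IO tasks more time.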

- ⑤ Next, step into the select method to see how the loop waits for IO events without blocking for too long:

```java
private void select(boolean oldWakenUp) throws IOException {
    Selector selector = this.selector;
    try {
        int selectCnt = 0;
        long currentTimeNanos = System.nanoTime();
        // Deadline: when the next scheduled task is due, or 1 second from now if there is none
        long selectDeadLineNanos = currentTimeNanos + delayNanos(currentTimeNanos);

        for (;;) {
            // Remaining time in milliseconds; adding 500000 ns (0.5 ms) rounds to the nearest millisecond
            long timeoutMillis = (selectDeadLineNanos - currentTimeNanos + 500000L) / 1000000L;
            if (timeoutMillis <= 0) {
                if (selectCnt == 0) {
                    selector.selectNow();
                    selectCnt = 1;
                }
                break;
            }

            // If a task was submitted when wakenUp value was true, the task didn't get a chance to call
            // Selector#wakeup. So we need to check task queue again before executing select operation.
            // If we don't, the task might be pended until select operation was timed out.
            // It might be pended until idle timeout if IdleStateHandler existed in pipeline.
            if (hasTasks() && wakenUp.compareAndSet(false, true)) {
                selector.selectNow();
                selectCnt = 1;
                break;
            }

            // Block for at most timeoutMillis (about one second when no scheduled task is pending)
            int selectedKeys = selector.select(timeoutMillis);
            selectCnt ++;

            // Leave the loop if keys were selected, the selector was woken up by the user,
            // the task queue has a pending task, or a scheduled task is due
            if (selectedKeys != 0 || oldWakenUp || wakenUp.get() || hasTasks() || hasScheduledTasks()) {
                // - Selected something,
                // - waken up by user, or
                // - the task queue has a pending task.
                // - a scheduled task is ready for processing
                break;
            }
            if (Thread.interrupted()) {
                // Thread was interrupted so reset selected keys and break so we not run into a busy loop.
                // As this is most likely a bug in the handler of the user or it's client library we will
                // also log it.
                //
                // See https://github.com/netty/netty/issues/2426
                if (logger.isDebugEnabled()) {
                    logger.debug("Selector.select() returned prematurely because " +
                            "Thread.currentThread().interrupt() was called. Use " +
                            "NioEventLoop.shutdownGracefully() to shutdown the NioEventLoop.");
                }
                selectCnt = 1;
                break;
            }

            long time = System.nanoTime();
            if (time - TimeUnit.MILLISECONDS.toNanos(timeoutMillis) >= currentTimeNanos) {
                // timeoutMillis elapsed without anything selected.
                selectCnt = 1;
            } else if (SELECTOR_AUTO_REBUILD_THRESHOLD > 0 &&
                    selectCnt >= SELECTOR_AUTO_REBUILD_THRESHOLD) {
                // The code exists in an extra method to ensure the method is not too big to inline as this
                // branch is not very likely to get hit very frequently.
                selector = selectRebuildSelector(selectCnt);
                selectCnt = 1;
                break;
            }

            currentTimeNanos = time;
        }

        if (selectCnt > MIN_PREMATURE_SELECTOR_RETURNS) {
            if (logger.isDebugEnabled()) {
                logger.debug("Selector.select() returned prematurely {} times in a row for Selector {}.",
                        selectCnt - 1, selector);
            }
        }
    } catch (CancelledKeyException e) {
        if (logger.isDebugEnabled()) {
            logger.debug(CancelledKeyException.class.getSimpleName() + " raised by a Selector {} - JDK bug?",
                    selector, e);
        }
        // Harmless exception - log anyway
    }
}
```

- In short, select calls the selector's select method with a timeout: about one second by default or, if a scheduled task is pending, the time remaining until that task is due (the +500000L term adds 0.5 ms so the division rounds to the nearest millisecond; it does not add 0.5 seconds). When execute adds a task from another thread, the selector is woken up so that it does not stay blocked for too long. The selectCnt counter is Netty's workaround for the JDK epoll empty-poll bug: if select keeps returning early with nothing selected SELECTOR_AUTO_REBUILD_THRESHOLD times in a row (512 by default), the Selector is rebuilt.
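Because the "+ 0.5" in the timeout formula is easy to misread as half a second, here is a tiny helper that reproduces the arithmetic so it can be checked. `SelectTimeout`, `delayNanos(Long, long)` and the `null`-means-no-scheduled-task convention are hypothetical simplifications of delayNanos(currentTimeNanos), not Netty's API; the 1-second default mirrors the delay used when no scheduled task is pending.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical helpers reproducing the timeout arithmetic of NioEventLoop.select(); not Netty's API.
final class SelectTimeout {
    // Mirrors the 1-second default delay used when no scheduled task is pending
    static final long DEFAULT_DELAY_NANOS = TimeUnit.SECONDS.toNanos(1);

    private SelectTimeout() {}

    /** Simplified delayNanos(now): nanos until the next scheduled task (null = none), else 1 s. */
    static long delayNanos(Long nextDeadlineNanos, long nowNanos) {
        if (nextDeadlineNanos == null) {
            return DEFAULT_DELAY_NANOS;
        }
        return Math.max(0L, nextDeadlineNanos - nowNanos);
    }

    /** Same formula as select(): adding 500000 ns (0.5 ms) rounds to the nearest millisecond. */
    static long timeoutMillis(long selectDeadLineNanos, long currentTimeNanos) {
        return (selectDeadLineNanos - currentTimeNanos + 500000L) / 1000000L;
    }
}
```

With no scheduled task the timeout is 1000 ms. A task due in 2.4 ms gives a 2 ms timeout, one due in 2.6 ms gives 3 ms, and one due in 0.4 ms gives 0, which makes select() fall back to selectNow().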

A summary of the operation mechanism of the Netty core component NioEventLoop

- Every call to execute adds a task to the task queue. The first call also starts the event loop thread, which then executes the run method. The run method is the core of NioEventLoop: as the name says, it is a loop, and it keeps running until the event loop is shut down.

- Each iteration of the loop does three things:

- ① Call the selector's select method, which blocks for up to one second by default; if a scheduled task is pending, it blocks only until that task is due (rounded to the nearest millisecond). When execute adds a task from another thread, the selector is woken up so that it does not stay blocked for too long.

- ② When select returns, processSelectedKeys handles the selected keys, i.e. the ready IO events.

- ③ After processSelectedKeys finishes, runAllTasks runs the queued tasks with a time budget derived from ioRatio. By default (ioRatio = 50) IO work and non-IO tasks get the same amount of time. You can tune this for your application: if there are many non-IO tasks, lower ioRatio so that they get more time and do not pile up in the task queue.
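The wake-up in point ① relies on plain JDK behaviour: Selector.wakeup() makes a blocked select() return immediately. The runnable sketch below shows this with only the JDK; `WakeupDemo` and `measureWakeupLatencyMillis` are hypothetical names, and the `wakenUp` CAS is modelled loosely on NioEventLoop's flag, not copied from it.

```java
import java.io.IOException;
import java.nio.channels.Selector;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical demo: adding a task from another thread wakes a selector blocked in select().
final class WakeupDemo {
    private WakeupDemo() {}

    /** Returns how many milliseconds the loop thread stayed in select() before it was woken up. */
    static long measureWakeupLatencyMillis() throws IOException, InterruptedException {
        Selector selector = Selector.open();
        Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<>();
        AtomicBoolean wakenUp = new AtomicBoolean(false);
        long[] elapsedMillis = new long[1];

        Thread loop = new Thread(() -> {
            long start = System.nanoTime();
            try {
                // No channels are registered, so only wakeup() or the 5 s timeout can end this call
                selector.select(TimeUnit.SECONDS.toMillis(5));
            } catch (IOException e) {
                throw new RuntimeException(e);
            }
            elapsedMillis[0] = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
            // After select returns, run the queued tasks (runAllTasks in the real loop)
            Runnable task;
            while ((task = taskQueue.poll()) != null) {
                task.run();
            }
        }, "loop-thread");
        loop.start();

        Thread.sleep(100); // give the loop thread time to block in select()
        taskQueue.add(() -> System.out.println("task ran on " + Thread.currentThread().getName()));
        // The CAS ensures only the first submitter pays for the relatively costly wakeup() call
        if (wakenUp.compareAndSet(false, true)) {
            selector.wakeup();
        }
        loop.join();
        selector.close();
        return elapsedMillis[0];
    }
}
```

Without the wakeup() call the task would wait for the full 5-second timeout; with it, select() returns roughly 100 ms after it started. Per the Selector contract, a wakeup() that arrives before select() starts makes the next select() return immediately, so the demo does not depend on exact timing.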

Source code analysis of adding thread pool in handler and adding thread pool in Context

- Performing time-consuming or unpredictable operations in Netty, such as database queries or network requests, blocks the IO thread and seriously slows down Netty's socket processing.

- The solution is to hand time-consuming tasks to an asynchronous thread pool. There are two ways to add such a thread pool, and they behave quite differently.

- The first way is to submit the time-consuming work to a thread pool inside the handler.

- The second way is to bind a thread pool to the handler's Context when adding the handler to the pipeline.

Approach 1

- Submit the work asynchronously to a thread pool in EchoServerHandler's channelRead method:

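```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.DefaultEventLoopGroup;
import io.netty.channel.EventLoopGroup;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

import java.util.concurrent.Callable;

public class EchoServerHandler extends ChannelInboundHandlerAdapter {

    // Business thread pool (16 threads), shared by all instances of this handler
    static final EventLoopGroup group = new DefaultEventLoopGroup(16);

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        final Object msgCop = msg;
        final ChannelHandlerContext cxtCop = ctx;
        // Hand the time-consuming work to the business pool so the IO thread can return at once
        group.submit(new Callable<Object>() {
            @Override
            public Object call() throws Exception {
                ByteBuf buf = (ByteBuf) msgCop;
                try {
                    byte[] req = new byte[buf.readableBytes()];
                    buf.readBytes(req);
                    String body = new String(req, CharsetUtil.UTF_8);
                    // Simulate a slow operation such as a database call
                    Thread.sleep(10 * 1000);
                    System.err.println(body + " " + Thread.currentThread().getName());
                } finally {
                    // The inbound ByteBuf is reference-counted; release it once it has been read
                    ReferenceCountUtil.release(buf);
                }
                String reqString = "Hello i am server~~~";
                ByteBuf resp = Unpooled.copiedBuffer(reqString, CharsetUtil.UTF_8);
                // Called from a business thread, so the write is handed back to the IO thread
                cxtCop.writeAndFlush(resp);
                return null;
            }
        });
        System.out.println("go on ..");
    }
}
```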

- Processing flow:

- When the IO thread polls a socket event, it starts processing it. When processing reaches the time-consuming handler, the slow work is handed over to the business thread pool.

- When the slow task finishes and calls write (the code above uses the context's writeAndFlush; the pipeline's write methods end up in the same AbstractChannelHandlerContext.write), the write is handed back to the IO thread.

- Take a look at the write method of AbstractChannelHandlerContext:

```java
private void write(Object msg, boolean flush, ChannelPromise promise) {
    // ... omitted
    final AbstractChannelHandlerContext next = findContextOutbound(flush ?
            (MASK_WRITE | MASK_FLUSH) : MASK_WRITE);
    final Object m = pipeline.touch(msg, next);
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) {
        // Already on the next context's executor: write directly
        if (flush) {
            next.invokeWriteAndFlush(m, promise);
        } else {
            next.invokeWrite(m, promise);
        }
    } else {
        // Called from another thread (e.g. a business thread): wrap the write as a task
        final AbstractWriteTask task;
        if (flush) {
            task = WriteAndFlushTask.newInstance(next, m, promise);
        } else {
            task = WriteTask.newInstance(next, m, promise);
        }
        if (!safeExecute(executor, task, promise, m)) {
            // We failed to submit the AbstractWriteTask. We need to cancel it so we decrement the pending bytes
            // and put it back in the Recycler for re-use later.
            //
            // See https://github.com/netty/netty/issues/8343.
            task.cancel();
        }
    }
}
```

- When the executor of the next outbound context is not the current thread, the write is wrapped as a task (WriteTask or WriteAndFlushTask) and put into that executor's MPSC task queue; the IO thread runs it after it finishes its current IO work.

- You can verify this with a debugger (debug the server, and start the client in normal Run mode). When the handler submits its work with group.submit(new Callable() {...}), the write goes into safeExecute(executor, task, promise, m). If you remove that code and run the time-consuming business directly in channelRead, execution never reaches safeExecute(executor, task, promise, m).
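The round trip described above (IO thread, then business thread, then back to the IO thread for the write) can be reproduced with plain JDK executors. This is a sketch under stated assumptions, not Netty code: `HandoffSketch`, `runOnce` and the thread names are hypothetical, a single-thread executor stands in for the channel's EventLoop, and `ioThread.execute(...)` plays the role of safeExecute.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch of Approach 1 using plain JDK executors; not Netty code.
final class HandoffSketch {
    private static volatile Thread ioThreadRef;

    // One thread standing in for the channel's IO thread (its EventLoop)
    static final ExecutorService ioThread = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "io-thread");
        t.setDaemon(true);
        ioThreadRef = t;
        return t;
    });

    // Stands in for the business pool (DefaultEventLoopGroup(16) in the handler)
    static final ExecutorService businessPool = Executors.newFixedThreadPool(4, r -> {
        Thread t = new Thread(r, "biz-thread");
        t.setDaemon(true);
        return t;
    });

    private HandoffSketch() {}

    static boolean inEventLoop() {
        return Thread.currentThread() == ioThreadRef;
    }

    /** Like AbstractChannelHandlerContext.write: write inline on the IO thread, otherwise enqueue a task. */
    static void write(String msg, List<String> log) {
        if (inEventLoop()) {
            log.add("write '" + msg + "' on " + Thread.currentThread().getName());
        } else {
            // Plays the role of safeExecute(executor, task, promise, m)
            ioThread.execute(() -> write(msg, log));
        }
    }

    /** Like channelRead in Approach 1: runs on the IO thread and offloads the slow part. */
    static Future<?> channelRead(String msg, List<String> log) {
        log.add("read on " + Thread.currentThread().getName());
        return businessPool.submit(() -> {
            log.add("work on " + Thread.currentThread().getName());
            write("echo:" + msg, log); // called from a business thread
        });
    }

    /** Drives one read and waits until the echoed write has run on the IO thread. */
    static List<String> runOnce(String msg) throws Exception {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        Future<?> work = ioThread.submit(() -> channelRead(msg, log)).get();
        work.get();                       // slow work done; its write task is already queued
        ioThread.submit(() -> { }).get(); // single thread, FIFO: the queued write has run by now
        return log;
    }
}
```

runOnce("hi") yields the log read on io-thread, work on biz-thread, write 'echo:hi' on io-thread: the write never runs on the business thread, which is exactly the extra queue hop the comparison below refers to.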

Approach 2

- When adding a handler to the pipeline, you can also pass in a thread pool (an EventExecutorGroup):

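```java
// Business thread pool with 16 threads
static final EventExecutorGroup group = new DefaultEventExecutorGroup(16);

// When building the pipeline (p is the ChannelPipeline): EchoServerHandler's events run on an executor from group
p.addLast(group, new EchoServerHandler());
```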

- The handler itself then performs the time-consuming work in the normal, synchronous way.

- When addLast is called with an EventExecutorGroup, the handler's events are executed by an executor taken from that group (the first argument, group). Without a group, the channel's IO thread is used.

- So in the invokeChannelRead method of AbstractChannelHandlerContext, the executor.inEventLoop() check fails: the current thread is the IO thread, while the executor of the Context (i.e. of the handler) is a business thread, so channelRead is submitted to that executor and runs asynchronously.

```java
static void invokeChannelRead(final AbstractChannelHandlerContext next, Object msg) {
    final Object m = next.pipeline.touch(ObjectUtil.checkNotNull(msg, "msg"), next);
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) {
        next.invokeChannelRead(m);
    } else {
        // With p.addLast(group, new EchoServerHandler()), execution takes this asynchronous branch.
        // With p.addLast(new EchoServerHandler()) it does not; the if branch above runs instead.
        executor.execute(new Runnable() {
            @Override
            public void run() {
                next.invokeChannelRead(m);
            }
        });
    }
}
```
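The dispatch rule in invokeChannelRead can be isolated in a small JDK-only sketch: each handler context is pinned to one executor, and a read is either delivered inline or handed off depending on inEventLoop(). `PinnedExecutor` and `HandlerContextSketch` are hypothetical stand-ins for Netty's EventExecutor and AbstractChannelHandlerContext; only the branch structure is taken from the code above.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

// Hypothetical single-thread executor that can answer inEventLoop(); not Netty's EventExecutor.
final class PinnedExecutor {
    private volatile Thread thread;
    private final ExecutorService delegate;

    PinnedExecutor(String name) {
        delegate = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r, name);
            t.setDaemon(true);
            thread = t;
            return t;
        });
    }

    boolean inEventLoop() {
        return Thread.currentThread() == thread;
    }

    void execute(Runnable task) {
        delegate.execute(task);
    }
}

// Hypothetical handler context bound to one executor, as addLast(group, handler) arranges in Netty.
final class HandlerContextSketch {
    final PinnedExecutor executor;
    final Consumer<String> handler;

    HandlerContextSketch(PinnedExecutor executor, Consumer<String> handler) {
        this.executor = executor;
        this.handler = handler;
    }

    /** Same branch structure as AbstractChannelHandlerContext.invokeChannelRead. */
    static void invokeChannelRead(HandlerContextSketch next, String msg) {
        if (next.executor.inEventLoop()) {
            next.handler.accept(msg);                             // same thread: call directly
        } else {
            next.executor.execute(() -> next.handler.accept(msg)); // other thread: hand off
        }
    }
}
```

A context created with the IO executor runs its handler inline on the IO thread, while one created with a business executor always has its reads queued to the business thread, whether or not the work is slow. That is the loss of flexibility the comparison below describes.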

Comparison of the two methods

- The first approach adds asynchrony inside the handler, which is more flexible: you make a call asynchronous only when it needs to be, for example when accessing the database, and keep it synchronous otherwise. The cost is a longer response time, because the write has to go back through the IO thread's MPSC task queue. If the IO time is very short and there are many tasks, one loop iteration may not have enough time to run all of them, so the response time can miss its target.

- The second approach is the standard Netty way (events are queued to the handler's executor), but it hands the entire handler over to the business thread pool. Every event is queued whether it is time-consuming or not, which is less flexible.

- Each has its own advantages and disadvantages; in terms of flexibility, the first one is better.

Netty implements dubbo RPC

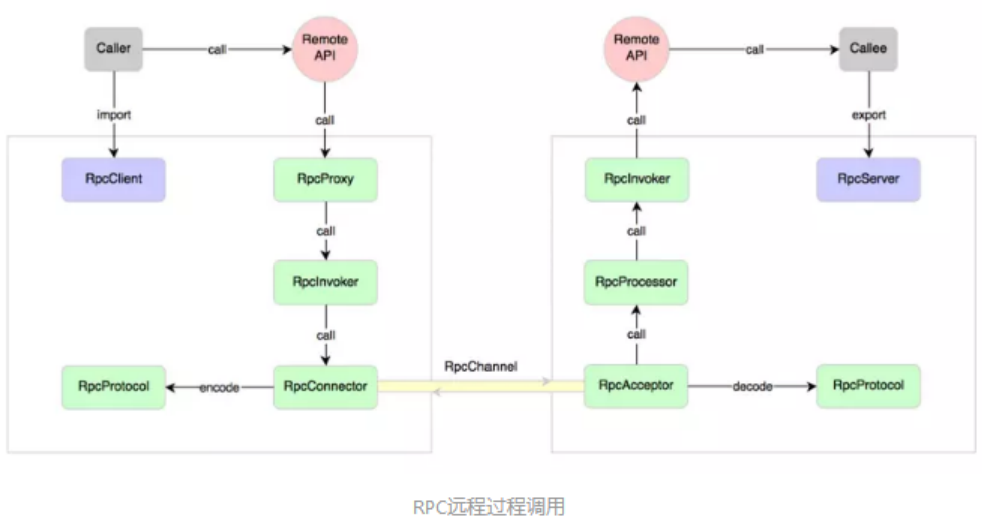

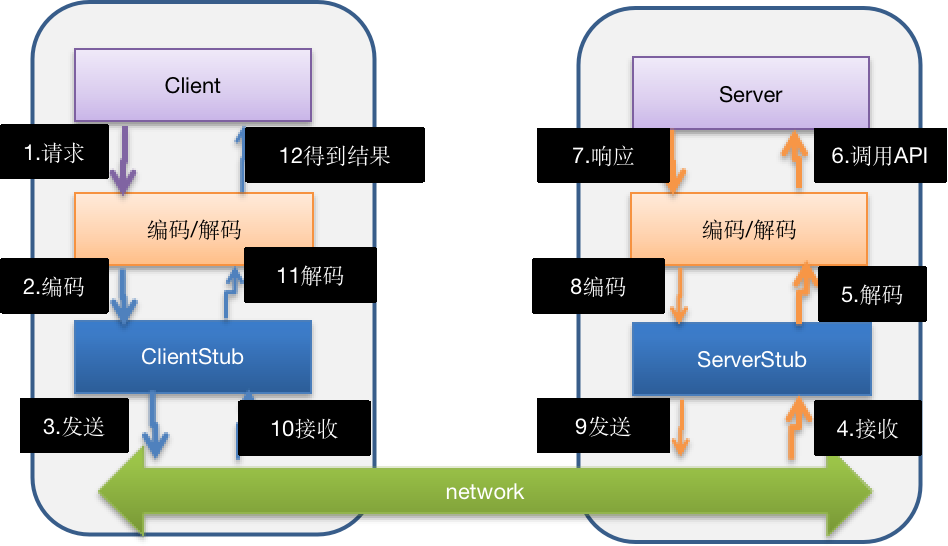

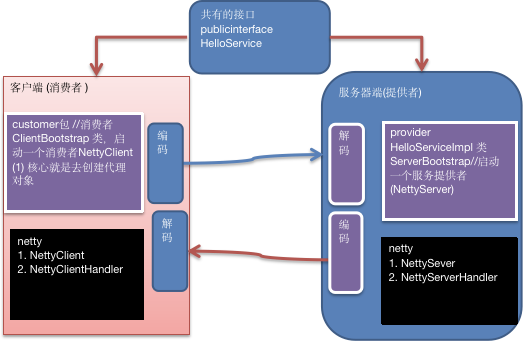

RPC introduction