Network IO Model and Classification

The network IO model is a frequently discussed topic. Different books and blogs classify the models differently, so there is no need to split hairs over terminology; the key is to understand the ideas.

Socket connection

Regardless of the model, the socket connections used are the same.

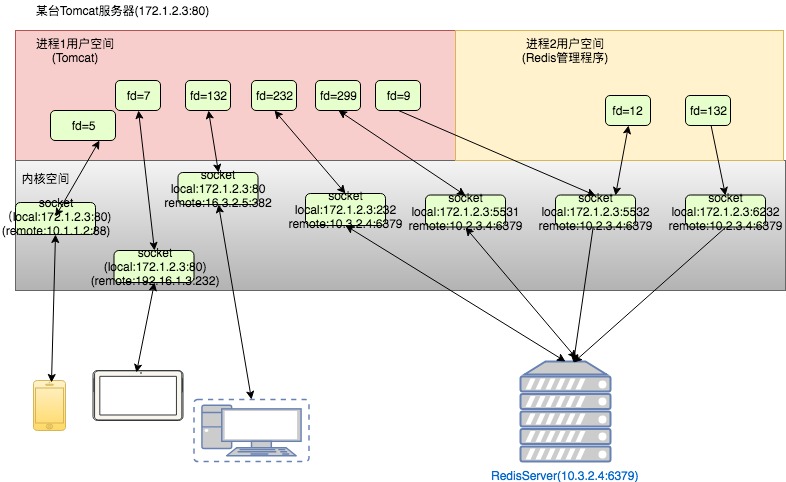

The following shows typical connections on an application server. Client devices interact with the Tomcat process over HTTP. Tomcat in turn needs to access a Redis server, and it keeps several connections open to it. The clients' connections to Tomcat are short connections that are closed soon after use, while the connections between Tomcat and Redis are long-lived, but underneath they are the same kind of socket connection.

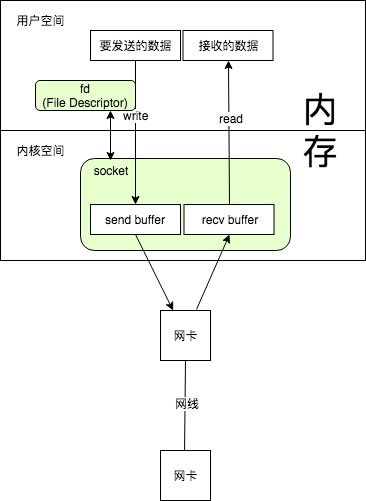

Once a socket connection is established, it is identified by a pair of endpoints: local IP + port and remote IP + port. The socket is created when the application process makes a system call into the operating system. The kernel keeps a corresponding structure in kernel space, and the application gets back a file descriptor, which it can read and write just like an ordinary open file. Each process has its own file descriptor space: process 1 may have a socket with fd 100 and process 2 may also have a socket with fd 100, and the two generally refer to different sockets (although they can refer to the same one, because sockets can be shared between processes).
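The endpoint pair can be observed directly from Java. The following is a minimal sketch, not part of the original demo: the class name `SocketPairDemo` and its helper methods are made up for illustration, and it assumes a loopback interface is available. It opens one connection and prints how each end sees it.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical helper class, for illustration only.
class SocketPairDemo {
    // Formats a connected socket as "localIP:localPort -> remoteIP:remotePort".
    static String describe(Socket s) {
        return s.getLocalAddress().getHostAddress() + ":" + s.getLocalPort()
                + " -> " + s.getInetAddress().getHostAddress() + ":" + s.getPort();
    }

    // Opens one loopback connection and returns {client view, server view}.
    static String[] connectOnce() {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // Port 0 asks the OS for any free port; the backlog of 10 is arbitrary.
        try (ServerSocket server = new ServerSocket(0, 10, loopback);
             Socket client = new Socket(loopback, server.getLocalPort());
             Socket accepted = server.accept()) {
            return new String[] { describe(client), describe(accepted) };
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String[] ends = connectOnce();
        System.out.println("client side: " + ends[0]);
        System.out.println("server side: " + ends[1]);
    }
}
```

The two lines printed show the same two endpoints with local and remote swapped, which is what identifies the connection on each machine.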

A socket is full duplex: it can be read and written at the same time.

Different application scenarios call for different network IO models and other related choices.

For example, for HTTP requests from clients we usually use short connections. There are very many customers, and many of them have the app open at the same time, but the number of clients actually sending a request at any given moment is far smaller than the number of customers using the app. If every client held a long connection, the server would run out of memory, so short connections are used. HTTP does have a keep-alive strategy that lets one TCP connection carry several HTTP exchanges, which reduces the number of connections that have to be established. For applications inside the system, such as Tomcat accessing Redis, the number of machines involved is limited. Opening a short connection for every access would waste too much on connection setup, so long connections greatly improve efficiency.

That covers long and short connections. When people discuss IO models, they generally do not mean this distinction; they mean synchronous versus asynchronous and blocking versus non-blocking. Which IO model to use depends on the scenario. For a CPU-intensive application, for example one where each request keeps two cores at 100% for a minute before returning a result, the choice of IO model makes little difference, because the bottleneck is the CPU. So it is usually IO-intensive applications that need to tune the IO model for maximum efficiency. The most typical examples are web applications and servers like Redis.

Synchronous vs. asynchronous and blocking vs. non-blocking

Synchronous and asynchronous describe how a user thread interacts with the kernel. Synchronous means the user thread has to wait or poll until the kernel IO operation completes before it can continue. Asynchronous means the user thread keeps running after it initiates the IO request; when the kernel IO operation completes, the user thread is notified, or a callback it registered is invoked.

Blocking and non-blocking describe how the user thread's call into the kernel IO operation behaves. Blocking means the call returns to user space only after the IO operation has fully completed. Non-blocking means the call returns a status value to the user immediately, without waiting for the IO operation to complete.

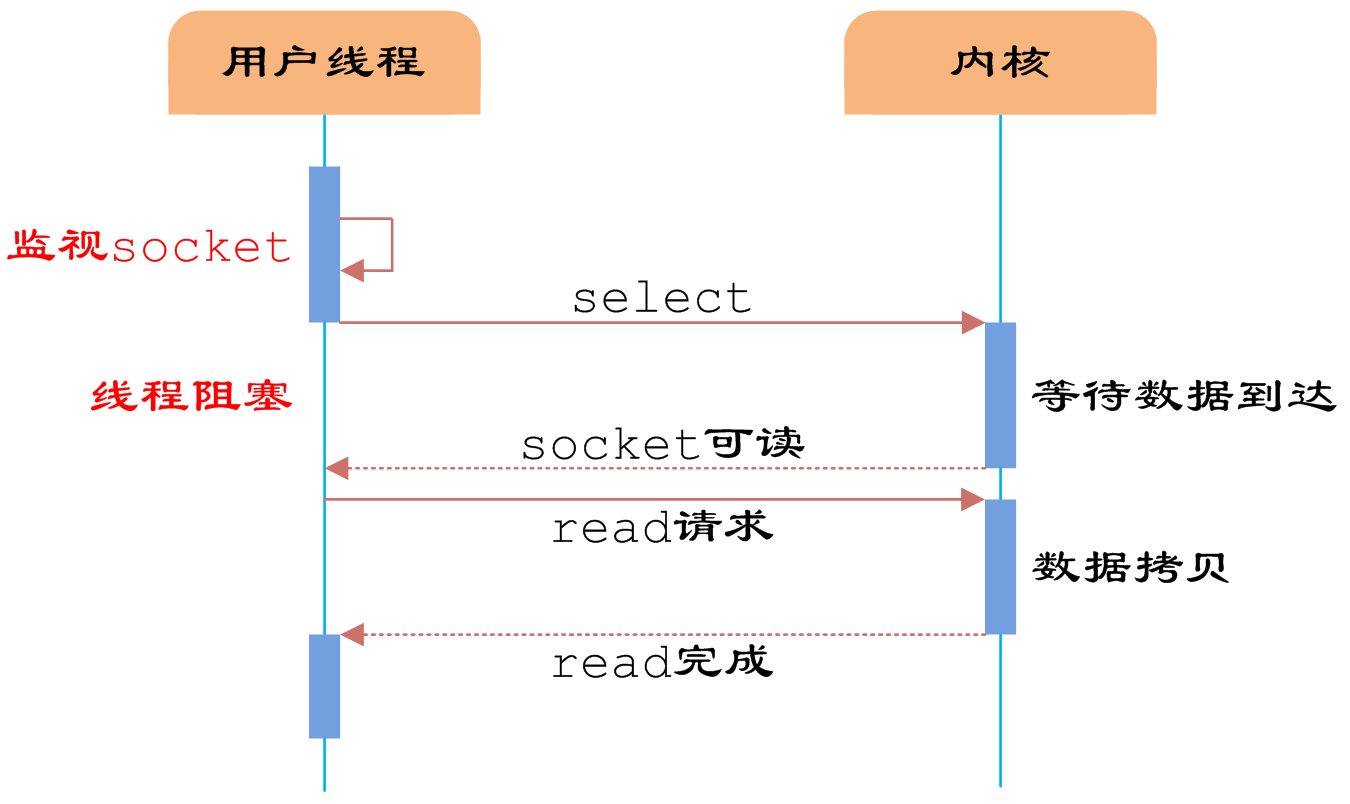

The read call is used below to illustrate the different IO models. Reading data from the peer happens in two stages:

(1) Data moves from the device into kernel space ("waiting for data" in the figure).

(2) Data is copied from kernel space to user space ("data copy" in the figure).

Of the models below, blocking IO, non-blocking IO, and IO multiplexing are all synchronous IO; the last one is asynchronous IO. This may not be easy to grasp at first. In short, with synchronous IO the thread itself calls the read/write function and either blocks or keeps polling for the result. With asynchronous IO the read/write call returns immediately, and the operating system actively notifies the thread once the operation has completed.

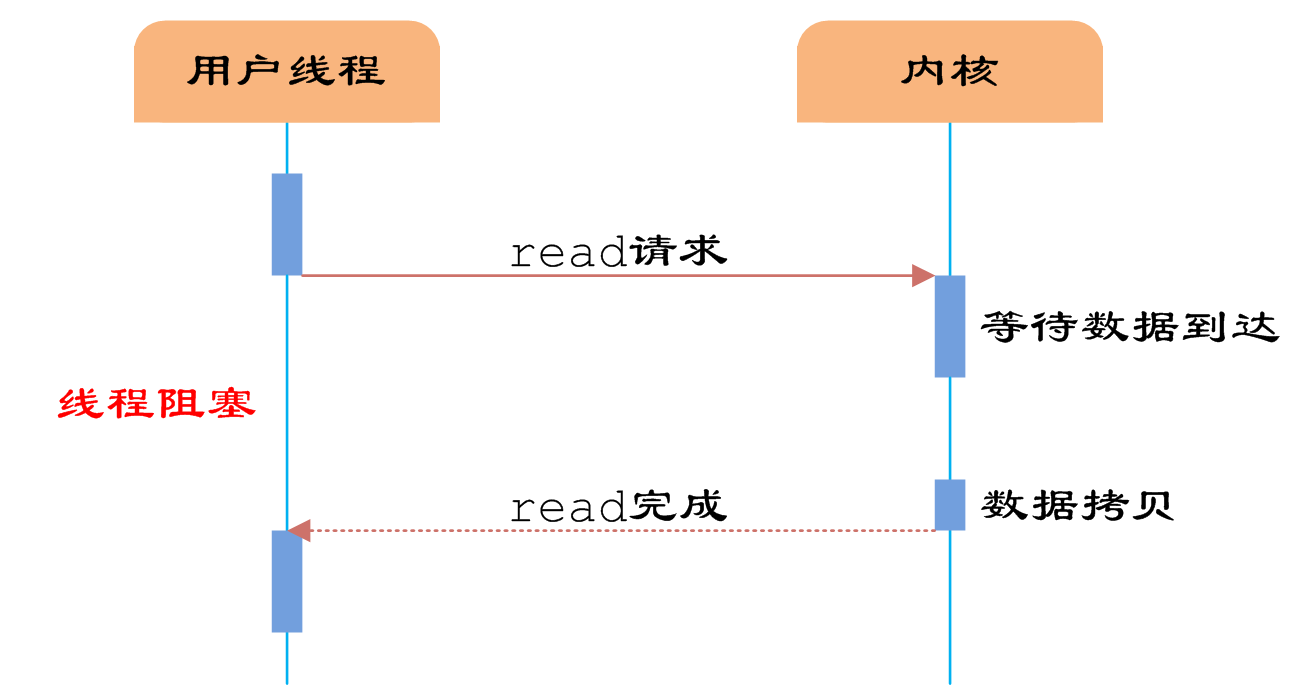

Blocking IO

Blocking IO means that after calling read, you must wait for the data to arrive and copy it to user space before returning, otherwise the whole thread is waiting.

So the problem with blocking IO is that threads can't do anything else while reading and writing IO.

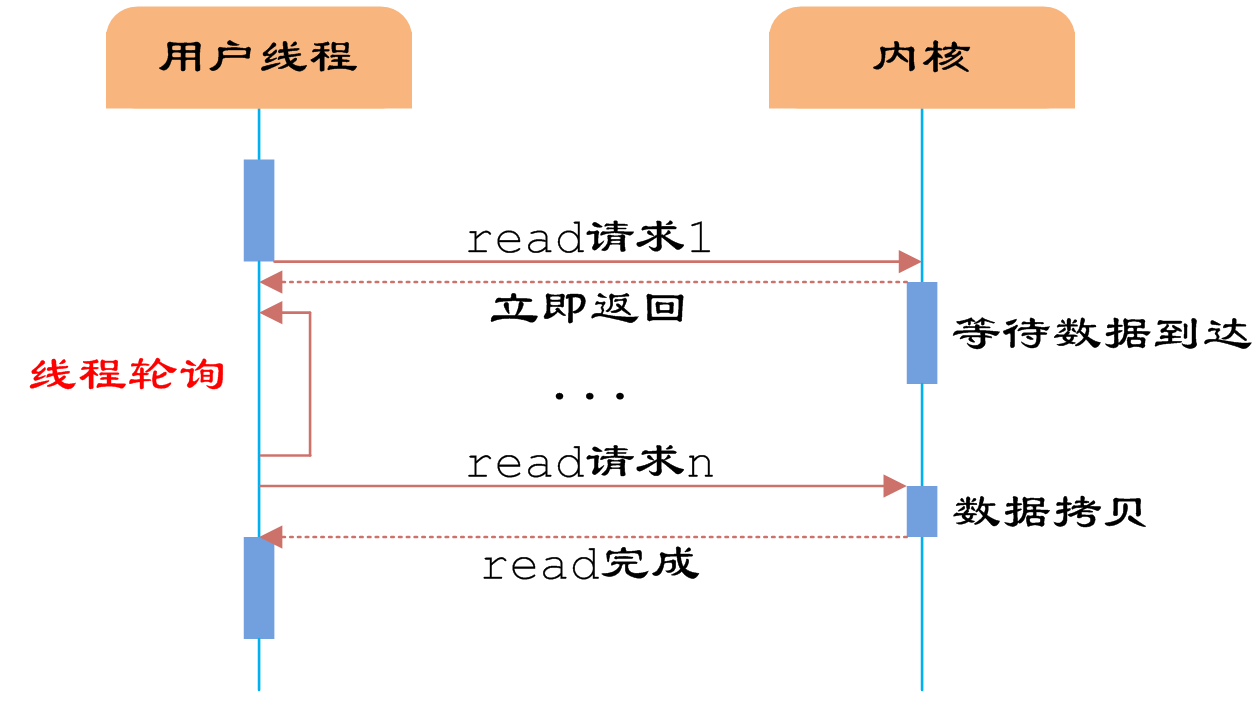

Non-blocking IO

With non-blocking IO, read returns immediately, and the thread then has to keep asking the operating system whether the data is ready in kernel space; once it is, the thread can read it out. Because the thread cannot know when the data will be ready, it has to poll constantly to stay responsive.

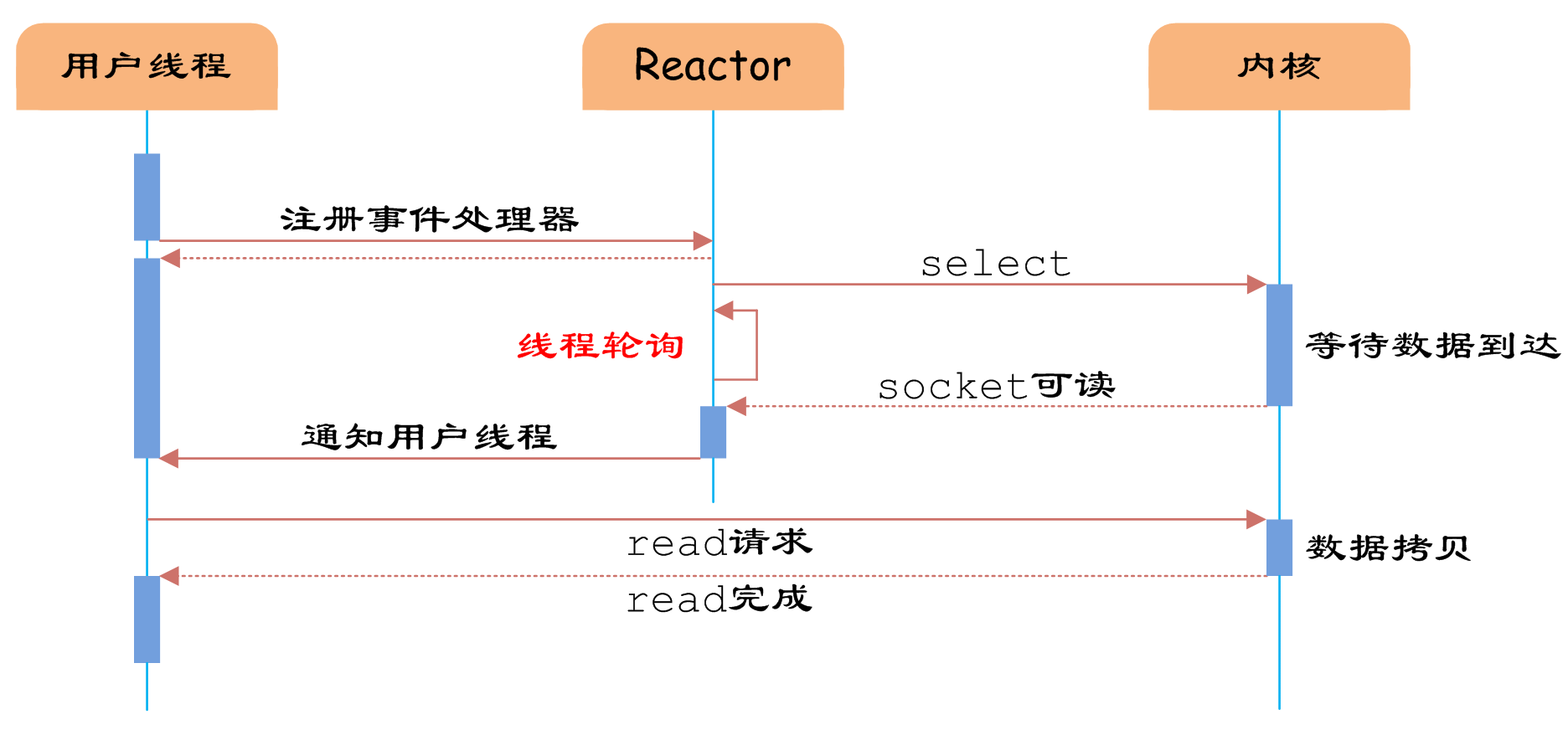

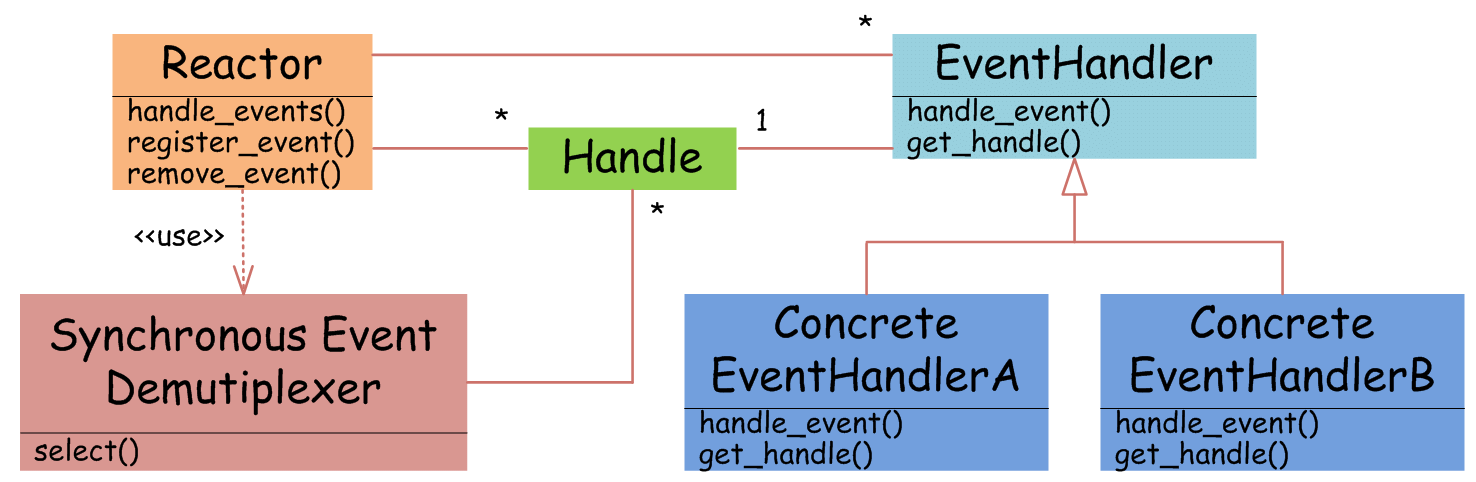
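The polling behaviour described above can be seen with a non-blocking `SocketChannel` from Java NIO. This is a minimal sketch under stated assumptions: the class name `NonBlockingReadDemo` is hypothetical, both ends of the connection live in one process over loopback, and the busy loop is only there to make the polling visible.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class, for illustration only.
class NonBlockingReadDemo {
    // Returns {result of a read before any data was sent, result of the first read that found data}.
    static int[] pollOnce() {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel accepted = server.accept()) {
                accepted.configureBlocking(false);
                ByteBuffer buf = ByteBuffer.allocate(64);

                // Nothing has been sent yet: a non-blocking read returns 0 instead of waiting.
                int beforeData = accepted.read(buf);

                client.write(ByteBuffer.wrap("get|123".getBytes(StandardCharsets.US_ASCII)));

                // Poll until the kernel has data for this socket.
                int n;
                while ((n = accepted.read(buf)) == 0) {
                    Thread.yield();
                }
                return new int[] { beforeData, n };
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = pollOnce();
        System.out.println("read before data arrived returned " + r[0]);
        System.out.println("read after polling returned " + r[1] + " bytes");
    }
}
```

The loop is exactly the cost the text points out: the thread is never blocked, but it also does nothing useful while it spins.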

IO multiplexing (non-blocking IO)

With non-blocking IO, if each thread keeps polling after issuing its network call, the thread is still tied up, which is no better than blocking IO. Each thread polls its own socket and cannot do anything else.

If instead a dedicated thread polls all the sockets and hands a socket to a worker thread once its data is ready, that is IO multiplexing. The polling thread can also do the processing itself instead of handing off to other threads, as Redis does.

IO multiplexing lets one or a few threads manage many (thousands of) socket connections, so the number of connections is no longer limited by the number of threads the system can start.

In other words, we pull the select polling out into its own thread. User threads register the sockets or IO requests they care about with this polling thread, and it notifies them when data arrives, which improves the CPU utilization of the user threads. From the user thread's point of view this looks asynchronous, although the read itself is still synchronous.

This uses the Reactor design pattern.

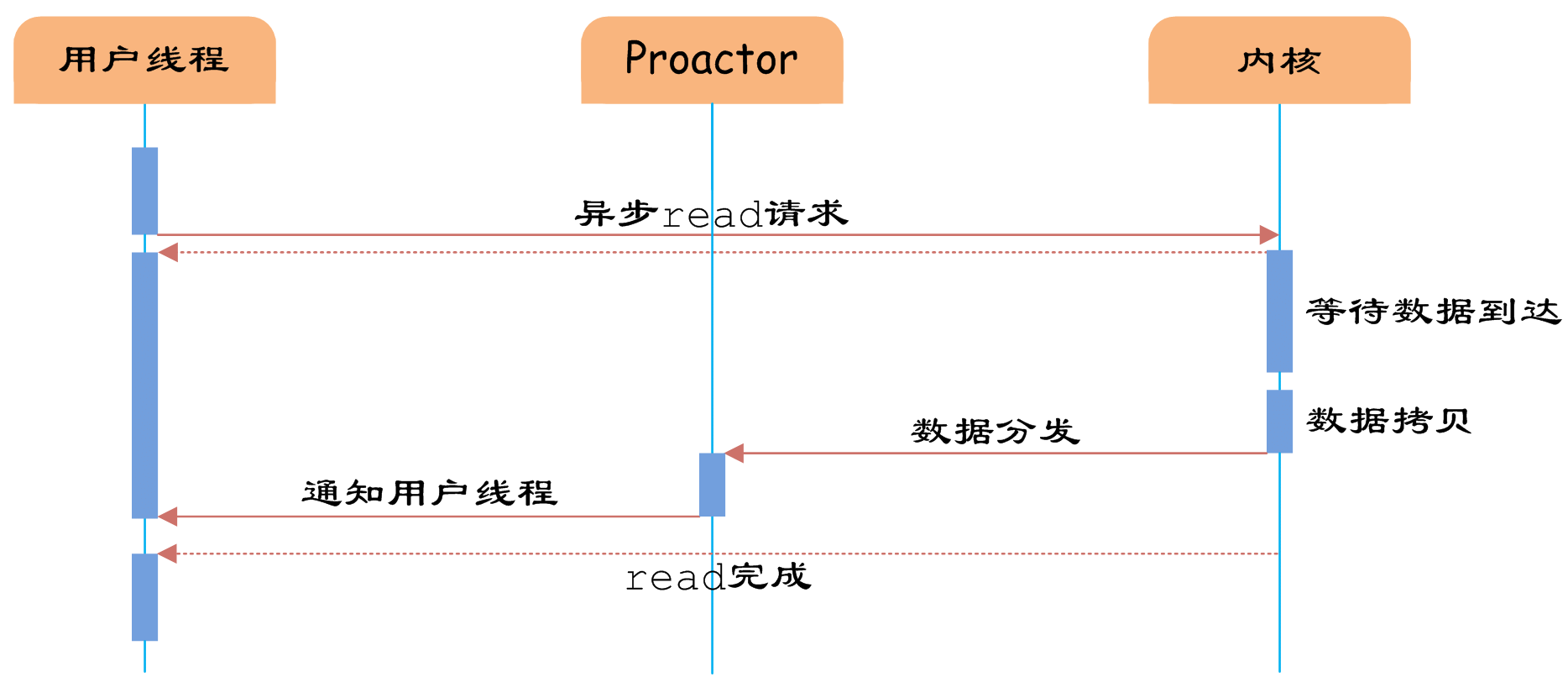
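As a rough illustration of one thread watching several connections, here is a minimal sketch built on `java.nio.channels.Selector`. The class name `SelectorDemo`, the two-byte message, and the in-process loopback clients are assumptions made for the example; it is not the server developed later in this article.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical demo class, for illustration only.
class SelectorDemo {
    // A single thread accepts `clients` connections and reads a 2-byte message from each.
    // Returns the total number of bytes received.
    static int serve(int clients) {
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            server.register(selector, SelectionKey.OP_ACCEPT);

            // Simulated clients: each connects and sends two bytes.
            List<SocketChannel> senders = new ArrayList<>();
            for (int i = 0; i < clients; i++) {
                SocketChannel c = SocketChannel.open(server.getLocalAddress());
                c.write(ByteBuffer.wrap(new byte[] { 'h', 'i' }));
                senders.add(c);
            }

            int total = 0;
            ByteBuffer buf = ByteBuffer.allocate(64);
            while (total < clients * 2) {
                selector.select(); // sleeps until at least one registered channel is ready
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (key.isAcceptable()) {
                        SocketChannel sc = server.accept();
                        if (sc != null) {
                            sc.configureBlocking(false);
                            sc.register(selector, SelectionKey.OP_READ);
                        }
                    } else if (key.isReadable()) {
                        buf.clear();
                        int n = ((SocketChannel) key.channel()).read(buf);
                        if (n > 0) {
                            total += n;
                        }
                    }
                }
            }

            // Close both ends of every connection before the selector itself is closed.
            for (SocketChannel c : senders) {
                c.close();
            }
            for (SelectionKey k : selector.keys()) {
                k.channel().close();
            }
            return total;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("bytes received from 3 connections: " + serve(3));
    }
}
```

Unlike the busy loop of plain non-blocking IO, `select()` lets the single thread sleep until the kernel reports that some socket is ready.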

Asynchronous IO

True asynchronous IO requires stronger support from the operating system. In the IO multiplexing model, the user thread is notified when data has arrived in the kernel, and the user thread then copies the data out of kernel space itself. In the asynchronous IO model, by the time the user thread receives the notification, the operating system has already copied the data from the kernel into the buffer the user specified, and the user thread can use it directly.

Asynchronous IO uses the Proactor design pattern.
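From the application's side, this style looks roughly like the sketch below, which uses the JDK's `AsynchronousSocketChannel` with a `CompletionHandler`. The class name `AsyncReadDemo` and the message `0|456` are assumptions for illustration. How the JDK implements these channels underneath is platform dependent; the point here is only the programming model, where the callback runs after the bytes are already in the buffer.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

// Hypothetical demo class, for illustration only.
class AsyncReadDemo {
    // Issues one asynchronous read and returns the text delivered to the completion handler.
    static String readAsync() {
        try (AsynchronousServerSocketChannel server = AsynchronousServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            try (AsynchronousSocketChannel client = AsynchronousSocketChannel.open()) {
                client.connect(server.getLocalAddress()).get();
                try (AsynchronousSocketChannel accepted = server.accept().get()) {
                    final ByteBuffer buf = ByteBuffer.allocate(64);
                    final CompletableFuture<String> result = new CompletableFuture<>();

                    // read() returns at once; the handler is invoked later, after the data
                    // has already been placed in buf.
                    accepted.read(buf, null, new CompletionHandler<Integer, Void>() {
                        @Override
                        public void completed(Integer bytesRead, Void attachment) {
                            buf.flip();
                            result.complete(StandardCharsets.US_ASCII.decode(buf).toString());
                        }

                        @Override
                        public void failed(Throwable error, Void attachment) {
                            result.completeExceptionally(error);
                        }
                    });

                    // The calling thread is free to do other work here; in this sketch it sends the data.
                    client.write(ByteBuffer.wrap("0|456".getBytes(StandardCharsets.US_ASCII))).get();
                    return result.get();
                }
            }
        } catch (IOException | InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("handler received: " + readAsync());
    }
}
```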

Asynchronous IO is seldom used in common Web systems. This article does not discuss it too much.

Next, a simple Redis-like server written in Java is used to illustrate the various IO models.

Hands-on practice

Next I'll write a simple Java version of Redis. It only has get and set, and it only supports strings. Its sole purpose is to demonstrate the various IO models, so exception handling is incomplete in places.

1. Blocking IO + single thread + short connection

This approach is only good for writing HelloWorld-style programs. The main purpose here is to get things working and to factor out some common classes.

First, write a Redis client interface:

package org.ifool.niodemo.redis;

public interface RedisClient {

public String get(String key);

public void set(String key,String value);

public void close();

}

In addition, there is a utility class that processes a request once its data has been read and returns the result. It also contains helpers for later use, such as converting between bytes and strings and prepending a length byte to a message.

The input is get|key or set|key|value, and the output is 0|value or 1|null or 2|bad command.

package org.ifool.niodemo.redis;

import java.util.Map;

public class Util {

//Add a byte to the front of a String to indicate length

public static byte[] addLength(String str) {

byte len = (byte)str.length();

byte[] ret = new byte[len+1];

ret[0] = len;

for(int i = 0; i < len; i++) {

ret[i+1] = (byte)str.charAt(i);

}

return ret;

}

//Processes one request against the cache and returns the response.

//If prefixLength is true, a length byte is prepended to the response.

//input:

//->get|key

//->set|key|value

//output:

//->errorcode|response

// ->0|response  set succeeded, or get found a value

// ->1|null      get found no value

// ->2|bad command

public static byte[] processRequest(Map<String,String> cache, byte[] request, int length, boolean prefixLength) {

if(request == null) {

return prefixLength ? addLength("2|bad command") : "2|bad command".getBytes();

}

String req = new String(request,0,length);

Util.log_debug("command:"+req);

String[] params = req.split("\\|");

if( params.length < 2 || params.length > 3 || !(params[0].equals("get") || params[0].equals("set"))) {

return prefixLength ? addLength("2|bad command") : "2|bad command".getBytes();

}

if(params[0].equals("get")) {

String value = cache.get(params[1]);

if(value == null) {

return prefixLength ? addLength("1|null") : "1|null".getBytes();

} else {

return prefixLength ? addLength("0|"+value) : ("0|"+value).getBytes();

}

}

if(params[0].equals("set") && params.length >= 3) {

cache.put(params[1],params[2]);

return prefixLength ? addLength("0|success"): ("0|success").getBytes();

} else {

return prefixLength ? addLength("2|bad command") : "2|bad command".getBytes();

}

}

public static int LOG_LEVEL = 0; //0 info 1 debug

public static void log_debug(String str) {

if(LOG_LEVEL >= 1) {

System.out.println(str);

}

}

public static void log_info(String str) {

if(LOG_LEVEL >= 0) {

System.out.println(str);

}

}

}

The server code is as follows. The server socket is created on port 8888. The backlog argument is the length of the queue in which connections that clients have established, but that the server cannot accept immediately, wait.

Server-side code

package org.ifool.niodemo.redis.redis1;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.InetSocketAddress;

import java.net.ServerSocket;

import java.net.Socket;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

public class RedisServer1 {

//Global cache

public static Map<String,String> cache = new ConcurrentHashMap<String,String>();

public static void main(String[] args) throws IOException {

ServerSocket serverSocket = new ServerSocket(8888,10);

byte[] buffer = new byte[512];

while(true) {

//Accept client connection requests

Socket clientSocket = null;

clientSocket = serverSocket.accept();

System.out.println("client address:" + clientSocket.getRemoteSocketAddress().toString());

//Read the data and manipulate the cache, then write back the data

try {

//Read data

InputStream in = clientSocket.getInputStream();

int bytesRead = in.read(buffer,0,512);

int totalBytesRead = 0;

while(bytesRead != -1) {

totalBytesRead += bytesRead;

bytesRead = in.read(buffer,totalBytesRead,512-totalBytesRead);

}

//Operation cache

byte[] response = Util.processRequest(cache,buffer,totalBytesRead,false);

Util.log_debug("response:"+new String(response));

//Write back data

OutputStream os = clientSocket.getOutputStream();

os.write(response);

os.flush();

clientSocket.shutdownOutput();

} catch (IOException e) {

System.out.println("read or write data exception");

} finally {

try {

clientSocket.close();

} catch (IOException ex) {

ex.printStackTrace();

}

}

}

}

}

The client code is as follows:

package org.ifool.niodemo.redis.redis1;

import org.ifool.niodemo.redis.RedisClient;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.Socket;

public class RedisClient1 implements RedisClient {

public static void main(String[] args) {

RedisClient redis = new RedisClient1("127.0.0.1",8888);

redis.set("123","456");

String value = redis.get("123");

System.out.print(value);

}

private String ip;

private int port;

public RedisClient1(String ip, int port) {

this.ip = ip;

this.port = port;

}

public String get(String key) {

Socket socket = null;

try {

socket = new Socket(ip, port);

} catch(IOException e) {

throw new RuntimeException("connect to " + ip + ":" + port + " failed");

}

try {

//Writing data

OutputStream os = socket.getOutputStream();

os.write(("get|"+key).getBytes());

socket.shutdownOutput(); //Without shutdown, the other end will wait for read

//Read data

InputStream in = socket.getInputStream();

byte[] buffer = new byte[512];

int offset = 0;

int bytesRead = in.read(buffer);

while(bytesRead != -1) {

offset += bytesRead;

bytesRead = in.read(buffer, offset, 512-offset);

}

String[] response = (new String(buffer,0,offset)).split("\\|");

if(response[0].equals("2")) {

throw new RuntimeException("bad command");

} else if(response[0].equals("1")) {

return null;

} else {

return response[1];

}

} catch(IOException e) {

throw new RuntimeException("network error");

} finally {

try {

socket.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

public void set(String key, String value) {

Socket socket = null;

try {

socket = new Socket(ip, port);

} catch(IOException e) {

throw new RuntimeException("connect to " + ip + ":" + port + " failed");

}

try {

OutputStream os = socket.getOutputStream();

os.write(("set|"+key+"|"+value).getBytes());

os.flush();

socket.shutdownOutput();

InputStream in = socket.getInputStream();

byte[] buffer = new byte[512];

int offset = 0;

int bytesRead = in.read(buffer);

while(bytesRead != -1) {

offset += bytesRead;

bytesRead = in.read(buffer, offset, 512-offset);

}

String bufString = new String(buffer,0,offset);

String[] response = bufString.split("\\|");

if(response[0].equals("2")) {

throw new RuntimeException("bad command");

}

} catch(IOException e) {

throw new RuntimeException("network error");

} finally {

try {

socket.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

public void close() {

}

}

## 2. Blocking IO + Multithreading + Short Connection

Application servers generally use this model. The main thread blocks in accept the whole time. When a connection arrives, it hands the connection to a worker thread and goes back to waiting for the next one. The worker thread reads and writes, then closes the connection.

Server-side code

package org.ifool.niodemo.redis.redis2;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.ServerSocket;

import java.net.Socket;

import java.util.Map;

import java.util.concurrent.*;

public class RedisServer2 {

//Global cache

public static Map<String,String> cache = new ConcurrentHashMap<String,String>();

public static void main(String[] args) throws IOException {

//Thread pool for processing requests

ThreadPoolExecutor threadPool = new ThreadPoolExecutor(200, 1000, 30, TimeUnit.SECONDS, new ArrayBlockingQueue<Runnable>(1000));

ServerSocket serverSocket = new ServerSocket(8888,1000);

while(true) {

//Accept client connection requests

Socket clientSocket = serverSocket.accept();

Util.log_debug(clientSocket.getRemoteSocketAddress().toString());

//Let the thread pool process the request

threadPool.execute(new RequestHandler(clientSocket));

}

}

}

class RequestHandler implements Runnable{

private Socket clientSocket;

public RequestHandler(Socket socket) {

clientSocket = socket;

}

public void run() {

byte[] buffer = new byte[512];

//Read the data and manipulate the cache, then write back the data

try {

//Read data

InputStream in = clientSocket.getInputStream();

int bytesRead = in.read(buffer,0,512);

int totalBytesRead = 0;

while(bytesRead != -1) {

totalBytesRead += bytesRead;

bytesRead = in.read(buffer,totalBytesRead,512-totalBytesRead);

}

//Operation cache

byte[] response = Util.processRequest(RedisServer2.cache,buffer,totalBytesRead,false);

Util.log_debug("response:"+new String(response));

//Write back data

OutputStream os = clientSocket.getOutputStream();

os.write(response);

os.flush();

clientSocket.shutdownOutput();

} catch (IOException e) {

System.out.println("read or write data exception");

} finally {

try {

clientSocket.close();

} catch (IOException ex) {

ex.printStackTrace();

}

}

}

}

The client code is the same as before, except that this time I added a multithreaded test in which 10 threads read and write concurrently. The first attempt used 10,000 iterations per thread; the listing below uses 300.

public static void main(String[] args) {

final RedisClient redis = new RedisClient1("127.0.0.1",8888);

redis.set("123","456");

String value = redis.get("123");

System.out.print(value);

redis.close();

System.out.println(new Timestamp(System.currentTimeMillis()));

testMultiThread();

System.out.println(new Timestamp(System.currentTimeMillis()));

}

public static void testMultiThread() {

Thread[] threads = new Thread[10];

for(int i = 0; i < 10; i++) {

threads[i] = new Thread(new Runnable() {

public void run() {

RedisClient redis = new RedisClient2("127.0.0.1",8888);

for(int j=0; j < 300; j++) {

Random rand = new Random();

String key = String.valueOf(rand.nextInt(1000));

String value = String.valueOf(rand.nextInt(1000));

redis.set(key,value);

String value1 = redis.get(key);

}

}

});

threads[i].start();

}

for(int i = 0; i < 10; i++) {

try {

threads[i].join();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

With 10 threads reading and writing non-stop, 10,000 times each, some connections fail with the following exception:

java.net.NoRouteToHostException: Can't assign requested address

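This error most likely means the client ran out of local ports: every short connection uses a fresh local port, and closed connections hold on to theirs for a while in TIME_WAIT. I looked at the related system parameters but did not work out how to tune them on the Mac. With 300 reads and writes per thread there were no errors, and the run took about 1 s.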

## 3. Blocking IO + Multithreading + Long Connection

With short connections, we can treat inputStream.read() == -1 as the end of a request. With long connections the data stream never ends, and a single read may return several requests stuck together ("sticky packets") or only part of one ("half packets"), so we have to find where each request begins and ends in the stream. There are many ways to do this, such as fixed-length messages, a fixed delimiter, or a length prefix. This article uses a length prefix: one byte in front of each request gives its length. The largest value of a Java byte is 127, so a request must not be longer than 127 bytes.
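The length-prefix idea can be sketched on its own, independent of sockets. In the example below the class name `FrameDemo` and its methods are hypothetical, and a `ByteArrayInputStream` stands in for the socket's input stream. It relies on `DataInputStream.readFully`, which keeps reading until the requested number of bytes has arrived, so half packets are handled, and it reads one frame at a time, so sticky packets are split correctly.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, for illustration only.
class FrameDemo {
    // Encodes each message as [1-byte length][payload] and concatenates the frames.
    static byte[] encodeAll(String... messages) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (String msg : messages) {
            byte[] payload = msg.getBytes(StandardCharsets.US_ASCII);
            if (payload.length > 127) {
                throw new IllegalArgumentException("message longer than 127 bytes");
            }
            out.write(payload.length);
            out.write(payload, 0, payload.length);
        }
        return out.toByteArray();
    }

    // Reads frames until the stream ends cleanly on a frame boundary.
    static List<String> readFrames(InputStream raw) {
        List<String> frames = new ArrayList<>();
        DataInputStream in = new DataInputStream(raw);
        try {
            int len;
            while ((len = in.read()) != -1) {      // the first byte of each frame is its length
                byte[] payload = new byte[len];
                in.readFully(payload);             // throws EOFException if the stream ends mid-frame
                frames.add(new String(payload, StandardCharsets.US_ASCII));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return frames;
    }

    public static void main(String[] args) {
        // Two requests arriving back to back in one stream.
        byte[] wire = encodeAll("set|123|456", "get|123");
        System.out.println(readFrames(new ByteArrayInputStream(wire)));
    }
}
```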

Our client writes a request and then waits for the server's reply. While it waits, nobody else writes to the socket, so there is no sticky packet problem, only the half packet problem. Some access patterns instead let other threads write without waiting for the server's reply, and those can produce sticky packets as well.

Generally, a client that uses long connections builds a connection pool and takes a lock to obtain a connection from it. Here we simply let each thread hold its own connection and do all its reads and writes on it. This avoids the cost of thread switching and locking and achieves higher throughput.
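For comparison, the pooled alternative mentioned above could look roughly like the following sketch. It is not used by the demo code in this article; the class name `SimplePool` is hypothetical, and the connection type is left generic so the example stays self-contained. A `BlockingQueue` does the locking, and a caller blocks in `take()` when every connection is in use.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Function;

// Hypothetical minimal pool, for illustration only.
class SimplePool<C> {
    private final BlockingQueue<C> idle;

    SimplePool(List<C> connections) {
        // The queue is exactly large enough to hold every connection.
        this.idle = new ArrayBlockingQueue<>(connections.size(), false, connections);
    }

    // Borrows a connection, runs the work on it, and always returns it to the pool.
    <R> R withConnection(Function<C, R> work) {
        C conn;
        try {
            conn = idle.take(); // blocks while all connections are borrowed
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a connection", e);
        }
        try {
            return work.apply(conn);
        } finally {
            idle.offer(conn); // never fails: capacity equals the number of connections
        }
    }

    int idleCount() {
        return idle.size();
    }

    public static void main(String[] args) {
        // Strings stand in for real sockets here.
        SimplePool<String> pool = new SimplePool<>(Arrays.asList("conn-1", "conn-2"));
        System.out.println(pool.withConnection(c -> "sent get|123 over " + c));
        System.out.println("idle connections: " + pool.idleCount());
    }
}
```

Every request pays for one `take()` and one `offer()` on a shared queue, which is the locking cost that the one-connection-per-thread design avoids.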

The client code has changed a lot this time.

package org.ifool.niodemo.redis.redis3;

import org.ifool.niodemo.redis.RedisClient;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.Socket;

import java.sql.Timestamp;

import java.util.Random;

public class RedisClient3 implements RedisClient {

public static void main(String[] args) {

RedisClient redis = new RedisClient3("127.0.0.1",8888);

redis.set("123","456");

String value = redis.get("123");

System.out.print(value);

redis.close();

System.out.println(new Timestamp(System.currentTimeMillis()));

testMultiThread();

System.out.println(new Timestamp(System.currentTimeMillis()));

}

public static void testMultiThread() {

Thread[] threads = new Thread[10];

for(int i = 0; i < 10; i++) {

threads[i] = new Thread(new Runnable() {

public void run() {

RedisClient redis = new RedisClient3("127.0.0.1",8888);

for(int j=0; j < 50; j++) {

Random rand = new Random();

String key = String.valueOf(rand.nextInt(1000));

String value = String.valueOf(rand.nextInt(1000));

redis.set(key,value);

String value1 = redis.get(key);

}

}

});

threads[i].start();

}

for(int i = 0; i < 10; i++) {

try {

threads[i].join();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

private String ip;

private int port;

private Socket socket;

public RedisClient3(String ip, int port) {

this.ip = ip;

this.port = port;

try {

socket = new Socket(ip, port);

} catch(IOException e) {

throw new RuntimeException("connect to " + ip + ":" + port + " failed");

}

}

public String get(String key) {

try {

//Write data with a byte storage length in front

OutputStream os = socket.getOutputStream();

String cmd = "get|"+key;

byte length = (byte)cmd.length();

byte[] data = new byte[cmd.length()+1];

data[0] = length;

for(int i = 0; i < cmd.length(); i++) {

data[i+1] = (byte)cmd.charAt(i);

}

os.write(data);

os.flush();

//Read the data. The first byte is the length.

InputStream in = socket.getInputStream();

int len = in.read();

if(len == -1) {

throw new RuntimeException("network error");

}

byte[] buffer = new byte[len];

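int offset = 0;

//Keep reading until the whole response has arrived; fail if the server closes the connection

while(offset < len) {

int bytesRead = in.read(buffer, offset, len-offset);

if(bytesRead == -1) {

throw new IOException("connection closed before full response");

}

offset += bytesRead;

}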

String[] response = (new String(buffer,0,offset)).split("\\|");

if(response[0].equals("2")) {

throw new RuntimeException("bad command");

} else if(response[0].equals("1")) {

return null;

} else {

return response[1];

}

} catch(IOException e) {

throw new RuntimeException("network error");

} finally {

}

}

public void set(String key, String value) {

try {

//Write data with a byte storage length in front

OutputStream os = socket.getOutputStream();

String cmd = "set|"+key + "|" + value;

byte length = (byte)cmd.length();

byte[] data = new byte[cmd.length()+1];

data[0] = length;

for(int i = 0; i < cmd.length(); i++) {

data[i+1] = (byte)cmd.charAt(i);

}

os.write(data);

os.flush();

InputStream in = socket.getInputStream();

int len = in.read();

if(len == -1) {

throw new RuntimeException("network error");

}

byte[] buffer = new byte[len];

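int offset = 0;

//Keep reading until the whole response has arrived; fail if the server closes the connection

while(offset < len) {

int bytesRead = in.read(buffer, offset, len-offset);

if(bytesRead == -1) {

throw new IOException("connection closed before full response");

}

offset += bytesRead;

}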

String bufString = new String(buffer,0,offset);

Util.log_debug(bufString);

String[] response = bufString.split("\\|");

if(response[0].equals("2")) {

throw new RuntimeException("bad command");

}

} catch(IOException e) {

throw new RuntimeException("network error");

} finally {

}

}

public void close() {

try {

socket.close();

} catch(IOException ex) {

ex.printStackTrace();

}

}

}

On the server, once a connection is established it is served by one thread for its whole lifetime. The thread processes data when there is some and otherwise just waits. This means one thread per connection, which becomes a problem when the number of connections is large.

package org.ifool.niodemo.redis.redis3;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.InetSocketAddress;

import java.net.ServerSocket;

import java.net.Socket;

import java.util.Map;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

public class RedisServer3 {

//Global cache

public static Map<String,String> cache = new ConcurrentHashMap<String,String>();

public static void main(String[] args) throws IOException {

//Thread pool for processing requests

ThreadPoolExecutor threadPool = new ThreadPoolExecutor(20, 1000, 30, TimeUnit.SECONDS, new ArrayBlockingQueue<Runnable>(5));

ServerSocket serverSocket = new ServerSocket(8888, 10);

byte[] buffer = new byte[512];

while (true) {

//Accept client connection requests

Socket clientSocket = null;

try {

clientSocket = serverSocket.accept();

Util.log_debug(clientSocket.getRemoteSocketAddress().toString());

} catch (IOException e) {

e.printStackTrace();

}

//Let the thread pool process the request

threadPool.execute(new RequestHandler(clientSocket));

}

}

}

class RequestHandler implements Runnable{

private Socket clientSocket;

public RequestHandler(Socket socket) {

clientSocket = socket;

}

public void run() {

byte[] buffer = new byte[512];

//Read the data and manipulate the cache, then write back the data

try {

while(true) {

//Read data

InputStream in = clientSocket.getInputStream();

int len = in.read(); //Read length

if(len == -1) {

throw new IOException("socket closed by client");

}

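int totalBytesRead = 0;

//Keep reading until the whole request has arrived; fail if the client closes the connection

while (totalBytesRead < len) {

int bytesRead = in.read(buffer, totalBytesRead, len - totalBytesRead);

if (bytesRead == -1) {

throw new IOException("socket closed by client");

}

totalBytesRead += bytesRead;

}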

//Operation cache

byte[] response = Util.processRequest(RedisServer3.cache,buffer, totalBytesRead,true);

Util.log_debug("response:" + new String(response));

//Write back data

OutputStream os = clientSocket.getOutputStream();

os.write(response);

os.flush();

}

} catch (IOException e) {

System.out.println("read or write data exception");

} finally {

try {

clientSocket.close();

Util.log_debug("socket closed");

} catch (IOException ex) {

ex.printStackTrace();

}

}

}

}

With this approach, 10 threads each doing 10,000 consecutive set and get pairs, that is, 200,000 accesses in total, take only 3 seconds.

4. Blocking IO + single thread polling + multithreading + long connection (infeasible)

Multithreading plus long connections greatly improves efficiency, but with too many connections you need too many threads, which is clearly not feasible. Most of those threads sit on a connection with no data and cannot do anything else, so they are simply wasted.

Can we have one thread watch all these sockets and notify the worker thread pool when one of them has data?

The code is as follows: an extra thread iterates over the connected sockets and hands a socket to the thread pool if socket.getInputStream().available() > 0.

<u> This program works in some cases, but it is actually problematic. The key issue is the polling with available(): on every pass, the polling thread has to check all the sockets one by one, so the scan is serial and the thread can never sleep until some socket becomes readable. Plain java.net sockets cannot be set to non-blocking mode; for that you have to use NIO. This approach would be workable in C, but it is not workable here. </u>

package org.ifool.niodemo.redis.redis4;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.InetSocketAddress;

import java.net.ServerSocket;

import java.net.Socket;

import java.util.HashSet;

import java.util.Iterator;

import java.util.Map;

import java.util.Set;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

public class RedisServer4 {

//Global cache

public static Map<String,String> cache = new ConcurrentHashMap<String,String>();

//Current socket

final public static Set<Socket> socketSet = new HashSet<Socket>(10);

public static void main(String[] args) throws IOException {

//Thread pool for processing requests

final ThreadPoolExecutor threadPool = new ThreadPoolExecutor(20, 1000, 30, TimeUnit.SECONDS, new ArrayBlockingQueue<Runnable>(1000));

ServerSocket serverSocket = new ServerSocket(8888,100);

//Start a thread to scan the socket that can read data all the time and remove the closed connection

Thread thread = new Thread(new Runnable() {

public void run() {

//Find socket s that can be read and process

while (true) {

synchronized (socketSet) {

Iterator<Socket> it = socketSet.iterator();

while(it.hasNext()) {

Socket socket = it.next();

if (socket.isConnected()) {

try {

if (!socket.isInputShutdown() && socket.getInputStream().available() > 0) {

it.remove();

threadPool.execute(new RequestHandler(socket));

}

} catch (IOException ex) {

System.out.println("socket already closed1");

socketSet.remove(socket);

try {

socket.close();

} catch (IOException e) {

System.out.println("socket already closed2");

}

}

} else {

socketSet.remove(socket);

try {

socket.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

}

}

});

thread.start();

while(true) {

//Accept client connection requests and add new sockets to socketset

Socket clientSocket = null;

try {

clientSocket = serverSocket.accept();

Util.log_debug("client address:" + clientSocket.getRemoteSocketAddress().toString());

synchronized (socketSet) {

socketSet.add(clientSocket);

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

class RequestHandler implements Runnable{

private Socket clientSocket;

public RequestHandler(Socket socket) {

clientSocket = socket;

}

public void run() {

byte[] buffer = new byte[512];

//Read data and operate on cache, then write back data

try {

//Read data

InputStream in = clientSocket.getInputStream();

int len = in.read(); //Read length

if(len == -1) {

throw new IOException("socket closed by client");

}

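int totalBytesRead = 0;

//Keep reading until the whole request has arrived; fail if the client closes the connection

while (totalBytesRead < len) {

int bytesRead = in.read(buffer, totalBytesRead, len - totalBytesRead);

if (bytesRead == -1) {

throw new IOException("socket closed by client");

}

totalBytesRead += bytesRead;

}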

//Operation cache

byte[] response = Util.processRequest(RedisServer4.cache,buffer, totalBytesRead,true);

Util.log_debug("response:" + new String(response));

//Write back data

OutputStream os = clientSocket.getOutputStream();

os.write(response);

os.flush();

synchronized (RedisServer4.socketSet) {

RedisServer4.socketSet.add(clientSocket);

}

} catch (IOException e) {

e.printStackTrace();

System.out.println("read or write data exception");

} finally {

}

}

}

5. IO Multiplexing + Single Thread Polling + Multithread Processing + Long Connection

In the example above we tried to implement select-like behavior with ordinary sockets. That is not feasible in Java; we have to use NIO. All we need is a single select call that watches every connection for ready data; when a connection is ready, a thread from the thread pool is called to process it.

To use NIO you need to know about ByteBuffer, Channel, and so on. ByteBuffer in particular has a somewhat fiddly design, which is not covered in detail here.
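As a brief orientation, the sketch below walks a `ByteBuffer` through the write, flip, read, and compact steps and records its position and limit at each stage. The class name `ByteBufferDemo` and the sample frame (a length byte of 7 followed by `get|123`) are assumptions chosen to match the length-prefixed protocol used in this article.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class, for illustration only.
class ByteBufferDemo {
    static String state(ByteBuffer b) {
        return "pos=" + b.position() + " lim=" + b.limit() + " cap=" + b.capacity();
    }

    // Returns the buffer state after each step.
    static List<String> walk() {
        List<String> trace = new ArrayList<>();
        ByteBuffer buf = ByteBuffer.allocate(16);
        trace.add(state(buf));                  // new buffer: pos=0 lim=16 cap=16

        // Writing (for example channel.read(buf)) advances the position.
        buf.put((byte) 7);
        buf.put("get|123".getBytes(StandardCharsets.US_ASCII));
        trace.add(state(buf));                  // pos=8 lim=16 cap=16

        // flip() switches to reading: limit = old position, position = 0.
        buf.flip();
        trace.add(state(buf));                  // pos=0 lim=8 cap=16

        // Read the length byte, then that many payload bytes.
        byte[] payload = new byte[buf.get()];
        buf.get(payload);
        trace.add(state(buf) + " payload=" + new String(payload, StandardCharsets.US_ASCII));

        // compact() keeps any unread bytes and switches back to writing.
        buf.compact();
        trace.add(state(buf));                  // pos=0 lim=16 cap=16
        return trace;
    }

    public static void main(String[] args) {
        for (String line : walk()) {
            System.out.println(line);
        }
    }
}
```

The same buffer object serves both directions, which is why forgetting a `flip()` or resetting position and limit incorrectly is a common source of bugs.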

The client does not need NIO for now and stays as it was. The server code is as follows:

package org.ifool.niodemo.redis.redis5;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.io.SyncFailedException;

import java.net.InetSocketAddress;

import java.net.ServerSocket;

import java.net.Socket;

import java.nio.ByteBuffer;

import java.nio.channels.SelectionKey;

import java.nio.channels.Selector;

import java.nio.channels.ServerSocketChannel;

import java.nio.channels.SocketChannel;

import java.util.*;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

public class RedisServer5 {

//Global cache

public static Map<String,String> cache = new ConcurrentHashMap<String,String>();

public static void main(String[] args) throws IOException {

//Thread pool for processing requests

final ThreadPoolExecutor threadPool = new ThreadPoolExecutor(20, 1000, 30, TimeUnit.SECONDS, new ArrayBlockingQueue<Runnable>(1000));

ServerSocketChannel ssc = ServerSocketChannel.open();

ssc.socket().bind(new InetSocketAddress(8888),1000);

Selector selector = Selector.open();

ssc.configureBlocking(false); //Must be set to non-blocking

ssc.register(selector, SelectionKey.OP_ACCEPT); //ServerSocketChannel only cares about accept

while(true) {

int num = selector.select();

if(num == 0) {

continue;

}

Set<SelectionKey> selectionKeys = selector.selectedKeys();

Iterator<SelectionKey> it = selectionKeys.iterator();

while(it.hasNext()) {

SelectionKey key = it.next();

it.remove();

if(key.isAcceptable()) {

SocketChannel sc = ssc.accept();

sc.configureBlocking(false); //Set to non-blocking to listen

sc.register(key.selector(), SelectionKey.OP_READ, ByteBuffer.allocate(512) );

System.out.println("new connection");

}

if(key.isReadable()) {

SocketChannel clientSocketChannel = (SocketChannel)key.channel();

//System.out.println("socket readable");

if(!clientSocketChannel.isConnected()) {

clientSocketChannel.finishConnect();

key.cancel();

clientSocketChannel.close();

System.out.println("socket closed2");

continue;

}

ByteBuffer buffer = (ByteBuffer)key.attachment();

int len = clientSocketChannel.read(buffer);

Socket socket = clientSocketChannel.socket();

if(len == -1) {

clientSocketChannel.finishConnect();

key.cancel();

clientSocketChannel.close();

System.out.println("socket closed1");

} else {

threadPool.execute(new RequestHandler(clientSocketChannel, buffer));

}

}

}

}

}

}

class RequestHandler implements Runnable{

private SocketChannel channel;

private ByteBuffer buffer;

public RequestHandler(SocketChannel channel, Object buffer) {

this.channel = channel;

this.buffer = (ByteBuffer)buffer;

}

public void run() {

//Read the data and manipulate the cache, then write back the data

try {

int position = buffer.position();

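//Switch to read mode and read the first byte, which holds the length

buffer.flip();

int len = buffer.get(); //Read length

//Incomplete request: fewer than len body bytes follow the 1-byte length, so restore write mode and wait for more data

if(len > position - 1) {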

buffer.position(position);

buffer.limit(buffer.capacity());

return;

}

byte[] data = new byte[len];

buffer.get(data,0,len);

//Operation cache

byte[] response = Util.processRequest(RedisServer5.cache,data, len,true);

Util.log_debug("response:" + new String(response));

buffer.clear();

buffer.put(response);

buffer.flip();

channel.write(buffer);

buffer.clear();

} catch (IOException e) {

System.out.println("read or write data exception");

} finally {

}

}

}

Writing NIO programs by hand has many pitfalls. The code above sometimes fails, and some exceptions are not handled properly. Even so, 10 threads each writing 10,000 times without pause finish in a little over 3 seconds.
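One such pitfall, shown here only as an illustration: a single `write` on a non-blocking channel may accept just part of the buffer, so a lone `channel.write(buffer)` can silently truncate a response. The sketch below uses a hypothetical `SlowChannel` that takes at most 3 bytes per call to imitate a full send buffer. The retry loop is a simplifying assumption; a production server would more likely register `OP_WRITE` with the selector than spin.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class, not part of the original project.
class WriteFullyDemo {

    // Calls write() repeatedly until the buffer is drained; returns the number of calls.
    static int writeFully(WritableByteChannel channel, ByteBuffer buffer) throws IOException {
        int calls = 0;
        while (buffer.hasRemaining()) {
            channel.write(buffer);
            calls++;
        }
        return calls;
    }

    // Test double that accepts at most 3 bytes per write call.
    static class SlowChannel implements WritableByteChannel {
        final StringBuilder received = new StringBuilder();

        public int write(ByteBuffer src) {
            int n = Math.min(3, src.remaining());
            for (int i = 0; i < n; i++) {
                received.append((char) src.get());
            }
            return n;
        }

        public boolean isOpen() { return true; }

        public void close() { }
    }

    public static void main(String[] args) throws IOException {
        SlowChannel channel = new SlowChannel();
        byte[] response = "0|OK|done".getBytes(StandardCharsets.US_ASCII);
        int calls = writeFully(channel, ByteBuffer.wrap(response));
        System.out.println(channel.received + " written in " + calls + " calls");
    }
}
```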

IO multiplexing + Netty

Writing native NIO programs in Java is error-prone: the API is complex and there are many edge cases to handle, such as closed connections and sticky or half packets (several messages arriving glued together, or one message arriving in pieces). A mature framework such as Netty makes this much easier.
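To make the framing problem concrete: TCP delivers a byte stream, so one read may contain several requests glued together, or only part of one. The sketch below is a minimal plain-Java illustration of what a length-field decoder has to do, assuming the 1-byte length prefix used by the servers in this article. `FrameSplitter` and the sample commands are hypothetical names chosen for the example, and this is not how Netty implements it internally.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class, not part of the original project.
class FrameSplitter {

    // Accumulates raw bytes across reads; stays in write mode between calls.
    private final ByteBuffer pending = ByteBuffer.allocate(512);

    // Feeds one chunk as it arrived from the socket and returns every complete frame.
    List<String> feed(byte[] chunk) {
        pending.put(chunk);
        pending.flip();                       // switch to read mode
        List<String> frames = new ArrayList<>();
        while (pending.hasRemaining()) {
            pending.mark();
            int len = pending.get();          // 1-byte length prefix
            if (pending.remaining() < len) {  // half packet: wait for more bytes
                pending.reset();
                break;
            }
            byte[] body = new byte[len];
            pending.get(body);
            frames.add(new String(body, StandardCharsets.UTF_8));
        }
        pending.compact();                    // keep the unread tail, back to write mode
        return frames;
    }

    public static void main(String[] args) {
        FrameSplitter splitter = new FrameSplitter();
        // Two frames, "get|a" and "get|b", arrive glued together and cut in the middle.
        System.out.println(splitter.feed(new byte[] {5, 'g', 'e', 't', '|', 'a', 5, 'g', 'e'}));
        System.out.println(splitter.feed(new byte[] {'t', '|', 'b'}));
    }
}
```

The first call returns only the first frame and keeps the incomplete tail; the second call completes and returns the second frame.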

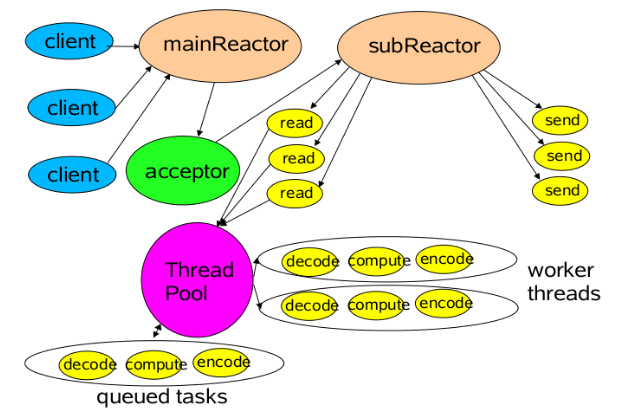

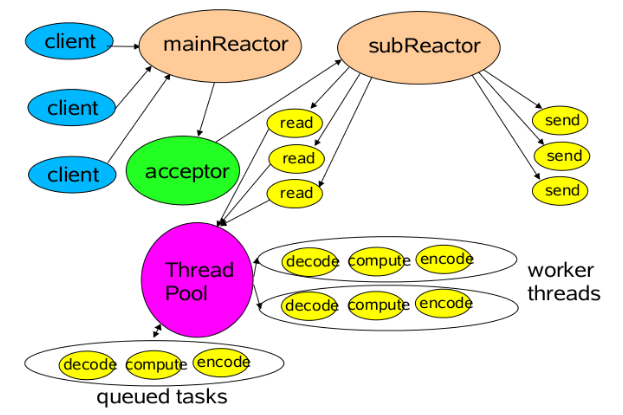

Netty's commonly used thread model is shown in the figure. The mainReactor listens on the server socket, accepts new connections, and assigns the established sockets to a subReactor. The subReactor multiplexes the connected sockets and reads and writes network data, while business processing is handed off to a worker thread pool. Generally, the number of subReactors can equal the number of CPUs.

The server code is shown below. The two NioEventLoopGroup instances correspond to the mainReactor and the subReactor above. The first constructor argument is 0, which means the default number of threads is used. In practice the mainReactor uses one thread, and the number of subReactor threads is tied to the number of CPU cores.

We only have a boss (mainReactor) and a worker (subReactor). Normally there is also a separate thread pool for the real business logic, because the worker is meant for reading and decoding data, and it is not appropriate to run slow business logic such as database access on it. Our scenario is similar to Redis, where processing is purely in memory, so we do not use another thread pool.

package org.ifool.niodemo.redis.redis6;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.buffer.ByteBuf;

import io.netty.buffer.Unpooled;

import io.netty.channel.*;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.handler.codec.LengthFieldBasedFrameDecoder;

import org.ifool.niodemo.redis.Util;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.util.Map;

import java.util.concurrent.*;

import java.util.concurrent.atomic.AtomicInteger;

public class RedisServer6 {

//Global cache

public static Map<String,String> cache = new ConcurrentHashMap<String,String>();

public static void main(String[] args) throws IOException, InterruptedException {

//Thread pool for handling accept events

EventLoopGroup bossGroup = new NioEventLoopGroup(0, new ThreadFactory() {

AtomicInteger index = new AtomicInteger(0);

public Thread newThread(Runnable r) {

return new Thread(r,"netty-boss-"+index.getAndIncrement());

}

});

//Thread pool for processing read events

EventLoopGroup workerGroup = new NioEventLoopGroup(0, new ThreadFactory() {

AtomicInteger index = new AtomicInteger(0);

public Thread newThread(Runnable r) {

return new Thread(r,"netty-worker-"+index.getAndIncrement());

}

});

ServerBootstrap bootstrap = new ServerBootstrap();

bootstrap.group(bossGroup,workerGroup)

.channel(NioServerSocketChannel.class)

.option(ChannelOption.SO_BACKLOG,50)

.childHandler(new ChildChannelHandler());

ChannelFuture future = bootstrap.bind(8888).sync();

future.channel().closeFuture().sync();

bossGroup.shutdownGracefully();

workerGroup.shutdownGracefully();

}

}

/**This class is called by netty-worker**/

class ChildChannelHandler extends ChannelInitializer<SocketChannel> {

protected void initChannel(SocketChannel socketChannel) throws Exception {

//Split the byte stream into frames with a LengthFieldBasedFrameDecoder and pass each frame to RequestHandler

socketChannel.pipeline()

.addLast(new RedisDecoder(127,0,1))

.addLast(new RequestHandler());

}

}

class RequestHandler extends ChannelInboundHandlerAdapter {

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

ByteBuf buf = (ByteBuf)msg;

int len = buf.readableBytes() - 1;

int lenField = buf.readByte();

if(len != lenField) {

ByteBuf resp = Unpooled.copiedBuffer("2|bad cmd".getBytes());

ctx.write(resp);

return; //Do not process a malformed request any further

}

byte[] req = new byte[len];

buf.readBytes(req,0,len);

byte[] response = Util.processRequest(RedisServer6.cache,req,len,true);

ByteBuf resp = Unpooled.copiedBuffer(response);

ctx.write(resp);

}

@Override

public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {

ctx.flush();

}

@Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {

ctx.close();

}

}

class RedisDecoder extends LengthFieldBasedFrameDecoder {

public RedisDecoder(int maxFrameLength, int lengthFieldOffset, int lengthFieldLength) {

super(maxFrameLength, lengthFieldOffset, lengthFieldLength);

}

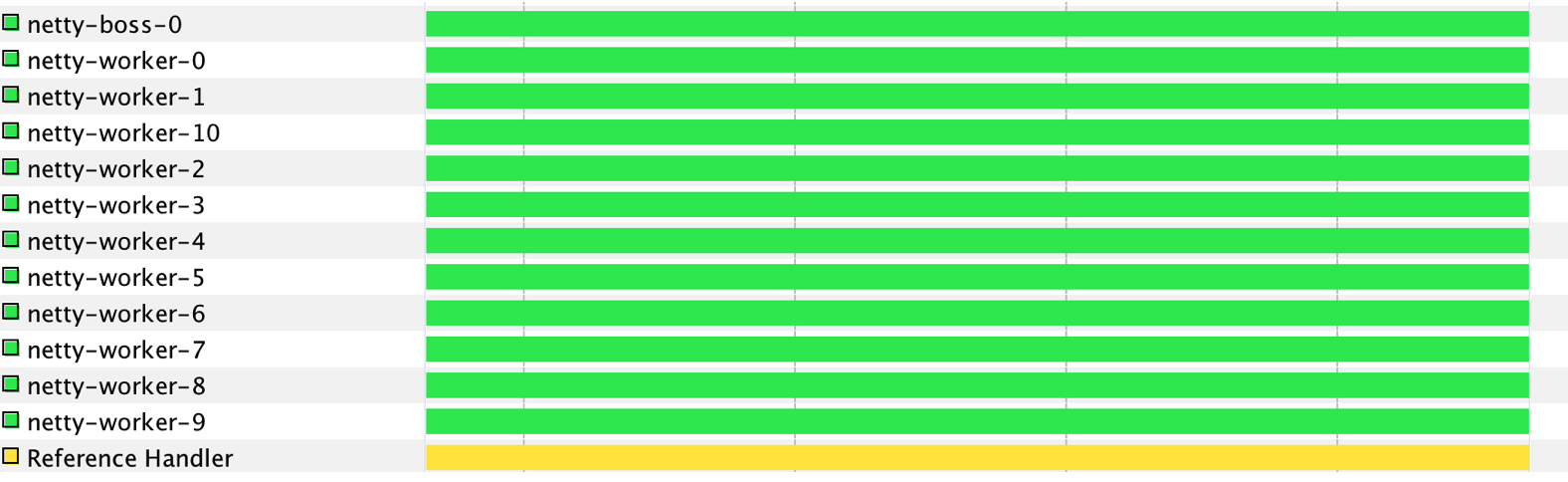

}As you can see, a boss thread and 10 worker threads are generated after running.

The Netty version is more stable. 10 threads each writing 10,000 times without pause also take about 3 seconds, and there is no need to worry about the number of threads.

Summary

Network IO models are hard to understand from concepts alone; working through examples gives a much deeper understanding. Let's summarize by answering a question:

Why can Redis achieve tens of thousands of TPS with a single thread?

Let's break down how Redis processes a request:

(1) Read the raw data

(2) Parse and process the data (business logic)

(3) Write the response data

Reading data is done through IO multiplexing, which is implemented with epoll underneath. Compared with select (Linux's select system call, not Java NIO's Selector.select), epoll has the following two advantages:

First, it finds ready sockets more efficiently: even with millions of connections, it only returns the connections that have events, whereas select has to scan all of them.

Second, the set of monitored sockets is registered with the kernel once through epoll_ctl, so it does not have to be copied from user space into the kernel on every call, as select requires. epoll is often said to share memory between kernel and user space through mmap, but the Linux implementation does not do that: the received bytes are still copied from the kernel socket buffer into user space by read. By the time epoll reports the socket as readable, the data is already in memory, so this copy is cheap.

From the above, it can be concluded that reading data is very fast.

Next comes data processing, which is the essential reason a single thread is enough. Redis's business logic is pure in-memory operation that takes nanoseconds to microseconds, so its time can be ignored. A complex web application whose business logic reads databases and calls other modules cannot use a single thread in this way.

Similarly, writing data is fast: once the result is computed, it is placed in a user-space buffer and handed to the operating system with a write call.

So the premise for Redis supporting tens of thousands of TPS on a single thread is that every request is an in-memory operation that takes very little time. If one request is slow, however, it blocks the requests behind it. Suppose 99.99% of requests take less than 1 ms and 0.01% take 1 s: in the single-threaded model, while a 1 s request is being processed, all the 1 ms requests that arrive have to wait in the queue.
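The queuing effect can be estimated with a small simulation. The sketch below is only an illustration: the class name, the 2 ms arrival interval, and the number of requests are assumptions chosen for the example, not measurements from Redis.

```java
// Hypothetical demo class, not part of the original project.
class SingleThreadQueueDemo {

    // Returns the latency (finish time minus arrival time) of every request
    // when one thread serves them strictly in arrival order.
    static long[] latencies(long[] arrivalMs, long[] serviceMs) {
        long[] result = new long[arrivalMs.length];
        long free = 0; // time at which the single thread becomes idle
        for (int i = 0; i < arrivalMs.length; i++) {
            long start = Math.max(free, arrivalMs[i]);
            free = start + serviceMs[i];
            result[i] = free - arrivalMs[i];
        }
        return result;
    }

    public static void main(String[] args) {
        int n = 2000;
        long[] arrival = new long[n];
        long[] service = new long[n];
        for (int i = 0; i < n; i++) {
            arrival[i] = 2L * i; // assumed: one request every 2 ms
            service[i] = 1;      // normal request: 1 ms
        }
        service[0] = 1000;       // one slow request: 1 s

        int delayed = 0;
        for (long latency : latencies(arrival, service)) {
            if (latency > 1) {
                delayed++;
            }
        }
        System.out.println("requests slower than 1 ms: " + delayed);
    }
}
```

Under these assumptions, a single slow request delays a long run of fast requests behind it, because the backlog only shrinks as quickly as the gap between the arrival rate and the service rate allows.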