Use of kinect2 in VS

- 1. Download and debug the kinectV2 software development kit

- 2. Download and installation of VS2013

- 3. Installation configuration of opencv

- 3.1 Configuring environment variables

- 3.2 opencv Configuration in VS

- 3.3 New vs Project Test Configuration Successful

- 4. Configuration of Kinectv2 in VS (used in conjunction with opencv)

- 5. Configuration of PCL in VS

- 5.1 Download and Install

- 5.2 Configuring environment variables

- Configuration of 5.3 PCL in VS

- Small Test for 5.4 PCL

- 5.5 PCL combined with opencv to read kinectv2 point cloud pictures in VS

- 5.6 PCL combined with opencv to save kinectv2 point cloud pictures in VS

- 6. A series of issues encountered by VS2013 in conjunction with pcl in the Storage Point Cloud process

Foreword: Record your previous notes

1. Download and debug the kinectV2 software development kit

KitSDK-v2.0_1409-Setup Official Download Address

Official document for the kinect for Windows version 2.0 SDK

kinect2 complete disassembly

Kinect Data Extraction and Coordinate Transformation

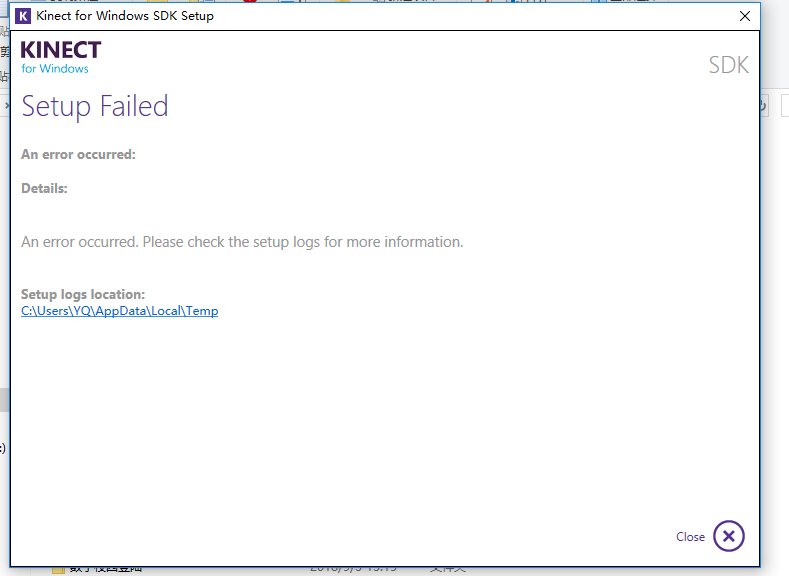

1.1 Installation

After downloading the SDK package, it is easy to install it by double-clicking, then restart your computer as prompted. If the installation process shows the following picture, please reset your computer (if the installation fails after the system has been reset, the computer system has not been successfully reset, please reconfigure it again. This happened before, and then reconfigure itOkay)

Note: When this happened before, I went online to find a solution, mostly to uninstall all the SDK packages that I just downloaded, and then download them again. Some people on the Internet succeeded, but after I tried, the same problem happened. Later, I thought I had uninstalled a lot of things on my computer that didn't look good. I guess it happened at that time.Problem, I guess Microsoft's own driver should be uninstalled along with it.

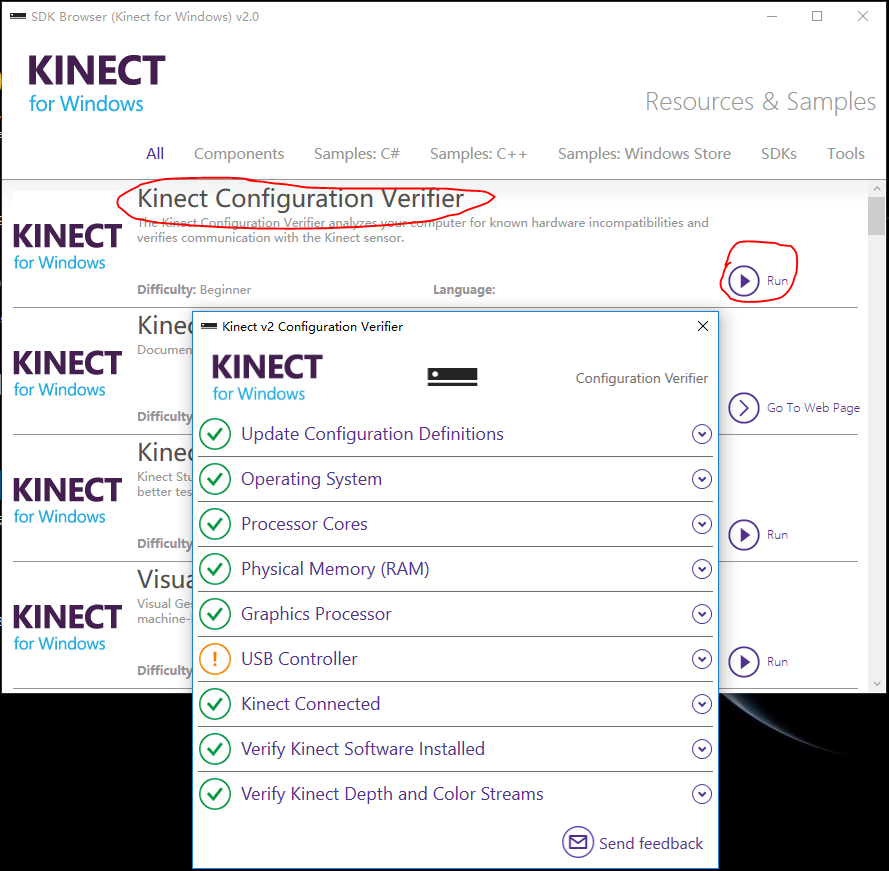

1.2 Debugging

Connecting kinectv2 to USB3.0 (computer blue port) will react to the camera, as win10 will automatically install the main driver of kinectv2.Open the SDK Browser and open the camera's self-test program. The picture below shows that kinectv2 is connected.

Note: The exclamation mark on the USB line indicates that the contact is bad and does not affect other debugging of the camera.

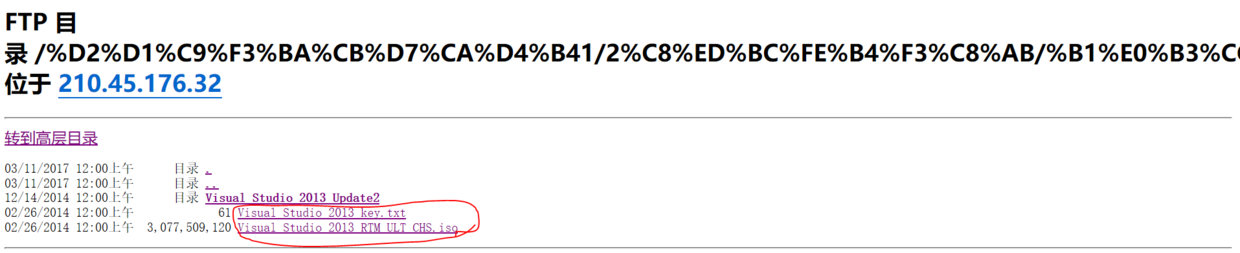

2. Download and installation of VS2013

2.1 Download

VS2013 Download Address

VS2013 Official Document

Note: I only downloaded the flagship version and key circled in the screenshot (to register the product). If I can't open the link, it's probably because I chose the school download address for the convenience and faster download. You can also download it from the WeChat public number "Software Installation Housekeeper" (good public number, highly recommended)

2.2 Installation

Refer to the tutorial in the WeChat Public Number Software Installation Housekeeper

3. Installation configuration of opencv

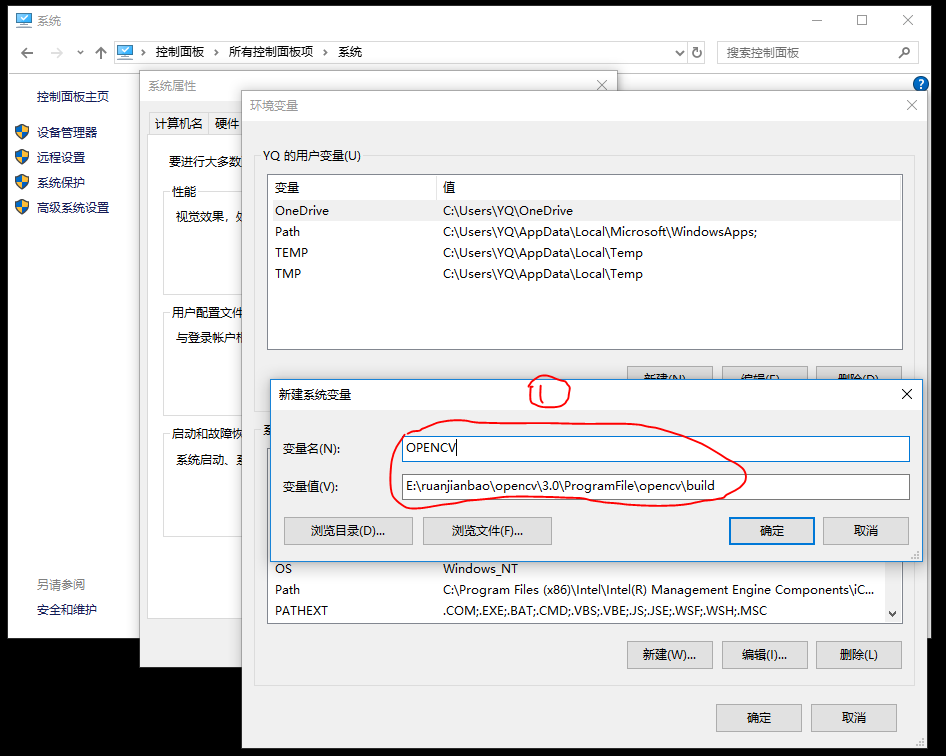

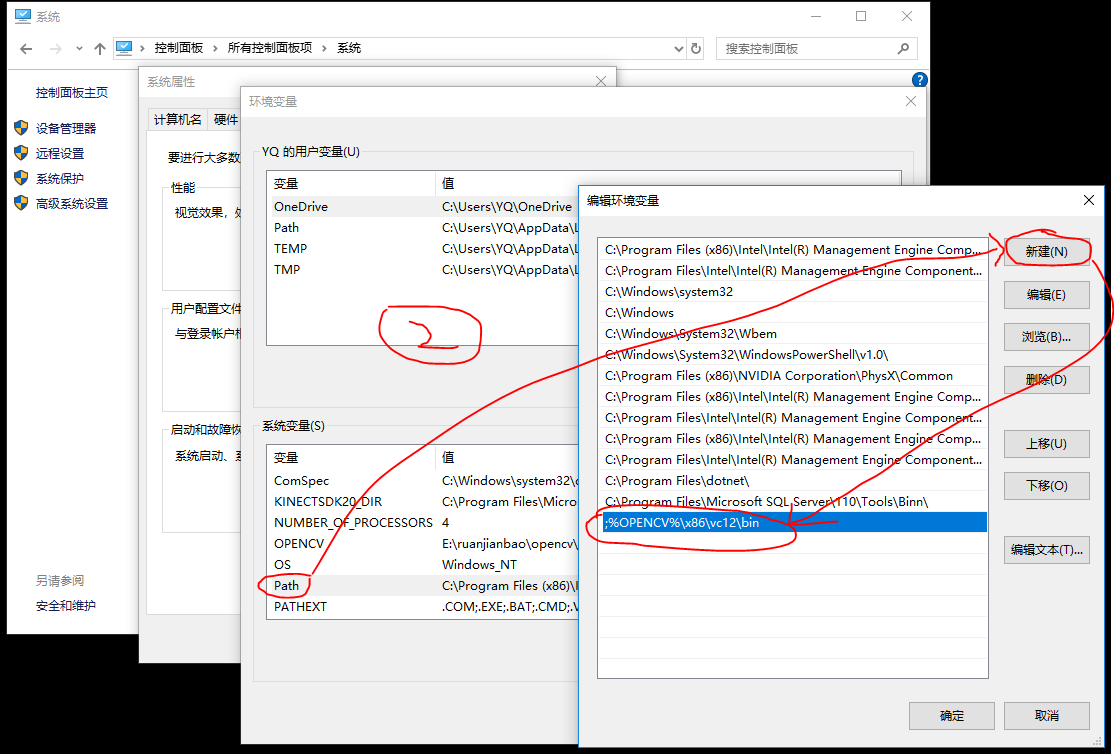

3.1 Configuring environment variables

Control Panel_System_Advanced System Settings_Environment Variables_New System Variables

Control Panel_System_Advanced System Settings_Environment Variables_Path

Note:

- x86 and x64 represent 32-bit and 64-bit VS projects, vc10, vc11, and vc12 represent the compiler versions used by Visual Studio for VS2010, VS2012, and VS2013, respectively.

- Restart the computer after setting the environment variables to ensure that the system environment variables take effect immediately and avoid a series of Path-Related problems.

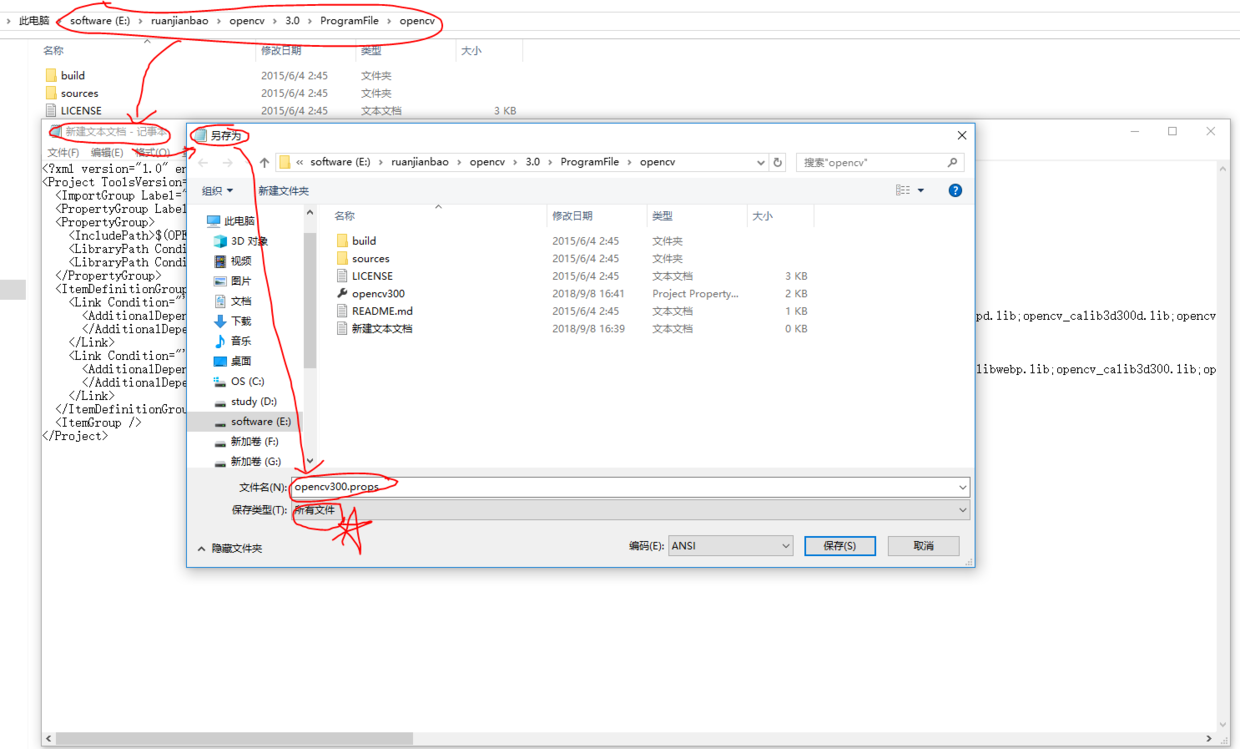

3.2 opencv Configuration in VS

Add the attribute table of opencv3.0 to the text file first (as shown below)

<?xml version="1.0" encoding="utf-8"?>

<Project ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">

<ImportGroup Label="PropertySheets" />

<PropertyGroup Label="UserMacros" />

<PropertyGroup>

<IncludePath>$(OPENCV)\include;$(IncludePath)</IncludePath>

<LibraryPath Condition="'$(Platform)'=='Win32'">$(OPENCV)\x86\vc12\lib;$(OPENCV)\x86\vc12\staticlib;$(LibraryPath)</LibraryPath>

<LibraryPath Condition="'$(Platform)'=='X64'">$(OPENCV)\x64\vc12\lib;$(OPENCV)\x64\vc12\staticlib;$(LibraryPath)</LibraryPath>

</PropertyGroup>

<ItemDefinitionGroup>

<Link Condition="'$(Configuration)'=='Debug'">

<AdditionalDependencies>opencv_ts300d.lib;opencv_world300d.lib;IlmImfd.lib;libjasperd.lib;libjpegd.lib;libpngd.lib;libtiffd.lib;libwebpd.lib;opencv_calib3d300d.lib;opencv_core300d.lib;opencv_features2d300d.lib;opencv_flann300d.lib;opencv_highgui300d.lib;opencv_imgcodecs300d.lib;opencv_imgproc300d.lib;opencv_ml300d.lib;opencv_objdetect300d.lib;opencv_photo300d.lib;opencv_shape300d.lib;opencv_stitching300d.lib;opencv_superres300d.lib;opencv_ts300d.lib;opencv_video300d.lib;opencv_videoio300d.lib;opencv_videostab300d.lib;zlibd.lib;%(AdditionalDependencies)

</AdditionalDependencies>

</Link>

<Link Condition="'$(Configuration)'=='Release'">

<AdditionalDependencies>opencv_ts300.lib;opencv_world300.lib;IlmImf.lib;ippicvmt.lib;libjasper.lib;libjpeg.lib;libpng.lib;libtiff.lib;libwebp.lib;opencv_calib3d300.lib;opencv_core300.lib;opencv_features2d300.lib;opencv_flann300.lib;opencv_highgui300.lib;opencv_imgcodecs300.lib;opencv_imgproc300.lib;opencv_ml300.lib;opencv_objdetect300.lib;opencv_photo300.lib;opencv_shape300.lib;opencv_stitching300.lib;opencv_superres300.lib;opencv_ts300.lib;opencv_video300.lib;opencv_videoio300.lib;opencv_videostab300.lib;zlib.lib;%(AdditionalDependencies)

</AdditionalDependencies>

</Link>

</ItemDefinitionGroup>

<ItemGroup />

</Project>

Note:

Among them, using text files to add the content of the attribute table to it, and then to generate the attribute table process, I have found a number of cases on the Internet, saying that adding content to the text file directly changes the suffix name to the props format, but I can't do that here. After changing the suffix name, you will see that its properties are still in the txt format, which really makes it easier for me to change the suffix name to the props format.Sorry, I wasted a lot of time on this, but I finally found the right way. Here's a screenshot of my process to help you avoid detours. When you save as, you must remember to choose the save type "All Files" (I deliberately drew stars in the screenshot).

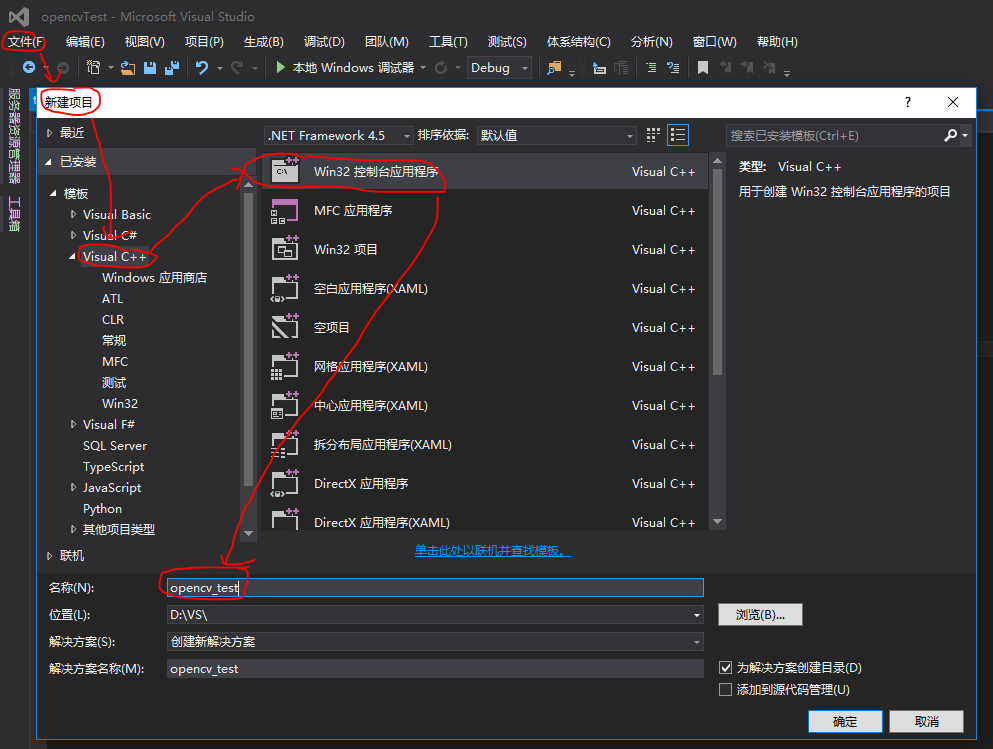

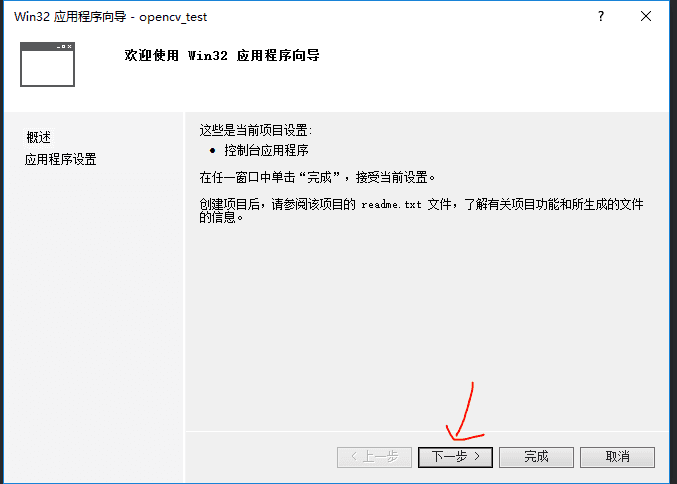

3.3 New vs Project Test Configuration Successful

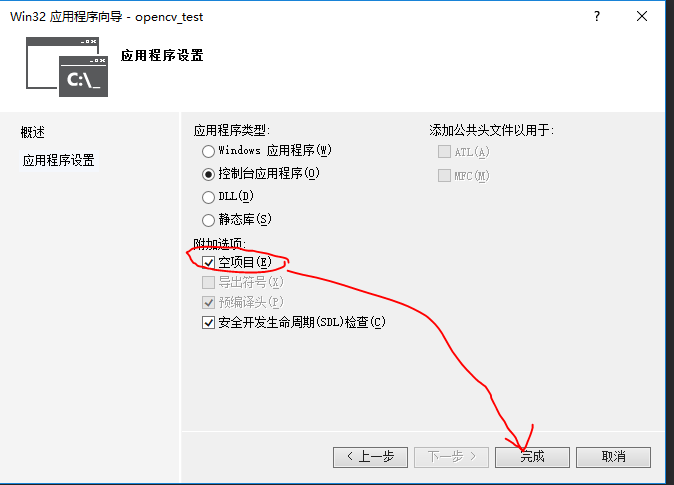

Open VS2013 File New Project Visual C++ Win32 Console Application New Project Name opencv_test OK Next Step Empty Project Check Finish

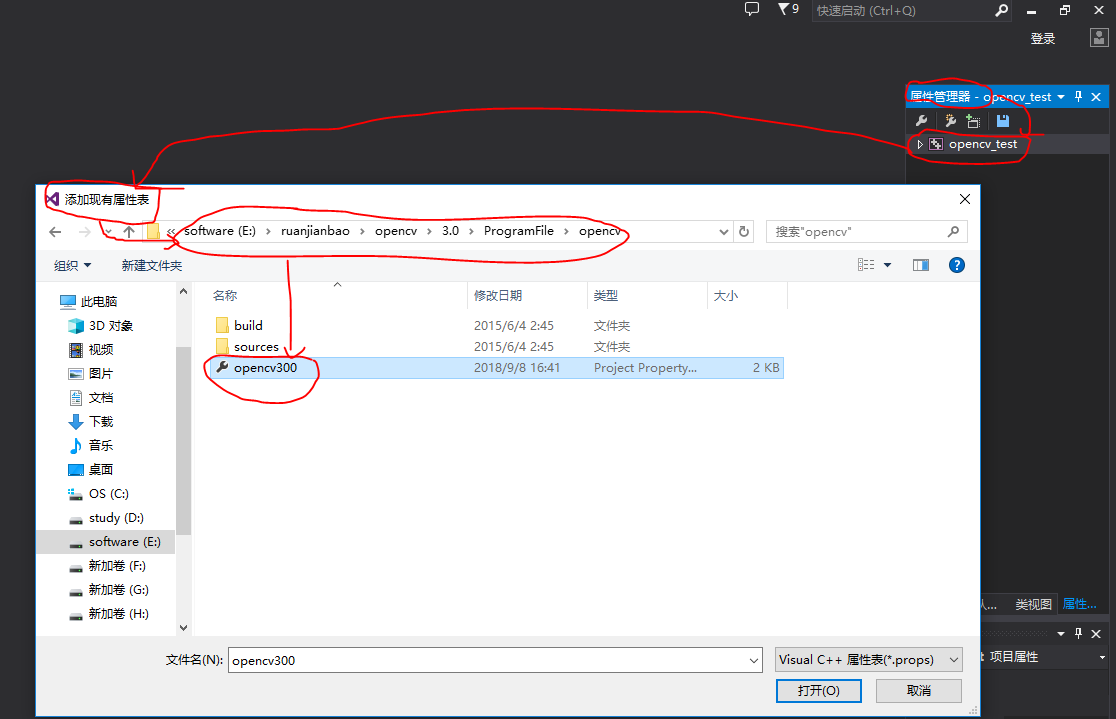

Search Bar Search Attribute Manager Right-click opencv_test Add Existing Attribute Table Find Attribute Table Path Open

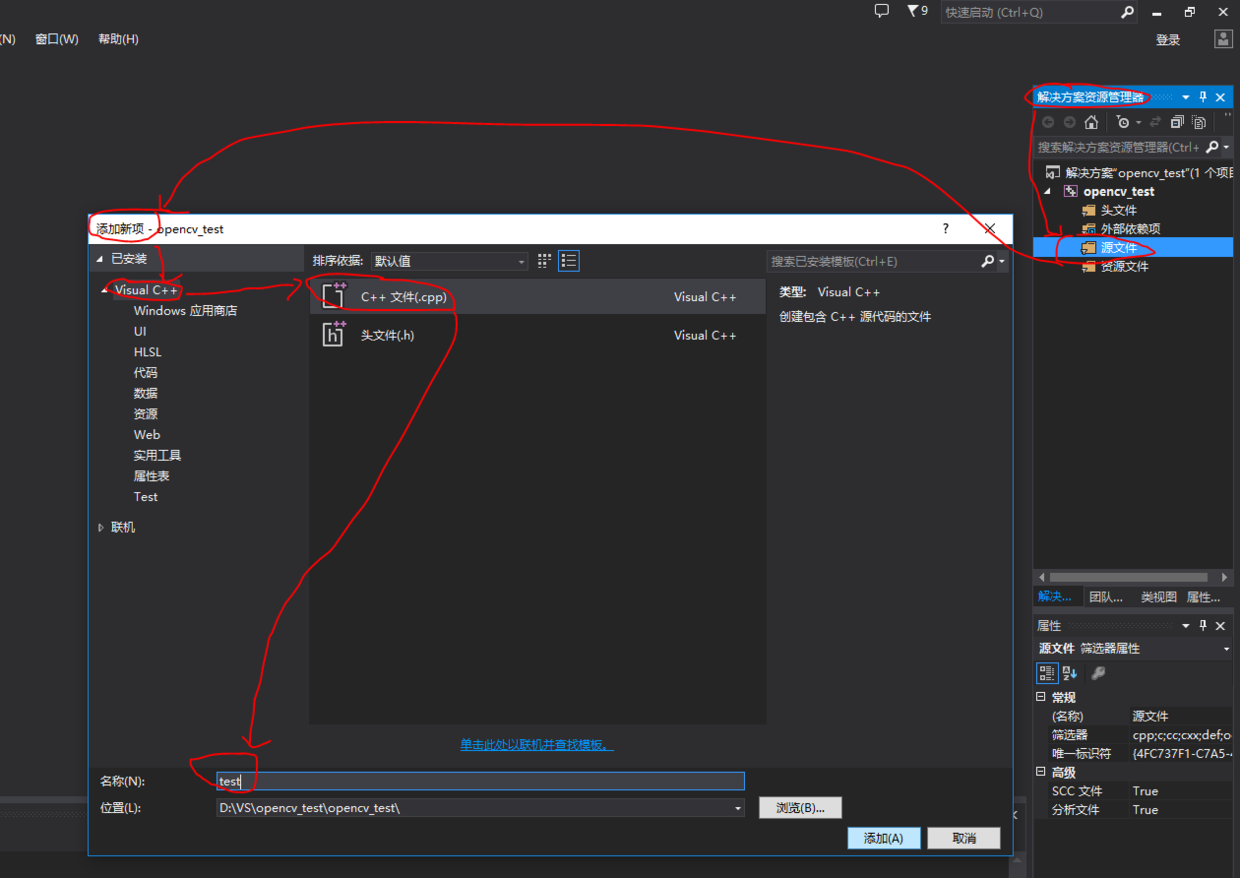

Search bar searches for "Solution Explorer" (Ctrl+Alt+L) Right-click Source File_Add_New Item_Visual C++C++ File_Source File named test (will automatically add suffix)

Add the following code to your program

#include <opencv2\opencv.hpp>

#include <iostream>

#include <string>

using namespace cv;

using namespace std;

int main()

{

Mat img = imread("baobao.jpg");

if (img.empty())

{

cout << "error";

return -1;

}

imshow("baby: ", img);

waitKey();

return 0;

}

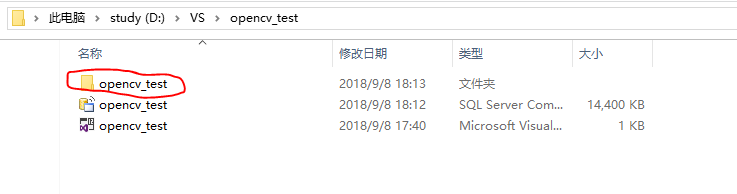

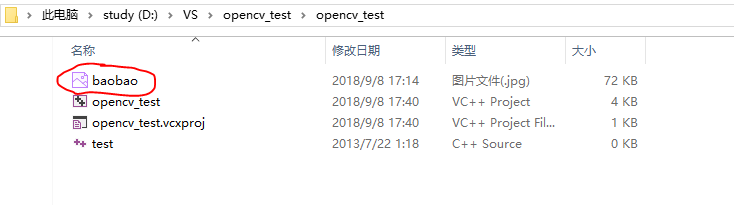

Find a folder with the same project name you created earlier and double-click to open Add a baobao picture

Back in the program, press F5 to start debugging and you will see the baby photo

4. Configuration of Kinectv2 in VS (used in conjunction with opencv)

4.1 New Project

Consistent with opencv

Configuration of 4.2 Kinectv 2

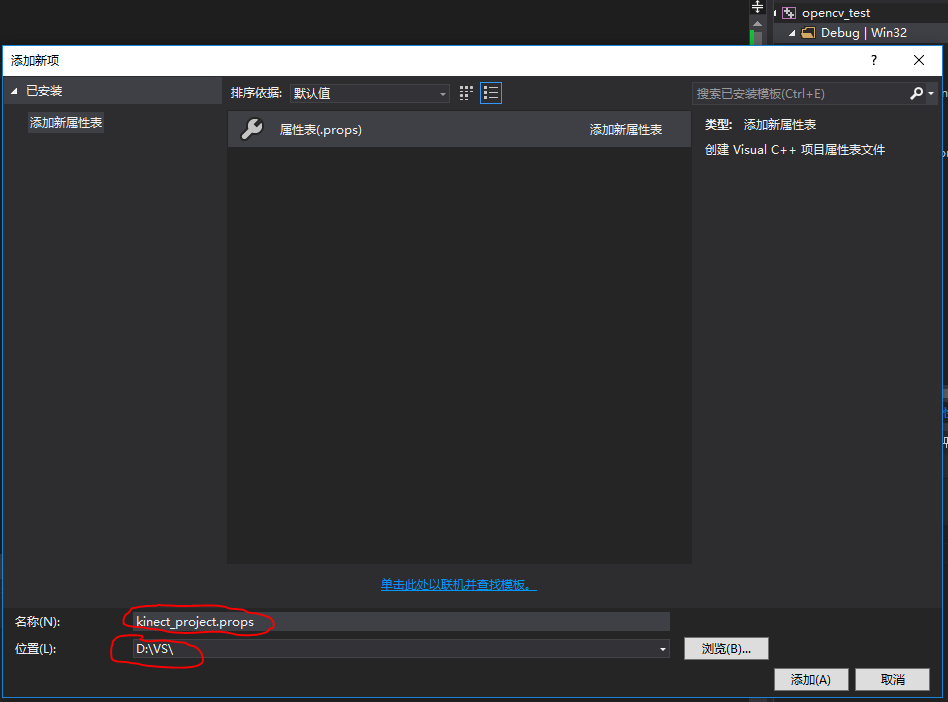

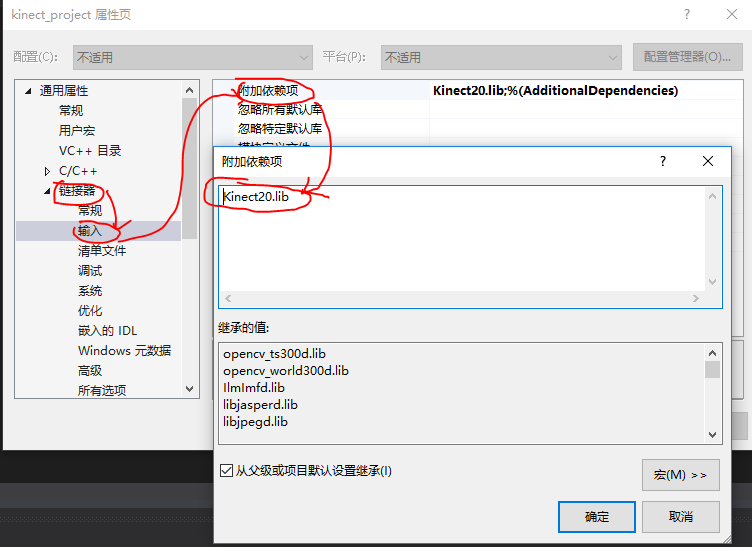

The search bar searches for Attribute Manager_Right-click Debug|Win32, chooses Add New Project Attribute Table_Name named kinect_project.props, and places the path in the home folder where the VS project is saved (you can directly "Add Existing Attribute Table" to find "kinect_project.props" when using kinectv2 later)

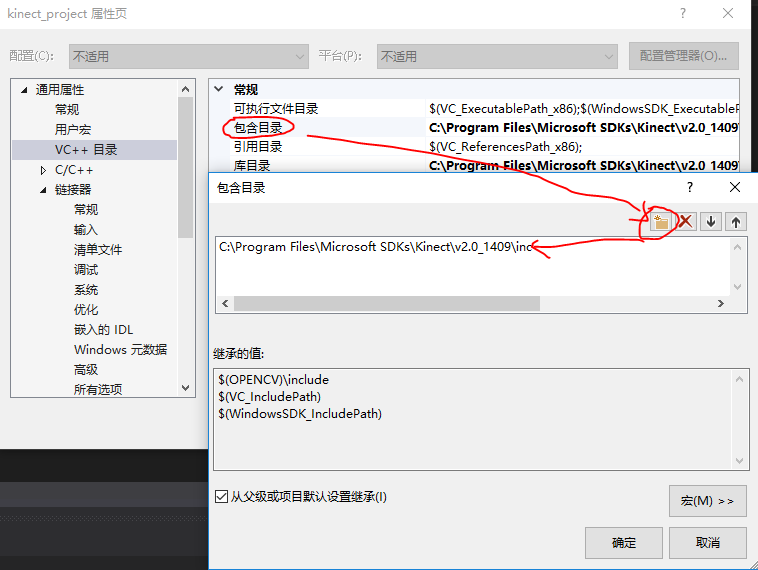

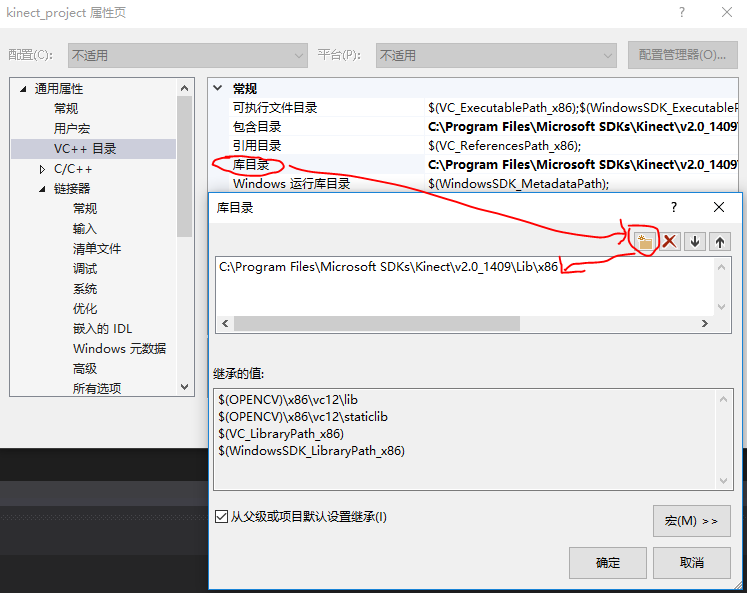

Double-click the kinect_project.props_VC++ directory_inc containing the directory Add kinect_library directory Add Kinect x 86 bit lib_connector input Add "Kinect20.lib"

4.3 Add the following code to your program

#Include <Kinect.h> //Kinect header file

#include <iostream>

#Include <opencv2\highgui.hpp>//opencv header file

using namespace std;

using namespace cv;

int main(void)

{

IKinectSensor * mySensor = nullptr;

GetDefaultKinectSensor(&mySensor); //Get Sensors

mySensor->Open(); //Switch on the sensor

IDepthFrameSource * mySource = nullptr; //Getting depth data

mySensor->get_DepthFrameSource(&mySource);

int height = 0, width = 0;

IFrameDescription * myDescription = nullptr; //Resolution to obtain depth data

mySource->get_FrameDescription(&myDescription);

myDescription->get_Height(&height);

myDescription->get_Width(&width);

myDescription->Release();

IDepthFrameReader * myReader = nullptr;

mySource->OpenReader(&myReader); //Open Reader for Depth Data

IDepthFrame * myFrame = nullptr;

Mat temp(height, width, CV_16UC1); //Establish Image Matrix

Mat img(height, width, CV_8UC1);

while (1)

{

if (myReader->AcquireLatestFrame(&myFrame) == S_OK) //Reader tries to get the latest frame depth data, puts it into the depth frame, and determines if it is successfully obtained

{

myFrame->CopyFrameDataToArray(height * width, (UINT16 *)temp.data); //Save the data in a 16-bit image matrix first

temp.convertTo(img, CV_8UC1, 255.0 / 4500); //Convert 16 Bits to 8 Bits

imshow("TEST", img);

myFrame->Release();

}

if (waitKey(30) == VK_ESCAPE)

break;

}

myReader->Release(); //Release unused variables and turn off sensors

mySource->Release();

mySensor->Close();

mySensor->Release();

return 0;

}

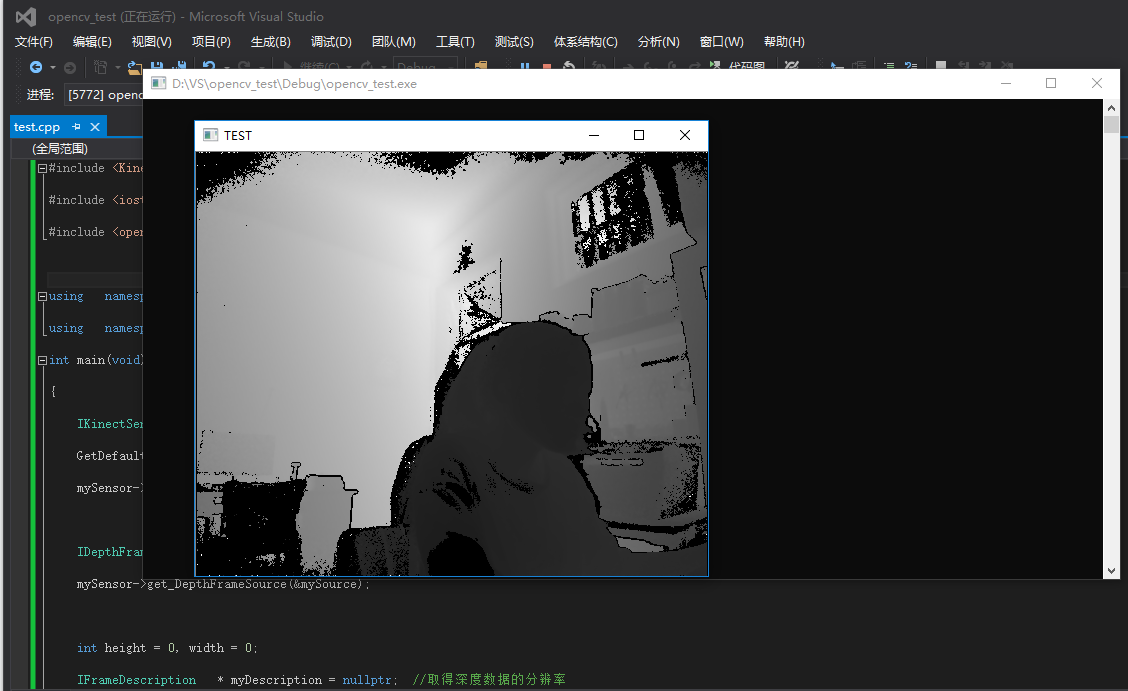

Back in the program, press F5 to start debugging and you will see the depth image:

Or add the following programs to get Kinect color, depth, and infrared maps

#include <Kinect.h>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

using namespace cv;

using namespace std;

// Safe Release Pointer

template<class Interface>

inline void SafeRelease(Interface *& pInterfaceToRelease)

{

if (pInterfaceToRelease != NULL)

{

pInterfaceToRelease->Release();

pInterfaceToRelease = NULL;

}

}

int main()

{

// Get Kinect Devices

IKinectSensor* m_pKinectSensor;

HRESULT hr;

hr = GetDefaultKinectSensor(&m_pKinectSensor);

if (FAILED(hr))

{

return hr;

}

IMultiSourceFrameReader* m_pMultiFrameReader = NULL;

if (m_pKinectSensor)

{

hr = m_pKinectSensor->Open();

if (SUCCEEDED(hr))

{

// Get Multiple Data Sources to Reader

hr = m_pKinectSensor->OpenMultiSourceFrameReader( FrameSourceTypes::FrameSourceTypes_Color | FrameSourceTypes::FrameSourceTypes_Infrared | FrameSourceTypes::FrameSourceTypes_Depth,

&m_pMultiFrameReader);

}

}

if (!m_pKinectSensor || FAILED(hr))

{

return E_FAIL;

}

// Three data frames and references

IDepthFrameReference* m_pDepthFrameReference = NULL;

IColorFrameReference* m_pColorFrameReference = NULL;

IInfraredFrameReference* m_pInfraredFrameReference = NULL;

IInfraredFrame* m_pInfraredFrame = NULL;

IDepthFrame* m_pDepthFrame = NULL;

IColorFrame* m_pColorFrame = NULL;

// Three picture formats

Mat i_rgb(1080, 1920, CV_8UC4); //Note: This must be a 4-channel graph. Kinect data can only be sent in Bgra format

Mat i_depth(424, 512, CV_8UC1);

Mat i_ir(424, 512, CV_16UC1);

UINT16 *depthData = new UINT16[424 * 512];

IMultiSourceFrame* m_pMultiFrame = nullptr;

while (true)

{

// Get a new multisource data frame

hr = m_pMultiFrameReader->AcquireLatestFrame(&m_pMultiFrame);

if (FAILED(hr) || !m_pMultiFrame)

{

//cout << "!!!" << endl;

continue;

}

// Separating color, depth and infrared data from source data frames

if (SUCCEEDED(hr))

hr = m_pMultiFrame->get_ColorFrameReference(&m_pColorFrameReference);

if (SUCCEEDED(hr))

hr = m_pColorFrameReference->AcquireFrame(&m_pColorFrame);

if (SUCCEEDED(hr))

hr = m_pMultiFrame->get_DepthFrameReference(&m_pDepthFrameReference);

if (SUCCEEDED(hr))

hr = m_pDepthFrameReference->AcquireFrame(&m_pDepthFrame);

if (SUCCEEDED(hr))

hr = m_pMultiFrame->get_InfraredFrameReference(&m_pInfraredFrameReference);

if (SUCCEEDED(hr))

hr = m_pInfraredFrameReference->AcquireFrame(&m_pInfraredFrame);

// Copy color to picture

UINT nColorBufferSize = 1920 * 1080 * 4;

if (SUCCEEDED(hr))

hr = m_pColorFrame->CopyConvertedFrameDataToArray(nColorBufferSize, reinterpret_cast<BYTE*>(i_rgb.data), ColorImageFormat::ColorImageFormat_Bgra);

// Copy depth to picture

if (SUCCEEDED(hr))

{

hr = m_pDepthFrame->CopyFrameDataToArray(424 * 512, depthData);

for (int i = 0; i < 512 * 424; i++)

{

// 0-255 depth map, only 8 bits lower in depth data for visualization

BYTE intensity = static_cast<BYTE>(depthData[i] % 256);

reinterpret_cast<BYTE*>(i_depth.data)[i] = intensity;

}

// Actual 16-bit unsigned int data

//hr = m_pDepthFrame->CopyFrameDataToArray(424 * 512, reinterpret_cast<UINT16*>(i_depth.data));

}

// Copy infrared to picture

if (SUCCEEDED(hr))

{

hr = m_pInfraredFrame->CopyFrameDataToArray(424 * 512, reinterpret_cast<UINT16*>(i_ir.data));

}

// display

imshow("rgb", i_rgb);

if (waitKey(1) == VK_ESCAPE)

break;

imshow("depth", i_depth);

if (waitKey(1) == VK_ESCAPE)

break;

imshow("ir", i_ir);

if (waitKey(1) == VK_ESCAPE)

break;

// Release Resources

SafeRelease(m_pColorFrame);

SafeRelease(m_pDepthFrame);

SafeRelease(m_pInfraredFrame);

SafeRelease(m_pColorFrameReference);

SafeRelease(m_pDepthFrameReference);

SafeRelease(m_pInfraredFrameReference);

SafeRelease(m_pMultiFrame);

}

// Close windows, devices

cv::destroyAllWindows();

m_pKinectSensor->Close();

std::system("pause");

return 0;

}

5. Configuration of PCL in VS

Official Point Cloud Website

Reference Blog 1

Reference Blog 2

Reference Blog 3

Some ideas on how to find and use PCL Library Learning Resources

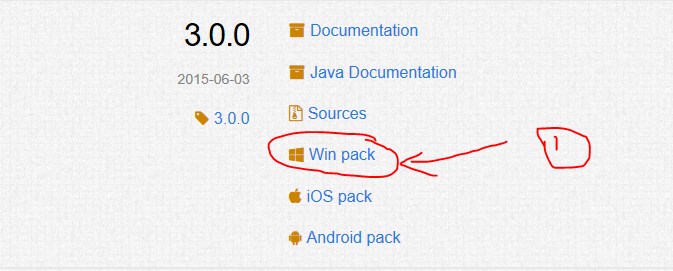

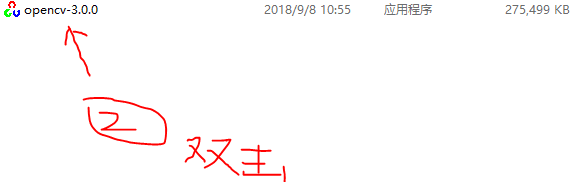

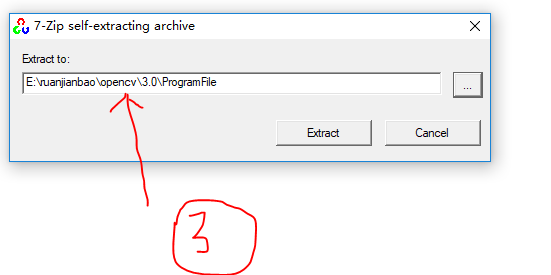

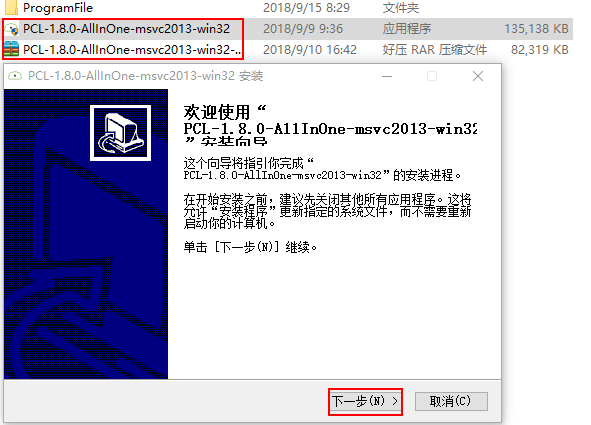

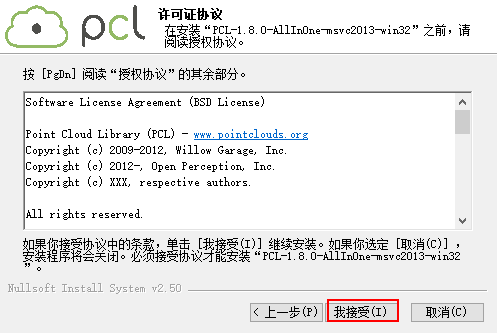

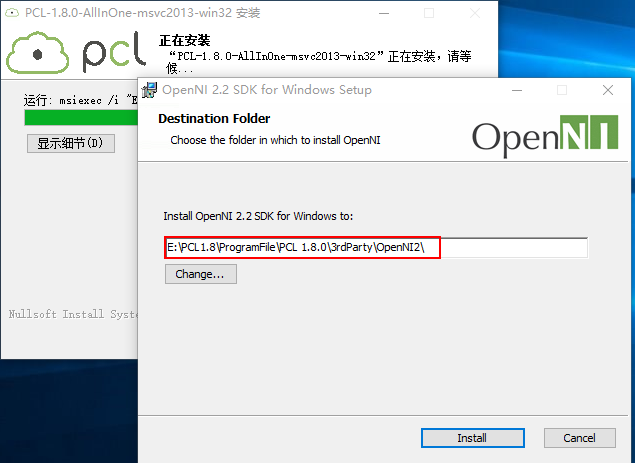

5.1 Download and Install

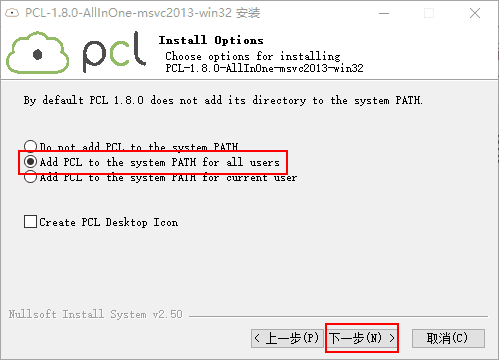

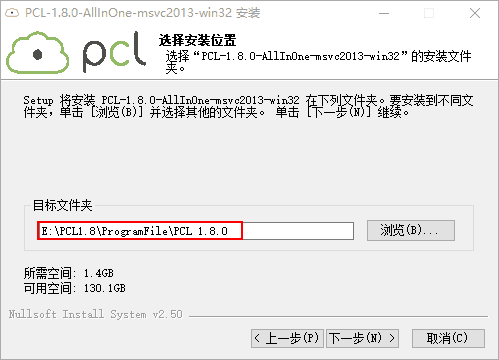

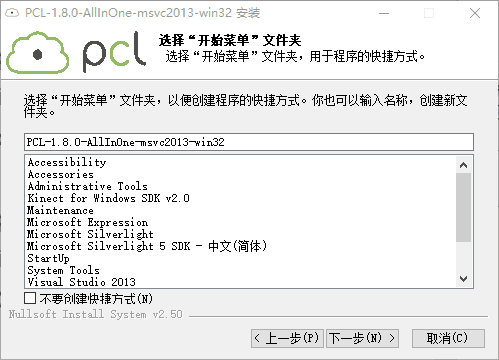

VS default configuration 32-bit project, download 32-bit PCL library, consistent with reference blog, except installation path is inconsistent.

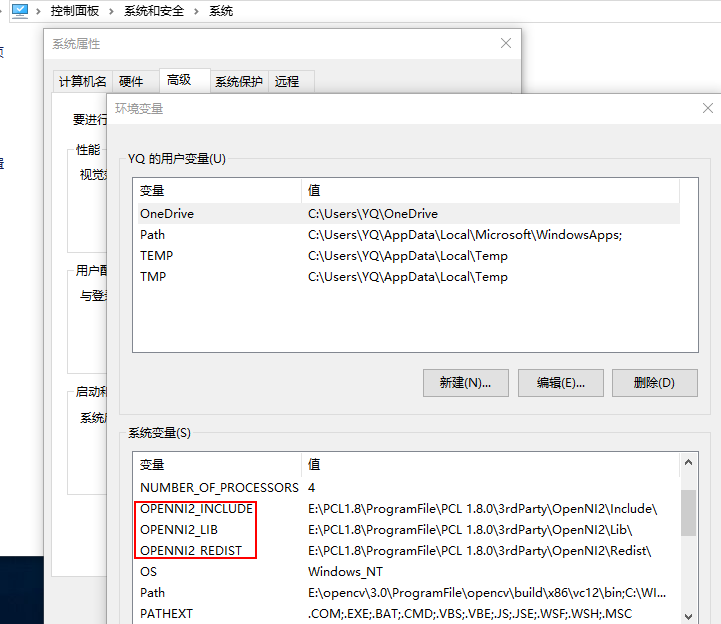

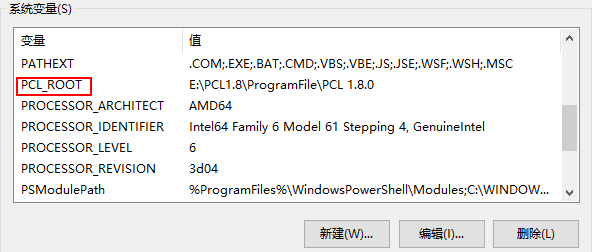

5.2 Configuring environment variables

After the PCL is installed, the system automatically configures the following for us

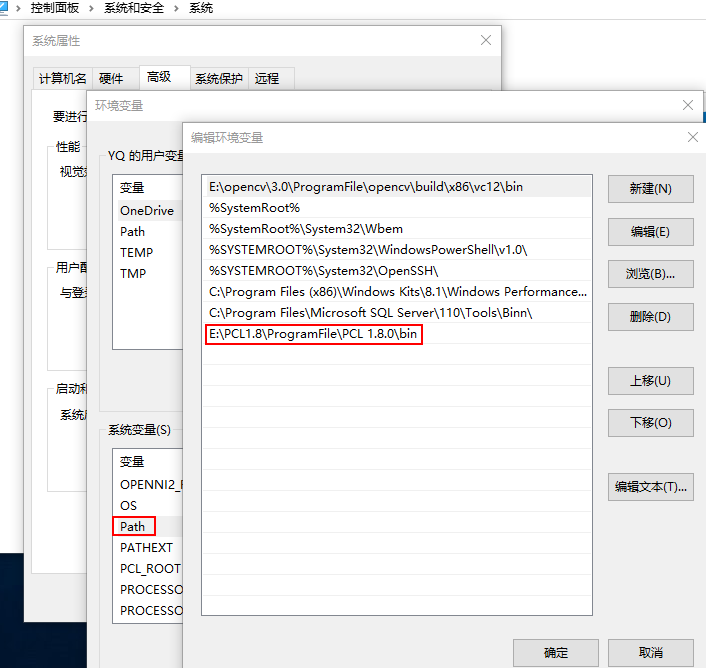

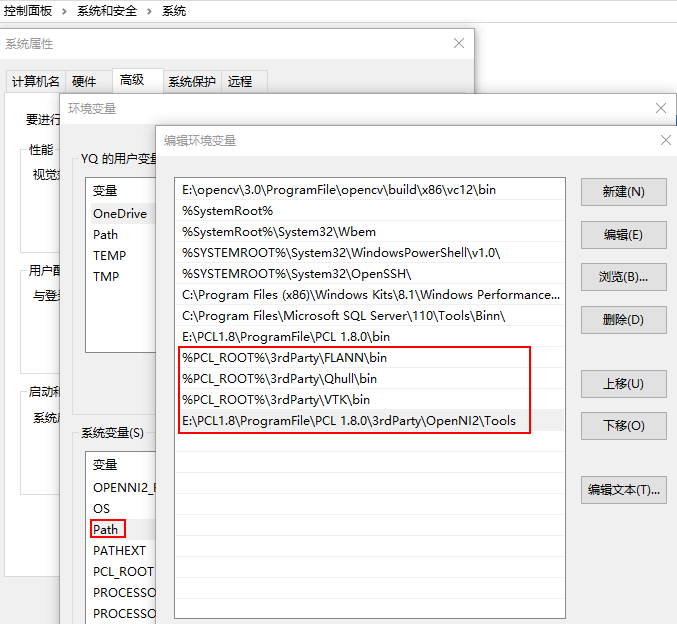

Next, similar to the opencv configuration, add the following to Path:

%PCL_ROOT%\3rdParty\FLANN\bin %PCL_ROOT%\3rdParty\Qhull\bin %PCL_ROOT%\3rdParty\VTK\bin E:\ruanjianbao\PCL\ProgramFile\PCL1.8.0\OpenNI2\Tools

Restart the computer after adding (remember to restart, otherwise the dll file I mentioned later can't be found)

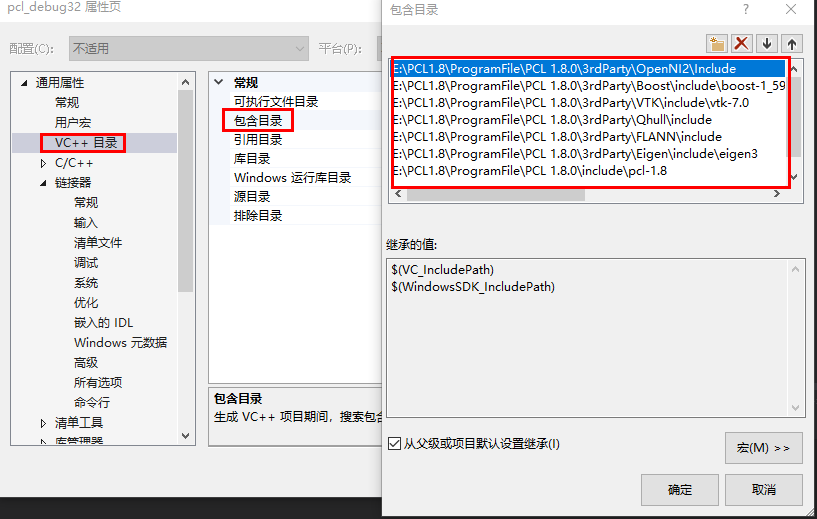

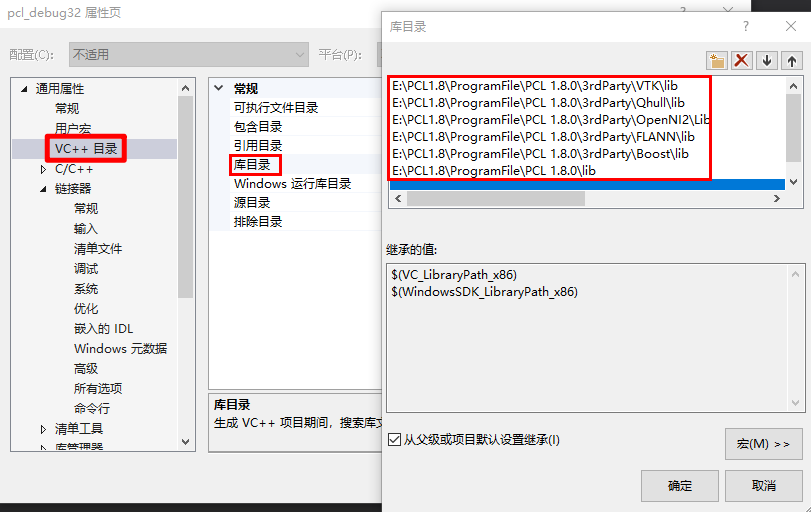

Configuration of 5.3 PCL in VS

New project pcl_test, same as before

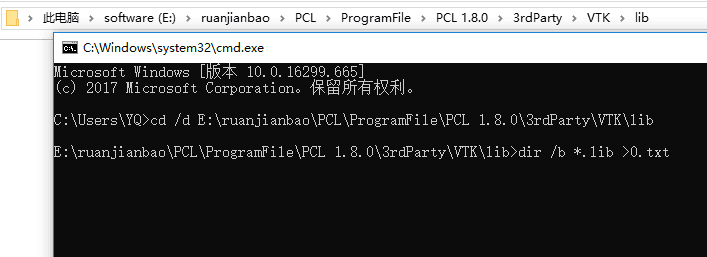

Tips for getting all the static link library file names under E:\ruanjianbao\PCL\ProgramFile\PCL 1.8.0\3rdParty\VTK\lib and storing them in text 0.txt:

- win+R

- cmd→Enter

- cd /d E:\ruanjianbao\PCL1.8\ProgramFile\PCL 1.8.0\3rdParty\VTK\lib →Enter

- dir /b *.lib >0.txt→Enter

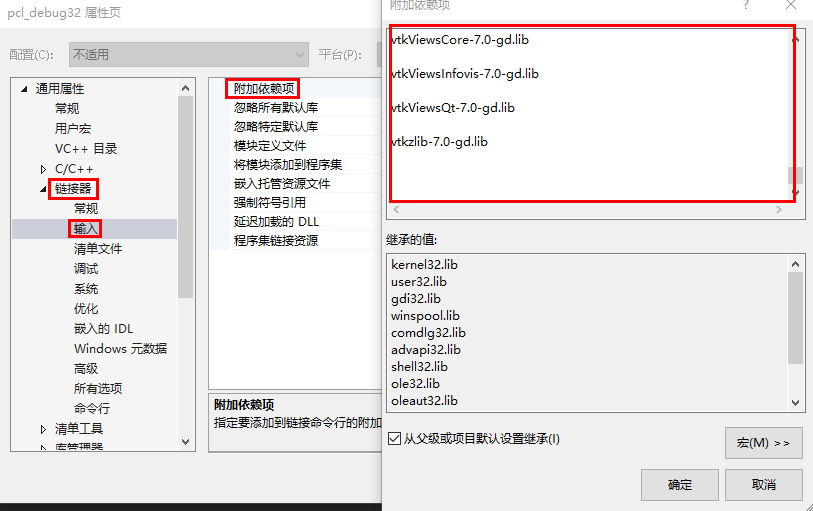

Add all the following dependencies

pcl_common_debug.lib pcl_features_debug.lib pcl_filters_debug.lib pcl_io_debug.lib pcl_io_ply_debug.lib pcl_kdtree_debug.lib pcl_keypoints_debug.lib pcl_ml_debug.lib pcl_octree_debug.lib pcl_outofcore_debug.lib pcl_people_debug.lib pcl_recognition_debug.lib pcl_registration_debug.lib pcl_sample_consensus_debug.lib pcl_search_debug.lib pcl_segmentation_debug.lib pcl_stereo_debug.lib pcl_surface_debug.lib pcl_tracking_debug.lib pcl_visualization_debug.lib libboost_atomic-vc120-mt-gd-1_59.lib libboost_chrono-vc120-mt-gd-1_59.lib libboost_container-vc120-mt-gd-1_59.lib libboost_context-vc120-mt-gd-1_59.lib libboost_coroutine-vc120-mt-gd-1_59.lib libboost_date_time-vc120-mt-gd-1_59.lib libboost_exception-vc120-mt-gd-1_59.lib libboost_filesystem-vc120-mt-gd-1_59.lib libboost_graph-vc120-mt-gd-1_59.lib libboost_iostreams-vc120-mt-gd-1_59.lib libboost_locale-vc120-mt-gd-1_59.lib libboost_log-vc120-mt-gd-1_59.lib libboost_log_setup-vc120-mt-gd-1_59.lib libboost_math_c99-vc120-mt-gd-1_59.lib libboost_math_c99f-vc120-mt-gd-1_59.lib libboost_math_c99l-vc120-mt-gd-1_59.lib libboost_math_tr1-vc120-mt-gd-1_59.lib libboost_math_tr1f-vc120-mt-gd-1_59.lib libboost_math_tr1l-vc120-mt-gd-1_59.lib libboost_mpi-vc120-mt-gd-1_59.lib libboost_prg_exec_monitor-vc120-mt-gd-1_59.lib libboost_program_options-vc120-mt-gd-1_59.lib libboost_random-vc120-mt-gd-1_59.lib libboost_regex-vc120-mt-gd-1_59.lib libboost_serialization-vc120-mt-gd-1_59.lib libboost_signals-vc120-mt-gd-1_59.lib libboost_system-vc120-mt-gd-1_59.lib libboost_test_exec_monitor-vc120-mt-gd-1_59.lib libboost_thread-vc120-mt-gd-1_59.lib libboost_timer-vc120-mt-gd-1_59.lib libboost_unit_test_framework-vc120-mt-gd-1_59.lib libboost_wave-vc120-mt-gd-1_59.lib libboost_wserialization-vc120-mt-gd-1_59.lib flann-gd.lib flann_cpp_s-gd.lib flann_s-gd.lib qhull-gd.lib qhullcpp-gd.lib qhullstatic-gd.lib qhullstatic_r-gd.lib qhull_p-gd.lib qhull_r-gd.lib vtkalglib-7.0-gd.lib vtkChartsCore-7.0-gd.lib vtkCommonColor-7.0-gd.lib vtkCommonComputationalGeometry-7.0-gd.lib vtkCommonCore-7.0-gd.lib vtkCommonDataModel-7.0-gd.lib vtkCommonExecutionModel-7.0-gd.lib vtkCommonMath-7.0-gd.lib vtkCommonMisc-7.0-gd.lib vtkCommonSystem-7.0-gd.lib vtkCommonTransforms-7.0-gd.lib vtkDICOMParser-7.0-gd.lib vtkDomainsChemistry-7.0-gd.lib vtkDomainsChemistryOpenGL2-7.0-gd.lib vtkexoIIc-7.0-gd.lib vtkexpat-7.0-gd.lib vtkFiltersAMR-7.0-gd.lib vtkFiltersCore-7.0-gd.lib vtkFiltersExtraction-7.0-gd.lib vtkFiltersFlowPaths-7.0-gd.lib vtkFiltersGeneral-7.0-gd.lib vtkFiltersGeneric-7.0-gd.lib vtkFiltersGeometry-7.0-gd.lib vtkFiltersHybrid-7.0-gd.lib vtkFiltersHyperTree-7.0-gd.lib vtkFiltersImaging-7.0-gd.lib vtkFiltersModeling-7.0-gd.lib vtkFiltersParallel-7.0-gd.lib vtkFiltersParallelImaging-7.0-gd.lib vtkFiltersProgrammable-7.0-gd.lib vtkFiltersSelection-7.0-gd.lib vtkFiltersSMP-7.0-gd.lib vtkFiltersSources-7.0-gd.lib vtkFiltersStatistics-7.0-gd.lib vtkFiltersTexture-7.0-gd.lib vtkFiltersVerdict-7.0-gd.lib vtkfreetype-7.0-gd.lib vtkGeovisCore-7.0-gd.lib vtkglew-7.0-gd.lib vtkhdf5-7.0-gd.lib vtkhdf5_hl-7.0-gd.lib vtkImagingColor-7.0-gd.lib vtkImagingCore-7.0-gd.lib vtkImagingFourier-7.0-gd.lib vtkImagingGeneral-7.0-gd.lib vtkImagingHybrid-7.0-gd.lib vtkImagingMath-7.0-gd.lib vtkImagingMorphological-7.0-gd.lib vtkImagingSources-7.0-gd.lib vtkImagingStatistics-7.0-gd.lib vtkImagingStencil-7.0-gd.lib vtkInfovisCore-7.0-gd.lib vtkInfovisLayout-7.0-gd.lib vtkInteractionImage-7.0-gd.lib vtkInteractionStyle-7.0-gd.lib vtkInteractionWidgets-7.0-gd.lib vtkIOAMR-7.0-gd.lib vtkIOCore-7.0-gd.lib vtkIOEnSight-7.0-gd.lib vtkIOExodus-7.0-gd.lib vtkIOExport-7.0-gd.lib vtkIOGeometry-7.0-gd.lib vtkIOImage-7.0-gd.lib vtkIOImport-7.0-gd.lib vtkIOInfovis-7.0-gd.lib vtkIOLegacy-7.0-gd.lib vtkIOLSDyna-7.0-gd.lib vtkIOMINC-7.0-gd.lib vtkIOMovie-7.0-gd.lib vtkIONetCDF-7.0-gd.lib vtkIOParallel-7.0-gd.lib vtkIOParallelXML-7.0-gd.lib vtkIOPLY-7.0-gd.lib vtkIOSQL-7.0-gd.lib vtkIOVideo-7.0-gd.lib vtkIOXML-7.0-gd.lib vtkIOXMLParser-7.0-gd.lib vtkjpeg-7.0-gd.lib vtkjsoncpp-7.0-gd.lib vtklibxml2-7.0-gd.lib vtkmetaio-7.0-gd.lib vtkNetCDF-7.0-gd.lib vtkNetCDF_cxx-7.0-gd.lib vtkoggtheora-7.0-gd.lib vtkParallelCore-7.0-gd.lib vtkpng-7.0-gd.lib vtkproj4-7.0-gd.lib vtkRenderingAnnotation-7.0-gd.lib vtkRenderingContext2D-7.0-gd.lib vtkRenderingContextOpenGL2-7.0-gd.lib vtkRenderingCore-7.0-gd.lib vtkRenderingFreeType-7.0-gd.lib vtkRenderingImage-7.0-gd.lib vtkRenderingLabel-7.0-gd.lib vtkRenderingLOD-7.0-gd.lib vtkRenderingOpenGL2-7.0-gd.lib vtkRenderingQt-7.0-gd.lib vtkRenderingVolume-7.0-gd.lib vtkRenderingVolumeOpenGL2-7.0-gd.lib vtksqlite-7.0-gd.lib vtksys-7.0-gd.lib vtktiff-7.0-gd.lib vtkverdict-7.0-gd.lib vtkViewsContext2D-7.0-gd.lib vtkViewsCore-7.0-gd.lib vtkViewsInfovis-7.0-gd.lib vtkViewsQt-7.0-gd.lib vtkzlib-7.0-gd.lib

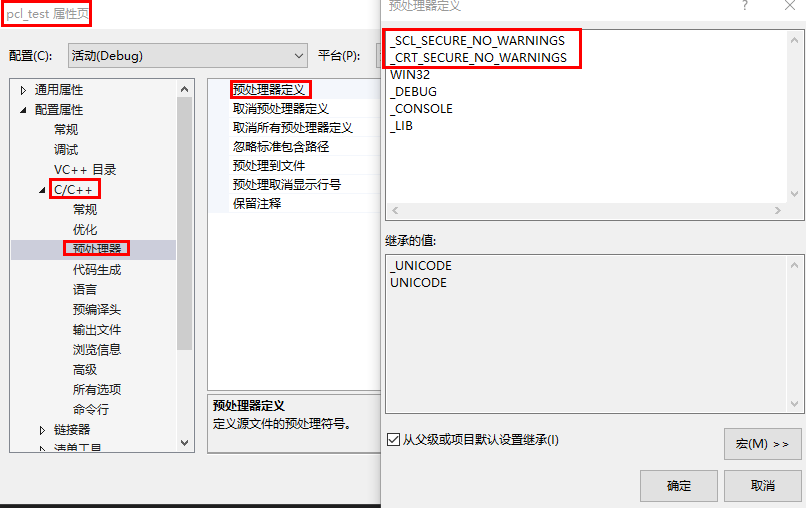

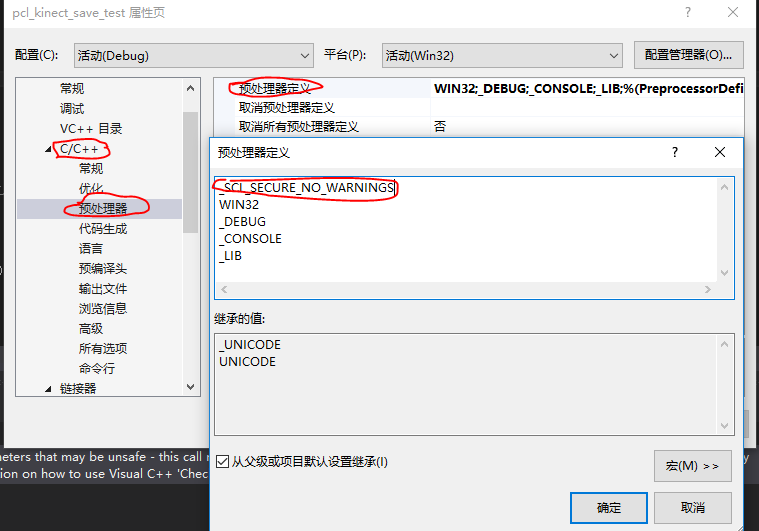

Add the following two items

_SCL_SECURE_NO_WARNINGS _CRT_SECURE_NO_WARNINGS

Note: If you do not perform this operation, the error mentioned later will occur.

Small Test for 5.4 PCL

#include <pcl/visualization/cloud_viewer.h> #include <iostream> #include <pcl/io/io.h> #include <pcl/io/pcd_io.h> int user_data; void viewerOneOff(pcl::visualization::PCLVisualizer& viewer) { viewer.setBackgroundColor(1.0, 0.5, 1.0); pcl::PointXYZ o; o.x = 1.0; o.y = 0; o.z = 0; viewer.addSphere(o, 0.25, "sphere", 0); std::cout << "i only run once" << std::endl; } void viewerPsycho(pcl::visualization::PCLVisualizer& viewer) { static unsigned count = 0; std::stringstream ss; ss << "Once per viewer loop: " << count++; viewer.removeShape("text", 0); viewer.addText(ss.str(), 200, 300, "text", 0); //FIXME: possible race condition here: user_data++; } int main() { pcl::PointCloud<pcl::PointXYZRGBA>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGBA>); pcl::io::loadPCDFile("my_point_cloud.pcd", *cloud); pcl::visualization::CloudViewer viewer("Cloud Viewer"); //blocks until the cloud is actually rendered viewer.showCloud(cloud); //use the following functions to get access to the underlying more advanced/powerful //PCLVisualizer //This will only get called once viewer.runOnVisualizationThreadOnce(viewerOneOff); //This will get called once per visualization iteration viewer.runOnVisualizationThread(viewerPsycho); while (!viewer.wasStopped()) { //you can also do cool processing here //FIXME: Note that this is running in a separate thread from viewerPsycho //and you should guard against race conditions yourself... user_data++; } return 0; }

5.5 PCL combined with opencv to read kinectv2 point cloud pictures in VS

#include <Kinect.h> #include <opencv2\opencv.hpp> #include <opencv2/highgui/highgui.hpp> #include <pcl/visualization/cloud_viewer.h> #include <pcl/visualization/pcl_visualizer.h> #include <pcl/point_cloud.h> #include <pcl/io/pcd_io.h> #include <pcl/point_types.h> using namespace cv; using namespace std; IKinectSensor* pSensor; ICoordinateMapper *pMapper; const int iDWidth = 512, iDHeight = 424;//Depth map size const int iCWidth = 1920, iCHeight = 1080;//Color Map Size CameraSpacePoint depth2xyz[iDWidth*iDHeight]; ColorSpacePoint depth2rgb[iCWidth*iCHeight]; void viewerOneOff(pcl::visualization::PCLVisualizer& viewer) { viewer.setBackgroundColor(1.0, 1.0, 1.0);//Set background color } //Start Kinect bool initKinect() { if (FAILED(GetDefaultKinectSensor(&pSensor))) return false; if (pSensor) { pSensor->get_CoordinateMapper(&pMapper); pSensor->Open(); cout << "Camera turned on" << endl; return true; } else return false; } //Get Depth Frame Mat DepthData() { IDepthFrameSource* pFrameSource = nullptr; pSensor->get_DepthFrameSource(&pFrameSource); IDepthFrameReader* pFrameReader = nullptr; pFrameSource->OpenReader(&pFrameReader); IDepthFrame* pFrame = nullptr; Mat mDepthImg(iDHeight, iDWidth, CV_16UC1); while (true) { if (pFrameReader->AcquireLatestFrame(&pFrame) == S_OK) { pFrame->CopyFrameDataToArray(iDWidth * iDHeight, reinterpret_cast<UINT16*>(mDepthImg.data)); cout << "Depth frame acquired" << endl; pFrame->Release(); return mDepthImg; break; } } } //Get color frames Mat RGBData() { IColorFrameSource* pFrameSource = nullptr; pSensor->get_ColorFrameSource(&pFrameSource); IColorFrameReader* pFrameReader = nullptr; pFrameSource->OpenReader(&pFrameReader); IColorFrame* pFrame = nullptr; Mat mColorImg(iCHeight, iCWidth, CV_8UC4); while (true) { if (pFrameReader->AcquireLatestFrame(&pFrame) == S_OK) { pFrame->CopyConvertedFrameDataToArray(iCWidth * iCHeight * 4, mColorImg.data, ColorImageFormat_Bgra); cout << "Getted color frame" << endl; pFrame->Release(); return mColorImg; break; } } } int main() { initKinect(); pcl::visualization::CloudViewer viewer("Cloud Viewer"); viewer.runOnVisualizationThreadOnce(viewerOneOff); pcl::PointCloud<pcl::PointXYZRGBA>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGBA>); Mat mColorImg; Mat mDepthImg; while (cv::waitKey(30) != VK_ESCAPE) { mColorImg = RGBData(); mDepthImg = DepthData(); imshow("RGB", mColorImg); pMapper->MapDepthFrameToColorSpace(iDHeight*iDWidth, reinterpret_cast<UINT16*>(mDepthImg.data), iDHeight*iDWidth, depth2rgb);//Depth Map to Color Mapping pMapper->MapDepthFrameToCameraSpace(iDHeight*iDWidth, reinterpret_cast<UINT16*>(mDepthImg.data), iDHeight*iDWidth, depth2xyz);//Depth Map to Camera 3D Space Mapping //for (int i = 0; i < iDWidth*iDHeight; i++) //{ // cout << i << ": " << "X=" << depth2rgb[i].X << "; Y=" << depth2rgb[i].Y<<endl; //} float maxX = depth2xyz[0].X, maxY = depth2xyz[0].Y, maxZ = depth2xyz[0].Z; float minX = depth2xyz[0].X, minY = depth2xyz[0].Y, minZ = depth2xyz[0].Z; for (size_t i = 0; i < iDWidth; i++) { for (size_t j = 0; j < iDHeight; j++) { pcl::PointXYZRGBA pointTemp; if (depth2xyz[i + j*iDWidth].Z > 0.5&&depth2rgb[i + j*iDWidth].X<1920 && depth2rgb[i + j*iDWidth].X>0 && depth2rgb[i + j*iDWidth].Y<1080 && depth2rgb[i + j*iDWidth].Y>0) { pointTemp.x = -depth2xyz[i + j*iDWidth].X; if (depth2xyz[i + j*iDWidth].X > maxX) maxX = -depth2xyz[i + j*iDWidth].X; if (depth2xyz[i + j*iDWidth].X < minX) minX = -depth2xyz[i + j*iDWidth].X; pointTemp.y = depth2xyz[i + j*iDWidth].Y; if (depth2xyz[i + j*iDWidth].Y > maxY) maxY = depth2xyz[i + j*iDWidth].Y; if (depth2xyz[i + j*iDWidth].Y < minY) minY = depth2xyz[i + j*iDWidth].Y; pointTemp.z = depth2xyz[i + j*iDWidth].Z; if (depth2xyz[i + j*iDWidth].Z != 0.0) { if (depth2xyz[i + j*iDWidth].Z > maxZ) maxZ = depth2xyz[i + j*iDWidth].Z; if (depth2xyz[i + j*iDWidth].Z < minZ) minZ = depth2xyz[i + j*iDWidth].Z; } pointTemp.b = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[0]; pointTemp.g = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[1]; pointTemp.r = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[2]; pointTemp.a = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[3]; cloud->push_back(pointTemp); } } } viewer.showCloud(cloud); mColorImg.release(); mDepthImg.release(); pcl::io::savePCDFileASCII("cloud.pcd",*cloud); cloud->clear(); waitKey(10); } return 0; }

5.6 PCL combined with opencv to save kinectv2 point cloud pictures in VS

#include <Kinect.h> #include<vector> #include <opencv2\opencv.hpp> #include <opencv2/highgui/highgui.hpp> //#include <pcl/io/io.h> #include <pcl/io/pcd_io.h> #include <pcl/point_types.h> #include <pcl/point_cloud.h> #include <pcl/visualization/cloud_viewer.h> #include <pcl/visualization/pcl_visualizer.h> #include <pcl/io/ply_io.h> using namespace cv; using namespace std; //typedef pcl::PointXYZRGBA PointT; //typedef pcl::PointCloud< PointT> PointCloud; IKinectSensor* pSensor; ICoordinateMapper *pMapper; const int iDWidth = 512, iDHeight = 424;//Depth map size const int iCWidth = 1920, iCHeight = 1080;//Color Map Size CameraSpacePoint depth2xyz[iDWidth*iDHeight]; ColorSpacePoint depth2rgb[iCWidth*iCHeight]; void viewerOneOff(pcl::visualization::PCLVisualizer& viewer) { viewer.setBackgroundColor(1.0, 1.0, 1.0);//Set background color } //Start Kinect bool initKinect() { if (FAILED(GetDefaultKinectSensor(&pSensor))) return false; if (pSensor) { pSensor->get_CoordinateMapper(&pMapper); pSensor->Open(); cout << "Camera turned on" << endl; return true; } else return false; } //Get Depth Frame Mat DepthData() { IDepthFrameSource* pFrameSource = nullptr; pSensor->get_DepthFrameSource(&pFrameSource); IDepthFrameReader* pFrameReader = nullptr; pFrameSource->OpenReader(&pFrameReader); IDepthFrame* pFrame = nullptr; Mat mDepthImg(iDHeight, iDWidth, CV_16UC1); while (true) { if (pFrameReader->AcquireLatestFrame(&pFrame) == S_OK) { pFrame->CopyFrameDataToArray(iDWidth * iDHeight, reinterpret_cast<UINT16*>(mDepthImg.data)); cout << "Depth frame acquired" << endl; pFrame->Release(); return mDepthImg; break; } } } //Get color frames Mat RGBData() { IColorFrameSource* pFrameSource = nullptr; pSensor->get_ColorFrameSource(&pFrameSource); IColorFrameReader* pFrameReader = nullptr; pFrameSource->OpenReader(&pFrameReader); IColorFrame* pFrame = nullptr; Mat mColorImg(iCHeight, iCWidth, CV_8UC4); while (true) { if (pFrameReader->AcquireLatestFrame(&pFrame) == S_OK) { pFrame->CopyConvertedFrameDataToArray(iCWidth * iCHeight * 4, mColorImg.data, ColorImageFormat_Bgra); cout << "Getted color frame" << endl; pFrame->Release(); return mColorImg; break; } } } int main(int argc, char** argv) { initKinect(); pcl::visualization::CloudViewer viewer("Cloud Viewer"); viewer.runOnVisualizationThreadOnce(viewerOneOff); pcl::PointCloud<pcl::PointXYZRGBA>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGBA>); //PointCloud::Ptr cloud(new PointCloud); Mat mColorImg; Mat mDepthImg; while (cv::waitKey(30) != VK_ESCAPE) { mColorImg = RGBData(); mDepthImg = DepthData(); imwrite("color.jpg", mColorImg); imshow("RGB", mColorImg); imwrite("depth.jpg", mDepthImg); imshow("Depth", mDepthImg); pMapper->MapDepthFrameToColorSpace(iDHeight*iDWidth, reinterpret_cast<UINT16*>(mDepthImg.data), iDHeight*iDWidth, depth2rgb);//Depth Map to Color Mapping pMapper->MapDepthFrameToCameraSpace(iDHeight*iDWidth, reinterpret_cast<UINT16*>(mDepthImg.data), iDHeight*iDWidth, depth2xyz);//Depth Map to Camera 3D Space Mapping //for (int i = 0; i < iDWidth*iDHeight; i++) //{ // cout << i << ": " << "X=" << depth2rgb[i].X << "; Y=" << depth2rgb[i].Y<<endl; //} float maxX = depth2xyz[0].X, maxY = depth2xyz[0].Y, maxZ = depth2xyz[0].Z; float minX = depth2xyz[0].X, minY = depth2xyz[0].Y, minZ = depth2xyz[0].Z; //pcl::PointCloud<pcl::PointXYZ> savecloud; //savecloud.width = 512; //savecloud.height = 424; //savecloud.is_dense = false; //savecloud.points.resize(savecloud.width * savecloud.height); for (size_t i = 0; i < iDWidth; i++) { for (size_t j = 0; j < iDHeight; j++) { pcl::PointXYZRGBA pointTemp; //PointT pointTemp; if (depth2xyz[i + j*iDWidth].Z > 0.5&&depth2rgb[i + j*iDWidth].X<1920 && depth2rgb[i + j*iDWidth].X>0 && depth2rgb[i + j*iDWidth].Y<1080 && depth2rgb[i + j*iDWidth].Y>0) { pointTemp.x = -depth2xyz[i + j*iDWidth].X; //savecloud[i,j].x = -depth2xyz[i + j*iDWidth].X; if (depth2xyz[i + j*iDWidth].X > maxX) maxX = -depth2xyz[i + j*iDWidth].X; if (depth2xyz[i + j*iDWidth].X < minX) minX = -depth2xyz[i + j*iDWidth].X; //savecloud[i, j].y = depth2xyz[i + j*iDWidth].Y; pointTemp.y = depth2xyz[i + j*iDWidth].Y; if (depth2xyz[i + j*iDWidth].Y > maxY) maxY = depth2xyz[i + j*iDWidth].Y; if (depth2xyz[i + j*iDWidth].Y < minY) minY = depth2xyz[i + j*iDWidth].Y; //savecloud[i, j].z = depth2xyz[i + j*iDWidth].Z; pointTemp.z = depth2xyz[i + j*iDWidth].Z; if (depth2xyz[i + j*iDWidth].Z != 0.0) { if (depth2xyz[i + j*iDWidth].Z > maxZ) maxZ = depth2xyz[i + j*iDWidth].Z; if (depth2xyz[i + j*iDWidth].Z < minZ) minZ = depth2xyz[i + j*iDWidth].Z; } pointTemp.b = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[0]; pointTemp.g = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[1]; pointTemp.r = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[2]; pointTemp.a = mColorImg.at<cv::Vec4b>(depth2rgb[i + j*iDWidth].Y, depth2rgb[i + j*iDWidth].X)[3]; cloud->push_back(pointTemp); } } //cloud->height = 1; //cloud->width = cloud->points.size(); //cloud->is_dense = false; //pcl::io::savePCDFileASCII("pointcloud.pcd", *cloud); //cout << "point cloud size = " << cloud->points.size() << endl; //pcl::io::savePCDFileASCII("test_pcd.pcd", savecloud); } pcl::io::savePCDFileASCII("cloud.pcd", *cloud); viewer.showCloud(cloud); //std::cerr << "Saved " << savecloud.points.size() << " data points to test_pcd.pcd." << std::endl; //for (size_t i = 0; i < savecloud.points.size(); ++i) //std::cerr << " " << savecloud.points[i].x << " " << savecloud.points[i].y << " " << savecloud.points[i].z << std::endl; //std::cerr << "Saved " << savecloud.points.size() << " data points to test_pcd.pcd." << std::endl; //pcl::io::savePCDFileASCII("cloud.pcd", *cloud); mColorImg.release(); mDepthImg.release(); //pcl::io::savePCDFileASCII("cloud.pcd", *cloud); //pcl::PLYWriter writer; //writer.write("cloud.ply", *cloud); cloud->clear(); waitKey(3000); } return 0; }

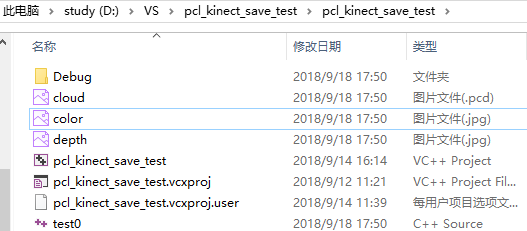

You can see the saved RGB, Depth, Point Cloud pictures when you enter the project catalog

6. A series of issues encountered by VS2013 in conjunction with pcl in the Storage Point Cloud process

Question 1:

Cannot open or create a new project "no editor option definition export found for the given option name"

- Solution:

- Turn off VS

- Clean up the cache in the directory of C disk user files, my path is as follows:

C:\User\YQ\AppData\Local\Microsoft\VisualStudio\12.0\ComponentModelCache - Reopen VS

Note:

visual studio 2012 corresponds to 11.0 folders;

visual studio 2013 corresponds to 12.0 folders;

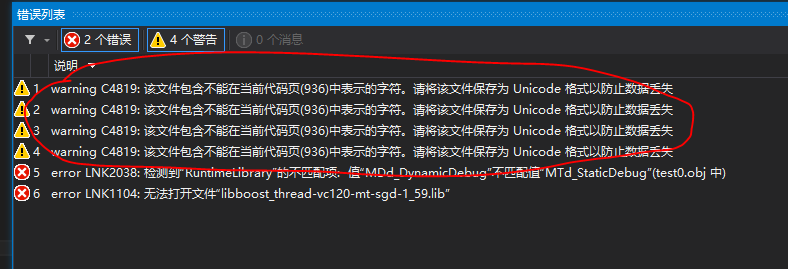

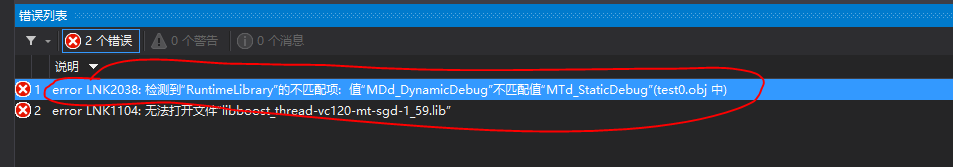

Question 2:

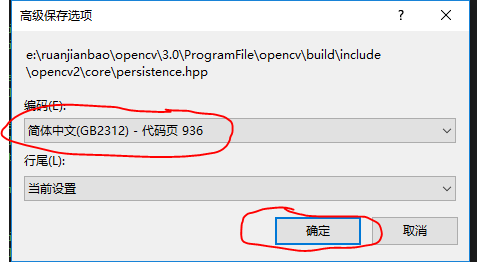

- Solution:

- Double-click warning

- File Advanced Save Options Change the encoding column to the following Click OK

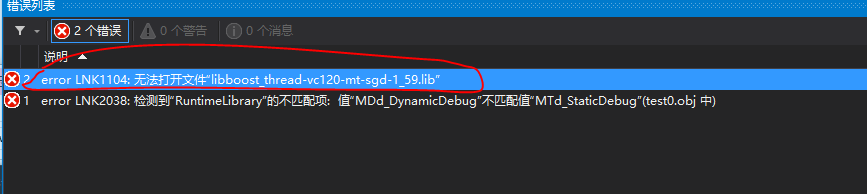

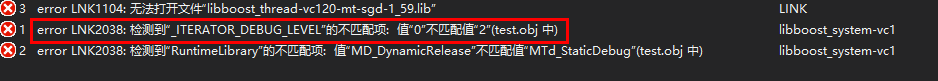

Question 3:

- Solution:

- boost website Find the boost version you need and download it (mine is 1.5.9)

- decompression

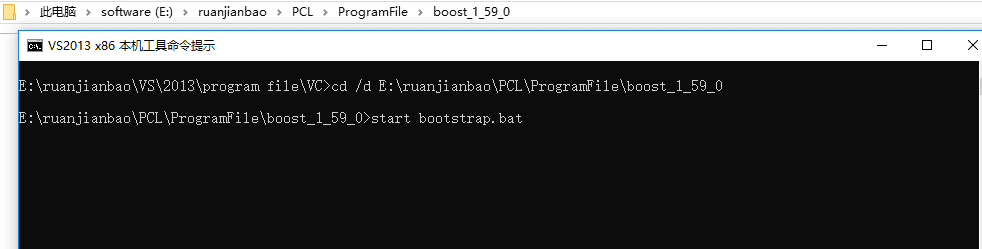

- Open Visual Studio Tools for VS and select the X86 command prompt (because I've been using 32-bit VS projects)

- Under the unzipped directory_enter the boost_1_59_0 directory (cd/d E:ruanjianbaoPCLProgramFileboost_1_59_0)run the bootstrap.bat file (bootstrap.bat)

- After running the bootstrap.bat file, generate b2.exe and bjam.exe_Compile b2.exe to generate a 32-bit static lib

b2 stage --toolset=msvc-12.0 architecture=x86 --stagedir=".\lib\vc12_x86" link=static runtime-link=static threading=multi debug releas

- Reconfiguring PCL in VS takes the same steps as previous PCL configurations except that you need to replace all boost-related content with lib and include generated in the downloaded boost

Attachment:

Tips for using cmd and then cd to a specified folder:

Find the folder you need to locate Click on it Shift and right-click at the same time. The option to copy as a path appears and paste it where you want it.

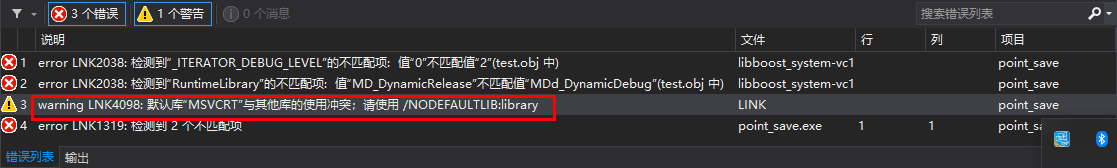

Question 4:

- Solution: Pending

Note:

Multithreaded debugging Dll (/MDd) MD_DynamicDebug

Multithreaded Dll (/MD):MD_DynamicRelease

Multithreaded (/MT):MD_StaticRelease

Multithreaded (/MTd):MD_StaticDebug

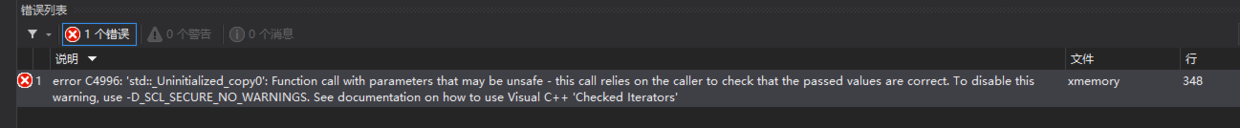

Question 5:

- Solution:

Add the following statement to the picture

_SCL_SECURE_NO_WARNINGS

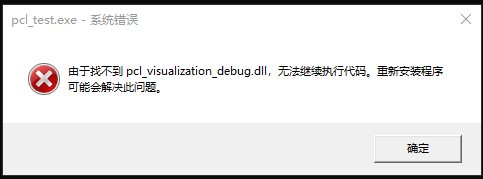

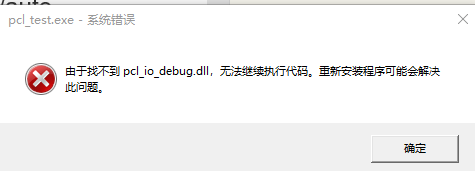

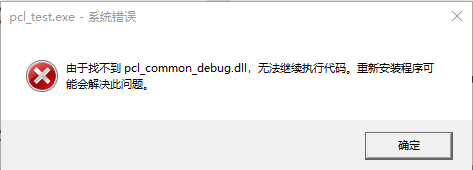

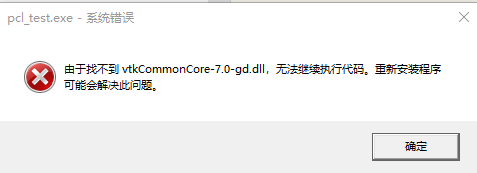

Question 6:

- Reason:

The root cause of this problem is that on the setting of environment variables, the computer will only look for the.Dll file that the program will run in the directory contained under the path. If the.Dll file we want to use is not included in the path of environment variable, an error will occur: the computer lost the xxx.dll file.However, I have explicitly configured in the environment configuration before, but this error occurred. The real reason is that I did not restart the computer after the environment configuration, resulting in the configuration of environment variables does not take effect immediately. - Solution:

Problem solving after restarting the computer

Question 7:

- Reason:

1 The value "0" does not match the value "2", Debug uses the library file under Release.

(2) The value "2" does not match the value "0", Release uses the library file under Debug - Solution:

Scenario 1: Project->Properties->Configuration Properties->C/C+++>Preprocessor->Add to Preprocessing Definition:_ITERATOR_DEBUG_LEVEL=0

Scenario 2: Project->Properties->Configuration Properties->C/C+++>Preprocessor->Add to Preprocessing Definition:_ITERATOR_DEBUG_LEVEL=2

Question 8:

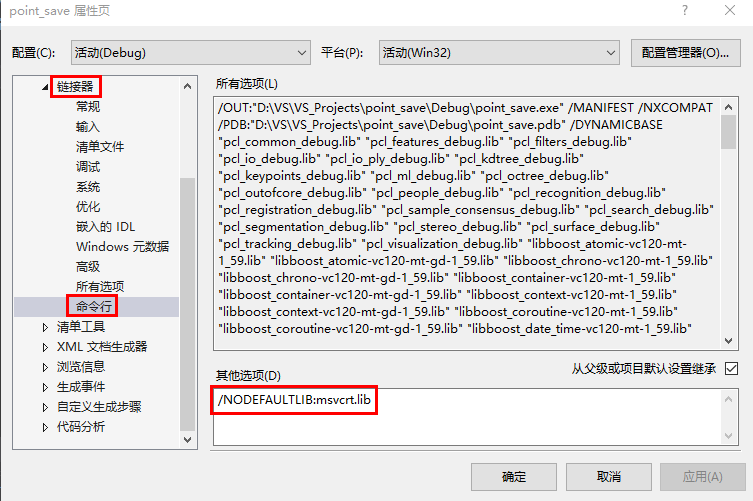

Add: /NODEFAULTLIB:msvcrt.lib