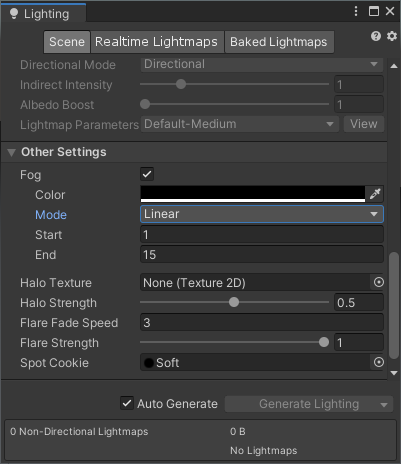

Unity supports fog natively. It can be configured under Window/Rendering/Lighting Settings:

Unity offers three fog modes: Linear, Exponential, and Exponential Squared.

The fog factor for Linear mode is:

$$f = \dfrac{E - c}{E - S}$$

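where E is the fog end distance, S is the fog start distance, and c is the fog coordinate of the current point (its distance or depth relative to the camera). The factor falls from 1 (no fog) at c = S to 0 (fully fogged) at c = E.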

For Exponential mode, the fog factor is:

$$f = \dfrac{1}{e^{cd}} = e^{-cd}$$

where d is the fog density.

For Exponential Squared mode, the fog factor is:

$$f = \dfrac{1}{e^{(cd)^2}} = e^{-(cd)^2}$$
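To get a feel for how the three modes behave, they can be evaluated on the CPU. The Python sketch below is purely illustrative and its helper names are hypothetical. The exponential modes use the base-e forms exp(-density*z) and exp(-(density*z)^2) given in the comments of Unity's UNITY_CALC_FOG_FACTOR_RAW macro, and each result is clamped to [0, 1] the way the shaders clamp it with saturate.

```python
import math

def saturate(x):
    """Clamp to [0, 1], like HLSL saturate."""
    return min(max(x, 0.0), 1.0)

def fog_linear(c, start, end):
    # f = (E - c) / (E - S): 1 at the start distance, 0 at the end distance.
    return saturate((end - c) / (end - start))

def fog_exp(c, density):
    # f = e^(-c*d)
    return saturate(math.exp(-c * density))

def fog_exp2(c, density):
    # f = e^(-(c*d)^2): clearer than Exp while c*d < 1, then falls off faster.
    return saturate(math.exp(-(c * density) ** 2))

for distance in (5.0, 20.0, 40.0):
    print(distance, fog_linear(distance, 10.0, 60.0),
          round(fog_exp(distance, 0.05), 3), round(fog_exp2(distance, 0.05), 3))
```

The comparison shows the practical difference between the exponential modes: Exponential Squared keeps nearby objects almost fog-free and then thickens quickly, while Exponential starts fading immediately.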

In the forward rendering path, we blend the fog color with the computed pixel color, using the fog factor as the interpolation weight, to obtain the final color:

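```hlsl
// Fog is active only when one of the fog keywords
// (from #pragma multi_compile_fog) is compiled in. Without this guard,
// UNITY_CALC_FOG_FACTOR_RAW yields 0 and the whole surface turns into fog color.
#if defined(FOG_LINEAR) || defined(FOG_EXP) || defined(FOG_EXP2)
	#define FOG_ON 1
#endif

float4 ApplyFog (float4 color, Interpolators i) {
	#if FOG_ON
		// Fog coordinate: distance from the camera to the fragment.
		float viewDistance = length(_WorldSpaceCameraPos - i.worldPos.xyz);
		#if FOG_DEPTH
			// Alternative coordinate: depth. Assumes the vertex program
			// stored the clip-space z in worldPos.w.
			viewDistance = UNITY_Z_0_FAR_FROM_CLIPSPACE(i.worldPos.w);
		#endif
		// Declares and computes float unityFogFactor.
		UNITY_CALC_FOG_FACTOR_RAW(viewDistance);
		// Additive passes blend toward black so the fog color is added only once.
		float3 fogColor = 0;
		#if defined(FORWARD_BASE_PASS)
			fogColor = unity_FogColor.rgb;
		#endif
		color.rgb = lerp(fogColor, color.rgb, saturate(unityFogFactor));
	#endif
	return color;
}
```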
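The two fog coordinates that ApplyFog can use (camera distance, or clip-space depth when FOG_DEPTH is set) only agree for points on the view axis. The hypothetical Python helpers below compute both for a view-space point and feed them into a clamped linear fog factor, to show that depth-based fog is lighter toward the screen edges. Depth also changes when the camera rotates in place, while the distance does not.

```python
import math

def fog_coordinates(view_pos):
    """Return (distance, depth) for a view-space point (x, y, z),
    where z is the depth along the camera's viewing axis."""
    x, y, z = view_pos
    return math.sqrt(x * x + y * y + z * z), z

def fog_linear(c, start, end):
    # Linear fog factor, clamped to [0, 1].
    return min(max((end - c) / (end - start), 0.0), 1.0)

# A point off to the side of the view axis.
distance, depth = fog_coordinates((15.0, 0.0, 20.0))
print(distance, depth)  # 25.0 20.0
print(fog_linear(distance, 0.0, 50.0), fog_linear(depth, 0.0, 50.0))  # 0.5 0.6
```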

There are two ways to compute the fog coordinate of the current point: use the distance from the point to the camera position, or use the point's depth z in camera space. For the depth variant, Unity provides the macro UNITY_Z_0_FAR_FROM_CLIPSPACE, which takes the z coordinate in homogeneous clip space as its argument:

```hlsl
#if defined(UNITY_REVERSED_Z)
	#if UNITY_REVERSED_Z == 1
		//D3d with reversed Z => z clip range is [near, 0] -> remapping to [0, far]
		//max is required to protect ourselves from near plane not being correct/meaningfull in case of oblique matrices.
		#define UNITY_Z_0_FAR_FROM_CLIPSPACE(coord) max(((1.0-(coord)/_ProjectionParams.y)*_ProjectionParams.z),0)
	#else
		//GL with reversed z => z clip range is [near, -far] -> should remap in theory but dont do it in practice to save some perf (range is close enough)
		#define UNITY_Z_0_FAR_FROM_CLIPSPACE(coord) max(-(coord), 0)
	#endif
#elif UNITY_UV_STARTS_AT_TOP
	//D3d without reversed z => z clip range is [0, far] -> nothing to do
	#define UNITY_Z_0_FAR_FROM_CLIPSPACE(coord) (coord)
#else
	//Opengl => z clip range is [-near, far] -> should remap in theory but dont do it in practice to save some perf (range is close enough)
	#define UNITY_Z_0_FAR_FROM_CLIPSPACE(coord) (coord)
#endif
```

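The macro checks, per graphics API, whether a reversed Z buffer is in use (UNITY_REVERSED_Z) and remaps the clip-space z to the range [0, f], where f is the far clipping plane distance. _ProjectionParams is a 4D vector whose y component is the near clipping plane distance n and whose z component is the far clipping plane distance f. As the comments note, the OpenGL branches skip the exact remap to save performance, because the raw range is already close enough.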
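The reversed-Z Direct3D branch can be checked numerically. The Python sketch below assumes, as the macro's comment states, that clip-space z is the affine function of view depth that maps the near plane to n and the far plane to 0; the function names are hypothetical. Applying the macro's formula then gives f(d - n)/(f - n), which runs from 0 to f and never differs from the true view depth d by more than n.

```python
def reversed_z_clip(view_depth, near, far):
    """Assumed clip-space z for a reversed-Z projection: near -> near, far -> 0."""
    return near * (far - view_depth) / (far - near)

def z_0_far_from_clipspace(coord, near, far):
    """Python transcription of the UNITY_REVERSED_Z == 1 branch
    (near = _ProjectionParams.y, far = _ProjectionParams.z)."""
    return max((1.0 - coord / near) * far, 0.0)

near, far = 0.3, 1000.0
for depth in (near, 50.0, far):
    print(depth, z_0_far_from_clipspace(reversed_z_clip(depth, near, far), near, far))
```

The remapped value is a linear function of the view depth, which is why it can be used directly as a fog coordinate.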

Once the fog coordinate is known, it is passed to Unity's UNITY_CALC_FOG_FACTOR_RAW macro, which computes the fog factor for the active fog mode:

```hlsl
#if defined(FOG_LINEAR)
	// factor = (end-z)/(end-start) = z * (-1/(end-start)) + (end/(end-start))
	#define UNITY_CALC_FOG_FACTOR_RAW(coord) float unityFogFactor = (coord) * unity_FogParams.z + unity_FogParams.w
#elif defined(FOG_EXP)
	// factor = exp(-density*z)
	#define UNITY_CALC_FOG_FACTOR_RAW(coord) float unityFogFactor = unity_FogParams.y * (coord); unityFogFactor = exp2(-unityFogFactor)
#elif defined(FOG_EXP2)
	// factor = exp(-(density*z)^2)
	#define UNITY_CALC_FOG_FACTOR_RAW(coord) float unityFogFactor = unity_FogParams.x * (coord); unityFogFactor = exp2(-unityFogFactor*unityFogFactor)
#else
	#define UNITY_CALC_FOG_FACTOR_RAW(coord) float unityFogFactor = 0.0
#endif
```

unity_FogParams is a 4D vector holding the fog parameters from the Lighting settings, pre-processed for each mode:

```hlsl
// x = density / sqrt(ln(2)), useful for Exp2 mode
// y = density / ln(2), useful for Exp mode
// z = -1/(end-start), useful for Linear mode
// w = end/(end-start), useful for Linear mode
float4 unity_FogParams;
```
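Dividing the density by ln 2 (or by its square root) lets the shader use the cheaper exp2 while still producing base-e fog, because 2^(-(d/ln 2)c) = e^(-dc) and 2^(-(dc)^2/ln 2) = e^(-(dc)^2). The Python transcription below (hypothetical names, CPU-side only) packs the parameters as the comments above describe and mirrors the macro, so the identities can be checked against math.exp.

```python
import math

def unity_fog_params(density, start, end):
    """Pack fog settings the way the unity_FogParams comments describe."""
    return (density / math.sqrt(math.log(2)),  # x: Exp2 mode
            density / math.log(2),             # y: Exp mode
            -1.0 / (end - start),              # z: Linear mode
            end / (end - start))               # w: Linear mode

def calc_fog_factor_raw(mode, coord, params):
    """Transcription of UNITY_CALC_FOG_FACTOR_RAW (no saturate applied)."""
    x, y, z, w = params
    if mode == "FOG_LINEAR":
        return coord * z + w
    if mode == "FOG_EXP":
        return 2.0 ** (-(y * coord))
    if mode == "FOG_EXP2":
        scaled = x * coord
        return 2.0 ** (-(scaled * scaled))
    return 0.0  # no fog keyword: the macro yields 0

params = unity_fog_params(density=0.05, start=10.0, end=60.0)
for mode in ("FOG_LINEAR", "FOG_EXP", "FOG_EXP2"):
    print(mode, calc_fog_factor_raw(mode, 30.0, params))
```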

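After computing the fog factor, ApplyFog blends in the actual fog color only in the forward base pass (FORWARD_BASE_PASS). With multiple lights, the forward additive pass runs once per additional light and its output is added to the base pass result, so mixing the fog color into every pass would add it several times. In the additive passes fogColor stays black, so the lerp merely attenuates each light's contribution by the fog factor, and the sum of all passes equals fogging the total lit color exactly once.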
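A single-channel numeric sketch makes this argument concrete. The Python helper below is hypothetical: it sums one base pass plus one additive pass per extra light, each blending the way ApplyFog does, and compares the result with fogging the summed light once. Adding the fog color in every pass overshoots badly.

```python
def lerp(a, b, t):
    return a + (b - a) * t

def forward_passes(light_colors, fog_color, fog_factor, fog_in_every_pass=False):
    """Sum one base pass and one additive pass per extra light (single channel).

    Additive passes blend toward black unless fog_in_every_pass is True."""
    total = 0.0
    for index, lit in enumerate(light_colors):
        use_fog_color = index == 0 or fog_in_every_pass
        total += lerp(fog_color if use_fog_color else 0.0, lit, fog_factor)
    return total

lights = [0.5, 0.3, 0.2]                   # base light, then two additive lights
reference = lerp(0.8, sum(lights), 0.25)   # fog applied once to the total
print(reference,
      forward_passes(lights, 0.8, 0.25),
      forward_passes(lights, 0.8, 0.25, fog_in_every_pass=True))
```

Algebraically, the base pass contributes f·c0 + (1 - f)·fog and each additive pass f·ci, which sums to f·Σci + (1 - f)·fog, the same as a single lerp on the total.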

In the deferred rendering path, applying fog is more involved. The G-buffer stores the scene's geometry and surface data rather than lit colors, so the forward approach cannot be used in the geometry pass. Instead, fog is applied to the final lit image as a post-processing effect, using a shader such as the following:

```hlsl
Shader "Custom/Deferred Fog" {
	Properties {
		_MainTex ("Source", 2D) = "white" {}
	}

	SubShader {
		Cull Off
		ZTest Always
		ZWrite Off

		Pass {
			CGPROGRAM

			#pragma vertex VertexProgram
			#pragma fragment FragmentProgram

			// Compiles the FOG_LINEAR / FOG_EXP / FOG_EXP2 variants.
			#pragma multi_compile_fog

			// Use the distance to the camera rather than depth as the fog coordinate.
			#define FOG_DISTANCE
			// Uncomment to apply fog to the skybox as well.
			// #define FOG_SKYBOX

			#include "UnityCG.cginc"

			sampler2D _MainTex, _CameraDepthTexture;

			// Rays from the camera to the four far-plane corners; must be set from a script.
			float3 _FrustumCorners[4];

			struct VertexData {
				float4 vertex : POSITION;
				float2 uv : TEXCOORD0;
			};

			struct Interpolators {
				float4 pos : SV_POSITION;
				float2 uv : TEXCOORD0;
				#if defined(FOG_DISTANCE)
					float3 ray : TEXCOORD1;
				#endif
			};

			Interpolators VertexProgram (VertexData v) {
				Interpolators i;
				i.pos = UnityObjectToClipPos(v.vertex);
				i.uv = v.uv;
				#if defined(FOG_DISTANCE)
					// The quad's UVs are 0 or 1, so uv.x + 2 * uv.y selects corner 0..3.
					i.ray = _FrustumCorners[v.uv.x + 2 * v.uv.y];
				#endif
				return i;
			}

			float4 FragmentProgram (Interpolators i) : SV_Target {
				// Linear depth in [0, 1], where 1 is the far plane.
				float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
				depth = Linear01Depth(depth);

				float viewDistance =
					depth * _ProjectionParams.z - _ProjectionParams.y;
				#if defined(FOG_DISTANCE)
					// Scale the interpolated far-plane ray by the linear depth.
					viewDistance = length(i.ray * depth);
				#endif

				UNITY_CALC_FOG_FACTOR_RAW(viewDistance);
				unityFogFactor = saturate(unityFogFactor);
				#if !defined(FOG_SKYBOX)
					// Treat pixels at the far plane as skybox and leave them unfogged.
					if (depth > 0.9999) {
						unityFogFactor = 1;
					}
				#endif
				#if !defined(FOG_LINEAR) && !defined(FOG_EXP) && !defined(FOG_EXP2)
					// Fog disabled: keep the source color.
					unityFogFactor = 1;
				#endif

				float3 sourceColor = tex2D(_MainTex, i.uv).rgb;
				float3 foggedColor =
					lerp(unity_FogColor.rgb, sourceColor, unityFogFactor);
				return float4(foggedColor, 1);
			}

			ENDCG
		}
	}
}
```

The approach mirrors the forward path, but a post-processing pass only draws a full-screen quad with four vertices, which by itself is not enough to compute the distance from each pixel to the camera. The shader therefore uses the _FrustumCorners array, which carries the camera's view-frustum information (one ray from the camera to each far-plane corner, supplied by a script) and assigns one corner to each vertex of the quad. The rasterizer interpolates these rays across the quad, so every pixel receives the ray from the camera through that pixel to the far plane. Multiplying that ray by the pixel's linear [0, 1] depth (Linear01Depth) gives the vector from the camera to the visible surface, and its length is the view distance. Finally, to avoid blending fog color into the skybox, pixels whose depth is effectively at the far clipping plane (depth > 0.9999) are treated as sky and left unfogged unless FOG_SKYBOX is defined.
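The ray reconstruction can be checked numerically. The Python sketch below (all names hypothetical) builds the four far-plane corner rays for an assumed camera in the uv.x + 2 * uv.y order used by the vertex program, interpolates them bilinearly at a point's screen UV the way the rasterizer interpolates the quad's per-vertex ray, and scales the result by the point's linear depth. It assumes Linear01Depth yields the view depth divided by the far-plane distance, and a camera looking down +z (the sign of z does not affect the length).

```python
import math

def frustum_far_corners(fov_y_deg, aspect, far):
    """Camera-to-far-corner rays in view space, ordered so index = u + 2 * v
    (0: bottom-left, 1: bottom-right, 2: top-left, 3: top-right)."""
    half_h = far * math.tan(math.radians(fov_y_deg) / 2.0)
    half_w = half_h * aspect
    return [(-half_w, -half_h, far), (half_w, -half_h, far),
            (-half_w, half_h, far), (half_w, half_h, far)]

def lerp3(a, b, t):
    return tuple(p + (q - p) * t for p, q in zip(a, b))

def interpolate_ray(corners, u, v):
    """Bilinear blend of the corner rays, standing in for the rasterizer."""
    return lerp3(lerp3(corners[0], corners[1], u), lerp3(corners[2], corners[3], u), v)

def view_distance(corners, u, v, depth01):
    """length(ray * depth), as in the fragment program."""
    return math.sqrt(sum((c * depth01) ** 2 for c in interpolate_ray(corners, u, v)))

# Hypothetical camera and a surface point at view-space (2, 1, 10).
fov, aspect, far = 60.0, 16.0 / 9.0, 100.0
corners = frustum_far_corners(fov, aspect, far)
px, py, pz = 2.0, 1.0, 10.0
tan_half = math.tan(math.radians(fov) / 2.0)
u = (px / (pz * tan_half * aspect) + 1.0) / 2.0  # screen UV of the point
v = (py / (pz * tan_half) + 1.0) / 2.0
print(view_distance(corners, u, v, pz / far), math.sqrt(px * px + py * py + pz * pz))
```

Because the corner rays are an affine function of the screen UV, interpolating them across the quad's two triangles gives the same result as this bilinear blend.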
