Introduction

Earlier in this series, we looked at creating our own custom Native Container and adding features to it, such as deallocation on job completion and parallel job support. In this part, we look at another way to support parallel writing, using [NativeSetThreadIndex].

This article does not build on the code from the previous articles; it implements a new Native Container instead. We therefore assume you already know the basics. If not, you can go back and read the previous articles in this series.

1) NativeSummedFloat3 Setup

The container we want to implement is called NativeSummedFloat3. It holds a float3, but allows multiple threads to add to it in parallel. This is useful, for example, when calculating the average position of a large group of entities.

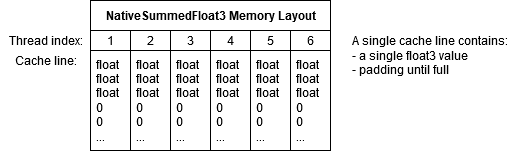

The following code contains all the basic setup for our custom container. What deserves attention is the amount of memory we allocate: one cache line for each worker thread. This is what makes the container thread safe. Because each thread writes only to its own part of memory, that is, its own cache line, no two threads ever write to the same memory. It also gives better cache behavior and therefore better performance. The downside is that we allocate a lot of memory (8 KB in total with the Jobs package at the time of writing). We cannot allocate less than one cache line per worker thread, because the CPU always loads a whole cache line when it accesses data, so values for different threads packed into one line would make those threads contend for it.

using System;
using System.Runtime.InteropServices;
using Unity.Burst;
using Unity.Collections;
using Unity.Collections.LowLevel.Unsafe;
using Unity.Jobs;
using Unity.Jobs.LowLevel.Unsafe;
using Unity.Mathematics;

[NativeContainer]
[NativeContainerSupportsDeallocateOnJobCompletion]
[StructLayout(LayoutKind.Sequential)]
public unsafe struct NativeSummedFloat3 : IDisposable
{
    [NativeDisableUnsafePtrRestriction] internal void* m_Buffer;

#if ENABLE_UNITY_COLLECTIONS_CHECKS
    internal AtomicSafetyHandle m_Safety;
    [NativeSetClassTypeToNullOnSchedule] internal DisposeSentinel m_DisposeSentinel;
#endif

    internal Allocator m_AllocatorLabel;

    public NativeSummedFloat3(Allocator allocator)
    {
        // Safety checks
#if ENABLE_UNITY_COLLECTIONS_CHECKS
        if (allocator <= Allocator.None)
            throw new ArgumentException("Allocator must be Temp, TempJob or Persistent", nameof(allocator));

        // A generic container may need further checks: the element type must be blittable and fit in a cache line.
        /*
        if (!UnsafeUtility.IsBlittable<T>())
            throw new ArgumentException(string.Format("{0} used in NativeValue<{0}> must be blittable", typeof(T)));

        if (UnsafeUtility.SizeOf<T>() > JobsUtility.CacheLineSize)
            throw new ArgumentException(string.Format("{0} used in NativeValue<{0}> had a size of {1} which is greater than the maximum size of {2}", typeof(T), UnsafeUtility.SizeOf<T>(), JobsUtility.CacheLineSize));
        */

        DisposeSentinel.Create(out m_Safety, out m_DisposeSentinel, 0, allocator);
#endif

        // Allocate one cache line for each worker thread.
        m_Buffer = UnsafeUtility.Malloc(JobsUtility.CacheLineSize * JobsUtility.MaxJobThreadCount, JobsUtility.CacheLineSize, allocator);
        m_AllocatorLabel = allocator;

        // The setter resets every cache line, so the freshly allocated memory starts at zero.
        Value = float3.zero;
    }

    // Allow implicit conversion from NativeSummedFloat3 to float3.
    public static implicit operator float3(NativeSummedFloat3 value) { return value.Value; }

    /*
     * ... Next Code ...
     */
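
To make the memory cost concrete, the arithmetic behind the allocation can be sketched in plain C#, outside Unity. The constants 64 and 128 are assumptions standing in for JobsUtility.CacheLineSize and JobsUtility.MaxJobThreadCount (they are consistent with the 8 KB figure mentioned above, but check the values in your Unity version), and CacheLineLayout is a hypothetical helper name, not part of the container.

```csharp
using System;

// Hypothetical helper: mirrors the size and offset arithmetic used by the container.
public static class CacheLineLayout
{
    // Assumed stand-ins for JobsUtility.CacheLineSize and JobsUtility.MaxJobThreadCount.
    public const int CacheLineSize = 64;
    public const int MaxJobThreadCount = 128;

    // Total bytes allocated: one cache line per possible worker thread.
    public static int BufferSizeInBytes()
    {
        return CacheLineSize * MaxJobThreadCount;
    }

    // Byte offset of the cache line owned by the given thread index.
    public static int OffsetOfThread(int threadIndex)
    {
        if (threadIndex < 0 || threadIndex >= MaxJobThreadCount)
            throw new ArgumentOutOfRangeException(nameof(threadIndex));
        return threadIndex * CacheLineSize;
    }
}
```

With these assumed values, BufferSizeInBytes() returns 8192 (8 KB) and OffsetOfThread(3) returns 192. A float3 occupies only the first 12 bytes of each line; the rest is padding that keeps the threads apart.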

Let's get the boilerplate out of the way and add it straight to the end of our NativeSummedFloat3 struct. Again, the previous parts of this series explain how this code works.

    /*
     * ... Previous Code ...
     */

    [WriteAccessRequired]
    public void Dispose()
    {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
        if (!UnsafeUtility.IsValidAllocator(m_AllocatorLabel))
            throw new InvalidOperationException("The NativeSummedFloat3 can not be Disposed because it was not allocated with a valid allocator.");

        DisposeSentinel.Dispose(ref m_Safety, ref m_DisposeSentinel);
#endif

        // Free the allocated memory and reset our variables.
        UnsafeUtility.Free(m_Buffer, m_AllocatorLabel);
        m_Buffer = null;
    }

    public unsafe JobHandle Dispose(JobHandle inputDeps)
    {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
        if (!UnsafeUtility.IsValidAllocator(m_AllocatorLabel))
            throw new InvalidOperationException("The NativeSummedFloat3 can not be Disposed because it was not allocated with a valid allocator.");

        // The DisposeSentinel needs to be cleared on the main thread.
        DisposeSentinel.Clear(ref m_DisposeSentinel);
#endif

        // Schedule a job that frees the memory once inputDeps has completed.
        NativeSummedFloat3DisposeJob disposeJob = new NativeSummedFloat3DisposeJob()
        {
            Data = new NativeSummedFloat3Dispose() { m_Buffer = m_Buffer, m_AllocatorLabel = m_AllocatorLabel }
        };
        JobHandle result = disposeJob.Schedule(inputDeps);

#if ENABLE_UNITY_COLLECTIONS_CHECKS
        AtomicSafetyHandle.Release(m_Safety);
#endif

        m_Buffer = null;
        return result;
    }
}

[NativeContainer]
internal unsafe struct NativeSummedFloat3Dispose
{
    [NativeDisableUnsafePtrRestriction] internal void* m_Buffer;
    internal Allocator m_AllocatorLabel;

    public void Dispose() { UnsafeUtility.Free(m_Buffer, m_AllocatorLabel); }
}

[BurstCompile]
internal struct NativeSummedFloat3DisposeJob : IJob
{
    internal NativeSummedFloat3Dispose Data;

    public void Execute() { Data.Dispose(); }
}

2) Single-threaded Getter and Setter

Let's implement a getter and setter that may only be accessed from a single thread. The getter is the interesting part: we loop over every cache line and add up the values to get the final result. We use ReadArrayElementWithStride because each array element is the size of a cache line, but we are only interested in the float3 stored at its start.

The setter first resets all cache lines to 0 and then adds the value. We will look at these methods next.

    /*
     * ... Other Code ...
     */

    public float3 Value
    {
        get
        {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
            AtomicSafetyHandle.CheckReadAndThrow(m_Safety);
#endif

            // Sum the values stored in each worker thread's cache line.
            float3 result = UnsafeUtility.ReadArrayElement<float3>(m_Buffer, 0);
            for (int i = 1; i < JobsUtility.MaxJobThreadCount; i++)
                result += UnsafeUtility.ReadArrayElementWithStride<float3>(m_Buffer, i, JobsUtility.CacheLineSize);

            return result;
        }

        [WriteAccessRequired]
        set
        {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
            AtomicSafetyHandle.CheckWriteAndThrow(m_Safety);
#endif

            Reset();
            AddValue(value);
        }
    }

    /*
     * ... Next Code ...
     */

3) Single-threaded Methods

The AddValue and Reset methods access the cache lines in much the same way as our getter. We don't need to worry about multiple writers yet, so AddValue can use WriteArrayElement and write only to the first cache line. Reset, however, needs WriteArrayElementWithStride again, because each element is the size of a cache line.

    /*
     * ... Previous Code ...
     */

    [WriteAccessRequired]
    public void AddValue(float3 value)
    {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
        AtomicSafetyHandle.CheckWriteAndThrow(m_Safety);
#endif

        // Add a value to the sum. We are writing from a single thread, so we write to the first cache line.
        float3 current = UnsafeUtility.ReadArrayElement<float3>(m_Buffer, 0);
        current += value;
        UnsafeUtility.WriteArrayElement(m_Buffer, 0, current);
    }

    [WriteAccessRequired]
    public void Reset()
    {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
        AtomicSafetyHandle.CheckWriteAndThrow(m_Safety);
#endif

        // Reset each worker thread's cache line to float3.zero.
        for (int i = 0; i < JobsUtility.MaxJobThreadCount; i++)
            UnsafeUtility.WriteArrayElementWithStride(m_Buffer, i, JobsUtility.CacheLineSize, float3.zero);
    }

    /*
     * ... Next Code ...
     */
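
The strided access used by the getter, AddValue and Reset can be illustrated without Unity. The following is a minimal sketch in plain C#, assuming a 64-byte cache line (so 16 floats per slot); StridedSum is a hypothetical name, and a managed float array stands in for the unmanaged buffer. Element i with a given stride simply starts at i times the stride, which is the addressing the WithStride calls rely on.

```csharp
using System;

// Hypothetical stand-in for the container's buffer: one padded slot per thread,
// with only the first three floats (x, y, z) of each slot in use.
public sealed class StridedSum
{
    // Assumption: a 64-byte cache line, which holds 16 floats.
    private const int FloatsPerSlot = 64 / sizeof(float);

    private readonly float[] buffer;
    private readonly int slotCount;

    public StridedSum(int slotCount)
    {
        this.slotCount = slotCount;
        buffer = new float[slotCount * FloatsPerSlot];
    }

    // Like AddValue: read-modify-write on slot 0 only (single-threaded path).
    public void AddValue(float x, float y, float z)
    {
        buffer[0] += x;
        buffer[1] += y;
        buffer[2] += z;
    }

    // Like Reset: visit every slot, stepping by the stride, and zero its value.
    public void Reset()
    {
        for (int i = 0; i < slotCount; i++)
        {
            int start = i * FloatsPerSlot;
            buffer[start] = 0f;
            buffer[start + 1] = 0f;
            buffer[start + 2] = 0f;
        }
    }

    // Like the getter: sum the value stored at the start of every slot.
    public (float x, float y, float z) GetValue()
    {
        float x = 0f, y = 0f, z = 0f;
        for (int i = 0; i < slotCount; i++)
        {
            int start = i * FloatsPerSlot;
            x += buffer[start];
            y += buffer[start + 1];
            z += buffer[start + 2];
        }
        return (x, y, z);
    }
}
```

For example, after `new StridedSum(4)` followed by `AddValue(1f, 2f, 3f)` and `AddValue(1f, 1f, 1f)`, GetValue() yields (2, 3, 4), and after Reset() it yields (0, 0, 0).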

4) Parallel Writer with Thread Index

Now for the interesting part: parallel writing. We add code to the NativeSummedFloat3 struct to create a parallel writer object, as explained in the previous article. Note the [NativeSetThreadIndex] attribute and the m_ThreadIndex variable. The naming here is very important! The job system injects the worker thread index into this variable for the job. We use it as the index of the cache line to read from and write to.

    /*
     * ... Previous Code ...
     */

    [NativeContainerIsAtomicWriteOnly]
    [NativeContainer]
    public unsafe struct ParallelWriter
    {
        [NativeDisableUnsafePtrRestriction] internal void* m_Buffer;

#if ENABLE_UNITY_COLLECTIONS_CHECKS
        internal AtomicSafetyHandle m_Safety;
#endif

        [NativeSetThreadIndex]
        internal int m_ThreadIndex;

        [WriteAccessRequired]
        public void AddValue(float3 value)
        {
#if ENABLE_UNITY_COLLECTIONS_CHECKS
            AtomicSafetyHandle.CheckWriteAndThrow(m_Safety);
#endif

            // Add a value to the sum. We are writing in parallel, so we write to the cache line assigned to this thread.
            float3 current = UnsafeUtility.ReadArrayElementWithStride<float3>(m_Buffer, m_ThreadIndex, JobsUtility.CacheLineSize);
            current += value;
            UnsafeUtility.WriteArrayElementWithStride(m_Buffer, m_ThreadIndex, JobsUtility.CacheLineSize, current);
        }
    }

    public ParallelWriter AsParallelWriter()
    {
        ParallelWriter writer;

#if ENABLE_UNITY_COLLECTIONS_CHECKS
        AtomicSafetyHandle.CheckWriteAndThrow(m_Safety);
        writer.m_Safety = m_Safety;
        AtomicSafetyHandle.UseSecondaryVersion(ref writer.m_Safety);
#endif

        writer.m_Buffer = m_Buffer;
        writer.m_ThreadIndex = 0; // The thread index will be set later by the job system.

        return writer;
    }

    /*
     * ... More Code ...
     */
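
The idea behind the parallel writer, one slot per thread and a single-threaded sum afterwards, can also be tried outside Unity. Below is a minimal sketch in plain C# using System.Threading; PerThreadSlotsDemo is a hypothetical name, the 64-byte cache line is an assumption, and the explicit worker index plays the role that m_ThreadIndex plays in the container.

```csharp
using System;
using System.Threading;

// Hypothetical plain-C# analogue of the ParallelWriter: each worker gets an
// explicit index and only ever touches its own padded slot.
public static class PerThreadSlotsDemo
{
    // Assumption: a 64-byte cache line, which holds 16 floats.
    private const int FloatsPerSlot = 64 / sizeof(float);

    public static float SumInParallel(int workerCount, int addsPerWorker)
    {
        float[] buffer = new float[workerCount * FloatsPerSlot];
        Thread[] workers = new Thread[workerCount];

        for (int w = 0; w < workerCount; w++)
        {
            int threadIndex = w; // plays the role of m_ThreadIndex
            workers[w] = new Thread(() =>
            {
                int start = threadIndex * FloatsPerSlot;
                for (int n = 0; n < addsPerWorker; n++)
                    buffer[start] += 1f; // no lock needed: no other thread writes this slot
            });
            workers[w].Start();
        }

        // Wait for all workers before reading, as completing the job handle would in Unity.
        foreach (Thread worker in workers)
            worker.Join();

        // Single-threaded read: add up the per-thread partial sums.
        float total = 0f;
        for (int w = 0; w < workerCount; w++)
            total += buffer[w * FloatsPerSlot];
        return total;
    }
}
```

Calling SumInParallel(4, 1000) returns 4000, because each of the four workers accumulates 1000 in its own slot. Note that a managed array is not guaranteed to be aligned to a cache line, so this sketch demonstrates the correctness argument (no shared writes) rather than the performance benefit of the aligned unmanaged allocation.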

Usage

That is all we need to do. We now have a custom Native Container that supports parallel writing by using the thread index. The following code shows how to use it to calculate the average position of all entities in the scene that have a LocalToWorld component.

using Unity.Collections;
using Unity.Entities;
using Unity.Jobs;
using Unity.Mathematics;
using Unity.Transforms;

public class NativeSummedFloat3System : SystemBase
{
    private EntityQuery localToWorldQuery;

    protected override void OnUpdate()
    {
        NativeSummedFloat3 avgPosition = new NativeSummedFloat3(Allocator.TempJob);
        NativeSummedFloat3.ParallelWriter avgPositionParallelWriter = avgPosition.AsParallelWriter();

        // Sum the positions of all entities that have a LocalToWorld component.
        JobHandle jobHandle = Entities.WithName("AvgPositionJob")
            .WithStoreEntityQueryInField(ref localToWorldQuery)
            .ForEach((in LocalToWorld localToWorld) =>
            {
                avgPositionParallelWriter.AddValue(localToWorld.Position);
            }).ScheduleParallel(default);

        jobHandle.Complete();

        // We store the query so that we can calculate how many entities have the LocalToWorld component.
        int entityCount = localToWorldQuery.CalculateEntityCount();

        // Dividing the summed position by the entity count gives the average.
        UnityEngine.Debug.Log(avgPosition.Value / entityCount);

        avgPosition.Dispose();
    }
}

Summary

In this article, we wrote a new custom Native Container that supports parallel writing through the thread index, by giving each thread its own memory, one cache line. This lets us create a float3 that can be written to in parallel. We can also generalize this container to allow parallel operations on any value type (as long as it is no larger than a cache line). A generic version of this container can be found here, as well as an example of how to use it.