Post-processing: principle and process

Post-processing means processing the image after the scene has been rendered. In Unity this is driven by a C# script.

The rendered image is not displayed directly. One more step is added: a script processes the image first, and only the result is shown on the screen. This is how effects such as various blurs, Bloom and outlines (strokes) are implemented.

General post-processing workflow

Obtain the current screen image, have a C# script call a shader to process it, and display the processed image on the screen.

Principle

A quad with exactly the same width and height as the screen is drawn. The previous rendering result is passed into the script as a render texture, and the shader samples that texture and renders the result back onto the quad.

Therefore, depth writing must be turned off during post-processing (post-processing shaders typically use ZTest Always, Cull Off and ZWrite Off). Otherwise the full-screen quad would write depth and "block" objects rendered after it: for example, if the effect runs right after the opaque objects are drawn, the translucent objects rendered later would be hidden.

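In the built-in render pipeline, Unity provides the OnRenderImage() callback to obtain the screen image. src is the rendered image, and dest is the target the processed result must be written to:

```csharp
// MonoBehaviour message, called after the camera has finished rendering
void OnRenderImage(RenderTexture src, RenderTexture dest);

// OnRenderImage usually does its processing with Graphics.Blit:
public static void Blit(Texture src, RenderTexture dest);
public static void Blit(Texture src, RenderTexture dest, Material mat, int pass = -1);
public static void Blit(Texture src, Material mat, int pass = -1);
```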

Blit assigns the source image src to the shader's _MainTex texture property, renders with the given material (with the default pass = -1, every pass of the shader is drawn), and writes the result into dest.
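The src → shader → dest flow can be sketched on the CPU. The Python below is not Unity code: `blit`, `sample_point` and the two fragment functions are hypothetical stand-ins, textures are nested lists of grayscale values, and rows and UVs simply both run top to bottom. For every destination pixel it computes the UV of the pixel center (what the full-screen quad interpolates), lets a "fragment" function sample the source at that UV, and writes the result, which is roughly what a blit with a material does on the GPU.

```python
from typing import Callable, List

Texture = List[List[float]]  # texture[row][col], grayscale values in [0, 1]


def sample_point(tex: Texture, u: float, v: float) -> float:
    """Point-sample a texture at UV in [0, 1], clamping to the edge."""
    h, w = len(tex), len(tex[0])
    col = min(max(int(u * w), 0), w - 1)
    row = min(max(int(v * h), 0), h - 1)
    return tex[row][col]


def blit(src: Texture, width: int, height: int,
         fragment: Callable[[Texture, float, float], float]) -> Texture:
    """Emulate a full-screen pass: run `fragment` once per destination pixel."""
    dest = []
    for row in range(height):
        line = []
        for col in range(width):
            # UV of the pixel center, as interpolated across the screen-sized quad
            u = (col + 0.5) / width
            v = (row + 0.5) / height
            line.append(fragment(src, u, v))
        dest.append(line)
    return dest


def copy_fragment(tex: Texture, u: float, v: float) -> float:
    # Like Blit(src, dest) without a material: pass the image through
    return sample_point(tex, u, v)


def invert_fragment(tex: Texture, u: float, v: float) -> float:
    # A trivial "post effect": invert the gray level
    return 1.0 - sample_point(tex, u, v)


screen = [[0.0, 0.25], [0.5, 1.0]]
print(blit(screen, 2, 2, copy_fragment))    # [[0.0, 0.25], [0.5, 1.0]]
print(blit(screen, 2, 2, invert_fragment))  # [[1.0, 0.75], [0.5, 0.0]]
```

Passing `copy_fragment` corresponds to a plain copy; any other fragment function plays the role of the shader.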

Edge detection (outline/stroke)

The gradient is a vector. At a given point it points in the direction along which the directional derivative of the function is largest, i.e. the direction in which the function changes fastest, and its magnitude (modulus) is that maximum rate of change.

Partial derivatives in two-dimensional space

$$\frac{\partial f(x,y)}{\partial x}=\lim_{\epsilon\to 0}\frac{f(x+\epsilon,y)-f(x,y)}{\epsilon}$$

$$\frac{\partial f(x,y)}{\partial y}=\lim_{\epsilon\to 0}\frac{f(x,y+\epsilon)-f(x,y)}{\epsilon}$$

Since pixels are discrete, the smallest possible step is one pixel. So ε = 1, and each partial derivative becomes a difference between neighboring pixels, e.g. $\frac{\partial f}{\partial x} \approx f(x+1,y)-f(x,y)$. Only simple additions and subtractions are needed.

Combining the two partial derivatives with the Pythagorean theorem gives the rate of change of the gray level in two-dimensional space: $G=\sqrt{G_x^2+G_y^2}$.
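A minimal sketch of the discrete derivatives with ε = 1 and the Pythagorean combination, assuming a grayscale image stored as a Python list of rows. The names `forward_differences` and `gradient_magnitude` are illustrative, not from Unity.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # image[y][x] holds a gray level; y grows downward here


def forward_differences(img: Image, x: int, y: int) -> Tuple[float, float]:
    """Discrete partial derivatives with the smallest step, epsilon = 1 pixel."""
    dfdx = img[y][x + 1] - img[y][x]  # f(x+1, y) - f(x, y)
    dfdy = img[y + 1][x] - img[y][x]  # f(x, y+1) - f(x, y)
    return dfdx, dfdy


def gradient_magnitude(dfdx: float, dfdy: float) -> float:
    """Combine both directions with the Pythagorean theorem."""
    return math.sqrt(dfdx * dfdx + dfdy * dfdy)


# A vertical edge: dark on the left, bright on the right
img = [
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
]
gx, gy = forward_differences(img, 1, 0)
print(gx, gy, gradient_magnitude(gx, gy))  # 1.0 0.0 1.0
```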

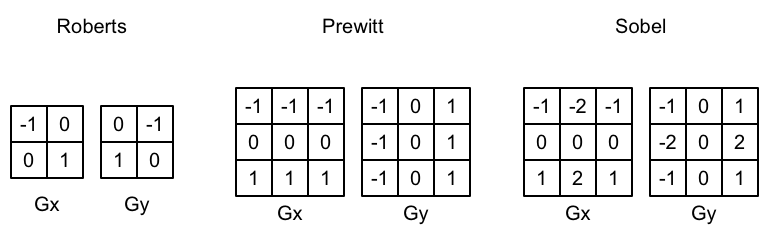

Edge detection operators

Note: the last two operators in the figure are wrong: their x and y directions are swapped.

Even-sized operators such as Roberts (2×2) are generally not used: they have no center pixel, so the result is offset by half a pixel from the original pixel.

Prewitt uses the central difference $G_x = f(x+1) + 0 \cdot f(x) - f(x-1)$, so its coefficients are [-1, 0, 1].

Repeating this row over the three rows of the neighborhood gives the Prewitt operator $G_x$; $G_y$ is built the same way in the vertical direction:

$$G_x=\begin{bmatrix}-1&0&1\\-1&0&1\\-1&0&1\end{bmatrix},\qquad G_y=\begin{bmatrix}-1&-1&-1\\0&0&0\\1&1&1\end{bmatrix}$$

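Sobel builds on Prewitt by doubling the weight of the row (for $G_x$) or column (for $G_y$) that passes through the center pixel, which strengthens the response for pixels lying on the same line as the center:

$$G_x=\begin{bmatrix}-1&0&1\\-2&0&2\\-1&0&1\end{bmatrix},\qquad G_y=\begin{bmatrix}-1&-2&-1\\0&0&0\\1&2&1\end{bmatrix}$$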
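To see how the Prewitt and Sobel kernels respond to an edge, here is a small Python sketch (hypothetical helper names, grayscale image as nested lists, edges clamped the way a shader with clamp addressing would read them). Like a shader, it multiplies each kernel weight by the matching neighbor without flipping the kernel; for these antisymmetric kernels, flipping would only change the sign of Gx and Gy, which the magnitude ignores.

```python
import math
from typing import List

Image = List[List[float]]  # image[y][x], grayscale
Kernel = List[List[int]]

PREWITT_X: Kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
PREWITT_Y: Kernel = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]
SOBEL_X: Kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y: Kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def apply_3x3(img: Image, kernel: Kernel, x: int, y: int) -> float:
    """Weighted sum of the 3x3 neighborhood centered on (x, y), edges clamped."""
    h, w = len(img), len(img[0])
    total = 0.0
    for ky in range(3):
        for kx in range(3):
            sx = min(max(x + kx - 1, 0), w - 1)
            sy = min(max(y + ky - 1, 0), h - 1)
            total += kernel[ky][kx] * img[sy][sx]
    return total


def edge_strength(img: Image, kx: Kernel, ky: Kernel, x: int, y: int) -> float:
    """Gradient magnitude sqrt(Gx^2 + Gy^2) at (x, y)."""
    gx = apply_3x3(img, kx, x, y)
    gy = apply_3x3(img, ky, x, y)
    return math.sqrt(gx * gx + gy * gy)


# Vertical edge between columns 1 and 2
img = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
print(edge_strength(img, PREWITT_X, PREWITT_Y, 1, 1),
      edge_strength(img, SOBEL_X, SOBEL_Y, 1, 1))  # 3.0 4.0
```

On this edge Sobel responds more strongly than Prewitt because of its doubled center weights, while a pixel whose whole neighborhood is flat, such as (0, 1), gives 0.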

Gaussian blur and Bloom effect

Mean blur

All elements of the convolution kernel have the same value: each element of an n×n kernel is 1/n².
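A sketch of the mean blur, assuming an odd n and clamped edges. `mean_kernel` and `mean_blur_at` are illustrative names.

```python
from typing import List

Image = List[List[float]]  # image[y][x], grayscale


def mean_kernel(n: int) -> List[List[float]]:
    """n x n kernel in which every element is 1 / n^2."""
    return [[1.0 / (n * n)] * n for _ in range(n)]


def mean_blur_at(img: Image, x: int, y: int, n: int = 3) -> float:
    """Convolve the n x n neighborhood of (x, y) with the mean kernel (n odd, edges clamped)."""
    r = n // 2
    h, w = len(img), len(img[0])
    k = mean_kernel(n)
    total = 0.0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            sx = min(max(x + dx, 0), w - 1)
            sy = min(max(y + dy, 0), h - 1)
            total += k[dy + r][dx + r] * img[sy][sx]
    return total


img = [[0.0] * 5 for _ in range(5)]
img[2][2] = 9.0  # a single bright pixel
print(mean_blur_at(img, 2, 2), mean_blur_at(img, 0, 0))
```

The bright value is spread evenly over the 3×3 area, so every pixel whose neighborhood contains it reads about 1.0, and pixels farther away stay at 0.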

Gaussian blur

The convolution kernel used for Gaussian blur is called a Gaussian kernel.

Gaussian blur is named after the Gaussian (normal) distribution. Its probability density function assigns values that fall off with a sample's distance from the mean, so Gaussian blur gives neighboring pixels less weight the farther they are from the center pixel.

$$G(x,y)=\frac{1}{2\pi\sigma^2}e^{-\frac{x^2+y^2}{2\sigma^2}}$$

Here the mean $\mu$ is 0: the current pixel is taken as the origin, at distance 0 from itself, which is why $\mu$ does not appear in the formula. $\sigma$ is the standard deviation, taken here as 1.
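Evaluating the formula on a 5×5 grid around the current pixel (μ = 0, σ = 1) gives the Gaussian kernel. This Python sketch assumes the kernel is normalized so that its weights sum to 1, which keeps the overall brightness unchanged; after normalization the 1/(2πσ²) factor cancels out. The function names are illustrative.

```python
import math
from typing import List


def gaussian_2d(x: float, y: float, sigma: float = 1.0) -> float:
    """G(x, y) = 1 / (2*pi*sigma^2) * exp(-(x^2 + y^2) / (2*sigma^2)), with mean 0."""
    return math.exp(-(x * x + y * y) / (2 * sigma * sigma)) / (2 * math.pi * sigma * sigma)


def gaussian_kernel_2d(size: int = 5, sigma: float = 1.0) -> List[List[float]]:
    """size x size kernel centered on the current pixel, normalized to sum to 1."""
    r = size // 2
    k = [[gaussian_2d(x, y, sigma) for x in range(-r, r + 1)] for y in range(-r, r + 1)]
    total = sum(sum(row) for row in k)
    # Normalizing keeps brightness unchanged; the 1/(2*pi*sigma^2) factor cancels here
    return [[v / total for v in row] for row in k]


kernel = gaussian_kernel_2d(5, 1.0)
for row in kernel:
    print(" ".join(f"{v:.4f}" for v in row))
```

The center weight comes out at about 0.162 and is the largest; weights fall off symmetrically with distance.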

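An n×n Gaussian kernel can be replaced by two one-dimensional Gaussian kernels, one horizontal and one vertical, because $G(x,y)$ factors into a function of $x$ times a function of $y$. Blurring then takes 2n samples per pixel instead of n².

Moreover, because the Gaussian is symmetric about its center, only half of each 1D kernel needs to be stored: a 5-element kernel has just 3 distinct weights.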
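The sketch below checks both claims numerically for a 5-tap kernel with σ = 1 (helper names are hypothetical). The 1D weights are symmetric, so only three distinct values need storing, and the outer product of the 1D kernel with itself matches the 2D kernel computed directly. With separation, a 5×5 blur takes 5 + 5 = 10 samples per pixel instead of 25.

```python
import math
from typing import List


def gaussian_weights_1d(size: int = 5, sigma: float = 1.0) -> List[float]:
    """Normalized 1D Gaussian weights for offsets -r .. r (size must be odd)."""
    r = size // 2
    w = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-r, r + 1)]
    total = sum(w)
    return [v / total for v in w]


def gaussian_kernel_2d(size: int = 5, sigma: float = 1.0) -> List[List[float]]:
    """Normalized 2D Gaussian kernel computed directly, for comparison."""
    r = size // 2
    k = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma)) for x in range(-r, r + 1)]
         for y in range(-r, r + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


full = gaussian_weights_1d(5, 1.0)

# Symmetry: weight(-i) == weight(i), so the center plus one side is enough to store
stored = full[2:]
print([round(v, 4) for v in stored])  # [0.4026, 0.2442, 0.0545]

# Separability: the outer product of the 1D kernel with itself is the 2D kernel
outer = [[a * b for b in full] for a in full]
direct = gaussian_kernel_2d(5, 1.0)
print(max(abs(outer[i][j] - direct[i][j]) for i in range(5) for j in range(5)) < 1e-12)  # True
```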

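```hlsl
/* Shader */
#include "UnityCG.cginc"

sampler2D _MainTex;         // screen image, assigned by Graphics.Blit
float4 _MainTex_TexelSize;  // texel size: (1/width, 1/height, width, height)

struct v2f {
    float4 pos : SV_POSITION;
    half2 uv[5] : TEXCOORD0;  // texture coordinates of the 5 samples
};

// Calculate texture coordinates: vertical pass
v2f vertBlurVertical(appdata_img v) {
    v2f o;
    o.pos = UnityObjectToClipPos(v.vertex);
    half2 uv = v.texcoord;
    o.uv[0] = uv;
    o.uv[1] = uv + float2(0.0, _MainTex_TexelSize.y * 1.0); // one texel up
    o.uv[2] = uv - float2(0.0, _MainTex_TexelSize.y * 1.0); // one texel down
    o.uv[3] = uv + float2(0.0, _MainTex_TexelSize.y * 2.0); // two texels up
    o.uv[4] = uv - float2(0.0, _MainTex_TexelSize.y * 2.0); // two texels down
    return o;
}

// The horizontal pass is the same, with offsets float2(_MainTex_TexelSize.x * n, 0.0)
```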
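On the CPU, the two passes can be emulated as below. This is a hedged Python sketch, not the shader itself: integer pixel offsets stand in for multiples of `_MainTex_TexelSize`, edges are clamped, and `WEIGHTS` holds the three stored weights for σ = 1, rounded to four decimals. Each output pixel combines the same five samples as uv[0] to uv[4], with symmetric pairs sharing one stored weight.

```python
from typing import List

Image = List[List[float]]  # image[y][x], grayscale

# Normalized 5-tap weights for sigma = 1, rounded: center, +-1 texel, +-2 texels
WEIGHTS = [0.4026, 0.2442, 0.0545]


def sample(img: Image, x: int, y: int) -> float:
    """Read a pixel with clamp-to-edge addressing."""
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]


def blur_pass(img: Image, dx: int, dy: int) -> Image:
    """One 1D pass: (dx, dy) = (0, 1) is the vertical pass, (1, 0) the horizontal one."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Same five samples as uv[0] .. uv[4]: center, +-1 and +-2 texels
            s = sample(img, x, y) * WEIGHTS[0]
            for i in (1, 2):
                s += (sample(img, x + i * dx, y + i * dy)
                      + sample(img, x - i * dx, y - i * dy)) * WEIGHTS[i]
            row.append(s)
        out.append(row)
    return out


def gaussian_blur(img: Image) -> Image:
    """Vertical pass followed by horizontal pass (10 samples per pixel instead of 25)."""
    return blur_pass(blur_pass(img, 0, 1), 1, 0)


impulse = [[0.0] * 5 for _ in range(5)]
impulse[2][2] = 1.0
blurred = gaussian_blur(impulse)
print(round(blurred[2][2], 4))  # 0.1621
```

Blurring a single bright pixel reproduces the separable kernel: the center value is 0.4026², and a flat image passes through unchanged because the five weights sum to 1.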

Bloom effect

Steps: extract the bright parts of the image (a pixel's brightness is computed as a weighted sum of its r, g and b channels), apply Gaussian blur to the extracted bright parts, then blend the original image with the blurred bright image (the Bloom).

luminance = 0.2125 × r + 0.7154 × g + 0.0721 × b. Green contributes most to brightness, followed by red and then blue.
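The three Bloom steps can be sketched per pixel in Python. Assumptions, all hypothetical: a brightness threshold of 0.6, the bright part extracted as the pixel scaled by clamp(luminance − threshold, 0, 1), a 3-tap box blur on a single row standing in for the Gaussian passes, and an additive final mix.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (r, g, b) in [0, 1]


def luminance(p: Pixel) -> float:
    r, g, b = p
    return 0.2125 * r + 0.7154 * g + 0.0721 * b


def extract_bright(p: Pixel, threshold: float) -> Pixel:
    """Step 1: keep only the part of the pixel brighter than the threshold."""
    k = min(max(luminance(p) - threshold, 0.0), 1.0)  # 0 for dark pixels
    return (p[0] * k, p[1] * k, p[2] * k)


def box_blur(row: List[Pixel]) -> List[Pixel]:
    """Step 2 stand-in: 3-tap box blur on one row (the real effect uses Gaussian blur)."""
    n = len(row)
    out = []
    for i in range(n):
        left, mid, right = row[max(i - 1, 0)], row[i], row[min(i + 1, n - 1)]
        out.append(tuple((a + b + c) / 3.0 for a, b, c in zip(left, mid, right)))
    return out


def bloom(row: List[Pixel], threshold: float = 0.6) -> List[Pixel]:
    """Step 3: add the blurred bright parts back onto the original image."""
    glow = box_blur([extract_bright(p, threshold) for p in row])
    return [tuple(o + g for o, g in zip(po, pg)) for po, pg in zip(row, glow)]


row = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]
print(bloom(row))  # the dark neighbors now pick up some of the white pixel's glow
```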

Motion blur

Methods: an accumulation buffer, which blends several consecutive frames together, and a velocity buffer, which stores each pixel's motion speed and blurs each pixel according to it.
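Of the two methods, the accumulation buffer is the simpler one to sketch. The Python below is an illustration under stated assumptions, not a Unity API: each new frame is blended into the buffer with a hypothetical `blur_amount` weight, so older frames fade out gradually and a moving object leaves a trail. The velocity-buffer method is not shown.

```python
from typing import List


def accumulate(acc: List[float], frame: List[float], blur_amount: float) -> List[float]:
    """Blend the new frame into the accumulation buffer.

    blur_amount in [0, 1): the larger it is, the more of the older frames survives,
    so moving objects leave longer trails.
    """
    return [a * blur_amount + f * (1.0 - blur_amount) for a, f in zip(acc, frame)]


# A bright dot moving one pixel to the right per frame on a 5-pixel "screen"
frames = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
]
acc = frames[0]  # start the buffer with the first frame
for frame in frames[1:]:
    acc = accumulate(acc, frame, 0.5)
print(acc)  # [0.25, 0.25, 0.5, 0.0, 0.0]
```

The current position of the dot stays brightest, while its previous positions fade by half each frame.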

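Script

```csharp
/* General format */
using UnityEngine;
using System.Collections;

// Attach to a Camera: OnRenderImage is called on the camera's GameObject
public class MyScript : MonoBehaviour {

    public Shader MyScriptShader;

    private Material MyScriptMaterial = null;

    public Material material {
        get {
            // Create the material from the shader on first use (or when the shader changes)
            if (MyScriptShader == null || !MyScriptShader.isSupported) {
                return null;
            }
            if (MyScriptMaterial == null || MyScriptMaterial.shader != MyScriptShader) {
                MyScriptMaterial = new Material(MyScriptShader);
                MyScriptMaterial.hideFlags = HideFlags.DontSave;
            }
            return MyScriptMaterial;
        }
    }

    // Exposed properties
    [Range(0, 4)]
    public int temp = 3;
    // ...

    // Call the shader: Unity invokes this after the camera finishes rendering
    void OnRenderImage(RenderTexture src, RenderTexture dest) {
        if (material != null) {
            material.SetFloat("_temp", temp);
            // Post-processing: run the shader on src and write the result to dest
            Graphics.Blit(src, dest, material);
        } else {
            // No usable material: pass the image through unchanged
            Graphics.Blit(src, dest);
        }
    }
}
```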